
Neural Network

Uploaded by dvarsastry

Course content

Summary

Our goal is to introduce students to a powerful class of model, the Neural Network. In fact, this is a broad term which includes many diverse models and approaches. We will first motivate networks by analogy to the brain. The analogy is loose, but serves to introduce the idea of parallel and distributed computation.

We then introduce one kind of network in detail: the feedforward network trained by backpropagation of error. We discuss model architectures, training methods and data representation issues. We hope to cover everything you need to know to get backpropagation working for you. A range of applications and extensions to the basic model will be presented in the final section of the module.

Lecture 1: Introduction

Computation in the brain

Artificial neuron models

Linear regression

Linear neural networks

Multi-layer networks

Error Backpropagation

Lecture 2: The Backprop Toolbox

Revision: the backprop algorithm

Backprop: an example

Overfitting and regularization

Growing and pruning networks

Preconditioning the network

Momentum and learning rate adaptation

Computation in the brain

The brain - that's my second most favourite organ! - Woody Allen

The Brain as an Information Processing System

The human brain contains about 10 billion nerve cells, or neurons. On average, each neuron is connected to other neurons through about 10 000 synapses. (The actual figures vary greatly, depending on the local neuroanatomy.) The brain's network of neurons forms a massively parallel information processing system. This contrasts with conventional computers, in which a single processor executes a single series of instructions.

Against this, consider the time taken for each elementary operation: neurons typically operate at a maximum rate of about 100 Hz, while a conventional CPU carries out several hundred million machine level operations per second. Despite being built with very slow hardware, the brain has quite remarkable capabilities:

- its performance tends to degrade gracefully under partial damage. In contrast, most programs and engineered systems are brittle: if you remove some arbitrary parts, very likely the whole will cease to function.
- it can learn (reorganize itself) from experience.
- this means that partial recovery from damage is possible if healthy units can learn to take over the functions previously carried out by the damaged areas.
- it performs massively parallel computations extremely efficiently. For example, complex visual perception occurs within less than 100 ms, that is, 10 processing steps!
- it supports our intelligence and self-awareness. (Nobody knows yet how this occurs.)

| | processing elements | element size | energy use | processing speed | style of computation | fault tolerant | learns | intelligent, conscious |
|---|---|---|---|---|---|---|---|---|
| Brain | $10^{14}$ synapses | $10^{-6}$ m | 30 W | 100 Hz | parallel, distributed | yes | yes | usually |
| Computer | $10^{8}$ transistors | $10^{-6}$ m | 30 W (CPU) | $10^{9}$ Hz | serial, centralized | no | a little | not (yet) |

As a discipline of Artificial Intelligence, Neural Networks attempt to bring computers a little closer to the brain's capabilities by imitating certain aspects of information processing in the brain, in a highly simplified way.

Neural Networks in the Brain

The brain is not homogeneous. At the largest anatomical scale, we distinguish cortex, midbrain, brainstem, and cerebellum. Each of these can be hierarchically subdivided into many regions, and areas within each region, either according to the anatomical structure of the neural networks within it, or according to the function performed by them.

The overall pattern of projections (bundles of neural connections) between areas is extremely complex, and only partially known. The best mapped (and largest) system in the human brain is the visual system, where the first 10 or 11 processing stages have been identified. We distinguish feedforward projections that go from earlier processing stages (near the sensory input) to later ones (near the motor output), from feedback connections that go in the opposite direction.

In addition to these long-range connections, neurons also link up with many thousands of their neighbours. In this way they form very dense, complex local networks.

Neurons and Synapses

The basic computational unit in the nervous system is the nerve cell, or neuron. A neuron has:

- Dendrites (inputs)
- Cell body
- Axon (output)

A neuron receives input from other neurons (typically many thousands). Inputs sum (approximately). Once input exceeds a critical level, the neuron discharges a spike - an electrical pulse that travels from the body, down the axon, to the next neuron(s) (or other receptors). This spiking event is also called depolarization, and is followed by a refractory period, during which the neuron is unable to fire.

The axon endings (Output Zone) almost touch the dendrites or cell body of the next neuron. Transmission of an electrical signal from one neuron to the next is effected by neurotransmitters, chemicals which are released from the first neuron and which bind to receptors in the second. This link is called a synapse. The extent to which the signal from one neuron is passed on to the next depends on many factors, e.g. the amount of neurotransmitter available, the number and arrangement of receptors, the amount of neurotransmitter reabsorbed, etc.

Synaptic Learning

Brains learn. Of course. From what we know of neuronal structures, one way brains learn is by altering the strengths of connections between neurons, and by adding or deleting connections between neurons. Furthermore, they learn "on-line", based on experience, and typically without the benefit of a benevolent teacher.

The efficacy of a synapse can change as a result of experience, providing both memory and learning through long-term potentiation. One way this happens is through release of more neurotransmitter. Many other changes may also be involved.

Long-term Potentiation: An enduring (>1 hour) increase in synaptic efficacy that results from high-frequency stimulation of an afferent (input) pathway.

Hebb's Postulate: "When an axon of cell A ... excite[s] cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells so that A's efficiency as one of the cells firing B is increased."

Bliss and Lomo discovered LTP in the hippocampus in 1973.

Points to note about LTP:

- Synapses become more or less important over time (plasticity)
- LTP is based on experience
- LTP is based only on local information (Hebb's postulate)
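The "local information" point can be made concrete with a small sketch. The product rule below is a common textbook formalization of Hebb's postulate and is not given in these notes; the function name `hebbian_update` and the parameter `rate` are hypothetical, chosen only for illustration.

```python
def hebbian_update(w, pre, post, rate=0.1):
    """Return a strengthened weight, using only the activity of the two
    units that the connection joins (assumed rule: dw = rate * pre * post)."""
    return w + rate * pre * post


# Repeated joint activity of both units strengthens the connection ...
w = 0.5
for _ in range(3):
    w = hebbian_update(w, pre=1.0, post=1.0)
print(w > 0.5)  # True

# ... while an inactive receiving unit leaves the weight unchanged.
print(hebbian_update(0.5, pre=1.0, post=0.0))  # 0.5
```

Nothing in the update depends on other units or on a global error signal, which is what "local" means here.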

Summary

The following properties of nervous systems will be of particular interest in our neurally-inspired models:

- parallel, distributed information processing
- high degree of connectivity among basic units
- connections are modifiable based on experience
- learning is a constant process, and usually unsupervised
- learning is based only on local information
- performance degrades gracefully if some units are removed
- etc.

Artificial Neuron Models

Computational neurobiologists have constructed very elaborate computer models of neurons in order to run detailed simulations of particular circuits in the brain. As Computer Scientists, we are more interested in the general properties of neural networks, independent of how they are actually "implemented" in the brain. This means that we can use much simpler, abstract "neurons", which (hopefully) capture the essence of neural computation even if they leave out much of the detail of how biological neurons work.

People have implemented model neurons in hardware as electronic circuits, often integrated on VLSI chips. Remember though that computers run much faster than brains - we can therefore run fairly large networks of simple model neurons as software simulations in reasonable time. This has obvious advantages over having to use special "neural" computer hardware.

A Simple Artificial Neuron

Our basic computational element (model neuron) is often called a node or unit. It receives input from some other units, or perhaps from an external source. Each input has an associated weight w, which can be modified so as to model synaptic learning. The unit computes some function f of the weighted sum of its inputs:

$$y_i = f\Big(\sum_j w_{ij}\, y_j\Big)$$

Its output, in turn, can serve as input to other units.

- The weighted sum is called the net input to unit i, often written $net_i$.
- Note that $w_{ij}$ refers to the weight from unit j to unit i (not the other way around).
- The function f is the unit's activation function. In the simplest case, f is the identity function, and the unit's output is just its net input. This is called a linear unit.
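As a minimal sketch of such a unit, the function below computes the net input as a weighted sum and passes it through an activation function. The name `unit_output` and its argument layout are assumptions made for this illustration; the default activation is the identity, which gives the linear unit described above.

```python
def unit_output(weights, inputs, activation=lambda net: net):
    """Return f(net), where net is the weighted sum of the unit's inputs."""
    if len(weights) != len(inputs):
        raise ValueError("need exactly one weight per input")
    net = sum(w * y for w, y in zip(weights, inputs))  # net input to the unit
    return activation(net)


# Linear unit (identity activation): the output is just the net input.
print(unit_output([0.5, -1.0, 2.0], [1.0, 2.0, 3.0]))  # 0.5 - 2.0 + 6.0 = 4.5
```

Passing a different callable as `activation` changes f without touching the weighted-sum step.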

Linear Regression

Fitting a Model to Data

Consider the data below:

(Fig. 1)

Each dot in the figure provides information about the weight (x-axis, units: U.S. pounds) and fuel consumption (y-axis, units: miles per gallon) for one of 74 cars (data from 1979). Clearly weight and fuel consumption are linked, so that, in general, heavier cars use more fuel.

Now suppose we are given the weight of a 75th car, and asked to predict how much fuel it will use, based on the above data. Such questions can be answered by using a model - a short mathematical description - of the data. The simplest useful model here is of the form

$$y = w_1 x + w_0 \qquad (1)$$

This is a linear model: in an xy-plot, equation 1 describes a straight line with slope $w_1$ and intercept $w_0$ with the y-axis, as shown in Fig. 2. (Note that we have rescaled the coordinate axes - this does not change the problem in any fundamental way.)

How do we choose the two parameters $w_0$ and $w_1$ of our model? Clearly, any straight line drawn somehow through the data could be used as a predictor, but some lines will do a better job than others. The line in Fig. 2 is certainly not a good model: for most cars, it will predict too much fuel consumption for a given weight.

(Fig. 2)

The Loss Function

In order to make precise what we mean by being a "good predictor", we define a loss (also called objective or error) function E over the model parameters. A popular choice for E is the sum-squared error:

$$E = \sum_i (t_i - y_i)^2 \qquad (2)$$

In words, it is the sum over all points i in our data set of the squared difference between the target value $t_i$ (here: actual fuel consumption) and the model's prediction $y_i$, calculated from the input value $x_i$ (here: weight of the car) by equation 1. For a linear model, the sum-squared error is a quadratic function of the model parameters. Figure 3 shows E for a range of values of $w_0$ and $w_1$. Figure 4 shows the same function as a contour plot.

(Fig. 3)

(Fig. 4)
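The loss described above can be sketched in a few lines. This assumes the sum-squared error exactly as stated in words (no constant factor in front of the sum); the function names and the four data points are made up for illustration and are not the car data of Fig. 1.

```python
def predict(w0, w1, x):
    """Linear model of equation 1: y = w1 * x + w0."""
    return w1 * x + w0


def sum_squared_error(w0, w1, xs, ts):
    """Sum over all data points of (target - prediction) squared."""
    return sum((t - predict(w0, w1, x)) ** 2 for x, t in zip(xs, ts))


# Made-up points that lie exactly on the line t = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ts = [1.0, 3.0, 5.0, 7.0]

print(sum_squared_error(1.0, 2.0, xs, ts))  # 0.0: this line fits perfectly
print(sum_squared_error(0.0, 2.0, xs, ts))  # 4.0: every prediction is off by 1
```

Evaluating `sum_squared_error` over a grid of `(w0, w1)` values would give the kind of bowl-shaped surface that the text describes as a quadratic function of the parameters.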

Minimizing the Loss

The loss function E provides us with an objective measure of predictive error for a specific choice of model parameters. We can thus restate our goal of finding the best (linear) model as finding the values for the model parameters that minimize E.

For linear models, linear regression provides a direct way to compute these optimal model parameters. (See any statistics textbook for details.) However, this analytical approach does not generalize to nonlinear models (which we will get to by the end of this lecture). Even though the solution cannot be calculated explicitly in that case, the problem can still be solved by an iterative numerical technique called gradient descent. It works as follows:

1. Choose some (random) initial values for the model parameters.
2. Calculate the gradient G of the error function with respect to each model parameter.
3. Change the model parameters so that we move a short distance in the direction of the greatest rate of decrease of the error, i.e., in the direction of -G.
4. Repeat steps 2 and 3 until G gets close to zero.
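The four steps can be sketched for the linear model of equation 1 as follows. This is an illustration under stated assumptions, not the procedure used to produce the figures: the loss is taken to be the plain sum of squared errors, the step size `step`, the tolerance `tol` and the iteration cap are arbitrary choices, the data are made up, and the starting point is fixed at zero rather than random so that the run is reproducible.

```python
def gradient(w0, w1, xs, ts):
    """Gradient G = (dE/dw0, dE/dw1) of E = sum_i (t_i - y_i)^2."""
    g0 = g1 = 0.0
    for x, t in zip(xs, ts):
        err = t - (w1 * x + w0)
        g0 += -2.0 * err
        g1 += -2.0 * err * x
    return g0, g1


def gradient_descent(xs, ts, w0=0.0, w1=0.0, step=0.01, tol=1e-6,
                     max_iters=100_000):
    """Follow -G in small steps until both components of G are near zero."""
    for _ in range(max_iters):
        g0, g1 = gradient(w0, w1, xs, ts)        # step 2: compute G
        if abs(g0) < tol and abs(g1) < tol:      # step 4: stop when G ~ 0
            break
        w0 -= step * g0                          # step 3: short move along -G
        w1 -= step * g1
    return w0, w1


# Made-up points on the line t = 2x + 1 (not the car data).
xs = [0.0, 1.0, 2.0, 3.0]
ts = [1.0, 3.0, 5.0, 7.0]
w0, w1 = gradient_descent(xs, ts)
print(round(w0, 3), round(w1, 3))  # 1.0 2.0
```

If `step` is chosen too large the iteration can overshoot and diverge instead of settling; the value used here is small enough for this toy data set.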

How does this work? The gradient of E gives us the direction in which the loss function at the current setting of the w has the steepest slope. In order to decrease E, we take a small step in the opposite direction, -G (Fig. 5).

(Fig. 5)

By repeating this over and over, we move "downhill" in E until we reach a minimum, where G = 0, so that no further progress is possible (Fig. 6).

(Fig. 6)
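For the linear model of equation 1 the gradient can be written out explicitly. The sketch below assumes the sum-squared error as described in words earlier (no constant factor in front of the sum) and introduces a step size $\mu$, a symbol that does not appear in the original text:

```latex
\begin{aligned}
E &= \sum_i (t_i - y_i)^2, \qquad y_i = w_1 x_i + w_0 \\
\frac{\partial E}{\partial w_1} &= -2 \sum_i (t_i - y_i)\, x_i \\
\frac{\partial E}{\partial w_0} &= -2 \sum_i (t_i - y_i) \\
w_k &\leftarrow w_k - \mu \, \frac{\partial E}{\partial w_k}, \qquad k \in \{0, 1\}
\end{aligned}
```

At a minimum both partial derivatives vanish, which is the condition G = 0 stated above.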

Fig. 7 shows the best linear model for our car data, found by this procedure.

(Fig. 7)
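For comparison, the direct (analytical) route that the text mentions for linear models can be sketched with the standard least-squares formulas for a straight line. The function name `fit_line` and the data are illustrative assumptions; the notes themselves refer to a statistics textbook for the details.

```python
def fit_line(xs, ts):
    """Closed-form least-squares fit of t = w1 * x + w0; returns (w0, w1)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_t = sum(ts) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxt = sum((x - mean_x) * (t - mean_t) for x, t in zip(xs, ts))
    w1 = sxt / sxx               # slope
    w0 = mean_t - w1 * mean_x    # intercept
    return w0, w1


# Made-up points on the line t = 2x + 1 (not the car data).
print(fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]))  # (1.0, 2.0)
```

On a linear model, gradient descent should approach the same parameters as this direct computation; the iterative method earns its keep once the model is nonlinear and no such formula exists.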

It's a neural network!

Our linear model of equation 1 can in fact be implemented by the simple neural network shown in Fig. 8. It consists of a bias unit, an input unit, and a linear output unit. The input unit makes external input x (here: the weight of a car) available to the network, while the bias unit always has a constant output of 1. The output unit computes the sum:

$$y_2 = y_1 w_{21} + 1.0\, w_{20} \qquad (3)$$

It is easy to see that this is equivalent to equation 1, with $w_{21}$ implementing the slope of the straight line, and $w_{20}$ its intercept with the y-axis.

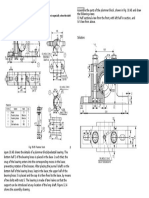

(Fig. 8)
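The equivalence can be checked with a short sketch. The function names and the weight values are assumptions made for this illustration; the unit numbering follows the text, with the bias unit supplying a constant 1 and the input unit passing x through unchanged.

```python
def linear_network(x, w21, w20):
    """Network with a bias unit, an input unit and a linear output unit."""
    y1 = x        # input unit: makes the external input available
    bias = 1.0    # bias unit: constant output of 1
    return y1 * w21 + bias * w20   # output unit: weighted sum (linear unit)


def line(x, w1, w0):
    """Straight-line model of equation 1."""
    return w1 * x + w0


# With w21 as the slope and w20 as the intercept, both give the same value.
for x in [0.0, 0.5, 2.0, -3.0]:
    assert linear_network(x, w21=-1.5, w20=4.0) == line(x, w1=-1.5, w0=4.0)
print(linear_network(2.0, w21=-1.5, w20=4.0))  # 1.0
```

Training this network by adjusting `w21` and `w20` is therefore the same problem as fitting the straight line.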
