Discrete (And Continuous) Optimization WI4 131: November - December, A.D. 2004

Discrete (and Continuous) Optimization

WI4 131

Kees Roos

Technische Universiteit Delft

Faculteit Electrotechniek, Wiskunde en Informatica

Afdeling Informatie, Systemen en Algoritmiek

e-mail: C.Roos@ewi.tudelft.nl

URL: http://www.isa.ewi.tudelft.nl/roos

November - December, A.D. 2004

Course Schedule

1. Formulations (18 pages)

2. Optimality, Relaxation, and Bounds (10 pages)

3. Well-solved Problems (13 pages)

4. Matching and Assignments (10 pages)

5. Dynamic Programming (11 pages)

6. Complexity and Problem Reduction (8 pages)

7. Branch and Bound (17 pages)

8. Cutting Plane Algorithms (21 pages)

9. Strong Valid Inequalities (22 pages)

10. Lagrangian Duality (14 pages)

11. Column Generation Algorithms (16 pages)

12. Heuristic Algorithms (15 pages)

13. From Theory to Solutions (20 pages)

Optimization Group 1

Chapter 2

Optimality, Relaxation, and Bounds

Optimality and Relaxation

(IP) $z = \max \{ c(x) : x \in X \subseteq \mathbb{Z}^n \}$

Basic idea underlying methods for solving (IP): find a lower bound $\underline{z} \le z$ and an upper bound $\overline{z} \ge z$ such that $\underline{z} = z = \overline{z}$.

Practically, this means that any algorithm will find a decreasing sequence

$$\overline{z}_1 > \overline{z}_2 > \dots > \overline{z}_s \ge z$$

of upper bounds, and an increasing sequence

$$\underline{z}_1 < \underline{z}_2 < \dots < \underline{z}_t \le z$$

of lower bounds. The stopping criterion in general takes the form

$$\overline{z}_s - \underline{z}_t \le \epsilon,$$

where $\epsilon$ is some suitably chosen small nonnegative number.

How to obtain Bounds?

Every feasible solution $x \in X$ provides a lower bound $\underline{z} = c(x) \le z$.

This is essentially the only way to obtain lower bounds. For some IPs, it is easy to find a feasible solution (e.g. Assignment, TSP, Knapsack), but for other IPs, finding a feasible solution may be very difficult.

The most important approach for finding upper bounds is by relaxation. The given IP is replaced by a simpler problem whose optimal value is at least as large as $z$. There are two obvious ways to get a relaxation:

(i) Enlarge the feasible set.

(ii) Replace the objective function by a function that has the same or a larger value everywhere.

Definition 1 The problem (RP) $z^R = \max \{ f(x) : x \in T \subseteq \mathbb{Z}^n \}$ is a relaxation of (IP) if $X \subseteq T$ and $f(x) \ge c(x)$ for all $x \in X$.

Proposition 1 If (RP) is a relaxation of (IP) then $z^R \ge z$.

Proof: If $x^*$ is optimal for (IP), then $x^* \in X \subseteq T$ and $z = c(x^*) \le f(x^*)$. As $x^* \in T$, $f(x^*)$ is a lower bound for $z^R$; it follows that $z \le f(x^*) \le z^R$.

Linear Relaxations

Definition 2 For the IP $\max \{ c^T x : x \in X = P \cap \mathbb{Z}^n \}$ with formulation $P$, a linear relaxation is the LO problem $z^{LP} = \max \{ c^T x : x \in P \}$.

Recall that $P = \{ x \in \mathbb{R}^n_+ : Ax \le b \}$. As $P \cap \mathbb{Z}^n \subseteq P$ and the objective is unchanged, this is clearly a relaxation. Not surprisingly, better formulations give tighter (upper) bounds.

Proposition 2 If $P_1$ and $P_2$ are two formulations for the feasible set $X$ in an IP, and $P_1 \subseteq P_2$, then the respective upper bounds $z_i^{LP}$ ($i = 1, 2$) satisfy $z_1^{LP} \le z_2^{LP}$.

Sometimes the relaxation RP immediately solves the IP.

Proposition 3 If the relaxation (RP) is infeasible, then so is (IP). On the other hand, if (RP) has an optimal solution $x^*$ and $x^* \in X$ and $c(x^*) = f(x^*)$, then $x^*$ is an optimal solution of (IP).

Proof: If (RP) is infeasible then $T = \emptyset$. Since $X \subseteq T$, also $X = \emptyset$. For the second part of the proposition: as $x^* \in X$, $z \ge c(x^*) = f(x^*) = z^R$, and since $z \le z^R$ we get $c(x^*) = z$.

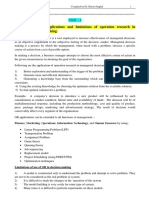

Example

$$z = \max \{ 4x - y : 7x - 2y \le 14,\ y \le 3,\ 2x - 2y \le 3,\ x, y \ge 0,\ x, y \text{ integer} \}$$

The figure illustrates the situation: the feasible region in the $(x, y)$-plane, together with the objective direction $c$.

The figure makes clear that $x = 2$, $y = 1$ is the optimal solution, with 7 as objective value. The optimal solution of the LP relaxation is $x = \frac{20}{7}$, $y = 3$ with $\frac{59}{7}$ as objective value. This is an upper bound. Since the objective value is an integer at every integer point, rounding this bound down to an integer gives 8 as the linear relaxation bound.
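The numbers in this example can be checked with a few lines of Python. The sketch below enumerates the integer feasible points by brute force and evaluates the objective at the LP optimum quoted in the text. It only checks that the quoted LP point is feasible and does not solve the LP itself; the function names are illustrative.

```python
from fractions import Fraction
from math import floor

def feasible(x, y):
    """Constraints of the example; works for integer and fractional points."""
    return (7 * x - 2 * y <= 14 and y <= 3 and 2 * x - 2 * y <= 3
            and x >= 0 and y >= 0)

def objective(x, y):
    return 4 * x - y

# The constraints imply x <= 2 and y <= 3, so a small grid covers
# every integer feasible point.
integer_points = [(x, y) for x in range(8) for y in range(8) if feasible(x, y)]
x_ip, y_ip = max(integer_points, key=lambda p: objective(*p))
z_ip = objective(x_ip, y_ip)

# LP optimum as stated in the text (taken as given, only checked for feasibility).
x_lp, y_lp = Fraction(20, 7), Fraction(3)
z_lp = objective(x_lp, y_lp)
lp_bound = floor(z_lp)  # rounding down is valid because the objective is integral

print((x_ip, y_ip), z_ip, z_lp, lp_bound)
```

The gap between the integer optimum and the rounded LP bound is what a branch-and-bound or cutting-plane method would still have to close.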

Combinatorial Relaxations

Whenever the relaxation is a combinatorial optimization problem, we speak of a combinatorial

relaxation. Below follow some examples.

TSP: The TSP, on a digraph $D = (V, A)$, amounts to finding a (salesman, or Hamiltonian) tour $T$ with minimal length in terms of given arc weights $c_{ij}$, $(i, j) \in A$. We have seen that a tour is an assignment without subtours. So we have

$$z^{TSP} = \min \Big\{ \sum_{(i,j) \in T} c_{ij} : T \text{ is a tour} \Big\} \ge \min \Big\{ \sum_{(i,j) \in T} c_{ij} : T \text{ is an assignment} \Big\}.$$

Symmetric TSP: The STSP, on a graph $G = (V, E)$, amounts to finding a tour $T$ with minimal length in terms of given edge weights $c_e$, $e \in E$.

Definition 3 A 1-tree is a subgraph consisting of two edges adjacent to node 1, plus the edges of a tree on the remaining nodes $\{2, \dots, n\}$.

Observe that a tour consists of two edges adjacent to node 1, plus the edges of a path through the remaining nodes. Since a path is a special case of a tree we have

$$z^{STSP} = \min \Big\{ \sum_{e \in T} c_e : T \text{ is a tour} \Big\} \ge \min \Big\{ \sum_{e \in T} c_e : T \text{ is a 1-tree} \Big\}.$$

Combinatorial Relaxations (cont.)

Quadratic 0-1 Problem: This is the (in general hard!) problem of maximizing a quadratic function over the unit cube:

$$z = \max \Big\{ \sum_{1 \le i < j \le n} q_{ij} x_i x_j + \sum_{1 \le i \le n} p_i x_i : x \in \{0, 1\}^n \Big\}$$

Replacing all terms $q_{ij} x_i x_j$ with $q_{ij} < 0$ by 0, the objective function does not decrease. So we have the relaxation

$$z^R = \max \Big\{ \sum_{1 \le i < j \le n} \max \{ q_{ij}, 0 \}\, x_i x_j + \sum_{1 \le i \le n} p_i x_i : x \in \{0, 1\}^n \Big\}$$

This problem is also a quadratic 0-1 problem, but now the quadratic terms have nonnegative coefficients. Such a problem can be solved by solving a series of (easy!) maximum flow problems (see Chapter 9).

Knapsack Problem: The set underlying this problem is $X = \Big\{ x \in \mathbb{Z}^n_+ : \sum_{j=1}^n a_j x_j \le b \Big\}$.

This set can be extended to $X^R = \Big\{ x \in \mathbb{Z}^n_+ : \sum_{j=1}^n \lfloor a_j \rfloor x_j \le b \Big\}$, where $\lfloor a \rfloor$ denotes the largest integer less than or equal to $a$.

Lagrangian Relaxations

Consider the IP

(IP) $z = \max \{ c^T x : Ax \le b,\ x \in X \subseteq \mathbb{Z}^n \}$.

If this problem is too difficult to solve directly, one possible way to proceed is to drop the constraint $Ax \le b$. This enlarges the feasible region and so yields a relaxation of (IP).

An important extension of this idea is not just to drop complicating constraints, but then to add them into the objective function with Lagrange multipliers.

Proposition 4 Suppose that (IP) has an optimal solution, and let $u \in \mathbb{R}^m$. Define

$$z(u) = \max \{ c^T x + u^T (b - Ax) : x \in X \}.$$

Then $z(u) \ge z$ for all $u \ge 0$.

Proof: Let $x^*$ be optimal for (IP). Then $c^T x^* = z$, $Ax^* \le b$ and $x^* \in X$. Since $u \ge 0$, it follows that $c^T x^* + u^T (b - Ax^*) \ge c^T x^* = z$. As $x^* \in X$, the left-hand side is at most $z(u)$, so $z(u) \ge z$.

The main challenge is, of course, to find Lagrange multipliers that minimize $z(u)$. The best Lagrange multipliers are found by solving (if we can!)

$$\min_{u \ge 0} z(u) = \min_{u \ge 0} \max_{x \in X} \{ c^T x + u^T (b - Ax) \}.$$
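To make the Lagrangian bound concrete, here is a minimal Python sketch on a tiny instance that is purely hypothetical (it does not come from the course): a single complicating constraint $a^T x \le b$ over $X = \{0,1\}^3$. Both $z$ and $z(u)$ are computed by plain enumeration, so the sketch only illustrates Proposition 4 and is not a practical method.

```python
from itertools import product

# Hypothetical instance: max c^T x  s.t.  a^T x <= b (complicating), x in {0,1}^3.
c = (5, 4, 3)
a = (2, 3, 1)
b = 3
X = list(product((0, 1), repeat=3))  # the "easy" set X

def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))

def z_ip():
    """Optimal value of (IP): enumerate X and keep points with a^T x <= b."""
    return max(dot(c, x) for x in X if dot(a, x) <= b)

def z_lagr(u):
    """z(u) = max over X of c^T x + u (b - a^T x), the constraint moved into the objective."""
    return max(dot(c, x) + u * (b - dot(a, x)) for x in X)

z = z_ip()
bounds = {u: z_lagr(u) for u in range(5)}  # a few nonnegative multipliers
print(z, bounds)
```

On this instance every multiplier $u \ge 0$ that was tried gives an upper bound, and $u = 2$ happens to give a bound equal to $z$; in general the best Lagrangian bound need not be tight.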

LO Duality (recapitulation)

Consider the LO problem (P) $z = \max \{ c^T x : Ax \le b,\ x \ge 0 \}$.

Its dual problem is (D) $w = \min \{ b^T y : A^T y \ge c,\ y \ge 0 \}$.

Proposition 5 (Weak duality) If $x$ is feasible for (P) and $y$ is feasible for (D) then $c^T x \le b^T y$.

Proof: $c^T x \le (A^T y)^T x = y^T A x \le y^T b = b^T y$.

Proposition 6 (Strong duality) Let $x$ be feasible for (P) and $y$ feasible for (D). Then $x$ and $y$ are optimal if and only if $c^T x = b^T y$.

Scheme for dualizing:

| Primal problem (P) | Dual problem (D) |
|---|---|
| $\min c^T x$ | $\max b^T y$ |
| equality constraint | free variable |
| inequality constraint $\ge$ | variable $\ge 0$ |
| inequality constraint $\le$ | variable $\le 0$ |
| free variable | equality constraint |
| variable $\ge 0$ | inequality constraint $\le$ |
| variable $\le 0$ | inequality constraint $\ge$ |

Duality

In the case of Linear Optimization every feasible solution of the dual problem gives a bound for the optimal value of the primal problem. It is natural to ask whether it is possible to find a dual problem for an IP.

Definition 4 The two problems

(IP) $z = \max \{ c(x) : x \in X \}$ ; (D) $w = \min \{ \omega(u) : u \in U \}$

form a (weak)-dual pair if $c(x) \le \omega(u)$ for all $x \in X$ and all $u \in U$. When moreover $w = z$, they form a strong-dual pair.

N.B. Any feasible solution of a dual problem yields an upper bound for IP. By contrast, for a relaxation only its optimal solution yields an upper bound.

Proposition 7 The IP $z = \max \{ c^T x : Ax \le b,\ x \in \mathbb{Z}^n_+ \}$ and the IP $w^{LP} = \min \{ u^T b : A^T u \ge c,\ u \in \mathbb{Z}^m_+ \}$ form a dual pair.

Proposition 8 Suppose that (IP) and (D) are a dual pair. If (D) is unbounded then (IP) is infeasible. On the other hand, if $x^* \in X$ and $u^* \in U$ satisfy $c(x^*) = \omega(u^*)$, then $x^*$ is an optimal solution of (IP) and $u^*$ is an optimal solution of (D).

A Dual for the Matching Problem

Given a graph $G = (V, E)$, a matching $M \subseteq E$ is a set of (vertex-)disjoint edges. A covering by nodes is a set $R \subseteq V$ of nodes such that every edge has at least one end point in $R$.

In the accompanying graph the red edges form a matching, and the green nodes form a covering by nodes.

Proposition 9 The problem of finding a maximum cardinality matching:

$$\max_{M \subseteq E} \{ |M| : M \text{ is a matching} \}$$

and the problem of finding a minimum cardinality covering by nodes:

$$\min_{R \subseteq V} \{ |R| : R \text{ is a covering by nodes} \}$$

form a weak-dual pair.

Proof: If $M$ is a matching then the end nodes of its edges are distinct, so their number is $2k$, where $k = |M|$. Any covering by nodes $R$ must contain at least one of the end nodes of each edge in $M$. Hence $|R| \ge k$. Therefore, $|R| \ge |M|$.

Unfortunately, this duality is not strong! The given matching is maximum and the covering by nodes is minimum, as can easily be verified, yet their cardinalities differ, so the above graph proves this.
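Since the figure is not reproduced here, the lack of strong duality can also be seen on a triangle, which is an illustrative graph chosen for this sketch and not necessarily the one from the slide. The Python code below computes both optimal values by brute force; the helper names are assumptions.

```python
from itertools import combinations

def is_matching(M):
    """Edges are pairwise vertex-disjoint iff no end node repeats."""
    nodes = [v for e in M for v in e]
    return len(nodes) == len(set(nodes))

def is_cover(R, E):
    """Every edge has at least one end point in R."""
    return all(i in R or j in R for i, j in E)

def max_matching_size(E):
    return max(k for k in range(len(E) + 1)
               for M in combinations(E, k) if is_matching(M))

def min_cover_size(V, E):
    return min(k for k in range(len(V) + 1)
               for R in combinations(V, k) if is_cover(set(R), E))

# Triangle: any two edges share a node, but one node never covers all three edges.
V = (1, 2, 3)
E = [(1, 2), (2, 3), (1, 3)]
print(max_matching_size(E), min_cover_size(V, E))
```

On the triangle the maximum matching has one edge while the minimum covering needs two nodes, so $z < w$ for this weak-dual pair.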

A Dual for the Matching Problem (cont.)

The former result can also be obtained from LO duality.

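Definition 5 The node-edge matrix of a graph $G = (V, E)$ is an $n = |V|$ by $m = |E|$ matrix $A$ with $A_{i,e} = 1$ when node $i$ is incident with edge $e$, and $A_{i,e} = 0$ otherwise.

With the help of the node-edge matrix $A$ of $G$ the matching problem can be formulated as the following IP:

$$z = \max \{ \mathbf{1}^T x : Ax \le \mathbf{1},\ x \in \mathbb{Z}^m_+ \}$$

and the covering by nodes problem as:

$$w = \min \{ \mathbf{1}^T y : A^T y \ge \mathbf{1},\ y \in \mathbb{Z}^n_+ \}.$$

Using LO duality we may write

$$\begin{aligned}
z &= \max \{ \mathbf{1}^T x : Ax \le \mathbf{1},\ x \in \mathbb{Z}^m_+ \} \\
  &\le \max \{ \mathbf{1}^T x : Ax \le \mathbf{1},\ x \ge 0 \} \\
  &= \min \{ \mathbf{1}^T y : A^T y \ge \mathbf{1},\ y \ge 0 \} \\
  &\le \min \{ \mathbf{1}^T y : A^T y \ge \mathbf{1},\ y \in \mathbb{Z}^n_+ \} = w.
\end{aligned}$$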

Primal Bounds: Greedy Search

The idea of a greedy heuristic is to construct a solution from scratch (the empty set), choosing at each step the item bringing the best immediate reward. We give some examples.

0-1 Knapsack problem:

$$\begin{aligned}
z = \max\ & 12x_1 + 8x_2 + 17x_3 + 11x_4 + 6x_5 + 2x_6 + 2x_7 \\
& 4x_1 + 3x_2 + 7x_3 + 5x_4 + 3x_5 + 2x_6 + 3x_7 \le 9 \\
& x \in \{0, 1\}^7
\end{aligned}$$

Greedy Solution: Order the variables so that their profit per unit of resource, $c_i / a_i$, is nonincreasing. This is already done. Variables with a low index are now more attractive than variables with higher indices. So we proceed as shown in the table.

| var. | $c_i / a_i$ | value | use of resource | resource remaining |
|---|---|---|---|---|
| $x_1$ | $3$ | 1 | 4 | 5 |
| $x_2$ | $\frac{8}{3}$ | 1 | 3 | 2 |
| $x_3$ | $\frac{17}{7}$ | 0 | 7 | 2 |
| $x_4$ | $\frac{11}{5}$ | 0 | 5 | 2 |
| $x_5$ | $2$ | 0 | 3 | 2 |
| $x_6$ | $1$ | 1 | 2 | 0 |
| $x_7$ | $\frac{2}{3}$ | 0 | 3 | 0 |

The resulting solution is $x^G = (1, 1, 0, 0, 0, 1, 0)$ with objective value $z^G = 22$. All we know is that 22 is a lower bound for the optimal value. Observe that $x = (1, 0, 0, 1, 0, 0, 0)$ is feasible with the higher value 23.
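The greedy rule for this knapsack instance can be sketched in Python as follows. The function name and the use of exact fractions for the ratios are choices made for this illustration.

```python
from fractions import Fraction

def greedy_knapsack(c, a, b):
    """Take items in order of nonincreasing profit per unit of resource, if they still fit."""
    order = sorted(range(len(c)), key=lambda j: Fraction(c[j], a[j]), reverse=True)
    x = [0] * len(c)
    remaining = b
    for j in order:
        if a[j] <= remaining:
            x[j] = 1
            remaining -= a[j]
    value = sum(cj * xj for cj, xj in zip(c, x))
    return x, value

c = [12, 8, 17, 11, 6, 2, 2]   # profits from the example
a = [4, 3, 7, 5, 3, 2, 3]      # resource use from the example
b = 9                          # capacity

x_greedy, z_greedy = greedy_knapsack(c, a, b)
print(x_greedy, z_greedy)

# The feasible alternative mentioned in the text.
x_alt = [1, 0, 0, 1, 0, 0, 0]
z_alt = sum(cj * xj for cj, xj in zip(c, x_alt))
w_alt = sum(aj * xj for aj, xj in zip(a, x_alt))
```

The greedy value is only a lower bound: the alternative solution uses the full capacity and has a higher value.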

Primal Bounds: Greedy Search (cont.)

Symmetric TSP: Consider an instance with the distance matrix:

$$\begin{pmatrix}
- & 9 & 2 & 8 & 12 & 11 \\
  & - & 7 & 19 & 10 & 32 \\
  &   & - & 29 & 18 & 6 \\
  &   &   & - & 24 & 3 \\
  &   &   &   & - & 19 \\
  &   &   &   &   & -
\end{pmatrix}$$

Greedy Solution: Order the edges according to nondecreasing cost, and seek to use them in this order. So we proceed as follows to construct the heuristic tour.

| step | arc | length | decision |
|---|---|---|---|
| 1 | (1,3) | 2 | accept |
| 2 | (4,6) | 3 | accept |
| 3 | (3,6) | 6 | accept |
| 4 | (2,3) | 7 | conflict in node 3 |
| 5 | (1,4) | 8 | creates subtour |
| 6 | (1,2) | 9 | accept |
| 7 | (2,5) | 10 | accept |
| 8 | (4,5) | 24 | forced to accept |

[Figures: the heuristic tour and a better tour on the nodes 1 to 6.]

The length of the created tour is $z^G = 54$. The tour $1 \to 4 \to 6 \to 5 \to 2 \to 3 \to 1$ is shorter: it has length 49.
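The greedy edge selection on this instance can be reproduced with the Python sketch below. It is one possible reading of the rule, under these assumptions: an edge is rejected if an end node already has degree 2 or if it would close a cycle before all nodes are connected, and ties in cost are broken by node numbers. The union-find helper is an implementation choice, not part of the slides.

```python
COST = {
    (1, 2): 9, (1, 3): 2, (1, 4): 8, (1, 5): 12, (1, 6): 11,
    (2, 3): 7, (2, 4): 19, (2, 5): 10, (2, 6): 32,
    (3, 4): 29, (3, 5): 18, (3, 6): 6,
    (4, 5): 24, (4, 6): 3,
    (5, 6): 19,
}

def greedy_tour(n, cost):
    """Scan edges by nondecreasing cost; keep an edge unless it breaks tour structure."""
    degree = {v: 0 for v in range(1, n + 1)}
    parent = {v: v for v in range(1, n + 1)}  # union-find over path fragments

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    chosen = []
    for i, j in sorted(cost, key=lambda e: (cost[e], e)):
        if degree[i] == 2 or degree[j] == 2:
            continue  # conflict in a node
        if find(i) == find(j) and len(chosen) < n - 1:
            continue  # would create a subtour
        chosen.append((i, j))
        degree[i] += 1
        degree[j] += 1
        parent[find(i)] = find(j)
        if len(chosen) == n:
            break
    return chosen

def tour_length(nodes, cost):
    """Length of the closed tour visiting `nodes` in the given order."""
    pairs = zip(nodes, nodes[1:] + nodes[:1])
    return sum(cost[(min(i, j), max(i, j))] for i, j in pairs)

tour_edges = greedy_tour(6, COST)
greedy_length = sum(COST[e] for e in tour_edges)
better_length = tour_length([1, 4, 6, 5, 2, 3], COST)
print(tour_edges, greedy_length, better_length)
```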

Primal Bounds: Local Search

Local search methods assume that a feasible solution is known. It is called the incumbent.

The idea of a local search heuristic is to define a neighborhood of solutions close to the

incumbent. Then the best solution in this neighborhood is found. If it is better than the

incumbent, it replaces it, and the procedure is repeated. Otherwise, the incumbent is locally

optimal with respect to the neighborhood, and the heuristic terminates.

Below we give two examples.

Primal Bounds: Local Search (cont.)

Uncapacitated Facility Location: Consider an instance with $m = 6$ clients and $n = 4$ depots, and costs as shown below:

$$(c_{ij}) = \begin{pmatrix}
6 & 2 & 3 & 4 \\
1 & 9 & 4 & 11 \\
15 & 2 & 6 & 3 \\
9 & 11 & 4 & 8 \\
7 & 23 & 2 & 9 \\
4 & 3 & 1 & 5
\end{pmatrix} \quad \text{and} \quad (f_j) = (21, 16, 11, 24)$$

Let $N = \{1, 2, 3, 4\}$ denote the set of depots, and $S$ the set of open depots. Let the incumbent be the solution with depots 1 and 2 open, so $S = \{1, 2\}$. Each client is served by the open depot with cheapest cost for the client. So the costs for the incumbent are $(2 + 1 + 2 + 9 + 7 + 3) + (21 + 16) = 61$.

A possible neighborhood $Q(S)$ of $S$ is the set of all solutions obtained from $S$ by adding or removing a single depot:

$$Q(S) = \{ T \subseteq N : T = S \cup \{j\} \text{ for } j \notin S \text{ or } T = S \setminus \{i\} \text{ for } i \in S \}.$$

In the current example: $Q(S) = \{ \{1\}, \{2\}, \{1, 2, 3\}, \{1, 2, 4\} \}$. A simple computation makes clear that the costs for these 4 solutions are 63, 66, 60 and 84, respectively. So $S = \{1, 2, 3\}$ is the next incumbent.

The new neighborhood becomes $Q(S) = \{ \{1, 2\}, \{1, 3\}, \{2, 3\}, \{1, 2, 3, 4\} \}$, with minimal costs 42 for $S = \{2, 3\}$, which is the new incumbent.

The new neighborhood becomes $Q(S) = \{ \{2\}, \{3\}, \{1, 2, 3\}, \{2, 3, 4\} \}$, with minimal costs 31 for $S = \{3\}$, the new incumbent.

The new neighborhood becomes $Q(S) = \{ \{1, 3\}, \{2, 3\}, \{3, 4\}, \emptyset \}$, with all costs $> 31$. So $S = \{3\}$ is a locally optimal solution.
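The sequence of incumbents above can be reproduced with a short Python sketch of the add/remove local search. Treating the empty set of depots as having infinite cost is an assumption made here, since no client can then be served; the function names are illustrative.

```python
import math

C = [  # C[i][j-1]: cost of serving client i from depot j
    [6, 2, 3, 4],
    [1, 9, 4, 11],
    [15, 2, 6, 3],
    [9, 11, 4, 8],
    [7, 23, 2, 9],
    [4, 3, 1, 5],
]
F = [21, 16, 11, 24]        # fixed cost of opening depot j
N = frozenset({1, 2, 3, 4})

def cost(S):
    """Fixed costs of the open depots plus the cheapest open depot for each client."""
    if not S:
        return math.inf     # assumption: with no depot open, nobody can be served
    service = sum(min(row[j - 1] for j in S) for row in C)
    return service + sum(F[j - 1] for j in S)

def local_search(S):
    """Best-improvement search over Q(S): add or remove a single depot."""
    S = frozenset(S)
    history = [(S, cost(S))]
    while True:
        best = min((S ^ {j} for j in sorted(N)), key=cost)  # toggling j adds or removes it
        if cost(best) >= cost(S):
            return S, history
        S = best
        history.append((S, cost(S)))

S_final, history = local_search({1, 2})
print(sorted(S_final), [value for _, value in history])
```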

Primal Bounds: Local Search (cont.)

Graph Equipartition Problem: Given a graph $G = (V, E)$ and $n = |V|$, the problem is to find a subset $S \subseteq V$ with $|S| = \lfloor \frac{n}{2} \rfloor$ for which the number $c(S)$ of edges in the cut set $\delta(S, V \setminus S)$ is minimized, where

$$\delta(S, V \setminus S) = \{ (i, j) \in E : i \in S,\ j \notin S \}.$$

In this example all feasible sets have the same size, $\lfloor \frac{n}{2} \rfloor$. A natural neighborhood of a feasible set $S \subseteq V$ therefore consists of all subsets of nodes obtained by replacing one element in $S$ by one element not in $S$:

$$Q(S) = \{ T \subseteq V : |T \setminus S| = |S \setminus T| = 1 \}.$$

[Figure: example graph on the nodes 1 to 6.]

Example: In the graph shown in the figure we start with $S = \{1, 2, 3\}$ for which $c(S) = 6$. Then

$$Q(S) = \{ \{1, 2, 4\}, \{1, 2, 5\}, \{1, 2, 6\}, \{1, 3, 4\}, \{1, 3, 5\}, \{1, 3, 6\}, \{2, 3, 4\}, \{2, 3, 5\}, \{2, 3, 6\} \},$$

for which $c(T) = 6, 5, 4, 4, 5, 6, 5, 2, 5$ respectively. The new incumbent is $S = \{2, 3, 5\}$ with $c(S) = 2$. $Q(S)$ does not contain a better solution, as may be easily verified, so $S = \{2, 3, 5\}$ is locally optimal.
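Because the edges of the example graph are not recoverable from the text, the swap neighborhood is illustrated below on a hypothetical graph: two triangles joined by a single edge. The Python sketch applies best-improvement local search with the neighborhood $Q(S)$ defined above; all names and the graph itself are assumptions.

```python
from itertools import product

# Hypothetical graph (not the one from the slide): triangles {1,2,3} and {4,5,6},
# joined by the edge (3,4).
V = frozenset(range(1, 7))
EDGES = [(1, 2), (2, 3), (1, 3), (4, 5), (5, 6), (4, 6), (3, 4)]

def cut_size(S, edges):
    """Number of edges with exactly one end point in S."""
    return sum((i in S) != (j in S) for i, j in edges)

def swap_neighbors(S, V):
    """All sets obtained by replacing one element of S by one element outside S."""
    for i, j in product(sorted(S), sorted(V - S)):
        yield (S - {i}) | {j}

def local_search(S, V, edges):
    S = frozenset(S)
    while True:
        best = min(swap_neighbors(S, V), key=lambda T: cut_size(T, edges))
        if cut_size(best, edges) >= cut_size(S, edges):
            return S  # no neighbor is strictly better: locally optimal
        S = best

start = frozenset({1, 2, 4})
S_local = local_search(start, V, EDGES)
print(cut_size(start, EDGES), sorted(S_local), cut_size(S_local, EDGES))
```

On this graph a single swap already moves from a cut of size 5 to one triangle against the other, which cuts only the joining edge.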
