AUDIO WATERMARKING IN THE FFT DOMAIN USING PERCEPTUAL MASKING

M. V. Rama Krishna

ECE Department

IIT Guwahati - 781039

ramakrishna mv1@yahoo.com

D. Ghosh

ECE Department

IIT Guwahati - 781039

ghosh@iitg.ernet.in

ABSTRACT

This paper presents a new oblivious technique for embedding a watermark into digital audio signals, based on the patchwork algorithm in the FFT domain. The proposed watermarking scheme exploits the psychoacoustic model of MPEG audio coding to ensure that the watermark does not affect the subjective quality of the original audio. The audio is watermarked by modifying selected FFT coefficients of an audio frame under a constraint specified by the psychoacoustic model. Experimental results show that our scheme introduces no audible distortion and is robust against some common signal processing attacks.

1. INTRODUCTION

The outstanding progress in digital technology has not only made reproduction and retransmission of digital data easy but has also facilitated unauthorized data manipulation. Consequently, the need arises for copyright protection of digital data (audio, image and video) against unauthorized recording attempts. Recently, there has been considerable interest in audio watermarking techniques, stimulated largely by the rapid progress in audio compression algorithms and the wide use of the Internet for distributing compressed music across the globe. A few of the earliest audio watermarking techniques are reported in [1]. They include approaches such as phase coding, echo coding and the spread spectrum technique. In the phase coding technique, the watermark is embedded by modifying the phase values of the Fourier transform coefficients of audio segments. In the other two approaches, the watermark is embedded by modifying the cepstrum at a known location using multiple decaying echoes or spread spectrum noise. Another audio watermarking technique is proposed in [2], where Fourier transform coefficients over the middle frequency bands are replaced with spectral components of the watermark data. In [3], the watermark for an audio signal is generated by modifying the least significant bit of each sample. The methods in [4, 5] exploit the characteristics of the human auditory system to guarantee that the embedded watermark is imperceptible. However, the disadvantage of these schemes is that the original audio signal is required in the watermark detection process, i.e., the algorithms are non-oblivious. Audio watermarking using the patchwork algorithm is developed in [6, 7]. This algorithm is based on statistical methods in a transform domain, e.g., DCT or FFT. The patchwork algorithm has the advantage that it satisfies the security constraint. However, the watermark is not guaranteed to be inaudible. Furthermore, robustness is not maximized, because the amount of modification made to embed the watermark is estimated and is not necessarily the maximum amount possible.

In this paper, we present an oblivious audio watermarking algorithm in which the previously proposed patchwork algorithm in the FFT domain [6] is modified by incorporating the concept of perceptual masking proposed in [4]. Perceptual masking is based on the characteristics of the human auditory system; hence, the watermark embedded by our proposed algorithm is guaranteed to be inaudible. As the perceptual characteristics of individual audio signals vary, the magnitude of the modification made to each coefficient adapts to, and is highly dependent on, the audio being watermarked. This is described in the sections that follow.

2. THE PATCHWORK AND MASKING MODELS

2.1. Patchwork algorithm

The patchwork algorithm artificially modifies the difference (patch value) between the means of samples in two randomly chosen subsets called patches. The modification depends on the watermark data to be embedded and is detected with high probability by comparing the observed patch value with the expected one.

The two major steps in the patchwork algorithm are: (1) choose two patches A and B pseudo-randomly, and (2) for a watermark bit equal to 1, add a small constant value d to the samples of patch A and subtract the same value d from the samples of patch B, i.e.,

$$a_i^{*} = a_i + d, \qquad b_i^{*} = b_i - d \qquad (1)$$

where $a_i$, $b_i$ are sample values of the patches A and B, respectively, and $a_i^{*}$, $b_i^{*}$ are the modified samples. The mean difference of the watermarked patches is hence given as

$$e = \frac{1}{n}\sum_{i=1}^{n}\left(a_i^{*} - b_i^{*}\right) = \bar{a}^{*} - \bar{b}^{*} = (\bar{a} - \bar{b}) + 2d \qquad (2)$$

where n is the patch size, $\bar{a}$, $\bar{b}$ are the original sample means of the patches and $\bar{a}^{*}$, $\bar{b}^{*}$ are the modified sample means. For a watermark bit equal to 0, d is subtracted in patch A and added in patch B, resulting in $e = (\bar{a} - \bar{b}) - 2d$. Thus, the mean difference (patch value) of a watermarked frame differs from that of the original frame by 2d. The detection process starts with the subtraction of sample values between the two patches and then compares the observed mean difference with the expected one.
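The two steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the paper: the helper names (`choose_patches`, `embed_bit`, `patch_value`, `detect_bit`), the integer `key` used as the seed, and the way the 2n indices are split into patches are all assumptions made for the example.

```python
import random


def choose_patches(num_samples, n, key):
    """Draw 2n distinct sample indices from a key-seeded generator
    and split them into patch A and patch B (assumed split)."""
    rng = random.Random(key)
    idx = rng.sample(range(num_samples), 2 * n)
    return idx[:n], idx[n:]


def embed_bit(samples, bit, d, n, key):
    """Eq. (1): add d to patch A and subtract d from patch B for bit 1;
    the signs are swapped for bit 0. Returns a modified copy."""
    patch_a, patch_b = choose_patches(len(samples), n, key)
    sign = 1.0 if bit == 1 else -1.0
    out = list(samples)
    for i in patch_a:
        out[i] += sign * d
    for i in patch_b:
        out[i] -= sign * d
    return out


def patch_value(samples, n, key):
    """Mean of patch A minus mean of patch B."""
    patch_a, patch_b = choose_patches(len(samples), n, key)
    mean_a = sum(samples[i] for i in patch_a) / n
    mean_b = sum(samples[i] for i in patch_b) / n
    return mean_a - mean_b


def detect_bit(samples, n, key):
    """Decide 1 if the observed patch value is positive, else 0."""
    return 1 if patch_value(samples, n, key) > 0 else 0
```

Embedding a bit shifts the patch value by +2d or -2d, as in Eq. (2), and only the key is needed to recompute the patches at the detector, which is what makes the scheme oblivious.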

2.2. Masking model

The performance of the patchwork algorithm depends on the amount of modification d, which in turn affects inaudibility. Increasing d reduces the probability of false detection, but a high value of d adversely affects the inaudibility requirement. Hence, d must be chosen as a trade-off between inaudibility and the probability of false detection. In our work, the optimum value of d is selected by considering the psychoacoustic model of MPEG audio coding [8]. This model gives the maximum amount of modification that is possible for every sample without any significant degradation in the subjective audio quality. The underlying concept is audio masking, by which a faint but audible signal becomes inaudible in the presence of another, stronger signal. The masking effect depends on the spectral and temporal characteristics of both the masked signal and the masker. Our procedure uses frequency masking, which refers to masking between frequency components of the audio signal. If two signals that occur simultaneously are close together in frequency, the stronger masking signal will make the weaker signal inaudible; the low-level signal will not be audible if it is below some threshold. The masking threshold may be measured by using the MPEG audio psychoacoustic model and is the limit for maximum modification while keeping the perceptual audio quality high.
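The dependence of the detection error on d can be illustrated with a small Monte Carlo sketch. It is not taken from the paper: it assumes that the patch samples are independent, zero-mean, unit-variance Gaussian values, uses a patch size of n = 30, and the function name `error_rate` is hypothetical.

```python
import random


def error_rate(d, n=30, trials=2000, seed=1):
    """Fraction of trials in which a bit 1 embedded with offset d
    is read back incorrectly (patch value not positive)."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        # Patch A and patch B after embedding a 1 with offset d.
        a = [rng.gauss(0.0, 1.0) + d for _ in range(n)]
        b = [rng.gauss(0.0, 1.0) - d for _ in range(n)]
        e = sum(a) / n - sum(b) / n
        if e <= 0:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    for d in (0.0, 0.1, 0.3, 1.0):
        print(f"d = {d:.1f}: error rate = {error_rate(d):.3f}")
```

Under these assumptions the error rate is close to one half for d = 0 and falls quickly as d grows, which is why the largest d that the masking threshold allows is attractive.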

3. PROPOSED WATERMARK EMBEDDING AND DETECTION ALGORITHM

3.1. Watermark embedding

In the embedding process, we repeatedly apply an em-

bedding operation on short segments of the audio sig-

nal. Each one of these segments is called a frame.

Let, the size of each frame be N. In this work, we

use binary watermarks w

j

i.e., w

j

= 0 or 1. The audio

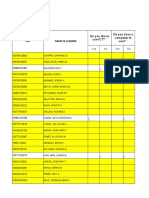

watermark-embedding scheme is shown in gure 1 and

is described below.

Step 1. Map the secret key to the seed of a random

number generator. Generate an index set I = {I

1

, .., I

2n

},

2n<N/2, whose elements are pseudo-randomly selected

index values in the range [2, N/2]. The choice of N/2 is

due to the Hermitian symmetry of FFT for real valued

signals.

Step 2. Generate the binary watermark sequence {w

j

}

of length equal to the number of frames to be water-

marked; j denotes the frame number.

Step 3. Let, for an input audio frame, the set S =

{S

1

, S

2

, ....S

N

} be the FFT coefcients whose subscripts

denote frequency range from the lowest to the highest

FFT

Psycho Achostic

Model

Algorithm

Patch Work

IFFT

Watermarked

Audio Frame M

Sequence

PN(1, 1)

FFT

Y

P

Input

S

Audio Frame

Figure 1: Watermark embedding procedure

frequencies. Dene patches A and B as

A = {a

i

| a

i

= S

I

2i1

, i = 1, ....n}

B = {b

i

| b

i

= S

I

2i

, i = 1, ......n} (3)

Step 4. Calculate the masking threshold of the current audio frame from the set S using the psychoacoustic model. This gives the frequency mask $M = \{M_k\}$, $k = 1, 2, \ldots, N$.

Step 5. Generate a pseudo-random noise sequence (values +1 or -1) of length N and apply the FFT to it. Let the result be $Y = \{Y_k\}$.

Step 6. Use the mask M to weight the FFT coefficients of the noise-like sequence, that is,

$$P = \{P_k \mid P_k = M_k Y_k\} \qquad (4)$$

Step 7. Define C and D as two subsets of P, where $c_i = P_{I_{2i-1}}$ and $d_i = P_{I_{2i}}$, $i = 1, \ldots, n$.

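Step 8. Embed the watermark bit $w_j$ as follows.

If $w_j = 1$,

$$a_i^{*} = a_i + \alpha\,|\mathrm{Re}(c_i)|, \qquad b_i^{*} = b_i - \alpha\,|\mathrm{Re}(d_i)| \qquad (5)$$

If $w_j = 0$,

$$a_i^{*} = a_i - \alpha\,|\mathrm{Re}(c_i)|, \qquad b_i^{*} = b_i + \alpha\,|\mathrm{Re}(d_i)| \qquad (6)$$

where $\alpha$ is the watermark strength parameter.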

Step 9. Define new patches A and B with $a_i = S_{N/2+I_{2i-1}}$ and $b_i = S_{N/2+I_{2i}}$, $i = 1, \ldots, n$. Also, define C and D as $c_i = P_{N/2+I_{2i-1}}$ and $d_i = P_{N/2+I_{2i}}$, $i = 1, \ldots, n$. Apply the watermark embedding process following the rule given in Step 8.

Step 10. Finally, replace the selected elements $a_i$ and $b_i$ by $a_i^{*}$ and $b_i^{*}$, respectively, and then apply the IFFT. The output is the watermarked audio frame.
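The embedding steps for a single frame can be sketched with NumPy as follows. Several parts are assumptions and not the paper's implementation: `placeholder_mask` is a hypothetical stand-in for the MPEG psychoacoustic model and makes no claim about inaudibility, the PN sequence is seeded with `key + 1` purely for illustration, and Step 9 is replaced by NumPy's real FFT (`rfft`/`irfft`), which processes only the lower half of the spectrum and restores the conjugate-symmetric upper half implicitly.

```python
import numpy as np


def index_set(key, frame_len, n):
    """Step 1: 2n distinct bin indices from a key-seeded generator.
    0-based bins 1 .. N/2-1 correspond to the 1-based range [2, N/2]."""
    rng = np.random.default_rng(key)
    return rng.choice(np.arange(1, frame_len // 2), size=2 * n, replace=False)


def placeholder_mask(spectrum, fraction=0.1):
    """Hypothetical mask: a fixed fraction of each bin's magnitude.
    The paper uses the MPEG psychoacoustic model here (Step 4)."""
    return fraction * np.abs(spectrum)


def embed_frame(frame, bit, key, n=30, alpha=1.0):
    """Embed one watermark bit into one real-valued audio frame."""
    N = len(frame)
    idx = index_set(key, N, n)
    idx_a, idx_b = idx[0::2], idx[1::2]       # I_1, I_3, ... and I_2, I_4, ...
    S = np.fft.rfft(frame)                    # Step 3: FFT coefficients
    M = placeholder_mask(S)                   # Step 4: (assumed) mask
    pn = np.random.default_rng(key + 1).choice([-1.0, 1.0], size=N)  # Step 5
    P = M * np.fft.rfft(pn)                   # Step 6: mask-weighted noise
    sign = 1.0 if bit == 1 else -1.0
    S_marked = S.copy()
    # Step 8, Eqs. (5) and (6): shift the real parts of patches A and B.
    S_marked[idx_a] += sign * alpha * np.abs(P[idx_a].real)
    S_marked[idx_b] -= sign * alpha * np.abs(P[idx_b].real)
    return np.fft.irfft(S_marked, n=N)        # Step 10: back to time domain
```

A call such as `embed_frame(frame, 1, key=1234)` on a 512-sample frame returns a 512-sample watermarked frame whose patch value, computed on the real parts of the selected bins, is larger than that of the input frame.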

We repeat the above steps for the next frame until no watermark bits are left for embedding. To make the transfer of watermark bits from the embedder to the detector more reliable, the watermark bit code may be embedded repeatedly and consecutively several times. Repeated embedding of the same information, combined with detection based on majority voting, provides error-correcting functionality.
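Repetition with majority voting can be sketched as below. The repetition factor `r` (assumed odd, so that no ties occur) and the function names are illustrative choices; the paper does not specify how many times each bit is repeated.

```python
def repeat_bits(bits, r):
    """Repeat every payload bit r times consecutively (one copy per frame)."""
    return [b for b in bits for _ in range(r)]


def majority_decode(detected, r):
    """Recover payload bits by majority vote over consecutive
    groups of r detected bits (r is assumed to be odd)."""
    decoded = []
    for start in range(0, len(detected) - r + 1, r):
        group = detected[start:start + r]
        decoded.append(1 if 2 * sum(group) > r else 0)
    return decoded
```

With r = 3, for example, a single wrongly detected frame inside a group of three does not change the decoded bit.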

3.2. Watermark detection

Step 1. Map the secret key to the seed of a random number generator and then generate the same index set I as in the embedding process.

Step 2. For a watermarked audio frame, obtain the subsets A and B from the FFT coefficients and then compute the means of the two patches as

$$\bar{a} = \frac{1}{n}\sum_{i=1}^{n}\mathrm{Re}(a_i), \qquad \bar{b} = \frac{1}{n}\sum_{i=1}^{n}\mathrm{Re}(b_i) \qquad (7)$$

Step 3. Calculate the mean difference (patch value) $e = \bar{a} - \bar{b}$.

Step 4. Compare e with a predefined threshold T and decide the embedded watermark bit for that particular frame. The threshold T is the expected value $E[\bar{a} - \bar{b}]$ over all the frames in the audio signal, which may generally be assumed to be zero. Therefore, the watermark detection rule may be stated as

IF e > 0 then 1 is detected,

ELSE IF e < 0 then 0 is detected.

We repeat the above steps until all watermark bits are detected.
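A minimal detection sketch for one frame is given below. It assumes the same key-to-index mapping as the embedder (here a hypothetical `index_set` built on NumPy's generator) and works on the lower half of the spectrum through `numpy.fft.rfft`; the function names are illustrative. The case e = 0, which the rule above leaves undecided, is mapped to 0 in this sketch.

```python
import numpy as np


def index_set(key, frame_len, n):
    """Step 1: regenerate the 2n bin indices from the secret key."""
    rng = np.random.default_rng(key)
    return rng.choice(np.arange(1, frame_len // 2), size=2 * n, replace=False)


def patch_value(frame, key, n=30):
    """Steps 2 and 3: e = mean(Re(a_i)) - mean(Re(b_i)), as in Eq. (7)."""
    idx = index_set(key, len(frame), n)
    S = np.fft.rfft(frame)
    a_bar = S[idx[0::2]].real.mean()
    b_bar = S[idx[1::2]].real.mean()
    return a_bar - b_bar


def detect_bit(frame, key, n=30, threshold=0.0):
    """Step 4: compare the patch value with the threshold T (assumed 0)."""
    return 1 if patch_value(frame, key, n) > threshold else 0
```

No access to the original audio is required: the detector only needs the key, the frame size and the patch size.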

4. EXPERIMENTAL RESULTS

A total of ten audio sequences are used in our experiment. Each audio sequence has a duration of 10 seconds and is sampled at 44.1 kHz with 16 bits per sample. The audio sequences are first watermarked using our technique, and we then attempt to extract the watermark bits from the watermarked signals. The frame size and the patch size are taken as 512 and 30, respectively.
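A quick arithmetic check of these parameters is sketched below. It assumes non-overlapping frames and one watermark bit per frame, neither of which is stated explicitly in the text; under those assumptions a 10-second sequence holds 861 full frames, which is consistent with the 861 bits per test reported in Table 2. The variable names are illustrative.

```python
sample_rate_hz = 44_100   # 44.1 kHz sampling rate
duration_s = 10           # length of each test sequence
frame_size = 512          # N
patch_size = 30           # n

total_samples = sample_rate_hz * duration_s
full_frames = total_samples // frame_size   # one bit per frame (assumed)

print(total_samples, full_frames)           # 441000 861

# Constraint from Step 1 of the embedding procedure: 2n < N/2.
assert 2 * patch_size < frame_size // 2
```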

To assess the subjective quality of the watermarked signals, we perform an informal subjective listening test according to the hidden reference listening test [9]. Ten listeners participated in the listening test. The subjective quality of a watermarked audio signal is measured in terms of Diffgrade, which is equal to the subjective rating given to the watermarked test item minus the rating given to the hidden reference. The Diffgrade scale is partitioned into five ranges: watermark imperceptible (0.00), perceptible but not annoying (0.00 to -1.00), slightly annoying (-1.00 to -2.00), annoying (-2.00 to -3.00), and very annoying (-3.00 to -4.00). The test results, along with the corresponding SNR of the watermarked audio signals, are tabulated in Table 1.

Table 1: SNR and subjective quality of watermarked audio signals

| Test audio | SNR (dB) | Diffgrade |
|---|---|---|
| Blues1 | 21.14 | 0.00 |
| Blues2 | 25.28 | -0.20 |
| Country1 | 20.42 | 0.00 |
| Country2 | 18.87 | -0.20 |
| Classic1 | 27.14 | -0.60 |
| Classic2 | 29.61 | 0.00 |
| Folk1 | 18.61 | 0.00 |
| Folk2 | 17.59 | 0.00 |
| Pop1 | 18.86 | 0.00 |
| Pop2 | 19.05 | 0.00 |
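The two quantities in Table 1 can be computed as sketched below. The SNR formula is the common definition (energy of the original signal over the energy of the embedding error); the paper does not state which definition it uses, so this is an assumption. The mapping of boundary values to a range in `diffgrade_label` is likewise an illustrative choice, and both function names are hypothetical.

```python
import math


def snr_db(original, watermarked):
    """SNR in dB: original signal energy over embedding-error energy."""
    signal = sum(x * x for x in original)
    noise = sum((x - y) ** 2 for x, y in zip(original, watermarked))
    return 10.0 * math.log10(signal / noise)


def diffgrade_label(diffgrade):
    """Map a Diffgrade (test rating minus hidden-reference rating)
    to one of the five ranges of the listening test."""
    if diffgrade >= 0.0:
        return "imperceptible"
    if diffgrade >= -1.0:
        return "perceptible but not annoying"
    if diffgrade >= -2.0:
        return "slightly annoying"
    if diffgrade >= -3.0:
        return "annoying"
    return "very annoying"
```

With this mapping, the lowest Diffgrade in Table 1 (-0.60 for Classic1) falls in the "perceptible but not annoying" range.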

Since the proposed algorithm is only statistically optimal, a measure of its performance is the probability of detecting a watermark bit correctly. Equivalently, we may use the bit error rate (BER) as the performance measure; the lower the BER, the better the performance. Table 2 gives the BER in the detection process when the watermarked signals are not disturbed (no attack) and when various signal processing operations are applied to them. The signal processing attacks used in our experiment are down-sampling by 2, MPEG compression (MPEG-1 Layer 3 at bit rates of 64 kbps and 128 kbps), band-pass filtering (using a 2nd-order Butterworth filter with cut-off frequencies of 100 Hz and 6 kHz), echo addition (an echo signal with a delay of 100 ms and a decay of 50%) and equalization (using a 10-band equalizer with +6 dB and -6 dB gain).
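As an illustration of one of these attacks, echo addition can be sketched with NumPy as follows. The sketch assumes a single echo and interprets "a decay of 50%" as an echo amplitude of half the direct signal; the experiment's exact echo model is not described, and the function name `add_echo` is hypothetical.

```python
import numpy as np


def add_echo(signal, sample_rate_hz=44_100, delay_s=0.1, decay=0.5):
    """Return signal plus one delayed, attenuated copy of itself."""
    x = np.asarray(signal, dtype=float)
    delay = int(round(delay_s * sample_rate_hz))   # 100 ms -> 4410 samples
    out = x.copy()
    if 0 < delay < len(x):
        out[delay:] += decay * x[:len(x) - delay]
    return out
```

The attacked signal keeps the original length, so it can be split into the same frames before detection.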

Table 2: Error probabilities for various attacks

| Type of attack | Bit errors / total bits | BER (%) |
|---|---|---|
| No attack | 0/861 | 0.00 |
| Down-sampling | 0/861 | 0.00 |
| MPEG Layer 3 (128 kbps) | 4/861 | 0.46 |
| MPEG Layer 3 (64 kbps) | 10/861 | 1.16 |
| Band-pass filtering | 10/861 | 1.16 |
| Echo addition | 2/861 | 0.23 |
| Equalization | 0/861 | 0.00 |
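The BER column follows directly from the error counts, as the short check below shows; the function name `ber_percent` is illustrative.

```python
def ber_percent(bit_errors, total_bits):
    """Bit error rate as a percentage of the detected bits."""
    return 100.0 * bit_errors / total_bits


if __name__ == "__main__":
    counts = [
        ("MPEG Layer 3 (128 kbps)", 4),
        ("MPEG Layer 3 (64 kbps)", 10),
        ("Band-pass filtering", 10),
        ("Echo addition", 2),
    ]
    for attack, errors in counts:
        print(f"{attack}: {ber_percent(errors, 861):.2f} %")
```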

It is observed that although our method introduces large distortion (low SNR) due to watermarking, the subjective quality of the watermarked audio signal is acceptable (Diffgrade above -1.00) for all the test signals. Also, the watermark bits are detected correctly with high probability under various signal processing attacks (the maximum BER in our experiment is only 1.16%). Therefore, in our opinion, the proposed technique is robust as well as perceptually transparent.

5. CONCLUSION

In this paper, we describe a new algorithm for digital audio watermarking. The algorithm is better than that in [4] in the sense that it is oblivious, while the latter is a non-oblivious technique. Compared to the algorithm in [6], the proposed watermark embedding scheme accomplishes perceptual transparency by exploiting the masking effect of the human auditory system. This embedding scheme adapts the watermark so that the energy of the watermark is maximized under the constraint of keeping auditory artifacts as low as possible. Also, the proposed scheme is robust to some of the common signal processing attacks. These claims are corroborated by the experimental results given in the previous section.

Despite the success of the proposed method, it also has a drawback: a synchronization problem. The use of a PN sequence to generate the index set is vulnerable to the time scale modification attack. Also, our masking model can still be improved by considering the temporal masking effect. Further research will focus on overcoming these problems.

REFERENCES

[1] W. Bender, D. Gruhl and N. Morimoto, "Techniques for data hiding," Tech. Rep., MIT Media Lab, 1994.

[2] J. F. Tilki and A. A. Beex, "Encoding a hidden digital signature onto an audio signal using psychoacoustic masking," Proc. 7th Intl. Conf. Signal Processing and Technology, pp. 476-480, 1996.

[3] P. Bassia and I. Pitas, "Robust audio watermarking in the time domain," Proc. 9th European Signal Processing Conf. (EUSIPCO'98), pp. 25-28, 1998.

[4] M. D. Swanson, B. Zhu, A. H. Tewfik and L. Boney, "Robust audio watermarking using perceptual masking," Signal Processing, vol. 66, no. 3, pp. 337-356, 1998.

[5] L. Boney, A. H. Tewfik and K. N. Hamdy, "Digital watermarking for audio signals," Proc. 3rd Intl. Conf. Multimedia Computing and Systems, pp. 437-480, 1996.

[6] M. Arnold, "Audio watermarking: Features, applications and algorithms," Proc. IEEE Intl. Conf. Multimedia, vol. 2, pp. 1013-1016, 2000.

[7] I. K. Yeo and H. J. Kim, "Modified patchwork algorithm: A novel audio watermarking scheme," Proc. Intl. Conf. Information Technology, Coding and Computing, pp. 237-242, 2001.

[8] ISO/IEC IS 11172, "Information Technology - Coding of Moving Pictures and Associated Audio for Digital Storage up to about 1.5 Mbits/s."

[9] T. Painter and A. Spanias, "Perceptual Coding of Digital Audio," Proc. IEEE, vol. 88, no. 4, 2000.
