Large-Scale Content-Based Audio Retrieval

from Text Queries

Gal Chechik

Corresponding Author

gal@google.com

Eugene Ie

eugeneie@google.com

Martin Rehn

rehn@google.com

Samy Bengio

bengio@google.com

Dick Lyon

dicklyon@google.com

ABSTRACT

In content-based audio retrieval, the goal is to find sound recordings (audio documents) based on their acoustic features. This content-based approach differs from retrieval approaches that index media files using metadata such as file names and user tags. In this paper, we propose a machine learning approach for retrieving sounds that is novel in that it (1) uses free-form text queries rather than sound-based queries, (2) searches by audio content rather than via textual metadata, and (3) scales to a very large number of audio documents and a very rich query vocabulary. We handle generic sounds, including a wide variety of sound effects, animal vocalizations and natural scenes. We test a scalable approach based on a passive-aggressive model for image retrieval (PAMIR), and compare it to two state-of-the-art approaches: Gaussian mixture models (GMM) and support vector machines (SVM).

We test our approach on two large real-world datasets: a collection of short sound effects, and a noisier and larger collection of user-contributed, user-labeled recordings (25K files, 2000-term vocabulary). We find that all three methods achieved very good retrieval performance. For instance, a positive document is retrieved in the first position of the ranking more than half the time, and on average there are more than 4 positive documents in the first 10 retrieved, for both datasets. PAMIR was one to three orders of magnitude faster than the competing approaches, and should therefore scale to much larger datasets in the future.

Categories and Subject Descriptors: H.5.5 [Information Interfaces and Presentation]: Sound and Music Computing; I.2.6 [Artificial Intelligence]: Learning; H.3.1 [Information Storage and Retrieval]: Content Analysis and Indexing.

General Terms: Algorithms. Keywords: content-based audio retrieval, ranking, discriminative learning, large scale

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

MIR'08, October 30-31, 2008, Vancouver, British Columbia, Canada.

Copyright 2008 ACM 978-1-60558-312-9/08/10 ...$5.00.

1. INTRODUCTION

Large-scale content-based retrieval of online multimedia documents is becoming a central IR problem as an increasing amount of multimedia data, both visual and auditory, becomes freely available. Online audio content is available both in isolation (e.g., sound effects recordings) and combined with other data (e.g., movie sound tracks). Earlier work on content-based retrieval of sounds focused on two main thrusts: classification of sounds into (usually a few high-level) categories, or retrieval of sounds by content-based similarity. For instance, people could use short snippets of a music recording to locate similar music. This "more-like-this" or "query-by-example" setting is based on defining a measure of similarity between two acoustic segments.

In many cases, however, people may wish to find examples of sounds but do not have a recorded sample at hand. For instance, someone editing her home movie may wish to add car-racing sounds, and someone preparing a presentation about jungle life may wish to find samples of roaring tigers or of tropical rain. In all these cases, a natural way to define the desired sound is by a textual name, label, or description, since no acoustic example is available (see footnote 1).

Only a few systems have been suggested so far for content-based search with text queries. Slaney [14] proposed the idea of linking semantic queries to clustered acoustic features. Turnbull et al. [15] described a system of Gaussian mixture models of music tracks that achieves good average precision on a dataset with 1300 sound effect files.

Retrieval systems face major challenges in handling real-world large-scale datasets. First, high precision is much harder to obtain, since the fraction of positive examples decreases. Furthermore, as the query vocabulary grows, more refined discriminations are needed, which are also harder. For instance, telling a lion roar from a tiger roar is harder than telling any roar from a musical piece. The second hurdle is computation time. The amount of sound data available online is huge, including for instance all sound tracks of user-contributed videos on YouTube and similar websites. Indexing and retrieving such data requires efficient algorithms and representations. Finally, user-generated content is inherently noisy, in both labels and auditory content. This is partially due to sloppy annotation but more so because different people use different words to describe similar sounds. Retrieval and indexing of user-generated auditory data is therefore a very challenging task.

Footnote 1: Text queries are also natural for retrieval of speech data, but speech recognition is outside the scope of this work.

In this paper, we focus on large-scale retrieval of general sounds such as animal vocalizations or sound effects, and advocate a framework that has three characteristics: (1) it uses text queries rather than sound similarity; (2) it retrieves by acoustic content, rather than by textual metadata; (3) it can scale to handle large noisy vocabularies and many audio documents while maintaining precision. Namely, we aim to build a system that allows a user to enter a (possibly multi-word) text query, and that then ranks the sounds in a large collection such that the most acoustically relevant sounds are ranked near the top.

We retrieve and rank sounds not by textual metadata, but by acoustic features of the audio content itself. This approach will allow us in the future to index massively more sound data, since many sounds available online are poorly labeled, or not labeled at all, like the sound tracks of movies. Such a system is different from other information retrieval systems that use auxiliary textual data, such as file names or user-added tags. It requires that we learn a mapping between textual sound descriptions and acoustic features of sound recordings. Similar approaches have been shown to work well for large-scale image retrieval.

The sound-ranking framework that we propose differs from earlier sound classification approaches in multiple aspects. It can handle a large number of possible classes, which are obtained from the data rather than predefined. Since users are typically interested in the top-ranked retrieved results, it focuses on a ranking criterion, aiming to identify the few sound samples that are most relevant to a query. Finally, it handles queries with multiple words, and efficiently uses multi-word information.

In this paper, we propose the use of PAMIR, a scalable machine learning approach trained to directly optimize a ranking criterion over multiple-word queries. We compare this approach to two other machine-learning methods trained on the related multi-class classification task. We evaluate the performance of all three methods on two real-life, large-scale labeled datasets, and discuss their scalability. Our results show that high-precision retrieval of general sounds from thousands of categories can be obtained even with the real-life noisy and inconsistent labels that are available online today. Furthermore, these high-precision results can be scaled to very large datasets using PAMIR.

2. PREVIOUS WORK

A common approach to content-based audio retrieval is the query-by-example method. In such a system, the user presents an audio document and receives a ranked list of the audio documents that are most similar to the query, by some measure. A number of studies thus compare various sound features for that similarity application. For instance, Wan and Lu [19] evaluate features and metrics for this task. Using a dataset of 409 sounds from 16 categories, and a collection of standard features, they achieve about 55% precision, based on the class labels, for the top-10 matches. The approach we present here achieves similar retrieval performance on a much larger dataset, while letting the user express queries using free-form text instead of an audio example.

Turnbull et al. [15] described a system that retrieves audio documents based on text queries, using trained Gaussian mixture models as a multi-class classification system. Most of their experiments are specialized for music retrieval, using a small vocabulary of genres, emotions, instrument names, and other predefined "semantic" tags. They recently extended their system to retrieve sound effects from a library, using a 348-word vocabulary [16]. They trained a Gaussian mixture model for each vocabulary word and provided a clever training procedure, as the normal EM procedure would not scale reasonably for their dataset. They demonstrated a mean average precision of 33% on a set of about 1300 sound effects with five to ten label terms per sound file. Compared to their system, we propose here a highly scalable approach that still yields similar mean average precision on much larger datasets and much larger vocabularies.

3. MODELS FOR RANKING SOUNDS

The content-based ranking problem consists of two main subtasks. First, we need to find a compact representation of sounds (features) that allows us to accurately discriminate between different types of sounds. Second, given these features, we need to learn a matching between textual tags and the acoustic representations. Such a matching function can then be used to rank sounds given a text query.

We focus here on the second problem: learning to match

sounds to text tags, using standard features for representing

sounds (MFCC, see Sec. 4.2 for a motivation of this choice).

We take a supervised learning approach, using corpora of

labeled sounds to learn matching functions from data.

We describe below three learning approaches for this prob-

lem, chosen to cover the leading generative and discrimina-

tive, batch and online approaches. Clearly, the best method

should not only provide good retrieval performance, but also

scale to accommodate very large datasets of sounds.

The first approach is based on Gaussian mixture models (GMMs) [13], a common approach in the speech and music processing literature. It was used successfully in [16] on a similar content-based audio retrieval task, but with a smaller dataset of sounds and a smaller text vocabulary. The second approach is based on support vector machines (SVMs) [17], the main discriminative approach in the machine learning literature for classification. The third approach, PAMIR [8], is an online discriminative approach that achieved superior performance and scalability in the related task of content-based image retrieval from text queries.

3.1 The Learning Problem

Consider a text query $q$ and a set of audio documents $A$, and let $R(q, A)$ be the set of audio documents in $A$ that are relevant to $q$, with $\bar{R}(q, A)$ denoting the documents in $A$ that are not relevant to $q$. Given a query $q$, an optimal retrieval system should rank all the documents $a \in A$ that are relevant for $q$ ahead of the irrelevant ones:

$$\mathrm{rank}(q, a^+) < \mathrm{rank}(q, a^-) \quad \forall a^+ \in R(q, A),\ \forall a^- \in \bar{R}(q, A) \qquad (1)$$

where $\mathrm{rank}(q, a)$ is the position of document $a$ in the ranked list of documents retrieved for query $q$. Assume now that we are given a scoring function $F(q, a) \in \mathbb{R}$ that expresses the quality of the match between an audio document $a$ and a query $q$. This scoring function can be used to order the audio documents by decreasing scores for a given query. Our goal is to learn a function $F$ from training audio documents and queries that correctly ranks new documents and queries:

$$F(q, a^+) > F(q, a^-) \quad \forall a^+ \in R(q, A),\ \forall a^- \in \bar{R}(q, A). \qquad (2)$$

The three approaches considered in this paper (GMMs, SVMs, PAMIR) are designed to learn a scoring function $F$ that fulfills as many of the constraints in Eq. 2 as possible.

Unlike standard classification tasks, queries in our problem often consist of multiple terms. We wish to design a system that can handle queries that were not seen during training, as long as their terms come from the same vocabulary as the training data. For instance, a system trained with the queries "growling lion" and "purring cat" should be able to handle queries like "growling cat". Out-of-dictionary terms are discussed in Sec. 6.

We use the bag-of-words representation borrowed from text retrieval [4] to represent textual queries. In this context, all terms available in training queries are used to create a vocabulary that defines the set of allowed terms. This bag-of-words representation neglects term ordering and assigns each query a vector $q \in \mathbb{R}^{|T|}$, where $|T|$ denotes the vocabulary size. The $t$-th component $q_t$ of this vector is referred to as the weight of term $t$ in the query $q$. In our case, we use the normalized idf weighting scheme [4],

$$q_t = \frac{b^q_t \, \mathrm{idf}_t}{\sqrt{\sum_{j=1}^{|T|} \left(b^q_j \, \mathrm{idf}_j\right)^2}} \qquad \forall t = 1, \ldots, |T|. \qquad (3)$$

Here, $b^q_t$ is a binary weight denoting the presence ($b^q_t = 1$) or absence ($b^q_t = 0$) of term $t$ in $q$; $\mathrm{idf}_t$ is the inverse document frequency of term $t$, defined as $\mathrm{idf}_t = -\log(r_t)$, where $r_t$ refers to the fraction of corpus documents containing the term $t$. Here $r_t$ was estimated from the training set labels. This weighting scheme is fairly standard in IR, and assumes that, among the terms present in $q$, the terms appearing rarely in the reference corpus are more discriminative and should be assigned higher weights.

At query time, a query-level score $F(q, a)$ is computed as a weighted sum of term-level scores

$$F(q, a) = \sum_{t=1}^{|T|} q_t \, \mathrm{score}_{\mathrm{MODEL}}(a, t) \qquad (4)$$

where $q_t$ is the weight of the $t$-th term of the vocabulary in query $q$ and $\mathrm{score}_{\mathrm{MODEL}}(\cdot)$ is the score provided by one of the three models for a given term and audio document. We now describe each of the three models separately.
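As a rough illustration of Eqs. 3 and 4, the following Python sketch builds a normalized idf query vector and combines term-level scores into a query-level score. The function names, the toy vocabulary, the document fractions, and the term scores are all hypothetical and chosen only for the example; they are not taken from the experiments.

```python
import math

def idf_weights(term_doc_fraction):
    """idf_t = -log(r_t), where r_t is the fraction of documents containing term t."""
    return {t: -math.log(r) for t, r in term_doc_fraction.items()}

def query_vector(query_terms, idf):
    """Normalized idf weights (Eq. 3); vocabulary terms absent from the query get 0."""
    present = set(query_terms)
    raw = {t: (idf[t] if t in present else 0.0) for t in idf}
    norm = math.sqrt(sum(v * v for v in raw.values()))
    if norm == 0.0:
        return raw
    return {t: v / norm for t, v in raw.items()}

def query_score(q, term_scores):
    """Eq. 4: weighted sum of term-level scores for a single audio document."""
    return sum(w * term_scores.get(t, 0.0) for t, w in q.items())

# Hypothetical fractions of training documents tagged with each term.
idf = idf_weights({"lion": 0.01, "growl": 0.05, "cat": 0.10})
q = query_vector(["growl", "cat"], idf)

# Hypothetical term-level scores of one document under some model.
score = query_score(q, {"lion": 0.2, "growl": 1.5, "cat": 0.7})
```

In this toy example the rarer term ("growl") receives a larger weight than the more common one ("cat"), which is the behavior the idf scheme is meant to produce.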

3.2 The GMM Approach

Gaussian mixture models (GMMs) have been used extensively in various speech and speaker recognition tasks [12, 13]. In particular, they are the leading approach today for text-independent speaker verification systems. In what follows, we detail how we used GMMs for the task of content-based audio retrieval from text queries.

GMMs are used in this context to model the probability density function of audio documents. The main (obviously wrong) hypothesis of GMMs is that each frame of a given audio document has been generated independently of all other frames; hence, the density of a document is represented by the product of the densities of its frames:

$$p(a \mid \mathrm{GMM}) = \prod_{f} p(a_f \mid \mathrm{GMM}) \qquad (5)$$

where $a$ is an audio document and $a_f$ is a frame of $a$.

As in speaker verification [13], we first train a single unified GMM on a very large set of audio documents by maximizing the likelihood of all audio documents, using the EM algorithm, without the use of any label. This background model can be used to compute $p(a \mid \mathrm{background})$, the likelihood of observing an audio document $a$ given the background model.

We then train a separate GMM model for each term of the vocabulary $T$, using only the audio documents that are relevant for that term. Once trained, each model can be used to compute $p(a \mid t)$, the likelihood of observing an audio document $a$ given text term $t$.

Training uses a maximum a posteriori (MAP) approach [7] that constrains each term model to stay near the background model. The model of $p(a \mid t)$ is first initialized with the parameters of the background model; then, the mean parameters of $p(a \mid t)$ are iteratively modified as in [11]:

$$\mu_i = \alpha \mu^b_i + (1 - \alpha) \frac{\sum_f p(i \mid a_f) \, a_f}{\sum_f p(i \mid a_f)}, \qquad (6)$$

where $\mu_i$ is the new estimate of the mean of Gaussian $i$ of the mixture, $\mu^b_i$ is the corresponding mean in the background model, $a_f$ is a frame of a training set audio document corresponding to the current term, and $p(i \mid a_f)$ is the probability that $a_f$ was emitted by Gaussian $i$ of the mixture. The regularizer $\alpha$ controls how constrained the new mean is to stay near the background model and is tuned using cross validation.

At query time, the score for a term $t$ and document $a$ is a normalized log likelihood ratio score

$$\mathrm{score}_{\mathrm{GMM}}(a, t) = \frac{1}{|a|} \log \frac{p(a \mid t)}{p(a \mid \mathrm{background})}, \qquad (7)$$

where $|a|$ is the number of frames of document $a$. This can thus be seen as a frame-averaged log likelihood ratio between the term and the background probability models.
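To make Eqs. 6 and 7 concrete, here is a minimal pure-Python sketch of a single mean-adaptation pass followed by the frame-averaged log likelihood ratio. It assumes diagonal covariances, computes the responsibilities $p(i \mid a_f)$ under the background model (one pass only, whereas the text describes an iterative procedure), and uses a toy two-component mixture; all names and numbers are illustrative assumptions rather than the settings used in the experiments.

```python
import math

def log_gauss(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at frame x."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def log_sum_exp(values):
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))

def frame_log_lik(x, gmm):
    """log p(frame | GMM); gmm is a list of (weight, mean, var) components."""
    return log_sum_exp([math.log(w) + log_gauss(x, m, v) for w, m, v in gmm])

def responsibilities(x, gmm):
    """p(i | frame) for every component i of the mixture."""
    logs = [math.log(w) + log_gauss(x, m, v) for w, m, v in gmm]
    total = log_sum_exp(logs)
    return [math.exp(l - total) for l in logs]

def map_adapt_means(frames, background, alpha):
    """One pass of Eq. 6: adapt the means only; weights and variances are kept."""
    resp = [responsibilities(x, background) for x in frames]
    adapted = []
    for i, (w, mean_b, var) in enumerate(background):
        mass = sum(r[i] for r in resp)
        if mass == 0.0:
            adapted.append((w, mean_b, var))  # no evidence: keep background mean
            continue
        data_mean = [sum(r[i] * x[d] for r, x in zip(resp, frames)) / mass
                     for d in range(len(mean_b))]
        new_mean = [alpha * mb + (1 - alpha) * dm
                    for mb, dm in zip(mean_b, data_mean)]
        adapted.append((w, new_mean, var))
    return adapted

def score_gmm(frames, term_gmm, background):
    """Eq. 7: log likelihood ratio averaged over the frames of the document."""
    llr = sum(frame_log_lik(x, term_gmm) - frame_log_lik(x, background)
              for x in frames)
    return llr / len(frames)

# Toy background model and frames of documents tagged with one term.
background = [(0.5, [0.0, 0.0], [1.0, 1.0]), (0.5, [4.0, 4.0], [1.0, 1.0])]
term_frames = [[1.0, 1.2], [0.8, 1.1], [1.1, 0.9]]
term_gmm = map_adapt_means(term_frames, background, alpha=0.1)
s = score_gmm(term_frames, term_gmm, background)
```

Frames resembling the term's training data obtain a positive score, while frames that the background model explains better obtain a negative one.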

3.3 The SVM Approach

Support vector machines (SVMs) [17] are considered to be an excellent baseline system for most classification tasks. SVMs aim to find a discriminant function that maximizes the margin between positive and negative examples, while minimizing the number of misclassifications in training. The trade-off between these two conflicting objectives is controlled by a single hyper-parameter $C$ that is selected using cross validation.

Similarly to the GMM approach, we train a separate SVM

model for each term of the vocabulary T. For each term t,

we use the training-set audio documents relevant to that

term as positive examples, and all the remaining training

documents as negatives.

At query time, the score for a term $t$ and document $a$ is as follows:

$$\mathrm{score}_{\mathrm{SVM}}(a, t) = \frac{\mathrm{SVM}_t(a) - \mu_{\mathrm{SVM}_t}}{\sigma_{\mathrm{SVM}_t}}, \qquad (8)$$

where $\mathrm{SVM}_t(a)$ is the score of the SVM model for term $t$ applied to audio document $a$, and $\mu_{\mathrm{SVM}_t}$ and $\sigma_{\mathrm{SVM}_t}$ are respectively the mean and standard deviation of the scores of the SVM model for term $t$. This normalization procedure achieved the best performance in a previous study comparing various fusion procedures for multi-word queries [1].
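The normalization in Eq. 8 is a per-term standardization of raw SVM outputs. The sketch below assumes that the mean and standard deviation are computed over the set of documents being scored, which the text does not specify; the function name and the example scores are hypothetical.

```python
import statistics

def normalize_term_scores(raw_scores):
    """Standardize the raw outputs of one term's SVM (Eq. 8)."""
    mu = statistics.mean(raw_scores)
    sigma = statistics.pstdev(raw_scores)
    if sigma == 0.0:
        return [0.0 for _ in raw_scores]  # all scores equal: nothing to rank
    return [(s - mu) / sigma for s in raw_scores]

# Hypothetical raw SVM outputs of one term model on three documents.
z = normalize_term_scores([2.0, 4.0, 6.0])
```

Standardizing puts the outputs of different term models on a comparable scale before they are summed in Eq. 4.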

3.4 The PAMIR Approach

The passive-aggressive model for image retrieval (PAMIR) was proposed in [8] for the problem of content-based image retrieval from text queries. It obtained very good performance on this task, with respect to competing probabilistic models and SVMs. Furthermore, it scales much better to very large datasets. We thus adapted PAMIR for retrieval of audio documents and present it below in more detail.

Let query $q \in \mathbb{R}^{|T|}$ be represented by the vector of normalized idf weights for each vocabulary term, and let $a$ be represented by a vector in $\mathbb{R}^{d_a}$, where $d_a$ is the number of features used to represent an audio document. Let $W$ be a matrix of dimensions $(|T| \times d_a)$. We define the query-level score as

$$F_W(q, a) = q^{\top} W a, \qquad (9)$$

which measures how well a document $a$ matches a query $q$. For more intuition, $W$ can also be viewed as a transformation of $a$ from an acoustic representation to a textual one, $W : \mathbb{R}^{d_a} \to \mathbb{R}^{|T|}$. With this view, the score becomes a dot product between vector representations of a text query $q$ and a "text document" $Wa$, as often done in text retrieval [4]. The corresponding term-level score is

$$\mathrm{score}_{\mathrm{PAMIR}}(a, t) = W_t \, a \qquad (10)$$

where $W_t$ is the $t$-th row of $W$.

3.4.1 Ranking Loss

Let us assume that we are given a finite training set

$$D_{\mathrm{train}} = \left( (q_1, a_1^+, a_1^-), \ldots, (q_n, a_n^+, a_n^-) \right), \qquad (11)$$

where for all $k$, $q_k$ is a text query, $a_k^+ \in R(q_k, A_{\mathrm{train}})$ is an audio document relevant to $q_k$ and $a_k^- \in \bar{R}(q_k, A_{\mathrm{train}})$ is an audio document non-relevant to $q_k$. The PAMIR approach looks for parameters $W$ such that

$$\forall k, \quad F_W(q_k, a_k^+) - F_W(q_k, a_k^-) \geq \epsilon, \quad \epsilon > 0. \qquad (12)$$

This constraint can be expressed through the per-sample loss, defined for all $k$ as

$$l_W(q_k, a_k^+, a_k^-) = \max\left(0,\ \epsilon - F_W(q_k, a_k^+) + F_W(q_k, a_k^-)\right),$$

which is zero exactly when the constraint holds. In other words, PAMIR aims to find a $W$ such that for all $k$, the positive score $F_W(q_k, a_k^+)$ is greater than the negative score $F_W(q_k, a_k^-)$ by a margin of at least $\epsilon$.

This criterion is inspired by the ranking SVM approach [9], which has successfully been applied to text retrieval. However, ranking SVM requires solving a quadratic optimization problem, which does not scale to a very large number of constraints.

3.4.2 Online Training

PAMIR uses the passive-aggressive (PA) family of algorithms, originally developed for classification and regression problems [5], to iteratively minimize

$$L(D_{\mathrm{train}}; W) = \sum_{k=1}^{n} l_W(q_k, a_k^+, a_k^-). \qquad (13)$$

At each training iteration $i$, PAMIR solves the following convex problem:

$$W^i = \operatorname*{argmin}_{W} \ \frac{1}{2} \left\| W - W^{i-1} \right\|^2 + C \, l_W(q_i, a_i^+, a_i^-), \qquad (14)$$

where $\|\cdot\|$ is the point-wise $L_2$ norm. Therefore, at each iteration, $W^i$ is selected as a trade-off between remaining close to the previous parameters $W^{i-1}$ and minimizing the loss on the current example $l_W(q_i, a_i^+, a_i^-)$. The aggressiveness parameter $C$ controls this trade-off. It can be shown that the solution of problem (14) is

$$W^i = W^{i-1} + \tau_i V^i, \quad \text{where} \quad \tau_i = \min\left\{ C,\ \frac{l_{W^{i-1}}(q_i, a_i^+, a_i^-)}{\|V^i\|^2} \right\}$$

$$\text{and} \quad V^i = \left[ q_i^1 (a_i^+ - a_i^-), \ \ldots, \ q_i^{|T|} (a_i^+ - a_i^-) \right],$$

where $q_i^j$ is the $j$-th value of vector $q_i$. When the loss is positive, $V^i$ is the negative of the gradient of the loss with respect to $W$, so the update moves $W$ in the direction that reduces the loss.
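The closed-form update can be sketched in a few lines of pure Python. This is a minimal illustration under assumptions: $W$ is stored as a dense list of rows, the margin $\epsilon$ defaults to 1 (the text only requires $\epsilon > 0$), and the function names and toy triplet are hypothetical. It is not the implementation used for the experiments, which would exploit sparsity.

```python
def score(W, q, a):
    """F_W(q, a) = q^T W a, with W stored as |T| rows of length d_a."""
    return sum(q_t * sum(w * x for w, x in zip(row, a))
               for q_t, row in zip(q, W))

def pamir_step(W, q, a_pos, a_neg, C, eps=1.0):
    """One passive-aggressive update on a triplet (q, a+, a-); returns the new W."""
    loss = max(0.0, eps - score(W, q, a_pos) + score(W, q, a_neg))
    if loss == 0.0:
        return W  # passive: the margin constraint already holds
    diff = [p - n for p, n in zip(a_pos, a_neg)]
    V = [[q_t * d for d in diff] for q_t in q]  # row j is q^j (a+ - a-)
    norm_sq = sum(v * v for row in V for v in row)
    if norm_sq == 0.0:
        return W  # identical documents or empty query: no useful direction
    tau = min(C, loss / norm_sq)  # aggressive step, capped by C
    return [[w + tau * v for w, v in zip(w_row, v_row)]
            for w_row, v_row in zip(W, V)]

# Toy problem: |T| = 2 vocabulary terms, d_a = 3 acoustic features.
W = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
q = [1.0, 0.0]
a_pos, a_neg = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
W = pamir_step(W, q, a_pos, a_neg, C=1.0)
margin = score(W, q, a_pos) - score(W, q, a_neg)
```

In this toy case a single step is enough to satisfy the margin constraint for the triplet; with a smaller $C$ the step would be capped and the constraint would only be partially corrected.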

4. EXPERIMENTAL SETUP

We first describe the two datasets that we used for testing our framework. Then we discuss the acoustic features used to represent audio documents. Finally, we describe the experimental protocol.

4.1 Sound Datasets

The success of a sound-ranking system depends on the ability to learn a matching between acoustic features and the corresponding text queries. Its performance strongly depends on the size and type of the sound-recording dataset but even more so on the space of possible queries: classifying sounds into broad acoustic types (speech, music, other) is inherently different from detecting more refined categories such as (lion, cat, wolf).

In this paper we chose to address the hard task of using queries at varying abstraction levels. We collected two sets of data: (1) a "clean" set of sound effects and (2) a larger set of user-contributed sound files.

The first dataset consists of sound effects that are typically short, contain only a single "auditory object", and usually contain the "prototypical" sample of an auditory category. For example, samples labeled "lion" usually contain a wild roar. On the other hand, most sound content that is publicly available, like the sound tracks of home movies and amateur recordings, is far more complicated. It can involve multiple auditory objects, combined into a complex auditory scene. Our second dataset, user-contributed sounds, contains many sounds with precisely these latter properties.

To allow for future comparisons, and since we cannot distribute the actual sounds in our datasets, we have made available a companion website with the full list of sounds for both datasets [2]. It contains links to all sounds available online, and detailed references to CD data, together with the processed labels for each file. This can be found online at sound1sound.googlepages.com.

4.1.1 Sound Effects

To produce the first dataset, SFX, we collected data from multiple sources: (1) a set of 1400 commercially available sound effects from collections distributed on CDs; (2) a collection of short sounds available from www.findsounds.com, including 3300 files with 565 unique single-word labels; (3) a set of 1300 freely available sound effects, collected from multiple online websites including partners in rhyme, acoustica.com, ilovewavs.com, simplythebest.net, wav-sounds.com, wavsource.com, wavlist.com. Files in these sets usually did not have any detailed metadata except file names.

We manually labeled all of the sound effects by listening to them and typing in a handful of tags for each sound. This was used both for adding tags to files that already had tags (from findsounds) and for tagging the non-labeled files from other sources. When labeling, the original file name was displayed, so the labeling decision was influenced by the description given by the original author of the sound effect. We restricted our tags to common terms used in file names, and those existing in the findsounds data. We also added high-level tags to each file. For instance, files with tags such as "rain", "thunder" and "wind" were also given the tags "ambient" and "nature". Files tagged "cat", "dog", and "monkey" were augmented with the tags "mammal" and "animal". These higher-level terms assist in retrieval by inducing structure over the label space.

4.1.2 User-contributed Sounds

To produce the second dataset, Freesound, we collected samples from the Freesound project [6]. This site allows users to upload sound recordings and today contains the largest collection of publicly available and labeled sound recordings. At the time we collected the data it had more than 40,000 sound files amounting to 150 Gb.

Each file in this collection is labeled by a set of multiple tags, entered by the user. We preprocessed the tags by dropping all tags containing numbers, format terms (mp3, wav, aif, bpm, sound) or starting with a minus symbol, fixing misspellings, and stemming all words using the Porter stemmer for English. Finally, we also filtered out very long sound files (larger than 150 Mb).

For this dataset, we also had access to anonymized log counts of queries from the freesound.org site, provided by the Freesound project. These query counts provide an excellent way to measure retrieval accuracy as it would be viewed by the users, since they allow us to weight popular queries more heavily and to down-weight rare queries.

The query log counts included 7.6M queries. 5.2M (68%) of the queries contained only one term, and 2.2M (28%) had two terms. The most popular query was "wind" (35K instances, 0.4%), followed by "scream" (28420) and "rain" (27594). To match files with queries, we removed all queries that contained non-English characters or the negation sign (less than 0.9% of the data). We also removed format suffixes (wav, aif, mp3) and non-letter characters, and stemmed query terms similarly to file tags. This resulted in 7.58M queries (622K unique queries, 223K unique terms).

Table 1 summarizes the various statistics for the first split of each dataset, after cleaning.

4.2 Acoustic Features

There has been considerable work in the literature on designing and extracting acoustic features for sound classification (e.g. [19]). Typical feature sets include both time- and frequency-domain features, such as energy envelope and distribution, frequency content, harmonicity, and pitch. The most widely used features for speech and music classification are mel-frequency cepstral coefficients (MFCC). Moreover, in some cases MFCCs were shown to be a sufficient representation, in the sense that adding additional features did not improve classification accuracy [3]. We believe that high-level auditory object recognition and scene analysis could benefit considerably from more complex features and sparse representations, but the study of these features and representations is outside the scope of the current study.

We therefore chose to focus in this work on MFCC-based features. We calculated the standard 13 MFCC coefficients together with their first and second derivatives, and removed the (first) energy component, yielding a vector of 38 features per time frame. We used standard parameters for calculating the MFCCs, as set by default in the RASTA matlab package, so that each sound file was represented by a series of a few hundred 38-dimensional vectors. The GMM-based experiments used exactly these MFCC features.

                              Freesound    SFX
Number of documents           15780        3431
  for training                11217        2308
  for test                    4563         1123
Number of queries             3550         390
Avg. # of rel. doc. per q.    28.3         27.66
Text vocabulary size          1392         239
Avg. # of words per query     1.654        1.379

Table 1: Summary statistics for the first split of each of the two datasets.

For the (linear) SVM and PAMIR-based experiments, we wish to represent each file by a single sparse vector. We therefore took the following approach: we used k-means to cluster the set of all MFCC vectors extracted from our training data. Based on small-scale experiments, we settled on 2048 clusters, since smaller numbers of clusters did not have sufficient expressive power, and larger numbers did not further improve performance. Clustering the MFCCs transforms the data into a sparse representation that can be used efficiently during learning.

We then treated the set of MFCC centroids as "acoustic words", and viewed each audio file as a bag of acoustic words. Specifically, we represented each file using the distribution of MFCC centroids. We then normalized this joint count using a procedure that is similar to the one used for queries. This yields the following acoustic features:

$$a_c = \frac{\mathrm{tf}^a_c \, \mathrm{idf}_c}{\sqrt{\sum_{j=1}^{d_a} \left(\mathrm{tf}^a_j \, \mathrm{idf}_j\right)^2}}, \qquad (15)$$

where $d_a$ is the number of features used to represent an audio document, $\mathrm{tf}^a_c$ is the number of occurrences of MFCC cluster $c$ in audio document $a$, and $\mathrm{idf}_c$ is the inverse document frequency of MFCC cluster $c$, defined as $-\log(r_c)$, $r_c$ being the fraction of training audio documents containing at least one occurrence of MFCC cluster $c$.
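The bag-of-acoustic-words features of Eq. 15 can be sketched as follows. The example assumes the centroids have already been obtained (by k-means in the text), and it uses tiny 2-dimensional frames and three centroids instead of 38-dimensional MFCC vectors and 2048 clusters; all names and values are illustrative assumptions.

```python
import math

def nearest_centroid(frame, centroids):
    """Index of the centroid closest to the frame (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda c: sum((x - m) ** 2
                                 for x, m in zip(frame, centroids[c])))

def term_frequencies(frames, centroids):
    """tf^a_c: number of frames of the document assigned to each cluster c."""
    tf = [0] * len(centroids)
    for frame in frames:
        tf[nearest_centroid(frame, centroids)] += 1
    return tf

def cluster_idf(all_tf):
    """idf_c = -log(r_c), r_c = fraction of documents containing cluster c."""
    n_docs = len(all_tf)
    idf = []
    for c in range(len(all_tf[0])):
        df = sum(1 for tf in all_tf if tf[c] > 0)
        idf.append(-math.log(df / n_docs) if df > 0 else 0.0)
    return idf

def acoustic_features(tf, idf):
    """Eq. 15: tf-idf weights normalized to unit L2 norm."""
    raw = [t * w for t, w in zip(tf, idf)]
    norm = math.sqrt(sum(v * v for v in raw))
    return [v / norm for v in raw] if norm > 0 else raw

# Toy centroids and two toy documents (lists of frames).
centroids = [[0.0, 0.0], [5.0, 5.0], [10.0, 0.0]]
doc1 = [[0.1, 0.2], [4.9, 5.1], [0.3, -0.1]]
doc2 = [[9.8, 0.1], [0.2, 0.1]]
tf1 = term_frequencies(doc1, centroids)
tf2 = term_frequencies(doc2, centroids)
idf = cluster_idf([tf1, tf2])
feat1 = acoustic_features(tf1, idf)
```

A cluster that occurs in every document gets an idf of zero and therefore contributes nothing to the feature vector, mirroring the treatment of common terms in text queries.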

4.3 The Experimental Procedure

We used the following procedure for all the methods com-

pared, and for each of our two datasets tested. We used two

levels of cross validation, one for selecting hyper parameters,

and another for training the models.

Specifically, we first segmented the underlying set of audio documents into three equal non-overlapping splits. Each split was used as a held-out test set for evaluating algorithm performance. Models were trained on the remaining two-thirds of the data, keeping test and training sets always non-overlapping. Reported results are averages over the 3 split sets.

To select hyper-parameters for each model, we further segmented each training set into 5-fold cross-validation sets. For the GMM experiments, the cross-validation sets were used to tune the following hyper-parameters: the number of Gaussians of the background model (tested between 100 and 1000, final value is 500), the minimum value of the variances of each Gaussian (tested between 0 and 0.6, final value is $10^{-9}$ times the global variance of the data), and $\alpha$ in Eq. (6) (tested between 0.1 and 0.9, final value is 0.1). For the SVM experiments, we used a linear kernel and tuned the value of $C$ (tested 0.01, 0.1, 1, 10, 100, 200, 500, 1000, final value is 500). Finally, for the PAMIR experiments, we tuned $C$ (tested 0.01, 0.1, 0.5, 1, 2, 10, 100, final value is 1).

[Figure 1 plot: "Special Effects"; x-axis: top k (0 to 20); y-axis: precision at top k (0 to 0.6); curves: GMM (avg-p = 0.26), PAMIR (avg-p = 0.28), SVM (avg-p = 0.27).]

Figure 1: Precision as a function of rank cutoff, SFX data. Error bars denote standard deviation over three splits of the data. Avg-p represents the mean average precision for each method.

We used actual anonymized queries that were submitted to the Freesound database to build the query set. An audio file was said to match a query if all of the query's terms are covered by the file's tags. For example, if a document was labeled with the tags "growl" and "lion", all the queries "growl", "lion", and "growl lion" were considered as matching the document. However, a document labeled "new york" is not matched by a query "new" or "york". All other documents were marked as negative.

The set of labels in the training set of audio documents defines a vocabulary of textual words. We removed from the test sets all queries that were not covered by this vocabulary. Similarly, we pruned validation sets using vocabularies built from the cross-validation training sets. Out-of-vocabulary terms are discussed in Sec. 6.
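A minimal sketch of this pruning step is given below. It assumes that a query counts as "covered" only when every one of its terms appears in the training vocabulary; that reading, and the function names, are assumptions made for illustration.

```python
def build_vocabulary(training_tags):
    """Collect every tag that labels at least one training document."""
    return {tag for tags in training_tags for tag in tags}

def prune_queries(queries, vocabulary):
    """Keep only queries whose terms all appear in the training vocabulary."""
    return [q for q in queries if all(term in vocabulary for term in q)]

# Usage: the query containing "thunder" is dropped because no training file carries that tag.
vocabulary = build_vocabulary([["growl", "lion"], ["rain", "storm"]])
kept = prune_queries([["lion"], ["rain", "thunder"], ["growl", "lion"]], vocabulary)
```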

Evaluations

For all experiments, we compute the per-query precision at top k, defined as the percentage of relevant audio documents within the top k positions of the ranking for the query. Results are then averaged over all queries of the test set, using the observed weight of each query in the query logs. We also report the mean average precision for each method.
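The two measures can be sketched as below. The code assumes that each query comes with a full ranking of the collection, given as a list of booleans (best-ranked document first), and that the query-log weights are plain non-negative numbers such as query counts; the function names are hypothetical.

```python
def precision_at_k(ranked_relevance, k):
    """Fraction of the top-k ranked documents that are relevant."""
    return sum(ranked_relevance[:k]) / k

def average_precision(ranked_relevance):
    """Mean of precision-at-rank over the ranks that hold a relevant document."""
    hits, total = 0, 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0

def weighted_mean(values, weights):
    """Average per-query values using query-log weights."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)
```

For instance, a ranking `[True, False, True]` has precision 0.5 at top 2 and an average precision of (1 + 2/3) / 2. A query that was observed three times in the logs counts three times as much as a query observed once when `weighted_mean` is applied to the per-query precisions.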

5. RESULTS

We trained the GMM, SVM and PAMIR models on both Freesound and SFX data, and tested their performance and running times. Figure 1 shows the precision at top k as a function of k, for the SFX dataset. All three methods achieve high precision at top-ranked documents, with PAMIR outperforming the other methods (but not significantly) and GMM providing the lowest precision. The top-ranked document was relevant to the query in more than 60% of the queries.

[Figure 2 plot, panel "Freesound": precision at top k (y-axis, 0 to 0.6) versus top k (x-axis, 0 to 20). Legend: GMM, avgp=0.34; PAMIR, avgp=0.27; SVM, avgp=0.20.]

Figure 2: Precision as a function of rank cutoff, Freesound data. Error bars denote standard deviation over three splits of the data. All methods achieve similar top-1 precision, but GMM outperforms other methods for precision over lower-ranked sounds. On average, eight of the sounds (40%) ranked at top 20 were tagged with labels matched by the query. Avg-p represents the mean average precision for each method.

Similarly precise results are obtained for Freesound data, although this data set has an order of magnitude more documents, and these are tagged with a vocabulary of query terms that is an order of magnitude larger (Fig. 2). PAMIR is superior for the top 1 and top 2, but is then outperformed by GMM, whose precision is consistently higher by 10% for all k > 2. On average, 8 files out of the top 20 are relevant for the query with GMM, and 6 with PAMIR. PAMIR outperforms SVM while also being 10 times faster, and is 400 times faster than GMM on this data.

5.1 Error Analysis

The above results provide average precision across all queries, but queries in our data are highly variable in the number of relevant training files per query. For instance, the number of files per query in Freesound data ranges from 1 to 1049, with most queries having only a few files (median = 9). The full distribution is shown in Fig. 3 (top). A possible result is that some queries do not have enough files for training, and hence performance on such poorly sampled queries will be low.

Figure 3 (bottom) demonstrates the effect of training sample size per query within our dataset. It shows that ranking precision greatly improves when more files are available for training, but this effect saturates at 20 files per query.

[Figure 3 plots, panel "freesound". Top: number of queries (y-axis, 0 to 1000) versus number of files per query. Bottom: precision (y-axis, 0.18 to 0.26) versus number of files per query (x-axis, 0 to 180).]

Figure 3: Top: Distribution of the number of matching files per query in the training set, Freesound data. Most queries have very few positive examples; mode = 3, median = 9, mean = 28. Bottom: Precision at top 5 as a function of the number of files in the training set that match each query, Freesound data. Queries that match fewer than 20 files yield on average lower precision. Precision obtained with PAMIR, averaged over all three splits of the data.

We further looked into specific rankings of our system. Since the tags assigned to files are partial, it is often the case that a sound matches a query by its auditory content, but the tags of the file do not match the query words. Table 2 demonstrates this effect, showing the 10 top-ranked sounds for the query "bigcat". The first five entries are correctly retrieved, and are counted as correct since their tags contain the word "bigcat". However, entry number 6 is tagged "growl" (by findsound.com), and was ranked by the system as relevant to the query. Listening to the sound, we confirm that the recording contains the sound of a growling big cat (such as a tiger or a lion). We provide this example online at [2] for the readers of the paper to judge the sounds. This example demonstrates that the actual performance of the system may be considerably better than estimated using precision over noisy tags. Obtaining a quantitative evaluation of this effect requires carefully listening to thousands of sound files, and is outside the scope of the current work.

5.2 Scalability

The datasets handled in this paper are significantly larger than in previously published work. However, the set of potentially available unlabeled sound is much larger, including for instance sound tracks of user-generated movies available online. The run-time performance of the learning methods is therefore crucial for handling real data in practice.

Table 3 shows the total experimental time necessary to provide all results for each method and database, including feature extraction, hyper-parameter selection, model training, query ranking, and performance measurement. As can be seen, PAMIR scales best, while GMMs are the slowest method for our two datasets. In fact, since SVM training is quadratic with respect to the number of training examples, we expect much longer training times as the number of documents grows to web scale. Of the methods that we tested, in their present form, only PAMIR would therefore be feasible for a true large-scale application.

For all three methods, adding new sounds for retrieval is computationally inexpensive. Adding a new term can be achieved by learning a specific model for the new term, which is also feasible. Significant changes in the set of queries and relevant files may require retraining all models, but initialization using the older model can make this process faster.

Table 2: Top-ranked sounds for the query "big cat". The file "guardian" does not match the label "bigcat" but its acoustic content does match the query.

| human eval | eval by tags | score | file name | tags |
|---|---|---|---|---|
| + | + | 4.04 | panther-roar2 | panther, bigcat, animal, mammal |
| + | + | 3.68 | leopard4 | leopard, bigcat, animal, mammal |
| + | + | 3.67 | panther3 | panther, bigcat, animal, mammal |
| + | + | 3.53 | jaguar | jaguar, bigcat, animal, mammal |
| + | + | 3.40 | cougar5 | cougar, bigcat, animal, mammal |
| + |  | 3.26 | guardian | growl, expression, animal |
| + | + | 2.76 | tiger | tiger, bigcat, animal, mammal |
| + | + | 2.72 | Anim-tiger | tiger, bigcat, animal, mammal |
|  |  | 2.69 | bad disk x | cartoon, synthesized |
|  |  | 2.68 | racecar 1 | race, motor, engine, ground, machine |

Table 3: Total experimental time (training + test), in hours, assuming a single modern CPU, for all methods and both datasets, including all feature extraction and hyper-parameter selection. File and vocabulary sizes are for a single split as in Table 1.

| Data | files | terms | GMMs | SVMs | PAMIR |
|---|---|---|---|---|---|
| Freesound | 15780 | 1392 | 2400 hrs | 59 hrs | 6 hrs |
| SFX | 3431 | 239 | 960 hrs | 5 hrs | 3 hrs |
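The relative speed of the three methods follows directly from the hours listed in Table 3. The short sketch below only divides those published numbers; the dictionary layout and the `speedups` helper are illustrative choices.

```python
# Total experimental time in hours, copied from Table 3.
hours = {
    "Freesound": {"GMM": 2400, "SVM": 59, "PAMIR": 6},
    "SFX": {"GMM": 960, "SVM": 5, "PAMIR": 3},
}

def speedups(times, baseline="PAMIR"):
    """Ratio of each method's time to the baseline method's time."""
    return {m: t / times[baseline] for m, t in times.items() if m != baseline}

for dataset, times in hours.items():
    print(dataset, {m: round(r, 1) for m, r in speedups(times).items()})
# Freesound {'GMM': 400.0, 'SVM': 9.8}
# SFX {'GMM': 320.0, 'SVM': 1.7}
```

On Freesound this gives the factors of about 10 (SVM) and 400 (GMM) quoted in Section 5. On the smaller SFX set the gap to SVM is much narrower.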

6. DISCUSSION

We developed a scalable system that can retrieve sounds by their acoustic content, opening the prospect of searching vast quantities of sound data using text queries. This was achieved by learning a mapping between textual tags and acoustic features, and can be done for a large open set of textual terms. Our results show that content-based retrieval for general sounds, spanning acoustic categories beyond speech and music, can be accurately achieved, even with thousands of possible terms and noisy real-world labels. Importantly, the system can be rapidly trained on a large set of labeled sound data, and could then be used to retrieve sounds from a much larger (e.g. Internet) repository of unlabeled data.

We compared three learning approaches for modeling the relation between acoustics and textual tags. The most important conclusion is that good performance can be achieved with the highly scalable method called PAMIR, which was earlier developed for content-based image retrieval. For our dataset, this approach was 10 times faster than multi-class SVM, and 1000 times faster than a generative GMM approach. This suggests that the retrieval system can be further scaled to handle considerably larger datasets.

We used a binary measure to tell if a file is relevant to a query. In some cases, the training data also provides a continuous relevance score. This could help training by refining the ranking of mildly vs. strongly relevant documents. Continuous ranking measures can be easily incorporated into PAMIR since it is based on comparing pairs of documents. This is achieved by adding constraints on the order of two positive documents, with one having a higher relevance score than the other. To handle continuous relevance with SVM, one would have to modify the training objective from a classification task (is this document related to this term?) to a regression task (how much is this document related to this term?). Any regression approach can be used, including SVM regression, but it is unclear whether it would scale and still provide good performance. Finally, it is not clear how the GMM approach could be modified to handle continuous relevance.
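The pairwise idea can be illustrated with a generic passive-aggressive ranking step in the style of [5]. This is a sketch under assumptions, not the implementation used in our experiments: the bilinear score q^T W a, the unit margin, the dense list-of-lists weight matrix, and all function names are choices made for illustration. Continuous relevance enters only through `ordered_pairs`, which also emits pairs of two positive documents whenever their relevance scores differ.

```python
def score(W, q, a):
    """Bilinear relevance score q^T W a for query vector q and audio vector a."""
    return sum(q[t] * sum(W[t][d] * a[d] for d in range(len(a)))
               for t in range(len(q)))

def pa_update(W, q, a_hi, a_lo, C=1.0):
    """One passive-aggressive step asking a_hi to outscore a_lo by a margin of 1."""
    loss = max(0.0, 1.0 - score(W, q, a_hi) + score(W, q, a_lo))
    diff = [h - l for h, l in zip(a_hi, a_lo)]
    # Squared Frobenius norm of the outer product q diff^T.
    norm_sq = sum(x * x for x in q) * sum(x * x for x in diff)
    if loss == 0.0 or norm_sq == 0.0:
        return W  # constraint already satisfied: passive step
    tau = min(C, loss / norm_sq)  # step size capped by the aggressiveness C
    return [[W[t][d] + tau * q[t] * diff[d] for d in range(len(diff))]
            for t in range(len(q))]

def ordered_pairs(relevance):
    """All index pairs (i, j) with relevance[i] > relevance[j]."""
    n = len(relevance)
    return [(i, j) for i in range(n) for j in range(n) if relevance[i] > relevance[j]]

def train(W, q, docs, relevance, C=1.0, epochs=10):
    """Sweep repeatedly over all ordered pairs of documents for a single query."""
    for _ in range(epochs):
        for i, j in ordered_pairs(relevance):
            W = pa_update(W, q, docs[i], docs[j], C)
    return W
```

With binary labels, `ordered_pairs` reduces to the usual positive-versus-negative pairs, so the binary setting is a special case of this sketch.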

The progress of large-scale content-based audio retrieval is largely limited by the availability of high-quality labeled data. Approaches for collecting more labeled sounds could include computer games [18] and the use of closed-captioned movies. User-contributed data is an invaluable source of labels, but also has important limitations. In particular, users tend to provide annotations with information that does not exist in the recording. This phenomenon becomes most critical in the vision domain, where users avoid stating the obvious and describe the context of an image rather than the objects that appear in it. We observe similar effects in the sound domain. In addition, different users may describe the same sound with different terms, and this may cause under-estimates of the system performance.

This problem is related to the issue of out-of-dictionary searches, where search queries use terms that were not observed during training. Standard techniques for addressing this issue make use of additional semantic knowledge about the queries. For instance, queries can be expanded to include additional terms like synonyms or closely related search terms, based on semantic dictionaries or query logs [10]. This aspect is orthogonal to the problem of matching sounds and text queries and was not addressed in this paper.

This paper focused on the feasibility of a large-scale content-based approach to sound retrieval, and all the methods we compared used the standard and widely used MFCC features. The precision and computational efficiency of the PAMIR system can now help to drive progress on sound retrieval, making it possible to compare different representations of sounds and queries. In particular, we are currently testing sound representations based on auditory models, which are intended to better capture some perceptual categories in general sounds. Such models could be beneficial in handling the diverse auditory scenes that can be found in the general auditory landscape.

7. ACKNOWLEDGMENTS

We thank D. Grangier for useful discussions and help with earlier versions of this manuscript, and Xavier Serra for discussions, information sharing and support with the Freesound database.

8. REFERENCES

[1] A. Amir, G. Iyengar, J. Argillander, M. Campbell, A. Haubold, S. Ebadollahi, F. Kang, M. R. Naphade, A. Natsev, J. R. Smith, J. Tesic, and T. Volkmer. IBM research TRECVID-2005 video retrieval system. In TREC Video Workshop, 2005.

[2] Anonymous. http://sound1sound.googlepages.com.

[3] J. J. Aucouturier. Ten Experiments on the Modelling of Polyphonic Timbre. PhD thesis, Univ. Paris 6, 2006.

[4] R. Baeza-Yates and B. Ribeiro-Neto. Modern Information Retrieval. Addison Wesley, England, 1999.

[5] K. Crammer, O. Dekel, J. Keshet, S. Shalev-Shwartz, and Y. Singer. Online passive-aggressive algorithms. J. of Machine Learning Research (JMLR), 7, 2006.

[6] Freesound. http://freesound.iua.upf.edu.

[7] J. Gauvain and C. Lee. Maximum a posteriori estimation for multivariate Gaussian mixture observation of Markov chains. In IEEE Trans. on Speech Audio Process., volume 2, pages 291-298, 1994.

[8] D. Grangier, F. Monay, and S. Bengio. A discriminative approach for the retrieval of images from text queries. In European Conference on Machine Learning, ECML, Lecture Notes in Computer Science, volume LNCS 4212. Springer-Verlag, 2006.

[9] T. Joachims. Optimizing search engines using clickthrough data. In International Conference on Knowledge Discovery and Data Mining (KDD), 2002.

[10] R. Jones, B. Rey, O. Madani, and W. Greiner. Generating query substitutions. In WWW '06: Proceedings of the 15th international conference on World Wide Web, pages 387-396, New York, NY, USA, 2006. ACM.

[11] J. Mariethoz and S. Bengio. A comparative study of adaptation methods for speaker verification. In Proc. Int. Conf. on Spoken Lang. Processing, ICSLP, 2002.

[12] L. Rabiner and B.-H. Juang. Fundamentals of speech recognition. Prentice Hall, first edition, 1993.

[13] D. A. Reynolds, T. F. Quatieri, and R. B. Dunn. Speaker verification using adapted Gaussian mixture models. Digital Signal Processing, 10(1-3), 2000.

[14] M. Slaney, I. Center, and C. San Jose. Semantic-audio retrieval. In ICASSP, volume 4, 2002.

[15] D. Turnbull, L. Barrington, D. Torres, and G. Lanckriet. Towards musical query by semantic description using the cal500 data set. In SIGIR '07: 30th annual international ACM SIGIR conference on Research and development in information retrieval, pages 439-446, New York, NY, USA, 2007. ACM.

[16] D. Turnbull, L. Barrington, D. Torres, and G. Lanckriet. Semantic annotation and retrieval of music and sound effects. In IEEE Transactions on Audio, Speech and Language Processing, 2008.

[17] V. Vapnik. The nature of statistical learning theory. Springer-Verlag, 1995.

[18] L. von Ahn and L. Dabbish. Labeling images with a computer game. In Proceedings of the SIGCHI conference on Human factors in computing systems, pages 319-326. ACM Press New York, NY, USA, 2004.

[19] P. Wan and L. Lu. Content-based audio retrieval: a comparative study of various features and similarity measures. Proceedings of SPIE, 6015:60151H, 2005.

112

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Test SQL QuestionsDocument1 pageTest SQL QuestionsVinay Chourasia0% (1)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- NormalizationDocument7 pagesNormalizationDaniel Kerandi0% (1)

- Postgresql InterviewQuestionDocument5 pagesPostgresql InterviewQuestionmontosh100% (1)

- Information Bulletin No. AAV-002-2021 Notice of Hearing of Resident Registration Election Board RERB Will Convene On 19 April at 4 00 PM 1600HDocument55 pagesInformation Bulletin No. AAV-002-2021 Notice of Hearing of Resident Registration Election Board RERB Will Convene On 19 April at 4 00 PM 1600HBuddy veluzNo ratings yet

- FactoryTalk View SE - Unable To Access ODBC Data SourceDocument2 pagesFactoryTalk View SE - Unable To Access ODBC Data Sourcepankaj mankarNo ratings yet

- Addmrpt 1 12780 12781Document12 pagesAddmrpt 1 12780 12781PraveenNo ratings yet

- A Weak Security Notion For Visual Secret Sharing Schemes: Mitsugu Iwamoto, Member, IEEEDocument11 pagesA Weak Security Notion For Visual Secret Sharing Schemes: Mitsugu Iwamoto, Member, IEEEkeerthisivaNo ratings yet

- 12 Understanding NoSQL PDFDocument34 pages12 Understanding NoSQL PDFkeerthisivaNo ratings yet

- IP LabDocument48 pagesIP LabGoudam RajNo ratings yet

- Syllabus 6thDocument5 pagesSyllabus 6thkeerthisivaNo ratings yet

- CS1203 System Software UNIT I Question AnsDocument10 pagesCS1203 System Software UNIT I Question AnskeerthisivaNo ratings yet

- 4th Module DBMS NotesDocument23 pages4th Module DBMS NotesArun GodavarthiNo ratings yet

- Historian AdminDocument259 pagesHistorian AdminMauricioNo ratings yet

- 2781A Designing Microsoft SQL Server 2005 Server-Side SolutionsDocument424 pages2781A Designing Microsoft SQL Server 2005 Server-Side SolutionsihtminNo ratings yet

- 07b CommVaultDocument10 pages07b CommVaultnetapp000444No ratings yet

- Search Engine Optimization Fundamentals: Lesson 1.5: Meta Descriptions Help Too!Document32 pagesSearch Engine Optimization Fundamentals: Lesson 1.5: Meta Descriptions Help Too!Kiran KalyanamNo ratings yet

- Oracle Forms 10g - Demos, Tips and TechniquesDocument17 pagesOracle Forms 10g - Demos, Tips and TechniquesYatinNo ratings yet

- Multi Format LFDocument17 pagesMulti Format LFIssac NewtonNo ratings yet

- MAA / Data Guard 10g Setup Guide - Creating A Single Instance Physical Standby For A RAC PrimaryDocument5 pagesMAA / Data Guard 10g Setup Guide - Creating A Single Instance Physical Standby For A RAC PrimarygourabchakrabortyNo ratings yet

- Monitoring and Tuning Oracle RAC Database: Practice 7Document14 pagesMonitoring and Tuning Oracle RAC Database: Practice 7Abdo MohamedNo ratings yet

- Jtree, Jtable Java ProgrammingDocument19 pagesJtree, Jtable Java ProgrammingdwijaNo ratings yet

- D73668GC21 AppcDocument7 pagesD73668GC21 Appcpraveen2kumar5733No ratings yet

- SQL Server Ground To CloudDocument167 pagesSQL Server Ground To CloudPaul ZgondeaNo ratings yet

- Hariharan - DW Expert - BI Developer - Data ModellerDocument2 pagesHariharan - DW Expert - BI Developer - Data ModellerHari HaranNo ratings yet

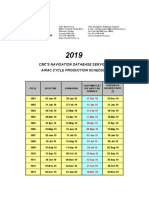

- Airac Cycle Production Schedule CMC'S Navigation Database ServicesDocument4 pagesAirac Cycle Production Schedule CMC'S Navigation Database ServicesLuis LanderosNo ratings yet

- Mysql Replication Excerpt 5.0 enDocument73 pagesMysql Replication Excerpt 5.0 enone97LokeshNo ratings yet

- PL Exception HandalingDocument6 pagesPL Exception HandalinggirirajNo ratings yet

- Al-Jabar: Jurnal Pendidikan Matematika Vol. 7, No. 2, 2016, Hal 231 - 248Document18 pagesAl-Jabar: Jurnal Pendidikan Matematika Vol. 7, No. 2, 2016, Hal 231 - 248nenengNo ratings yet

- Scenario-1 (RAHUL) : Section1Document18 pagesScenario-1 (RAHUL) : Section1DotNo ratings yet

- Pega CSSA Session 02Document14 pagesPega CSSA Session 02tariq aliNo ratings yet

- Oracle 12c TOC PDFDocument18 pagesOracle 12c TOC PDFDEVESH BHOLE100% (1)

- OODBMSDocument9 pagesOODBMSmaheshboobalanNo ratings yet

- Data Analyst Interview Questions To Prepare For in 2018Document17 pagesData Analyst Interview Questions To Prepare For in 2018Rasheeq RayhanNo ratings yet

- Database Design and Introduction To MySQL Day - 5Document16 pagesDatabase Design and Introduction To MySQL Day - 5ABHI GOUDNo ratings yet

- DWDMDocument2 pagesDWDMMahima SharmaNo ratings yet