A Concept-based Model for Enhancing Text Categorization

Shady Shehata
Pattern Analysis and Machine Intelligence (PAMI) Research Group
Electrical and Computer Engineering Department
University of Waterloo
shady@pami.uwaterloo.ca

Fakhri Karray
Pattern Analysis and Machine Intelligence (PAMI) Research Group
Electrical and Computer Engineering Department
University of Waterloo
karray@pami.uwaterloo.ca

Mohamed Kamel
Pattern Analysis and Machine Intelligence (PAMI) Research Group
Electrical and Computer Engineering Department
University of Waterloo
mkamel@pami.uwaterloo.ca

ABSTRACT

Most text categorization techniques are based on word and/or phrase analysis of the text. Statistical analysis of a term's frequency captures the importance of the term within a document only. However, two terms can have the same frequency in their documents while one term contributes more to the meaning of its sentences than the other. Thus, the underlying model should indicate terms that capture the semantics of text. In this case, the model can capture terms that present the concepts of the sentence, which leads to discovering the topic of the document.

A new concept-based model that analyzes terms on the sentence and document levels, rather than the traditional analysis of the document only, is introduced. The concept-based model can effectively discriminate between terms that are non-important with respect to sentence semantics and terms that hold the concepts representing the sentence meaning.

The proposed model consists of a concept-based statistical analyzer, a conceptual ontological graph representation, and a concept extractor. Each term that contributes to the sentence semantics is assigned two different weights, one by the concept-based statistical analyzer and one by the conceptual ontological graph representation. These two weights are combined into a new weight. The concepts that have the maximum combined weights are selected by the concept extractor.

A set of experiments using the proposed concept-based model on different datasets in text categorization is conducted. The experiments compare traditional weighting with the concept-based weighting obtained by the combined approach of the concept-based statistical analyzer and the conceptual ontological graph. The evaluation relies on two quality measures, the macro-averaged F1 and the error rate. Both quality measures improve when the newly developed concept-based model is used to enhance the quality of text categorization.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

KDD'07, August 12-15, 2007, San Jose, California, USA.

Copyright 2007 ACM 978-1-59593-609-7/07/0008 ...$5.00.

Research Track Paper

Categories and Subject Descriptors

I.2.7 [Artificial Intelligence]: Natural Language Processing - Language Parsing and Understanding

General Terms

Theory, Design, Algorithms, Experimentation

Keywords

Concepts, concept-based categorization

1. INTRODUCTION

Natural Language Processing (NLP) is both a modern computational technology and a method of investigating and evaluating claims about human language itself. NLP is a term that links back into the history of Artificial Intelligence (AI), the general study of cognitive function by computational processes, with an emphasis on the role of knowledge representations. Representations of human knowledge of the world are required in order for computers to understand human language.

Text mining attempts to discover new, previously unknown information by applying techniques from natural language processing and data mining. Categorization, one of the traditional text mining techniques, is a supervised learning paradigm in which categorization methods try to assign a document to one or more categories based on the document content. Classifiers are trained from examples to conduct the category assignment automatically. To facilitate effective and efficient learning, each category is treated as a binary classification problem: the issue is whether or not a document should be assigned to a particular category.

Most current document categorization methods are based on the vector space model (VSM) [1, 15, 14], which is a widely used data representation. The VSM represents each document as a feature vector of the terms (words or phrases) in the document. Each feature vector contains term weights (usually term frequencies) of the terms in the document. The similarity between documents is measured by one of several similarity measures that are based on such a feature vector, for example the cosine measure and the Jaccard measure.
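To make the VSM description concrete, the following is a minimal Python sketch of term-frequency feature vectors compared with the cosine measure and the Jaccard measure. The function names are illustrative, and the Jaccard form used here is the extended (Tanimoto) coefficient for weighted vectors, one common variant; the text does not specify which variant is meant.

```python
import math
from collections import Counter
from typing import Dict, List

Vector = Dict[str, float]

def tf_vector(tokens: List[str]) -> Vector:
    """VSM feature vector whose term weights are raw term frequencies."""
    return dict(Counter(tokens))

def cosine(u: Vector, v: Vector) -> float:
    """Cosine measure: dot product divided by the product of the vector lengths."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def jaccard(u: Vector, v: Vector) -> float:
    """Extended (Tanimoto) Jaccard measure for weighted vectors."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    denom = sum(w * w for w in u.values()) + sum(w * w for w in v.values()) - dot
    return dot / denom if denom else 0.0

d1 = tf_vector("john hits the ball".split())
d2 = tf_vector("john kicks the ball".split())
print(round(cosine(d1, d2), 3), round(jaccard(d1, d2), 3))  # 0.75 0.6
```

The two documents share three of their four terms, so the cosine measure is 3 / (2 x 2) = 0.75 and the extended Jaccard measure is 3 / (4 + 4 - 3) = 0.6.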

Usually, in text categorization techniques, the frequency of a term (word or phrase) is computed to explore the importance of the term in the document. However, two terms can have the same frequency in a document while one term contributes more to the meaning of its sentences than the other. Some terms provide the key concepts in a sentence and indicate what the sentence is about. Extracting the relations between verbs and their arguments in the same sentence makes it possible to analyze terms within a sentence: the information about who is doing what to whom clarifies the contribution of each term in a sentence to the meaning of the main topic of that sentence.

In this paper, a novel concept-based model is proposed. In the proposed model, each sentence is labeled by a semantic role labeler that determines the terms which contribute to the sentence semantics, together with their semantic roles in the sentence. Each term that has a semantic role in the sentence is called a concept. A concept can be either a word or a phrase, depending entirely on the semantic structure of the sentence. The concept-based model analyzes each term within a sentence and a document using the following three components. The first component is the concept-based statistical analyzer, which analyzes each term on the sentence and the document levels. After each sentence is labeled by a semantic role labeler, each term is statistically weighted based on its contribution to the meaning of the sentence. This weight discriminates between non-important and important terms with respect to the sentence semantics. The second component is the Conceptual Ontological Graph (COG) representation, which is based on conceptual graph theory and utilizes graph properties. The COG representation captures the semantic structure of each term within a sentence and a document, rather than the term frequency within a document only. After each sentence is labeled by a semantic role labeler, all the labeled terms are placed in the COG representation according to their contribution to the meaning of the sentence. Some terms could provide shallow concepts about the meaning of a sentence, while other terms could provide key concepts that hold the actual meaning of a sentence. Each concept in the COG representation is weighted based on its position in the representation. Thus, the COG representation provides a definite separation among the concepts that contribute to the meaning of a sentence and presents them in a hierarchical manner. The key concepts are captured and weighted based on their positions in the COG representation.

At this point, concepts are assigned two different weights by two different techniques, the concept-based statistical analyzer and the COG representation. Both techniques achieve the same functionality in different ways: each outputs the concepts it weights as important with respect to the sentence semantics. However, the weighted concepts computed by the two techniques may not be exactly the same; concepts that are important to the concept-based statistical analyzer could be non-important to the COG representation and vice versa. Therefore, the third component, the concept extractor, combines the two different weights computed by the concept-based statistical analyzer and the COG representation to denote the concepts that are important with respect to both techniques. The extracted top concepts are used to build standard normalized feature vectors using the standard vector space model (VSM) for the purpose of text categorization.

Weighting based on the matching of concepts in each document is shown to have a more significant effect on the quality of text categorization, because the resulting similarity is insensitive to noisy terms that can lead to an incorrect similarity measure. The concepts are less sensitive to noise when it comes to calculating normalized feature vectors, because they are originally extracted by the semantic role labeler and analyzed using two different techniques with respect to the sentence and document levels. Thus, matches among these concepts are less likely to be found in documents that are not relevant to a category. The results produced by the proposed concept-based model in text categorization have higher quality than those produced by traditional techniques.

The important terms used in this paper are explained as follows:

- Verb-argument structure: e.g., "John hits the ball". "hits" is the verb; "John" and "the ball" are the arguments of the verb "hits",

- Label: a label is assigned to an argument, e.g., "John" has the subject (or Agent) label and "the ball" has the object (or Theme) label,

- Term: either an argument or a verb. A term is also either a word or a phrase (a sequence of words),

- Concept: in the newly proposed model, a concept is a labeled term.

The rest of this paper is organized as follows. Section 2 presents background on thematic roles. Section 3 introduces the concept-based model. The experimental results are presented in Section 4. The last section summarizes the paper and suggests future work.

2. THEMATIC ROLES

Generally, the semantic structure of a sentence can be characterized by a form of verb argument structure. This underlying structure allows the creation of a composite meaning representation from the meanings of the individual concepts in a sentence. The verb argument structure permits a link between the arguments in the surface structures of the input text and their associated semantic roles.

Consider the following example: "My daughter wants a doll." This example has the following syntactic argument frame: (Noun Phrase (NP) wants NP). In this case, some facts could be derived for the particular verb "wants": (1) there are two arguments to this verb, (2) both arguments are NPs, (3) the first argument, "my daughter", is pre-verbal and plays the role of the subject, and (4) the second argument, "a doll", is post-verbal and plays the role of the direct object. The study of the roles associated with verbs is referred to as thematic role or case role analysis [8]. Thematic roles, first proposed by Gruber and Fillmore [4], are sets of categories that provide a shallow semantic language to characterize the verb arguments.

Recently, there have been many attempts to label thematic roles in a sentence automatically. Gildea and Jurafsky [6] were the first to apply a statistical learning technique to the FrameNet database. They presented a discriminative model for determining the most probable role for a constituent, given the frame, predicator, and other features. These probabilities, trained on the FrameNet database, depend on the verb, the head words of the constituents, the voice of the verb (active, passive), the syntactic category (S, NP, VP, PP, and so on) and the grammatical function (subject and object) of the constituent to be labeled. The authors tested their model on a pre-release version of the FrameNet I corpus with approximately 50,000 sentences and 67 frame types. Gildea and Jurafsky's model was trained by first using Collins' parser [2], and then deriving its features from the parse, the original sentence, and the correct FrameNet annotation of that sentence.

A machine learning algorithm for shallow semantic parsing was proposed in [13][12][11] as an extension of the work in [6]. The algorithm is based on Support Vector Machines (SVMs), which result in improved performance over the earlier classifiers of [6]. Shallow semantic parsing is formulated as a multi-class categorization problem: SVMs are used to identify the arguments of a given verb in a sentence and classify them by the semantic roles that they play, such as AGENT, THEME, and GOAL.

3. CONCEPT-BASED MODEL

A raw text document is the input to the proposed model. Each document has well-defined sentence boundaries. Each sentence in the document is labeled automatically based on the PropBank notation [9]. After running the semantic role labeler [9], each sentence in the document might have one or more labeled verb argument structures. The number of generated labeled verb argument structures depends entirely on the amount of information in the sentence: a sentence that has many labeled verb argument structures includes many verbs associated with their arguments. The labeled verb argument structures, the output of the role labeling task, are captured and analyzed by the concept-based model on the sentence and document levels.

In this model, both the verb and the argument are considered as terms. One term can be an argument to more than one verb in the same sentence, which means that the term can have more than one semantic role in the same sentence. In such cases, the term plays important semantic roles that contribute to the meaning of the sentence. In the concept-based model, a labeled term, either a word or a phrase, is considered a concept.

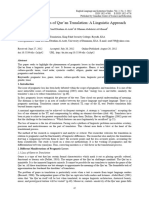

The proposed concept-based model consists of a concept-based statistical analyzer, a COG representation, and a concept extractor. The aim of the concept-based statistical analyzer is to weight each term on the sentence and the document levels rather than the traditional analysis of the document only. The conceptual ontological graph representation presents the sentence structure while maintaining the sentence semantics in the original document. Each concept in the COG representation is weighted based on its position in the representation. The concept extractor combines the two different weights computed by the concept-based statistical analyzer and the COG representation to select the concepts with the maximum weights.

The proposed model assigns two new weights to each concept in a sentence. The newly proposed weights $weight_{stat}$ and $weight_{COG}$ are computed by the concept-based statistical analyzer and the COG representation, respectively.

The proposed model combines the work discussed in [16] and [17] into one concept-based model. This combination is achieved by presenting the work in [16] as the concept-based statistical analyzer component and the work in [17] as the COG representation component.

The work discussed in [16] enhances text clustering quality using a statistical method. The proposed model utilizes the work of [16] to achieve statistical concept-based weighting, $weight_{stat}$, on the sentence and the document levels and applies it to text categorization. This weighting discriminates between non-important concepts and the key concepts with respect to the sentence meaning.

Figure 1: Concept-based Model (components: Text Pre-processor; language-dependent Natural Language Processing with POS Tagger, Syntax Parser, and Semantic Role Labeler; Sentence Separator; Concept-based Statistical Analyzer (tf: term frequency, ctf: conceptual term frequency); Conceptual Ontological Graph (COG) Representation; Concept Extractor; Top Concepts; Doc-Concept Matrix; SVM, NB, and Rocchio classifiers)

The work discussed in [17] enhances text retrieval quality by extracting concepts from the COG representation based on their positions only. The concept-based model proposes a new weight for each position in the COG representation to achieve a more accurate analysis with respect to the sentence semantics. Thus, each concept in the COG representation is assigned a proposed weight, $weight_{COG}$, based on its position in the representation.

The proposed model takes advantage of the two techniques discussed in [16, 17] by first assigning two proposed weights to each concept in a sentence. Secondly, the proposed model combines the proposed weights into a new combined weight and, by using the concept extractor, extracts the top concepts, which have the maximum combined weights. Lastly, the combined weights are used to build normalized feature vectors for text categorization, as depicted in Fig. 1.

3.1 Concept-based Statistical Analyzer

The objective of this task is to achieve a concept-based statistical analysis of terms (words or phrases) on the sentence and document levels rather than a single-term analysis in the document set only.

To analyze each concept at the sentence level, a concept-based frequency measure called the conceptual term frequency (ctf) is utilized. The ctf is the number of occurrences of concept c in the verb argument structures of sentence s. A concept c that frequently appears in different verb argument structures of the same sentence s has the principal role of contributing to the meaning of s.


To analyze each concept at the document level, the term frequency tf, the number of occurrences of a concept (word or phrase) c in the original document, is calculated.
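A minimal sketch of the two frequency measures, assuming each sentence is already represented as a list of verb argument structures, each structure being a list of cleaned labeled terms (tuples of words). Counting an occurrence as a contiguous run of the concept's words inside one labeled term is this sketch's reading of "occurrences of concept c in verb argument structures"; the helper names and the hand-labeled toy sentence are hypothetical.

```python
from typing import List, Tuple

Term = Tuple[str, ...]        # one cleaned labeled term (a verb or an argument)
Structure = List[Term]        # one verb argument structure
Sentence = List[Structure]    # all verb argument structures of one sentence

def count_contiguous(words: Term, concept: Term) -> int:
    """Count occurrences of `concept` as a contiguous run of words inside `words`."""
    n = len(concept)
    if n == 0:
        return 0
    return sum(1 for k in range(len(words) - n + 1) if words[k:k + n] == concept)

def ctf(concept: Term, sentence: Sentence) -> int:
    """Conceptual term frequency: occurrences of `concept` in the sentence's verb argument structures."""
    return sum(count_contiguous(term, concept) for structure in sentence for term in structure)

def tf(concept: Term, document_words: Term) -> int:
    """Term frequency: occurrences of `concept` in the cleaned document text."""
    return count_contiguous(document_words, concept)

# Hypothetical labeling of "John saw the ball that Mary hit. The ball bounced." after stop-word removal.
sentence = [
    [("john",), ("saw",), ("ball", "mary", "hit")],  # [ARG0 John] [TARGET saw] [ARG1 the ball that Mary hit]
    [("ball",), ("mary",), ("hit",)],                # [ARG1 the ball] [ARG0 Mary] [TARGET hit]
]
document = ("john", "saw", "ball", "mary", "hit", "ball", "bounced")

print(ctf(("ball",), sentence), tf(("ball",), document))            # 2 2
print(ctf(("mary", "hit"), sentence), tf(("mary", "hit"), document))  # 1 1
```

"ball" appears in two verb argument structures of the first sentence, so its ctf is 2, while "john" appears in only one and gets a ctf of 1.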

The concept-based weighting is one of the main factors that capture the importance of a concept in a sentence and a document. Thus, the concepts that have the highest weights are captured and extracted.

The following concept-based weighting, $weight_{stat}$, is used to discriminate between terms that are non-important with respect to sentence semantics and terms that hold the concepts presenting the meaning of the sentence:

$$weight_{stat_i} = tfweight_i + ctfweight_i \qquad (1)$$

In calculating the value of $weight_{stat_i}$ in equation (1), the $tfweight_i$ value presents the weight of concept i in document d at the document level, and the $ctfweight_i$ value presents the weight of concept i in document d at the sentence level, based on the contribution of concept i to the semantics of the sentences in d. The sum of the two values $tfweight_i$ and $ctfweight_i$ presents an accurate measure of the contribution of each concept to the meaning of the sentences and to the topics mentioned in a document.

In equation (2), the $tf_{ij}$ value is normalized by the length of the document vector of the term frequency $tf_{ij}$ in document d, where j = 1, 2, ..., cn:

$$tfweight_i = \frac{tf_{ij}}{\sqrt{\sum_{j=1}^{cn} (tf_{ij})^2}} \qquad (2)$$

where cn is the total number of concepts that have a term frequency value in document d.

In equation (3), the $ctf_{ij}$ value is normalized by the length of the document vector of the conceptual term frequency $ctf_{ij}$ in document d, where j = 1, 2, ..., cn:

$$ctfweight_i = \frac{ctf_{ij}}{\sqrt{\sum_{j=1}^{cn} (ctf_{ij})^2}} \qquad (3)$$

where cn is the total number of concepts that have a conceptual term frequency value in document d.
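Equations (1) to (3) can be sketched as follows. The sketch assumes that a single document-level tf and ctf count per concept has already been computed; how sentence-level ctf values are aggregated to the document level is not specified here, so that input dictionary is an assumption, and the counts in the usage lines are hypothetical.

```python
import math
from typing import Dict

def l2_normalize(freqs: Dict[str, float]) -> Dict[str, float]:
    """Divide each frequency by the Euclidean length of the document's frequency vector (eqs. 2 and 3)."""
    length = math.sqrt(sum(f * f for f in freqs.values()))
    return {c: (f / length if length else 0.0) for c, f in freqs.items()}

def weight_stat(tf_counts: Dict[str, float], ctf_counts: Dict[str, float]) -> Dict[str, float]:
    """weight_stat_i = tfweight_i + ctfweight_i (eq. 1); a missing value counts as 0."""
    tfw = l2_normalize(tf_counts)
    ctfw = l2_normalize(ctf_counts)
    return {c: tfw.get(c, 0.0) + ctfw.get(c, 0.0) for c in tfw.keys() | ctfw.keys()}

# Hypothetical counts for two concepts of one document d.
tf_counts = {"electronic techniques": 4, "noted": 3}
ctf_counts = {"electronic techniques": 3, "noted": 1}
for concept, w in sorted(weight_stat(tf_counts, ctf_counts).items()):
    print(concept, round(w, 4))
```

Here the tf vector (4, 3) has length 5, so the tfweights are 0.8 and 0.6, and the ctfweights are 3/sqrt(10) and 1/sqrt(10); the concept with the higher ctf ends up with the clearly larger $weight_{stat}$.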

3.2 Conceptual Ontological Graph (COG)

The COG representation is a conceptual graph G = (C, R), where the concepts of the sentence are represented as vertices (C) and the relations among the concepts, such as agents, objects, and actions, are represented as edges (R). C is a set of nodes $\{c_1, c_2, \ldots, c_n\}$, where each node c represents a concept in the sentence or a nested conceptual graph G; R is a set of edges $\{r_1, r_2, \ldots, r_m\}$, such that each edge r is the relation between an ordered pair of nodes $(c_i, c_j)$.

The outputs of the role labeling task, which are verbs and their arguments, are presented as concepts with relations in the COG representation. This allows the use of more informative concept matching at the sentence level and the document level rather than individual word matching.

The COG representation provides different nested levels of concepts in a hierarchical manner. These levels are constructed based on the importance of the concepts in a sentence, which facilitates analyzing the key concepts in the sentence. The hierarchical representation of the COG provides a definite separation among the concepts that contribute to the meaning of the sentence. This separation is needed to distinguish between the shallow concepts and the key concepts in a sentence. The concepts (labeled terms) are placed in the COG representation according to the amount of overlap between these terms with respect to their words.

To present the levels of the COG hierarchy, five types of verb argument structures are utilized and assigned to their corresponding conceptual graphs:

- One: there is only one generated verb argument structure.

- Main: there is more than one generated verb argument structure, and the main structure has the maximum number of terms (arguments) that refer to other terms in the rest of the verb argument structures.

- Container: there is more than one generated verb argument structure, and the container structure refers to the other arguments but, at the same time, does not have the maximum number of referent terms.

- Referenced: the referenced structure has terms (verb or arguments) that are referred to by terms in either the main or the container structure.

- Unreferenced: the terms in the unreferenced structure are not referred to by any other terms.

This scheme creates a conceptual graph for each verb argument structure. Each type of verb argument structure is assigned to its corresponding conceptual graph. The COG presents the conceptual graphs as levels, which are determined according to their types.

A new measure, $L_{COG}$, is proposed to rank concepts with respect to the sentence semantics in the COG representation. The proposed $L_{COG}$ measure is assigned to the One, Unreferenced, Main, Container, and Referenced levels in the COG representation with the values 1, 2, 3, 4, and 5, respectively. Instead of selecting concepts from only one level in the COG representation, concepts in all levels of the COG representation are considered and weighted.
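The five structure types and their $L_{COG}$ values can be sketched as below. The construction in [17] is not reproduced here: this sketch assumes that a labeled term refers to a term of another structure when it strictly contains all of that term's words, counts such references per structure, and simplifies terms to word sets. The function names are hypothetical. Under these assumptions, the example sentence of Section 3.4 yields Main, Referenced, and Container for its three structures.

```python
from typing import Dict, FrozenSet, List

Term = FrozenSet[str]     # a cleaned labeled term, simplified to a set of words
Structure = List[Term]    # the labeled terms of one verb argument structure

# L_COG values stated in the text for each COG level.
L_COG: Dict[str, int] = {"One": 1, "Unreferenced": 2, "Main": 3, "Container": 4, "Referenced": 5}

def refers(t: Term, u: Term) -> bool:
    """ASSUMPTION: term t refers to term u when t strictly contains every word of u."""
    return u < t

def classify(structures: List[Structure]) -> List[str]:
    """Assign a COG level type to each verb argument structure of one sentence."""
    n = len(structures)
    if n <= 1:
        return ["One"] * n
    refs = [0] * n              # number of references each structure makes to other structures
    referenced = [False] * n    # whether some other structure refers to this one
    for a in range(n):
        for b in range(n):
            if a == b:
                continue
            for t in structures[a]:
                for u in structures[b]:
                    if refers(t, u):
                        refs[a] += 1
                        referenced[b] = True
    main = max(range(n), key=lambda k: refs[k])  # first structure with the most references
    levels = []
    for k in range(n):
        if refs[k] > 0:
            levels.append("Main" if k == main else "Container")
        elif referenced[k]:
            levels.append("Referenced")
        else:
            levels.append("Unreferenced")
    return levels

def words(phrase: str) -> Term:
    return frozenset(phrase.split())

sentence = [
    [words("noted"), words("electronic techniques developed defense effort eventually commerce industry")],
    [words("electronic techniques"), words("developed"), words("defense effort")],
    [words("electronic techniques developed defense effort"), words("eventually"), words("commerce industry")],
]
levels = classify(sentence)
print(levels, [L_COG[level] for level in levels])
```

The first structure's long ARG1 contains the terms of both other structures (six references), and the third structure's ARG1 contains the three terms of the second (three references), which is why the sketch ranks them as Main and Container.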

The proposed $weight_{COG}$ is assigned to each concept presented in the COG representation and is calculated by:

$$weight_{COG_i} = tfweight_i \times L_{COG_i} \qquad (4)$$

In equation (4), the $tfweight_i$ value presents the weight of concept i in document d at the document level, as shown in equation (2). The $L_{COG_i}$ value presents the importance of concept i in document d at the sentence level, based on the contribution of concept i to the semantics of the sentences represented by the levels of the COG representation. The product of $tfweight_i$ and $L_{COG_i}$ ranks the concepts in document d with respect to the contribution of each concept to the meaning of the sentences and to the topics mentioned in a document.
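Equation (4) can be sketched in the same style. The sketch assumes each concept has already been assigned a single COG level; the text does not say which level applies when a concept appears in several, so that mapping is an input here. The counts and level assignments in the usage lines are hypothetical.

```python
import math
from typing import Dict

# L_COG values stated in the text for each COG level.
L_COG: Dict[str, int] = {"One": 1, "Unreferenced": 2, "Main": 3, "Container": 4, "Referenced": 5}

def weight_cog(tf_counts: Dict[str, float], level_of: Dict[str, str]) -> Dict[str, float]:
    """weight_COG_i = tfweight_i * L_COG_i, with tfweight_i computed as in eq. (2)."""
    length = math.sqrt(sum(f * f for f in tf_counts.values()))
    return {
        c: (f / length if length else 0.0) * L_COG[level_of[c]]
        for c, f in tf_counts.items()
    }

# Hypothetical tf counts and level assignments.
tf_counts = {"developed": 3, "noted": 4}
level_of = {"developed": "Referenced", "noted": "Main"}
print(weight_cog(tf_counts, level_of))
```

Although "noted" has the higher tfweight (0.8 against 0.6), its Main level (3) gives it a lower $weight_{COG}$ than "developed" at the Referenced level (5): 2.4 against 3.0.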

For implementation and performance purposes, note that the COG representation maintains the identification number of each concept and each relation node rather than the values of the nodes. A hash table holds the unique terms that appear in each verb argument structure. Thus, the COG is a hierarchy of the identification numbers of the terms that appear in each verb argument structure in the sentence, which is the source of the efficiency of the representation. It is also important to note that ontology languages cannot capture the semantic-based nested conceptual graphs of the hierarchical structure of the sentence semantics.

The details of constructing the COG representation are discussed in [17].

3.3 Concept Extractor

The process of selecting the top concepts from the concepts extracted by the concept-based statistical analyzer and the COG representation is performed by the proposed Concept Extractor Algorithm.

3.3.1 Concept-based Extractor Algorithm

1. d ← $doc_i$ is a new Document
2. L is an empty List (L is a top concept list)
3. for each labeled sentence s in d do
4.     create a COG for s as in [17]
5.     $c_i$ is a new concept in s
6.     for each concept $c_i$ in s do
7.         compute $tf_i$ of $c_i$ in d
8.         compute $ctf_i$ of $c_i$ in s in d
9.         compute $weight_{stat_i}$ of concept $c_i$
10.        compute $weight_{COG_i}$ of concept $c_i$ based on $L_{COG_i}$
11.        compute $weight_{comb_i} = weight_{stat_i} \times weight_{COG_i}$
12.        add concept $c_i$ to L
13.    end for
14. end for
15. sort L in descending order based on $weight_{comb}$
16. output the max($weight_{comb}$) from list L

The concept extractor algorithm describes the process of combining $weight_{stat}$ (computed by the concept-based statistical analyzer) and $weight_{COG}$ (computed by the COG representation) into one new combined weight called $weight_{comb}$. The concept extractor selects the top concepts that have the maximum $weight_{comb}$ value.

The proposed $weight_{comb}$ is calculated by:

$$weight_{comb_i} = weight_{stat_i} \times weight_{COG_i} \qquad (5)$$

The procedure begins with processing a new document (at line 1), which has well-defined sentence boundaries. Each sentence is semantically labeled according to [9].

For each labeled sentence (in the for loop at line 3), the concepts of the verb argument structures, which represent the semantic structures of the sentence, are extracted to construct the COG representation (at line 4). The same extracted concepts are weighted by $weight_{stat}$ according to the values of tf and ctf (at lines 7, 8, and 9).

During the concept-based statistical analysis, each concept in the COG representation is also weighted with a different value, $weight_{COG}$ (at line 10). $weight_{stat}$ and $weight_{COG}$ are combined into $weight_{comb}$, and the concept is added to the concepts list L (at lines 11 and 12).

The concepts list L is sorted in descending order based on the $weight_{comb}$ values. The maximum weighted concepts are chosen as the top concepts from the concepts list L (at lines 15 and 16).

The concept extractor algorithm is capable of extracting the top concepts in a document (d) in O(m) time, where m is the number of concepts.
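A compact Python sketch of the extractor's selection step, assuming $weight_{stat}$ and $weight_{COG}$ have already been computed for every concept of the document and reading the operator in equation (5) as a product. Fully sorting L as in line 15 costs O(m log m); when only the top k concepts are needed, `heapq.nlargest` keeps the cost at O(m log k), which is a single linear pass for k = 1. The function name and the weights in the usage lines are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

def extract_top_concepts(
    w_stat: Dict[str, float], w_cog: Dict[str, float], k: int = 1
) -> List[Tuple[str, float]]:
    """Return the k concepts with the largest weight_comb = weight_stat * weight_COG (eq. 5)."""
    # Combine the two weights for every concept that has both.
    w_comb = {c: w_stat[c] * w_cog[c] for c in w_stat.keys() & w_cog.keys()}
    # nlargest returns (concept, weight_comb) pairs in descending order of weight_comb.
    return heapq.nlargest(k, w_comb.items(), key=lambda item: item[1])

# Hypothetical weights for three concepts of one document.
w_stat = {"electronic techniques": 1.5, "noted": 0.9, "eventually": 1.2}
w_cog = {"electronic techniques": 4.0, "noted": 3.0, "eventually": 2.0}
print(extract_top_concepts(w_stat, w_cog, k=2))
```

With these weights the combined values are about 6.0, 2.7, and 2.4, so "electronic techniques" and "noted" are returned; note that "eventually" has the higher $weight_{stat}$ than "noted" but loses after combination, which is the kind of disagreement between the two techniques the concept extractor is meant to resolve.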

3.4 Example of the Concept-based Model

Consider the following sentence:

"We have noted how some electronic techniques, developed for the defense effort, have eventually been used in commerce and industry."

In this sentence, the semantic role labeler identifies three target words (verbs) that represent the semantic structure of the meaning of the sentence: noted, developed, and used. Each of these verbs has its own arguments, as follows:

- [ARG0 We] [TARGET noted] [ARG1 how some electronic techniques developed for the defense effort have eventually been used in commerce and industry]

- We have noted how [ARG1 some electronic techniques] [TARGET developed] [ARGM-PNC for the defense effort] have eventually been used in commerce and industry

- We have noted how [ARG1 some electronic techniques developed for the defense effort] have [ARGM-TMP eventually] been [TARGET used] [ARGM-LOC in commerce and industry]

Argument labels¹ are numbered Arg0, Arg1, Arg2, and so on, depending on the valency of the verb in the sentence. The meaning of each argument label is defined relative to each verb in a lexicon of Frames Files [9].

Despite this generality, Arg0 is very consistently assigned an Agent-type meaning, while Arg1 has a Patient or Theme meaning almost as consistently [9]. Thus, this sentence consists of the following three verb argument structures:

1. First verb argument structure:
   [ARG0 We]
   [TARGET noted]
   [ARG1 how some electronic techniques developed for the defense effort have eventually been used in commerce and industry]

2. Second verb argument structure:
   [ARG1 some electronic techniques]
   [TARGET developed]
   [ARGM-PNC for the defense effort]

3. Third verb argument structure:
   [ARG1 some electronic techniques developed for the defense effort]
   [ARGM-TMP eventually]
   [TARGET used]
   [ARGM-LOC in commerce and industry]

¹ Each set of argument labels and their definitions is called a frameset and provides a unique identifier for the verb sense. Because the meaning of each argument number is defined on a per-verb basis, there is no straightforward mapping of meaning between arguments with the same number. For example, arg2 for the verb "send" is the recipient, while for the verb "comb" it is the thing searched for, and for the verb "fill" it is the substance filling some container [9].

After each sentence is labeled by a semantic role labeler, a cleaning step is performed to remove stop words that have no significance and to stem the words using the popular Porter Stemmer algorithm [10]. The terms generated after this step are called concepts.

In this example, stop words are removed and concepts are shown without stemming for better readability, as follows:

1. Concepts in the first verb argument structure:
   noted
   electronic techniques developed defense effort eventually commerce industry

2. Concepts in the second verb argument structure:
   electronic techniques
   developed
   defense effort

3. Concepts in the third verb argument structure:
   electronic techniques developed defense effort
   eventually
   commerce industry

As mentioned earlier in Section 1, at this point concepts are weighted using two different, independent techniques: the concept-based statistical analyzer and the COG representation. The outputs of the two techniques denote the important concepts with respect to the sentence semantics that each technique captures. However, the concepts generated by each technique may not be the same; the important concepts in one technique could be non-important to the other technique, or the other way around. Thus, the concept extractor combines the weights of both techniques and captures the top concepts that are important with respect to sentence semantics based on the combined weight.

3.4.1 Concept-based Statistical Analyzer

Note that these concepts are extracted from the same sentence. Thus, the concepts mentioned in this example sentence are:

noted
electronic techniques developed defense effort eventually commerce industry
electronic techniques
developed
defense effort
electronic techniques developed defense effort
eventually
commerce industry

Table 1: Example of Concept-based Statistical Analyzer

| Row Number | Sentence Concepts | Conceptual Term Frequency (CTF) |
|---|---|---|
| (1) | noted | 1 |
| (2) | electronic techniques developed defense effort eventually commerce industry | 1 |
| (3) | electronic techniques | 3 |
| (4) | developed | 3 |
| (5) | defense effort | 3 |
| (6) | electronic techniques developed defense effort | 2 |
| (7) | eventually | 2 |
| (8) | commerce industry | 2 |

| Row Number | Individual Concepts | Conceptual Term Frequency (CTF) |
|---|---|---|
| (9) | electronic | 3 |
| (10) | techniques | 3 |
| (11) | defense | 3 |
| (12) | effort | 3 |
| (13) | eventually | 2 |
| (14) | commerce | 2 |
| (15) | industry | 2 |

Traditional analysis methods assign the same weight to words that appear in the same sentence. The concept-based statistical analyzer, however, discriminates among terms that represent the concepts of the sentence, and this discrimination is entirely based on the semantic analysis of the sentence. In this example, some concepts have a higher conceptual term frequency ctf than others, as shown in Table 1. Such concepts (with high ctf) contribute more to the meaning of the sentence than concepts with low ctf.

As shown in Table 1, the concept-based statistical analyzer computes the ctf measure for:

1. The concepts extracted from the verb argument structures of the sentence, which are in Table 1 from row (1) to row (8).

2. The concepts that overlap with other concepts in the sentence. These concepts are in Table 1 from row (3) to row (8).

3. The individual concepts in the sentence, which are in Table 1 from row (9) to row (15).
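One reading of ctf that reproduces every value in Table 1 is: the ctf of a concept is the number of concepts extracted from the sentence that contain it as a contiguous sequence of whole words. The sketch below implements that reading; it is inferred from the table rather than taken from the paper's formal definition, and the concept list is copied from the example.

```python
# Concepts extracted from the three verb argument structures of the example
# sentence (unstemmed, as listed in Table 1, rows (1)-(8)).
CONCEPTS = [
    "noted",
    "electronic techniques developed defense effort eventually commerce industry",
    "electronic techniques",
    "developed",
    "defense effort",
    "electronic techniques developed defense effort",
    "eventually",
    "commerce industry",
]


def contains_phrase(concept, phrase):
    """True if `phrase` occurs in `concept` as a contiguous run of whole words."""
    c, p = concept.split(), phrase.split()
    return any(c[i:i + len(p)] == p for i in range(len(c) - len(p) + 1))


def ctf(phrase, concepts):
    """Assumed reading of ctf: how many extracted concepts contain `phrase`."""
    return sum(contains_phrase(c, phrase) for c in concepts)


# Rows (1)-(8): the concepts themselves; rows (9)-(15): individual words.
for concept in CONCEPTS:
    print(f"{ctf(concept, CONCEPTS)}  {concept}")
for word in ["electronic", "techniques", "defense", "effort", "eventually", "commerce", "industry"]:
    print(f"{ctf(word, CONCEPTS)}  {word}")
```

Under this reading, electronic techniques, developed, and defense effort reach 3 because each appears in all three verb argument structures, whereas commerce and industry appear in only two and reach 2.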

In this example, the topic of the sentence is the electronic techniques, and these concepts have the highest ctf value, 3. By contrast, the concept noted, which has the lowest ctf, has no significant effect on the topic of the sentence.

Concepts such as commerce and industry, however, have a ctf value of 2, which is not the highest, yet they are important to the sentence semantics: they provide significant information about what the electronic techniques have eventually been used in. Thus, selecting concepts by the maximum ctf weight of the concept-based statistical analyzer alone would lose important concepts such as commerce and industry.

[Figure 2: Conceptual Ontological Graph. COG representation of the example sentence "We noted how some electronic techniques developed for the defense effort have eventually been used in commerce and industry": a main conceptual graph for the verb noted, with nested referenced conceptual graphs for used and developed. Legend: verb node, argument node, key concepts, and conceptual graph types (main, referenced, container).]

3.4.2 Conceptual Ontological Graph (COG)

Generally, the verb argument structure whose terms share the largest number of words with the argument structures of the other verbs provides the most general concepts of a sentence.

In this example, three conceptual graphs are generated, one for each verb argument structure, as follows:

1. (noted)

ARG0 [We]

ARG1 [how some electronic techniques, developed for the defense effort, have eventually been used in commerce and industry]

2. (used)

ARG1 [some electronic techniques developed for the defense effort]

ARGM-TMP [eventually]

ARGM-LOC [in commerce and industry]

3. (developed)

ARG1 [some electronic techniques]

ARGM-PNC [for the defense effort]

In this example, the conceptual graph of the verb noted is the most general graph and has the type main. The other conceptual graphs are referenced graphs. The COG representation is illustrated in Fig. 2.
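The main/referenced distinction in this example can be reproduced with a simple nesting test. This is a sketch under a simplifying assumption of ours, not the authors' construction rule: a conceptual graph is treated as nested in another when the set of words it covers is a strict subset of the other graph's word set. A graph nested in no other graph is the main graph; a nested graph is referenced, and a referenced graph that itself contains another graph is also a container, matching the graph types named in the Fig. 2 legend.

```python
# Words covered by each verb's conceptual graph (its labeled arguments plus
# the verb itself), typed in by hand from the example sentence.
GRAPHS = {
    "noted": "We noted how some electronic techniques developed for the defense "
             "effort have eventually been used in commerce and industry",
    "used": "some electronic techniques developed for the defense effort "
            "eventually used in commerce and industry",
    "developed": "some electronic techniques developed for the defense effort",
}


def graph_types(graphs):
    """Assign COG graph types from strict word-set nesting (an assumed rule)."""
    words = {verb: set(text.lower().split()) for verb, text in graphs.items()}
    types = {}
    for verb, own in words.items():
        others = [w for v, w in words.items() if v != verb]
        nested = any(own < other for other in others)       # inside another graph
        holds_other = any(other < own for other in others)  # another graph inside it
        if not nested:
            types[verb] = "main"
        elif holds_other:
            types[verb] = "referenced, container"
        else:
            types[verb] = "referenced"
    return types


print(graph_types(GRAPHS))
```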

After removing stop words, the selected concepts, which provide important information with respect to the sentence semantics, are those that appear in the nested conceptual graphs of referenced type (shown in blue in Fig. 2), which have the highest L_COG value, 5. These concepts are electronic, techniques, developed, defense, effort, commerce, and industry.

Note that in this example the COG representation captures the commerce and industry concepts, which the concept-based statistical analyzer alone would miss. Other examples show the opposite case, in which some concepts important to the sentence semantics are captured by the concept-based statistical analyzer but not by the COG representation.

3.4.3 Concept Extractor

The concept extractor combines the weights computed by the concept-based statistical analyzer and the COG representation into one combined weight. The weight_COG values of the concepts that have the referenced type (the highest L_COG value, 5) are combined with their weight_stat values into new weight_comb values.

The concepts with the maximum weight_comb are selected as the top concepts with respect to sentence semantics. In this example, these concepts are electronic, techniques, developed, defense, effort, commerce, and industry.

4. EXPERIMENTAL RESULTS

To test the effectiveness of the concepts extracted by the proposed concept-based model as an accurate measure for weighting terms in a document, a set of document categorization experiments was conducted with the proposed model.

The experimental setup consisted of three datasets. The first dataset contains 23,115 ACM abstract articles collected from the ACM digital library. The ACM articles are classified according to the ACM Computing Classification System into five main categories: general literature, hardware, computer systems organization, software, and data. The second dataset has 12,902 documents from the Reuters-21578 dataset: 9,603 documents in the training set and 3,299 documents in the test set, while 8,676 documents are unused. Of the 5 category sets, the topic category set contains 135 categories, but only 90 categories have at least one document in the training set; these 90 categories were used in the experiment. The third dataset consists of 361 samples from the Brown corpus [5], each with more than 2,000 words. The Brown corpus main categories used in the experiment were: press: reportage, press: reviews, religion, skills and hobbies, popular lore, belles-lettres, learned, fiction: science, fiction: romance, and humor.

In all datasets, the text itself is analyzed directly, rather than any metadata associated with the text documents. This clearly demonstrates the effect of using concepts on the text categorization process.

Table 2: Text Classification Improvement using Combined Approach (weight_comb)

Dataset   Classifier   Single-Term                  Concept-based                Improvement
                       Macro Avg(F1)   Avg Error    Macro Avg(F1)   Avg Error    (F1, Error)
Reuters   SVM          0.7421          0.0871       0.8953          0.0121       +20.64%, -86.10%
Reuters   NB           0.6127          0.2754       0.8462          0.0342       +38.11%, -87.58%
Reuters   Rocchio      0.6513          0.1632       0.8574          0.0231       +31.64%, -85.84%
ACM       SVM          0.4973          0.1782       0.8263          0.0532       +66.15%, -70.14%
ACM       NB           0.4135          0.4215       0.7964          0.0641       +92.59%, -84.79%
ACM       Rocchio      0.4826          0.2733       0.7935          0.0635       +64.42%, -76.76%
Brown     SVM          0.6143          0.1134       0.8753          0.0211       +42.48%, -81.39%
Brown     NB           0.5071          0.3257       0.8372          0.0341       +65.09%, -89.53%
Brown     Rocchio      0.5728          0.2413       0.8465          0.0243       +47.78%, -89.92%

For each dataset, stop words are removed from the concepts extracted by the proposed model, and the extracted concepts are stemmed using the Porter stemmer algorithm [10]. The concepts are then used to build normalized feature vectors with the standard vector space model for document representation.

The concept-based weights calculated by the concept-based model are used to compute a document-term matrix between documents and concepts. Three standard document categorization techniques are chosen to test the effect of the concepts on categorization quality: (1) Support Vector Machine (SVM), (2) Rocchio, and (3) Naive Bayesian (NB). These techniques are used as binary classifiers that recognize documents of one specific topic against all other topics. This setup was repeated for every topic.
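The one-vs-rest setup can be illustrated with a deliberately simple classifier. The sketch below is a nearest-centroid classifier in the spirit of Rocchio (it omits the usual weighting of positive and negative examples), not the SVMlight, Rocchio, or NB configurations used in the experiments; the document vectors and topic names are hypothetical. For each topic, documents labeled with that topic form the positive class and all remaining documents form the negative class, and the procedure is repeated for every topic.

```python
import math


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as {term: weight} dicts."""
    dot = sum(x * v.get(term, 0.0) for term, x in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def centroid(vectors):
    """Average of sparse vectors (an empty dict if there are none)."""
    total = {}
    for vec in vectors:
        for term, x in vec.items():
            total[term] = total.get(term, 0.0) + x
    return {term: x / len(vectors) for term, x in total.items()}


def train_binary(docs, labels, topic):
    """One-vs-rest: documents labeled with `topic` are positive, all others negative."""
    positive = [d for d, topics in zip(docs, labels) if topic in topics]
    negative = [d for d, topics in zip(docs, labels) if topic not in topics]
    return centroid(positive), centroid(negative)


def predict(model, doc):
    """Relevant if the document is closer (by cosine) to the positive centroid."""
    pos_centroid, neg_centroid = model
    return cosine(doc, pos_centroid) > cosine(doc, neg_centroid)


# Hypothetical toy data: concept-weight vectors, each with a set of topics.
docs = [
    {"electronic": 1.0, "techniques": 0.5},
    {"electronic": 0.8, "circuit": 0.6},
    {"commerce": 1.0, "industry": 0.7},
    {"market": 0.9, "commerce": 0.4},
]
labels = [{"hardware"}, {"hardware"}, {"trade"}, {"trade"}]

# Repeat the binary setup for every topic.
models = {topic: train_binary(docs, labels, topic) for topic in {"hardware", "trade"}}
print(predict(models["hardware"], {"electronic": 1.0}))
```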

The concept-based weighting is one of the main factors that capture the importance of a concept in a document. Thus, to study its effect on the categorization techniques, all experiments use the proposed concept-based weighting weight_comb (as in Equation (5)) with each of the categorization techniques.

For the single-term weighting, the popular TF-IDF [3]

(Term Frequency/Inverse Document Frequency) term weight-

ing is adopted. The TF-IDF weighting is chosen due to its

wide use in the document categorization literature.
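For comparison with the concept-based weights, the single-term baseline can be sketched as TF-IDF weighting followed by L2 normalization, which yields normalized vector-space feature vectors. This assumes the common tf * log(N/df) variant with raw term counts; the paper does not state which TF-IDF variant or normalization it used, and the toy documents are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(documents):
    """Return one L2-normalized {term: weight} vector per tokenized document.

    Assumed variant: raw term frequency times log(N / df), so a term that
    occurs in every document receives weight 0.
    """
    n_docs = len(documents)
    df = Counter(term for doc in documents for term in set(doc))
    vectors = []
    for doc in documents:
        tf = Counter(doc)
        weights = {t: f * math.log(n_docs / df[t]) for t, f in tf.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        # Leave an all-zero vector as is rather than dividing by zero.
        vectors.append({t: w / norm for t, w in weights.items()} if norm else weights)
    return vectors


docs = [
    ["electronic", "techniques", "defense", "effort"],
    ["electronic", "commerce", "industry"],
    ["commerce", "industry", "market", "market"],
]
for vec in tfidf_vectors(docs):
    print({t: round(w, 3) for t, w in vec.items()})
```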

To evaluate the quality of the text categorization, two evaluation measures widely used in the document categorization and retrieval literature are computed with 5-fold cross-validation for each classifier: the macro-averaged F1 measure (the harmonic mean of precision and recall) and the error rate.

Recall that in binary classification (relevant/not relevant), the following quantities are considered:

$p_{+}$ = the number of relevant documents classified as relevant.

$p_{-}$ = the number of relevant documents classified as not relevant.

$n_{-}$ = the number of not relevant documents classified as not relevant.

$n_{+}$ = the number of not relevant documents classified as relevant.

The total number of documents N is therefore:

$$N = p_{+} + n_{+} + p_{-} + n_{-} \tag{6}$$

For the class of relevant documents:

$$\mathrm{Precision}(P) = \frac{p_{+}}{p_{+} + n_{+}} \tag{7}$$

$$\mathrm{Recall}(R) = \frac{p_{+}}{p_{+} + p_{-}} \tag{8}$$

The F measure is defined as:

$$F = \frac{(1 + \beta)\, P\, R}{\beta P + R} \tag{9}$$

where $\beta$ is a weighting parameter; with $\beta = 1$ it reduces to $F_1 = \frac{2PR}{P + R}$, the harmonic mean of precision and recall.

The error rate is expressed by:

$$\mathrm{Error} = \frac{n_{+} + p_{-}}{N} \tag{10}$$

Generally, the macro-averaged measure is determined by first computing the performance measures per category and then averaging them to compute the global mean.

The goal is to maximize the macro-averaged F1 and minimize the error rate in order to achieve high-quality text categorization.
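The per-category measures and their macro-average can be sketched as follows. This illustrates Eqs. (6)-(10) with F computed as F1 = 2PR/(P + R); it is not the authors' evaluation code, the category names and decisions in `results` are hypothetical, and setting precision or recall to 0 when its denominator is empty is our assumption.

```python
def f1_and_error(relevant, predicted):
    """F1 and error rate for one category from per-document booleans."""
    pairs = list(zip(relevant, predicted))
    p_plus = sum(1 for r, p in pairs if r and p)           # relevant, classified relevant
    p_minus = sum(1 for r, p in pairs if r and not p)      # relevant, classified not relevant
    n_minus = sum(1 for r, p in pairs if not r and not p)  # not relevant, classified not relevant
    n_plus = sum(1 for r, p in pairs if not r and p)       # not relevant, classified relevant
    n_total = p_plus + n_plus + p_minus + n_minus          # Eq. (6)
    precision = p_plus / (p_plus + n_plus) if p_plus + n_plus else 0.0    # Eq. (7)
    recall = p_plus / (p_plus + p_minus) if p_plus + p_minus else 0.0     # Eq. (8)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    error = (n_plus + p_minus) / n_total                   # Eq. (10)
    return f1, error


def macro_average(per_category):
    """Compute each measure per category first, then take the unweighted mean."""
    scores = [f1_and_error(rel, pred) for rel, pred in per_category.values()]
    macro_f1 = sum(f for f, _ in scores) / len(scores)
    macro_error = sum(e for _, e in scores) / len(scores)
    return macro_f1, macro_error


# Hypothetical one-vs-rest decisions (truth, prediction) for two categories.
results = {
    "trade": ([True, True, False, False], [True, False, False, True]),
    "grain": ([False, True, False, False], [False, True, False, False]),
}
print(macro_average(results))  # (0.75, 0.25)
```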

The results listed in Table 2 show the improvement in categorization quality obtained by the combined approach of the concept-based statistical analyzer and the COG representation.

The popular SVMlight implementation [7] is used with parameter C = 1000 (the tradeoff between training error and margin). The results in Table 2 show that the concept-based weighting outperforms the single-term weighting.

The improvement ranges from +20.64% to +92.59% in macro-averaged F1 (higher is better) and from -70.14% to -89.92% in error rate (lower is better), as shown in Table 2. These results indicate that the concepts extracted by the concept-based model can accurately classify documents into categories.
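The improvement column in Table 2 appears to be the relative change (new - old) / old, expressed in percent. The sketch below recomputes it from the single-term and concept-based values in the table; each recomputed percentage agrees with the reported one to within 0.01 percentage points. Formatting with two decimals can differ from the table in the last digit (66.16% versus the reported 66.15% for ACM/SVM), which suggests the reported figures were truncated rather than rounded.

```python
# (single-term, concept-based) values copied from Table 2.
TABLE_2 = {
    ("Reuters", "SVM"): {"f1": (0.7421, 0.8953), "error": (0.0871, 0.0121)},
    ("Reuters", "NB"): {"f1": (0.6127, 0.8462), "error": (0.2754, 0.0342)},
    ("Reuters", "Rocchio"): {"f1": (0.6513, 0.8574), "error": (0.1632, 0.0231)},
    ("ACM", "SVM"): {"f1": (0.4973, 0.8263), "error": (0.1782, 0.0532)},
    ("ACM", "NB"): {"f1": (0.4135, 0.7964), "error": (0.4215, 0.0641)},
    ("ACM", "Rocchio"): {"f1": (0.4826, 0.7935), "error": (0.2733, 0.0635)},
    ("Brown", "SVM"): {"f1": (0.6143, 0.8753), "error": (0.1134, 0.0211)},
    ("Brown", "NB"): {"f1": (0.5071, 0.8372), "error": (0.3257, 0.0341)},
    ("Brown", "Rocchio"): {"f1": (0.5728, 0.8465), "error": (0.2413, 0.0243)},
}


def relative_change(old, new):
    """Relative change from `old` to `new`, in percent."""
    return 100.0 * (new - old) / old


for (dataset, classifier), row in TABLE_2.items():
    f1_gain = relative_change(*row["f1"])
    error_drop = relative_change(*row["error"])
    print(f"{dataset:8} {classifier:8} {f1_gain:+.2f}%, {error_drop:+.2f}%")
```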

5. CONCLUSIONS

This work bridges the gap between the natural language processing and text categorization disciplines. A new concept-based model composed of three components is proposed to improve text categorization quality. By exploiting the semantic structure of the sentences in documents, a better text categorization result is achieved. The first component is the concept-based statistical analyzer, which analyzes the semantic structure of each sentence to capture the sentence concepts using the conceptual term frequency (ctf) measure. The second component is the conceptual ontological graph (COG). This representation captures the structure of the sentence semantics in the hierarchical levels of the COG. Such a representation allows concepts to be chosen based on their weights, which represent the contribution of each concept to the meaning of the sentence. This allows concept matching and weighting calculations to be performed in each document in a robust and accurate way. The third component is the concept extractor, which combines the weights of the concepts extracted by the concept-based statistical analyzer and the conceptual ontological graph into one list of top concepts. The extracted top concepts are used to build standard normalized feature vectors using the standard vector space model (VSM) for the purpose of text categorization.

The quality of the categorization results achieved by the proposed model significantly surpasses that of the traditional weighting approach.

There are a number of ways to extend this work. One direction is to extend the presented work to web document categorization. Another future direction is to investigate the use of such models on other corpora and their effect on document categorization results, compared with those of traditional methods.

6. REFERENCES

[1] K. Aas and L. Eikvil. Text categorisation: A survey. Technical Report 941, Norwegian Computing Center, June 1999.

[2] M. Collins. Head-Driven Statistical Model for Natural Language Parsing. PhD thesis, University of Pennsylvania, 1999.

[3] R. Feldman and I. Dagan. Knowledge discovery in textual databases (KDT). In Proceedings of the First International Conference on Knowledge Discovery and Data Mining, pages 112-117, 1995.

[4] C. Fillmore. The case for case. Chapter in: Universals in Linguistic Theory. Holt, Rinehart and Winston, Inc., New York, 1968.

[5] W. Francis and H. Kucera. Manual of information to accompany a standard corpus of present-day edited American English, for use with digital computers, 1964.

[6] D. Gildea and D. Jurafsky. Automatic labeling of semantic roles. Computational Linguistics, 28(3):245-288, 2002.

[7] T. Joachims. Text categorization with support vector machines: learning with many relevant features. In C. Nedellec and C. Rouveirol, editors, Proceedings of ECML-98, 10th European Conference on Machine Learning, number 1398, pages 137-142, Chemnitz, DE, 1998. Springer Verlag, Heidelberg, DE.

[8] D. Jurafsky and J. H. Martin. Speech and Language Processing. Prentice Hall Inc., 2000.

[9] P. Kingsbury and M. Palmer. Propbank: the next level of treebank. In Proceedings of Treebanks and Lexical Theories, 2003.

[10] M. F. Porter. An algorithm for suffix stripping. Program, 14(3):130-137, July 1980.

[11] S. Pradhan, K. Hacioglu, V. Krugler, W. Ward, J. H. Martin, and D. Jurafsky. Support vector learning for semantic argument classification. Machine Learning, 60(1-3):11-39, 2005.

[12] S. Pradhan, K. Hacioglu, W. Ward, J. H. Martin, and D. Jurafsky. Semantic role parsing: Adding semantic structure to unstructured text. In Proceedings of the 3rd IEEE International Conference on Data Mining (ICDM), pages 629-632, 2003.

[13] S. Pradhan, W. Ward, K. Hacioglu, J. Martin, and D. Jurafsky. Shallow semantic parsing using support vector machines. In Proceedings of the Human Language Technology/North American Association for Computational Linguistics (HLT/NAACL), 2004.

[14] G. Salton and M. J. McGill. Introduction to Modern Information Retrieval. McGraw-Hill, 1983.

[15] G. Salton, A. Wong, and C. S. Yang. A vector space model for automatic indexing. Communications of the ACM, 18(11):613-620, 1975.

[16] S. Shehata, F. Karray, and M. Kamel. Enhancing text clustering using concept-based mining model. In ICDM, pages 1043-1048, 2006.

[17] S. Shehata, F. Karray, and M. Kamel. Enhancing text retrieval performance using conceptual ontological graph. In ICDM Workshops, pages 39-44, 2006.
