
Wavelet Based Scalable Video Coding: A Comparative Study

Yogananda Patnaik and Dipti Patra, Member, IEEE

Abstract--Scalable video coding provides an efficient means of transmitting a video at different resolutions. The basic idea is to transmit the compressed data as layers: a single base layer and one or more enhancement layers. Decoding the base layer produces a lower-resolution/lower-quality video, and additionally decoding the enhancement layers produces a higher-quality video. Scalability refers to obtaining a video at varying resolution/quality when encoding or decoding a video sequence. In this paper, the wavelet transform combined with motion compensated temporal filtering (MCTF) is used to achieve spatial and temporal decomposition of the input video sequence. To remove redundancy, the motion vectors and wavelet coefficients are compressed, and the resulting bit stream has a layered representation. A base layer with two enhancement layers is used to evaluate four wavelet-based compression methods, namely SPIHT-3D, SPIHT, STW, and EZW. SPIHT-3D performs best among these.

Index Terms--EZW, MCTF, Scalable video coding (SVC), SPIHT, SPIHT-3D, STW, Wavelet scalable video coding (WSVC).

I. INTRODUCTION

DEVELOPMENT in video coding technology, along with the rapid expansion of network infrastructure, storage capacity, and computing power, has driven an ever-increasing number of video applications [1]. Users may access and interact with multimedia content from different terminals via different networks, for example Internet video transmission, handheld devices, multimedia broadcasting, and video services over wireless channels, each with different resolutions. Scalability offers a solution to this heterogeneity: it enables portions of a video bit stream to be removed in order to adapt to the diverse demands of end users and to varying network conditions and capacities. Scalable Video Coding (SVC) finds application in video broadcasting, error protection, and surveillance systems.

In non-scalable video coding, the video content is encoded independently of the actual channel characteristics, and the total available bandwidth is simply divided according to the quality desired for each independent layer that needs to be coded. By contrast, an SVC stream is encoded only once, and lower-rate versions are obtained by removing certain layers or bits from the single video stream [2]. In this way, different spatial and/or temporal resolutions can be delivered at different qualities.

Yogananda Patnaik is a Doctoral Research Scholar in the Department of Electrical Engineering, National Institute of Technology, Rourkela-769008, India (e-mail: ypatnaiknitrkl@gmail.com).

Prof. Dipti Patra is an Associate Professor in the Department of Electrical Engineering, National Institute of Technology, Rourkela-769008, India (e-mail: dpatra@nitrkl.ac.in).

978-1-4799-4939-7/14/$31.00 ©2014 IEEE

SVC allows video at many resolutions or qualities to be extracted, in the form of coded bit streams, from a single bit stream. The basic SVC types are SNR scalability, spatial scalability, and temporal scalability, and a scalable coder has to support combinations of these three basic scalability functionalities.
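The extraction idea can be illustrated with a small Python sketch. It assumes a hypothetical packetized stream in which every packet is tagged with a spatial, a temporal, and a quality layer index (loosely modelled on the dependency_id, temporal_id, and quality_id fields of H.264/SVC NAL unit headers); `Packet` and `extract` are illustrative names, not part of any codec API. An operating point is selected simply by discarding packets above the requested layers, and because the output is again a layered stream, it can be extracted a second time further along the network.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Packet:
    """Hypothetical unit of a layered bit stream."""
    spatial: int   # 0 = base-layer resolution, 1 = EL-1, 2 = EL-2, ...
    temporal: int  # temporal level (e.g. MCTF decomposition level)
    quality: int   # SNR/quality layer (e.g. a group of bit planes)
    payload: bytes = b""


def extract(stream: Iterable[Packet], max_spatial: int,
            max_temporal: int, max_quality: int) -> List[Packet]:
    """Keep only the packets needed for the requested operating point."""
    return [p for p in stream
            if p.spatial <= max_spatial
            and p.temporal <= max_temporal
            and p.quality <= max_quality]


if __name__ == "__main__":
    # Every combination of 3 spatial, 3 temporal and 2 quality layers.
    full = [Packet(s, t, q) for s in range(3) for t in range(3) for q in range(2)]
    base_only = extract(full, 0, 0, 0)
    print(len(full), len(base_only))  # 18 1
    # Extracting in two hops gives the same result as extracting once.
    hop1 = extract(full, 2, 2, 0)
    print(extract(hop1, 1, 1, 0) == extract(full, 1, 1, 0))  # True
```

The two-hop check mirrors the multipoint adaptation idea: each intermediate node only ever removes data, so the order in which extractors are applied does not matter in this simplified model.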

The wavelet transform plays a vital role in video compression owing to its energy compaction and decorrelation properties [3]. Its most significant advantage is its inherent scalability. The wavelet transform can be applied to single frames, as a 2D transform on the image pixels or on motion-compensated frames, or as a 3D transform on a group of frames in a video sequence. Applied to video compression, it enables selection of the desired bit assignment, rate optimization, and progressive transmission. Wavelet-transform-based video coding consists of five steps: three-stage motion estimation and compensation, adaptive wavelet transform, multistage quantization, zerotree coding, and intelligent bit-rate control.

The transform converts an image signal into a set of coefficients that correspond to scaled-space representations at multiple resolutions and frequency bands. Data compaction and bit allocation can be achieved more effectively by arranging the wavelet coefficients in a hierarchical data structure. Quantization reduces the data rate at the cost of some distortion, and entropy coding encodes the quantized coefficients into a set of compact binary bit streams.
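To make the transform and quantization chain concrete, the sketch below applies one level of a 2D averaging Haar transform to a 4×4 block and then a dead-zone uniform quantizer. The Haar filter is a deliberately simple stand-in chosen for readability; it is not the bior2.2 wavelet used in the experiments, and `haar_2d` and `quantize` are illustrative names. On a smooth ramp, the energy collects in the low-pass (top-left) quadrant, the diagonal (bottom-right) quadrant is exactly zero, and quantization leaves many zeros for the entropy coder to exploit.

```python
from typing import List

Matrix = List[List[float]]


def haar_1d(v: List[float]) -> List[float]:
    """One level of averaging Haar analysis: low-pass half first, then high-pass half."""
    half = len(v) // 2
    low = [(v[2 * k] + v[2 * k + 1]) / 2 for k in range(half)]
    high = [(v[2 * k] - v[2 * k + 1]) / 2 for k in range(half)]
    return low + high


def haar_2d(img: Matrix) -> Matrix:
    """Separable 2D transform: filter every row, then every column."""
    rows = [haar_1d(r) for r in img]
    cols = [haar_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]  # transpose back to row-major order


def quantize(coeffs: Matrix, step: float) -> List[List[int]]:
    """Uniform quantizer with a dead zone around zero (truncation toward zero)."""
    return [[int(c / step) for c in row] for row in coeffs]


if __name__ == "__main__":
    ramp = [[10 * i + j for j in range(4)] for i in range(4)]  # smooth test block
    w = haar_2d(ramp)
    for row in w:
        print(row)
    # [5.5, 7.5, -0.5, -0.5]
    # [25.5, 27.5, -0.5, -0.5]
    # [-5.0, -5.0, 0.0, 0.0]
    # [-5.0, -5.0, 0.0, 0.0]
    print(quantize(w, 4))  # [[1, 1, 0, 0], [6, 6, 0, 0], [-1, -1, 0, 0], [-1, -1, 0, 0]]
```

Applying `haar_2d` again to the top-left quadrant would give the next resolution level of the hierarchical structure described above.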

Wavelet-based video coding took off with the work of Ohm [4], who in 1994 presented the first application of the t+2D filtering scheme with motion compensated temporal filtering (MCTF), which takes motion into account when decorrelating video frames. This model became the template for later wavelet-based coders.

In the standardization context, SVC refers to the scalable extension of the hybrid video coding standard H.264/MPEG-4 Advanced Video Coding (H.264/AVC) [5]. The SVC bit stream is structured as a base layer, which resembles the non-scalable profile of H.264/AVC and corresponds to the lowest quality/resolution, and various enhancement layers, which add spatial, temporal, and/or SNR quality to the reconstructed base layer. As an alternative to block-based coding, wavelet-based scalable video coding (W-SVC) solutions have been developed [6], [7]. In this paper, the wavelet transform combined with motion compensated temporal filtering (MCTF) is used to achieve spatial and temporal decomposition of the input video sequence. To remove redundancy, the motion vectors and wavelet coefficients are then compressed, and the resulting bit stream has a layered representation.

The paper is organized as follows. Section II presents the design framework of W-SVC. Section III presents different compression methods. Section IV deals with performance evaluation and comparison. Section V concludes the paper and outlines future work.

II. DESIGN FRAMEWORK OF W-SVC

A. Main Modules of SVC

The W-SVC has three blocks:

Encoder: encodes the input video into a bit stream.

Extractor: truncates the bit stream according to the scalability requirements and creates the modified bit stream and its description. The resulting bit stream is itself scalable and can be passed to an extractor again for further adaptation. This setup is called multipoint adaptation, in which the modified bit stream is forwarded to the adjacent network node.

Decoder: decodes the modified bit streams.

B. Structure of W-SVC

The structure of W-SVC [8] mainly consists of a spatial wavelet transform and a wavelet-based temporal decomposition. High coding efficiency can be achieved by combining spatial and temporal transforms with 3D bit-plane coding. The multiresolution structure resulting from 2D subband coding and MCTF enables spatial and temporal scalability, while bit-plane coding provides SNR scalability. The resulting architecture depends on the order in which the spatial and temporal decompositions are applied. There are two types of non-redundant W-SVC architectures: t+2D, in which the temporal transform is followed by the spatial transform, and 2D+t, in which the spatial transform is followed by the temporal wavelet transform. Both produce a set of spatial and temporal subbands. Fig. 1 depicts the structure of wavelet-based SVC [8], [9].

Fig. 1. The structure of W-SVC (blocks: video frame; spatio-temporal transform comprising 5/3 MCTF and 2D DWT; multi-layer motion coding with spatial and quality scalability; entropy coding with 3D SPIHT/SPIHT; bit stream).

C. Motion Compensated Temporal Filtering (MCTF)

MCTF is frequently used to achieve temporal scalability. The SVC extension, like regular H.264/AVC, uses the DCT to code the frames that remain after temporal filtering. Whether MCTF is combined with the DCT or with wavelet transforms, the (5/3) wavelet is often applied to perform the MCTF. The 5/3 MCTF predicts each target frame from two reference frames, those immediately before and after it. Fig. 2 shows the MCTF structure of the (5/3) wavelet [8], [9].

Fig. 2. Structure for 3-level 5/3 MCTF (input frames Frame0 to Frame4 at level 0; low-pass frames L at levels 1 to 3).

TABLE I

SCALABILITY ASPECT FOR DIFFERENT VIDEO CODING STANDARDS

| Video codec | Scalability aspect |
|---|---|
| MPEG-1 | No support |
| MPEG-2 | Layered scalability (spatial, temporal, SNR) |
| MPEG-4 | Layered and fine granular scalability (spatial, temporal, SNR) |
| H.264/AVC | Fully scalable extension |
| W-SVC | Fully scalable |

In Fig. 2, Ln denotes the low-pass frames at decomposition level n, Hn denotes the high-pass frames, and level 0 corresponds to the original frames. For every odd-numbered frame, a high-pass frame is predicted from the two adjacent even-numbered frames. Each even-indexed frame is then updated using the two neighbouring high-pass frames to generate a low-pass frame. A new decomposition level can then be computed from these low-pass frames, and each decomposition level makes a new level of temporal scalability available. Motion compensation is performed before the prediction and update steps to reduce temporal redundancy [10]. When MCTF is followed by a wavelet decomposition of the frames, the scheme is referred to as t+2D. In this way, MCTF offers temporal scalability, while the 2D wavelet transform offers resolution and peak signal-to-noise ratio (PSNR) scalability. The scalability support in existing video standards is summarized in Table I.
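The prediction and update steps described above correspond to 5/3 lifting along the time axis. The sketch below is a simplified illustration that ASSUMES zero motion (the motion compensation that a real MCTF applies before each step is omitted), treats each frame as a flat list of pixel values, and reuses the nearest available neighbour at the ends of the group of frames; all function names are hypothetical. Repeating the level on the low-pass frames gives a multi-level structure like that of Fig. 2, and the inverse runs the lifting steps backwards, so reconstruction is exact up to floating-point rounding.

```python
from typing import List, Tuple

Frame = List[float]  # one frame, flattened to a list of pixel values


def _clamp(seq: List[Frame], i: int) -> Frame:
    """Boundary handling: reuse the nearest available neighbour (mirrors the sequence ends)."""
    return seq[min(max(i, 0), len(seq) - 1)]


def mctf53_level(frames: List[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """One temporal level of 5/3 lifting, ASSUMING zero motion (no motion compensation)."""
    even, odd = frames[0::2], frames[1::2]
    # Predict step: H[k] = odd[k] - (even[k] + even[k+1]) / 2
    high = [[x - 0.5 * (a + b) for x, a, b in zip(f, even[k], _clamp(even, k + 1))]
            for k, f in enumerate(odd)]
    if not high:  # a single frame has nothing to filter
        return even, high
    # Update step: L[k] = even[k] + (H[k-1] + H[k]) / 4
    low = [[x + 0.25 * (h1 + h2) for x, h1, h2 in zip(f, _clamp(high, k - 1), _clamp(high, k))]
           for k, f in enumerate(even)]
    return low, high


def inverse_mctf53_level(low: List[Frame], high: List[Frame]) -> List[Frame]:
    """Undo mctf53_level by running the lifting steps in reverse order."""
    if not high:
        return list(low)
    even = [[x - 0.25 * (h1 + h2) for x, h1, h2 in zip(f, _clamp(high, k - 1), _clamp(high, k))]
            for k, f in enumerate(low)]
    odd = [[h + 0.5 * (a + b) for h, a, b in zip(f, even[k], _clamp(even, k + 1))]
           for k, f in enumerate(high)]
    frames: List[Frame] = []
    for k, e in enumerate(even):  # re-interleave even and odd frames
        frames.append(e)
        if k < len(odd):
            frames.append(odd[k])
    return frames


def mctf53(frames: List[Frame], levels: int) -> Tuple[List[Frame], List[List[Frame]]]:
    """Multi-level decomposition: keep filtering the low-pass frames."""
    highs: List[List[Frame]] = []
    low = frames
    for _ in range(levels):
        if len(low) < 2:
            break
        low, high = mctf53_level(low)
        highs.append(high)
    return low, highs


if __name__ == "__main__":
    static = [[5.0, 7.0] for _ in range(8)]  # 8 identical frames (no motion)
    low, highs = mctf53(static, levels=3)
    print(low, [len(h) for h in highs])  # [[5.0, 7.0]] [4, 2, 1]
```

For a static scene all high-pass frames are zero, which is why temporal filtering compresses well; with real motion, the omitted motion compensation is what keeps the high-pass frames small.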

III. WAVELET VIDEO COMPRESSION METHODS

Popular wavelet compression methods include set partitioning in hierarchical trees (SPIHT), 3D-SPIHT, the embedded zerotree wavelet (EZW), and the spatial orientation tree wavelet (STW).

A. Set Partitioning in Hierarchical Trees (SPIHT)

The SPIHT algorithm [10], [11] consists of three steps: 1) identifying sets in spatial orientation trees; 2) partitioning the coefficients in these trees into sets, using the bit-plane representation of their magnitudes starting from the most significant bit; and 3) coding and transmitting the highest remaining bit plane first. Spatial orientation trees are groups of wavelet transform coefficients organized into trees, with the lowest-frequency (coarsest-scale) subband at the root and the higher-frequency subbands holding the offspring.
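The parent-offspring relation of a spatial orientation tree and the SPIHT significance test can be sketched as follows. For simplicity, the sketch ASSUMES a square dyadic pyramid whose lowest-frequency band is a single coefficient at (0, 0), with children (0, 1), (1, 0), and (1, 1); the original SPIHT instead groups the roots of the lowest band in 2×2 blocks. Every other coefficient (i, j) has the four children (2i, 2j), (2i, 2j+1), (2i+1, 2j), and (2i+1, 2j+1). The 4×4 coefficient array is made-up example data, and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[int, int]


def offspring(i: int, j: int, size: int) -> List[Coord]:
    """Direct children of (i, j) in a size x size dyadic pyramid (1x1 lowest band assumed)."""
    if (i, j) == (0, 0):
        kids = [(0, 1), (1, 0), (1, 1)]
    else:
        kids = [(2 * i, 2 * j), (2 * i, 2 * j + 1), (2 * i + 1, 2 * j), (2 * i + 1, 2 * j + 1)]
    return [(a, b) for a, b in kids if a < size and b < size]


def descendants(i: int, j: int, size: int) -> List[Coord]:
    """All coefficients below (i, j) in its tree (children, grandchildren, ...)."""
    out: List[Coord] = []
    stack = offspring(i, j, size)
    while stack:
        node = stack.pop()
        out.append(node)
        stack.extend(offspring(*node, size))
    return out


def significant(coeffs: Sequence[Sequence[float]], coords: List[Coord], n: int) -> int:
    """SPIHT significance test S_n: 1 if any |c| in the set reaches 2**n, else 0."""
    return int(any(abs(coeffs[i][j]) >= 2 ** n for i, j in coords))


def initial_bitplane(coeffs: Sequence[Sequence[float]]) -> int:
    """Most significant bit plane n = floor(log2(max |c|)); assumes a nonzero coefficient."""
    peak = max(abs(v) for row in coeffs for v in row)
    return math.floor(math.log2(peak))


if __name__ == "__main__":
    C = [[26, 6, 13, 10],
         [-7, 7, 6, 4],
         [4, -4, 4, -3],
         [2, -2, -2, 0]]
    n = initial_bitplane(C)      # 4, i.e. threshold 16
    tree = descendants(0, 0, 4)  # 15 coefficients
    print(n, len(tree), significant(C, tree, n), significant(C, tree, n - 1))  # 4 15 0 1
```

Because one significance bit can declare a whole descendant set insignificant, large smooth regions of the transform cost very few bits at the early, high bit planes.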

B. 3D-SPIHT

The 3-D SPIHT scheme [11], [12] extends 2-D SPIHT and shares its three characteristics: 1) partial ordering of the 3-D wavelet-transformed video by magnitude, using a 3-D set partitioning algorithm; 2) ordered bit-plane transmission of refinement bits; and 3) exploitation of self-similarity across spatio-temporal orientation trees.
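In 3-D SPIHT, the same tree idea extends to (frame, row, column) coordinates, so each node has up to eight children and a single tree links coefficients across both space and time. The sketch below ASSUMES a dyadic 3-D pyramid whose lowest band is the single coefficient (0, 0, 0); this root handling is a simplification, and `offspring_3d` and `descendants_3d` are hypothetical names.

```python
from itertools import product
from typing import List, Tuple

Coord3 = Tuple[int, int, int]  # (frame, row, column)


def offspring_3d(t: int, i: int, j: int, shape: Tuple[int, int, int]) -> List[Coord3]:
    """Up to eight children of (t, i, j); the (0, 0, 0) root gets the 7 other corners."""
    if (t, i, j) == (0, 0, 0):
        kids = [c for c in product((0, 1), repeat=3) if c != (0, 0, 0)]
    else:
        kids = [(2 * t + a, 2 * i + b, 2 * j + c) for a, b, c in product((0, 1), repeat=3)]
    frames, rows, cols = shape
    return [(a, b, c) for a, b, c in kids if a < frames and b < rows and c < cols]


def descendants_3d(t: int, i: int, j: int, shape: Tuple[int, int, int]) -> List[Coord3]:
    """Every node below (t, i, j) in its spatio-temporal orientation tree."""
    out: List[Coord3] = []
    stack = offspring_3d(t, i, j, shape)
    while stack:
        node = stack.pop()
        out.append(node)
        stack.extend(offspring_3d(*node, shape))
    return out


if __name__ == "__main__":
    shape = (4, 4, 4)  # 4 frames of 4x4 coefficients after a 2-level 3-D transform
    print(len(offspring_3d(0, 0, 0, shape)))    # 7
    print(len(offspring_3d(1, 0, 1, shape)))    # 8
    print(len(descendants_3d(0, 0, 0, shape)))  # 63: every coefficient except the root
```

The significance test itself is unchanged from the 2-D case; only the set of coordinates in each tree grows.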

C. Embedded Zerotree Wavelet (EZW)

The EZW codec [13], [14] consists of an EZW coder and an EZW decoder. The EZW coder takes a wavelet-transformed image as input and produces a symbol stream; the decoder performs the reverse operation. The EZW codec combines two methods: zerotree encoding and successive approximation quantization.
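A single dominant pass of EZW can be sketched as follows. Coefficients are visited from coarse to fine, and each one is labelled positive significant (P), negative significant (N), zerotree root (Z: it and all its descendants are insignificant, so the descendants are skipped), or isolated zero (I). The sketch ASSUMES the same simplified dyadic tree as the SPIHT example (a 1×1 lowest band at (0, 0)), visits coefficients in breadth-first order, codes insignificant leaves as Z, and omits the subordinate (refinement) pass and the entropy coding of the symbols; the function names are illustrative.

```python
from collections import deque
from typing import AbstractSet, List, Sequence, Tuple

Coord = Tuple[int, int]


def offspring(i: int, j: int, size: int) -> List[Coord]:
    """Children of (i, j) in a size x size dyadic pyramid (1x1 lowest band assumed)."""
    if (i, j) == (0, 0):
        kids = [(0, 1), (1, 0), (1, 1)]
    else:
        kids = [(2 * i, 2 * j), (2 * i, 2 * j + 1), (2 * i + 1, 2 * j), (2 * i + 1, 2 * j + 1)]
    return [(a, b) for a, b in kids if a < size and b < size]


def descendants(i: int, j: int, size: int) -> List[Coord]:
    """All coefficients below (i, j) in its tree."""
    out: List[Coord] = []
    stack = offspring(i, j, size)
    while stack:
        node = stack.pop()
        out.append(node)
        stack.extend(offspring(*node, size))
    return out


def dominant_pass(coeffs: Sequence[Sequence[float]], threshold: float,
                  already: AbstractSet[Coord] = frozenset()) -> List[Tuple[Coord, str]]:
    """One EZW dominant pass; coefficients in `already` were found significant earlier."""
    size = len(coeffs)

    def magnitude(c: Coord) -> float:
        # Previously significant coefficients count as zero when testing for zerotrees.
        return 0 if c in already else abs(coeffs[c[0]][c[1]])

    symbols: List[Tuple[Coord, str]] = []
    skipped = set()          # descendants of zerotree roots are not coded
    queue = deque([(0, 0)])  # breadth-first: coarse subbands before fine ones
    while queue:
        c = queue.popleft()
        queue.extend(offspring(*c, size))
        if c in skipped or c in already:
            continue
        value = coeffs[c[0]][c[1]]
        if abs(value) >= threshold:
            symbols.append((c, "P" if value > 0 else "N"))
        elif all(magnitude(d) < threshold for d in descendants(*c, size)):
            symbols.append((c, "Z"))
            skipped.update(descendants(*c, size))
        else:
            symbols.append((c, "I"))
    return symbols


if __name__ == "__main__":
    C = [[26, 6, 13, 10], [-7, 7, 6, 4], [4, -4, 4, -3], [2, -2, -2, 0]]
    print("".join(s for _, s in dominant_pass(C, 16)))                 # PZZZ
    print("".join(s for _, s in dominant_pass(C, 8, already={(0, 0)})))  # IZZPPZZ
```

Halving the threshold between passes is the successive approximation part: each pass refines the magnitudes found so far, which is what makes the EZW stream embedded.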

D. Spatial Orientation Tree Wavelet (STW) [15]

To improve speed, the STW algorithm avoids the multiple passes of bit-plane coding and codes wavelet coefficients with their dynamic ranges efficiently. This ordered dynamic-range coding naturally allows resolution scalability of a wavelet-transformed image. The dynamic range of the energy in each subset is predicted from the dynamic range of the energy of its parent set.

IV. PERFORMANCE EVALUATION

A. Performance measures/Quality measures

The wavelet compression methods above are evaluated with several performance metrics. The most important is the peak signal-to-noise ratio (PSNR), which measures reconstruction quality. Other measures include the mean square error (MSE) and the compression ratio (CR), which quantifies the amount of compression achieved by the coding process. PSNR and compression ratio are observed to be inversely related: higher compression generally yields lower PSNR.

Mean Square Error (MSE): the average of the squared error between two images or frames.

For a monochrome N × N image, the MSE between X and Y is

$$\mathrm{MSE} = \frac{1}{N^2}\sum_{i=1}^{N}\sum_{j=1}^{N}\bigl(X(i,j) - Y(i,j)\bigr)^2 \tag{1}$$

For a colour image with channels r, g, b and their reconstructions r*, g*, b*, the MSE is

$$\mathrm{MSE} = \frac{1}{N^2}\sum_{i=1}^{N}\sum_{j=1}^{N}\Bigl[\bigl(r(i,j) - r^*(i,j)\bigr)^2 + \bigl(g(i,j) - g^*(i,j)\bigr)^2 + \bigl(b(i,j) - b^*(i,j)\bigr)^2\Bigr] \tag{2}$$
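Equations (1) and (2) translate directly into code. The sketch below assumes images are given as nested lists of pixel values (a colour image as its R, G, and B planes) and divides by the actual number of pixels, which equals N² for the N × N case; like Eq. (2), the colour MSE sums the three channel errors and does not divide by three. The function names are illustrative.

```python
from typing import Sequence

Plane = Sequence[Sequence[float]]  # one image plane as rows of pixel values


def mse_gray(x: Plane, y: Plane) -> float:
    """Eq. (1): mean of squared pixel differences between two same-size planes."""
    n_pixels = len(x) * len(x[0])
    return sum((a - b) ** 2 for row_x, row_y in zip(x, y)
               for a, b in zip(row_x, row_y)) / n_pixels


def mse_color(rgb: Sequence[Plane], rgb_star: Sequence[Plane]) -> float:
    """Eq. (2): sum of the R, G and B squared errors, divided by the pixel count."""
    return sum(mse_gray(p, q) for p, q in zip(rgb, rgb_star))


if __name__ == "__main__":
    x = [[1, 2], [3, 4]]
    y = [[1, 2], [3, 6]]                     # one pixel off by 2
    print(mse_gray(x, y))                    # 1.0
    print(mse_color([x, x, x], [x, y, y]))   # 2.0
```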

Signal to Noise Ratio (SNR): The mean square error is usually expressed as a signal-to-noise ratio (SNR), specified in decibels (dB) as the ratio of the desired image variance (σ²) to the error variance (σ_e²):

$$\mathrm{SNR} = 10\log_{10}\frac{\sigma^2}{\sigma_e^2} \tag{3}$$

where σ² is the variance of the desired image and σ_e² is the variance of the reconstruction error, i.e., the MSE.

Peak Signal to Noise Ratio (PSNR): The peak signal-to-noise ratio is the ratio of the squared peak signal value (255 for 8-bit images) to the reconstruction error variance, in decibels:

$$\mathrm{PSNR} = 10\log_{10}\frac{255^2}{\sigma_e^2} \tag{4}$$

Compression Ratio (CR): The compression ratio is the ratio of the original image size to the compressed image size:

$$\text{Compression Ratio} = \frac{\text{original image size}}{\text{compressed image size}} \tag{5}$$
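Equations (3) to (5) can likewise be computed directly. This sketch assumes 8-bit samples (peak value 255, as in Eq. (4)) and sizes measured in the same unit (for example bytes); returning infinity for a zero MSE is a common convention adopted here, not something the equations specify. The function names are illustrative.

```python
import math


def snr_db(signal_variance: float, mse: float) -> float:
    """Eq. (3): ratio of image variance to error variance (the MSE), in dB."""
    return 10 * math.log10(signal_variance / mse)


def psnr_db(mse: float, peak: float = 255.0) -> float:
    """Eq. (4): squared peak value over the MSE, in dB."""
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


def compression_ratio(original_size: float, compressed_size: float) -> float:
    """Eq. (5): original size divided by compressed size."""
    return original_size / compressed_size


if __name__ == "__main__":
    print(round(psnr_db(1.0), 2))              # 48.13
    print(snr_db(100.0, 1.0))                  # 20.0
    print(compression_ratio(512 * 512, 8192))  # 32.0
```

Because PSNR is logarithmic in the MSE, halving the MSE raises PSNR by only about 3.01 dB, which is why a large gain in compression ratio can cost a comparatively small PSNR drop.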

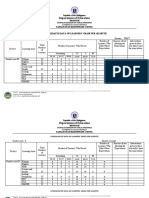

B. Simulation Results

This section presents the experimental results obtained for the SPIHT, SPIHT-3D, EZW, and STW compression methods on ten standard video sequences: Akiyo, Carphone, City, Foreman, Bus, Crew, Coastguard, Mobile, Mother-Daughter, and Miss America. In the simulation, a YUV video sequence is first taken with quantization Q = 1 and number of levels = 3. Three layers are considered: a base layer and two enhancement layers. The input video is resized so that the base layer and the two enhancement layers have sizes of 128×128, 256×256, and 512×512, respectively.

Fig. 3. The 30th frame of all the video sequences, showing the base layer, enhancement layer-1, and enhancement layer-2.

The wavelet transform along with motion-compensated temporal filtering (MCTF) is carried out to achieve a spatio-temporal decomposition of the input video sequence. The motion vectors and wavelet coefficients are then compressed to remove redundancy, and the resulting bit stream has a layered representation. The wavelet used here is bior2.2. Performance measures such as MSE, PSNR, and CR are calculated to assess quality. Thirty frames are considered in the simulation, and the procedure is repeated for all the video sequences. Table II and Fig. 4 show that for most of the video sequences the compression ratio (CR) is highest for SPIHT-3D, which is therefore the best of the compared methods. In Fig. 3, the first column (A, D, G, J, M, P, S, V, Y) shows the base layer resolution, the second column (B, E, H, K, N, Q, T, W, Z) shows the enhancement layer-1 (EL-1) resolution, and the third column (C, F, I, L, O, R, U, X, X1) shows the enhancement layer-2 (EL-2) resolution. The simulations were carried out in MATLAB 7 (2012) on an Intel Core i7 processor with a 2.4 GHz CPU and 2 GB RAM.

TABLE II

PERFORMANCE COMPARISON OF DIFFERENT VIDEO SEQUENCES

EL-1

CR

Carphone_qcif

(176x144)

FOREMAN

BUS_CIF

CREW

COASTGUARD

EL-1

EL-2

SPIH 452.83 9.80

1.01

T-3D

SPIH 431.1 5.99

0.47

T

EZW 6.325 4.06 0.322

STW 14.27 3.63 0.331

SPIH 281.9 9.05 0.627

T-3D

SPIH 24.56 5.20 0.482

T

EZW 6.069 5.90 0.502

STW 16.60 5.75 0.480

SPIH 20.25 55.3 37.90

T-3D

8

SPIH 40.55 38.2 35.38

T

0

EZW 11.42 46.5 36.63

9

STW 32.05 32.9 31.78

0

SPIH 325.7 10.3 0.832

T-3D

5

SPIH

34.6 8.67 0.699

T

7

EZW

28.3 14.0 0.843

3

1

STW

25.3 6.91 0.559

9

SPIH

300. 101. 20.40

T-3D

0

10

SPIH

17.8 68.9 17.97

T

4

7

EZW

12.7 83.3 16.9

9

4

STW 9.726 80.2 19.4

1

SPIH 317.6 24.2 15.7

T-3D

6

SPIH

27.2 19.4 14.254

T

8

9

EZW

8.19 17.1 16.6

1

9

STW

20.0 17.0 15.8

9

3

SPIH 147.6 21.5 1.877

T-3D

8

SPIH

40.2 14.5 1.11

T

9

4

EZW

12.6 14.0 1.01

6

8

STW 30.71 10.5 0.711

3

21.7

39.8

51.8

35.0

41.8

52.82

3.89

40.2

36.7

22.4

42.6

42.8

38.1

53.23

53.39

49.11

3.96

3.73

3.98

34.1

40.2

50.98

3.54

40.0

35.9

25.6

40.2

40.2

31.0

50.88

50.84

32.00

3.73

3.54

2.52

31.8

32.5

32.91

2.39

37.3

32.0

32.22

2.47

33.0

32.9

32.52

2.29

23.3

37.8

49.01

3.15

32.8

39.3

50.58

2.79

33.9

39.2

2.92

34.2

39.8

24.7

27.6

35.5

29.6

50.0

4

50.8

8

35.0

9

35.98

36.9

29.4

38.2

29.8

22.2

34.6

34.1

35.5

39.3

36.5

4.13

MISS AMERICA

City

BL

MOBILE

Akiyo_Qcif

(176x144)

EL-2

SPI

HT3D

SPI

HT

EZ

W

STW

100.9

12.5

1.96

28.6

36.7

44.885

3.9

22.30

10.4

1.65

34.6

37.6

45.526

3.6

7.86

9.52

1.6

39.5

38.2

45.900

3.5

15.73

8.30

1.54

36.0

38.6

46.0

SPI

HT3D

SPI

HT

EZ

W

STW

369.2 205.96

48.14

23.0 25.331

31.75

3.3

7

2.26

30

SPI

HT3D

SPI

HT

EZ

W

STW

61.87

99.2

24.1

30.2

27.9

33.4

46.50

105.

27.6

31.4

26.3

32.77

41.95

157.

6

30.6

31.9

26.4

32.62

4.81

1.32

0.10

40.8

47.2

57.900

3.6

4.81

1.32

0.10

41.2

47.3

57.94

3.6

1.68

1.08

0.09

45.8

47.8

57.70

3.9

3.11

1.01

0.09

43.0

48.1

58.376

3.4

1.8

9

2.0

3

2.0

8

35.4

36.2

26.6

35.4

32.2

37.1

37.1

37.6

33.3

38.0

35.9

2

35.9

8

36.9

1

37.1

7

37.3

2

37.2

6

46.2

2.71

2.83

2.51

2.565

5

2.542

9

5.355

9

5.046

9

5.354

9

5.143

3

2.498

48.7 1.955

5

7

49.4 2.039

4

9

49.62 1.840

6

BL

Average PSNR

MOTHER DAUGHTER

Compression

method

Video sequence

MSE

Fig. 4. CR plot for different compression methods (y-axis: compression ratio; x-axis: different video sequences, in the order of Table II; curves: SPIHT-3D, SPIHT, EZW, STW).

V. CONCLUSION

This paper describes the general idea of different compression methods used in wavelet-based scalable video coding (W-SVC). The basic operational design of W-SVC is discussed in detail, and the scalability features and functionality of a scalable coder in the existing standards are explained. To achieve spatial and temporal decomposition of the input video sequence, the wavelet transform combined with motion compensated temporal filtering (MCTF) is first carried out; here, 5/3 MCTF is followed by a two-dimensional discrete wavelet transform. The motion vectors and wavelet coefficients are then compressed to remove redundancy, and the resulting bit stream has a layered representation. The four compression methods are compared on 10 different video sequences, and SPIHT-3D is observed to outperform the others with respect to MSE, average PSNR, and compression ratio. This work can be extended toward the development of better MCTF techniques and the inclusion of performance indices such as SSIM and FSIM.

VI. REFERENCES

[1] Sudhakar Radhakrishnan, "Effective Video Coding for Multimedia Applications," pp. 3-20, 2011.

[2] Naeem Ramzan and Ebroul Izquierdo, "Scalable Video Coding and Its Applications," Multimedia Analysis, Processing and Communications, Studies in Computational Intelligence, vol. 346, pp. 547-559, 2011.

[3] Michel Misiti, Yves Misiti, Georges Oppenheim, and Jean-Michel Poggi, Wavelets and their Applications, ISTE, pp. 1-27, 2007.

[4] J. Ohm, "Three-Dimensional Subband Coding with Motion Compensation," IEEE Trans. on Image Processing, vol. 3, no. 5, pp. 559-571, 1994.

[5] Jong-Seok Lee, Francesca De Simone, and Touradj Ebrahimi, "Subjective Quality Assessment of Scalable Video Coding: A Survey," Third IEEE International Workshop on Quality of Multimedia Experience, Switzerland, pp. 25-30, 2011.

[6] H. Schwarz, D. Marpe, and T. Wiegand, "Overview of the Scalable Video Coding Extension of the H.264/AVC Standard," IEEE Trans. Circuits Syst. Video Technol., vol. 17, no. 9, pp. 1103-1120, 2007.

[7] N. Adami, A. Signoroni, and R. Leonardi, "State-of-the-art and trends in scalable video compression with wavelet-based approaches," IEEE Trans. Circuits Syst. Video Technol., vol. 17, no. 9, pp. 1238-1255, 2007.

[8] Zoghlami, M. Marzougui, M. Atri, and R. Tourki, "High-level implementation of video compression chain coding based on MCTF lifting scheme," 10th Int. Multi-Conference on Systems, Signals and Devices, 2013, pp. 1-6.

[9] Seongho Park, Hyungsuk Oh, and Wonha Kim, "Wavelet-based Scalable Video Coding with Low Complex Spatial Transform," Kyung Hee University, 2006.

[10] Wen-Hsiao Peng, Chia-Yang Tsai, Tihao Chiang, and Hsueh-Ming Hang, "Advances of MPEG Scalable Video Coding Standard," in Knowledge-Based Intelligent Information and Engineering Systems, pp. 889-895, 2005.

[11] A. Said and W. A. Pearlman, "Image Compression Using the Spatial-Orientation Tree," IEEE International Symposium on Circuits and Systems (ISCAS), vol. 1, 1993, pp. 279-282.

[12] Beong-Jo Kim, Zixiang Xiong, and William A. Pearlman, "Low bit-rate scalable video coding with 3-D set partitioning in hierarchical trees," IEEE Trans. on Circuits and Systems for Video Technology, vol. 10, no. 8, pp. 1-34, 2000.

[13] Dorrell, "Direct processing of EZW compressed image data," Proceedings International Conference on Image Processing, vol. 1, pp. 545-548, 1996.

[14] J. M. Shapiro, "Embedded Image Coding Using Zerotrees of Wavelet Coefficients," IEEE Trans. on Signal Processing, vol. 41, no. 12, pp. 3445-3462, 1993.

[15] Yushin Cho, Amir Said, and William A. Pearlman, "Coding the Wavelet Spatial Orientation Tree with Low Computational Complexity," IEEE Data Compression Conference, 2005.
