Istqb Key Terms Ch1

Uploaded by karishma10

This document defines key terms related to software testing. It provides definitions for over 40 terms including software, defect, failure, quality, testing, test case, test objective, debugging, and test plan. The definitions cover the software development lifecycle from requirements to testing to maintenance. They also discuss test techniques like reviews, test coverage, exit criteria, and test monitoring and control.

ISTQB Key Terms - Chapter 1

Software

Computer programs, procedures, and possibly associated documentation and data pertaining to the operation of a computer system.

Risk

A factor that could result in future negative consequences; usually expressed as impact and likelihood.
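One common way to combine the two factors is to multiply them into a single exposure score. The sketch below assumes a 1 to 5 scale for impact and a probability between 0 and 1 for likelihood; the `Risk` class, the scales, and the multiplication rule are illustrative assumptions, not part of the glossary definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A hypothetical risk record; field names are illustrative."""
    name: str
    impact: int        # assumed scale: 1 (minor) to 5 (severe)
    likelihood: float  # assumed probability between 0.0 and 1.0

    def exposure(self) -> float:
        # Assumed convention: exposure = impact * likelihood.
        return self.impact * self.likelihood


risks = [
    Risk("payment gateway timeout", impact=5, likelihood=0.2),
    Risk("typo on help page", impact=1, likelihood=0.9),
    Risk("data loss on upgrade", impact=5, likelihood=0.5),
]

# Rank risks so that the highest exposure comes first.
ranked = sorted(risks, key=lambda r: r.exposure(), reverse=True)
for r in ranked:
    print(f"{r.name}: {r.exposure():.1f}")
```

A ranking like this is one possible input when deciding where to focus testing effort.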

Error (mistake)

A human action that produces an incorrect result.

Defect (bug, fault)

A flaw in a component or system that can cause the component or system to fail to perform its required function, e.g. an incorrect statement or data definition. A defect, if encountered during execution, may cause a failure of the component or system.

Failure

Deviation of the component or system from its expected delivery, service or result.
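The relationship between error, defect and failure can be illustrated with a small, made-up function. The `average` function and its hard-coded divisor are hypothetical; the point is only that executing a defect may, but need not, produce a failure.

```python
def average(values):
    """Return the arithmetic mean of a non-empty list."""
    # Defect: the divisor should be len(values). A human error
    # (mistake) put a hard-coded 2 here instead.
    return sum(values) / 2


# The defect is executed but the result is still as expected: no failure.
print(average([4, 6]))     # 5.0

# The same defect now causes a deviation from the expected result
# (the mean of 3, 6 and 9 is 6): a failure.
print(average([3, 6, 9]))  # 9.0
```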

Quality

The degree to which a component, system or process meets specified requirements and/or user/customer needs and expectations.

Exhaustive testing (complete testing)

A test approach in which the test suite comprises all combinations of input values and preconditions.

Testing

The process consisting of all life cycle activities, both static and dynamic, concerned with planning, preparation and evaluation of software products and related work products to determine that they satisfy specified requirements, to demonstrate that they are fit for purpose and to detect defects.

Software development

All the activities carried out during the construction of a software product (the software development life cycle), for example, the definition of requirements, the design of a solution, the building of the software products that make up the system (code, documentation, etc.), the testing of those products and the implementation of the developed and tested products.

Code

Computer instructions and data definitions expressed in a programming language or in a form output by an assembler, compiler or other translator.

Test basis

All documents from which the requirements of a component or system can be inferred. The documentation on which the test cases are based. If a document can be amended only by way of formal amendment procedure, then the test basis is called a frozen test basis.

Requirement

A condition or capability needed by a user to solve a problem or achieve an objective that must be met or possessed by a system or system component to satisfy a contract, standard, specification, or other formally imposed document.

Review

An evaluation of a product or project status to ascertain discrepancies from planned results and to recommend improvements. Examples include management review, informal review, technical review, inspection, and walkthrough.

Test case

A set of input values, execution preconditions, expected results and execution postconditions, developed for a particular objective or test condition, such as to exercise a particular program path or to verify compliance with a specific requirement.
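The four parts of this definition map naturally onto a small record type. The sketch below is only an illustration: `SpecifiedTestCase`, `withdraw` and `run` are hypothetical names, and the toy bank account stands in for a real system under test.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class SpecifiedTestCase:
    """Hypothetical container mirroring the parts of the definition."""
    objective: str
    preconditions: Dict[str, Any]
    inputs: Dict[str, Any]
    expected_result: Any
    expected_postconditions: Dict[str, Any]


def withdraw(account: Dict[str, Any], amount: int) -> str:
    """Toy system under test: withdraw money if funds allow."""
    if amount > account["balance"]:
        return "rejected"
    account["balance"] -= amount
    return "ok"


def run(case: SpecifiedTestCase) -> bool:
    account = dict(case.preconditions)         # establish preconditions
    actual = withdraw(account, **case.inputs)  # apply input values
    return (actual == case.expected_result     # check expected result
            and account == case.expected_postconditions)


case = SpecifiedTestCase(
    objective="verify that overdrafts are rejected",
    preconditions={"balance": 50},
    inputs={"amount": 80},
    expected_result="rejected",
    expected_postconditions={"balance": 50},
)
print(run(case))  # True
```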

Test objective

A reason or purpose for designing and executing a test.

Debugging

The process of finding, analyzing and removing the causes of failures in software.

Testing shows presence of defects

Testing can show that defects are present, but cannot prove that there are no defects. Testing reduces the probability of undiscovered defects remaining in the software but, even if no defects are found, it is not a proof of correctness.

Exhaustive testing is impossible

Testing everything (all combinations of inputs and preconditions) is not feasible except for trivial cases. Instead of exhaustive testing, we use risks and priorities to focus testing efforts.
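A quick calculation shows how fast the number of combinations grows. The input domains and the execution speed below are invented for illustration; only the multiplication of domain sizes is the point.

```python
import math

# Hypothetical input domains for a small order form
# (number of distinct values each field can take).
domains = {
    "country": 195,
    "currency": 150,
    "payment_method": 6,
    "browser": 5,
    "quantity": 100,
}

# Exhaustive testing needs every combination of values.
combinations = math.prod(domains.values())
print(combinations)  # 87750000

# Assumed execution speed: one test per second, around the clock.
seconds_per_year = 60 * 60 * 24 * 365
years = combinations / seconds_per_year
print(f"{years:.1f} years")
```

Even this small form, with preconditions ignored entirely, would take years to test exhaustively under these assumptions.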

Early testing

Testing activities should start as early as possible in the software or system development life cycle and should be focused on defined objectives.

Defect clustering

A small number of modules contain most of the defects discovered during prerelease testing or show the most operational failures.
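Clustering can be checked by counting defects per module. The defect log below is fabricated purely for illustration, and the module names are hypothetical.

```python
from collections import Counter

# Hypothetical defect log: the module each reported defect was traced to.
defect_modules = (
    ["billing"] * 12 + ["auth"] * 9 + ["search"] * 2
    + ["profile"] * 1 + ["help"] * 1
)

counts = Counter(defect_modules)
total = sum(counts.values())

# Share of all defects found in the two most defect-prone modules.
top_two = counts.most_common(2)
share = sum(n for _, n in top_two) / total

print(top_two)         # [('billing', 12), ('auth', 9)]
print(f"{share:.0%}")  # 84%
```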

Pesticide paradox

If the same tests are repeated over and over again, eventually the same set of test cases will no longer find any new bugs. To overcome this 'pesticide paradox', the test cases need to be regularly reviewed and revised, and new and different tests need to be written to exercise different parts of the software or system to potentially find more defects.

Testing is context dependent

Testing is done differently in different contexts. For example, safety-critical software is tested differently from an e-commerce site.

Absence-of-errors fallacy

Finding and fixing defects does not help if the system built is unusable and does not fulfill the users' needs and expectations.

Fundamental Test Process

1. planning and control;
2. analysis and design;
3. implementation and execution;
4. evaluating exit criteria and reporting;
5. test closure activities.

Test plan

A document describing the scope, approach, resources and schedule of intended test activities. It identifies, amongst others, test items, the features to be tested, the testing tasks, who will do each task, degree of tester independence, the test environment, the test design techniques and entry and exit criteria to be used and the rationale for their choice, and any risks requiring contingency planning. It is a record of the test planning process.

Test policy

A high-level document describing the principles, approach and major objectives of the organization regarding testing.

Test strategy

A high-level description of the test levels to be performed and the testing within those levels for an organization or program (one or more projects).

Test approach

The implementation of the test strategy for a specific project. It typically includes the decisions made based on the (test) project's goal and the risk assessment carried out, starting points regarding the test process, the test design techniques to be applied, exit criteria and test types to be performed.

Coverage (test coverage)

The degree, expressed as a percentage, to which a specified coverage item has been exercised by a test suite.
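As a worked example, coverage is the number of exercised coverage items divided by the total number of items, times 100. The sketch below uses statement numbers as the coverage items; `coverage_percent` is a hypothetical helper, not a function from any coverage tool.

```python
def coverage_percent(exercised: set, all_items: set) -> float:
    """Percentage of coverage items exercised by a test suite."""
    if not all_items:
        raise ValueError("no coverage items defined")
    # Only count exercised items that belong to the defined item set.
    return 100.0 * len(exercised & all_items) / len(all_items)


# Hypothetical example: statements 1-8 exist, the suite executed five.
statements = {1, 2, 3, 4, 5, 6, 7, 8}
executed = {1, 2, 3, 5, 8}
print(coverage_percent(executed, statements))  # 62.5
```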

Exit criteria

The set of generic and specific conditions, agreed upon with the stakeholders, for permitting a process to be officially completed. The purpose of exit criteria is to prevent a task from being considered completed when there are still outstanding parts of the task which have not been finished. Exit criteria are used by testing to report against and to plan when to stop testing.
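Exit criteria are often easiest to report against when they are written as explicit checks. The thresholds, key names and the `unmet_exit_criteria` helper below are assumptions chosen for illustration; real criteria are whatever has been agreed with the stakeholders.

```python
from typing import Dict, List

# Hypothetical exit criteria agreed with stakeholders.
CRITERIA = {
    "min_coverage_percent": 80.0,
    "max_open_critical_defects": 0,
}


def unmet_exit_criteria(status: Dict[str, float]) -> List[str]:
    """Return the names of the criteria that are not yet satisfied."""
    unmet = []
    if status["coverage_percent"] < CRITERIA["min_coverage_percent"]:
        unmet.append("min_coverage_percent")
    if status["open_critical_defects"] > CRITERIA["max_open_critical_defects"]:
        unmet.append("max_open_critical_defects")
    if status["tests_run"] < status["tests_planned"]:
        unmet.append("all_planned_tests_run")
    return unmet


status = {
    "coverage_percent": 85.0,
    "open_critical_defects": 1,
    "tests_planned": 120,
    "tests_run": 120,
}
print(unmet_exit_criteria(status))  # ['max_open_critical_defects']
```

An empty list would mean that, under these assumed criteria, testing may be declared complete.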

Test control

A test management task that deals with developing and applying a set of corrective actions to get a test project on track when monitoring shows a deviation from what was planned.

Test monitoring

A test management task that deals with the activities related to periodically checking the status of a test project. Reports are prepared that compare the actual status to that which was planned.
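Monitoring and control fit together: monitoring compares actual status with the plan, and control reacts to the deviations it reveals. A minimal sketch of the monitoring half, assuming made-up metrics and a 10% tolerance (both are illustrative choices):

```python
def deviations(planned, actual, tolerance=0.10):
    """Report metrics whose shortfall against the plan exceeds tolerance."""
    report = {}
    for metric, target in planned.items():
        shortfall = (target - actual[metric]) / target
        if shortfall > tolerance:
            report[metric] = round(shortfall, 2)
    return report


# Hypothetical status at the end of a test cycle.
planned = {"tests_executed": 200, "defects_fixed": 30}
actual = {"tests_executed": 150, "defects_fixed": 30}

print(deviations(planned, actual))  # {'tests_executed': 0.25}
```

Test control would then decide on a corrective action for each reported metric, for example reprioritizing the remaining tests.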

Test condition

An item or event of a component or system that could be verified by one or more test cases, e.g. a function, transaction, feature, quality attribute, or structural element.

Test design specification

A document specifying the test conditions (coverage items) for a test item, the detailed test approach and the associated high-level test cases.

Test procedure specification (test script, manual test script)

A document specifying a sequence of actions for the execution of a test.

Test data

Data that exists (for example, in a database) before a test is executed, and that affects or is affected by the component or system under test.

Test suite

A set of several test cases for a component or system under test, where the postcondition of one test is often used as the precondition for the next one.
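The chaining of postconditions and preconditions can be sketched as a list of test cases that pass a shared state along. The account scenario and the `case_*` function names are hypothetical.

```python
def case_create_account(state):
    """Precondition: no account. Postcondition: empty account exists."""
    assert "alice" not in state
    state["alice"] = 0
    return state


def case_deposit(state):
    """Precondition: the empty account left by the previous case."""
    assert state["alice"] == 0
    state["alice"] += 100
    return state


def case_withdraw(state):
    """Precondition: the balance of 100 left by the deposit case."""
    assert state["alice"] == 100
    state["alice"] -= 30
    return state


# The suite is ordered: each case starts from its predecessor's result.
suite = [case_create_account, case_deposit, case_withdraw]

state = {}
for case in suite:
    state = case(state)

print(state)  # {'alice': 70}
```

Chaining keeps set-up short, but it makes the suite order-dependent: running `case_withdraw` on its own would fail its precondition check.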

Test execution

The process of running a test on the component or system under test, producing actual results.

Test log

A chronological record of relevant details about the execution of tests.

Incident

Any event occurring that requires investigation.

Re-testing (confirmation testing)

Testing that runs test cases that failed the last time they were run, in order to verify the success of corrective actions.

Regression testing

Testing of a previously tested program following modification to ensure that defects have not been introduced or uncovered in unchanged areas of the software as a result of the changes made.
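The difference between re-testing and regression testing shows up in which test cases are selected after a fix. The sketch below is a simplification: it assumes the previously passing tests stand in for the unchanged areas of the software, and the test names and results are invented.

```python
# Hypothetical results of the last run, before the fix was delivered.
last_run = {
    "login": "pass",
    "checkout": "fail",
    "search": "pass",
    "refund": "fail",
}

# Re-testing (confirmation testing): rerun what failed last time
# to verify that the corrective actions worked.
retest = sorted(name for name, result in last_run.items() if result == "fail")

# Regression testing (simplified): rerun what passed last time
# to check that the change broke nothing elsewhere.
regression = sorted(name for name, result in last_run.items() if result == "pass")

print(retest)      # ['checkout', 'refund']
print(regression)  # ['login', 'search']
```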

Test summary report

A document summarizing testing activities and results. It also contains an evaluation of the corresponding test items against exit criteria.

Testware

Artifacts produced during the test process required to plan, design, and execute tests, such as documentation, scripts, inputs, expected results, set-up and clear-up procedures, files, databases, environment, and any additional software or utilities used in testing.

Independence

Separation of responsibilities, which encourages the accomplishment of objective testing.

Recall that the success of testing is influenced by psychological factors:

- clear objectives;
- a balance of self-testing and independent testing;
- recognition of courteous communication and feedback on defects.

You might also like

- ISTQB Certified Tester Advanced Level Test Manager (CTAL-TM): Practice Questions Syllabus 2012From EverandISTQB Certified Tester Advanced Level Test Manager (CTAL-TM): Practice Questions Syllabus 2012No ratings yet

- ISTQB Foundation AnswersDocument95 pagesISTQB Foundation AnswersLost FilesNo ratings yet

- Quiz LetDocument55 pagesQuiz LetSujai SenthilNo ratings yet

- ISTQB Chapter 1 SummaryDocument8 pagesISTQB Chapter 1 SummaryViolet100% (3)

- Functional Testing 1Document15 pagesFunctional Testing 1Madalina ElenaNo ratings yet

- Software Testing Revision NotesDocument12 pagesSoftware Testing Revision NotesVenkatesh VuruthalluNo ratings yet

- Errors, Defects, Failures & Root Causes: 7 Principles of TestingDocument5 pagesErrors, Defects, Failures & Root Causes: 7 Principles of Testinghelen fernandezNo ratings yet

- Systems Analysis and Design WordDocument9 pagesSystems Analysis and Design WordCherry Lyn BelgiraNo ratings yet

- RecnikDocument6 pagesRecnikAndjela S BlagojevicNo ratings yet

- Overview of Software TestingDocument12 pagesOverview of Software TestingBhagyashree SahooNo ratings yet

- TestingDocument26 pagesTestingDivyam BhushanNo ratings yet

- Testware:: How Do You Create A Test Strategy?Document10 pagesTestware:: How Do You Create A Test Strategy?Dhananjayan BadriNo ratings yet

- Session 9: Test Management - Closing: ISYS6338 - Testing and System ImplementationDocument46 pagesSession 9: Test Management - Closing: ISYS6338 - Testing and System ImplementationWeebMeDaddyNo ratings yet

- The Many Faces of Software TestingDocument6 pagesThe Many Faces of Software TestingbcikyguptaNo ratings yet

- Manual Testing Interview Questions (General Testing) : What Does Software Testing Mean?Document30 pagesManual Testing Interview Questions (General Testing) : What Does Software Testing Mean?April Ubay AlibayanNo ratings yet

- Testing: Characteristics of System TestingDocument6 pagesTesting: Characteristics of System TestingPriyanka VermaNo ratings yet

- Introduction ToDocument49 pagesIntroduction ToAna MariaNo ratings yet

- Unit TestingDocument5 pagesUnit TestingDanyal KhanNo ratings yet

- Chapter-9-System TestingDocument22 pagesChapter-9-System TestingAbdullah Al Mahmood YasirNo ratings yet

- Why Test Artifacts: Prepared by The Test ManagerDocument2 pagesWhy Test Artifacts: Prepared by The Test ManagerJagat MishraNo ratings yet

- SQMChapter 6 NotesDocument6 pagesSQMChapter 6 NotesPragati ChimangalaNo ratings yet

- Chapter 1: Fundamentals of Testing: Classification: GE ConfidentialDocument19 pagesChapter 1: Fundamentals of Testing: Classification: GE ConfidentialDOORS_userNo ratings yet

- Testing Documentation: Documentation As Part of ProcessDocument3 pagesTesting Documentation: Documentation As Part of ProcesspepeluNo ratings yet

- Writing Skills EvaluationDocument17 pagesWriting Skills EvaluationMarco Morin100% (1)

- Week10 Lecture19 TestingDocument5 pagesWeek10 Lecture19 TestingDevelopers torchNo ratings yet

- 100 Most Popular Software Testing TermsDocument9 pages100 Most Popular Software Testing TermszoconNo ratings yet

- What Is A Test Case?Document23 pagesWhat Is A Test Case?jayanthnaiduNo ratings yet

- Testing DocumentationDocument2 pagesTesting DocumentationVinayakNo ratings yet

- Chapter 9 System TestingDocument22 pagesChapter 9 System TestingAl Shahriar HaqueNo ratings yet

- Testing and Quality AssuranceDocument5 pagesTesting and Quality AssuranceBulcha MelakuNo ratings yet

- Testing NotesDocument77 pagesTesting NotesHimanshu GoyalNo ratings yet

- Ste CH 3Document78 pagesSte CH 3rhythm -No ratings yet

- Lesson 11 PDFDocument8 pagesLesson 11 PDFCarlville Jae EdañoNo ratings yet

- Software Quality Assurance and TestingDocument14 pagesSoftware Quality Assurance and TestingSuvarna SreeNo ratings yet

- Notes For ISTQB Foundation Level CertificationDocument12 pagesNotes For ISTQB Foundation Level CertificationtowidNo ratings yet

- Notes 5Document4 pagesNotes 5Anurag AwasthiNo ratings yet

- Testing Procedure For The Software Products: What Is 'Software Quality Assurance' ?Document5 pagesTesting Procedure For The Software Products: What Is 'Software Quality Assurance' ?KirthiSkNo ratings yet

- Prepared By: Shilpi: Acceptance TestingDocument22 pagesPrepared By: Shilpi: Acceptance Testingramu.muthu6653No ratings yet

- 2006 CSTE CBOK Skill Category 1 (Part2)Document27 pages2006 CSTE CBOK Skill Category 1 (Part2)api-3733726No ratings yet

- Software Testing Is A Way To Assess The Quality of The Software and To Reduce The Risk of Software Failure in OperationDocument4 pagesSoftware Testing Is A Way To Assess The Quality of The Software and To Reduce The Risk of Software Failure in OperationNikita SushirNo ratings yet

- Advantages of Test PlanDocument3 pagesAdvantages of Test PlanGayu ManiNo ratings yet

- Testing PresentationDocument39 pagesTesting PresentationMiguel Angel AguilarNo ratings yet

- Glossary of Testing Terminology: WelcomeDocument8 pagesGlossary of Testing Terminology: WelcomeenverNo ratings yet

- 2006 CSTE CBOK Skill Category 1 (Part2) BDocument27 pages2006 CSTE CBOK Skill Category 1 (Part2) Bapi-3733726No ratings yet

- 1 Fundamentals of TestingggggDocument2 pages1 Fundamentals of TestingggggEmanuela BelsakNo ratings yet

- 100 Most Popular Software Testing TermsDocument8 pages100 Most Popular Software Testing TermssuketsomNo ratings yet

- Interview Questions On Data Base TestingDocument8 pagesInterview Questions On Data Base TestingRajeshdotnetNo ratings yet

- Chap1 IstqbDocument42 pagesChap1 IstqbAnil Nag100% (2)

- Test Execution ExplanationDocument7 pagesTest Execution ExplanationJohn Paul Aquino PagtalunanNo ratings yet

- ISTQTB BH0 010 Study MaterialDocument68 pagesISTQTB BH0 010 Study MaterialiuliaibNo ratings yet

- Termpaper Synopsis OF CSE-302 (SAD) : Submitted To: Submitted By: Miss. Neha Gaurav Khara Mam RJ1802 A15Document6 pagesTermpaper Synopsis OF CSE-302 (SAD) : Submitted To: Submitted By: Miss. Neha Gaurav Khara Mam RJ1802 A15Harpreet SinghNo ratings yet

- Software Testing Principles and Concepts: By: Abel AlmeidaDocument16 pagesSoftware Testing Principles and Concepts: By: Abel Almeidaavanianand542No ratings yet

- CTFL-Syllabus-2018 - C1Document13 pagesCTFL-Syllabus-2018 - C1Mid PéoNo ratings yet

- Software Testing MethodsDocument45 pagesSoftware Testing MethodssahajNo ratings yet

- Etl TestingDocument4 pagesEtl TestingKatta SravanthiNo ratings yet

- Levels of TestingDocument30 pagesLevels of TestingMuhammad Mohkam Ud DinNo ratings yet

- Software Testing LifecycleDocument4 pagesSoftware Testing LifecycleDhiraj TaleleNo ratings yet

- Unit-6 Testing PoliciesDocument10 pagesUnit-6 Testing PoliciesRavi RavalNo ratings yet

- Testing Tools MaterialDocument30 pagesTesting Tools Materialsuman80% (5)

- Be Active Way: A Guide For AdultsDocument28 pagesBe Active Way: A Guide For Adultskarishma10No ratings yet

- Yoga: Way of Life What Is Yoga?Document5 pagesYoga: Way of Life What Is Yoga?karishma10No ratings yet

- Yoga BegginersDocument6 pagesYoga Begginerskarishma10No ratings yet

- HF CowDocument13 pagesHF Cowkarishma10No ratings yet

- YogaDocument5 pagesYogakarishma10No ratings yet

- Startup India: 19 Key Points of PM Modi's Action PlanDocument3 pagesStartup India: 19 Key Points of PM Modi's Action Plankarishma10No ratings yet

- 59journal Solved Assignment 13-14Document8 pages59journal Solved Assignment 13-14karishma1080% (10)

- TallyDocument4 pagesTallykarishma10No ratings yet

- Accounting Policies: Accounting Policies Are The Specific Accounting Principles and The Methods of Applying ThoseDocument1 pageAccounting Policies: Accounting Policies Are The Specific Accounting Principles and The Methods of Applying Thosekarishma10No ratings yet

- Basic Accounting & Tally: Imensions InfotechDocument20 pagesBasic Accounting & Tally: Imensions Infotechkarishma10No ratings yet

- Software Engineering Prof. Rushikesh K. Joshi Computer Science & Engineering Indian Institute of Technology, Bombay Lecture - 18 Software Testing-IDocument27 pagesSoftware Engineering Prof. Rushikesh K. Joshi Computer Science & Engineering Indian Institute of Technology, Bombay Lecture - 18 Software Testing-Ikarishma10No ratings yet

- Basic Accounting & TallyDocument20 pagesBasic Accounting & Tallykarishma10No ratings yet

- Understand Pan Card NumberDocument2 pagesUnderstand Pan Card Numberkarishma10No ratings yet

- SoftwareTestingHelp OrangeHRM FRS-SampleDocument22 pagesSoftwareTestingHelp OrangeHRM FRS-Samplekarishma10No ratings yet

- Calculating Percentages: What Is A Percentage?Document4 pagesCalculating Percentages: What Is A Percentage?karishma10No ratings yet

- Istqb ch3Document3 pagesIstqb ch3karishma10No ratings yet

- Istqb Key Terms - Chapter 3Document5 pagesIstqb Key Terms - Chapter 3karishma10No ratings yet

- Software Testing Iso StandardsDocument3 pagesSoftware Testing Iso Standardskarishma10No ratings yet

- Parween N.Makandar.: Citizenship: IndianDocument3 pagesParween N.Makandar.: Citizenship: Indiankarishma10No ratings yet

- Istqb F.L. Glossary Chapter 2Document8 pagesIstqb F.L. Glossary Chapter 2karishma100% (1)

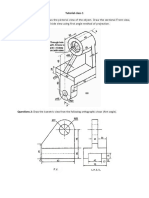

- Advanced Lathe Milling Report Batch 2Document3 pagesAdvanced Lathe Milling Report Batch 2Tony SutrisnoNo ratings yet

- Astm D3350-10Document7 pagesAstm D3350-10Jorge HuarcayaNo ratings yet

- Abstract of ASTM F1470 1998Document7 pagesAbstract of ASTM F1470 1998Jesse ChenNo ratings yet

- Isuzu 4hk1x Sheet HRDocument4 pagesIsuzu 4hk1x Sheet HRMuhammad Haqi Priyono100% (1)

- Shock AbsorberDocument0 pagesShock AbsorberSahaya GrinspanNo ratings yet

- Ceilcote 383 Corocrete: Hybrid Polymer Broadcast FlooringDocument3 pagesCeilcote 383 Corocrete: Hybrid Polymer Broadcast FlooringNadia AgdikaNo ratings yet

- GFF (T) ... MenglischNANNI (DMG-39 25.11.05) PDFDocument38 pagesGFF (T) ... MenglischNANNI (DMG-39 25.11.05) PDFjuricic2100% (2)

- Standard Costing ExercisesDocument3 pagesStandard Costing ExercisesNikki Garcia0% (2)

- 400017A C65 Users Manual V5XXDocument61 pages400017A C65 Users Manual V5XXwhusada100% (1)

- Drive ConfigDocument136 pagesDrive ConfigGiangDoNo ratings yet

- Electric Power Station PDFDocument344 pagesElectric Power Station PDFMukesh KumarNo ratings yet

- Seismic Design & Installation Guide: Suspended Ceiling SystemDocument28 pagesSeismic Design & Installation Guide: Suspended Ceiling SystemhersonNo ratings yet

- Jrules Installation onWEBSPHEREDocument196 pagesJrules Installation onWEBSPHEREjagr123No ratings yet

- NanoDocument10 pagesNanoRavi TejaNo ratings yet

- Tutorial Class 1 Questions 1Document2 pagesTutorial Class 1 Questions 1Bố Quỳnh ChiNo ratings yet

- Fundamentals of Fluid Mechanics (5th Edition) - Munson, OkiishiDocument818 pagesFundamentals of Fluid Mechanics (5th Edition) - Munson, OkiishiMohit Verma85% (20)

- VG H4connectorsDocument7 pagesVG H4connectorsJeganeswaranNo ratings yet

- RDBMS and HTML Mock Test 1548845682056Document18 pagesRDBMS and HTML Mock Test 1548845682056sanjay bhattNo ratings yet

- Recent Developments in Crosslinking Technology For Coating ResinsDocument14 pagesRecent Developments in Crosslinking Technology For Coating ResinsblpjNo ratings yet

- Software TestingDocument4 pagesSoftware TestingX DevilXNo ratings yet

- Limak 2017 Annual ReportDocument122 pagesLimak 2017 Annual Reportorcun_ertNo ratings yet

- An Overview of Subspace Identification: S. Joe QinDocument12 pagesAn Overview of Subspace Identification: S. Joe QinGodofredoNo ratings yet

- Drying AgentDocument36 pagesDrying AgentSo MayeNo ratings yet

- Technical Owner Manual Nfinity v6Document116 pagesTechnical Owner Manual Nfinity v6Tom MondjollianNo ratings yet

- F4 Search Help To Select More Than One Column ValueDocument4 pagesF4 Search Help To Select More Than One Column ValueRicky DasNo ratings yet

- En Privacy The Best Reseller SMM Panel, Cheap SEO and PR - MRPOPULARDocument4 pagesEn Privacy The Best Reseller SMM Panel, Cheap SEO and PR - MRPOPULARZhenyuan LiNo ratings yet

- Misc Forrester SAP Competence CenterDocument16 pagesMisc Forrester SAP Competence CenterManuel ParradoNo ratings yet

- (WWW - Manuallib.com) - MOELLER EASY512&Minus AB&Minus RCDocument8 pages(WWW - Manuallib.com) - MOELLER EASY512&Minus AB&Minus RCErik VermaakNo ratings yet

- CHE 322 - Gaseous Fuel ProblemsDocument26 pagesCHE 322 - Gaseous Fuel ProblemsDanice LunaNo ratings yet

- M Block PDFDocument45 pagesM Block PDFKristina ViskovićNo ratings yet