0.4 - Discrete Random Variables and Probability Distributions

Uploaded by MiiwKotiram

Statistical Techniques B Notes

Discrete Random Variables and Probability Distributions


A random variable is a variable that takes on numerical values realized by the outcomes in the sample space generated by a random experiment.

A random variable is discrete if it can take on no more than a countable number of values.

A random variable is continuous if it can take any value in an interval.

The probability distribution function, $P(x)$, of a discrete random variable $X$ represents the probability that $X$ takes the value $x$, as a function of $x$: $P(x) = P(X = x)$.

The probability distribution function of a discrete random variable must satisfy the following two properties:

1. $0 \le P(x) \le 1$ for any value of $x$.

2. The individual probabilities sum to 1: $\sum_x P(x) = 1$.

The cumulative probability distribution of a random variable $X$ represents the probability that $X$ does not exceed a value $x_0$, i.e. $F(x_0) = P(X \le x_0)$.


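The cumulative probability distribution of a discrete random variable has the usual properties of any cumulative distribution: $0 \le F(x_0) \le 1$ for every $x_0$, and $F$ is non-decreasing, i.e. $F(x_0) \le F(x_1)$ whenever $x_0 < x_1$.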
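The two properties of a probability distribution function and the definition of the cumulative distribution can be checked on a small example. The sketch below is illustrative only: the distribution (number of heads in two fair coin tosses) and the helper names `is_valid_pmf` and `cdf` are assumptions, not part of the notes.

```python
from fractions import Fraction

# Hypothetical example: X = number of heads in two fair coin tosses.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def is_valid_pmf(p):
    """Check 0 <= P(x) <= 1 for every x and that the probabilities sum to 1."""
    return all(0 <= v <= 1 for v in p.values()) and sum(p.values()) == 1

def cdf(p, x0):
    """F(x0) = P(X <= x0): add up P(x) over all x that do not exceed x0."""
    return sum(v for x, v in p.items() if x <= x0)

print(is_valid_pmf(pmf))  # True
print(cdf(pmf, 1))        # 3/4
```

Exact fractions are used so that the "sum to 1" check is not affected by floating-point rounding.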

The expected value of a discrete random variable $X$ is defined as:

$$E[X] = \mu = \sum_x x P(x).$$

The variance of a discrete random variable is defined as:

$$\sigma^2 = E[(X - \mu)^2] = E[X^2] - \mu^2 = \sum_x (x - \mu)^2 P(x).$$
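As a rough illustration of the mean and variance definitions, the sketch below evaluates both on a fair six-sided die. The example distribution and the function names are assumptions made for this illustration.

```python
from fractions import Fraction

# Hypothetical example: X = face shown by one fair six-sided die.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def mean(p):
    """E[X] = sum over x of x * P(x)."""
    return sum(x * v for x, v in p.items())

def variance(p):
    """Var(X) = sum over x of (x - mu)^2 * P(x)."""
    mu = mean(p)
    return sum((x - mu) ** 2 * v for x, v in p.items())

def variance_shortcut(p):
    """Var(X) = E[X^2] - mu^2, the equivalent shortcut form."""
    return sum(x * x * v for x, v in p.items()) - mean(p) ** 2

print(mean(pmf))      # 7/2
print(variance(pmf))  # 35/12
```

Both variance functions return the same value, which is the identity $E[(X - \mu)^2] = E[X^2] - \mu^2$ in action.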

Summary of properties for linear functions of a random variable: if $Y = a + bX$ for constants $a$ and $b$, then

$$\mu_Y = E[a + bX] = a + b\mu_X$$

$$\sigma_Y^2 = \mathrm{Var}(a + bX) = b^2 \sigma_X^2$$

Summary results for the mean and variance of special linear functions:

- If a random variable always takes the value $a$, it will have mean $a$ and variance 0.

The number of sequences with $x$ successes in $n$ independent trials is:

$$C_x^n = \frac{n!}{x!(n - x)!}$$
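The linear-function rules and the counting formula can be verified numerically. This is a minimal sketch: the die distribution, the constants `a = 3` and `b = 2`, and the helper names are all assumptions chosen for illustration. `math.comb` requires Python 3.8 or later.

```python
from fractions import Fraction
from math import comb, factorial

# Hypothetical example: X is a fair die, Y = a + bX with a = 3, b = 2.
pmf_x = {x: Fraction(1, 6) for x in range(1, 7)}
a, b = 3, 2
# Because b != 0, distinct x values map to distinct y values.
pmf_y = {a + b * x: p for x, p in pmf_x.items()}

def mean(p):
    return sum(x * v for x, v in p.items())

def variance(p):
    mu = mean(p)
    return sum((x - mu) ** 2 * v for x, v in p.items())

# Mean shifts and scales; variance only scales, by b squared.
print(mean(pmf_y) == a + b * mean(pmf_x))        # True
print(variance(pmf_y) == b ** 2 * variance(pmf_x))  # True

# Number of sequences with x = 2 successes in n = 5 trials.
n, x = 5, 2
print(comb(n, x))                                    # 10
print(factorial(n) // (factorial(x) * factorial(n - x)))  # 10
```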

If $n$ independent trials are carried out, each with probability of success $P$, the distribution of the number of resulting successes, $x$, is called the binomial distribution:

$$P(x) = \frac{n!}{x!(n - x)!} P^x (1 - P)^{n - x} \quad \text{for } x = 0, 1, 2, \ldots, n.$$

Let $X$ be the number of successes in $n$ independent trials, each with probability of success $P$. Then $X$ follows a binomial distribution with mean and variance as follows:

$$\mu_X = E[X] = nP$$

$$\sigma_X^2 = E[(X - \mu_X)^2] = nP(1 - P)$$
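The binomial formula and its mean and variance can be checked directly from the definition. The sketch below assumes illustrative values `n = 10` and `p = 0.3`; the function name `binomial_pmf` is likewise hypothetical.

```python
from math import comb

def binomial_pmf(x, n, p):
    """P(x) = n! / (x! (n - x)!) * p^x * (1 - p)^(n - x)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

# Assumed example values, not taken from the notes.
n, p = 10, 0.3
probs = [binomial_pmf(x, n, p) for x in range(n + 1)]

# Mean and variance computed from the definition, to compare with nP and nP(1 - P).
mean = sum(x * q for x, q in enumerate(probs))
var = sum((x - mean) ** 2 * q for x, q in enumerate(probs))

print(round(sum(probs), 10))  # 1.0
print(round(mean, 10))        # 3.0  (nP)
print(round(var, 10))         # 2.1  (nP(1 - P))
```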

Assumptions of the Poisson distribution:

- The probability of an occurrence is constant for all subintervals.

- There can be no more than one occurrence in each subinterval.

- Occurrences are independent; that is, an occurrence in one interval does not influence the probability of an occurrence in another interval.

A random variable $X$ is said to follow the Poisson distribution if it has the probability distribution:

$$P(x) = \frac{e^{-\lambda} \lambda^x}{x!}, \quad \text{for } x = 0, 1, 2, \ldots$$

- The constant $\lambda$ is the expected number of successes per time or space unit; it is both the mean and the variance of the distribution.
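A short numerical check can make the claim that $\lambda$ is both the mean and the variance concrete. The sketch assumes $\lambda = 2.5$ and truncates the infinite support at $x = 59$, where the remaining tail is negligible; both choices are assumptions for illustration.

```python
from math import exp, factorial

def poisson_pmf(x, lam):
    """P(x) = e^(-lam) * lam^x / x!."""
    return exp(-lam) * lam ** x / factorial(x)

# Assumed rate; the support is infinite, so it is truncated at x = 59.
lam = 2.5
probs = [poisson_pmf(x, lam) for x in range(60)]

total = sum(probs)
mean = sum(x * q for x, q in enumerate(probs))
var = sum((x - mean) ** 2 * q for x, q in enumerate(probs))

print(round(total, 9), round(mean, 9), round(var, 9))  # 1.0 2.5 2.5
```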

The Poisson distribution can be used to approximate the binomial probabilities when $n$ is large and $P$ is small (preferably such that $\lambda = nP \le 7$):

$$P(x) = \frac{e^{-nP} (nP)^x}{x!} \quad \text{for } x = 0, 1, 2, \ldots$$

It can also be shown that when $n \ge 20$ and $P \le 0.05$, and the population mean is the same, both the binomial and the Poisson distributions generate approximately the same probability values.
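To see how close the two distributions are in practice, the sketch below compares them term by term for one case that satisfies the stated conditions. The values `n = 100` and `p = 0.02` (so $\lambda = nP = 2$) are assumptions chosen for illustration.

```python
from math import comb, exp, factorial

def binomial_pmf(x, n, p):
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    return exp(-lam) * lam ** x / factorial(x)

# Assumed case with n >= 20, P <= 0.05 and lambda = nP <= 7.
n, p = 100, 0.02
lam = n * p  # both distributions share the same mean

# Largest absolute gap between the two probabilities over the binomial support.
max_diff = max(
    abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(n + 1)
)
print(f"largest gap over x = 0..{n}: {max_diff:.4f}")
```

For this case the largest gap is well under 0.01, which is the sense in which the two distributions give approximately the same probabilities.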

Suppose that a random sample of $n$ objects is chosen from a group of $N$ objects, $S$ of which are successes. The distribution of $X$ (the number of successes in the sample) is the hypergeometric distribution:

$$P(x) = \frac{C_x^S \, C_{n-x}^{N-S}}{C_n^N}$$
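The hypergeometric formula translates almost directly into code using `math.comb`. In this sketch the population values `N = 20`, `S = 8`, `n = 5` and the function name `hypergeom_pmf` are assumptions for illustration.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(x, N, S, n):
    """P(x) = C(S, x) * C(N - S, n - x) / C(N, n), for 0 <= x <= n."""
    return Fraction(comb(S, x) * comb(N - S, n - x), comb(N, n))

# Assumed example: 20 objects, 8 successes, sample of 5 without replacement.
N, S, n = 20, 8, 5
probs = [hypergeom_pmf(x, N, S, n) for x in range(n + 1)]

print(hypergeom_pmf(2, N, S, n))  # 385/969
print(sum(probs))                 # 1
```

`math.comb(n, k)` returns 0 when `k > n`, so impossible counts (for example more successes in the sample than exist in the population) automatically get probability 0.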

Let $X$ and $Y$ be a pair of discrete random variables. Their joint probability distribution expresses the probability that simultaneously $X$ takes the value $x$ and $Y$ takes the value $y$:

$$P(x, y) = P(X = x \cap Y = y).$$

In jointly distributed random variables, the probability distribution of the random variable $X$ is its marginal probability distribution, which is obtained by summing the joint probabilities over all possible values of $Y$: $P(x) = \sum_y P(x, y)$.

The conditional probability distribution of Y given that X takes the value x is defined as:

- P(y | x) = P(x,y) / P(x).

The jointly distributed random variables X and Y are independent if: P(x, y) = P(x)P(y).

The conditional mean is computed using the following: $\mu_{Y|X} = E[Y \mid X] = \sum_y (y \mid x) P(y \mid x)$.
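The marginal, conditional, independence and conditional-mean definitions above can be sketched on a small joint table. The table itself and all function names below are hypothetical; the code assumes the table lists every $(x, y)$ pair, including any with probability 0.

```python
from fractions import Fraction as F

# Hypothetical joint distribution P(x, y) for X, Y in {0, 1}.
joint = {
    (0, 0): F(1, 4), (0, 1): F(1, 4),
    (1, 0): F(1, 8), (1, 1): F(3, 8),
}

def marginal_x(joint, x):
    """P(x) = sum over y of P(x, y)."""
    return sum(p for (xi, _), p in joint.items() if xi == x)

def marginal_y(joint, y):
    """P(y) = sum over x of P(x, y)."""
    return sum(p for (_, yi), p in joint.items() if yi == y)

def conditional_y_given_x(joint, y, x):
    """P(y | x) = P(x, y) / P(x)."""
    return joint.get((x, y), 0) / marginal_x(joint, x)

def conditional_mean_y(joint, x):
    """E[Y | X = x] = sum over y of y * P(y | x)."""
    ys = {yi for (_, yi) in joint}
    return sum(y * conditional_y_given_x(joint, y, x) for y in ys)

def independent(joint):
    """True if P(x, y) = P(x) P(y) for every listed pair."""
    return all(
        p == marginal_x(joint, x) * marginal_y(joint, y)
        for (x, y), p in joint.items()
    )

print(marginal_x(joint, 1))                # 1/2
print(conditional_y_given_x(joint, 1, 1))  # 3/4
print(conditional_mean_y(joint, 1))        # 3/4
print(independent(joint))                  # False
```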

The expectation of any function $g(X, Y)$ of these random variables is defined as follows:

$$E[g(X, Y)] = \sum_x \sum_y g(x, y) P(x, y).$$

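The expected value of $(X - \mu_X)(Y - \mu_Y)$ is called the covariance between $X$ and $Y$:

$$\mathrm{Cov}(X, Y) = E[(X - \mu_X)(Y - \mu_Y)] = \sum_x \sum_y (x - \mu_X)(y - \mu_Y) P(x, y).$$

An equivalent expression is:

$$\mathrm{Cov}(X, Y) = E[XY] - \mu_X \mu_Y.$$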

The correlation between $X$ and $Y$ is as follows: $\rho = \mathrm{Corr}(X, Y) = \mathrm{Cov}(X, Y) / (\sigma_X \sigma_Y)$.

If two random variables are statistically independent, the covariance between them is 0. However, the converse is not necessarily true.
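The covariance and correlation formulas, and the warning that zero covariance does not imply independence, can be illustrated with two small joint tables. Both tables and all function names are assumptions for this sketch. In the second table $X$ is uniform on $\{-1, 0, 1\}$ and $Y = X^2$, so $Y$ is completely determined by $X$.

```python
from fractions import Fraction as F
from math import sqrt

def expectation(joint, g):
    """E[g(X, Y)] = sum over x and y of g(x, y) * P(x, y)."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

def covariance(joint):
    """Cov(X, Y) = E[(X - mu_X)(Y - mu_Y)]."""
    mu_x = expectation(joint, lambda x, y: x)
    mu_y = expectation(joint, lambda x, y: y)
    return expectation(joint, lambda x, y: (x - mu_x) * (y - mu_y))

def correlation(joint):
    """Corr(X, Y) = Cov(X, Y) / (sigma_X * sigma_Y), returned as a float."""
    mu_x = expectation(joint, lambda x, y: x)
    mu_y = expectation(joint, lambda x, y: y)
    var_x = expectation(joint, lambda x, y: (x - mu_x) ** 2)
    var_y = expectation(joint, lambda x, y: (y - mu_y) ** 2)
    return float(covariance(joint)) / sqrt(float(var_x * var_y))

# Hypothetical table with positive covariance.
correlated = {
    (0, 0): F(1, 4), (0, 1): F(1, 4),
    (1, 0): F(1, 8), (1, 1): F(3, 8),
}
# Hypothetical table where Y = X^2: dependent, yet covariance is 0.
dependent = {(-1, 1): F(1, 3), (0, 0): F(1, 3), (1, 1): F(1, 3)}

print(covariance(correlated))  # 1/16
print(covariance(dependent))   # 0
```

In the second table $P(X = 0, Y = 0) = 1/3$ while $P(X = 0)P(Y = 0) = 1/9$, so the variables are not independent even though their covariance is 0.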

2

You might also like

- EC334 Assessment Instructions: Please Complete All ProblemsDocument4 pagesEC334 Assessment Instructions: Please Complete All ProblemsMiiwKotiramNo ratings yet

- Test FormatDocument1 pageTest FormatMiiwKotiramNo ratings yet

- Smart Move Logistics - General Journal: 11-Jun-14 112 114 18-Jun-14 201 221 223Document3 pagesSmart Move Logistics - General Journal: 11-Jun-14 112 114 18-Jun-14 201 221 223MiiwKotiramNo ratings yet

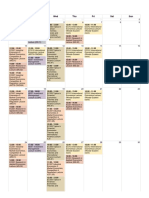

- October 2016: Mon Tue Wed Thu Fri Sat SunDocument2 pagesOctober 2016: Mon Tue Wed Thu Fri Sat SunMiiwKotiramNo ratings yet

- EC102 Exam 2008Document22 pagesEC102 Exam 2008blabla1033No ratings yet

- Applied Trading FoundationDocument3 pagesApplied Trading FoundationMiiwKotiramNo ratings yet

- Leamington To City, Pool Meadow Warwick University To City, Pool Meadow Warwick University To City, Pool Meadow EXPRESSDocument18 pagesLeamington To City, Pool Meadow Warwick University To City, Pool Meadow Warwick University To City, Pool Meadow EXPRESSMiiwKotiramNo ratings yet

- Basic of Accounting Principles PDFDocument23 pagesBasic of Accounting Principles PDFMani KandanNo ratings yet

- 2005 Nov - 42 - MsDocument3 pages2005 Nov - 42 - MsMiiwKotiramNo ratings yet

- Chain GDPDocument2 pagesChain GDPJackNo ratings yet

- 04 - Consumer ChoiceDocument2 pages04 - Consumer ChoiceMiiwKotiramNo ratings yet

- Solving Problem Set 3Document6 pagesSolving Problem Set 3MiiwKotiramNo ratings yet

- Topic 7: The Great DepressionDocument2 pagesTopic 7: The Great DepressionMiiwKotiramNo ratings yet

- 06 - Firms and ProductionDocument2 pages06 - Firms and ProductionMiiwKotiramNo ratings yet

- Principles of Economics and Macroeconomics Chapter SummariesDocument259 pagesPrinciples of Economics and Macroeconomics Chapter SummariesParin Shah92% (25)

- Essay MarkingDocument2 pagesEssay MarkingMiiwKotiramNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5782)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- 2.3 Database Management SystemsDocument16 pages2.3 Database Management Systemsnatashashaikh93No ratings yet

- JAVA 8 FeaturesDocument37 pagesJAVA 8 FeaturesAshok KumarNo ratings yet

- Supattra BoonmakDocument29 pagesSupattra BoonmakYusuf HusseinNo ratings yet

- Oomd Notes Soft PDFDocument129 pagesOomd Notes Soft PDFDhanush kumar0% (1)

- Time Series and Forecasting - Subject OverviewDocument5 pagesTime Series and Forecasting - Subject OverviewLit Jhun Yeang Benjamin100% (1)

- Object Oriented Software Engineering ProjectDocument4 pagesObject Oriented Software Engineering Project2K18/SE/069 JAI CHAUDHRYNo ratings yet

- Benefits of Use CasesDocument6 pagesBenefits of Use CasesNavita Sharma0% (1)

- Table 7.1 Quarterly Demand ForecastDocument12 pagesTable 7.1 Quarterly Demand ForecastZakiah Abu Kasim100% (1)

- Graphics Pipeline: Concept StructureDocument5 pagesGraphics Pipeline: Concept StructuresiswoutNo ratings yet

- Verilog SlidesDocument18 pagesVerilog Slidesmaryam-69No ratings yet

- What is Logistic Regression? - Statistics SolutionsDocument2 pagesWhat is Logistic Regression? - Statistics SolutionsKristel Jane LasacaNo ratings yet

- PPD ForDocument4 pagesPPD ForajaywadhwaniNo ratings yet

- MKT 397 Marketing Models II DuanDocument4 pagesMKT 397 Marketing Models II DuanNabanita TalukdarNo ratings yet

- Database Management Systems Course Guide Book PDFDocument4 pagesDatabase Management Systems Course Guide Book PDFYohanes KassuNo ratings yet

- C++ NoteDocument99 pagesC++ NoteVishal PurkutiNo ratings yet

- Activity Diagrams: Activity Diagrams Describe The Workflow Behavior of A SystemDocument40 pagesActivity Diagrams: Activity Diagrams Describe The Workflow Behavior of A SystemMohamed MagdhoomNo ratings yet

- Quiz 2Document91 pagesQuiz 2Alex FebianNo ratings yet

- Section 2 (Graphics)Document33 pagesSection 2 (Graphics)cmp2012100% (1)

- Speed up algorithms with GPUsDocument29 pagesSpeed up algorithms with GPUsproxymo1No ratings yet

- One-point & Two-point Perspective ViewsDocument23 pagesOne-point & Two-point Perspective ViewsCik Miza Mizziey100% (1)

- C - Programv1 Global EdgeDocument61 pagesC - Programv1 Global EdgeRajendra AcharyaNo ratings yet

- c1 PDFDocument32 pagesc1 PDFitashok1No ratings yet

- DBMSDocument38 pagesDBMSGyanendraVermaNo ratings yet

- Topic 4 Entity Relationship Diagram (ERD) : Prepared By: Nurul Akhmal Binti Mohd ZulkefliDocument91 pagesTopic 4 Entity Relationship Diagram (ERD) : Prepared By: Nurul Akhmal Binti Mohd Zulkefliمحمد دانيالNo ratings yet

- Perilaku Kewirausahaan Pada Usaha Mikro Kecil (Umk) Tempe Di Bogor Jawa Barat Tita Nursiah, Nunung Kusnadi, Dan BurhanuddinDocument14 pagesPerilaku Kewirausahaan Pada Usaha Mikro Kecil (Umk) Tempe Di Bogor Jawa Barat Tita Nursiah, Nunung Kusnadi, Dan Burhanuddin19-061 Fadel MuhammadNo ratings yet

- Convert invoice table to 3NFDocument3 pagesConvert invoice table to 3NFMark KludgeNo ratings yet

- MIS 403 - Database Management Systems - II: DR Maqsood MahmudDocument21 pagesMIS 403 - Database Management Systems - II: DR Maqsood Mahmudابراهيم الغامديNo ratings yet

- Multicax Unigraphics UdlDocument1 pageMulticax Unigraphics UdlNagomi DNo ratings yet

- MineSight and Pix4DDocument11 pagesMineSight and Pix4DCarlos Alberto Yarlequé YarlequéNo ratings yet

- SET 1 - Software Developer Assesment TestDocument6 pagesSET 1 - Software Developer Assesment TestVikash KumarNo ratings yet