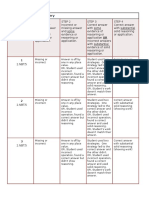

Top 10 Questions to Ask When Comparing Interim Assessments

Not all interim assessments are created equal. They vary widely in their designs, purposes, and validity. Some deliver data powerful enough for educators to make informed decisions at the student, class, school, and district level, and some don't. Along with measuring student growth and achievement, quality interim data can provide stability during times of transition in standards and curriculum.

Below, you'll find 10 key questions to ask as you investigate interim assessments to meet your needs.

1. Was the assessment designed to provide achievement status AND growth data?

Why it's important: Growth data help engage students in a growth mindset; they learn their ability isn't fixed, but can increase with effort. Data that fail to reflect student growth deny students and their communities the opportunity to be proud of what they accomplished. An assessment is only as good as the scale that forms its foundation.

What to look for: Look for a stable vertical scale that is regularly monitored for scale drift.

2. Is the assessment adaptive?

Why it's important: Adaptive assessments choose items based on a student's response pattern, so the student's true ability level can be measured with precision in the fewest test items.

What to look for: Look for whether the assessment adapts to each student with each item. Many so-called adaptive assessments adapt only after a bank of questions has been answered, resulting in less precision in the results.

3. Does it provide items at the appropriate difficulty level for each student?

Why it's important: Instructional readiness does not always relate directly to grade level. Students may be at, above, or below grade level in terms of what they are ready to learn.

What to look for: Look for an assessment that adapts at-grade and out-of-grade, so that it's able to measure the student's true starting point. Also review the span of content the assessment covers. An assessment that informs educators about each student's instructional readiness draws on content that spans across grades.
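To make the item-by-item adaptation described under question 2 concrete, here is a minimal sketch. It assumes a simple Rasch (one-parameter) response model, a standard normal prior on ability, and a hypothetical item pool described only by difficulty values; it is an illustration, not any provider's actual algorithm. The names `run_adaptive_test`, `next_item`, and `estimate_ability` are invented for this example.

```python
import math

def p_correct(theta, b):
    """Rasch model (assumed here): chance of a correct answer at ability theta, difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

# Candidate ability values from -4.0 to 4.0 in steps of 0.1 (illustrative scale).
GRID = [g / 10.0 for g in range(-40, 41)]

def estimate_ability(responses):
    """Posterior-mean ability estimate under an assumed standard normal prior.

    responses is a list of (difficulty, was_correct) pairs.
    """
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for b, correct in responses:
            p = p_correct(theta, b)
            w *= p if correct else (1.0 - p)
        weights.append(w)
    total = sum(weights)
    return sum(t * w for t, w in zip(GRID, weights)) / total

def next_item(theta, pool, used):
    """Pick the unused item whose difficulty is closest to the current estimate."""
    candidates = [b for b in pool if b not in used]
    return min(candidates, key=lambda b: abs(b - theta))

def run_adaptive_test(pool, answer_fn, n_items):
    """Re-estimate ability after every single response, then choose the next item."""
    responses, used = [], set()
    theta = 0.0  # start at the middle of the scale
    for _ in range(n_items):
        b = next_item(theta, pool, used)
        used.add(b)
        responses.append((b, answer_fn(b)))
        theta = estimate_ability(responses)
    return theta, [b for b, _ in responses]

# Hypothetical pool of 13 items with difficulties from -3.0 to 3.0, and a
# made-up student who answers correctly whenever difficulty is at most 1.0.
pool = [x / 2.0 for x in range(-6, 7)]
theta, administered = run_adaptive_test(pool, lambda b: b <= 1.0, 6)
```

Because the estimate is refreshed after each answer, every item is chosen near the student's current estimated level. A design that adapts only after a block of questions would reuse a stale estimate for the whole block, which is the loss of precision the question warns about.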

4. Does the assessment link to relevant resources to support instruction?

Why it's important: Data linked to views, tools, and instructional resources can help educators answer the critical question, "How do we make these data actionable?"

What to look for: Look for links to instructional resources that support students in learning what they are ready to learn. These resources may include open education resources (OER), full-blown curricula, or resources linked to blended learning models.

5. Do the data inform decision-making at the classroom level?

Why it's important: At the classroom level, ability grouping is one of the key uses for data; this includes differentiating instruction as well as identifying students for programs and resources that will best support their needs.

What to look for: Look at whether the report views and tools empower classroom-level instructional decisions and simplify differentiation and grouping.

6. Can the data inform decision-making at the building and district levels?

Why it's important: Report views that aggregate for a school or multiple sites serve building- and district-level administrators' data needs.

What to look for: Look at the features and capabilities of the reports and tools to maximize the value of the assessment data. The more the assessment data are leveraged, the less time needs to be spent gathering them, which makes your assessment process more efficient.

7. Is the item pool sufficient to support the test design and purpose?

Why it's important: An item pool's sufficiency can be determined by a number of factors, including its development process, maturity, size, depth, and breadth. High-quality items are a critical component of any assessment.

What to look for: Look at the item development, alignment, and review processes. This information should be available in the assessment provider's Technical Manual.

8. Do the assessment data have validity?

Why it's important: Every assessment is designed with a purpose (or purposes) that its data can support. Validity, meaning whether an assessment measures what it intends to measure, ensures that the inferences made from the data are sound.

What to look for: Look for the test design and its intended purpose(s). Understand what content is covered, how the test should be administered and scored, and its standard error of measurement.
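For readers unfamiliar with the standard error of measurement mentioned above, the classical test theory definition is shown here as a rough guide. The symbols and the numbers in the worked line are illustrative assumptions, not figures from any particular assessment, and adaptive tests often report a separate standard error for each student instead.

```latex
% \sigma_X  : standard deviation of observed scores
% \rho_{XX'} : reliability coefficient of the test
\mathrm{SEM} = \sigma_X \sqrt{1 - \rho_{XX'}}

% Illustrative values only: \sigma_X = 15, \rho_{XX'} = 0.91
\mathrm{SEM} = 15 \sqrt{1 - 0.91} = 15 \times 0.3 = 4.5
```

A smaller value means that an individual score is a more precise estimate of the student's achievement, which matters when comparing scores across testing windows.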

9. Does the assessment provider develop norms from its data? How often are the norms updated?

Why it's important: Norms can provide a relevant data point that contextualizes a student's assessment results and helps students and teachers with goal setting. Norms also provide context around student growth and help answer questions such as "How much growth is sufficient?" and "Is the student gaining or losing ground relative to their peers?"

What to look for: Look for the assessment provider's practices around norming, including how often normative studies are conducted, whether the population is nationally representative, and whether status and growth norms are developed.

10. Can the assessment make predictions of student performance on high-stakes summative year-end tests and college benchmarks?

Why it's important: Knowing if students are on track to achieve proficiency on state assessments helps teachers make adjustments in instructional pacing, plan interventions, and provide additional resources.

What to look for: Look for predictive studies that link student scores to proficiency levels for their state assessments or college entrance examinations.

Learn more about NWEA by visiting NWEA.org.

Northwest Evaluation Association (NWEA) has nearly 40 years of experience helping educators move student learning forward through computer-based assessment suites, professional development offerings, and research services.

Partnering to Help All Kids Learn | NWEA.org | 503.624.1951


© Northwest Evaluation Association 2014. All rights reserved. Measures of Academic Progress, MAP, and Partnering to Help All Kids Learn are registered trademarks, and Northwest Evaluation Association and NWEA are trademarks, of Northwest Evaluation Association.
