IOSR Journal of Mathematics (IOSR-JM)

e-ISSN: 2278-5728, p-ISSN: 2319-765X. Volume 11, Issue 3 Ver. I (May - Jun. 2015), PP 69-72

www.iosrjournals.org

Effects of Transformations on Significance of the Pearson T-Test

Omokri, Peter

Department of Mathematics/Statistics, School of Applied Sciences

Delta State Polytechnic Ogwashi-Uku, Delta State

Abstract: Techniques that improve on the existing assumptions of normality and large sample size would be of paramount interest, as non-normality becomes more common with increasing complexity in data gathering techniques. A transformation can serve either of two purposes: to normalize the data, so that the Pearson correlation and its associated t-test are applicable, or to linearize the distribution so as to strengthen the linear relationship that exists between the variables. A simulation study carried out on various transformations and sample sizes showed that the square root transformation fared consistently better than the other transformations, and that n = 80 proved to be the sample size that optimizes the Pearson test.

Keywords: Pearson T-Test, Transformation, Discrete Data, Bivariate Correlation.

I. Introduction

Bivariate data are data in which two variables are measured on each individual. When interest is in measuring the strength of association between the two variables, the correlation coefficient may be used. The most common correlation coefficient, the Pearson product-moment correlation coefficient, measures the strength of the linear relation between variables. The Pearson correlation is applied to interval and ratio data, most importantly when the sample is large or the data are normally distributed. When the data are not normal and the sample size is small, the nonparametric Spearman rank correlation is useful.

Little work has been done for cases in which the sample is relatively small or the distribution of the data is unknown. A test of the significance of Pearson's r may inflate Type I error rates and reduce the power of the test when data are non-normally distributed. Several alternatives to the Pearson correlation are provided in the literature; the relative performance and robustness of these alternatives has, however, been unclear (Bishara & Hittner, 2012).

Comparisons of the Pearson correlation with other approaches to testing the significance of a correlation, such as data transformation approaches and Spearman's rank-order correlation, suggest that the sampling distribution of Pearson's r is insensitive to the effects of non-normality when testing the hypothesis that ρ = 0 (Duncan & Layard, 1973; Zeller & Levine, 1974).

Havlicek and Peterson (1977) extended these studies by examining the effects of non-normality and variations in scales of measurement on the sampling distribution of r, and the accompanying Type I error rates, when testing ρ = 0. Their results indicated the robustness of Pearson's r to non-normality, to non-equal interval measurement, and to the combination of the two.

Edgell and Noon (1984) further expanded these studies by examining a variety of mixed and very non-normal distributions. They found that Pearson's r was robust to nearly all non-normal and mixed-normal conditions when testing ρ = 0 at a nominal alpha of .05. The exceptions occurred with the very small sample size of n = 5, in which Type I error rates were slightly inflated for all distributions. Type I error was also inflated when one or both variables were extremely non-normal, such as with Cauchy distributions (see Zimmerman, Zumbo, & Williams, 2003, for exceptions).

Although nonlinear transformations can in many cases induce normality and enhance statistical power, there are some distribution types for which optimal normalizing transformations are difficult to find.

As non-normality seems to be growing more common with the complexity of data gathering techniques, techniques that improve on the existing assumptions of normality and large sample size would be of paramount interest. A transformation can be used either to normalize the data, so that the Pearson correlation and its associated t-test are applicable, or to linearize the distribution so as to strengthen the linear relationship that exists between the variables. Data transformation essentially entails applying a mathematical function that changes the measurement scale of a variable, here with the aim of optimizing the linear correlation between the data. The function is applied to each point in a data set; that is, each data point y_i is replaced with the transformed value f(y_i), where f is the transformation function.

This study therefore seeks to determine the effect of transformation and sample size on the Pearson t-test.

DOI: 10.9790/5728-11316972


II. Methodology

The study determines the effect of transformations of discrete distributions on the Pearson t-test. The distributions are: binomial, Poisson, geometric, hypergeometric, and negative binomial. The transformations are: inverse, square root, log, arcsine, and Box-Cox. The tests were conducted for sample sizes of 10, 20, 80, and 160. For each distribution and sample size, 20 variables were simulated and paired in twos without replacement, resulting in 20C2 = 190 correlation tests. Each of the five transformations (Box-Cox, inverse, square root, arcsine, and log) was carried out on each data set. After each set of tests, the proportion of significant results (two-tailed test of ρ = 0) was obtained. The statistical tools and techniques used are stated below.
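The simulation design described above can be sketched roughly as follows. This is a minimal illustration, not the author's code: the function name `proportion_significant`, the random seed, and the Poisson rate `lam=4.0` are all assumptions, since the paper does not report the distribution parameters or the software used. It draws 20 independent variables, tests every pair with the two-tailed Pearson t-test, and reports the share of tests with p < 0.05.

```python
import numpy as np
from scipy import stats


def proportion_significant(samples, alpha=0.05):
    """Share of pairwise two-tailed Pearson t-tests with p < alpha.

    samples has shape (n_variables, n_observations); every pair of rows is tested.
    """
    n_variables, n = samples.shape
    r = np.corrcoef(samples)                     # full correlation matrix
    upper = np.triu_indices(n_variables, k=1)    # each unordered pair exactly once
    r_pairs = r[upper]
    # t statistic for H0: rho = 0, with n - 2 degrees of freedom
    t = r_pairs * np.sqrt((n - 2) / (1.0 - r_pairs**2))
    p = 2.0 * stats.t.sf(np.abs(t), df=n - 2)    # two-tailed p-value
    return float(np.mean(p < alpha)), r_pairs.size


rng = np.random.default_rng(seed=0)              # hypothetical seed
samples = rng.poisson(lam=4.0, size=(20, 80))    # ASSUMPTION: Poisson rate 4.0, n = 80
proportion, n_tests = proportion_significant(samples)
print(n_tests, round(proportion, 6))
```

Because the 20 variables are generated independently, the null hypothesis holds for every pair, so the reported proportion is an empirical Type I error rate and should sit near the nominal 0.05.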

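1. Pearson Moment Correlation: The general Pearson moment correlation is given by

$$ r = \frac{\sum (x - \bar{X})(y - \bar{Y})}{\sqrt{\sum (x - \bar{X})^2 \sum (y - \bar{Y})^2}} \quad \text{or} \quad r = \frac{\sum XY - N\bar{X}\bar{Y}}{\sqrt{\left(\sum X^2 - N\bar{X}^2\right)\left(\sum Y^2 - N\bar{Y}^2\right)}} \qquad (1) $$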

2. Pearson t-test: Oyeka (1990) gave the Pearson product-moment correlation test as

$$ t_r = \frac{r\sqrt{n-2}}{\sqrt{1 - r^2}} \qquad (3) $$

where the null hypothesis is H0: ρ = 0. Under H0, t_r follows the Student's t-distribution with (n − 2) degrees of freedom, denoted t(n − 2).
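As a small worked check of the correlation coefficient and its t statistic, the sketch below computes r in both the deviation form and the computational form and then forms t_r with n − 2 degrees of freedom. The five data points are hypothetical values chosen so the arithmetic is easy to follow by hand; they do not come from the study.

```python
import math

import numpy as np
from scipy import stats

# Hypothetical data, chosen only so the sums are easy to verify by hand.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 1.0, 4.0, 3.0, 5.0])
n = x.size

# Deviation form: sum of cross-deviations over the root of the squared deviations.
dx, dy = x - x.mean(), y - y.mean()
r_dev = np.sum(dx * dy) / math.sqrt(np.sum(dx**2) * np.sum(dy**2))

# Computational form: the same quantity written with raw sums.
num = np.sum(x * y) - n * x.mean() * y.mean()
den = math.sqrt((np.sum(x**2) - n * x.mean() ** 2) * (np.sum(y**2) - n * y.mean() ** 2))
r_comp = num / den

# t statistic for H0: rho = 0 and its two-tailed p-value on n - 2 degrees of freedom.
t_r = r_dev * math.sqrt(n - 2) / math.sqrt(1.0 - r_dev**2)
p_value = 2.0 * stats.t.sf(abs(t_r), df=n - 2)

print(r_dev, r_comp, t_r, p_value)   # r = 0.8 in both forms, t_r is about 2.309
```

Both forms give the same r because the computational form is only an algebraic expansion of the deviation form; the t statistic then depends on the data through r and n alone.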

3. Inverse Transformation: The inverse transformation is given by Eze (2002) as

$$ X' = \frac{1}{X} \qquad (5) $$

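4. Box-Cox Transformation: The Box-Cox transformation used, as given by Osborne (2010), is

$$ X' = \begin{cases} \dfrac{x^{\lambda} - 1}{\lambda}, & \text{if } \lambda \neq 0 \\ \log x, & \text{if } \lambda = 0 \end{cases} \qquad (6) $$

where λ is the transformation parameter (mean of data).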

5. Arcsine Transformation: The arcsine transformation effectively stretches the tails of the data. The arcsine transform used is given by Eze (2002):

$$ X' = \sin^{-1} x_i \qquad (7) $$

8. Log Transformation: Eze (2002) gave the log transformation as

$$ X' = \log_{10} x \qquad (9) $$

9. Square Root Transformation: The square root transformation, as given by Eze (2002), is

$$ X' = \sqrt{x} $$
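The transformations listed above might be applied to simulated counts along the following lines. This is an illustrative sketch under stated assumptions, not the study's implementation: the helper name `transform` is hypothetical, the `shift` added before the inverse, log, and Box-Cox transforms is an assumption (the paper does not say how zero counts were handled), and the natural logarithm is assumed for the Box-Cox case λ = 0. The arcsine transform is left out because the source does not state how counts were mapped into the function's domain.

```python
import numpy as np


def transform(counts, kind, lam=0.5, shift=1.0):
    """Apply one of the listed transformations to an array of counts.

    kind is one of "sqrt", "inverse", "log", "boxcox".
    """
    x = np.asarray(counts, dtype=float)
    if kind == "sqrt":
        return np.sqrt(x)                 # defined at zero, so no shift is needed
    # ASSUMPTION: shift counts so that zeros do not break 1/x, log x or x**lam.
    x = x + shift
    if kind == "inverse":
        return 1.0 / x
    if kind == "log":
        return np.log10(x)
    if kind == "boxcox":
        # ASSUMPTION: natural log when lam == 0.
        return np.log(x) if lam == 0 else (x**lam - 1.0) / lam
    raise ValueError(f"unknown transformation: {kind}")


counts = np.array([0, 3, 8])
print(transform(counts, "sqrt"))
print(transform(counts, "inverse"))
print(transform(counts, "log"))
print(transform(counts, "boxcox", lam=0.5))
```

Setting `shift=0.0` recovers the formulas exactly as written, at the cost of undefined values whenever a simulated count is zero.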

III. Discussion Of Findings

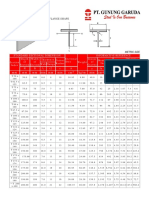

Table 1: Pearson T-Test: Proportion Significant at α = 0.05

| Distribution | Sample size | Raw | Inverse | Square root | Log | Arcsine | Box-Cox |
|---|---|---|---|---|---|---|---|
| Binomial | 10 | 0.021053 | 0.026316 | 0.031579 | 0.031579 | 0.036842 | 0.042105 |
| Binomial | 20 | 0.047368 | 0.052632 | 0.047368 | 0.047368 | 0.052632 | 0.052632 |
| Binomial | 80 | 0.068421 | 0.063158 | 0.068421 | 0.063158 | 0.063158 | 0.047368 |
| Binomial | 160 | 0.052632 | 0.057895 | 0.073684 | 0.052632 | 0.047368 | 0.057895 |
| Poisson | 10 | 0.057895 | 0.057895 | 0.063158 | 0.063158 | 0.063158 | 0.052632 |
| Poisson | 20 | 0.052632 | 0.057895 | 0.052632 | 0.057895 | 0.057895 | 0.063158 |
| Poisson | 80 | 0.073684 | 0.068421 | 0.078947 | 0.073684 | 0.073684 | 0.073684 |
| Poisson | 160 | 0.084211 | 0.068421 | 0.068421 | 0.068421 | 0.068421 | 0.063158 |
| Geometric | 10 | 0.063158 | 0.057895 | 0.057895 | 0.047368 | 0.047368 | 0.057895 |
| Geometric | 20 | 0.042105 | 0.036842 | 0.036842 | 0.036842 | 0.036842 | 0.031579 |
| Geometric | 80 | 0.047368 | 0.068421 | 0.047368 | 0.047368 | 0.047368 | 0.042105 |
| Geometric | 160 | 0.031579 | 0.036842 | 0.052632 | 0.036842 | 0.036842 | 0.031579 |
| Negative binomial | 10 | 0.047368 | 0.073684 | 0.052632 | 0.068421 | 0.068421 | 0.078947 |
| Negative binomial | 20 | 0.042105 | 0.047368 | 0.047368 | 0.047368 | 0.047368 | 0.047368 |
| Negative binomial | 80 | 0.036842 | 0.042105 | 0.042105 | 0.042105 | 0.042105 | 0.047368 |
| Negative binomial | 160 | 0.031579 | 0.026316 | 0.031579 | 0.031579 | 0.031579 | 0.031579 |
| Hypergeometric | 10 | 0.010526 | 0.047368 | 0.021053 | 0.021053 | 0.015789 | 0.036842 |
| Hypergeometric | 20 | 0.042105 | 0.042105 | 0.052632 | 0.057895 | 0.057895 | 0.063158 |
| Hypergeometric | 80 | 0.047368 | 0.063158 | 0.052632 | 0.063158 | 0.063158 | 0.042105 |
| Hypergeometric | 160 | 0.042105 | 0.042105 | 0.042105 | 0.031579 | 0.042105 | 0.026316 |
IV. Interpretation Of Result

Extracting the results for the Pearson test gives a picture of the effects of transformation, distribution, and sample size on the Pearson t-test.

For the binomial distribution, the Box-Cox transformation has the highest proportion, 0.042105, at n = 10, and the raw data the lowest. At n = 20, the inverse, arcsine, and Box-Cox transformations share the highest proportion. At n = 80, the raw data and the square root transformation share the highest proportion (0.068421 in Table 1). The square root transformation gave the highest proportion at n = 160, which is also the highest proportion for the binomial distribution.

For the Poisson distribution at n = 10, the square root, logarithmic, and arcsine transformations have the highest proportion of significance, 0.063158, and the Box-Cox transformation the lowest, 0.052632. At n = 20, the Box-Cox transformation has the highest proportion, while the lowest proportions were observed for the raw data and the square root transformation. At n = 80, the square root transformation has the highest proportion, 0.078947, and the inverse transformation the lowest, 0.068421. The raw data at n = 160 has the highest proportion among all Poisson cases (0.084211 in Table 1).

For the geometric distribution, the highest proportions occur in the raw data at n = 10 and n = 20, in the inverse transformation at n = 80, and in the square root transformation at n = 160. The inverse transformation at n = 80 has the highest proportion for the geometric distribution.

For the negative binomial distribution, the Box-Cox transformation has the highest proportion, 0.078947, at n = 10. At n = 20, all transformations share the same proportion, 0.047368; only the raw data differs. Similarly, at n = 160 the proportions are equal at 0.031579, except for the inverse transformation (0.026316 in Table 1). Hence the Box-Cox transformation at n = 10 has the highest proportion across all sample sizes and transformations.

For the hypergeometric distribution, the inverse transformation has the highest proportion, 0.047368, at n = 10, and the raw data the lowest. At n = 20, the Box-Cox transformation has the highest proportion, 0.063158, and the raw data and the inverse transformation the lowest, 0.042105. The inverse and logarithmic transformations at n = 80 recorded the highest proportion for the hypergeometric distribution.

In summary, the sample size of n = 80 consistently performed better than, or at least equally with, the other sample sizes, and the square root transformation was consistently higher in proportion except in a very few instances. The proportion of significance for the Poisson distribution was generally higher than for the other distributions.

V. Conclusion

In conclusion, the sample size of n = 80 proved to be the sample size that optimizes the Pearson t-test, since the proportion of significance at n = 80 was virtually the highest in all cases, followed by n = 160 and then n = 20. The inverse transformation performed as well as or better than the raw data, except at n = 80. The square root transformation also did consistently better than the raw data and the inverse transformation, except for the geometric distribution at n = 80, the negative binomial at n = 10, and the hypergeometric at n = 10. Further comparison showed that the square root transformation fared consistently better than the raw data and the inverse, logarithmic, arcsine, and Box-Cox transformations, except in a few cases. The logarithmic transformation performed equally with the arcsine transformation, except in a few cases.

VI. Recommendation For Further Study

The study was strictly on bivariate discrete distributions. It is therefore recommended that further research be carried out to determine how these transformations and correlation tests fare with marginal normal distributions (e.g. normal-binomial, normal-Poisson) and marginal non-normal discrete distributions (such as binomial-Poisson, Poisson-geometric), as well as to determine the effect of these transformations on the power of the bivariate correlation test. A better effort should be made to determine the actual value of λ for the Box-Cox transformation. Increasing the number of iterations may also help to break the ties in the observed proportions in this study.
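The point about ties can be made concrete with a rough calculation. With 190 tests per cell, every reported proportion is a multiple of 1/190 (for example, 0.021053 corresponds to 4 significant tests), so neighbouring cells can differ by only one or two tests. The sketch below estimates the sampling error of such a proportion. It treats the 190 tests as independent binomial trials, which is an approximation assumed here for illustration only, since the pairs share variables; the helper name `binomial_se` is hypothetical.

```python
import math


def binomial_se(p, n_tests):
    """Standard error of a proportion from n_tests independent trials."""
    return math.sqrt(p * (1.0 - p) / n_tests)


n_tests = math.comb(20, 2)            # 190 pairwise tests per table cell
step = 1.0 / n_tests                  # smallest possible change in a proportion
se = binomial_se(0.05, n_tests)       # sampling error at the nominal alpha

print(n_tests, round(step, 6), round(se, 6))
print(round(0.021053 * n_tests))      # count behind the smallest binomial cell
```

Under this approximation the standard error is about three grid steps wide, so differences of one or two tests between transformations are well within simulation noise, which is consistent with the recommendation to increase the number of iterations.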

References

[1]. Beversdorf, L. M. and Sa, P. (2011). Tests for Correlation on Bivariate Non-Normal Data. Journal of Modern Applied Statistical Methods, Vol. 10, No. 2, 699-709.

[2]. Bishara, A. J., & Hittner, J. B. (2012). Testing the Significance of a Correlation with Non-Normal Data: Comparison of Pearson, Spearman, Transformation, and Resampling Approaches. Psychological Methods, 17, 399-417. doi:10.1037/a0028087

[3]. Duncan, G. T., & Layard, M. W. J. (1973). A Monte-Carlo study of asymptotically robust tests for correlation coefficients. Biometrika, 60, 551-558.

[4]. Ebuh, G. U. and Oyeka, I. C. A. (2012). A Non Parametric Method for Estimating Partial Correlation Coefficient. J Biom Biostat 3(8). http://dx.doi.org/10.4172/2155-6180.1000156

[5]. Edgell, S., & Noon, S. (1984). Effect of violation of normality on the t test of the correlation coefficient. Psychological Bulletin, 95(3), 576-583.

[6]. Eze, F. C. (2002). An Introduction to Analysis of Variance. Lano Publishers, 51 Obiagu Road, Enugu.

[7]. Hayes, A. (1996). Permutation Test is not Distribution-Free: Testing H0: ρ = 0. Psychological Methods, 1(2), 184-198.

[8]. Havlicek, L., & Peterson, N. (1977). Effect of the violation of assumptions upon significance levels of the Pearson r. Psychological Bulletin, 84(2), 373-377.

[9]. Osborne, J. O. (2010). Improving your data transformations: Applying the Box-Cox transformation. Practical Assessment, Research & Evaluation, Vol. 15, No. 12, Page 4.

[10]. Oyeka, I. C. A. (2009). An Introduction to Applied Statistical Methods. Nobern Avocation Publishing Company, Enugu.

[11]. Rasmussen, J., & Dunlap, W. (1991). Dealing with nonnormal data: Parametric analysis of transformed data vs. nonparametric analysis. Educational and Psychological Measurement, 51(4), 809-820.

[12]. Zeller, R. A., & Levine, Z. H. (1974). The effects of violating the normality assumption underlying r. Sociological Methods & Research, 2, 511-519.

[13]. Zimmerman, D. W., Zumbo, B. D. and Williams, R. H. (2003). Bias in Estimation and Hypothesis Testing of Correlation. Psicológica, 24, 133-158.
