POLITEHNICA UNIVERSITY OF TIMIȘOARA, MASTER OF SOFTWARE ENGINEERING

Pattern Recognition

Classification Methods and Case Study

Marcel Gheorghi

2/5/2010

Contents

1 Introduction
2 Neural Networks
3 Classification Methods
4 Case Study
    Data Exploration
    Preprocessing
    Results
5 Conclusions
References

1 Introduction

The overall goal of this project is to develop classifier models that predict whether a person (defined as an anonymous instance) has an annual income of at most fifty thousand dollars or greater than fifty thousand dollars. The data set used was extracted from a 1994 U.S. census database by Barry Becker using the following conditions: ((AAGE>16) && (AGI>100) && (AFNLWGT>1) && (HRSWK>0)), resulting in 48,842 reasonably clean observations [BEC96].
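The extraction rule above is a plain row filter. As a minimal sketch in R (the language of the preprocessing snippet used later in this study), it could be applied to a data frame as follows. The toy rows and the helper name keep_becker are hypothetical; only the four conditions come from [BEC96].

```r
# Hypothetical raw census rows; the column names follow Becker's extraction rule
raw <- data.frame(
  AAGE    = c(15, 25, 40, 67, 30),
  AGI     = c(500, 80, 20000, 15000, 4000),
  AFNLWGT = c(1200, 3000, 0.5, 2500, 1800),
  HRSWK   = c(10, 40, 45, 0, 38)
)

# Keep only the rows that satisfy all four conditions of the rule
keep_becker <- function(d) {
  d[d$AAGE > 16 & d$AGI > 100 & d$AFNLWGT > 1 & d$HRSWK > 0, , drop = FALSE]
}

clean <- keep_becker(raw)
print(nrow(clean))  # 1: each of the first four rows violates exactly one condition
```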

For this project, the Weka (Waikato Environment for Knowledge Analysis) data mining toolkit was used. This toolkit, written in Java at the University of Waikato, provides a considerable library of algorithms and models for classifying and generalizing data [HAR07]. Of these algorithms, naïve Bayes, SMO and J48 were used to develop models based on 80% of the data, with the remaining 20% used for validation.
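The 80/20 split can be illustrated with a short R sketch. It is not Weka's own code (Weka performs the percentage split internally), and the function name split_80_20 and the seed are hypothetical. Applied to the 48,842 records, rounding 80% gives 39,074 training rows and leaves 9,768 for validation, which is the instance count reported by every Weka classifier in the Results section.

```r
# Randomly split row indices 1..n into 80% training and 20% validation
split_80_20 <- function(n, seed = 1) {
  set.seed(seed)                        # reproducible shuffle
  n_train <- round(0.8 * n)
  train_idx <- sample.int(n, n_train)   # drawn without replacement
  list(train = sort(train_idx),
       test  = setdiff(seq_len(n), train_idx))
}

parts <- split_80_20(48842)
print(c(train = length(parts$train), test = length(parts$test)))
# train 39074, test 9768
```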

In addition, NeuroShell2 was used to train and test neural networks based on various algorithms. This required additional data preparation, as described later in the case study.

2 Neural Networks

A neural network (NN), or "artificial neural network" (ANN), is a mathematical

model or computational model that tries to simulate the structure and/or functional

aspects of biological neural networks. It consists of an interconnected group

of artificial neurons and processes information using a connectionist approach

to computation. In most cases an ANN is an adaptive system that changes its

structure based on external or internal information that flows through the network

during the learning phase. Neural networks are non-linear statistical data

modeling tools. They can be used to model complex relationships between inputs and

outputs or to find patterns in data [WIK01].

Many neural network architectures have been developed to better suit different situations. The following paragraphs present two of them, since they were used in the case study.

A simple recurrent network (SRN) is a variation on the multi-layer perceptron, sometimes called an "Elman network" after its inventor, Jeff Elman. A three-layer network is used, with the addition of a set of "context units" in the input layer. There are connections from the middle (hidden) layer to these context units, fixed with a weight of one. At each time step, the input is propagated in a standard feed-forward fashion, and then a learning rule (usually backpropagation) is applied. The fixed back connections result in the context units always maintaining a copy of the previous values of the hidden units (since they propagate over the connections before the learning rule is applied). Thus the network can maintain a sort of state, allowing it to perform tasks such as sequence prediction that are beyond the power of a standard multi-layer perceptron.
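To make the role of the context units concrete, here is a minimal R sketch of the forward pass only (no learning rule), assuming logistic activations and random hypothetical weights; it is not NeuroShell2's implementation. The key line is the last one in elman_step: the new context is simply a copy of the hidden activations, which is what the fixed weight-one connections achieve.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# One forward step of a simple recurrent (Elman) network
elman_step <- function(x, context, W_in, W_ctx, W_out) {
  # the context units act as extra inputs to the hidden layer
  hidden <- sigmoid(W_in %*% x + W_ctx %*% context)
  output <- sigmoid(W_out %*% hidden)
  # fixed weight-one back connections: the new context is a copy of the hidden state
  list(output = output, context = hidden)
}

set.seed(42)
n_in <- 3; n_hid <- 4; n_out <- 1
W_in  <- matrix(rnorm(n_hid * n_in),  n_hid, n_in)    # hypothetical weights
W_ctx <- matrix(rnorm(n_hid * n_hid), n_hid, n_hid)
W_out <- matrix(rnorm(n_out * n_hid), n_out, n_hid)

context  <- matrix(0, n_hid, 1)                        # context starts at zero
sequence <- list(c(1, 0, 0), c(0, 1, 0), c(1, 0, 0))
outputs  <- numeric(0)
for (x in sequence) {
  step    <- elman_step(matrix(x, ncol = 1), context, W_in, W_ctx, W_out)
  context <- step$context
  outputs <- c(outputs, step$output)
}
print(outputs)  # steps 1 and 3 see the same input but a different context
```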

In a fully recurrent network, every neuron receives inputs from every other neuron in the network. These networks are not arranged in layers. Usually only a subset of the neurons receives external inputs in addition to the inputs from all the other neurons, and another, disjoint subset of neurons reports its output externally as well as sending it to all the other neurons. These distinctive inputs and outputs perform the function of the input and output layers of a feed-forward or simple recurrent network, and also join all the other neurons in the recurrent processing [WIK01].
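A fully recurrent network can be sketched in the same way. In this hypothetical R example, the weight matrix W connects every neuron to every other neuron (its diagonal is zeroed, so there are no self-connections), only neurons 1 and 2 receive the external input, and the disjoint subset 4 and 5 is read out. The tanh activation and the synchronous update are assumptions made for illustration.

```r
# One synchronous update of a fully recurrent network
fully_recurrent_step <- function(state, ext_input, W, V, input_ids) {
  drive <- W %*% state                                     # signals from all other neurons
  drive[input_ids] <- drive[input_ids] + V %*% ext_input   # external input reaches a subset only
  tanh(drive)
}

set.seed(7)
n <- 5
W <- matrix(rnorm(n * n, sd = 0.5), n, n)   # hypothetical weights
diag(W) <- 0                                 # each neuron feeds every other neuron, not itself
input_ids  <- 1:2                            # neurons that receive external input
output_ids <- 4:5                            # disjoint subset reported externally
V <- matrix(rnorm(length(input_ids)), length(input_ids), 1)

state <- matrix(0, n, 1)
for (i in 1:3) {
  state <- fully_recurrent_step(state, matrix(1), W, V, input_ids)
}
print(state[output_ids])
```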

Backpropagation, or propagation of error, is a common method of teaching artificial neural networks how to perform a given task. It was first described by Arthur E. Bryson and Yu-Chi Ho in 1969, but it was not until 1986, through the work of David E. Rumelhart, Geoffrey E. Hinton and Ronald J. Williams, that it gained recognition, and it led to a renaissance in the field of artificial neural network research [WIK05].

Backpropagation architecture with standard connections is the standard type of backpropagation network, in which every layer is connected or linked to the immediately previous layer. NeuroShell2 gives the option of using a three-, four- or five-layer network.

Through experience and literature reviews, it has been found that the three-layer backpropagation network with standard connections is suitable for almost all problems if enough hidden neurons are used. When more than one hidden layer (the layers between the input and output layers) is used, training time may increase by as much as an order of magnitude [NEU**].
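A three-layer backpropagation network with standard connections can be sketched in a few lines of R. This is plain batch gradient descent on the mean squared error with logistic units, trained on the XOR problem as a hypothetical toy task; it does not reproduce NeuroShell2's learning rate, momentum or calibration settings.

```r
sigmoid <- function(z) 1 / (1 + exp(-z))

# Three-layer network (input -> hidden -> output); each layer is linked only to the previous one
train_backprop <- function(X, y, n_hidden = 4, lr = 0.5, epochs = 2000, seed = 1) {
  set.seed(seed)
  W1 <- matrix(rnorm(ncol(X) * n_hidden, sd = 0.5), ncol(X), n_hidden)
  b1 <- rep(0, n_hidden)
  W2 <- matrix(rnorm(n_hidden, sd = 0.5), n_hidden, 1)
  b2 <- 0
  mse <- numeric(epochs)
  for (e in seq_len(epochs)) {
    # forward pass
    H   <- sigmoid(sweep(X %*% W1, 2, b1, "+"))   # hidden activations, n x n_hidden
    out <- sigmoid(H %*% W2 + b2)                  # network output, n x 1
    err <- out - y
    mse[e] <- mean(err^2)
    # backward pass: propagate the error derivative from the output layer to the hidden layer
    d_out <- err * out * (1 - out)
    d_hid <- (d_out %*% t(W2)) * H * (1 - H)
    # batch gradient-descent updates
    W2 <- W2 - lr * t(H) %*% d_out / nrow(X)
    b2 <- b2 - lr * mean(d_out)
    W1 <- W1 - lr * t(X) %*% d_hid / nrow(X)
    b1 <- b1 - lr * colMeans(d_hid)
  }
  list(W1 = W1, b1 = b1, W2 = W2, b2 = b2, mse = mse)
}

X <- matrix(c(0, 0,  0, 1,  1, 0,  1, 1), ncol = 2, byrow = TRUE)
y <- c(0, 1, 1, 0)   # XOR, a toy task that needs the hidden layer
fit <- train_backprop(X, y)
print(c(first_epoch = fit$mse[1], last_epoch = fit$mse[2000]))
```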

3 Classification Methods

In the case study, three classification methods were used: naïve Bayes, SMO (SVM) and J48 (decision tree). They are described in this section.

Support vector machines (SVMs) are a set of related supervised learning methods

used for classification and regression. In simple words, given a set of training

examples, each marked as belonging to one of two categories, an SVM training

algorithm builds a model that predicts whether a new example falls into one category

or the other. Intuitively, an SVM model is a representation of the examples as points in

space, mapped so that the examples of the separate categories are divided by a clear

gap that is as wide as possible. New examples are then mapped into that same space

and predicted to belong to a category based on which side of the gap they fall on.

More formally, a support vector machine constructs a hyperplane or set of hyperplanes in a high- or infinite-dimensional space, which can be used for classification, regression or other tasks. Intuitively, a good separation is achieved by the hyperplane that has the largest distance to the nearest training data points of any class (the so-called geometric margin), since in general the larger the margin, the lower the generalization error of the classifier [WIK02].
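The margin idea can be checked numerically. The R sketch below does not train an SVM (Weka's SMO solves the full optimization problem); it only computes the geometric margin, the smallest distance from any training point to a given hyperplane w·x + b = 0, for two hypothetical separators of a toy data set. Both separate the classes, but the one placed midway between them has the larger margin.

```r
# Smallest distance from any labelled point to the hyperplane w.x + b = 0
# (labels in {-1, +1}); negative if some point lies on the wrong side
geometric_margin <- function(X, y, w, b) {
  min(y * (X %*% w + b)) / sqrt(sum(w^2))
}

# Predicted class: the side of the hyperplane a point falls on
predict_side <- function(X, w, b) as.vector(sign(X %*% w + b))

X <- matrix(c(1, 1,
              2, 2,
              4, 4,
              5, 5), ncol = 2, byrow = TRUE)
y <- c(-1, -1, 1, 1)

m1 <- geometric_margin(X, y, w = c(1, 1), b = -6)  # midway between (2,2) and (4,4)
m2 <- geometric_margin(X, y, w = c(1, 1), b = -5)  # shifted towards the negative class
print(c(midway = m1, shifted = m2))                # 1.414 vs 0.707

print(predict_side(matrix(c(3.5, 3.5), nrow = 1), c(1, 1), -6))  # 1
```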

A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem (from Bayesian statistics) with strong (naive) independence assumptions. A more descriptive term for the underlying probability model would be "independent feature model".

In simple terms, a naive Bayes classifier assumes that the presence (or absence) of a particular feature of a class is unrelated to the presence (or absence) of any other feature. For example, a fruit may be considered to be an apple if it is red, round, and about 4 inches in diameter. Even if these features depend on each other, a naive Bayes classifier considers all of these properties to contribute independently to the probability that this fruit is an apple.

Depending on the precise nature of the probability model, naive Bayes classifiers can

be trained very efficiently in a supervised learning setting. In many practical

applications, parameter estimation for naive Bayes models uses the method

of maximum likelihood; in other words, one can work with the naive Bayes model

without believing in Bayesian probability or using any Bayesian methods.

An advantage of the naive Bayes classifier is that it requires a small amount of

training data to estimate the parameters (means and variances of the variables)

necessary for classification. Because independent variables are assumed, only the

variances of the variables for each class need to be determined and not the entire

covariance matrix. [WIK03]
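The parameter estimation described above can be sketched as a Gaussian naive Bayes classifier in R: for each class, a prior plus one mean and one variance per numeric feature, with no covariance matrix. The data are hypothetical toy values. Weka's NaiveBayes likewise models numeric attributes with a normal distribution by default and uses a kernel density estimate when useKernelEstimator is enabled, but this code is only an illustration, not Weka's implementation.

```r
# Gaussian naive Bayes: per class, a prior plus one mean and one variance per feature
fit_gnb <- function(X, y) {
  classes <- sort(unique(y))
  model <- lapply(classes, function(k) {
    Xk <- X[y == k, , drop = FALSE]
    list(prior = nrow(Xk) / nrow(X),
         mean  = colMeans(Xk),
         var   = apply(Xk, 2, var))   # variances only, no covariance matrix
  })
  names(model) <- classes
  model
}

# Pick the class with the largest log P(class) + sum_j log N(x_j | mean_jk, var_jk)
predict_gnb <- function(model, x) {
  scores <- sapply(model, function(p) {
    log(p$prior) + sum(dnorm(x, mean = p$mean, sd = sqrt(p$var), log = TRUE))
  })
  names(which.max(scores))
}

# Hypothetical toy data: two numeric features, two income classes
X <- cbind(age   = c(22, 25, 30, 28, 45, 50, 48, 52),
           hours = c(20, 30, 40, 35, 50, 55, 45, 60))
y <- rep(c("<=50K", ">50K"), each = 4)

model <- fit_gnb(X, y)
print(predict_gnb(model, c(24, 25)))  # "<=50K"
print(predict_gnb(model, c(49, 52)))  # ">50K"
```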

J48 is an open-source Java implementation of the C4.5 algorithm in the Weka data mining tool. C4.5, developed by Ross Quinlan, is an algorithm used to generate decision trees; it is an extension of Quinlan's earlier ID3 algorithm. The decision trees generated by C4.5 can be used for classification, and for this reason C4.5 is often referred to as a statistical classifier.

C4.5 builds decision trees from a set of training data in the same way as ID3, using the concept of information entropy. The training data is a set S = {s_1, s_2, ...} of already classified samples. Each sample s_i = (x_1, x_2, ...) is a vector, where x_1, x_2, ... represent attributes or features of the sample. The training data is augmented with a vector C = (c_1, c_2, ...), where c_i is the class to which sample s_i belongs.

At each node of the tree, C4.5 chooses the attribute of the data that most effectively splits its set of samples into subsets enriched in one class or the other. Its criterion is the normalized information gain (difference in entropy) that results from choosing an attribute for splitting the data. The attribute with the highest normalized information gain is chosen to make the decision. The C4.5 algorithm then recurses on the smaller sublists.
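The split criterion can be sketched in R for a nominal attribute. Here the normalized information gain is computed as C4.5's gain ratio, the information gain divided by the entropy of the split itself; the labels and attributes are hypothetical. The second call shows why the normalization matters: an attribute with a distinct value for every sample reaches the same gain as a genuinely informative attribute, but only half the gain ratio.

```r
# Entropy (in bits) of a vector of class labels
entropy <- function(labels) {
  p <- as.numeric(table(labels)) / length(labels)
  -sum(p * log2(p))
}

# Information gain and C4.5's gain ratio for splitting on a nominal attribute
gain_ratio <- function(attribute, labels) {
  counts  <- table(attribute)
  weights <- as.numeric(counts) / length(attribute)   # share of samples per value
  values  <- names(counts)
  cond_entropy <- sum(weights * sapply(values, function(v) entropy(labels[attribute == v])))
  gain <- entropy(labels) - cond_entropy
  split_info <- -sum(weights * log2(weights))  # entropy of the split (0 if only one value)
  c(gain = gain, gain_ratio = gain / split_info)
}

labels <- c("yes", "yes", "no", "no")
print(gain_ratio(c("a", "a", "b", "b"), labels))  # gain 1, gain_ratio 1
print(gain_ratio(c("a", "b", "c", "d"), labels))  # gain 1, gain_ratio 0.5
```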

This algorithm has a few base cases:

- All the samples in the list belong to the same class. When this happens, it simply creates a leaf node for the decision tree saying to choose that class.
- None of the features provides any information gain. In this case, C4.5 creates a decision node higher up the tree using the expected value of the class.
- An instance of a previously unseen class is encountered. Again, C4.5 creates a decision node higher up the tree using the expected value [WIK04].

4 Case Study

Data Exploration

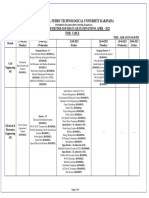

Figure 1 summarizes the content of the data set, which consists mostly of nominal attributes.

Figure 1: Survey Data [WIL**]

- The training file contains a total of 32,561 instances [HAR07].
- There are 15 attributes.
- Two of the attributes mirror each other: Education and Education-Num, where Education-Num is a numeric representation of Education.
- There is an odd-looking fnlwgt attribute, which contains numeric values. Following research on this attribute [SHO07], it appears to have no relation to the income of each instance; it therefore has no predictive power and can be ignored.
- There is a mix of numeric and nominal attributes.
- Some of the data contains missing values, marked by "?".
- Missing values appear only in nominal attributes (workclass, occupation and native-country).
- Using Weka visualization tools, particularly scatter plots, the analyzed data did not show strong class separation.
- There did not appear to be any spelling mistakes that would cause an instance to be incorrectly classified.
- Some attributes appear to have an imbalanced distribution of values:
    o Age, education, capital-gain and capital-loss are heavily skewed towards lower values.
    o Capital-gain and capital-loss are dominated by 0 values, with few occurrences of other values. (Data preprocessing may wish to discretize these values into two bins: 0, and 1 or more.)
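Two of the checks above, counting the "?" markers per attribute and measuring how often capital-gain is zero, can be sketched in R as follows. The rows are hypothetical; in practice the data frame would be read from the census file. The last step applies the suggested two-bin discretization (0, and 1 or more).

```r
# Hypothetical rows in the raw census format, where "?" marks a missing value
raw <- data.frame(
  workclass    = c("Private", "?", "Self-emp-not-inc", "Private"),
  occupation   = c("Sales", "?", "Exec-managerial", "?"),
  capital_gain = c(0, 0, 15024, 0),
  stringsAsFactors = FALSE
)

# Number of "?" entries in each attribute
missing_per_column <- colSums(raw == "?")
print(missing_per_column)

# Share of zero values in capital-gain
zero_share <- mean(raw$capital_gain == 0)
print(zero_share)  # 0.75

# Two-bin discretization: 0 stays 0, any positive gain becomes 1
gain_bin <- as.integer(raw$capital_gain > 0)
print(gain_bin)  # 0 0 1 0
```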

Preprocessing

For NeuroShell2, I removed all the observations with missing values, which totaled about 6% of the data set. The missing values occurred only in nominal attributes, and given the large data set, removing them does not influence the study significantly (especially since I merged the data set with the available test data from the same source, for a combined total of 48,842 entries). In addition, I mapped all the nominal values to numerical ones. For example, for an attribute with four different nominal values, each value was assigned an integer starting from 0, so the attribute's set of values became {0, 1, 2, 3}.
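The two NeuroShell2 preparation steps, dropping observations that contain a missing value and replacing nominal values with integers starting from 0, might look like this in R. The rows and the helper name to_codes are hypothetical, and the alphabetical ordering of the codes produced by factor() is an assumption; the study does not state which order was used.

```r
# Hypothetical rows; "?" marks a missing value
survey <- data.frame(
  workclass = c("Private", "State-gov", "?", "Private", "Self-emp-inc"),
  age       = c(39, 50, 38, 53, 28),
  stringsAsFactors = FALSE
)

# Drop every observation that has a "?" in any attribute
complete <- survey[rowSums(survey == "?") == 0, , drop = FALSE]

# Map each nominal value to an integer code starting from 0 (alphabetical level order)
to_codes <- function(x) as.integer(factor(x)) - 1L
complete$workclass <- to_codes(complete$workclass)
print(complete$workclass)  # 0 2 0 1
```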

Also for NeuroShell2, I discretized the values of capital gain and capital loss into three values: 0 for no gain or loss, 1 for low gain or loss, and 2 for high gain or loss. A gain higher than the mean value of 1079 was considered a high gain, and a loss higher than the mean value of 87 was considered a high loss. In addition, I partitioned the age and hours-per-week attributes, as follows:

# Bin age into four ordered groups
survey$Age <- ordered(cut(survey$Age, c(15, 25, 45, 65, 100)),
                      labels = c("Young", "Middle-aged", "Senior", "Old"))

# Bin weekly working hours into four ordered groups
survey$Hours.Per.Week <- ordered(cut(survey$Hours.Per.Week, c(0, 25, 40, 60, 168)),
                                 labels = c("Part-time", "Full-time", "Over-time", "Workaholic"))
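The three-level discretization of capital gain and capital loss described above could be written as a small R helper. The function name discretize3 is hypothetical; the thresholds are the means quoted in the text (1079 for gain, 87 for loss), and a value exactly equal to the mean is treated as low, since the text only says that a value higher than the mean is high.

```r
# 0 = no gain/loss, 1 = low (positive, up to the threshold), 2 = high (above the threshold)
discretize3 <- function(x, threshold) {
  ifelse(x == 0, 0L, ifelse(x > threshold, 2L, 1L))
}

capital_gain <- c(0, 500, 1079, 5000, 0)
print(discretize3(capital_gain, threshold = 1079))  # 0 1 1 2 0

capital_loss <- c(0, 50, 1902, 0)
print(discretize3(capital_loss, threshold = 87))    # 0 1 2 0
```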

For the experiments using Weka, I left the data set unchanged, with the nominal values in place.

For all classifiers, I ignored the redundant Education-Num attribute and the irrelevant fnlwgt attribute, as suggested by the cited papers.

Results

Jordan-Elman net: Recurrent Net with Hidden Layer Feedback selected

o Default settings in NeuroShell2
o Calibration of 50
o Min. average error: 0.0887021
o Root MSE: 0.3714

Backpropagation with standard connections (three-layer)

o Default settings in NeuroShell2
o Calibration of 50
o Min. average error: 0.886637
o Root MSE: 0.3674

Naïve Bayes

With default settings in Weka, the results summary is as follows:

Correctly Classified Instances        8081      82.7293 %
Incorrectly Classified Instances      1687      17.2707 %
Kappa statistic                       0.4524
Mean absolute error                   0.1787
Root mean squared error               0.3732
Relative absolute error              49.2119  %
Root relative squared error          87.7545  %
Total Number of Instances             9768

=== Detailed Accuracy By Class ===

               TP Rate  FP Rate  Precision  Recall  F-Measure  ROC Area  Class
               0.944    0.55     0.847      0.944   0.893      0.89      <=50K
               0.45     0.056    0.716      0.45    0.553      0.89      >50K
Weighted Avg.  0.827    0.433    0.816      0.827   0.812      0.89

=== Confusion Matrix ===

    a     b   <-- classified as
 7039   414 |  a = <=50K
 1273  1042 |  b = >50K
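The summary statistics above follow directly from the confusion matrix. As a cross-check, this R sketch recomputes the accuracy, Cohen's kappa, and the per-class precision, recall (TP rate) and F-measure from the naïve Bayes confusion matrix; the results agree with the Weka output to the printed precision (for example 0.8273 and 0.4524).

```r
# Naive Bayes confusion matrix from above (rows = actual class, columns = predicted class)
cm <- matrix(c(7039,  414,
               1273, 1042),
             nrow = 2, byrow = TRUE,
             dimnames = list(actual    = c("<=50K", ">50K"),
                             predicted = c("<=50K", ">50K")))

n <- sum(cm)
accuracy <- sum(diag(cm)) / n

# Cohen's kappa: observed agreement corrected for the agreement expected by chance
p_expected <- sum(rowSums(cm) * colSums(cm)) / n^2
kappa_stat <- (accuracy - p_expected) / (1 - p_expected)

precision <- diag(cm) / colSums(cm)
recall    <- diag(cm) / rowSums(cm)   # identical to the TP rate
f_measure <- 2 * precision * recall / (precision + recall)

print(round(c(accuracy = accuracy, kappa = kappa_stat), 4))  # 0.8273 0.4524
print(round(rbind(precision, recall, f_measure), 3))
```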

Setting useKernelEstimator to true yields better results:

Correctly Classified Instances        8339      85.3706 %
Incorrectly Classified Instances      1429      14.6294 %
Kappa statistic                       0.5735
Mean absolute error                   0.1666
Root mean squared error               0.3283
Relative absolute error              45.873   %
Root relative squared error          77.19    %
Total Number of Instances             9768

Decision Tree J48

With default settings, the summary looks as follows:

Correctly Classified Instances        8411      86.1077 %
Incorrectly Classified Instances      1357      13.8923 %
Kappa statistic                       0.5822
Mean absolute error                   0.2021
Root mean squared error               0.3214
Relative absolute error              55.6491  %
Root relative squared error          75.5758  %
Total Number of Instances             9768

=== Detailed Accuracy By Class ===

               TP Rate  FP Rate  Precision  Recall  F-Measure  ROC Area  Class
               0.945    0.41     0.881      0.945   0.912      0.878     <=50K
               0.59     0.055    0.77       0.59    0.668      0.878     >50K
Weighted Avg.  0.861    0.326    0.855      0.861   0.854      0.878

=== Confusion Matrix ===

    a     b   <-- classified as
 7045   408 |  a = <=50K
  949  1366 |  b = >50K

Tweaking the parameters gave worse results:

o 84.5106 % with unpruned set to true
o 85.7494 % with reducedErrorPruning set to true

SMO

Changing the complexity parameter did not yield better results than the default value of 1.

With default settings in Weka, the summary looks as follows:

Correctly Classified Instances        8295      84.9293 %
Incorrectly Classified Instances      1473      15.0707 %
Kappa statistic                       0.4524
Mean absolute error                   0.1787
Root mean squared error               0.3302
Relative absolute error              49.2119  %
Root relative squared error          87.7545  %
Total Number of Instances             9768

5 Conclusions

After building and testing all the models, the J48 decision tree algorithm yielded the best results, with an accuracy of 86.1%, approached only by naïve Bayes at 85.37% with the kernel estimator turned on. The weakest results were given by the two neural networks built with NeuroShell2, of which the three-layer backpropagation network with standard connections performed better. However, to be fair, these last two were trained on a slightly different data set, as described in the Preprocessing section.

References

[BEC96] Census Income Data Set, Ronny Kohavi and Barry Becker, 1996, http://archive.ics.uci.edu/ml/datasets/Census+Income

[HAR07] Mining Information from US Census Bureau Data, Sebastian Harvey, 2007, http://research.omegasoft.co.uk/publications/Mining%20Information%20from%20US%20Census%20Bureau%20Data.pdf

[NEU**] Backpropagation Architecture Standard Connections, NeuroShell2 Help, http://www.wardsystems.com/manuals/neuroshell2/index.html?probackproparchstandard.htm

[SHO07] Chris Shoemaker, 10 March 2007, www.cs.wpi.edu/~cs4341/C00/Projects/fnlwgt/

[WIL**] Survey Data, Graham Williams, http://www.togaware.com/datamining/survivor/Survey_Data.html

[WIK01] Artificial Neural Networks, Wikipedia, http://en.wikipedia.org/wiki/Artificial_neural_network

[WIK02] Support Vector Machine, Wikipedia, http://en.wikipedia.org/wiki/Support_vector_machine

[WIK03] Naïve Bayes Classifier, Wikipedia, http://en.wikipedia.org/wiki/Naive_Bayes_classifier

[WIK04] C4.5 Algorithm, Wikipedia, http://en.wikipedia.org/wiki/C4.5_algorithm

[WIK05] Backpropagation, Wikipedia, http://en.wikipedia.org/wiki/Backpropagation
