PSO Algorithm with Self Tuned Parameter for Efficient Routing in VLSI Design

Sudipta Ghosh
Dept. of Electronics & Communication Engineering
Meghnad Saha Institute of Technology
Kolkata, India
sudipta.ghosh123@gmail.com

Subhrapratim Nath
Dept. of Computer Science & Engineering
Meghnad Saha Institute of Technology
Kolkata, India
suvro.n@gmail.com

Subir Kumar Sarkar
Dept. of Electronics & Telecommunication Engineering
Jadavpur University
Kolkata, India
su_sircir@yahoo.co.in

Abstract: With the rapid advancement of VLSI technology, device sizes have been scaled down to a large extent. Consequently, minimizing interconnect length, which is a part of VLSI physical layer design, has become a challenging area of research. VLSI routing is broadly classified into two categories: global routing and detailed routing. The Rectilinear Steiner Minimal Tree (RSMT) problem is one of the fundamental problems in global routing. The introduction of meta-heuristic algorithms, such as particle swarm optimization (PSO), for solving the RSMT problem in global routing has achieved considerable success in wire length minimization in VLSI technology. In this paper, we propose a modified version of the PSO algorithm that exhibits better performance in optimizing the RSMT problem in VLSI global routing. The modification controls the acceleration coefficients of the PSO algorithm through a self-tuned mechanism, alongside the usual optimization variables, resulting in a high convergence rate in finding the best solution in the search space.

Keywords: Global routing, RSMT, Meta-heuristic, PSO.

I. INTRODUCTION

Advancement in IC process technology in the nanometer regime has led to the fabrication of billions of transistors on a single chip. The number of transistors per die will continue to grow drastically in the near future, which increases complexity and thereby imposes enormous challenges on VLSI physical layer design, especially on routing. To handle this complexity, global routing followed by detailed routing is adopted. The primary objective of global routing, wire length reduction, is becoming crucial in modern chip design. Minimizing the length of interconnects in VLSI physical layer design requires addressing the Rectilinear Steiner Minimal Tree (RSMT) problem [1]. To solve this NP-complete problem, meta-heuristic algorithms [2] such as particle swarm optimization (PSO) are adopted. PSO is a robust optimization technique introduced in 1995 by Eberhart and Kennedy [3]. The PSO approach was first applied to VLSI routing by Dong et al. in 2009. Various improvements over the original PSO algorithm have been made to make it more efficient. The introduction of a linearly decreasing inertia weight by Shi and Eberhart [4] increases the convergence rate of the algorithm. A self-adaptive inertia weight function [5] enhances the convergence rate of the PSO algorithm for multi-dimensional problems. This paper proposes a further improvement of the existing PSO algorithm that modifies its acceleration coefficients, yielding more accurate results and a better balance of exploration and exploitation in the search space. This motivates us to apply the algorithm to the RSMT problem for minimization of interconnect length in VLSI routing optimization. The paper is organized as follows. Section II gives a brief outline of the PSO algorithm. Section III describes the proposed PSO algorithm in steps, followed by experiments and results in Section IV. Finally, Section V concludes the paper.

II. BASIC PSO ALGORITHM

PSO is a kind of evolutionary computation technique. More specifically, it is a meta-heuristic algorithm derived from the collective intelligence exhibited by swarms of insects, schools of fish, flocks of birds, etc., and is used to solve many kinds of optimization problems. The basic PSO model [3] consists of a swarm S containing n particles (S = 1, 2, 3, ..., n) in a D-dimensional solution space. Each particle has a position vector $X_i$ and a velocity vector $V_i$, both of dimension D, given by

$$X_i = (x_{i1}, x_{i2}, \ldots, x_{iD}), \qquad V_i = (v_{i1}, v_{i2}, \ldots, v_{iD})$$

The index i stands for the i-th particle. Position $X_i$ represents a possible solution to the optimization problem. Velocity vector $V_i$ represents the rate of change of position of the i-th particle in the next iteration. A particle updates its velocity and position through equations (1) and (2):

$$V_{t+1} = V_t + c_1 r_1 (p_i - X_t) + c_2 r_2 (p_g - X_t) \tag{1}$$

$$X_{t+1} = X_t + V_{t+1} \tag{2}$$

Here the constants $c_1$ and $c_2$ determine the influence of the individual particle's own knowledge ($c_1$) and that of the group ($c_2$); both are usually initialized to 2. The variables $r_1$ and $r_2$ are uniformly distributed random numbers bounded by an upper limit, $r_{\max}$, which is a parameter of the algorithm [6]. $p_i$ and $p_g$ are the particle's previous best position and the group's previous best position, respectively. $X_t$ is the current position in the dimension considered. The particles are thus directed towards the previously known best points in the search space.

To balance movement towards the local best against movement towards the global best, i.e. exploration against exploitation, and so generate acceptable solutions along the path of the particles, Shi and Eberhart introduced the inertia weight $w$ [4] into equation (1), giving equation (3):

$$V_{t+1} = w V_t + c_1 r_1 (p_i - X_t) + c_2 r_2 (p_g - X_t) \tag{3}$$
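As an illustration, the update rules of equations (3) and (2) can be sketched in Python as below. This is a minimal sketch under our own assumptions, not the implementation used in the experiments: the function name `pso_step` and its argument names are hypothetical, the random numbers are drawn independently per dimension, and the upper limit of the random numbers is assumed to be 1.

```python
import random


def pso_step(position, velocity, pbest, gbest, w, c1, c2, rng=random):
    """Apply one velocity/position update to a single particle.

    position, velocity, pbest and gbest are equal-length lists of floats.
    rng is any object exposing random() -> float in [0, 1) (assumption: r_max = 1).
    """
    new_position = []
    new_velocity = []
    for x, v, p_i, p_g in zip(position, velocity, pbest, gbest):
        r1 = rng.random()  # random weight of the cognitive term
        r2 = rng.random()  # random weight of the social term
        # Equation (3): inertia term plus attraction to personal and global best.
        v_next = w * v + c1 * r1 * (p_i - x) + c2 * r2 * (p_g - x)
        new_velocity.append(v_next)
        # Equation (2): move the particle by its new velocity.
        new_position.append(x + v_next)
    return new_position, new_velocity


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demonstration repeatable
    pos, vel = pso_step([10.0, 20.0], [0.0, 0.0], [12.0, 18.0], [15.0, 15.0],
                        w=0.7, c1=2.0, c2=2.0, rng=rng)
    print(pos, vel)
```

Setting `w = 1` in this sketch reduces the velocity update to equation (1).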

III. CONTROLLING PARAMETER OF PSO FOR OPTIMIZATION IN VLSI GLOBAL ROUTING

While optimizing the RSMT problem for wire length minimization in VLSI physical layer design with the particle swarm optimization algorithm, we control the algorithm's parameters to facilitate maximum convergence and prevent an explosion of the swarm. The following parameters of the PSO algorithm are controlled.

A. Selection of max velocity

A large increase or decrease in particle velocities leads to divergence of the swarm through an uninhibited increase in the magnitude of the particle velocity, $|V_{i,j}(t+1)|$, especially in an enormous search space. The maximum velocity is limited by

$$V_{i,j}(t+1) = \frac{X_j(\max) - X_j(\min)}{K}$$

where $V_{i,j}(t+1)$ is the limiting velocity for the next iteration, $X_j(\max)$ and $X_j(\min)$ are respectively the maximum and minimum position values found so far by the particles in the j-th dimension, and $K$ is a user-defined parameter that controls the particles' steps in each dimension of the search space, with $K = 2$ [7].

B. Inertia weight

The dimension of the search space affects the performance of PSO. In complex, high-dimensional conditions, the basic PSO algorithm gets trapped in local optima, leading to premature convergence; hence a large inertia weight is required. A smaller inertia weight is used for a search space of small dimension to strengthen local search capability, assuring a high rate of convergence [8]. Our algorithm uses a self-adaptive inertia weight function, which relates the inertia weight to fitness, swarm size and the dimension of the search space:

$$W = \left[\, 3 - \exp\left(-\frac{S}{200}\right) + \left(\frac{R}{8D}\right)^{2} \right]^{-1}$$

where S is the swarm size, D is the dimension size and R is the fitness rank of the given particle [5]. In our algorithm D is taken as 100.

C. Proposed Modification: Self-tuned Acceleration coefficient

The acceleration coefficients determine the scaling of the random cognitive component vector and the social component vector, i.e. the movement of each particle towards its individual best and the global best position, respectively. Using a smaller value limits the movement of the particle, while using a larger value for the coefficient may cause the particle to diverge. For $c_1 = c_2 > 0$, particles are attracted to the average of pbest, i.e. the particle's local best position, and gbest, i.e. the swarm's global best position. A good starting point, $c_1 = c_2 = 2$, is used for the acceleration constants in [9] [10]. Again, $c_1 = c_2 = 1.49$ generates good convergence results, as proposed by Shi and Eberhart [11] [12]. In our proposed modification, we introduce self-tuned acceleration coefficients that decrease linearly over time in the range of 2 to 1.4. This is introduced in the VLSI routing optimization problem for the first time. The pseudo code for the algorithm is given below.

D. Pseudo Code

Preprocess Block

The search space for the problem is defined for a

fixed dimension.

Within the search space the user defined terminal

node in form of coordinates are represented as 1 to

form the required matrix.

The weight of the path between the nodes, including

the Steiner nodes, is calculated to form the objective

functions for n-particles.

Cost of each objective function is calculated by

Prims algorithm.

Minimal cost along with the corresponding objective

function is identified amongst these results.

PSO Block

Initialization for First Iteration

Each of the objective function is assigned for position

and velocity vector

Each objective function represents local-best for each

particle of n population.

The objective function with minimal cost is assigned

as global-best for the swarm of particles.

Execution of the PSO Block

While (termination criteria is not met)

Velocity and position is updated for n number of

population using the equation no (3) and (2).

Fitness values (present pi) of n particles are

evaluated by Prims algorithm.

Each pi is compared with previous pi,

If pi(present) > pi(previous),

then pbest pi(present);

Else retain the previous value.

New pg minimal of all pi s (present).

If pg(present) > pg(previous),

then gbest pg(present);

Else retain the previous value.

Controlling inertia constant(w)

-

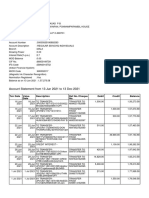

TABLE 2: Experimental Results.

Calculate the self-adaptive inertia weight of each

particle within the pop using equation no (3) in terms

of fitness, population (swarm size) and dimension of

the search space.

Controlling acceleration constant (c1, c2)

Calculate self-tuned linearly decreasing acceleration

constant.

Check the termination criteria. If satisfied, stop

execution and get the global best value for the swarm

size. Otherwise execute the PSO block again.
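The three parameter controls used in the loop above (Sections III-A to III-C) can be sketched in Python as follows. This is only an illustrative sketch under stated assumptions, not the code used for the experiments. All function names are hypothetical. The paper states only that the acceleration coefficients decrease linearly from 2 to 1.4, so the exact schedule below (0-based iteration index, the same value used for c1 and c2) is our assumption, as is the symmetric clamping of each velocity component to the limit of Section III-A.

```python
import math


def max_velocity(x_max, x_min, k=2.0):
    """Velocity limit in one dimension: (X_max - X_min) / K, with K = 2."""
    return (x_max - x_min) / k


def clamp_velocity(v, v_max):
    """Keep one velocity component inside [-v_max, v_max] (assumed clamping rule)."""
    return max(-v_max, min(v_max, v))


def self_adaptive_inertia(swarm_size, rank, dim):
    """Inertia weight W = [3 - exp(-S/200) + (R/(8D))^2]^-1.

    swarm_size is S, rank is the fitness rank R of the particle, dim is D.
    """
    return 1.0 / (3.0 - math.exp(-swarm_size / 200.0) + (rank / (8.0 * dim)) ** 2)


def self_tuned_acceleration(iteration, max_iterations, c_start=2.0, c_end=1.4):
    """Linearly decrease the acceleration coefficient from c_start to c_end.

    iteration is assumed to run from 0 to max_iterations - 1.
    """
    if max_iterations <= 1:
        return c_start
    fraction = iteration / (max_iterations - 1)
    return c_start - (c_start - c_end) * fraction


if __name__ == "__main__":
    # Settings taken from the experiments: 100x100 space, swarm of 200, D = 100.
    print("v_max:", max_velocity(100.0, 0.0))
    print("w for best-ranked particle:", self_adaptive_inertia(200, 1, 100))
    for t in (0, 50, 99):
        print("iteration", t, "c1 = c2 =", self_tuned_acceleration(t, 100))
```

With this schedule the coefficients start at 2 in the first iteration and reach 1.4 in the last one, so the swarm takes larger steps early in the search and smaller steps as it converges.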

IV. EXPERIMENTS AND RESULTS

Three sets of coordinates for 10 terminal nodes are randomly generated for connection in the defined search space of 100x100. The experiment is executed 25 times for each set. The population size of the swarm is taken as 200. The maximum number of iterations is set to 100. The experimental coordinates are shown in Table 1.

TABLE 1: Coordinate set of 10 terminal nodes for 3 experiments.

        COORDINATE SET 1    COORDINATE SET 2    COORDINATE SET 3
NO.       X       Y           X       Y           X       Y
 1        09      53          84      15          44      63
 2        17      01          93      67          42      99
 3        31      38          60      48          97      36
 4        20      89          76      89          61      25
 5        40      67          64      79          89      68
 6        49      95          17      62          91      51
 7        56      70          95      38          80      28
 8        72      29          54      35          69      58
 9        88      63          21      57          31      82
10        93      100         29      81          56      94

We first performed the experiment with the PSO algorithm with acceleration coefficients c1 = c2 = 2, and then repeated it with the proposed PSO algorithm with self-tuned acceleration coefficients for the same three coordinate sets. The results are tabulated in Table 2. The comparative analysis shows that the proposed PSO algorithm generates a lower gbest, i.e. global best value, than the previous algorithm [9][10]. The mean value is also improved by the proposed algorithm with self-tuned, linearly decreasing acceleration coefficients compared with the existing PSO algorithm [9][10].

TABLE 2: Experimental Results.

                                 Minimum gbest Value    Mean gbest Value
EXPERIMENT NO. 1
  PSO with c1=c2=2               308                    319.81
  PSO with Self tuned c1,c2      295                    307.5
EXPERIMENT NO. 2
  PSO with c1=c2=2               248                    264.67
  PSO with Self tuned c1,c2      224                    253.2
EXPERIMENT NO. 3
  PSO with c1=c2=2               219                    223.31
  PSO with Self tuned c1,c2      205                    218.7

The performances of the existing PSO and the self-tuned PSO algorithm are compared in the bar charts. The lower global best value generated by the proposed algorithm, shown in Figure 1, implies that the cost of the Rectilinear Steiner Minimal Tree (RSMT) constructed by interconnecting the terminal nodes has been reduced. The mean of the global best value, i.e. the average of the minimum cost, is also improved, as shown in Figure 2. So this algorithm can effectively handle the RSMT problem of graphs and thereby reduce the interconnect length in all three experiments.
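The relative improvement implied by Table 2 can be checked with a few lines of Python. The sketch below only re-uses the tabulated values; the variable names and the helper `percent_reduction` are our own, and the percentage figures are derived here rather than reported in the experiments.

```python
# (minimum gbest, mean gbest) per experiment, copied from Table 2.
baseline = [(308, 319.81), (248, 264.67), (219, 223.31)]   # PSO with c1 = c2 = 2
self_tuned = [(295, 307.5), (224, 253.2), (205, 218.7)]    # PSO with self-tuned c1, c2


def percent_reduction(old, new):
    """Relative reduction of new with respect to old, in percent."""
    return 100.0 * (old - new) / old


min_reduction = [percent_reduction(b[0], s[0]) for b, s in zip(baseline, self_tuned)]
mean_reduction = [percent_reduction(b[1], s[1]) for b, s in zip(baseline, self_tuned)]

for exp_no, (d_min, d_mean) in enumerate(zip(min_reduction, mean_reduction), start=1):
    print(f"Experiment {exp_no}: minimum gbest reduced by {d_min:.2f}%, "
          f"mean gbest reduced by {d_mean:.2f}%")
```

On these values the reduction in minimum cost lies between roughly 4% and 10%, and the reduction in mean cost between roughly 2% and 4%.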

Fig. 1. Comparison of Minimum Cost obtained by Existing and Modified PSO algorithm. (Bar chart of minimum 'gbest' value for Exp. No. 1 to 3; series: PSO with c1 = c2 = 2 and PSO with self tuned c1, c2.)

Fig. 2. Comparison of Mean Cost obtained by Existing and Modified PSO algorithm. (Bar chart of mean 'gbest' value for Exp. No. 1 to 3; series: PSO with c1 = c2 = 2 and PSO with self tuned c1, c2.)

V. CONCLUSION

Wire length minimization in VLSI technology can be achieved through global routing optimization using the PSO algorithm. Our proposed algorithm incorporates a modification to the existing PSO algorithm: the acceleration coefficients are modified so that they tune themselves over the iterations throughout the experiment. The experimental results exhibit a clear difference in performance between the modified version and the existing one. The convergence rate is high, and the algorithm can be applied to a large search space while still giving good results, which establishes the robustness and stability of the optimization algorithm. Therefore this algorithm can effectively be used for global routing optimization in VLSI physical layer design. The scope of the work can be further extended to examine the algorithm in an obstacle-avoiding routing environment.

REFERENCES

[1] J.-M. Ho, G. Vijayan, and C. K. Wong, "New algorithms for the rectilinear Steiner tree problem," IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 1990, pp. 185-193.

[2] Xin-She Yang, Nature-Inspired Metaheuristic Algorithms. Luniver Press, UK, 2008.

[3] R. C. Eberhart and J. Kennedy, "A new optimizer using particle swarm theory," Proceedings of Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 1995, pp. 39-43.

[4] Y. H. Shi and R. C. Eberhart, "Empirical study of particle swarm optimization," Proceedings of IEEE Congress on Evolutionary Computation, Washington DC, 1999, pp. 1945-1950.

[5] Dong Chen, Wang Gaofeng, Chen Zhenyi and Yu Zuqiang, "A Method of Self-Adaptive Inertia Weight For PSO," Proceedings of IEEE International Conference on Computer Science and Software Engineering, Dec 2008, vol. 1, pp. 1195-1198.

[6] A. Carlisle and G. Dozier, "An off-the-shelf PSO," Proceedings of the Workshop on Particle Swarm Optimization, Indianapolis, IN, 2001.

[7] A. Rezaee Jordehi and J. Jasni, "Parameter selection in particle swarm optimization: a survey," Journal of Experimental & Theoretical Artificial Intelligence, 2013, 25(4), pp. 527-542.

[8] F. Van Den Bergh and A. P. Engelbrecht, "Effects of swarm size on cooperative particle swarm optimizers," Proceedings of the Genetic and Evolutionary Computation Conference, San Francisco, California, 2001, pp. 892-899.

[9] R. Eberhart, Y. Shi, and J. Kennedy, Swarm Intelligence. San Mateo, CA: Morgan Kaufmann, 2001.

[10] J. Kennedy and R. Mendes, "Population structure and particle swarm performance," Proceedings of the IEEE Congress on Evolutionary Computation, 2002, vol. 2, pp. 1671-1676.

[11] Y. H. Shi and R. C. Eberhart, "A modified particle swarm optimizer," Proceedings of IEEE World Congress on Computational Intelligence, 1998, pp. 69-73.

[12] Rania Hassan, Babak Cohanim, Olivier de Weck, and Gerhard Venter, "A Comparison of Particle Swarm Optimization and the Genetic Algorithm," American Institute of Aeronautics and Astronautics journal, 2005, 2055-1897.
