A Very Brief History of Soft Computing:

Fuzzy Sets, Artificial Neural Networks and Evolutionary Computation

Rudolf Seising

Marco Elio Tabacchi

European Centre for Soft Computing

Edificio de Investigación

Calle Gonzalo Gutiérrez Quirós S/N

33600 Mieres, Asturias

rudolf.seising@softcomputing.es

DMI, Università degli Studi di Palermo

and Istituto Nazionale di Ricerche Demopolis, Italy

Via Archirafi, 34

90123 Palermo, Italy

tabacchi@unipa.it

Abstract - This paper gives a brief presentation of the history of Soft

Computing considered as a mix of three scientific disciplines that

arose in the middle of the 20th century: Fuzzy Sets and Systems, Neural

Networks, and Evolutionary Computation. The paper shows the

genesis and the historical development of the three disciplines and also

their meeting in a coalition in the 1990s.

ability of the human mind to effectively employ modes of

reasoning that are approximate rather than exact and he

explicated: "In traditional hard computing, the prime

desiderata are precision, certainty, and rigor. By contrast, the point

of departure in soft computing is the thesis that precision and

certainty carry a cost and that computation, reasoning, and

decision making should exploit wherever possible the

tolerance for imprecision and uncertainty. [...] Somewhat later,

neural network techniques combined with fuzzy logic began to be

employed in a wide variety of consumer products, endowing such

products with the capability to adapt and learn from experience.

[...] Underlying this evolution was an acceleration in the

employment of soft computing and especially fuzzy logic in

the conception and design of intelligent systems that can exploit

the tolerance for imprecision and uncertainty, learn from

experience, and adapt to changes in the operation conditions." [2]

The segments of SC came into existence largely independently of

each other in the second half of the 20th century. They have the

common property of imitating structures or behavior in nature in

order to optimize certain processes of problem solving where

problems either cannot be resolved with the help of classical

mathematics or can only be solved with great difficulty: Fuzzy sets

and probabilistic reasoning simulate human reasoning and

communication; Artificial neural networks are geared to the

structure of living brains; Genetic and evolutionary computation

and programming are geared to biological evolution.

In the following sections we will present brief surveys of the

three above-named segments of SC and in the concluding section

we will give a short outlook on the future of Soft Computing.

I. INTRODUCTION

Today, computing and computers are used in all disciplines of

science and technology, as well as in the social sciences and

humanities. Computing and computers are used to find exact

solutions of scientific problems on the basis of two-valued logic

and classical mathematics. However, not all problems can be

resolved with methods of usual mathematics. As Lotfi A. Zadeh,

the founder of the theory of Fuzzy Sets, mentioned many times

over the last decades, humans are able to resolve tasks of high

complexity without measurements or computations. He concluded

that "thinking machines" (as computers were called in their

early days) do not think as humans do.

The Summer Research Project on Artificial Intelligence that

was organized by John McCarthy in 1955 initiated the research

program of Artificial Intelligence (AI). The proposal for this

project stated that AI research would proceed on the basis of

the conjecture that "every aspect of learning or any other feature of

intelligence can in principle be so precisely described that a

machine can be made to simulate it" [1].

The subsequent development of AI research is a story of several

successes, yet it has lagged behind expectations. AI became a

field of research to build computers and computer programs that

act "intelligently" although no human being controls those

systems. AI techniques became methods to compute with numbers

and find exact solutions. However, not all problems can be

resolved with these methods. On the other hand, humans are able

to resolve such tasks very well. Therefore, Zadeh focused from the

mid-1980s on "Making Computers Think like People" [2]. For this

purpose, the machine's ability to compute with numbers, which

is named here "hard computing", has to be supplemented by

an additional ability more similar to human thinking, which is named

here "soft computing".

In the 1980s Zadeh explained what might be referred to as

soft computing and, in particular, fuzzy logic to mimic the

II. FUZZY SETS

In 1959 Lotfi A. Zadeh had become professor of Electrical

Engineering at the University of California at Berkeley and in the

course of writing the book Linear System Theory: The State Space

Approach [3] with his colleague Charles A. Desoer, he began to

feel that complex systems cannot be dealt with effectively by the

use of conventional approaches largely because the description

languages based on classical mathematics are not sufficiently

expressive to serve as a means of characterization of input-output

relations in an environment of imprecision, uncertainty and

incompleteness of information. [4] There were two ways to

Work leading to this paper was partially supported by the Foundation for the Advancement of Soft Computing Mieres, Asturias (Spain).

978-1-4799-0348-1/13/$31.00 2013 IEEE

overcome this situation. In order to describe the actual systems

appropriately, he could have tried to increase the mathematical

precision even further, but Zadeh failed with this course of action.

The other way presented itself to Zadeh in the year 1964, when he

discovered how he could describe real systems as they appeared to

people. "I'm always sort of gravitated toward something that

would be closer to the real world" [5]. In order to provide a

mathematically exact expression of experimental research with real

systems, it was necessary to employ meticulous case differentiations, differentiated terminology and definitions that were

extremely specific to the actual circumstances, a feat that the

language normally used in mathematics could not accomplish well.

The circumstances observed in reality could no longer simply be

described using the available mathematical means.

While he was serving as Chair of the department in 1963/64,

he continued thinking about basic issues in systems analysis,

especially the issue of unsharpness of class boundaries. These

thoughts indicate the beginning of the genesis of Fuzzy Set

Theory ([6], p. 7), and he submitted his first article, "Fuzzy Sets",

to the editors of Information and Control in November 1964. In

this paper, which appeared the following June, he launched new

mathematical entities as classes or sets that are not classes or sets

in the usual sense of these terms, since they do not dichotomize all

objects into those that belong to the class and those that do not.

He introduced the concept of a fuzzy set, that is a class in which

there may be a continuous infinity of grades of membership, with

the grade of membership of an object x in a fuzzy set A

represented by a number fA(x) in the interval [0,1]. [7, 8]
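The idea of a grade of membership can be illustrated with a small sketch. The fuzzy set "tall", the breakpoints of 160 cm and 190 cm, and the function names are assumptions chosen purely for illustration; they do not come from Zadeh's paper. The complement follows the definition 1 - fA(x) given in the 1965 article.

```python
def tall(height_cm: float) -> float:
    """Grade of membership f_A(x) in a hypothetical fuzzy set A = 'tall'.

    The linear ramp between 160 cm and 190 cm is an illustrative assumption.
    """
    if height_cm <= 160.0:
        return 0.0          # clearly not a member
    if height_cm >= 190.0:
        return 1.0          # clearly a member
    return (height_cm - 160.0) / 30.0   # partial membership in (0, 1)


def complement(grade: float) -> float:
    """Grade of membership in the complement of the fuzzy set."""
    return 1.0 - grade


if __name__ == "__main__":
    for h in (150, 170, 175, 185, 200):
        print(h, round(tall(h), 2), round(complement(tall(h)), 2))
```

Unlike an ordinary set, nothing forces the grade to be 0 or 1: a height of 175 cm belongs to "tall" and to "not tall" to the same degree in this sketch.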

In 1968, Zadeh presented "fuzzy algorithms", a concept that

"may be viewed as a generalization, through the process of

fuzzification, of the conventional (non-fuzzy) conception of an

algorithm" ([9], p. 94). Inspired by this idea, he wrote in the

article "Fuzzy Algorithms" that all people function according to

fuzzy algorithms in their daily life: they use recipes for cooking,

consult the instruction manual to fix a TV, follow prescriptions to

treat illnesses or heed the appropriate guidance to park a car, even

though activities like this are not normally called algorithms: "For

our point of view, however, they may be regarded as very crude

forms of fuzzy algorithms" ([9], p. 95).

As we mentioned already, Zadeh often compared the

strategies of problem solving by computers on the one hand and by

humans on the other hand. In a conference paper in 1970 he called

it a paradox that the human brain is always solving problems by

manipulating fuzzy concepts and multidimensional fuzzy

sensory inputs whereas the computing power of the most

powerful, the most sophisticated digital computer in existence is

not able to do this. Therefore, he stated that in many instances, the

solution to a problem need not be exact, so that a considerable

measure of fuzziness in its formulation and results may be

tolerable. The human brain is designed to take advantage of this

tolerance for imprecision whereas a digital computer, with its need

for precise data and instructions, is not. ([11], p. 132) He

continued: Although present-day computers are not designed to

accept fuzzy data or execute fuzzy instructions, they can be

programmed to do so indirectly by treating a fuzzy set as a data-type which can be encoded as an array [. . .]. Granted that this is

not a fully satisfactory approach to the endowment of a computer

with an ability to manipulate fuzzy concepts, it is at least a step in

the direction of enhancing the ability of machines to emulate

human thought processes. It is quite possible, however, that truly

significant advances in artificial intelligence will have to await the

development of machines that can reason in fuzzy and non-quantitative terms in much the same manner as a human being.

([11], p. 132)
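Zadeh's remark that a fuzzy set can be treated as a data type encoded as an array can be sketched as follows. The universe of temperatures, the sets WARM and HOT, their grades, and all function names are hypothetical; the element-wise max and min correspond to the union and intersection defined in the 1965 paper.

```python
# Discretized universe of discourse (assumed): temperatures in degrees Celsius.
UNIVERSE = [0, 10, 20, 30, 40]

# Each fuzzy set is an array holding one membership grade per universe element.
WARM = [0.0, 0.2, 1.0, 0.6, 0.0]
HOT = [0.0, 0.0, 0.1, 0.7, 1.0]


def grade(fuzzy_set, x):
    """Look up the membership grade of element x."""
    return fuzzy_set[UNIVERSE.index(x)]


def fuzzy_union(a, b):
    """Element-wise maximum of two fuzzy sets over the same universe."""
    return [max(p, q) for p, q in zip(a, b)]


def fuzzy_intersection(a, b):
    """Element-wise minimum of two fuzzy sets over the same universe."""
    return [min(p, q) for p, q in zip(a, b)]


if __name__ == "__main__":
    print(grade(WARM, 30))
    print(fuzzy_union(WARM, HOT))
    print(fuzzy_intersection(WARM, HOT))
```

This is the indirect route the quotation describes: a conventional computer still manipulates exact numbers, but those numbers now stand for degrees of membership.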

The electrical engineer Ebrahim H. Mamdani read Zadeh's

paper [10] shortly after he became professor of electrical

engineering at the University of London in 1971. Subsequently, he

suggested to his doctoral student Sedrak Assilian that he devise a

fuzzy algorithm to control a small model steam engine [12]. In his

PhD dissertation Mamdani had studied recursiveness (feedback) in

artificial neural networks. Now he wished to design a control

system that could learn on the basis of linguistic rules. He encouraged

Assilian to set up a trial fuzzy system under

laboratory conditions [13]. They designed a fuzzy algorithm to

control a small steam engine in a few days: "It was an

experimental study which became very popular. Immediately we

had a steam engine, and the idea was to control the steam engine.

We started working at Friday, and, I do not remember clearly,

by Sunday it was working", he said in 2008 ([14], p. 74). This was

the starting point of the great success of Fuzzy Systems in

technology in the 20th century.

III. ARTIFICIAL NEURAL NETWORKS

The starting point of modern neuroscience was the discovery

of the central nervous system's microscopic structure as nerve

cells. In the 1880s the Spanish histologist Santiago Ramón y Cajal

studied neural material. After he learned the method of staining

nervous tissue discovered by the Italian physician and pathologist

Camillo Golgi, which stains only a limited number of cells,

Cajal could demonstrate various types of nerve cells (neurons)

and depict their structure and

connectivity. About half a century later the physicist Nicolas

Rashevsky, who had emigrated from the (formerly Russian, now

Ukrainian) town of Chernihiv (Chernigov) to the US in 1924, developed a

systematic approach to mathematical methods in Biology, first at the

Westinghouse Research Labs, Pittsburgh, and later in the

Department of Physiology at the University of Chicago.

In 1938 he published the first volume of Mathematical

Biophysics: Physico-Mathematical Foundations of Biology [15],

and in the following decades four more books followed [16-19]. In

1939 he founded the journal Bulletin of Mathematical Biophysics

and in 1947 he also established the world's first Ph.D. program in

Mathematical Biology. Rashevsky's new theory was based on the

abstract concept of the fundamental unit of life, the cell. To

describe this concept with mathematical exactness he disregarded many

properties, as there are so many variations in cells and no cell is

equal to another. Not all cells have a nucleus, some have more than

one, cells differ in size, structure and chemical composition,

some, but not all, need oxygen, etc. Rashevsky's abstract

definition was: A cell is a small liquid or semi-liquid system, in

which physico-chemical reactions are taking place, so that some

substances enter into it from the surrounding medium and are

Bulletin of Mathematical Biophysics [21]. Similar to Rashevsky's

theory this original paper linked the activities of an abstract neural

network of two-factor elements with a complete logical calculus

for time-dependent signals in electric circuits, with time measured

here as a synaptic delay. In contrast to Rashevsky, McCulloch

and Pitts interpreted neurons in terms of electric on-off switches.

They showed that a system of "artificial neurons" like this

could perform the same calculations and obtain the same results as

an equivalent analog structure, as "[t]he 'all-or-none' law of

nervous activity is sufficient to insure that the activity of any

neuron may be represented as a proposition. Physiological

relations existing among nervous activities correspond, of course,

to relations among the propositions; and the utility of the

representation depends upon the identity of these relations with

those of the logic of propositions. To each reaction of any neuron

there is a corresponding assertion of a simple proposition" ([21],

p. 117). Because electric on-off switches can be interconnected

such that each Boolean statement can be realized, McCulloch and

Pitts now realized the entire logical calculus of propositions by

neuron nets. They arrived at the following assumptions: i) the

activity of the neurons is an all-or-none process; ii) a certain

fixed number of synapses must be excited within the period of

latent addition in order to excite a neuron at any time, and this

number is independent of previous activity and position on the

neuron; iii) the only significant delay within the nervous system is

synaptic delay; iv) the activity of any inhibitory synapse absolutely

prevents excitation of the neuron at that time; v) the structure of

the net does not change with time. ([21], p. 116). Every

McCulloch-Pitts neuron is a threshold element: If the threshold

value is exceeded, the neuron becomes active and "fires". By

"firing" or "not firing", each neuron represents the logical truth-values "true" or "false". Appropriately linked neurons thus carry

out logical operations like conjunction, disjunction, etc. Two

years later the mathematician John von Neumann picked the paper

up and used it in teaching the theory of computing machines [22],

and this may have initiated the research program of "Neuronal

Information Processing", a collaboration involving psychology

and sensory physiology, in which other groups of researchers were

soon interested. Some years later, von Neumann wrote on his

comparative view of the computer and the brain in an unfinished

manuscript that was published posthumously after his premature

death [23].
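The threshold behavior described above can be sketched in a few lines. The function names and the choice of thresholds are assumptions made for illustration; the sketch takes "firing" to occur when the number of active excitatory inputs reaches the threshold, and it applies assumption iv) literally, so that any active inhibitory input blocks firing.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, 0 otherwise, for binary (0/1) inputs."""
    if any(inhibitory):
        # Assumption iv): an active inhibitory synapse absolutely prevents firing.
        return 0
    # Assumption ii): a fixed number of excited synapses is needed to fire.
    return 1 if sum(excitatory) >= threshold else 0


def AND(a, b):
    """Conjunction: both excitatory inputs must be active."""
    return mp_neuron([a, b], [], threshold=2)


def OR(a, b):
    """Disjunction: one active excitatory input suffices."""
    return mp_neuron([a, b], [], threshold=1)


def AND_NOT(a, b):
    """'a and not b': b is wired as an inhibitory input."""
    return mp_neuron([a], [b], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, AND(a, b), OR(a, b), AND_NOT(a, b))
```

Each unit is a fixed piece of logic; nothing is learned, which matches assumption v) that the structure of the net does not change with time.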

In 1951, the mathematician Marvin Minsky worked with

Dean Edmonds in Princeton to develop a first neurocomputer,

which consisted of 3,000 tubes and 40 artificial "neurons" and was

called SNARC (Stochastic Neural-Analog Reinforcement

Computer), in which the weights of neuronal connections could be

varied automatically. But SNARC was never practically

employed. The classic problem that a computer at that time was

supposed to solve, and hence an artificial neuronal network was

expected to, was the classification of patterns of features, such as

handwritten characters. Under the concept of a pattern, objects of

reality are usually represented by pixels; frequency patterns that

represent a linguistic sign, a sound, can also be characterized as

patterns. In 1957/1958, Frank Rosenblatt and Charles Wightman

at Cornell University developed a first machine for pattern

transformed, through those reactions, into other substances. Some

of these other substances remain within the system, causing it to

increase in size; some diffuse outwards. By analogy with physics he

postulated that cells are subject to forces, which he wanted

to analyze; as he wrote: "To this end we must investigate various

possible cases, which open up an unexplored field to the

mathematician" ([20], p. 528 f.). Following up the works of former

neuro-anatomists he wrote: Whenever we have an aggregate of

cells in which the dividing factors prevail, they will repel each

other. On the other hand, when the restoring factors prevail, cells

attract each other. Of all cells, the neurons have most completely

lost their property of dividing; we should expect forces of

attraction between them. Indeed the existence of such forces has

been inferred by a number of neurologists, notably Ariens Kappers

and Ramon y Cajal from various observations. ([20], p. 530.) On

the other hand Rashevsky also considered the results of the

behavioral research of the Russian Ivan Petrovich Pavlov when

he established a theory of neural networks: It has been suggested

that a formation of new anatomical connexions between neurones

may be the cause of conditioned reflexes and learning. Calculation

shows that the above forces may account for it. Under certain

conditions they will produce an actual new connexion in a very

small fraction of a second [...] This leads us towards a

mathematical theory of nervous functions. We find that, under very

general conditions, aggregates of cells such as are studied above

will possess many properties characteristic of the brain. ([20], p.

530.) Rashevsky's idea was a radically new approach to the

investigation of the brain. He was interested in phenomena of

excitation and propagation in peripheral nerve fibers because he

thought that data are stored in these nerve fibers. These data he

identified as atomic elements of his theory, and their input-output behavior he interpreted as a transfer function. To translate this

input-output description into internal state variables he used two

state variables, excitation and inhibition, and he called the excitatory

atoms of the theory "two-factor elements". Rashevsky established

a theory of peripheral nerve propagation with the assumption that

excitatory elements can form fibers that accumulate not only linearly

but also as networks. Elements of these networks are similar

to neurons and networks of such neurons are similar to brains. He

saw in these woven structures a model for the brain and he

proposed the simulation of the behavior of brains using these

theoretical networks of abstract two-factor elements. He

proposed using physical concepts, e.g. minimizing energy and

differential equations to describe the behavior of neurons and

neural networks that are associated with psychological processes,

e.g. Pavlovian conditioning.

In the Committee on Mathematical Biophysics that

Rashevsky founded in 1938 in Chicago, he aimed to realize his

program of Mathematical Biology as a mirror image of

Mathematical Physics. Members of this program were Alvin

Weinberger, Alston Householder, Emilo Amelotti, Herbert Daniel

Landahl, John M. Reiner and Gaylor J. Young. In 1940

Warren McCulloch and the young math student Walter H. Pitts

(1923-1969) also joined this group.

In 1943 McCulloch and Pitts published "A Logical Calculus

of the Ideas Immanent in Nervous Activity" in Rashevsky's

classification. Rosenblatt described this early artificial neural

network, called Mark I Perceptron, in an essay for the

Psychological Review [24]. It was the first model of a neural

network which was capable of learning and for which it could be

shown that the proposed learning algorithm was always successful

when the problem had a solution at all. The perceptron appeared to

be a universal machine and Rosenblatt had also heralded it as such

in his 1961 book Principles of Neurodynamics: Perceptrons and

the Theory of Brain Mechanisms: "For the first time, we have a machine which

is capable of having original ideas. ... As concept, it would seem

that the perceptron has established, beyond doubt, the feasibility

and principle of nonhuman systems which may embody human

cognitive functions ... The future of information processing devices

which operates on statistical, rather than logical, principles seems

to be clearly indicated." [25] The euphoria came to an abrupt halt

in 1969, however, when Minsky and Seymour Papert completed

their study of perceptron networks and published their findings in a

book [26]. The results of the mathematical analysis to which they

had subjected Rosenblatt's perceptron were devastating: Artificial

neural networks like those in Rosenblatt's perceptron are not able

to solve many different problems. For example, the perceptron could not

discern whether the pattern presented to it represented a single

object or a number of intertwined but unrelated objects. The

perceptron could not even determine whether the number of

pattern components was odd or even, although this should have been a

simple classification task, known as the "parity problem".

The either-or operator of propositional logic, the so-called XOR,

presents a special case of the parity problem that thus cannot be

solved by Rosenblatt's perceptron. Therefore, the logical calculus

realized by this type of neural network was incomplete. As a

result of this fundamental criticism, many projects on perceptron

networks or similar systems all over the world were shelved or at

least modified. It took many years for a revival of this branch of AI

research.
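Both halves of this story, a learning rule that succeeds whenever a solution exists and the failure on XOR, can be reproduced with a minimal sketch. The code below is a textbook single-unit perceptron with an error-correction rule, not a model of the Mark I hardware; the function names, the zero initialization, the learning rate and the epoch limit are assumptions.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    s = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0


def train(samples, epochs=25, lr=1):
    """Error-correction learning; returns (weights, bias, converged)."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta != 0:
                errors += 1
                # Move the decision boundary toward the misclassified sample.
                weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
                bias += lr * delta
        if errors == 0:
            return weights, bias, True   # a full pass without mistakes
    return weights, bias, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

if __name__ == "__main__":
    print("AND converged:", train(AND_DATA)[2])
    print("XOR converged:", train(XOR_DATA)[2])
```

AND is linearly separable, so the rule settles on a separating line after a few passes. For XOR no such line exists, so no pass can ever be free of errors, however many epochs are allowed.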

From 1981 the psychologists James L. McClelland (born in

1948) and David E. Rumelhart (1942-2011) applied Artificial

Neural Networks to explain cognitive phenomena (spoken and

visual word recognition). In 1986, this research group published

the two volumes of the book Parallel Distributed Processing:

Explorations in the Microstructure of Cognition [27]. Already in

1982 John J. Hopfield, a biologist and Professor of Physics at

Princeton and Caltech, published the paper "Neural networks and

physical systems with emergent collective computational abilities"

[28] on his invention of an associative neural network (now more

commonly known as the Hopfield Network), i.e. a feedback

network that has only one layer, which is both input and

output layer, and in which each of the binary McCulloch-Pitts neurons is

linked with every other, except itself. McClelland's research group

could show that perceptrons with more than one layer can realize

the logical calculus; multi-layer perceptrons marked the

revival of artificial neural networks in AI.
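The architecture of an associative feedback network of this kind can be sketched briefly. This is a simplified illustration, not Hopfield's original formulation: the use of +1/-1 states instead of 0/1, the outer-product (Hebbian) storage rule, the synchronous update, and the names store and recall are all assumptions made here.

```python
def store(patterns):
    """Build a symmetric weight matrix with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:                 # each unit is linked to every other, not itself
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, state, steps=5):
    """Feed the single layer back into itself until the state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(steps):
        new_state = []
        for i in range(n):
            field = sum(w[i][j] * state[j] for j in range(n))
            new_state.append(1 if field >= 0 else -1)
        if new_state == state:
            break
        state = new_state
    return state


if __name__ == "__main__":
    pattern = [1, -1, 1, -1, 1, -1]
    noisy = [1, -1, 1, -1, 1, 1]           # last element corrupted
    weights = store([pattern])
    print(recall(weights, noisy))
```

The same layer serves as input and output: a corrupted pattern is presented as the initial state, and the network settles on the stored pattern it most resembles.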

Arthur W. Burks' Logic of Computers Group at the University

of Michigan in Ann Arbor and in 1954 he was among the first

students in the new Ph.D. program "Computer and

Communication Science". Holland was the first to graduate, in

1959. He was influenced by the book The Genetical Theory of

Natural Selection, written by the English statistician and evolutionary

biologist Sir Ronald A. Fisher, and he was keen on analogies between

evolutionary theory and animal breeding from a computer science

point of view: Can we breed computer programs? "That's where

genetic algorithms came from. I began to wonder if you could

breed programs the way people would say, breed good horses and

breed good corn", Holland recalled later ([29], p. 128).

Burks was a mathematician and philosopher who had

collaborated with von Neumann in the IAS computer project since

1946. Later, he expanded the theory of automata, and completed and

edited the manuscript Theory of Self-Reproducing Automata, on which von

Neumann had been working, publishing it posthumously in 1966 [30].

When von Neumann was involved with the Manhattan Project to construct the first atomic bombs, his colleague Stanislaw

Ulam, a fellow European expat and mathematician, told him

about his studies on the growth of crystals at Los Alamos National

Laboratory. When looking for a model of discrete dynamic

systems Ulam had the idea of a cellular automaton and he created a

simple lattice network. The state of each of Ulam's cells at a certain point

in time t was determined by its state at the instant immediately

before t.
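The principle that the lattice at time t is computed from the lattice at the preceding instant can be shown with a toy example. The sketch below is a one-dimensional automaton and not Ulam's crystal-growth model: the ring of cells, the dependence on the two nearest neighbors, and the particular rule (a cell becomes active when exactly one neighbor was active) are assumptions chosen for brevity.

```python
def step(cells):
    """Compute the next generation from the current one on a ring of cells."""
    n = len(cells)
    # Assumed rule: a cell is 1 at time t if exactly one of its two
    # neighbors was 1 at the instant before t.
    return [cells[(i - 1) % n] ^ cells[(i + 1) % n] for i in range(n)]


def run(cells, generations):
    """Return the list of successive lattice states, starting with the input."""
    history = [list(cells)]
    for _ in range(generations):
        cells = step(cells)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run([0, 0, 0, 1, 0, 0, 0], 3):
        print("".join("#" if c else "." for c in row))
```

All cells are updated at once from the previous state, so the whole history follows deterministically from the initial configuration and the local rule.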

In 1953 von Neumann picked up this idea when he was

thinking about self-replicating systems: one robot building another

robot. He then conceptualized a theory of self-reproducing two-dimensional cellular automata with a self-replicator implemented

algorithmically. There was a universal copier and constructor

working within a cellular automaton with 29 states per cell and von

Neumann could show that a particular pattern would copy itself

again and again within the given pool of cells. Following up this he

wondered whether self-reproducing automata could also pursue

an evolutionary strategy, i.e. through mutations and the struggle for

resources. Unfortunately there is no paper by von Neumann on this

subject. "It had a huge impact, not only in computing but in

biology and philosophy as well", said John Holland, who became

professor of psychology, electrical engineering and computer

science at the University of Michigan: "Until then, it was assumed

that only living things could reproduce." [31] In his Adaptation in

Natural and Artificial Systems, published in 1975 [32], Holland

showed how to use these "genetic search algorithms" to solve real-world problems. His research objectives were i) the theoretical

explanation of adaptive processes in nature and ii) the

development of software that retains the mechanisms of natural

systems and adapts as well as possible to the respective circumstances

[32]. The label "Genetic Algorithms" goes back to John D.

Bagley's Ph.D. thesis, written under Holland's supervision [33].

Bagley applied these algorithms to find solutions to game-theoretic

problems. Other Ph.D. students of Holland, such as Kenneth De Jong

and David E. Goldberg, demonstrated various successful

applications.
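The breeding analogy behind genetic algorithms can be sketched with a small example. This is a generic textbook variant rather than Holland's own formulation: the toy fitness function (counting ones in a bit string), tournament selection, one-point crossover, the survival of the best individual, and every parameter value and name below are assumptions.

```python
import random


def fitness(bits):
    """Toy objective (assumed): the number of ones in the bit string."""
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, p_mut=0.02, seed=0):
    """Return the best individual and the best fitness per generation."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = [max(fitness(ind) for ind in pop)]

    def pick(population):
        # Tournament selection: the fitter of two random individuals breeds.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        children = [max(pop, key=fitness)[:]]      # keep the current best
        while len(children) < pop_size:
            mother, father = pick(pop), pick(pop)
            cut = rng.randrange(1, n_bits)          # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < p_mut else g for g in child]
            children.append(child)
        pop = children
        history.append(max(fitness(ind) for ind in pop))
    return max(pop, key=fitness), history


if __name__ == "__main__":
    best, history = evolve()
    print(fitness(best), history[0], history[-1])
```

Selection, recombination and mutation act only on the population of candidate solutions; no explicit model of the problem is needed beyond the ability to score a candidate.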

Evolutionary Programming appeared with the research

work of Lawrence J. Fogel for the National Science Foundation

IV. EVOLUTIONARY COMPUTATION

The idea of artificial neurons that form a network and

give rise to emergent complex behavior fascinated the young Ph.D.

student John H. Holland in the mid-1950s. He was a member of

uses precise algorithms to derive conclusions or optimal values

from clearly defined data, Soft Computing takes into consideration

that there are concepts that are not clearly defined, inaccuracies,

vagueness, and blurred knowledge. Therefore, the theory of Fuzzy

Sets is a central part of Soft Computing. Other segments of this

field are Artificial Neural Networks, Evolutionary and Genetic

Computing and Programming.

Zadeh had committed to the assumption that traditional AI

couldn't cope with the future challenges. He directed his critique at

the general approach of "hard computing" in Computer Science

and Engineering. In his foreword to the first issue of the journal

Applied Soft Computing he recommended that instead of an

element of competition between the complementary

methodologies of SC the coalition that has to be formed has to be

much wider: it has to bridge the gap between the different

communities in various fields of science and technology and it has

to bridge the gap between science and humanities and social

sciences! SC is a suitable candidate to meet these demands

because it opens the fields to the humanities. [...] Initially,

acceptance of the concept of soft computing was slow in coming.

Within the past few years, however, soft computing began to grow

rapidly in visibility and importance, especially in the realm of

applications which are related to the conception, design and

utilization of information/intelligent systems. This is the backdrop

against which the publication of Applied Soft Computing should be

viewed. By design, soft computing is pluralistic in nature in the

sense that it is a coalition of methodologies which are drawn

together by a quest for accommodation with the pervasive

imprecision of the real world. At this juncture, the principal

members of the coalition are fuzzy logic, neuro-computing,

evolutionary computing, probabilistic computing, chaotic

computing and machine learning. ([40], p. 1-2)

In 2010 Luis Magdalena followed Zadeh in

distinguishing Soft Computing from Hard

Computing, saying that the conventional approaches of HC

gain a precision that in many applications is not really needed or,

at least, can be relaxed without a significant effect on the solution

and that the more economical, less complex and more feasible

solutions of SC are sufficient. He pointed out that using sub-optimal solutions that are enough is softening the goal of

optimization, being satisfied with inferring an implicit model

from the problem specification and the available data. Conversely,

we can say that without an explicit model we will never find the

optimal solution. But this is not a handicap! SC makes a virtue

out of necessity because it is a combination of emerging problem-solving technologies for real-world problems, and this means that

we have only empirical prior knowledge and input-output data

representing instances of the system's behavior. [41]

The computer scientist Piero Bonissone also stated that in these cases

of ill-defined systems, which are difficult to model and have large-scale solution spaces, precise models are impractical, too

expensive, or non-existent. [...] Therefore, we need approximate

reasoning systems capable of handling such imperfect information.

Soft Computing technologies provide us with a set of flexible

computing tools to perform these approximate reasoning and

search tasks. [42]

[34]. Fogel was inspired by the research programs of AI and

Artificial Neural Networks but he also believed that intelligent

behavior is based on adapting behavior. Evolutionary

Programming was his way to build finite-state machines that adapt

to a range of environments. In 1966 the book Artificial Intelligence

through Simulated Evolution [35] appeared, co-authored by Fogel,

Al Owens and Jack Walsh, in which these biologically inspired

research programs merged into what is now called the field of

Evolutionary Computation. This team collaborated at the

company General Dynamics in San Diego and later the three

founded their own company, Decision Science.

Finally, when in 1992 John Koza's book Genetic

Programming: On the Programming of Computers by Means of

Natural Selection was published [36], the field of Genetic

Programming appeared, where scientists generate programs to

solve problems by evolution: Programs have been identified as

individuals that are subject to natural selection.

Apart from these developments in the US, other nature-inspired

principles were considered in Germany. In the

1960s, Ingo Rechenberg and Hans-Paul Schwefel, two students of

aircraft construction at the Technical University of Berlin,

were keen on cybernetics and bionics. Rechenberg was dealing with

wall shear stress measurements and Schwefel was responsible for

organizing fluid dynamics exercises for other students. The two

met in the Hermann Föttinger Institute for Hydrodynamics (HFI)

and they started considering the theory of biological evolution to

develop optimization strategies in engineering. They were

dreaming of a research robot working according to cybernetic

principles, but computers became available only later on. In 1963,

together with Peter Bienert, they founded the unofficial working

group Evolutionstech-nik. They wanted to construct a kind of

research robot that should perform series of experiments on a

flexible slender three-dimensional body in a wind tunnel so as to

minimize its drag. The method of minimization was planned to be

ether a one variable at a time or a discrete gradient technique,

gleaned from classical numerics. Both strategies, performed

manually, failed, however. They became stuck prematurely when

used for a two-dimensional demonstration facility, a joint plate

its optimal shape being a flat plate with which the students tried

to demonstrate that it was possible to find the optimum

automatically [37].

Then Rechenberg had the idea of performing experimental optimization of the shape of wings and kinked plates through mostly small, random modifications of the variables, i.e. using dice for random decisions. This was the seminal idea that brought to light the first evolution strategy, which was initially applied to a discrete problem and carried out without computers. Some time later, Schwefel extended the idea to evolution strategies for numerical/parametric optimization and formalized it in the form known today [38, 39].
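The principle described above, small random modifications that are kept only when they do not make the result worse, can be illustrated with a minimal sketch of a (1+1)-style evolution strategy. This is not the historical procedure: the original experiments used dice and discrete changes on physical hardware, whereas the sketch assumes Gaussian mutations with a fixed step size and a toy objective. The names sphere and one_plus_one_es, and all parameter values, are hypothetical and chosen only for illustration.

```python
import random


def sphere(x):
    """Toy objective to minimize (an assumption): sum of squares, optimum at the origin."""
    return sum(v * v for v in x)


def one_plus_one_es(objective, start, step=0.1, iterations=500, seed=0):
    """Minimal (1+1)-style evolution strategy sketch.

    One parent produces one mutated child per iteration; the child
    replaces the parent only if it is at least as good.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    parent = list(start)
    parent_cost = objective(parent)
    for _ in range(iterations):
        # Mutation: a small random change to every variable
        # (Gaussian noise is assumed here in place of dice).
        child = [v + rng.gauss(0.0, step) for v in parent]
        child_cost = objective(child)
        # Selection: keep the better of parent and child.
        if child_cost <= parent_cost:
            parent, parent_cost = child, child_cost
    return parent, parent_cost


start = [2.0, -1.5, 0.5]
best, best_cost = one_plus_one_es(sphere, start)
print("start cost:", sphere(start))
print("best cost: ", best_cost)
```

Because the child is accepted only when it is no worse than the parent, the cost can never increase from one iteration to the next. Later evolution strategies also adapt the step size during the run, which this sketch deliberately omits.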

V. SOFT COMPUTING

"The concept of soft computing crystallized in my mind during the waning months of 1990," wrote Zadeh. Whereas hard computing


[15] N. Rashevsky: Mathematical Biophysics: Physico-Mathematical Foundations of Biology. Chicago 1938.

[16] N. Rashevsky: Advances and Applications of Mathematical Biology. Chicago 1940.

[17] N. Rashevsky: Mathematical Theory of Human Relations: An Approach to Mathematical Biology of Social Phenomena. Bloomington, IN, 1947.

[18] N. Rashevsky: Mathematical Biology of Social Behavior. Chicago 1951.

[19] N. Rashevsky: Mathematical Principles in Biology and their Applications, Springfield, IL, 1961.

[20] N. Rashevsky: Mathematical Biophysics, Nature, April 6, 1935, pp. 528-530.

[21] W. S. McCulloch and W. H. Pitts: A Logical Calculus of the Ideas Immanent in Nervous Activity, Bulletin of Mathematical Biophysics, 1943, pp. 115-133.

[22] J. von Neumann: First Draft of a Report on the EDVAC, http://wwwalt.ldv.ei.tum.de/lehre/pent/skript/VonNeumann.pdf.

[23] J. von Neumann: The Computer and the Brain. New Haven 1958.

[24] F. Rosenblatt: The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain, Psychological Review, 65 (6), 1958, pp. 386-408.

[25] F. Rosenblatt: Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms, Spartan Books, 1962.

[26] M. Minsky and S. Papert: Perceptrons. Cambridge, Mass., 1969.

[27] D. E. Rumelhart and J. L. McClelland and the PDP Research Group: Parallel Distributed Processing I, II. Cambridge, Mass., 1986.

[28] J. J. Hopfield: Neural networks and physical systems with emergent collective computational abilities, Proceedings of the National Academy of Sciences of the USA, 79 (8), April 1982, pp. 2554-2558.

[29] M. Mitchell: Complexity. A Guided Tour, New York: Oxford University Press, 2009.

[30] J. von Neumann and A. W. Burks: Theory of Self-reproducing Automata, Urbana, University of Illinois Press, 1966.

[31] S. Lohr: Arthur W. Burks, 92, Dies; Early Computer Theorist, The New York Times, May 19, 2008.

[32] J. H. Holland: Adaptation in Natural and Artificial Systems. An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence, The University of Michigan Press, 1975.

[33] J. D. Bagley: The Behavior of Adaptive Systems Which Employ Genetic and Correlation Algorithms. PhD thesis, University of Michigan, 1967.

[34] L. G. Fogel: On the Organization of Intellect, PhD thesis, University of California, Los Angeles, 1964.

[35] L. G. Fogel, A. J. Owens and M. J. Walsh: Artificial Intelligence through Simulated Evolution, John Wiley, 1966.

[36] J. R. Koza: Genetic Programming: On the Programming of Computers by Means of Natural Selection, MIT Press, 1992.

[37] K. de Jong, D. B. Fogel, H.-P. Schwefel: A History of Evolutionary Computation, Handbook of Evolutionary Computation, Oxford University Press, 1997, pp. A2.3:1-A2.3:12.

[38] I. Rechenberg: Evolutionsstrategie, Stuttgart-Bad Cannstatt: Friedrich Frommann, 1973.

[39] H.-P. Schwefel: Evolutionsstrategie und numerische Optimierung, Diss., Technische Universität Berlin (Druck: B. Ladewick), 1975.

[40] L. A. Zadeh: Foreword, Applied Soft Computing, 1 (1), 2001, pp. 1-2.

[41] L. Magdalena: What is Soft Computing? Revisiting Possible Answers, International Journal of Computational Intelligence Systems, 3 (2), 2010, pp. 148-159.

[42] P. P. Bonissone: Soft Computing. The Convergence of Emerging Reasoning Technologies, Soft Computing, 1 (1), 1997, pp. 6-8.

[43] M. E. Tabacchi, S. Termini: Varieties of vagueness, fuzziness and a few foundational (and ontological) questions. Proc. EUSFLAT 2011, Advances in Intelligent Systems Research, pp. 578-583. Atlantis Press.

[44] M. Cardaci, V. Di Gesu, M. Petrou, and M. E. Tabacchi: A fuzzy approach to the evaluation of image complexity. Fuzzy Sets Syst., 160 (10), 2009, pp. 1474-1484.

[45] M. E. Tabacchi and S. Termini: Measures of fuzziness and information: some challenges from reflections on aesthetic experience. In Proceedings of WConSC 2011, 2011.

[46] A. Hoppe and M. E. Tabacchi: Towards a modelization of the elusive concept of wisdom using fuzzy techniques. In: Annual Meeting of the North American Fuzzy Information Processing Society, 2012.

As this paper has humbly tried to show, Soft Computing has a very interesting and rich history, and its past is well rooted in the stream of disciplines that today contribute to a strong and resilient development of technologies without forgetting the lessons and intuitions of the human sciences. What lies in the future of Soft Computing is for us to discover and create, though many hunches and impressions lead us to believe that it will play a pivotal role in the desirable convergence between hard and soft sciences. We have already discussed this in [43]. From a technical standpoint the methodologies proper to Soft Computing, and from a human perspective its uniqueness, make it possible to confront, and often help to resolve, problems that by their very nature seem far from the rigid precision of traditional computing methodologies. As an example, we have used Soft Computing methodologies in the evaluation of complexity in visual images and in exploring the aesthetic experience [44, 45], and we have discussed its role in modeling the concept of wisdom in relation to its use as a human helper in expert systems [46]. These are classical examples of a context in which Soft Computing not only seems to offer a better view of the problem of implementing procedures that replicate some kind of human performance, but as a bonus also renders the descriptions much clearer and closer to what is normally expressed by the human sciences, and makes paths to possible solutions easier and more natural to find. Soft Computing is, in our opinion, already opening a new era in science and technology, an era of global application of computing methodologies to all the facets of hard and soft sciences alike.

REFERENCES

[1] J. McCarthy et al.: A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence, 1955.

[2] L. A. Zadeh: Making Computers Think like People, IEEE Spectrum, vol. 8, 1984, pp. 26-32.

[3] L. A. Zadeh, Ch. A. Desoer: Linear System Theory: The State Space Approach. New York, San Francisco [et al.]: McGraw-Hill, 1963.

[4] L. A. Zadeh: Autobiographical Note, undated two-page type-written manuscript, written after 1978.

[5] R. Seising: Interview with L. A. Zadeh on July 26, 2000, unpublished, see [8].

[6] L. A. Zadeh: My Life and Work: A Retrospective View, Applied and Computational Mathematics, 10 (1), 2011, pp. 4-9.

[7] L. A. Zadeh: Fuzzy Sets, Information and Control, 8, 1965, pp. 338-353.

[8] R. Seising: The Fuzzification of Systems. The Genesis of Fuzzy Set Theory and Its Initial Applications - Developments up to the 1970s, Studies in Fuzziness and Soft Computing Vol. 216, Berlin, New York, [et al.]: Springer 2007.

[9] L. A. Zadeh: Fuzzy Algorithms, Information and Control, 12, 1968, pp. 99-102.

[10] L. A. Zadeh: Outline of a new approach to the analysis of complex systems and decision processes, IEEE Trans. on Systems, Man, and Cybernetics, SMC-3 (1), 1973, pp. 28-44.

[11] L. A. Zadeh: Fuzzy Languages and their Relation to Human and Machine Intelligence, Man and Computer. Proceedings of the International Conference, Bordeaux 1970.

[12] R. Seising: Interview with Prof. Dr. Ebrahim Mamdani on September 9, 1998 in Aachen, RWTH Aachen, at the margin of EUFIT 1998, unpublished.

[13] E. H. Mamdani, S. Assilian: An Experiment in Linguistic Synthesis with a Fuzzy Logic Controller, Int. J. Man Mach. Stud., 7, 1975, pp. 1-13.

[14] How a Mouse Crossed Scientist's Mind - a conversation with Ebrahim Mamdani, Journal of Automation, Mobile Robotics & Intelligent Systems, 2 (1), 2008.

744

You might also like

- Symbolic Artificial Intelligence: Fundamentals and ApplicationsFrom EverandSymbolic Artificial Intelligence: Fundamentals and ApplicationsNo ratings yet

- Service That Helps Scholars, Researchers, andDocument14 pagesService That Helps Scholars, Researchers, andTeddy YilmaNo ratings yet

- Artificial IntelligenceDocument5 pagesArtificial Intelligencetrustme2204No ratings yet

- The evolution of Soft Computing - From neural networks to cellular automataFrom EverandThe evolution of Soft Computing - From neural networks to cellular automataRating: 4 out of 5 stars4/5 (1)

- Artificial Intelligence Commonsense Knowledge: Fundamentals and ApplicationsFrom EverandArtificial Intelligence Commonsense Knowledge: Fundamentals and ApplicationsNo ratings yet

- LOGIC FOR THE NEW AI tackles issues with current approachesDocument35 pagesLOGIC FOR THE NEW AI tackles issues with current approachesBJMacLennanNo ratings yet

- AI Unit 1 PDFDocument14 pagesAI Unit 1 PDFMG GalactusNo ratings yet

- Historia e ML-1-56Document56 pagesHistoria e ML-1-56jagsti Alonso JesusNo ratings yet

- AI Unit 1Document8 pagesAI Unit 1Niya ThekootkarielNo ratings yet

- Artificial Intelligence NoteDocument52 pagesArtificial Intelligence NoteOluwuyi DamilareNo ratings yet

- Artificial Intelligence Creativity: Fundamentals and ApplicationsFrom EverandArtificial Intelligence Creativity: Fundamentals and ApplicationsNo ratings yet

- The Pursuit of Machine Common SenseDocument33 pagesThe Pursuit of Machine Common SenseproconteNo ratings yet

- Report of AIDocument23 pagesReport of AIJagdeepNo ratings yet

- 412 Annals Am Acad Pol Soc Sci 2Document14 pages412 Annals Am Acad Pol Soc Sci 2s784tiwariNo ratings yet

- AI - Limits and Prospects of Artificial IntelligenceFrom EverandAI - Limits and Prospects of Artificial IntelligencePeter KlimczakNo ratings yet

- Complete AiDocument85 pagesComplete Aiolapade paulNo ratings yet

- Artificial IntelligenceDocument21 pagesArtificial IntelligenceSaichandra SrivatsavNo ratings yet

- Application of Artificial IntelligenceDocument12 pagesApplication of Artificial IntelligencePranayNo ratings yet

- Basics of Soft Computing08 - Chapter1 PDFDocument16 pagesBasics of Soft Computing08 - Chapter1 PDFPratiksha KambleNo ratings yet

- Interaction and Resistance: The Recognition of Intentions in New Human-Computer InteractionDocument7 pagesInteraction and Resistance: The Recognition of Intentions in New Human-Computer InteractionJakub Albert FerencNo ratings yet

- #1 LectureDocument9 pages#1 Lectureحسنين محمد عبدالرضا فليحNo ratings yet

- Artificial IntelligenceDocument24 pagesArtificial IntelligenceSaichandra SrivatsavNo ratings yet

- Artificial Intelligence PDFDocument75 pagesArtificial Intelligence PDFJoel A. Murillo100% (3)

- Artificial Intelligence For EducationDocument18 pagesArtificial Intelligence For Educationnamponsah201No ratings yet

- Artificial Intelligence and Knowledge ManagementDocument10 pagesArtificial Intelligence and Knowledge ManagementteacherignouNo ratings yet

- Shapiro S.C. - Artificial IntelligenceDocument9 pagesShapiro S.C. - Artificial IntelligenceYago PaivaNo ratings yet

- Hypercomputation Unconsciousness and EntDocument10 pagesHypercomputation Unconsciousness and Entyurisa GómezNo ratings yet

- An Introduction To Soft Computing M A Tool For Building Intelligent SystemsDocument20 pagesAn Introduction To Soft Computing M A Tool For Building Intelligent SystemsayeniNo ratings yet

- Deep learning: deep learning explained to your granny – a guide for beginnersFrom EverandDeep learning: deep learning explained to your granny – a guide for beginnersRating: 3 out of 5 stars3/5 (2)

- AI's Half-Century: Margaret A. BodenDocument4 pagesAI's Half-Century: Margaret A. BodenalhakimieNo ratings yet

- FAHD - FOUDA Bahasa Inggris III Tugas ArtikelDocument10 pagesFAHD - FOUDA Bahasa Inggris III Tugas ArtikelFou DaNo ratings yet

- Artificial Intelligence (AI)Document11 pagesArtificial Intelligence (AI)Abhinav HansNo ratings yet

- Artificial Mathematical Intelligence: Cognitive, (Meta)mathematical, Physical and Philosophical FoundationsFrom EverandArtificial Mathematical Intelligence: Cognitive, (Meta)mathematical, Physical and Philosophical FoundationsNo ratings yet

- AI Applications & Foundations Explained in DepthDocument102 pagesAI Applications & Foundations Explained in DepthAlyssa AnnNo ratings yet

- Framework Model For Intelligent Robot As Knowledge-Based SystemDocument7 pagesFramework Model For Intelligent Robot As Knowledge-Based SystemUbiquitous Computing and Communication JournalNo ratings yet

- Artificial intelligent seminar reportDocument19 pagesArtificial intelligent seminar reportdeepuranjankumar08No ratings yet

- Soft Computing Definition and Key ComponentsDocument16 pagesSoft Computing Definition and Key ComponentsRajesh KumarNo ratings yet

- AI Artificial Intelligence Guide Components Learning Reasoning Problem SolvingDocument17 pagesAI Artificial Intelligence Guide Components Learning Reasoning Problem SolvingKhaled Iyad DahNo ratings yet

- LLB Notice 070hhghg815Document53 pagesLLB Notice 070hhghg815Gaurav JaiswalNo ratings yet

- Unified Syllabus B.Sc. / B.A Computer ApplicationDocument10 pagesUnified Syllabus B.Sc. / B.A Computer ApplicationGaurav JaiswalNo ratings yet

- A Very Brief History of Soft Computing:: Fuzzy Sets, Artificial Neural Networks and Evolutionary ComputationDocument6 pagesA Very Brief History of Soft Computing:: Fuzzy Sets, Artificial Neural Networks and Evolutionary ComputationGaurav JaiswalNo ratings yet

- IdiomsDocument10 pagesIdiomsAnish KumarNo ratings yet

- Ten Days National Workshop on Research MethodologyDocument4 pagesTen Days National Workshop on Research MethodologyGaurav JaiswalNo ratings yet

- Abstract Shilpi SinghDocument1 pageAbstract Shilpi SinghGaurav JaiswalNo ratings yet

- Nervous Responses of EarthwormDocument27 pagesNervous Responses of EarthwormKayla JoezetteNo ratings yet

- 8 Cell - The Unit of Life-NotesDocument6 pages8 Cell - The Unit of Life-NotesBhavanya RavichandrenNo ratings yet

- Forest Health and Biotechnology - Possibilities and Considerations (2019)Document241 pagesForest Health and Biotechnology - Possibilities and Considerations (2019)apertusNo ratings yet

- Introduction To Bioinformatics: Database Search (FASTA)Document35 pagesIntroduction To Bioinformatics: Database Search (FASTA)mahedi hasanNo ratings yet

- Development of The Planet EarthDocument14 pagesDevelopment of The Planet EarthHana CpnplnNo ratings yet

- Transpiration POGIL Answer KeyDocument8 pagesTranspiration POGIL Answer KeyJade Tapper50% (2)

- Colon 2014597 D FHTDocument4 pagesColon 2014597 D FHTyoohooNo ratings yet

- Explaining Butterfly MetamorphosisDocument13 pagesExplaining Butterfly MetamorphosisCipe drNo ratings yet

- Human Contact May Reduce Stress in Shelter DogsDocument5 pagesHuman Contact May Reduce Stress in Shelter DogsFlorina AnichitoaeNo ratings yet

- Advanced Level Biology 2015 Marking SchemeDocument29 pagesAdvanced Level Biology 2015 Marking SchemeAngiee FNo ratings yet

- Food Chain QsDocument3 pagesFood Chain QsOliver SansumNo ratings yet

- (PDF) Root Growth of Phalsa (Grewia Asiatica L.) As Affected by Type of CuttDocument5 pages(PDF) Root Growth of Phalsa (Grewia Asiatica L.) As Affected by Type of CuttAliNo ratings yet

- Cooling Tower Operation - 030129Document8 pagesCooling Tower Operation - 030129Sekar CmNo ratings yet

- Self Concept Inventory Hand OutDocument2 pagesSelf Concept Inventory Hand OutHarold LowryNo ratings yet

- Significance of 4D Printing For Dentistry: Materials, Process, and PotentialsDocument9 pagesSignificance of 4D Printing For Dentistry: Materials, Process, and PotentialsMohamed FaizalNo ratings yet

- 01 MDCAT SOS Regular Session (5th June-2023) With LR..Document5 pages01 MDCAT SOS Regular Session (5th June-2023) With LR..bakhtawarsrkNo ratings yet

- Uits PDFDocument36 pagesUits PDFCrystal ParkerNo ratings yet

- Muscle Energy Techniques ExplainedDocument2 pagesMuscle Energy Techniques ExplainedAtanu Datta100% (1)

- Pengantar Mikrobiologi LingkunganDocument28 pagesPengantar Mikrobiologi LingkunganAhmad SyahrulNo ratings yet

- BIO 192 Lab Report #1 BivalveDocument8 pagesBIO 192 Lab Report #1 BivalveBen KillamNo ratings yet

- Biodiversity & StabilityDocument76 pagesBiodiversity & StabilityMAXINE CLAIRE CUTINGNo ratings yet

- Full Download Human Physiology From Cells To Systems 8th Edition Lauralee Sherwood Solutions ManualDocument36 pagesFull Download Human Physiology From Cells To Systems 8th Edition Lauralee Sherwood Solutions Manualgambolrapinous.ggqcdr100% (39)

- Cell Membrane and Transport ColoringDocument3 pagesCell Membrane and Transport ColoringTeresa GonzNo ratings yet

- 30395-Article Text-56938-1-10-20220916Document8 pages30395-Article Text-56938-1-10-20220916Djim KARYOMNo ratings yet

- Biomimicry Avjeet SinghDocument21 pagesBiomimicry Avjeet SinghAvjeet SinghNo ratings yet

- Protochordata FIXDocument33 pagesProtochordata FIXSylvia AnggraeniNo ratings yet

- The Anatomy, Physiology, and Pathophysiology of Muscular SystemDocument55 pagesThe Anatomy, Physiology, and Pathophysiology of Muscular SystemAnisaNo ratings yet

- 10th Standard Tamilnadu State Board Science (English Medium)Document283 pages10th Standard Tamilnadu State Board Science (English Medium)Karthick NNo ratings yet

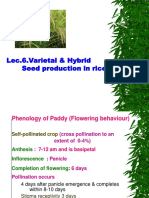

- Lec.6.Varietal & Hybrid Seed Production in RiceDocument75 pagesLec.6.Varietal & Hybrid Seed Production in RiceAmirthalingam KamarajNo ratings yet

- Cosmeceutic ALS: Cosmetics and PharmaceuticalsDocument19 pagesCosmeceutic ALS: Cosmetics and PharmaceuticalssowjanyaNo ratings yet