
IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS-II: ANALOG AND DIGITAL SIGNAL PROCESSING, VOL. 39, NO. 5, MAY 1992

Express Letters

Complex Domain Backpropagation

George M. Georgiou and Cris Koutsougeras

Abstract: The well-known backpropagation algorithm is extended to complex domain backpropagation (CDBP), which can be used to train neural networks for which the inputs, weights, activation functions, and outputs are complex-valued. Previous derivations of CDBP necessarily admitted activation functions that have singularities, which is highly undesirable. Here CDBP is derived so that it accommodates classes of suitable activation functions. One such function is found and the circuit implementation of the corresponding neuron is given. CDBP hardware circuits can be used to process sinusoidal signals all at the same frequency (phasors).

I. INTRODUCTION

The complex domain backpropagation (CDBP) algorithm provides a means for training feed-forward networks for which the inputs, weights, activation functions, and outputs are complex-valued. CDBP, being nonlinear in nature, is more powerful than the complex LMS algorithm [1] in the same way that usual (real) backpropagation [2] is more powerful than the (real) LMS algorithm [3]. Thus, CDBP can replace the complex LMS algorithm in applications such as filtering in the frequency domain [4].

In addition, CDBP can be used to design networks in hardware that process sinusoidal inputs all having the same frequency (phasors). Such signals are commonly represented by complex values. The complex weights of the neural network represent impedance as opposed to resistance in real backpropagation networks. The desired outputs are either sinusoids at the same frequency as the inputs or, after further processing, binary.

By decomposing the complex numbers that represent the inputs and the desired outputs into real and imaginary parts, the usual (real) backpropagation algorithm [2] can be used to train a conventional neural network which performs, in theory, the same input-output mapping without involving complex numbers. However, such networks, when implemented in hardware, cannot accept the sinusoidal inputs (phasors) and give the desired outputs.
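The decomposition mentioned above works because multiplying a complex input by a complex weight is a particular 2x2 real linear map acting on the pair (real part, imaginary part). The following minimal Python sketch is our own illustration, not part of the letter; the function names and the weight and input values are hypothetical. It computes a neuron's net input once with complex arithmetic and once with real arithmetic only.

```python
def as_real_block(w):
    """2x2 real matrix acting on (Re x, Im x) exactly as multiplication by w."""
    return ((w.real, -w.imag),
            (w.imag, w.real))


def net_input_real(weights, inputs):
    """Net input sum_l W_l X_l computed with real arithmetic only."""
    xr = xi = 0.0
    for w, x in zip(weights, inputs):
        (a, b), (c, d) = as_real_block(w)
        xr += a * x.real + b * x.imag
        xi += c * x.real + d * x.imag
    return xr, xi


W = [0.5 - 0.25j, -1.0 + 2.0j]        # hypothetical complex weights
X = [1.0 + 1.0j, 0.2 - 0.3j]          # hypothetical complex inputs
z = sum(w * x for w, x in zip(W, X))  # complex net input
print(z, net_input_real(W, X))
```

Note that each 2x2 block has only two free parameters (the real and imaginary parts of the weight), whereas a general real network trained on the decomposed data is free to use four.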

In [5] and [6] complex domain backpropagation algorithms were independently derived. The derivation was for a single-hidden-layer feed-forward network in [5] and for a multilayer network in [6].

Both derivations are based on the assumption that the (complex) derivative $f'(z) = df(z)/dz$ of the activation function $f(z)$ exists. Here we will show that if the derivative $f'(z)$ exists for all $z \in \mathbb{C}$, the complex plane, then $f(z)$ is not an appropriate activation function. We will derive CDBP for multilayer feed-forward networks imposing less stringent conditions on $f(z)$ so that more appropriate activation functions can be used. One such function is found and its circuit implementation is given.

More information on the definitions and results from the theory of complex variables that we will use can be found in [7], [8], or in any other introductory book on the subject.

II. COMPLEX DOMAIN BACKPROPAGATION

Let the neuron activation function be $f(z) = u(x, y) + iv(x, y)$, where $z = x + iy$ and $i = \sqrt{-1}$. The functions $u$ and $v$ are the real and imaginary parts of $f$, respectively. Likewise, $x$ and $y$ are the real and imaginary parts of $z$. For now it is sufficient to assume that the partial derivatives $u_x = \partial u/\partial x$, $u_y = \partial u/\partial y$, $v_x = \partial v/\partial x$, and $v_y = \partial v/\partial y$ exist for all $z \in \mathbb{C}$. This assumption is not sufficient for $f'(z)$ to exist: if it exists, the partial derivatives must also satisfy the Cauchy-Riemann equations (see next section). The error for an input pattern is defined as

$$E = \frac{1}{2}\sum_{k=1}^{m} \epsilon_k \bar{\epsilon}_k, \qquad \epsilon_k = d_k - o_k \tag{1}$$

where $d_k$ is the desired output and $o_k$ the actual output of the $k$th output neuron, and $m$ is the number of output neurons. The overbar signifies complex conjugate. It should be noted that the error $E$ is a real scalar function. The output $o_j$ of neuron $j$ in the network is

$$o_j = f(z_j) = u^j + iv^j, \qquad z_j = x_j + iy_j = \sum_{l=1}^{n} W_{jl} X_{jl} \tag{2}$$

where the $W_{jl}$'s are the (complex) weights of neuron $j$ and the $X_{jl}$'s their corresponding (complex) inputs. A bias weight, having permanent input $(1, 0)$, may also be added to each neuron. In what follows, the subscripts $R$ and $I$ indicate the real and imaginary parts, respectively. We note the following partial derivatives:

$$\frac{\partial x_j}{\partial W_{jlR}} = X_{jlR}, \quad \frac{\partial y_j}{\partial W_{jlR}} = X_{jlI}, \quad \frac{\partial x_j}{\partial W_{jlI}} = -X_{jlI}, \quad \frac{\partial y_j}{\partial W_{jlI}} = X_{jlR}. \tag{3}$$

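Manuscript received September 9, 1991; revised February 19, 1992. This paper was recommended by Associate Editor R. Liu.

The authors are with the Computer Science Department, Tulane University, New Orleans, LA 70118.

IEEE Log Number 9200589.

This paper [6] was published at about the same time that this letter was submitted. It came to our attention soon after submission.

1057-7130/92$03.00 © 1992 IEEE

In order to use the chain rule to find the gradient of the error function $E$ with respect to the real part of $W_{jl}$, we have to observe the variable dependencies: the real function $E$ is a function of both $u^j(x_j, y_j)$ and $v^j(x_j, y_j)$, and $x_j$ and $y_j$ are both functions of $W_{jlR}$ (and $W_{jlI}$). Thus, the gradient of the error function with respect to the real part of $W_{jl}$ can be written as

$$\frac{\partial E}{\partial W_{jlR}} = \frac{\partial E}{\partial u^j}\left(\frac{\partial u^j}{\partial x_j}\frac{\partial x_j}{\partial W_{jlR}} + \frac{\partial u^j}{\partial y_j}\frac{\partial y_j}{\partial W_{jlR}}\right) + \frac{\partial E}{\partial v^j}\left(\frac{\partial v^j}{\partial x_j}\frac{\partial x_j}{\partial W_{jlR}} + \frac{\partial v^j}{\partial y_j}\frac{\partial y_j}{\partial W_{jlR}}\right) = -\delta_{jR}\left(u^j_x X_{jlR} + u^j_y X_{jlI}\right) - \delta_{jI}\left(v^j_x X_{jlR} + v^j_y X_{jlI}\right) \tag{4}$$

with $\delta_j \stackrel{\text{def}}{=} -\partial E/\partial u^j - i\,\partial E/\partial v^j$, and consequently $\delta_{jR} = -\partial E/\partial u^j$ and $\delta_{jI} = -\partial E/\partial v^j$. Likewise, the gradient of the error function with respect to the imaginary part of $W_{jl}$ is

$$\frac{\partial E}{\partial W_{jlI}} = \frac{\partial E}{\partial u^j}\left(\frac{\partial u^j}{\partial x_j}\frac{\partial x_j}{\partial W_{jlI}} + \frac{\partial u^j}{\partial y_j}\frac{\partial y_j}{\partial W_{jlI}}\right) + \frac{\partial E}{\partial v^j}\left(\frac{\partial v^j}{\partial x_j}\frac{\partial x_j}{\partial W_{jlI}} + \frac{\partial v^j}{\partial y_j}\frac{\partial y_j}{\partial W_{jlI}}\right) = -\delta_{jR}\left(u^j_x(-X_{jlI}) + u^j_y X_{jlR}\right) - \delta_{jI}\left(v^j_x(-X_{jlI}) + v^j_y X_{jlR}\right). \tag{5}$$

Combining (4) and (5), we can write the gradient of the error function $E$ with respect to the complex weight $W_{jl}$ as

$$\nabla_{W_{jl}}E \stackrel{\text{def}}{=} \frac{\partial E}{\partial W_{jlR}} + i\frac{\partial E}{\partial W_{jlI}} = -\bar{X}_{jl}\left((u^j_x + iu^j_y)\delta_{jR} + (v^j_x + iv^j_y)\delta_{jI}\right). \tag{6}$$

To minimize the error $E$, each complex weight $W_{jl}$ should be changed by a quantity $\Delta W_{jl}$ proportional to the negative gradient:

$$\Delta W_{jl} = \alpha\,\bar{X}_{jl}\left((u^j_x + iu^j_y)\delta_{jR} + (v^j_x + iv^j_y)\delta_{jI}\right) \tag{7}$$

where $\alpha$ is the learning rate, a real positive constant. A momentum term [2] may be added in the above learning equation. When the weight $W_{jl}$ belongs to an output neuron, then $\delta_{jR}$ and $\delta_{jI}$ in (6) have the values

$$\delta_{jR} = d_{jR} - u^j, \qquad \delta_{jI} = d_{jI} - v^j \tag{8}$$

or more compactly:

$$\delta_j = d_j - o_j. \tag{9}$$

When weight $W_{jl}$ belongs to a hidden neuron, i.e., when the output of the neuron is fed to other neurons in subsequent layers, in order to compute $\delta_j$, or equivalently $\delta_{jR}$ and $\delta_{jI}$, we have to use the chain rule. Let the index $k$ indicate a neuron that receives input from neuron $j$. Then the net input $z_k$ to neuron $k$ is

$$z_k = x_k + iy_k = \sum_{l} W_{kl} X_{kl}, \qquad X_{kj} = o_j = u^j + iv^j \tag{10}$$

where the index $l$ runs through the neurons from which neuron $k$ receives input. Thus, we have the following partial derivatives:

$$\frac{\partial x_k}{\partial u^j} = W_{kjR}, \quad \frac{\partial y_k}{\partial u^j} = W_{kjI}, \quad \frac{\partial x_k}{\partial v^j} = -W_{kjI}, \quad \frac{\partial y_k}{\partial v^j} = W_{kjR}. \tag{11}$$

Using the chain rule we compute $\delta_{jR}$:

$$\delta_{jR} = -\frac{\partial E}{\partial u^j} = -\sum_k\left[\frac{\partial E}{\partial u^k}\left(\frac{\partial u^k}{\partial x_k}\frac{\partial x_k}{\partial u^j} + \frac{\partial u^k}{\partial y_k}\frac{\partial y_k}{\partial u^j}\right) + \frac{\partial E}{\partial v^k}\left(\frac{\partial v^k}{\partial x_k}\frac{\partial x_k}{\partial u^j} + \frac{\partial v^k}{\partial y_k}\frac{\partial y_k}{\partial u^j}\right)\right] = \sum_k \delta_{kR}\left(u^k_x W_{kjR} + u^k_y W_{kjI}\right) + \sum_k \delta_{kI}\left(v^k_x W_{kjR} + v^k_y W_{kjI}\right) \tag{12}$$

where the index $k$ runs through the neurons that receive input from neuron $j$. In a similar manner we can compute $\delta_{jI}$:

$$\delta_{jI} = -\frac{\partial E}{\partial v^j} = \sum_k \delta_{kR}\left(u^k_x(-W_{kjI}) + u^k_y W_{kjR}\right) + \sum_k \delta_{kI}\left(v^k_x(-W_{kjI}) + v^k_y W_{kjR}\right). \tag{13}$$

Combining (12) and (13), we arrive at the following expression for $\delta_j$:

$$\delta_j = \delta_{jR} + i\delta_{jI} = \sum_k \bar{W}_{kj}\left((u^k_x + iu^k_y)\delta_{kR} + (v^k_x + iv^k_y)\delta_{kI}\right). \tag{14}$$

Training of a feed-forward network with the CDBP algorithm is done in a similar manner as in the usual (real) backpropagation [2]. First, the weights are initialized to small random complex values. Until an acceptable output error level is reached, each input vector is presented to the network, the corresponding output and output error are calculated (forward pass), and then the error is backpropagated to each neuron in the network and the weights are adjusted accordingly (backward pass). More precisely, for input pattern $X_i$, $\delta_j$ for neuron $j$ is computed by starting at the neurons in the output layer using (9) and then for neurons in hidden layers by recursively using (14). As soon as $\delta_j$ is computed for neuron $j$, its weights are changed according to (7).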
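The forward and backward passes described above can be sketched in Python. This is a minimal sketch under our own assumptions, not the authors' code: it uses activation (20) with $c = r = 1$ and its partial derivatives (21) from Section IV, a 3-2-3 network without bias weights, a hypothetical input/target pair, and helper names (`forward`, `gradients`, `numeric_gradient`) of our choosing. Unlike the online procedure above, where a neuron's weights change as soon as its $\delta_j$ is known, the sketch computes all gradients with the weights held fixed, which is what a finite-difference check requires. It then compares the gradient assembled from (6), (9), and (14) with a central-difference estimate of $\partial E/\partial W_{jlR} + i\,\partial E/\partial W_{jlI}$.

```python
import random

C, R = 1.0, 1.0  # constants c and r of activation (20)


def f(z):
    """Activation (20): f(z) = z / (c + |z|/r)."""
    return z / (C + abs(z) / R)


def partials(z):
    """(u_x, u_y, v_x, v_y) of activation (20), per (21)."""
    a = abs(z)
    if a == 0.0:
        return 1.0 / C, 0.0, 0.0, 1.0 / C
    x, y = z.real, z.imag
    d2 = (C + a / R) ** 2
    uy = -x * y / (R * a) / d2  # u_y and v_x coincide for (20)
    return (C + y * y / (R * a)) / d2, uy, uy, (C + x * x / (R * a)) / d2


def forward(weights, x):
    """Forward pass, eq. (2); returns per-layer inputs, net inputs, final outputs."""
    inputs, nets = [], []
    for W in weights:
        inputs.append(x)
        z = [sum(w * xl for w, xl in zip(row, x)) for row in W]
        nets.append(z)
        x = [f(zj) for zj in z]
    return inputs, nets, x


def error(weights, x, d):
    """Pattern error (1): E = 1/2 * sum_k |d_k - o_k|^2."""
    _, _, o = forward(weights, x)
    return 0.5 * sum(abs(dk - ok) ** 2 for dk, ok in zip(d, o))


def gradients(weights, x, d):
    """Complex gradients (6) for all weights, with deltas from (9) and (14)."""
    inputs, nets, o = forward(weights, x)
    delta = [dk - ok for dk, ok in zip(d, o)]  # (9): output layer
    grads = [None] * len(weights)
    for layer in reversed(range(len(weights))):
        a = []  # a_j = (u_x + i u_y) delta_jR + (v_x + i v_y) delta_jI
        for zj, dj in zip(nets[layer], delta):
            ux, uy, vx, vy = partials(zj)
            a.append(complex(ux, uy) * dj.real + complex(vx, vy) * dj.imag)
        # (6): grad_{W_jl} E = -conj(X_jl) * a_j
        grads[layer] = [[-xl.conjugate() * aj for xl in inputs[layer]] for aj in a]
        # (14): delta_j = sum_k conj(W_kj) * a_k for the neurons feeding this layer
        W = weights[layer]
        delta = [sum(W[k][j].conjugate() * a[k] for k in range(len(W)))
                 for j in range(len(inputs[layer]))]
    return grads


def numeric_gradient(weights, x, d, layer, j, l, h=1e-6):
    """Central differences: dE/dW_jlR + i dE/dW_jlI for a single weight."""
    def e_at(step):
        w = [[row[:] for row in W] for W in weights]
        w[layer][j][l] += step
        return error(w, x, d)
    return complex((e_at(h) - e_at(-h)) / (2 * h),
                   (e_at(1j * h) - e_at(-1j * h)) / (2 * h))


random.seed(0)


def rand_c(s=0.5):
    """Small random complex value for weight initialization."""
    return complex(random.uniform(-s, s), random.uniform(-s, s))


weights = [[[rand_c() for _ in range(3)] for _ in range(2)],  # 3 inputs -> 2 hidden
           [[rand_c() for _ in range(2)] for _ in range(3)]]  # 2 hidden -> 3 outputs
x = [0.3 + 0.4j, -0.2 + 0.1j, 0.5 - 0.6j]  # hypothetical input pattern
d = [1.0 + 0.0j, 0.0j, -1.0j]              # hypothetical desired output

g = gradients(weights, x, d)
max_diff = max(abs(g[L][j][l] - numeric_gradient(weights, x, d, L, j, l))
               for L in range(len(weights))
               for j in range(len(weights[L]))
               for l in range(len(weights[L][j])))
print(f"max |(6) - finite difference| = {max_diff:.2e}")

alpha = 0.2  # learning rate; one step of (7): Delta W = -alpha * grad
for L, G in enumerate(g):
    for j, row in enumerate(G):
        for l, gl in enumerate(row):
            weights[L][j][l] -= alpha * gl
```

A momentum term, as mentioned after (7), would simply add a fraction of the previous $\Delta W_{jl}$ to each step.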

III. THE ACTIVATION FUNCTION

In the next section the important properties that a suitable activation function $f(z)$ must possess will be discussed. For the purposes of this section, we identify two properties that a suitable $f(z)$ should possess: i) $f(z)$ is bounded (see next section), and ii) $f(z)$ is such that $\delta_j \neq (0, 0)$ and $X_{jl} \neq (0, 0)$ imply $\nabla_{W_{jl}}E \neq (0, 0)$. Noncompliance with the latter condition is undesirable since it would imply that even in the presence of both nonzero input ($X_{jl} \neq (0, 0)$) and nonzero error ($\delta_j \neq (0, 0)$) it is still possible that $\Delta W_{jl} = 0$, i.e., no learning takes place.
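Condition ii) can fail in practice. As a hypothetical illustration of our own choosing (not a function considered in the letter), take $f(z) = \tanh(x)(1 + i)$, so that $u = v = \tanh(x)$. The short Python sketch below evaluates the gradient (6) for one weight and shows that the nonzero error $\delta_j = 1 - i$ produces a zero gradient for a nonzero input, while $\delta_j = 1 + i$ does not. The helper names are ours.

```python
import math


def grad_eq6(x_jl, delta_j, ux, uy, vx, vy):
    """Complex gradient (6) for a single weight W_jl."""
    a = complex(ux, uy) * delta_j.real + complex(vx, vy) * delta_j.imag
    return -x_jl.conjugate() * a


def partials_u_equals_v(z):
    """Partials of the hypothetical f(z) = tanh(x) * (1 + i), i.e., u = v = tanh(x)."""
    s = 1.0 - math.tanh(z.real) ** 2  # d tanh(x) / dx
    return s, 0.0, s, 0.0             # u_x, u_y, v_x, v_y


z_j = 0.3 + 0.8j      # any net input
x_jl = 0.7 - 0.2j     # nonzero input
stuck = grad_eq6(x_jl, 1.0 - 1.0j, *partials_u_equals_v(z_j))  # delta_jI = -delta_jR
other = grad_eq6(x_jl, 1.0 + 1.0j, *partials_u_equals_v(z_j))
print(abs(stuck), abs(other))  # the first is zero: no learning for this error
```

Because $u = v$ here, this function is an instance of the Corollary to Proposition 3 later in this section.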

The derivations of CDBP in [5] and [6] are based on the assumption that the (complex) derivative $f'(z)$ of the activation function exists, without specifying a domain. If the domain is taken to be $\mathbb{C}$, then such functions are called entire.

Definition 1: A function $f(z)$ is called analytic at a point $z_0$ if its derivative exists throughout some neighborhood of $z_0$. If $f(z)$ is analytic at all points $z \in \mathbb{C}$, it is called entire.

Proposition 1: Entire functions are not suitable activation functions.

The proof of the proposition follows directly from Liouville's theorem [7], [8].

Theorem 1 (Liouville): If $f(z)$ is entire and bounded on the complex plane, then $f(z)$ is a constant function.

Since a suitable $f(z)$ must be bounded, it follows from Liouville's theorem that if in addition $f(z)$ is entire, then $f(z)$ is constant, which is clearly not a suitable activation function. In fact, the requirement that $f(z)$ be entire alone imposes considerable structure on it; e.g., the Cauchy-Riemann equations must be satisfied. An exception in which $f(z)$ is taken to be entire is the complex LMS algorithm (single neuron) [1], where $f(z) = z$, the identity function.

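Proposition 2: If $f(z)$ is entire, then (6) becomes

$$\nabla_{W_{jl}}E = -\bar{X}_{jl}\,\delta_j\,\overline{f'(z_j)} \tag{15}$$

and (14) becomes

$$\delta_j = \sum_k \bar{W}_{kj}\,\delta_k\,\overline{f'(z_k)}. \tag{16}$$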

Proof: Since $f(z_j)$ is entire, the Cauchy-Riemann equations $u^j_x = v^j_y$ and $u^j_y = -v^j_x$ are satisfied, and the derivative of $f(z_j)$ is $f'(z_j) = u^j_x + iv^j_x$. Using these equations we can write (6) as

$$\nabla_{W_{jl}}E = -\bar{X}_{jl}\left((u^j_x - iv^j_x)\delta_{jR} + (v^j_x + iu^j_x)\delta_{jI}\right) = -\bar{X}_{jl}\,\delta_j\,\overline{f'(z_j)}. \tag{17}$$

In a similar way we may derive (16). Expressions (15) and (16) are essentially the ones derived in [5] and [6]. If the activation function is the identity function, $f(z) = z$, and if neuron $j$ is an output neuron, then $f'(z) = 1$ and the gradient (15) further reduces to the one in the complex LMS (delta) rule [1]:

$$\nabla_{W_{jl}}E = -\bar{X}_{jl}\,\delta_j = -\bar{X}_{jl}\,(d_j - o_j). \tag{18}$$

Proposition 3: If $u_x v_y = v_x u_y$, then $f(z) = u + iv$ is not a suitable activation function.

Proof: We would like to show that an activation function with the above property violates condition ii) (see beginning of section). Suppose that $X_{jl} \neq (0, 0)$. We will show that there exists $\delta_j \neq (0, 0)$ such that $\nabla_{W_{jl}}E = 0$. From (6) we see that $\nabla_{W_{jl}}E = 0$ when $(u^j_x\delta_{jR} + v^j_x\delta_{jI}) + i(u^j_y\delta_{jR} + v^j_y\delta_{jI}) = 0$, or equivalently, when the real and imaginary parts of the equation are equal to zero:

$$u^j_x\,\delta_{jR} + v^j_x\,\delta_{jI} = 0, \qquad u^j_y\,\delta_{jR} + v^j_y\,\delta_{jI} = 0. \tag{19}$$

A nontrivial solution of the above homogeneous system of equations in $\delta_{jR}$ and $\delta_{jI}$, i.e., $\delta_{jR}$ and $\delta_{jI}$ not both zero, can be found if and only if the determinant of the coefficient matrix is zero: $u^j_x v^j_y - v^j_x u^j_y = 0$, or $u^j_x v^j_y = v^j_x u^j_y$.

Corollary: If $u = v + k$, where $k$ is any real constant, then $f(z) = u + iv$ is not a suitable activation function.

The corollary gives us a better feeling of how large the class of unsuitable activation functions really is.

IV. A SUITABLE ACTIVATION FUNCTION

In [5] the sigmoid function $g(z) = 1/(1 + e^{-z})$ was incorrectly assumed to remain bounded when its domain was extended from $\mathbb{R}$ to $\mathbb{C}$, i.e., that there exists a real number $M$ such that $|g(z)| \leq M$ for all $z \in \mathbb{C}$. It can easily be verified that when $z$ approaches any value in the set $\{0 \pm i(2n+1)\pi : n \text{ is any integer}\}$, then $|g(z)| \to \infty$, and thus $g(z)$ is unbounded. Furthermore, other commonly used activation functions, e.g., $\tanh(z)$ and $e^{-z^2}$ [10], are unbounded as well when their domain is extended from the real line to the complex plane. One can verify that $|\tanh(z)| \to \infty$ as $z$ approaches a value in the set $\{0 \pm i((2n+1)/2)\pi : n \text{ is any integer}\}$, and $|e^{-z^2}| \to \infty$ when $z = 0 + iy$ and $y \to \infty$.

In order to avoid the problem of singularities in the sigmoid function $g(z)$, it was suggested in [6] to scale the input data to some region in the complex plane. Backpropagation, being a weak optimization procedure, has no mechanism for constraining the values the weights can assume, and thus the value of $z$, which depends on both the inputs and the weights, can take any value in the complex plane. Based on this observation, the suggested remedy is inadequate.

It is clear that some other activation function must be found for CDBP. In the derivation of CDBP we only assumed that the partial derivatives $u_x$, $u_y$, $v_x$, and $v_y$ exist. Other important properties the activation function $f(z) = u(x, y) + iv(x, y)$ should possess are the following:

1) $f(z)$ is nonlinear in $x$ and $y$. If the function is linear, then the capabilities of the network are severely limited, i.e., it is impossible to train a feed-forward neural network to solve a classification problem that is not linearly separable.

2) $f(z)$ is bounded. This is true if and only if both $u$ and $v$ are bounded. Since both $u$ and $v$ are used during training (forward pass), they must be bounded. If either one were unbounded, then a software overflow could occur. One could imagine analogous complications if the algorithm is implemented in hardware.

3) The partial derivatives $u_x$, $u_y$, $v_x$, and $v_y$ exist and are bounded. Since all are used during training, they must be bounded.

4) $f(z)$ is not entire. See Proposition 1 in the previous section.

5) $u_x v_y \neq v_x u_y$. See Proposition 3 in the previous section.
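Property 2 is exactly where $g(z)$, $\tanh(z)$, and $e^{-z^2}$ fail, and the unboundedness claims made above are easy to check numerically. The minimal Python sketch below (our own illustration; the offset `eps` and the evaluation points are arbitrary choices) evaluates each function next to the singular points named in the text, and for comparison takes the maximum of $|f(z)|$ over a coarse grid for the function $z/(c + |z|/r)$ introduced next, with $c = r = 1$.

```python
import cmath
import math


def g(z):
    """Logistic sigmoid 1 / (1 + e^{-z}) evaluated at complex z."""
    return 1.0 / (1.0 + cmath.exp(-z))


def f(z, c=1.0, r=1.0):
    """The bounded activation z / (c + |z|/r), for comparison."""
    return z / (c + abs(z) / r)


eps = 1e-6
near_g_pole = abs(g(1j * math.pi + eps))                   # z -> i*pi
near_tanh_pole = abs(cmath.tanh(1j * math.pi / 2 + eps))   # z -> i*pi/2
gauss_on_imag_axis = abs(cmath.exp(-(10j) ** 2))           # e^{-z^2} at z = 10i is e^{100}
worst_f = max(abs(f(complex(x, y))) for x in range(-50, 51) for y in range(-50, 51))

print(f"|g| near i*pi       : {near_g_pole:.3e}")
print(f"|tanh| near i*pi/2  : {near_tanh_pole:.3e}")
print(f"|exp(-z^2)| at 10i  : {gauss_on_imag_axis:.3e}")
print(f"max |f| on the grid : {worst_f:.4f} (bound r = 1)")
```

Shrinking `eps` makes the first two values grow roughly like `1/eps`, while $|f(z)|$ stays below $r$ no matter how large $|z|$ becomes.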

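A simple function that satisfies all the above properties is

$$f(z) = \frac{z}{c + \frac{1}{r}|z|} \tag{20}$$

where $c$ and $r$ are real positive constants. This function has the property of mapping a point $z = x + iy = (x, y)$ on the complex plane to a unique point $f(z) = \left(x/(c + (1/r)|z|),\; y/(c + (1/r)|z|)\right)$ on the open disc $\{z : |z| < r\}$. The phase angle of $z$ is the same as that of $f(z)$. The magnitude $|z|$ is monotonically squashed to $|f(z)|$, a point in the interval $[0, r)$; in an analogous way, the real sigmoid and hyperbolic tangent functions map a point $x$ on the real line to a unique point on a finite interval. Parameter $c$ controls the steepness of $|f(z)|$. The partial derivatives $u_x$, $u_y$, $v_x$, and $v_y$ are

$$u_x = \frac{c + \frac{y^2}{r|z|}}{\left(c + \frac{1}{r}|z|\right)^2}, \quad u_y = v_x = \frac{-\frac{xy}{r|z|}}{\left(c + \frac{1}{r}|z|\right)^2}, \quad v_y = \frac{c + \frac{x^2}{r|z|}}{\left(c + \frac{1}{r}|z|\right)^2} \qquad \text{if } |z| \neq 0,$$
$$u_x = v_y = \frac{1}{c}, \quad u_y = v_x = 0 \qquad \text{if } |z| = 0. \tag{21}$$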
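The partial derivatives of (20), and the properties they are meant to guarantee, can be checked numerically. The minimal Python sketch below is our own illustration: the constants $c = 1$, $r = 2$ and the sample points are arbitrary. It compares the closed-form partials with central differences, evaluates the determinant $u_x v_y - v_x u_y$ that property 5 requires to be nonzero, measures how far the partials are from satisfying the Cauchy-Riemann equations (a nonzero gap means $f$ is not complex-differentiable there, consistent with property 4), and confirms that the values used at $z = 0$ match the partials just off the origin.

```python
def f(z, c, r):
    """Activation (20)."""
    return z / (c + abs(z) / r)


def partials(z, c, r):
    """(u_x, u_y, v_x, v_y) per (21), using the limiting values at z = 0."""
    a = abs(z)
    if a == 0.0:
        return 1.0 / c, 0.0, 0.0, 1.0 / c
    x, y = z.real, z.imag
    d2 = (c + a / r) ** 2
    cross = -x * y / (r * a) / d2  # u_y and v_x coincide
    return (c + y * y / (r * a)) / d2, cross, cross, (c + x * x / (r * a)) / d2


def numeric_partials(z, c, r, h=1e-6):
    """Central differences of f along x and along y."""
    fx = (f(z + h, c, r) - f(z - h, c, r)) / (2 * h)
    fy = (f(z + 1j * h, c, r) - f(z - 1j * h, c, r)) / (2 * h)
    return fx.real, fy.real, fx.imag, fy.imag  # u_x, u_y, v_x, v_y


c, r = 1.0, 2.0  # arbitrary positive constants
checks = []
for z in [0.3 + 0.4j, -2.0 + 0.5j, 0.05 - 0.1j]:
    ux, uy, vx, vy = partials(z, c, r)
    err = max(abs(p - q) for p, q in zip((ux, uy, vx, vy), numeric_partials(z, c, r)))
    det = ux * vy - vx * uy               # property 5: must be nonzero
    cr_gap = abs(ux - vy) + abs(uy + vx)  # zero only where Cauchy-Riemann holds
    checks.append((z, err, det, cr_gap))
    print(f"z={z}: max err={err:.1e}, det={det:.4f}, CR gap={cr_gap:.4f}")

# The special values at z = 0 agree with the partials just off the origin.
near = partials(1e-9 + 1e-9j, c, r)
origin_gap = max(abs(p - q) for p, q in zip(near, partials(0j, c, r)))
print(f"origin continuity gap: {origin_gap:.1e}")
```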

IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS-11: ANALOG AND DIGITAL SIGNAL PROCESSING, VOL. 39, NO. 5 , MAY 1992

333

TABLE I

Input Pattern

(and Desired Output)

Actual Outputs After 1500 Epochs

((1, O), (0,O), (0,O))

{CO,, O), (1, O), (030))

{(O,O), (O,O), (0, - 1))

((0.9166E + 00,0.4907E - 03), (0.4681E - 01,0.2388E - 02),

(-0.3326E - 02,0.4553E - 01))

{(-0.2776E - 01, - 0.2885E - Ol), (0.9136E + 00, - 0.7155E - 03),

(0.2145E - 01, - 0.2797E - 01))

((0.3028E - 01, - 0.3061E - 01),(-0.3409E - 01,0.1932E - Ol),

(0.1474E - 02, - 0.9148E + 00)}

1.8

1.6

1.4

i.

0

wY

1.2

1

0.8

0.6

0.4

0.2

0

0

50

1 0 0 1 5 0 2 0 0 2 5 0 300 3 5 0 4 0 0 4 5 0 5 0 0

Epochs

Fig. 1. A run of CDBP

R1

network with c = 1, r = 1 , (Y = 0.2, and momentum rate 0.9. The

actual outputs after 1500 epochs appear in Table I.

Since sinusoidal signals at the same frequency (phasors) are

commonly represented and manipulated as complex numbers, it is

natural to use CDBP for processing them. CDBP-trained neural

networks with activation function (20) can be directly translated to

analog circuits. Such circuits, though nonlinear, yield outputs at the

same frequency (see below).

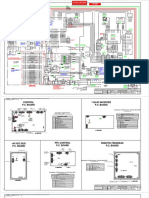

A simple circuit diagram for the complex neuron j with

activation function f(z) when c = 1 and r = 1 appears in Fig. 2.

The inputs and output are voltages. The quantity I z j I represents

the amplitude of the sinusoidal voltage z j and in the circuit is

obtained as a dc voltage using a peak detector (demodulator) with

input -zj. If the ripple associated with the output of the peak

detector is unacceptable, then the peak detector should be followed

by a low-pass filter [lo]. Each weight Wj, represents admittance.

Proposition 4: If all input signals to a neural network circuit

consisting of neurons of the type that appears in Fig. 2 are sinusoids

at the same frequency w , then the output(s) is (are) sinusoid(s) at the

same frequency w . .

Proof: It is sufficient to consider a single neuron (Fig. 2).

Given that the Xj,s are sinusoids at the frequency w , certainly the

output -zj of the summer (first op-amp) is a sinusoid at frequency

w . The remaining circuitry inverts -zj and scales its magnitude by

a factor l/(c ( l / r ) I z j I); thus, there is no effect on the frequency and the output f ( z j )is also a sinusoid at frequency W .

It is interesting to note that the activation function f(z ) , z E R,

being a sigmoid, can be used in the usual (real) backpropagation

[ l l ] , [12]. In that case the neuron circuit of Fig. 2 can also be used,

with the exception that the peak detector is replaced with a full-wave

rectifier in order to obtain the absolute value I z I from the now real

-2; weights Wj, represent conductance.

Fig. 2. Circuit for complex neuron. The output voltage is f ( z J ) =

n

zJ /U

+ I zJ I ), where zJ =

r=l

x,,w,,.

if

IzI = O

The special definitions of the partial derivatives when I z I = 0,

i.e., at z = (O,O), correspond to their limits as z + (0,O). Being

thus defined, the singularitks at the origin are removed and all

partial derivatives exist and are continuous for all z E

We successfully tested the CDBP algorithm on a number of

training sets. The error-versus-epochs graph of a simulation example appears in Fig. 1. By error we mean the sum of the errors

due to each pattern in the training set (1). A form of the encoding

problem [2] was used: The desired output of each of the three input

patterns in Table I is the input pattern itself. We used a 3-2-3

e.
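The argument of Proposition 4 can be illustrated in phasor terms with a small Python sketch. The assumptions are ours, not the letter's: $c = r = 1$ as in Fig. 2, a hypothetical 50 Hz excitation, hypothetical weights and input phasors treated as complex gains, an ideal summer, and a peak detector idealized as the maximum of the sampled waveform. The sketch inverts and scales the summer output by $1/(c + \text{peak}/r)$ and compares the result with the waveform $\mathrm{Re}(f(z_j)e^{i\omega t})$ predicted by the phasor $f(z_j)$.

```python
import cmath
import math

C, R = 1.0, 1.0                  # c = r = 1, as in Fig. 2
OMEGA = 2 * math.pi * 50.0       # hypothetical 50 Hz excitation


def f(z):
    """Activation (20) applied to a phasor."""
    return z / (C + abs(z) / R)


def waveform(phasor, t):
    """Instantaneous value Re(phasor * e^{i omega t})."""
    return (phasor * cmath.exp(1j * OMEGA * t)).real


W = [0.8 - 0.3j, -0.2 + 0.6j]             # hypothetical weights
X = [1.0 + 0.0j, 0.5 * cmath.exp(0.7j)]   # hypothetical input phasors
z = sum(w * x for w, x in zip(W, X))      # net-input phasor z_j, eq. (2)

ts = [k / 10_000 for k in range(400)]     # two periods sampled at 10 kHz
minus_z = [-waveform(z, t) for t in ts]   # summer (first op-amp) output, -z_j
peak = max(abs(v) for v in minus_z)       # idealized sampled peak detector
scale = 1.0 / (C + peak / R)
out = [-v * scale for v in minus_z]       # invert and scale
ref = [waveform(f(z), t) for t in ts]     # phasor prediction f(z_j)
deviation = max(abs(a - b) for a, b in zip(out, ref))

print(f"|z| = {abs(z):.4f}, detected peak = {peak:.4f}")
print(f"max deviation from Re(f(z) e^(i w t)): {deviation:.2e}")
```

Because the output is the summer waveform multiplied by a positive constant, it keeps the frequency $\omega$; the small residual deviation comes only from sampling the peak.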

V. CONCLUSION

CDBP is a generalization of the usual (real) backpropagation to handle complex-valued inputs, weights, activation functions, and outputs. Unlike previous attempts, the CDBP algorithm was derived so that it can accommodate suitable activation functions, which were discussed. A simple suitable activation function was found and the circuit implementation of the corresponding complex neuron was given. The circuit can be used to process sinusoids at the same frequency (phasors).

REFERENCES

[1] B. Widrow, J. McCool, and M. Ball, "The complex LMS algorithm," Proc. IEEE, vol. 63, pp. 719-720, 1975.

[2] D. E. Rumelhart, G. E. Hinton, and R. J. Williams, "Learning internal representations by error propagation," in Parallel Distributed Processing, Volume 1: Foundations, D. E. Rumelhart and J. L. McClelland, Eds. Cambridge: MIT Press, 1986, pp. 318-362.

[3] B. Widrow and M. Hoff, "Adaptive switching circuits," in 1960 IRE WESCON Convention Record, part 4. New York: IRE, 1960, pp. 96-104.

[4] M. Dentino, J. McCool, and B. Widrow, "Adaptive filtering in the frequency domain," Proc. IEEE, vol. 66, pp. 1658-1659, Dec. 1978.

[5] M. S. Kim and C. C. Guest, "Modification of backpropagation for complex-valued signal processing in frequency domain," in IJCNN Int. Joint Conf. Neural Networks, pp. III-27-III-31, June 1990.

[6] H. Leung and S. Haykin, "The complex backpropagation algorithm," IEEE Trans. Signal Processing, vol. 39, pp. 2101-2104, Sept. 1991.

[7] R. V. Churchill and J. W. Brown, Complex Variables and Applications. New York: McGraw-Hill, 1948.

[8] F. P. Greenleaf, Introduction to Complex Variables. W. B. Saunders, 1972.

[9] B. Kosko, Neural Networks and Fuzzy Systems. Englewood Cliffs, NJ: Prentice-Hall, 1992.

[10] J. Millman, Microelectronics. New York: McGraw-Hill, 1979.

[11] P. Thrift, "Neural networks and nonlinear modeling," Texas Instruments Tech. J., vol. 7, Nov.-Dec. 1990.

[12] E. B. Stockwell, "A fast activation function for backpropagation networks," Tech. Rep., Astronomy, Univ. Minnesota, May 1991.

Reply to Comments on "Pole and Zero Estimation in Linear Circuits"

Stephen B. Haley and Paul J. Hurst

This reply appears on p. 419 of the May 1992 issue of IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS-I: FUNDAMENTAL THEORY AND APPLICATIONS.

IEEE Log Number 9200418.
