Joint Robot-Human Logistics and Assembly in Aerospace

Team: MTC-LU-UoB-Airbus

Document date: 9 February 2015

________________________________________________________

Project acronym: EuRoC

Project full title: European Robotics Challenges

Call identifier: FP7-2013-NMP-ICT-FoF

Grant agreement no.: CP-IP 608849

Starting date: 1 January 2014

Duration in months: 48

Lead beneficiary: CREATE

Project web site: www.euroc-project.eu

[confidential]

Annex I Proposal template

Page 1 of 26

FP7-2013-NMP-ICT-FoF

Guide for proposal preparation

CP-IP 608849

CONTENTS

GENERAL INFORMATION
    Team name
    Challenge option
    Challenge Team Leader (CTL)
    Participants (1 table for each organization, including that of CTL)
    Summary

SCIENTIFIC AND TECHNICAL QUALITY
    Proposal target
    Progress beyond the state-of-the-art
    S/T methodology and associated work plan

IMPLEMENTATION
    Individual participants
    Description of the partnership
    Overall Resources Costs and funding

IMPACT
    Expected results
    Exploitation plan of results and management of knowledge and of intellectual property
    Ethical issues

APPENDIX: Articles II.26, II.27, II.28 and II.29 Annex I of the EuRoC Grant Agreement


GENERAL INFORMATION

Team name

MTC-LU-UOB-Airbus

Challenge option

Challenge 1 Reconfigurable Interactive Manufacturing Cell

Challenge 2 Shop Floor Logistics and Manipulation

Challenge 3 Plant Servicing and Inspection

Challenge Team Leader (CTL)

Name: Jeremy Hadall
Organization: Manufacturing Technology Centre
Phone Number: +44 2476701600
E-mail: jeremy.hadall@the-mtc.org

Participants

Organization name: Manufacturing Technology Centre
Key person: Jeremy Hadall
Street Name: Pilot Way, Ansty Park
House Number:
City: Coventry
ZIP Code: CV7 9JU
Country: United Kingdom
Phone Number: +44 2476701600
E-mail: jeremy.hadall@the-mtc.org
Website: www.the-mtc.org
PIC (1): 959490342
Role: Research Team

Organization name: Loughborough University
Key person: Dr Laura Justham
Street Name: Wolfson School of MME
House Number: Loughborough University
City: Loughborough
ZIP Code: LE11 3TU
Country: UK
Phone Number: 01509 227553
E-mail: L.Justham@lboro.ac.uk
Website: http://www.lboro.ac.uk/departments/mechman/staff/laura-justham.html
PIC (1): 999990752
Role: Research Team


Organization name: University of Birmingham
Key person: Professor Jeremy L Wyatt
Street Name: School of Computer Science
House Number: Edgbaston
City: Birmingham
ZIP Code: B15 2TT
Country: UK
Phone Number: +44 121 414 4788
E-mail: jlw@cs.bham.ac.uk
Website: www.cs.bham.ac.uk/~jlw
PIC (1): 999907526
Role: Research Team

Organization name: Airbus
Key persons: Dr. Ingo Krohne (Technology Manager Automated Assembly); Dr. Robert Alexander Goehlich (Manufacturing Engineering Project Manager); Dr. Hans Pohl (Contract Manager)
Street Name: Kreetslag
House Number: 10
City: Hamburg
ZIP Code: 21129
Country: Germany
Phone Number: +49 4074361667; +49 4074364054; +49 4074372822
E-mail: ingo.krohne@airbus.com; robert.goehlich@airbus.com; hans.pohl@airbus.com
Website: www.airbus.com
PIC: 999964756
Role: End User

The above information, together with the summary of the proposal, must be provided through the

web form available on http://www.euroc-project.eu. Instructions are available on the web. For

further information, please contact EuRoC at info@robotics-challenges.eu.


Summary

Human-robot collaboration on the shop floor presents a significant set of challenges. In this project we will develop methods that enable a shop floor mobile robot to assist a human in logistical and assembly tasks, by bringing tools and parts and by assisting in the assembly process, so as to reduce the strain on the human worker. In structure assembly, equipment installation and final assembly of an aircraft, for example, many operations are repetitive and can involve manipulating quite heavy tools for long periods of time, sometimes in un-ergonomic positions. We will develop a shop floor application of the EuRoC platform to enable safer, faster and more ergonomic operations on an airframe by using a robot in collaboration with a human.

The scenario is an assembly operation on the inside or outside of an airframe. The robot will pick and carry a large number of fixing elements or assembly parts and the relevant tools from a storage area and bring them to a worker at the airframe assembly. The robot and the human will then work together to apply the fixing elements and parts. The key steps in the scenario are:

i) Identifying and grasping required tools and materials from a work-surface.

ii) Placing the tools and materials on the mobile platform in a safe configuration for transport.

iii) Navigating to the worker, and waiting alongside.

iv) Grasping materials or a tool, taking into account wrenches to be applied in its operation.

v) Positioning the material or presenting the tool for co-manipulation by the human, who will then apply it to each fixing to perform the task.

vi) The robot will learn the human-applied forces, and assist the human by adapting the end effector forces over time (i.e. in a gravity compensated mode).

vii) The robot will interpret foot motion in order to follow the human as they walk along the airframe to enable continued fixing.

This scenario covers a variety of important human-robot collaboration skills in a shop floor logistics and manufacturing setting. Our contention is that, to be useful in many practical settings, it is as important to allow the robot to be used as an intelligent tool guided by the human as it is for the robot to operate fully autonomously, e.g., for picking and moving parts. This proposal is predicated on the notion that, to achieve fluid interaction, much of the technical challenge lies in the robot knowing when to switch from one mode to the next on the basis of interpreting the human's intentions from sensory input. The key scientific and technical challenges that must be met, and which go beyond the state of the art, are:

i) Flexible Grasping. Grasping and fetching of parts and tools of possibly novel shape. We will build on our recent breakthrough work on this.

ii) Force based Human-Robot interaction. The robot works as a multiplier of the human's own abilities, co-applying forces at the end effector in the desired way.

iii) Adaptive Interaction and Machine Learning. Learning will be used to allow the human to train the robot over time to apply assistive forces optimally. In addition, the robot must interpret the human's body motions to correctly follow the human.


SCIENTIFIC AND TECHNICAL QUALITY

Proposal Target. Challenge 2 is concerned with demonstrating in a real industrial environment the benefits of mobile manipulation, with specific focus on the RTD issues involved in establishing safe and effective human-robot collaboration, so that robot co-workers can operate effectively in an unstructured environment. The principal characteristic of real shop floor environments is that the robot must work alongside humans who already perform a huge variety of manipulation tasks. These include i) fetching tools and parts, ii) carrying them to the work-station, iii) orientating parts relative to the main assembly, iv) part mating, v) applying tools to parts, and vi) applying and manipulating deformable materials such as glues or surface finishes. The human workers perform these tasks in a largely unpredictable sequence, due to adaptation to other workers, availability of parts and other uncontrollable factors. Realistically, therefore, very few of these tasks are fully autonomously executable by a robot using today's state of the art. Most involve complex, context-sensitive decision-making as well as force-based dexterous manipulation that is far beyond the state of the art in autonomous robotics.

One approach to this is to apply the robot only to the parts of the task it can perform fully autonomously, and let the human perform the rest. But this is often inefficient. In addition, real companies' problems are ones where logistics and assembly are closely intertwined and where humans perform tasks with heavy tools in un-ergonomic positions, and it is typically not the case that automating the simple pick and place operations alone brings much economic and ergonomic benefit. Thus, to ensure wide and early uptake of mobile manipulation, we need to create robots that can be used for a much wider range of purposes than their purely autonomous abilities allow. In this team we place particular focus on using the robot not just for logistics, but also to eliminate as far as possible the physically stressful operations common in manufacture. This is an ideal scenario for the challenge hardware, since it exploits force-controlled manipulation.

We will develop a robot co-worker that switches between different levels of autonomy as required, with an emphasis on the human being able to use the robot as an intelligent, force-sensitive tool. We call this multi-level compliant autonomy. At one end the robot is fully autonomous for operations such as pick and place. At the other end the robot has no autonomy and is merely a compliant, gravity compensated tool and/or material holder held by the human, entirely under their direct manual control, achieving ergonomic benefit simply by removing the weight of the tool. In between, it uses learning to act as an adaptive force-multiplying tool: it learns the forces applied by the human to perform a task and then replicates those as closely as possible, allowing the human to achieve maximum ergonomic benefit by minimising the forces they apply to achieve a task. In this way the robot can also acquire a statistically significant number of force profiles for each task. This will in turn allow future versions of the robot to learn to perform the task autonomously after receiving sufficient training, and as its perceptual abilities increase.

Thus the approach is intended to create impact in industry by using the EuRoC hardware in a way that obtains the maximum economic benefit. Only in this way will we encourage significant investment by companies, by increasing the return on investment (ROI) beyond what pure autonomy would allow. The creation of such a royal road to adoption of mobile manipulation on the shop floor is a critical goal of EuRoC.


Figure 1: Human workers perform hours of repetitive fixing/riveting operations within the airframe in difficult access areas. Examples of the airframe before and after panel fixing are given above. (Source: Airbus)

Our specific objectives to achieve this vision are:

O1 Flexible Autonomous Grasping. We will enable grasping of parts and tools of novel shape. We will build on our recent breakthrough work on this. [T1 Freestyle]

O2 Force based Human-Robot interaction. We will develop methods where the robot works as a multiplier of the human's own abilities, co-applying forces at the end effector in the desired way and doing so in a safe manner. [T2, T3]

O3 Adaptive Force Based Interaction. Learning will be used to allow the human to train the robot over time to apply assistive forces optimally. In addition, the robot must interpret the human's body motions to correctly follow the human in a safe way, using force signals as feedback from the human to the robot. [T2, T3]

O4 Requirements, acceptance and dissemination. To ensure the appropriateness of the technology we will spend considerable effort in understanding the ways that human workers currently operate tools to carry out their tasks. We will then identify how robot collaboration will be best suited to support the human worker for each task. [T3]

Stage II: Showcase Round: End User Driven Task

This intermediate task will demonstrate a robot working alongside a human using our force-based interaction approach to perform a task. The task will require the robot to perform initially in gravity compensation mode, learning the forces applied by the human and adjusting the forces it applies so that the human can achieve easier working. The human will grasp the robot arm so as to guide it, pushing the tool into place and sensing the forces applied. As the human operates, the robot will build a force-based model of the activity by measuring the reaction forces applied at its joints and at the tool centre point. In the next stage the robot will adapt the forces it applies during the task to allow the human to accomplish the task while applying less force. Critical questions here are how to adapt the applied force, and how the human adapts to this change in robot behaviour. We will show a system that enables the human to perform a simple task, such as riveting, for a specific application. This task will be complementary to the demonstration in the free-style round, where we will develop and demonstrate our autonomous dextrous grasping technology.

Stage III: Field Tests: The Use Case

Motivation: The end-user is Airbus, and their task is concerned with airframe assembly. Airframe assembly has several properties that have driven the formulation of our technical approach. First, many of the workers have to lift tools for extended periods of time. Even with quite light tools this can cause ergonomic strain. In addition, some of the operations are in un-ergonomic positions. For example, they might involve hundreds of riveting or fixing operations above the head of the worker for a long stretch of time. Alternatively, they might involve applying tools within a physically highly constrained space. It is of considerable and direct economic and ergonomic benefit to Airbus to begin to automate these tasks, but we believe it is not yet possible or desirable to fully automate them. This is the motivation for the multi-level autonomy approach. In addition, Airbus are clear that their shop floor does not benefit from merely automating the logistics aspects, but from combining this with an approach to human-robot co-working.

Figure 2: Two examples of the fixings to be applied: the Hi-Lite collar and Hi-lock pin, and on the right a typical tool that must be aligned around the fixing element.

The specific stages in the Use Case Scenario are as follows.

i) The robot travels to a tool/part station, grasping the tools and bags of fixings.

ii) The robot navigates to the worker and waits until the task start is indicated.

iii) It grasps a tool offered by the human, taking into account the typical wrenches to be applied in its operation.

iv) The robot will present the tool for co-manipulation by the human, entering a gravity compensation mode. The human will then apply it to perform the task.

v) The human will guide the robot to the lock pin in order for the robot to perform the task. Machine learning will take place, considering the human-applied forces and trajectories, to enable the robot to develop the capability to become autonomous.

S&T Issues. This proposal contains a number of challenges with regard to automation and human-robot collaboration within the manufacturing domain. This is particularly pertinent within high value manufacturing, such as the aerospace industry, where small batches of products are manufactured, resulting in a highly labour-intensive process. The main technical challenges identified within this proposal are:

1 Autonomous Grasping. The robot must grasp tools and parts reliably. It must identify the correct object, and grasp tools in the face of object shape variation.

2 Human Sensitive movement. The robot must navigate through the shop floor, then locate, meet and follow the human as they work. This requires movement interpretation.

3 Learning and Assistance in Joint Human-Robot Tool Use. Machine learning of human tool use is a challenging problem, particularly when the trajectories and forces vary, or when the forces change suddenly during contact between the tool and the work-piece. Correct application of assistive forces is also an S&T challenge.

4 Multi-level Autonomy. This will be handled using a behaviour based approach to switch between autonomy settings based on triggers from the human co-worker. These will include the ability to stop the robot and to indicate the task stage.
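To make the idea of trigger-driven switching between autonomy levels concrete, the following is a minimal sketch only. It uses a table-driven state machine as a simplified stand-in for the behaviour based approach, and the mode names, trigger names and transition table are all hypothetical; the proposal specifies only that triggers come from the human co-worker and include stopping the robot and indicating the task stage.

```python
from enum import Enum, auto


class Mode(Enum):
    AUTONOMOUS = auto()    # e.g. pick, place and navigate without guidance
    GRAVITY_COMP = auto()  # robot only carries the weight of the tool
    ASSISTIVE = auto()     # robot adds learned assistive forces
    STOPPED = auto()       # robot halted by the co-worker


# Hypothetical trigger names and transitions, chosen for illustration only.
TRANSITIONS = {
    (Mode.AUTONOMOUS, "task_start"): Mode.GRAVITY_COMP,
    (Mode.GRAVITY_COMP, "assist_on"): Mode.ASSISTIVE,
    (Mode.ASSISTIVE, "assist_off"): Mode.GRAVITY_COMP,
    (Mode.GRAVITY_COMP, "task_done"): Mode.AUTONOMOUS,
    (Mode.ASSISTIVE, "task_done"): Mode.AUTONOMOUS,
    # Assumption: after a stop, resume in the passive mode, not an active one.
    (Mode.STOPPED, "resume"): Mode.GRAVITY_COMP,
}


def next_mode(mode: Mode, trigger: str) -> Mode:
    """Return the mode after a trigger; "stop" is honoured from every mode."""
    if trigger == "stop":
        return Mode.STOPPED
    # Unknown or inapplicable triggers leave the mode unchanged.
    return TRANSITIONS.get((mode, trigger), mode)


def run(triggers, start=Mode.AUTONOMOUS):
    """Apply a sequence of triggers and return every mode visited."""
    modes = [start]
    for trigger in triggers:
        modes.append(next_mode(modes[-1], trigger))
    return modes
```

For example, `run(["task_start", "assist_on", "stop", "resume"])` would step from autonomous operation through gravity compensation and assistance, halt on the stop trigger, and resume in gravity compensation.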


Progress beyond the state-of-the-art

Grasping. In this project we will build on machine learning methods for grasping to achieve mobile manipulation with a dexterous hand. The key is to be able to learn dexterous grasps that generalise to new objects. Previous work utilises common object (Saxena et al., 2008; Detry et al., 2013; Herzog et al., 2014; Kroemer et al., 2012). This works well for non-dexterous hands. Recently we (Kopicki et al., 2014) showed how to generalise grasping across object categories with dexterous hands. Our technique was able to grasp 45 unseen objects from 5 example grasps. Here we will transfer it to the Shadow Hand, using a standard IK solver to place the robot base in a position where the object is graspable. The method relies on a depth cloud, which we will obtain from the depth sensor on the challenge platform.

Learning and Assistance in Joint Human-Robot Tool Use. The idea of a human directly manipulating a compliant robot arm is a long-standing one. It has been shown how to determine the forces during a contact interaction between a human and a Kuka LWR robot, and how the robot can modify its control law to keep the contact forces between the human and the robot safe (Magrini 2014). In this work we will exploit this scheme to determine the contact forces. We will go beyond it to learn the contact forces at the tool centre point during application of a tool. This requires separation of the contact forces exerted by the human from those at the tool contact. We will achieve this by combining the above method with an additional inertial tensor estimate from a force-torque sensor at the robot wrist.

Aerospace Automation. Aerospace manufacture is still largely dominated by human operators assembling parts and products by hand. This results in increased variability in product quality (Jamshidi 2010). There is R&D to increase the level of automation within the domain, e.g. within wing (Irving 2014) and engine manufacture (Tingelstad 2014). However, due to the size of the component parts being manufactured, the difficulty of manipulating a robot into hard-to-reach areas and the risks associated with robots working overhead, the uptake of robots has not been widespread or wholly successful. We will move beyond this by building the mobile platform into a human-governed process.

S/T methodology and associated work plan

Overall Strategy

We have split the autonomous and joint human-robot aspects of this project across Tasks T1 (Freestyle) and T2 (Showcase) respectively. In T3 we will integrate the solutions to each part and thoroughly field test them at Airbus in Hamburg. The sub-tasks within these are organised as described in detail in the Task tables.

Project Management. The research partners will work closely with Airbus to ensure that the solution adds value to their manufacturing process. We will have regular remote meetings to monitor project progress, with the team leader ensuring the work-plan is being followed. We will use version control (GitHub) to develop the best possible code-base. We will hold two face-to-face challenge team meetings during stage II to coincide with internal presentations and key deliverables and milestones, i.e. at month 10 and month 15.


Table 1a: Task list

Task no. | Task title                                           | Lead partic. short name | Person-months | Start month | End month
T1       | Round (a) of Stage II: Benchmarking+free-style round | UoB                     | 37            | Mo1         | Mo10
T2       | Round (b) of Stage II: Showcase round                | LU                      | 20            | Mo11        | Mo15
T3       | Stage III (Field tests Pilot Experiments)            | MTC                     | 36            | Mo18        | Mo26
TOTAL    |                                                      |                         | 93            |             |

Tables 1b: Brief description of tasks

Task no.: T1
Task title: Round (a) of Stage II: benchmarking+free-style (1)
Start month: Mo1
End month: Mo10

Participant no.               |     |    |     |
Participant short name        | MTC | LU | UoB | Airbus
Person-months per participant | 12  | 12 | 12  |

Objectives

Solve the use-case-driven task defined by the challenge host (benchmarking round)

Solve a task chosen by the team (freestyle round)

Description of work and role of participants

Here we describe the free-style task chosen by the team. This is a tool grasp and carry task. The platform will grasp the necessary tools and bags of parts sitting on a work-surface and navigate with them to a human worker on the shop floor. We will work with the Challenge hosts to enable a dextrous manipulator (Shadow Hand) to be integrated with the challenge platform. This dextrous manipulator will subsequently be employed in our End Use Case to allow a firm grasp of tools designed for human hands. For the free-style task we will integrate this hand with our dexterous grasping software (UoB) for novel objects. We will devote 18 PMs to the Benchmarking tasks and 18 PMs to the FreeStyle task. The specific task steps are as follows:

Subtask 1.1 Integration of Shadow Hand with Challenge Platform. This has been raised with the EuRoC consortium and it has been agreed that this should be feasible for the free-style rounds. The consortium, led by MTC, will work with DLR to integrate our Shadow Hand with the Challenge platform. (4PMs) (MTC)

Subtask 1.2 UoB grasping software integration with Shadow Hand. The UoB grasping software packages (GRASP and GOLEM) are integrated with PCL, and functional on the DLR-HIT hand 2, the Schunk dextrous hand, and the Justin three fingered hand. We will integrate this software with the Shadow Hand, writing the necessary device drivers or interfaces using the ROS stack. The hand will be fully tested at UoB and MTC. The drivers for the hand will allow compliant control, using a control framework developed at UoB. (5PMs) (UoB)

Subtask 1.3 Integration of Grasping software with Challenge Platform Perception. The perceptual component of the grasping software is a PCL based library that uses point clouds to represent the object to be grasped. We will integrate this with the challenge platform stereo camera. (5PMs) (UoB)


Subtask 1.4 Integration and Testing of Grasping software with Mobile motion planning. A planner is required that coordinates the base pose with the grasping planner for the arm/hand. We will write an interface between the two that achieves a feasible position next to the tool and part station. (4PMs) (LU, MTC)

Subtask 1.5 Grasp Testing. We will test the grasping abilities of the system based on the software from Subtasks 1.1-1.4. We will use a force-based assessment of grasp quality, and plan a re-grasp if the grasp is not promising. Tools will be presented upright to afford grasps. (5PMs) (UoB, MTC)

Subtask 1.6 Integration of Person Search. We will develop a suitable person detection routine, then test and integrate it on the challenge platform so that the platform can identify and approach workers. (5PMs) (LU, MTC)

Subtask 1.7 Whole system testing. We will system test on a variety of tools from our end-user scenario, and show the ability to grasp these and the parts bag and take them to a human co-worker. (5PMs) (MTC, LU, UoB)

Risk Management: We retain 4 PMs of effort to allocate as necessary as a time contingency.

Roles: In the Freestyle task UoB will provide expertise in grasping software, and also in point cloud processing. LU and MTC will develop the mobile motion planning to support grasping and person search. The integration between the Shadow Hand and the challenge platform will be handled by MTC in conjunction with the Challenge Hosts. All partners will contribute to system testing.

Task no.: T2
Task title: Round (b) of Stage II: showcase round (1)
Start month: Mo11
End month: Mo15

Participant no.               |     |    |     |
Participant short name        | MTC | LU | UoB | Airbus
Person-months per participant |     |    |     |

Objectives

Solve the end-user-driven task aimed at showcasing customizability under realistic manufacturing conditions

Description of work and role of participants

During this task the precise end user requirements for T3 will be captured. In addition, the joint human-robot tool use will be showcased on a demonstrator at the MTC. The tool will be held by the robot but guided by the human to perform a task, such as riveting or drilling, where the tools have to be held, in many cases, in un-ergonomic positions by the human for long periods of time. The robot will hold the tool in a known orientation, such that the human may guide it to the lock-pin position, ready to perform the task. The robot will initially operate in a passive gravity compensation mode, but subsequently will apply assistive forces to support the human operator during task execution. Both the robot and human will have clear, complementary roles to improve the quality and speed of the combined process.

Subtask 1.1 Implementation of end user requirements into platform design

The consortium will work with the end user to ensure the demonstration airframe is accurate with respect to the current as-is scenario at Airbus. The platform and demonstration airframe will be commissioned, and a task analysis performed on the drilling and riveting tasks as they are currently performed by humans, and also on a panel placement task that will act as a fall-back. Any changes required to ensure safe co-working will be identified. We will thus characterise the requirements on the robot platform for the end-user-driven task. (2PMs) (LU, UoB, MTC, Airbus)

Subtask 1.2 Development of Gravity compensated tool support mode


The robot platform must be capable of grasping the tool and ensuring that it is held firmly for operation. This will be achieved using the grasp stabilisation software for the Shadow Hand that is currently in the ROS stack for the hand. The next step is to achieve gravity compensation given the additional mass near the end effector. To achieve this, the external forces imposed by the motion of the arm while holding the tool will be estimated by moving the tool through calibration motions, performed offline for a fixed set of tools. The human will then execute the task (drilling or riveting) using the robot in this gravity compensated mode. (2PMs) (LU, MTC, UoB)
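As an illustration of what such an offline calibration could compute, the sketch below estimates a tool's mass and centre of mass from wrist force-torque readings taken in a few static poses, then predicts the gravity wrench to be cancelled. This is a simplified, assumed formulation: it covers static poses only, it ignores sensor bias and the dynamic effects of arm motion that the subtask refers to, and all function names are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve the 3x3 linear system m x = b with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("calibration poses do not constrain the centre of mass")
    x = []
    for col in range(3):
        mc = [[b[r] if c == col else m[r][c] for c in range(3)] for r in range(3)]
        x.append(det3(mc) / d)
    return tuple(x)


def estimate_tool_load(gravity_dirs, forces, torques):
    """Least-squares tool mass (kg) and centre of mass (m) from static readings.

    gravity_dirs: unit gravity direction in the sensor frame, one per pose.
    forces, torques: measured wrench in the sensor frame, one per pose.
    """
    # Model f_i = m * G * d_i, so m = sum(f_i . d_i) / (G * sum(d_i . d_i)).
    mass = (sum(dot(f, d) for f, d in zip(forces, gravity_dirs))
            / (G * sum(dot(d, d) for d in gravity_dirs)))
    # Model tau_i = c x f_i, which implies (|f|^2 I - f f^T) c = f x tau.
    lhs = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0, 0.0, 0.0]
    for f, tau in zip(forces, torques):
        ff = dot(f, f)
        fxt = cross(f, tau)
        for r in range(3):
            rhs[r] += fxt[r]
            for c in range(3):
                lhs[r][c] += (ff if r == c else 0.0) - f[r] * f[c]
    return mass, solve3(lhs, rhs)


def gravity_wrench(mass, com, gravity_dir):
    """Wrench exerted by the tool's weight at the sensor, to be cancelled."""
    f = tuple(mass * G * d for d in gravity_dir)
    return f, cross(com, f)
```

At least two poses with non-parallel gravity directions are needed for the centre of mass to be determined; with fewer, `solve3` raises an error rather than returning an arbitrary answer.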

Subtask 1.3 Learning of force characteristics of tool use

Having performed in gravity compensation mode, the robot platform can learn the forces applied by the human for various tasks and manufacturing scenarios. This will be done by modelling the force-based activity of the human, measuring the reaction forces applied at the robot joints and at the tool centre point. The machine learning approach we will apply will learn the parameters of movement primitives describing the motion, and also the sequence of forces applied. The goal of this subtask is to teach the robot the characteristic force curves for each task to enable it to be more adaptive for sub-task 1.4. (8PMs) (LU, MTC, UoB)
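A characteristic force curve can be pictured as the typical force at each phase of the task, together with how much it varies between demonstrations. The sketch below is a deliberately simple stand-in for the movement-primitive learning described above: it time-normalises each demonstrated force trace by linear resampling and takes the per-sample mean and spread. The function names and the choice of resampling are assumptions for illustration.

```python
from statistics import mean, pstdev


def resample(trace, n):
    """Linearly resample a force trace (list of newtons) to n samples."""
    if n < 2:
        raise ValueError("need at least two samples")
    if len(trace) == 1:
        return [trace[0]] * n
    out = []
    for k in range(n):
        # Position of sample k on the original trace's index axis.
        pos = k * (len(trace) - 1) / (n - 1)
        i = min(int(pos), len(trace) - 2)
        frac = pos - i
        out.append(trace[i] * (1.0 - frac) + trace[i + 1] * frac)
    return out


def learn_force_curve(demos, n=5):
    """Return (mean, spread) of the force at each of n task phases."""
    aligned = [resample(d, n) for d in demos]
    # zip(*aligned) groups the k-th sample of every demonstration together.
    return [(mean(col), pstdev(col)) for col in zip(*aligned)]
```

A small spread at a given phase suggests the humans applied consistent forces there, which is where an assistive controller could most safely take over part of the effort.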

Subtask 1.4 Force assisted tool use

The robot will be able to adaptively sense the mode of operation and adjust its own force profile such that the human experiences easier working conditions. We will examine the effectiveness of predictive and feedback control to create the best experience of working with the tool. We will investigate how the human adapts to the robot-assisted manufacturing process. Throughout this task, the human will remain the skilled operator required to make decisions about the positioning of the robot TCP. (6PMs) (LU, MTC, UoB)
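One way to picture the combination of predictive and feedback control is a single-axis assistance law in which the robot takes over a fixed share of the learned force and then corrects for the effort the human is actually exerting. The sketch below is an assumption-laden illustration, not the controller to be developed: the `share`, `gain` and `limit` values and the function names are hypothetical.

```python
def clamp(value, low, high):
    return max(low, min(high, value))


def assistive_force(learned, human, share=0.6, gain=0.5, limit=40.0):
    """Force (N) the robot adds along the tool axis at one time step.

    learned: force expected from the learned curve at this step.
    human:   force currently measured from the human.
    share:   fraction of the learned force the robot takes over (predictive).
    gain:    correction applied to the human's excess effort (feedback).
    limit:   hard cap on the assistive force, as a safety bound.
    """
    predictive = share * learned
    # Positive when the human works harder than their expected share.
    excess = human - (1.0 - share) * learned
    return clamp(predictive + gain * excess, -limit, limit)
```

With these illustrative values, a learned force of 50 N and a measured human force of 30 N give 30 N of predictive assistance plus 5 N of feedback correction; larger demands are capped at the 40 N limit.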

Risk Management: We retain 2PMs of effort across the project partners as a time contingency.

Roles: In this round LU will provide expertise in the user requirements definition, machine learning and platform development. MTC will lead system integration and develop a test facility. UoB will contribute expertise in control and learning. Airbus will lead the task analysis with MTC.

Task no.: T3
Task title: Stage III (Field tests Pilot Experiments) (1)
Start month: Mo18
End month: Mo26

Participant no.               |     |    |     |
Participant short name        | MTC | LU | UoB | Airbus
Person-months per participant | 11  | 11 | 11  |

Objectives

Provide an initial solution to the Use Case task by sequencing the solutions developed in the Freestyle and Showcase Rounds.

Assess the assistive tool use idea and acceptance testing with workers.

System integration and on site proof of concept.

Description of work and role of participants

In this task we will focus on sequencing and integrating all the elements from the first two stages. In particular,
the Freestyle round will provide the grasping technology for the logistics aspects, and the Showcase round will
provide the assistive tool use. In this round we will thoroughly acceptance test the system at the Airbus
plant in Hamburg. This will also involve integration and systems testing of the whole system in situ.

Subtask 1.1 - Systems Integration and Testing (Factory Acceptance Test)

The consortium (led by MTC) will work with the end user and benchmark solution provider to integrate the

technologies developed through the Freestyle and Showcase rounds into one platform ready for testing at

Airbus Hamburg. Systems integration and testing will be carried out on the benchmark solution against a

factory acceptance testing schedule developed by the partners to fully test systems integration and operation.

(8PMs) (MTC, LU, UoB)

Subtask 1.2 - Field Trials Of Integrated Systems

Once the developed system has been fully tested at MTC, it will be transported to Airbus Hamburg for field
testing. Working with the end user, the challenger team will develop a test schedule and process to prove the
developed solution in the end user's environment. In particular these tests will focus on proving the grasping
and assistive tool concepts. At this stage, testing will be carried out with the consortium's researchers to ensure
safe operation. (8PMs) (MTC, LU, UoB, Airbus)

Subtask 1.3 - Acceptance Testing and System Adjustment

Following the initial field trials (and remedial actions) a full acceptance test will be carried out. These
tests will follow a prescribed Acceptance Test Schedule, giving a high level of confidence in the system's
capability. Should adjustments be required, these will be assessed for impact on the overall system and either
re-tested or a concession made for that particular part of the test. (8PMs) (MTC, LU, UoB)

Subtask 1.4 - Field Testing with Workers and Handover to Airbus

Following satisfactory passing of the acceptance test, workers from Airbus will be trained in the use of the

systems. The challenger team will remain on site during field-testing and handover to ensure a smooth

transition. To ensure that a full transition is made and to assess the impact of the technology, a transition

handover plan and check list will be developed. This will be reviewed over a period of two months to highlight

any issues or failings that may be addressed by the Challenger team whilst on site. The partners will develop an

assessment method to measure impact beyond the duration of EuRoC. (8PMs) (MTC, LU, UoB, Airbus)

Risk Management: As with other tasks, we retain 4PMs of effort to allocate as a time contingency.

Roles: In the Field Testing task, MTC will provide systems integration and factory testing capabilities, experience

and a test environment. LU and UoB will develop test procedures (with input from MTC and Airbus) and will

provide field testing operators. Airbus will provide a field test environment in their Hamburg facility and, in

Subtask 1.4, production operatives to carry out field testing of the developed solution. All partners will contribute to

system testing and will collaborate to develop a long term impact assessment process.

Table 1c: List of deliverables

D1   Report describing the activities of the team and achieved results in Round (a) of Stage II
     Task(s) involved: T1; Nature: R (1); Dissemination level: PU; Delivery date: Mo10

D2   Report describing the activities of the team and the achieved results in Round (b) of Stage II
     Task(s) involved: T2; Dissemination level: RE; Delivery date: Mo15

D3   Report describing the activities of the team and the achieved results in Stage III
     Task(s) involved: T3; Dissemination level: RE; Delivery date: Mo26

Table 1e: List of milestones

Ms1  Round (a) of Stage II completed
     Task(s) involved: T1; Expected date: Mo10
     Means of verification: Report describing the activities of the team and achieved results in
     Round (a) of Stage II completed. Mid-term evaluation successfully passed.

Ms2  Round (b) of Stage II completed
     Task(s) involved: T2; Expected date: Mo15
     Means of verification: Report describing the activities of the team and the achieved results in
     Round (b) of Stage II completed. End-user driven task successfully solved.

Ms3  Round III completed
     Task(s) involved: T3; Expected date: Mo26
     Means of verification: Report describing the activities of the team and the achieved results in
     Stage III completed. Final Challenge Workshop attended.

Table 1f: Summary of staff effort

Partic. no.   Partic. short name   Task 1   Task 2   Task 3   Total person months
              MTC                  12                11       29
              LU                   12                11       29
              UoB                  12                11       29
              Airbus
              Total                37       20       36       93

Table 1g: Risk assessment and contingency plan

Risk: Force assistance is unnatural with predictive method
Probability: Medium; Impact: High
Remedial actions: Revert to gravity compensation, or only apply additional forces during simple,
straight-line, higher-force moves.

Risk: Difficult access areas also too challenging for robot
Probability: Medium; Impact: Low
Remedial actions: Use the robot only for areas that it can reach, e.g. externally, to demonstrate
initial capability.

Risk: Robot arm cannot reach selected positions
Probability: Low; Impact: High
Remedial actions: Use only positions that are within reach of the robot arm.

Risk: Shadow hand can't be used with the Challenge platform
Probability: Medium; Impact: High
Remedial actions: We have access to a lightweight Pisa-IIT hand that is also strong and can grip the
tool well.

Risk: Robot is too slow to be of economic use to Airbus
Probability: Medium; Impact: High
Remedial actions: Investigate a lighter-weight platform post EuRoC. EuRoC only provides proof of concept.

Risk: Riveting use case is too difficult for robot
Probability: Medium; Impact: High
Remedial actions: Fall back to drilling, or panel placement (for which we would use suction cups, not
a hand).

Risk: Member of the consortium drops out of the challenge
Probability: Low; Impact: Medium
Remedial actions: The other members will be able to pick up the work.

Risk: Hardware unable to meet requirements of end user
Probability: Medium; Impact: Medium
Remedial actions: Relax requirements for proof of concept. Search for better follow-on hardware.

Risk: Health & safety prevents field testing
Probability: Low; Impact: Medium
Remedial actions: Safety risk assessments will be carried out in advance of field tests to reduce this
likelihood.

IMPLEMENTATION

Individual participants

Table 2a: Team participants

Participant 1: Manufacturing Technology Centre (MTC)

Brief description of the organisation:
As one of the founding members of the High Value Manufacturing Catapult, the Manufacturing Technology
Centre's aim is to develop and promote world class manufacturing processes and techniques for UK
industries. Working from a state of the art research facility, its expertise in intelligent automation
and manufacturing systems will be invaluable in solving the significant problems of creating a flexible
manufacturing system. www.the-mtc.org

Role in the project and main tasks:
MTC is the lead partner in the consortium, and will provide systems integration, expertise in aerospace
assembly, and lead T3 on Field Tests.

Relevant previous experience:
The MTC's Intelligent Automation team draws on over 200 person years of industrial automation experience
across a wide range of industries. Recent examples are the lead demonstration of the FP7 LOCOMACHS
project, advanced industrial robotic controls (including ROS-Industrial), metrology assisted robotics,
and the application of robotics to complex fabrications and assembly tasks. MTC has over 5 million of
industrial scale automation hardware for development and demonstration work, including the Shadow
Robot hand that will be integrated on the test platform for this challenge.

Profile of team members that will undertake the work:
Jeremy Hadall - holds a Masters in Engineering and has 15 years' experience in developing innovative
manufacturing systems, particularly advanced automation and robotics. He has worked with ABB, Tesco,
Rolls-Royce, Land Rover and BMW, and has significant experience in collaborative projects. He is chair
of the UK's High Value Manufacturing Catapult Automation Forum, sits on the Board of the EPSRC Centre
for Innovative Manufacturing, and is Chief Technologist for Automation at the MTC.

Richard Kingston - holds a BEng in Automotive Engineering and has 20 years' experience in industrial
robotics. He has worked on many robotics projects within the automotive and aerospace sectors for
companies throughout Europe and the USA. For the last 15 years he has worked on metrology and software
development, especially in relation to robotic applications. He previously worked on the COMET FP7
project in a software development role, providing integration between robot and metrology systems. He
is currently a Technical Specialist (Robotics) within the Intelligent Automation theme at the
Manufacturing Technology Centre.

Participant 2: Loughborough University (LU)

Brief description of the organisation:
Loughborough University (LU), www.lboro.ac.uk, is a leading UK research-intensive university. The
Wolfson School of Mechanical and Manufacturing Engineering (WSMME) holds two prestigious Queen's
Anniversary Prizes in High Value Manufacturing, and is ranked 2nd in the UK. The EPSRC Centre for
Innovative Manufacturing in Intelligent Automation (IMIA) is the EPSRC national centre for intelligent
automation research.

Role in the project and main tasks:
LU will lead Task 2 and support the other two tasks. The EPSRC Centre at LU has an established stream
of research on collaborative automation, which aims to enhance the use of existing worker skills via
robot collaboration.

Relevant previous experience:
The EPSRC Centre is a research collaboration between industry, academia and the MTC. The principal
industrial partners of the centre are from the aerospace industry, and the team at LU therefore has a
strong track record of working in this sector on numerous projects and challenges. Aerospace has a
number of specific requirements that are not prevalent in other industries, and the team at LU has
extensive expertise working alongside these industrial partners. In addition, the state of the art
laboratory based at LU has extensive industrial robotics equipment, both hardware and software, which
can be utilised in support of the challenge.

Profile of team members that will undertake the work:
All four members of the LU team are members of the EPSRC Centre for Innovative Manufacturing in
Intelligent Automation.

Dr. Laura Justham is a member of the EPSRC Centre's academic team and has been working on human-robot
collaboration, mobile robots in aerospace applications, and industrial computer vision systems for over
10 years.

Dr. Niels Lohse is a senior member of the EPSRC Centre and has extensive experience working on research
projects, including projects in the aerospace sector with Airbus and Rolls-Royce. Niels's research
interests include manufacturing system modelling, human-machine interaction, machine learning, and
adaptive systems.

Dr. Zahid Usman is a research associate with over 7 years' experience working on modelling and
simulation within a manufacturing environment.

Dr. Phil Ogun is a research associate with experience of machine vision tasks within the manufacturing
domain and the integration of intelligently automated solutions.

Participant 3

University of Birmingham (UoB)

Brief description of the organisation:
The University of Birmingham (www.bham.ac.uk) is one of the largest research-intensive universities in
the UK. The School of Computer Science is ranked 8th in the UK (REF 2014). The Intelligent Robotics
Laboratory (www.cs.bham.ac.uk/go/irlab) is a leading European lab in robotics and artificial
intelligence. The IRLab has expertise in robot vision (Leonardis), task planning (Hawes, Wyatt), motor
learning and multi-contact control (Mistry), software architectures for robotics (Hawes, Wyatt),
manipulation (Wyatt), and machine and robot learning (Mistry, Wyatt, Hawes, Leonardis). The IRLab will
draw on its experience in building systems that perform dextrous grasping, motor learning and
human-robot interaction (Burbridge, Hawes, Zito, Wyatt). The lab has a substantial code base for
mobility and manipulation, which is used across multiple projects, including several pieces of software
for integration (CAST, GOLEM) that are used beyond Birmingham. The laboratory has significant experience
in working with ROS, in designing integration frameworks linked with ROS, and in leading projects
involving large-scale software integration for autonomy.

Role in the project and main tasks:
UoB will lead T1, and in particular will lead the work on autonomous grasping. Birmingham will also
contribute to the work on learning of trajectories and force-torque patterns for tool use.

Relevant previous experience:
UoB has significant experience in robotics projects, having coordinated three European projects (FP6:
CoSy; FP7: CogX, GeRT, PacMan, CodyCo; H2020: Cogimon). The relevant experience we will bring to this
project is our experience of grasping (GeRT, PacMan), including results from the Boris manipulation
system, which is able to grasp novel objects from one-shot learning. In addition we will bring
experience in force-based manipulation from PacMan, CodyCo and Cogimon, with an emphasis on learning
interactions.

Profile of team members that will undertake the work:
Professor Jeremy L Wyatt has published more than 90 papers on robot task planning, motion planning,
robot learning, manipulation, vision and machine learning. He has won two best paper awards, has career
funding of 10m, coordinated major EU projects such as PacMan and CogX, and participated in three more
(CoSy, GeRT, Strands).

Dr Nick Hawes works on the application of AI to robots that can work with humans, architectures for
intelligent systems, AI task planning for robotics, and qualitative spatial representations for robots.
He worked on both the CogX and CoSy projects. He coordinates the FP7 STRANDS project on robot-human
interaction.

Dr Chris Burbridge is a research fellow with expertise in mobile robotics, robot manipulation, and task
and motion planning. He has worked on the successful FP7 GeRT project. He will lead implementation.

Dr Marek Kopicki is a research fellow working on dexterous robot manipulation. He will provide
expertise in dextrous manipulation.

Mr Maxime Adjigble is a research engineer with expertise in force controlled exoskeletons and control
engineering for robotics.

Participant 4: Airbus (Airbus)

Brief description of the organisation:
Airbus is a leading aircraft manufacturer that consistently captures around half of all orders for
airliners with more than 100 seats. Our product line-up, which covers a full spectrum of four aircraft
families from a 100-seat single-aisle to the largest civil airliner ever, the double-deck A380, defines
the scope of our core business.

Our mission is to be a top-performing enterprise making the best aircraft through innovation,
integration, internationalisation and engagement.

Based in Toulouse, France, Airbus is a division of the Airbus Group. It directly employs some 55,000
people of over 100 nationalities. Airbus' design and production sites are grouped into four wholly-owned
subsidiaries: Airbus Operations SAS, Airbus Operations GmbH, Airbus Operations S.L. and Airbus
Operations Ltd. *

* www.airbus.com

Role in the project and main tasks:
Airbus is the End User partner in this team consortium and will provide expertise in assembly in
aerospace.

Relevant previous experience:
Various R&T experience in the scope of automation strategies for aerospace applications.

Profile of team members that will undertake the work:
Dr. Ingo Krohne works in the Manufacturing Engineering R&T Generic Technologies department at the
Airbus plant in Hamburg.

Dr. Robert Goehlich works in the Manufacturing Engineering Process Development & Transnational
Harmonisation department at the Airbus plant in Hamburg.

Description of the partnership

The challenge team brings together four partners with complementary expertise. On the one hand we

have Airbus the end user, with world leading expertise in their domain of aircraft manufacture, and in

particular the possibilities and pitfalls of automation in the manufacturing process. They have a clear

view of the economic benefits of automation and the adoption of robotics, and will be the main

exploiter of the technology we will develop. At the other end we have the University partners: LU and

UoB, who provide the research base from which this project will grow. UoB have expertise in pure

autonomous robotics: manipulation, navigation, machine learning, and machine vision. They also have

long experience in running and leading robotics projects, and in producing research robot systems

that bring together many different technology elements. In addition they have significant expertise in

long-life reliable robots (e.g. Strands project) with experience in building robot systems that work in

the wild for periods measured in weeks. We will exploit their expertise in dextrous manipulation and

machine learning in particular. LU also have research expertise in intelligent robotics, but more

embedded in the domain of manufacturing: and so bring not just the basic research skills, but also the

expertise in applying these novel control strategies, machine learning, vision etc within manufacturing

settings. In addition LU have considerable experience of automation in the aerospace domain already.

Finally MTC work in the space of TRL 4-8, bringing research ideas along the TRL scale through feasibility

study to pre-product prototypes. Their experience is thus very much in the translational stage from

research to business. MTC have several team members with a total of more than 40 years of

experience in industrial robotics and advanced automation. MTC already work with Airbus, and have

extensive experience in projects for automation in aerospace, being the leader of one of the robotics

demonstrations in the FP7 project LOCOMACHS, which is precisely concerned with automation of the

assembly of structures in aeroplane manufacture. If we consider it in terms of the objectives we have

set then the match between objectives and partners is as follows:

O1 Flexible Autonomous Grasping. [T1 Freestyle] Here the role of Birmingham will be critical, as

they bring expertise in dextrous grasping of novel objects and objects under pose uncertainty. This

approach will be transferred to the Shadow Robot Hand, working with MTC who will bring expertise

in its use. LU and MTC will bring experience in systems integration and machine vision. UoB's expertise

in point cloud analysis will complement the stereo-vision expertise at MTC and LU.

O2 Force based Human-Robot interaction & O3 Adaptive Force Based Interaction. [T2 T3] To meet

these two objectives we will draw upon the expertise of LU in control and interaction, and on the

experience of UoB in machine learning. MTC will bring expertise in systems integration and Airbus will

specify the requirements for the interaction and the task.

O4 Requirements, acceptance and dissemination. [T3] The expertise of Airbus in the requirements

and case for robotic assistance will be crucial. LU will use their end user requirements definition

expertise to analyse the solution requirements. In addition Airbus and MTC will lead the acceptance

testing process. LU & UoB will contribute to dissemination via the print and broadcast media, web and

academic papers. Airbus and MTC will lead the dissemination process within Airbus, and direct the

exploitation efforts.

The three research partners are geographically close, LU and UoB being founding research partners in

the MTC. This means that they are already working together on a number of projects in intelligent

automation and robotics, so that the teams are well integrated. In the first stage of the EuRoC

competition MTC, LU and UoB worked together to produce our entry for the simulation competition.

This was successful, ranking us 4th out of the 17 teams that submitted an entry. This shows not only

that the partners have worked together, but that we know how to work together successfully within

the context of the joint development required for success in EuRoC.

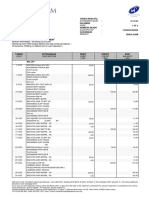

Overall Resources Costs and funding

The team partners will mobilise and commit the required resources to guarantee the success of the
project. The total budget is €807k, and the requested funding €585k. The main costs are for the
personnel at the partner sites. We have allocated one researcher to work full-time on the project at
each research site, giving 26 PM per site for MTC, LU and UoB. This gives us a steady three people to
work on the project. In addition, a small amount of time from the senior researchers leading the
project has been set aside: 3 PMs per research partner. Airbus will devote a total of 6 PMs to the
project. This brings us to a total of €399,618 for personnel. The other costs are as follows. We will
spend €27,584 on direct costs to support the project, notably the costs of integrating the Shadow
Hand with the challenge platform, producing the test rig for the showcase demonstration, computers
for software development, a small quantity of consumables for technical meetings, adaptation costs
to allow the fitting of the Shadow Hand to the platform, further small sensors, and shipping and
insurance costs for equipment to and from the partner site. Airbus will have, as part of this figure,
€1000 for example parts and tools to be used by the robots. In addition to the requested contribution
from the EC, we will bring a Shadow Hand, a Kuka omnibot, and a Kuka LWR to the project as an
additional project contribution at no cost. We have costed travel at rates of €100/day for
accommodation, €200 per flight, and €50/day for subsistence costs. Travel thus totals €58,700 for the
entire duration of the project, including all challenge team meetings and exchanges.
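The staff-effort and travel figures in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the constant and function names are hypothetical, the example trip (three days, two flights) is invented, and it assumes one night of accommodation per day of travel.

```python
# Person-months quoted in the paragraph above.
RESEARCH_PARTNERS = ("MTC", "LU", "UoB")
FULL_TIME_RESEARCHER_PM = 26  # one full-time researcher per research site
SENIOR_RESEARCHER_PM = 3      # senior researcher time per research partner
AIRBUS_PM = 6                 # total effort from the end user

# Travel rates quoted in the paragraph above (same currency as the budget).
ACCOMMODATION_PER_DAY = 100
SUBSISTENCE_PER_DAY = 50
COST_PER_FLIGHT = 200


def total_person_months():
    """Sum the effort of the three research partners and Airbus."""
    per_research_partner = FULL_TIME_RESEARCHER_PM + SENIOR_RESEARCHER_PM
    return len(RESEARCH_PARTNERS) * per_research_partner + AIRBUS_PM


def trip_cost(days, flights):
    """Cost of one trip at the quoted daily and per-flight rates."""
    daily_rate = ACCOMMODATION_PER_DAY + SUBSISTENCE_PER_DAY
    return days * daily_rate + flights * COST_PER_FLIGHT


print(total_person_months())          # 93
print(trip_cost(days=3, flights=2))   # 850
```

The total of 93 person-months agrees with the total given in Table 1f.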

Costs, Partner MTC

Cost category                Stage II   Stage III   Stage II + Stage III
Personnel costs (€)             43056       32517                  75573
Travel (€)                      20000        4700                  24700
Other direct costs (€) (1)      20584         539                  20584
Audit certificates (€) (2)          0           0                      0
Indirect costs (€) (3)          58126       43898                 102024
Total budget (€) (4)           141766       81654                 223424
Requested funding (€) (5)      106324       61240                 167564

Costs, Partner LU

Cost category                Stage II   Stage III   Stage II + Stage III
Personnel costs (€)             85253       49983                 135236
Travel (€)                      10000        4000                  14000
Other direct costs (€) (1)       1000         500                   1500
Audit certificates (€) (2)          0           0                      0
Indirect costs (€) (3)          57752       32690                  90442
Total budget (€) (4)           154005       87173                 241178
Requested funding (€) (5)      115504       65380                 180884

Costs, Partner UoB

Cost category                Stage II   Stage III   Stage II + Stage III
Personnel costs (€)             94277       49983                 144260
Travel (€)                      10000        4000                  14000
Other direct costs (€) (1)       4500           0                   4500
Audit certificates (€) (2)          0           0                      0
Indirect costs (€) (3)          56266       32390                  97656
Total budget (€) (4)           174043       86373                 260416
Requested funding (€) (5)      130532       64780                 195312

Costs, Partner Airbus

Cost category                Stage II   Stage III   Stage II + Stage III
Personnel costs (€)             24300       20250                  44550
Travel (€)                       4000        2000                   6000
Other direct costs (€) (1)          0        1000                   1000
Audit certificates (€) (2)          0           0                      0
Indirect costs (€) (3)          16980       13950                  30930
Total budget (€) (4)            45280       37200                  82480
Requested funding (€) (5)       22640       18600                  41240

Total costs

Cost category                Stage II   Stage III   Stage II + Stage III
Personnel costs (€)            246886      152733                 399619
Travel (€)                      44000       14700                  58700
Other direct costs (€) (1)      26084        2039                  27584
Audit certificates (€) (2)          0           0                      0
Indirect costs (€) (3)         198124      122928                 321052
Total budget (€) (4)           515094      292400                 807494
Requested funding (€) (5)      375000      210000                 585000

IMPACT

Expected results

Airbus is one of the two leading aircraft manufacturers in the world, regularly having more than 50%
of the market share of all aircraft with more than 100 seats. Production worldwide is of the order of
40 aircraft per day. Aerospace is one of the highest value manufacturing sectors, and a critical industry
for Europe. It is also an industry that is now beginning to automate more and more of its core
manufacturing processes. This will be critical to maintaining Airbus's, and thus Europe's, position at the
forefront of aircraft manufacture. Reducing lead times while ensuring quality is thus the driver for
Airbus, and it is critical for the future of the company that all routes to automation are explored and
exploited as soon as they become feasible.

The main motivation of this project is to reduce the incidence of non-ergonomic positions during
aircraft assembly, reducing work injuries and providing workers with a better quality of life during
their work period. The obstacles are the difficult access areas: inside the aircraft, many
work positions require movement flexibility that a state-of-the-art (SoA) robot does not possess. In order to
keep this flexibility, the automation systems have to execute operations while sharing the same physical
environment with humans. This is why there is a need for a mobile manipulation platform. Up to
now, most of the automation approaches within aircraft manufacturing have been limited to
applications outside the fuselage, due to the often very complex aircraft structures and/or the confined
space.

The major exploitation route for Airbus from this project will be for the company to be able to

demonstrate a first mobile manipulation platform able to contribute to aircraft manufacture. It has

become absolutely clear during the proposal formation that the primary need for Airbus is to reduce

ergonomic stress on its workers, while increasing production speed and maintaining or improving

production quality. Airframe manufacture is unusual in that while some elements can be automated

using traditional industrial robotics the nature of airframes means that this cannot be achieved

throughout the production line. In particular many elements still require manual dexterity, and

must be performed in cramped spaces that would be inaccessible to larger industrial robots. The

specific impacts aimed for are:

1. Improvement of ergonomic factors and injuries for cost reduction
2. Improvement of the product quality due to standard operations
3. Reduction of lead time
4. Reduction of risk and secure a continuous operation of production

Clearly it is difficult to quantify the immediate financial impact of these benefits, but a reduction in
lead time of just 1% due to extensive roboticisation of the currently fully manual parts of aircraft
manufacture would result in an additional aircraft being produced every 2.5 days, with a
commensurate increase in turnover and profit. To achieve this, it is important to adopt an approach
that will lead to multiple operations being partially automated. The exploitation strategy is
to show benefit in one operation, and then expand it to other operations. This is why the transversal
seam of the aircraft has been identified as the area of application, as it requires many different
operations. In particular we aim to have impact on collaborative riveting tasks, with panel fixing as a
secondary task. Thus the approach is planned for a variety of supporting tasks (i.e., scenarios) so as to
have a multi-purpose robot that can subsequently be easily adjusted to other tasks.
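The figure of one additional aircraft every 2.5 days can be reproduced from the production rate of about 40 aircraft per day quoted earlier in this section. The sketch below assumes, as a first-order approximation, that a 1% shorter lead time yields 1% more output; the function name is hypothetical.

```python
def days_per_extra_aircraft(aircraft_per_day, lead_time_reduction):
    """Days needed to gain one extra aircraft, assuming output rises by the
    same fraction by which lead time falls (first-order approximation)."""
    if aircraft_per_day <= 0 or not 0 < lead_time_reduction < 1:
        raise ValueError("expected a positive rate and a reduction between 0 and 1")
    extra_per_day = aircraft_per_day * lead_time_reduction
    return 1.0 / extra_per_day


interval = days_per_extra_aircraft(aircraft_per_day=40, lead_time_reduction=0.01)
print(f"{interval:.1f} days per additional aircraft")  # 2.5 days per additional aircraft
```

If output is instead taken to be inversely proportional to lead time, the interval comes out slightly shorter (about 2.48 days), so the quoted figure is on the cautious side.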

Economic Benefits: It is clear that Airbus's competitors, especially Boeing, are now engaged heavily in
attempts to automate more and more of the manufacturing process. While automation in aerospace
is still relatively low, increased productivity will give huge advantages to the manufacturer staying
ahead in this technology. Airbus SAS, as one of Europe's largest manufacturers, must make rapid
progress on this to ensure continued growth and market share. Since so much aircraft manufacture is
manual, the impact will be best achieved with a flexible solution that extracts the maximum from the
investment. This is precisely what a multi-level autonomy approach achieves. Thus, as it is adapted to
perform different tasks, a flexible mobile platform can be a central part of Airbus's manufacturing
operations. So even though not all of the impacts will occur in the short term, the effort must
be started, and the impact on Airbus's competitiveness and market share will be vast over time. This
in turn has significant impact on the manufacturing exports of the EU.

Social Benefits: While aircraft manufacturing is a high-value activity economically, it involves many

manual operations that are stressful on the human worker. The benefits for the workers in terms of

working environment and occupational health are very clear. Airbus SAS are keen to achieve these

improvements, and mobile manipulation robots are a promising way to achieve this impact.

Technological Benefits: Over time the level and complexity of operations performed by robots will

increase, with more of the task being performed by the robot, and less by the human. The key

technological benefit of this project is to start Airbus down this road, which will lead to a series of

robot systems performing multiple tasks. The other benefit will be in terms of improved quality of

aircraft manufacture over time.

Exploitation plan of results and management of knowledge and of intellectual

property

The exploitation plan is as follows. We will disseminate to several audiences in the project. First, within

Airbus, we will disseminate the results to senior managers responsible for manufacture, and also

through the acceptance testing process with workers on the shop floor. This dissemination action will

also result in feedback to adjust the approach. We will hold a workshop to disseminate and discuss

the use case and the wider potential for mobile manipulation in aircraft manufacture at Airbus in

Hamburg.

In addition we will take, subject to the removal of Airbus-specific confidential information, the

following dissemination actions to bring information about the project to a wider number of potential

users in other industries that can also benefit from joint human-robot manipulation.

1 Cross-industry demonstration at MTC. Using a duplicate platform at MTC we will host a

demonstration to industrialists outside of the immediate set of competitors for Airbus. This will

involve demonstrating a generic tool operation task. This will be carried out after the showcase.

2 Media coverage. UoB have an outstanding record of media engagement, and Prof Wyatt and Dr

Hawes make frequent media appearances in the local, national and international media for their work.

We will ensure that the benefits of human-robot co-workers are given maximum coverage with the

general public.

3 One-to-one Company Meetings. Through the MTC, which has more than 50 industrial members, we

will explore the exploitation possibilities in a series of one-to-one meetings with companies.

4 Airbus internal seminars. In addition to the demonstration and acceptance testing at Airbus we will

hold a small number of internal seminars with different manufacturing groups in Airbus to disseminate

the work beyond the Hamburg facility. This will be useful in exploring wider use of the approach.

5 Academic Dissemination. We will publish papers in leading international conferences and journals

on the work, while adhering to non-release of agreed confidential information.

IPR Management. Prior to commencement of the work, an IPR agreement will be made with clauses to

enable smooth management of the background and foreground IP. The background will reside with

the partners, but with clauses consistent with the EuRoC appendix on IPR to enable its use in this

domain managed by the partners. The consortium will seek in the first instance to enable jointly

generated foreground IP to be applied within Airbus, without prejudicing the ability of the other

partners to exploit it in domains aside from aerospace (according to the EuRoC IPR appendix), and to

continue to exploit their background freely and independently. The technology transfer wings of the

research partners shall work with Airbus to achieve this. In addition MTC has LU and UoB as founding

research partners, and so is established as the natural organisation to transfer IP from low

TRLs into prototypes suitable for further funding. In Stage III of the project Airbus and the partners

will develop the joint exploitation plan covering the future development of the technology for use by

Airbus.

Ethical issues

The proposal is not believed to raise any particular ethical issues. The process for the human-robot

collaboration will undergo a careful safety evaluation prior to acceptance testing. During acceptance

testing any possible safety issues will be identified and acted on.

APPENDIX: Articles II.26, II.27, II.28 and II.29 Annex I of the EuRoC Grant

Agreement

II.26. Ownership

1. Foreground shall be the property of the beneficiary carrying out the work generating that

foreground.

2. Where several beneficiaries have jointly carried out work generating foreground and where

their respective share of the work cannot be ascertained, they shall have joint ownership of

such foreground. They shall establish an agreement¹ regarding the allocation and terms of

exercising that joint ownership.

However, where no joint ownership agreement has yet been concluded, each of the joint

owners shall be entitled to grant non-exclusive licences to third parties, without any right to

sub-licence, subject to the following conditions:

a. at least 45 days prior notice must be given to the other joint owner(s); and

b. fair and reasonable compensation must be provided to the other joint owner(s).

3. If employees or other personnel working for a beneficiary are entitled to claim rights to

foreground, the beneficiary shall ensure that it is possible to exercise those rights in a manner

compatible with its obligations under this grant agreement.

II.27. Transfer

1. Where a beneficiary transfers ownership of foreground, it shall pass on its obligations

regarding that foreground to the assignee including the obligation to pass those obligations

on to any subsequent assignee.

2. Subject to its obligations concerning confidentiality such as in the framework of a merger or

an acquisition of an important part of its assets, where a beneficiary is required to pass on its

obligations to provide access rights, it shall give at least 45 days prior notice to the other

beneficiaries of the envisaged transfer, together with sufficient information concerning the

envisaged new owner of the foreground to permit the other beneficiaries to exercise their

access rights.

However, the beneficiaries may, by written agreement, agree on a different time-limit or

waive their right to prior notice in the case of transfers of ownership from one beneficiary to

a specifically identified third party.

3. Following notification in accordance with paragraph 2, any other beneficiary may object

within 30 days of the notification or within a different time-limit agreed in writing, to any

envisaged transfer of ownership on the grounds that it would adversely affect its access rights.

Where any of the other beneficiaries demonstrate that their access rights would be adversely

affected, the intended transfer shall not take place until agreement has been reached

between the beneficiaries concerned.