International Conference on Material and Environmental Engineering (ICMAEE 2014)

Neural Network Method Applying in Acid Fracturing Choosing Well of Zanarol Oil Field

Liu Zhenyu
Northeast Petroleum University, Da Qing, China
Email: lzydqsyxy@163.com

Wang Wei
Northeast Petroleum University, Da Qing, China
Email: wangwei050802@163.com

He Jinbao
Drilling and Production Technology Research Institute, Instrumentation Research Institute, Liao He Oilfield, China Petroleum, Pan Jin, China
Email: hejinbao2004@163.com

Wang Huzhen
Northeast Petroleum University, Da Qing, China
Email: whz1981@163.com

© 2014. The authors - Published by Atlantis Press

Abstract- In this article, the neural network method is applied to select candidate wells for repeated acid fracturing. To obtain accurate predictions, the error is propagated backward and the weights of each layer are adjusted. The method has been applied in the Zanarol oil field with good results.

Keywords- neural network; acid fracturing; well selection; optimise; carbonate

I. INTRODUCTION

The Zanarol oil field lies 240 km due south of Aqtobe in the Republic of Kazakhstan. Structurally, it sits on a sub-salt Paleozoic uplift on the eastern edge of the Caspian Sea coastal basin, and its oil and gas reservoirs are a set of Carboniferous carbonate rocks. Since 2000, acid fracturing has been widely applied in developing the field and has played a main role in stimulating carbonate oil and gas reservoirs. However, an acid fracturing treatment generally remains effective only for a limited period, and that period is usually shorter than for sand fracturing. For wells whose treatment has lost its effect, a second treatment is usually applied to restore single-well production capacity. The selection of wells and layers for acid fracturing therefore plays a crucial role in improving the effect of the treatment.

In recent years, international technical service companies have carried out a great deal of field experiments and theoretical studies on the key technology of selecting wells and layers in carbonate reservoirs, and especially on the selection methods themselves. Advanced Resources International, Schlumberger, Intelligent Solutions, Ely and Associates, Stem-Lab and Pinnacle Technology, among others, have all carried out a large amount of research on these methods. This study closely combines the actual conditions of a complex overseas carbonate oil field with the domestic and international literature. It uses the artificial neural network method to predict well productivity from the characteristics of the reservoir, so as to optimize the selection of candidate wells for repeated acid fracturing in carbonate reservoirs.

The relationship between the fracturing effect and its parameters is mostly complex and nonlinear, and it is difficult to express with traditional mathematical formulas. In addition, each factor influences the fracturing effect to a different degree, and some factors influence one another. The artificial neural network method can be used to solve this kind of highly complicated nonlinear problem. It expresses knowledge of the complex nonlinear relationship between the parameters through the parameters of the neurons in the network (the complicated network structure) rather than through the simple expressions of traditional mathematics. Overlap between parameters, and the distinction between primary and secondary parameters, are handled by adjusting each node of the network and the connection coefficients between nodes (the sizes of the weights).

II. THE BASIC PRINCIPLE OF ARTIFICIAL NEURAL NETWORK

A neural network uses computer hardware or software to simulate the brain's network of neurons and nerve fibers in order to form knowledge of complex phenomena. This knowledge is not expressed in a simple formula but in the parameters of each neuron and the weight coefficients of the connecting fibers. Its use is divided into two stages: learning and application. In the learning stage, users only need to present a large amount of test data together with the corresponding conclusions (the result data) to the network. The network then completes the learning process automatically, adjusting its parameters so that it gives the correct response to the given data. Once the network has been built, it enters the application stage, in which it processes new data and returns a response. It can also continue to learn during application. The processing precision of the network depends on the experience gained from previous learning. Artificial neural networks can deal with extremely complex relationships because they express knowledge through a complex network structure composed of a large number of neurons. They are especially suited to phenomena that have no obvious regularity. Figure 1 compares the artificial neural network method with the traditional method.

[Figure 1 panels. a. traditional method: original data, arithmetic, result. b. artificial neural network method: sample, artificial neural network, neural network with adjusted weights; new data, network, computed result.]

Figure 1. Comparison of traditional method and artificial neural network method

The main characteristics of the artificial neural network are:

a) A neural network stores information in a distributed way. Information is not stored in a single place but is spread over different places. A portion of the network does not store only one message; information is stored piece by piece across the network. Even if part of the network is damaged, the original information can still be restored.

b) Information processing and reasoning are carried out at the same time.

c) A neural network processes information through self-organization and self-learning, rather than requiring the regularities of the data to be studied and summarized in advance.

Neurons are the basic component units of an artificial neural network. Together, the neurons constitute a complicated network (the neural network) in three-dimensional space. According to the connection pattern of the neurons, neural networks can be divided into two basic types: layered networks and mesh networks.

III. MATHEMATICAL MODEL OF ARTIFICIAL NEURAL NETWORK

A BP neural network consists of an input layer, an intermediate (hidden) layer and an output layer. Neurons in adjacent layers are connected, while there are no connections between neurons within the same layer. After a pair of learning samples is provided to the network, the activation values of the neurons propagate from the input layer through each layer to the output layer, and each neuron in the output layer produces the network's response to the input. Then, in the direction that reduces the error between the target output and the actual output, each connection weight is corrected layer by layer, from the output layer back through the intermediate layer and finally to the input layer. As the error is corrected repeatedly, the accuracy of the network's response to the input pattern increases. A basic neuron model has R inputs, each connected to the neuron through an appropriate weight.

The BP neural network is composed of an input layer, a hidden layer and an output layer, which have n, m and l neurons respectively, as shown in Figure 2.

[Figure 2 diagram: input layer (x1, x2, ..., xn), hidden layer and output layer, with the weights labeled on the connections.]

Figure 2. Neural network model

Suppose there are P training samples. The input of the p-th sample (p = 1, 2, ..., P) is $x(p) = (x_1(p), x_2(p), \ldots, x_n(p))$, its actual output is $t(p) = (t_1(p), t_2(p), \ldots, t_l(p))$, and the network output is $y(p) = (y_1(p), y_2(p), \ldots, y_l(p))$. The training steps are as follows:

A. Forward (Positive-going) Calculation

Calculate the input $I_{ip}$ of each neuron in the hidden layer:

$$I_{ip} = \sum_{j=1}^{n} w_{ij} x_{jp} + \theta_i$$

The output $O_{ip}$ of the neurons in the hidden layer:

$$O_{ip} = f(I_{ip}) = \frac{1}{a + b e^{-c I_{ip}}}$$

The output $y_{kp}$ of the neurons in the output layer:

$$y_{kp} = \sum_{i=1}^{m} v_{ki} O_{ip}$$

Where:
$w_{ij}$: the connection weight between hidden layer neuron i (i = 1, 2, ..., m) and input layer node j;
$x_{jp}$: the j-th input value of the p-th sample;
$\theta_i$: the bias of hidden neuron i;
$f$: the excitation function of the hidden neurons;
a, b, c: adjustable parameters, generally 1;
$v_{ki}$: the connection weight between output layer neuron k (k = 1, 2, ..., l) and hidden layer neuron i.
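The forward calculation above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names, array shapes and toy values are hypothetical, and the bias $\theta_i$ is assumed to enter the hidden input with a plus sign, since the operator is not legible in the source.

```python
import numpy as np

def activation(I, a=1.0, b=1.0, c=1.0):
    """Excitation function f(I) = 1 / (a + b * exp(-c * I))."""
    return 1.0 / (a + b * np.exp(-c * I))

def forward(x, w, theta, v, a=1.0, b=1.0, c=1.0):
    """Forward calculation for one sample.

    x: (n,) inputs; w: (m, n) input-to-hidden weights;
    theta: (m,) hidden biases (plus sign assumed);
    v: (l, m) hidden-to-output weights.
    Returns hidden inputs I, hidden outputs O and network outputs y.
    """
    I = w @ x + theta          # I_ip = sum_j w_ij * x_jp + theta_i
    O = activation(I, a, b, c) # O_ip = f(I_ip)
    y = v @ O                  # y_kp = sum_i v_ki * O_ip
    return I, O, y

# Hypothetical toy sizes: n = 3 inputs, m = 2 hidden neurons, l = 1 output.
x = np.array([0.5, -1.0, 2.0])
w = np.zeros((2, 3))
theta = np.zeros(2)
v = np.array([[1.0, 1.0]])
I, O, y = forward(x, w, theta, v)
print(O, y)  # with zero weights: f(0) = 1 / (1 + 1) = 0.5, so y = 1.0
```

With a = b = c = 1 the excitation function reduces to the usual logistic sigmoid, which is why zero hidden inputs give hidden outputs of 0.5.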

B. Back Propagation Algorithm

The network output error $d_p$ and the sample error function $e_p$ are defined respectively as:

$$d_p = t_{kp} - y_{kp}$$

$$e_p = \frac{1}{2} \sum_{k=1}^{l} (t_{kp} - y_{kp})^2$$

Where:
$t_{kp}$: the k-th actual output value of the p-th sample;
$y_{kp}$: the k-th network output value of the p-th sample.

Let $W = [w, \theta, v]$, and let the correction of W be $\Delta W = [\Delta w_{ij}, \Delta\theta_i, \Delta v_i]$. In the back-propagation algorithm, W is revised by $\Delta W$ along the direction of the negative gradient of the error function $e_p$:

$$\Delta W(p) = -\eta \frac{\partial e_p}{\partial W} + \alpha \, \Delta W(p-1)$$

Where:
$\eta$: learning rate, in the range [0, 1], generally 0.02~0.3;
$\alpha$: momentum factor, in the range [0, 1], generally 0.30~0.95.

For a single output neuron (l = 1, so that $v_{ki}$, $t_{kp}$ and $y_{kp}$ are written $v_i$, $t_p$ and $y_p$), the gradient terms of the correction, to which the momentum term is added, are:

$$-\eta \frac{\partial e_p}{\partial v_i} = \eta d_p \frac{\partial y_p}{\partial v_i} = \eta d_p O_{ip}$$

$$-\eta \frac{\partial e_p}{\partial \theta_i} = \eta d_p \frac{\partial y_p}{\partial \theta_i} = \eta c \, d_p v_i O_{ip} (1 - a O_{ip})$$

$$-\eta \frac{\partial e_p}{\partial w_{ij}} = \eta d_p \frac{\partial y_p}{\partial w_{ij}} = \eta c \, d_p v_i O_{ip} (1 - a O_{ip}) x_{jp}$$

$W + \Delta W \to W$ revises the original weight vector W for the forward calculation of learning sample p+1.

The energy function E after training on all P learning samples is defined as:

$$E = \sum_{p=1}^{P} e_p$$

which for a single output neuron is $E = \frac{1}{2} \sum_{p=1}^{P} (t_p - y_p)^2$. If the value of E meets the required precision $\varepsilon$ (the training error), the iteration stops; otherwise another round of iterative calculation begins.

IV. BP ALGORITHM STEPS

The steps of the BP algorithm, shown in Figure 3, are as follows:

Step 1: Initialize the network. Each connection weight is assigned a random number in the interval (-1, 1), the error function e is set, and the calculation precision $\varepsilon$ and the maximum number of learning iterations M are determined.

Step 2: Randomly select the k-th input sample and the corresponding desired output.

Step 3: Calculate the input $I_{ip}$ and output $O_{ip}$ of each hidden layer neuron.

Step 4: Use the desired output and the actual output of the network to calculate the partial derivatives of the error function with respect to the output layer neurons.

Step 5: Use the connection weights from the hidden layer to the output layer, the partial derivatives for the output layer neurons and the outputs of the hidden layer to calculate the partial derivatives of the error function with respect to the hidden layer neurons.

Step 6: Use the partial derivatives for the output layer neurons and the outputs of the hidden layer neurons to revise the connection weights between the hidden layer and the output layer.

Step 7: Use the partial derivatives for the hidden layer neurons and the inputs of the input layer neurons to revise the connection weights between the input layer and the hidden layer.

Step 8: Calculate the global error E.

Step 9: Judge whether the network error meets the precision requirement. When the error reaches the preset precision or the number of learning iterations exceeds the set maximum M, the algorithm terminates. Otherwise, the next learning sample and its desired output are selected, and the algorithm returns to Step 3 for the next round of learning.
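The correction of W for one learning sample can be checked numerically. The sketch below assumes a single output neuron and a plus sign on the hidden bias (the sign is not legible in the source); the function names and toy values are hypothetical. It relies on the identity $f'(I) = c \, O (1 - a O)$, which follows from $b e^{-cI} = 1/O - a$, and compares one analytic gradient term with a central finite difference of $e_p$.

```python
import numpy as np

def f(I, a=1.0, b=1.0, c=1.0):
    """Excitation function 1 / (a + b * exp(-c * I))."""
    return 1.0 / (a + b * np.exp(-c * I))

def sample_error(x, t, w, theta, v):
    """e_p = 1/2 * (t_p - y_p)^2 for a single output neuron."""
    O = f(w @ x + theta)
    return 0.5 * (t - v @ O) ** 2

def gradient_terms(x, t, w, theta, v, eta, a=1.0, c=1.0):
    """Return -eta * d e_p / dW for W = [w, theta, v], without momentum."""
    O = f(w @ x + theta, a=a, c=c)
    d = t - v @ O                               # d_p = t_p - y_p
    dv = eta * d * O                            # eta * d_p * O_ip
    dtheta = eta * c * d * v * O * (1 - a * O)  # eta * c * d_p * v_i * O_ip * (1 - a * O_ip)
    dw = np.outer(dtheta, x)                    # same term multiplied by x_jp
    return dw, dtheta, dv

def apply_update(W, grad, prev, alpha):
    """Delta W(p) = gradient term + alpha * Delta W(p-1); then W + Delta W -> W."""
    delta = [g + alpha * p for g, p in zip(grad, prev)]
    return [Wi + di for Wi, di in zip(W, delta)], delta

# Hypothetical toy sample: n = 3 inputs, m = 2 hidden neurons.
rng = np.random.default_rng(0)
x, t = rng.uniform(-1, 1, 3), 0.7
w = rng.uniform(-1, 1, (2, 3))
theta = rng.uniform(-1, 1, 2)
v = rng.uniform(-1, 1, 2)
eta, alpha = 0.1, 0.5

dw, dtheta, dv = gradient_terms(x, t, w, theta, v, eta)

# Central finite difference of e_p with respect to w[0, 1].
h = 1e-6
w_plus, w_minus = w.copy(), w.copy()
w_plus[0, 1] += h
w_minus[0, 1] -= h
numeric = -eta * (sample_error(x, t, w_plus, theta, v)
                  - sample_error(x, t, w_minus, theta, v)) / (2 * h)
print(np.isclose(dw[0, 1], numeric, atol=1e-8))

# First sample: the previous correction is zero, so only the gradient term acts.
prev = [np.zeros_like(w), np.zeros_like(theta), np.zeros_like(v)]
(w, theta, v), prev = apply_update([w, theta, v], [dw, dtheta, dv], prev, alpha)
```

On later samples `prev` carries the previous correction, so the momentum factor smooths successive updates.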

[Figure 3 flowchart boxes: learning sample; weight initialization; forward propagation to calculate output values of the hidden and output layer neurons; error E between calculated desirable value and actual output value; decision "E < ε (error limit)"; back-propagate error; adjust the output layer weight; adjust the hidden layer weight; decision "all E < ε"; keep learned weights as knowledge data; expert diagnosis and results output.]

Figure 3. Learning Diagram of BP Algorithm
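The learning flow of Figure 3 can be sketched as a short training loop. This is a minimal illustration, not the authors' implementation: it assumes a single output neuron, a = b = c = 1, a plus sign on the hidden bias, one pass over all samples in random order per learning iteration, and synthetic toy data. The names `train_bp` and `global_error` and all numeric settings are hypothetical.

```python
import numpy as np

def f(I):
    """Excitation function with a = b = c = 1."""
    return 1.0 / (1.0 + np.exp(-I))

def global_error(X, T, w, theta, v):
    """E = sum over samples of 1/2 * (t_p - y_p)^2 (single output)."""
    Y = f(X @ w.T + theta) @ v
    return 0.5 * np.sum((T - Y) ** 2)

def train_bp(X, T, m=4, eta=0.05, alpha=0.3, eps=1e-3, M=2000, seed=0):
    rng = np.random.default_rng(seed)
    n = X.shape[1]
    # Step 1: weights drawn from (-1, 1); precision eps and maximum M are set.
    w = rng.uniform(-1, 1, (m, n))
    theta = rng.uniform(-1, 1, m)
    v = rng.uniform(-1, 1, m)
    prev_w, prev_theta, prev_v = np.zeros_like(w), np.zeros_like(theta), np.zeros_like(v)
    history = [global_error(X, T, w, theta, v)]
    for _ in range(M):
        for p in rng.permutation(len(X)):        # Step 2: samples in random order
            x, t = X[p], T[p]
            O = f(w @ x + theta)                 # Step 3: hidden layer outputs
            d = t - v @ O                        # Step 4: output error d_p
            g_hidden = d * v * O * (1.0 - O)     # Step 5: hidden layer derivative
            dv = eta * d * O + alpha * prev_v    # Step 6: hidden-to-output weights
            dtheta = eta * g_hidden + alpha * prev_theta
            dw = eta * np.outer(g_hidden, x) + alpha * prev_w   # Step 7
            w, theta, v = w + dw, theta + dtheta, v + dv
            prev_w, prev_theta, prev_v = dw, dtheta, dv
        history.append(global_error(X, T, w, theta, v))         # Step 8
        if history[-1] < eps:                    # Step 9: precision reached
            break
    return (w, theta, v), history

# Synthetic toy data (not field data): 8 samples with 3 inputs each.
rng = np.random.default_rng(1)
X = rng.uniform(0, 1, (8, 3))
T = 2.0 + X[:, 0] - 0.5 * X[:, 1]
(w, theta, v), history = train_bp(X, T)
print(history[0], history[-1])  # global error before and after training
```

The loop stops either when E falls below the precision value or after M learning iterations, which mirrors the two exit conditions of Step 9.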

V. ACTUAL APPLICATION

Yield prediction was conducted for 6 acid fracturing wells of the Zanarol Oilfield (Table 1). Permeability, porosity, oil saturation, effective thickness, acid dosage and current yield were selected as the input sample, and the predicted yield after fracturing was taken as the output sample. The results show that the predictions of the artificial neural network are basically consistent with the actual production data, achieving a good prediction effect.

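TABLE I. YIELD PREDICTION AFTER ACID REFRACTURING TREATMENT OF ZANAROL OILFIELD

| Well No. | Permeability (10^-15 m^2) | Porosity (%) | Oil Saturation (%) | Effective Thickness (m) | Acid Dosage (m^3) | Current Yield (t/d) | Yield Prediction (t/d) |
|---|---|---|---|---|---|---|---|
| 2092 | 2 | 12 | 76 | 65 | 234 | 3 | 54.42 |
| 2449 | 6 | 12.03 | 72.72 | 44 | 266.5 | 13 | 73.78 |
| 3324 | 29.41 | 8.79 | 78.98 | 16.6 | 210 | 8 | 73.03 |
| 3477 | 8 | 11.02 | 78 | 8 | 116 | 6 | 9.86 |
| 3561 | 14.33 | 10.78 | 87.39 | 37.6 | 221.3 | 17 | 22.75 |
| 3563 | 8.99 | 10.07 | 79.13 | 21.2 | 228 | 14 | 21.78 |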
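As an illustration of how the Table I data might be prepared and used, the sketch below arranges the six wells into an input matrix, rescales each input column to [0, 1], and ranks the wells by predicted yield gain. Both the min-max scaling and the ranking criterion are assumptions added for illustration; the paper does not state how inputs were normalized or how candidates were ordered. The numbers are those listed in Table I, as read from the flattened source.

```python
import numpy as np

# Input columns: permeability, porosity, oil saturation,
# effective thickness, acid dosage, current yield (values from Table I).
wells = [2092, 2449, 3324, 3477, 3561, 3563]
X = np.array([
    [2.00, 12.00, 76.00, 65.0, 234.0, 3.0],
    [6.00, 12.03, 72.72, 44.0, 266.5, 13.0],
    [29.41, 8.79, 78.98, 16.6, 210.0, 8.0],
    [8.00, 11.02, 78.00, 8.0, 116.0, 6.0],
    [14.33, 10.78, 87.39, 37.6, 221.3, 17.0],
    [8.99, 10.07, 79.13, 21.2, 228.0, 14.0],
])
predicted_yield = np.array([54.42, 73.78, 73.03, 9.86, 22.75, 21.78])

def min_max_scale(A):
    """Rescale each column to [0, 1] (assumed preprocessing, not from the paper)."""
    lo, hi = A.min(axis=0), A.max(axis=0)
    return (A - lo) / (hi - lo)

X_scaled = min_max_scale(X)

# Hypothetical selection rule: rank wells by predicted gain over current yield.
gain = predicted_yield - X[:, 5]
ranking = [wells[i] for i in np.argsort(-gain)]
print(ranking)  # [3324, 2449, 2092, 3563, 3561, 3477]
```

Scaling the inputs to a common range keeps columns with large magnitudes, such as acid dosage, from dominating the weighted sums in the hidden layer.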

REFERENCES

[1] Roshanai Heydarabadi, J. Moghadasi, Criteria for Selecting a Candidate Well for Hydraulic Fracturing[C], SPE 136988 presented at the Nigeria Annual International Conference and Exhibition, held in Tinapa - Calabar, Nigeria. 31 July - 7 August 2010.

[2] Benson Oghenovo Ugbenyen, Samuel O. Osisanya, Efficient Methodology for Stimulation Candidate Selection and Well Workover Optimization[C], SPE 150760 presented at the Nigeria Annual International Conference and Exhibition, held in Abuja, Nigeria. 30 July - 3 August 2011.

[3] Mansoor Zoveidavianpoor, Ariffin Samsuri, Hydraulic Fracturing Candidate-Well Selection by Interval Type-2 Fuzzy Set and System[C], SPE 16615 presented at the 6th International Petroleum Technology Conference, held in Beijing, China. 26-28 Mar 2013.

[4] Cao Dinghong, Ni Yuwei, Application and Realization of Fuzzy Method for Selecting Wells and Formations in Fracturing in Putaohua Oilfield: Production and Operations: Diagnostics and Evaluation[C], SPE 106335 presented at the SPE Technical Symposium of Saudi Arabia Section, held in Dhahran, Saudi Arabia. 21-23 May 2006.

[5] Mohaghegh, S, Platon, V, Candidate Selection for Stimulation of Gas Storage Wells Using Available Data With Neural Networks and Genetic Algorithms[C], SPE 51080 presented at the SPE Eastern Regional Meeting, Pittsburgh, Pennsylvania, U.S.A. 9-11 November 1998.

[6] Shahab Mohaghegh, Scott Reeves, Development of an Intelligent Systems Approach for Restimulation Candidate Selection[C], SPE 59767 presented at the SPE/CERI Gas Technology Symposium, held in Calgary, Alberta, Canada. 3-5 April 2000.

[7] S.R. Reeves, Advanced Resources Intl., P.A. Bastian, Benchmarking of Restimulation Candidate Selection Techniques in Layered, Tight Gas Sand Formations Using Reservoir Simulation[C], SPE 63096 presented at the SPE Annual Technical Conference and Exhibition, held in Dallas, Texas, U.S.A. 1-4 October 2000.

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Symmetrir and Order. Reasons To Live According The LodgeDocument6 pagesSymmetrir and Order. Reasons To Live According The LodgeAnonymous zfNrN9NdNo ratings yet

- Film Interpretation and Reference RadiographsDocument7 pagesFilm Interpretation and Reference RadiographsEnrique Tavira67% (3)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Amazon VS WalmartDocument5 pagesAmazon VS WalmartBrandy M. Twilley100% (1)

- SPE 30775 Water Control Diagnostic PlotsDocument9 pagesSPE 30775 Water Control Diagnostic PlotsmabmalexNo ratings yet

- Major Chnage at Tata TeaDocument36 pagesMajor Chnage at Tata Teasheetaltandon100% (1)

- Ms Admission SopDocument2 pagesMs Admission SopAnonymous H8AZMsNo ratings yet

- Industrial RevolutionDocument2 pagesIndustrial RevolutionDiana MariaNo ratings yet

- BIR REliefDocument32 pagesBIR REliefJayRellvic Guy-ab67% (6)

- Translated Copy of Volve PUDDocument84 pagesTranslated Copy of Volve PUDAnkit Bansal100% (4)

- PetroBowl ScheduleDocument1 pagePetroBowl ScheduleAnkit BansalNo ratings yet

- Spirograph: Hole Space Scaling Ring WheelDocument1 pageSpirograph: Hole Space Scaling Ring WheelAnkit BansalNo ratings yet

- Bahamas Datsaset StudyDocument20 pagesBahamas Datsaset StudyAnkit BansalNo ratings yet

- PorosityDocument19,018 pagesPorosityAnkit BansalNo ratings yet

- Project 1Document1 pageProject 1Ankit BansalNo ratings yet

- Ellipse Drawing SheetDocument1 pageEllipse Drawing SheetAnkit BansalNo ratings yet

- Water DiagnosticDocument10 pagesWater DiagnosticAnkit BansalNo ratings yet

- Analysis of Water Injection Wells Using The Hall Technique and Pressure Fall-Off DataDocument22 pagesAnalysis of Water Injection Wells Using The Hall Technique and Pressure Fall-Off DataAndaraSophanNo ratings yet

- BookshelfDocument1 pageBookshelfAnkit BansalNo ratings yet

- EOR Screening Criteria RevisitedPart 1Document10 pagesEOR Screening Criteria RevisitedPart 1kenyeryNo ratings yet

- Comparisons of Oil Production Predicting Models: Yishen Chen, Xianfeng Ding, Haohan Liu, Yongqin YanDocument5 pagesComparisons of Oil Production Predicting Models: Yishen Chen, Xianfeng Ding, Haohan Liu, Yongqin YanDauda BabaNo ratings yet

- Production Forecasting of Petroleum Reservoir Applying Higher-Order Neural Networks (HONN) With Limited Reservoir DataDocument13 pagesProduction Forecasting of Petroleum Reservoir Applying Higher-Order Neural Networks (HONN) With Limited Reservoir DataAnre Thanh HungNo ratings yet

- Forecast Time Series Using Artificial IntelligenceDocument8 pagesForecast Time Series Using Artificial IntelligenceAnkit BansalNo ratings yet

- Ramgulam ThesisDocument137 pagesRamgulam ThesisAnkit BansalNo ratings yet

- Technique To Draw CartoonsDocument24 pagesTechnique To Draw CartoonsAnkit BansalNo ratings yet

- UPES B.tech Petroleum CurricullumDocument5 pagesUPES B.tech Petroleum CurricullumAnkit BansalNo ratings yet

- Technique To Draw CartoonsDocument24 pagesTechnique To Draw CartoonsAnkit BansalNo ratings yet

- FirstpageDocument1 pageFirstpageAnkit BansalNo ratings yet

- PVT Data CitedDocument1 pagePVT Data CitedAnkit BansalNo ratings yet

- EOR Table C CorrectionDocument6 pagesEOR Table C CorrectionAnkit BansalNo ratings yet

- EOR Table C CorrectionDocument6 pagesEOR Table C CorrectionAnkit BansalNo ratings yet

- EOR Table C CorrectionDocument6 pagesEOR Table C CorrectionAnkit BansalNo ratings yet

- RF Based Dual Mode RobotDocument17 pagesRF Based Dual Mode Robotshuhaibasharaf100% (2)

- His 101 Final ReportDocument15 pagesHis 101 Final ReportShohanur RahmanNo ratings yet

- R07 SET-1: Code No: 07A6EC04Document4 pagesR07 SET-1: Code No: 07A6EC04Jithesh VNo ratings yet

- Notes 3 Mineral Dressing Notes by Prof. SBS Tekam PDFDocument3 pagesNotes 3 Mineral Dressing Notes by Prof. SBS Tekam PDFNikhil SinghNo ratings yet

- Embraer ERJ-170: Power PlantDocument5 pagesEmbraer ERJ-170: Power Plantபென்ஸிஹர்No ratings yet

- Permeability PropertiesDocument12 pagesPermeability Propertieskiwi27_87No ratings yet

- Business Mathematics (Matrix)Document3 pagesBusiness Mathematics (Matrix)MD HABIBNo ratings yet

- Prevention of Power Theft Using Concept of Multifunction Meter and PLCDocument6 pagesPrevention of Power Theft Using Concept of Multifunction Meter and PLCMuhammad FarhanNo ratings yet

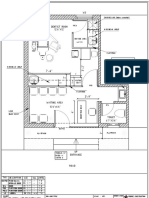

- Dental Clinic - Floor Plan R3-2Document1 pageDental Clinic - Floor Plan R3-2kanagarajodisha100% (1)

- Chapter 17 Study Guide: VideoDocument7 pagesChapter 17 Study Guide: VideoMruffy DaysNo ratings yet

- System Administration ch01Document15 pagesSystem Administration ch01api-247871582No ratings yet

- Carpentry NC Ii: Daniel David L. TalaveraDocument5 pagesCarpentry NC Ii: Daniel David L. TalaveraKhael Angelo Zheus JaclaNo ratings yet

- Statistics and Probability Course Syllabus (2023) - SignedDocument3 pagesStatistics and Probability Course Syllabus (2023) - SignedDarence Fujihoshi De AngelNo ratings yet

- Solutions - HW 3, 4Document5 pagesSolutions - HW 3, 4batuhany90No ratings yet

- Def - Pemf Chronic Low Back PainDocument17 pagesDef - Pemf Chronic Low Back PainFisaudeNo ratings yet

- Classroom Activty Rubrics Classroom Activty Rubrics: Total TotalDocument1 pageClassroom Activty Rubrics Classroom Activty Rubrics: Total TotalMay Almerez- WongNo ratings yet

- Pep 2Document54 pagesPep 2vasubandi8No ratings yet

- Dosificación Gac007-008 Sem2Document2 pagesDosificación Gac007-008 Sem2Ohm EgaNo ratings yet

- Thesis Preliminary PagesDocument8 pagesThesis Preliminary Pagesukyo0801No ratings yet

- Decolonization DBQDocument3 pagesDecolonization DBQapi-493862773No ratings yet

- IJREAMV06I0969019Document5 pagesIJREAMV06I0969019UNITED CADDNo ratings yet

- Annual Report 2022 2Document48 pagesAnnual Report 2022 2Dejan ReljinNo ratings yet

- TextdocumentDocument254 pagesTextdocumentSaurabh SihagNo ratings yet

- Assignment OSDocument11 pagesAssignment OSJunaidArshadNo ratings yet