
7th WSEAS International Conference on APPLIED COMPUTER SCIENCE, Venice, Italy, November 21-23, 2007


Developing an error analysis marking tool for ESL learners

SAADIYAH DARUS

TG NOR RIZAN TG MOHD MAASUM

SITI HAMIN STAPA

NAZLIA OMAR

MOHD JUZAIDDIN AB AZIZ

Universiti Kebangsaan Malaysia

43600 UKM Bangi

MALAYSIA

adi@pkrisc.cc.ukm.my http://www.ukm.my

Abstract: This paper describes the first step of a research project to develop an error analysis marking tool for ESL

(English as a Second Language) learners at Malaysian institutions of higher learning. The ESL writing corpus

comprises 400 essays written by 112 undergraduates. Subject-matter experts use an error classification scheme

to identify the errors. Markin 3.1 software is used to speed up the process of classifying these errors. In this

way, the statistical analysis of errors is carried out accurately. The results of the study show that the common errors in

these essays, in decreasing order, are tenses, prepositions, articles, word choice, mechanics, and verb to be. The

findings from this phase of the study will feed into the next phase of the research, which is to develop techniques

and algorithms for an error analysis marking tool for ESL learners.

Key Words: Marking tool, error analysis, ESL writing.

1 Introduction

Research in computer-based essay marking has been

going on for more than 40 years. The first known

research in this area was undertaken by Ellis Batten

Page in the 1960s. Since then, a number of CBEM

(Computer-Based Essay Marking) systems have

been developed. These systems can be divided into

two categories: semi-automated and automated

systems [1]. Examples of semi-automated systems

are Methodical Assessment of Reports by Computer

[2], Markin32 [3] and Student Essay Viewer [4].

Most of the current interest in this research focuses on

the development of automated essay marking

systems. Examples of such systems are e-rater [5],

Intelligent Essay Assessor [6], Project Essay Grader

[7], Intellimetric Scholar [8], SEAR [9], and

Intelligent Essay Marking System [10].

Although many automated systems are

already available, they are not specifically

developed for ESL (or L2) learners. Research in

ESL has shown that ESL learners write differently

from NES (Native English Speakers). Kaplan, for

example, identified five types of paragraph

development for five different groups of students

[11]. Essays written in English by NES display a

linear development, while essays written in Semitic

languages by native speakers consist of a series

of parallel coordinate clauses. Chinese, Thai and

Korean writers tend to use an indirect approach, while

Russians exhibit some degree of digression that

would seem to be quite excessive to an English

writer.

An important construct that is central to L2

writing and proposed by several researchers is errors

in L2 writing. Two perspectives of errors in writing

arise from SLA (Second Language Acquisition) as

well as learning a TL (Target Language). Based on

these two perspectives, it is observed that written

errors made by adult L2 learners are often quite

different from those made by NES. Therefore, adult

ESL learners have a different profile of errors

compared to NES. Thus, a marking tool that is

specifically developed to analyze errors in ESL

writing for L2 learners is very much needed.

In order to develop the marking tool, this

research is divided into two phases. The first phase

of the research focuses on profiling errors made by the

L2 learners. The second phase is to develop

techniques and algorithms for detecting errors in the

learners' essays. After that, the error analysis

marking tool will be developed and preliminary

testing of the tool using training data sets will be

carried out. Writing samples will then be tested

using the tool and accuracy of grades will be

evaluated.

This paper mainly describes the result of the first

part of the research.

2 L2 Writing and Errors

From the perspective of SLA, Ferris has pointed out

several generalizations [12]. Firstly, it takes a

significant amount of time to acquire an L2, and

even more when the learner is attempting to use the

language for academic purposes. Secondly,

depending on learner characteristics, most notably

age of first exposure to the L2, some acquirers may

never attain native-like control of various aspects of

the L2. Thirdly, SLA occurs in stages.

Phonetics/phonology (pronunciation), syntax (the

construction of sentences), morphology (the internal

structure of words), lexicon (vocabulary) and

discourse (the communicative use that sentences are

put to) may all represent separately occurring stages

of acquisition. Fourthly, as learners go through

various stages of acquisition of different elements of

the L2, they will make errors reflective of their SLA

processes. These errors may be caused by

inappropriate transference of the L1 (First

Language) patterns and/or by incomplete knowledge

of the L2.

Ferris also notes that L2 student writers need:

(a) a focus on different linguistic issues or error

patterns than native speakers do; (b) feedback or

error correction that is tailored to their linguistic

knowledge and experience; and (c) instruction that is

sensitive to their unique linguistic deficits and needs

for strategy training [12]. Common ESL writing

errors that are adapted from Ferris are presented in

Table 1.

Table 1: Common ESL writing errors

It follows, then, that some of the practical

implications for teaching writing to L2 learners

from the perspective of SLA are as follows: it is

unrealistic to expect that L2 learners' production

will be error-free or, even when it is, that it will

sound like that of native English speakers; and

lecturers should not expect students' accuracy to

improve overnight.

Truscott [13] also notes that different types of

errors may need varying treatment in terms of error

correction. In addition, lecturers need to

understand that different students may make distinct

types of errors and they should understand the need

to prioritize error feedback for individual students.

These priorities could be identified by looking at global errors

versus local errors, frequent errors, and structures

elicited by the assignment or that have been

discussed in class.

From the point of view of learning a TL, James

[14] notes that the level of language at which a particular

learner of a TL is operating falls into one of the following

areas: substance, text and discourse. Substance is

related to medium, text relates to usage and

discourse relates to use. According to James, "If

the learner was operating the phonological or the

graphological substance systems, that is spelling or

pronouncing (or their receptive equivalents), we say

he or she has produced an encoding or decoding

error. If he or she was operating the lexico-grammatical systems of the target language to

produce or process text, we refer to any errors on

this level as composing or understanding errors. If

he or she was operating on the discourse level, we

label the errors occurring misformulation or

misprocessing errors" [14].

The classification of levels of error for the written

medium as proposed by James reflects standard

views of linguistics. The levels of error for the

written medium that are adapted from James are

shown in Table 2.

Table 2: Levels of error for written medium

Turning to studies on error analysis in Malaysia,

it is worth noting that several studies are available.

One such study was carried out by Lim [15] under

the supervision of Andrew D. Cohen, Marianne

Celce-Murcia and Clifford H. Prator, Jr. This study

produced a classification scheme of errors that were

displayed by Malaysian high school students.

Thirteen major types of errors discernable in the


students' writing from the corpus that Lim analysed

were tenses, articles, agreement, infinitive and

gerundive constructions, pronouns, possessive and

attributive structures, word order, incomplete

structures, negative constructions, lexical

categories, mechanics, miscellaneous unclassifiable

errors and use of typical Malaysian words.

According to Lim, areas that posed the greatest

difficulty for these students were tenses, use of

articles, agreement, prepositions and spelling. The

classification scheme of major categories of

common errors is further refined into sub-categories

and is documented in Lim [15].

3 Analysis of Errors in Malaysian

Students' Writing

This section describes the analysis of

errors in Malaysian students' writing.

3.1 Objective of the study

The objective of this study is to analyze the types of

errors in essays written by Malaysian

undergraduates. The following research questions

guide the study:

1. What are the most common errors made by the

learners?

2. What are the errors that need to be analyzed by a

marking tool that is going to be useful for the

learners?

358

unclassifiable errors, word choice, word form, and

verb to be.

Markin 3.1 software [16] is used to speed up the

process of analyzing the essays for errors. The

software is used so that classification of errors and

statistical analysis of errors are made accurately.

The annotation buttons in the software are first

customized accordingly based on the error

classification scheme. Three subject-matter experts

used Markin 3.1 software to classify the errors in

400 essays written by undergraduates. The subjectmatter experts are language teachers that have taught

English for at least ten years at institutions of higher

learning in Malaysia.

3.3 Results

The results of the error analysis carried out semiautomatically by subject-matter experts on 400

essays using Markin 3.1 software is as shown in

Table 3. The average is calculated by dividing the

number of errors with the total numbers of essays.

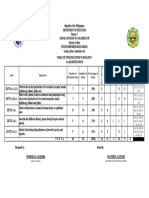

Table 3: Types of errors in learners essays

3.2 Methodology

Essays are written by 112 undergraduates who have

enrolled in Written Communication course at the

School of Language Studies and Linguistics, Faculty

of Social Sciences and Humanities, Universiti

Kebangsaan Malaysia. These essays are part of their

assignment submitted for the course. Since all

students entering Malaysian universities come from

similar background, we would assume that these

students can be taken as a representative of other

Malaysian students.

The research adopts the error classification

scheme that is originally developed by Lim

consisting of 13 types of errors. The error

classification scheme is adapted further based on the

researchers experience of teaching writing for more

than 15 years. The final classification scheme

consists of 17 types of errors which are as follows:

tenses, articles, subject verb agreement, other

agreement errors, infinitive, gerunds, pronouns,

possessive and attributive structures, word order,

incomplete structures, negative constructions,

prepositions,

mechanics,

miscellaneous

The six most common errors made by learners

are as follows: tenses, prepositions, articles, word

choice, mechanics, and verb to be.
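The averaging and ranking described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure built into Markin 3.1: the function names, the `(essay_id, error_type)` annotation format, and the sample annotations are assumptions made for the example, and the sample values are placeholders rather than the data behind Table 3.

```python
from collections import Counter

# The 17 error types of the final classification scheme used in this study.
ERROR_TYPES = (
    "tenses", "articles", "subject verb agreement", "other agreement errors",
    "infinitive", "gerunds", "pronouns", "possessive and attributive structures",
    "word order", "incomplete structures", "negative constructions",
    "prepositions", "mechanics", "miscellaneous unclassifiable errors",
    "word choice", "word form", "verb to be",
)


def average_errors_per_essay(counts, num_essays):
    """Divide each error count by the total number of essays."""
    if num_essays <= 0:
        raise ValueError("num_essays must be positive")
    return {t: counts.get(t, 0) / num_essays for t in ERROR_TYPES}


def most_common(averages, n=6):
    """Return the n error types with the highest average, in decreasing order."""
    return sorted(averages, key=averages.get, reverse=True)[:n]


# Hypothetical annotations: one (essay_id, error_type) pair per marked error.
# These are placeholders for illustration, NOT the data behind Table 3.
annotations = [
    (1, "tenses"), (1, "tenses"), (1, "articles"),
    (2, "tenses"), (2, "prepositions"), (2, "prepositions"),
]

counts = Counter(error_type for _, error_type in annotations)
averages = average_errors_per_essay(counts, num_essays=2)
print(most_common(averages, n=3))  # ['tenses', 'prepositions', 'articles']
```

With the real annotation counts and `num_essays=400`, the same two functions would reproduce the per-essay averages and the decreasing-order ranking reported here.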

4 Design and Implementation

Based on the result of the analysis in the previous

section, it seems that the errors that need to be

analyzed by the error analysis marking tool are

tenses, prepositions, articles, word choice,


mechanics, and verb to be.

The next step in this research is the development

of techniques and algorithms for detecting and

analyzing these errors in learners' essays. Based on

these algorithms, the error-analysis marking tool

will be developed.
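As a rough illustration of what one such detection technique might look like, the sketch below flags a narrow class of article errors with two regular expressions. It is a toy example under stated assumptions, not the algorithm planned for the tool: the `ErrorAnnotation` record, the `detect_article_errors` function, and the spelling-based a/an rule are all hypothetical, and the rule ignores pronunciation (it would wrongly flag "a university").

```python
import re
from typing import List, NamedTuple


class ErrorAnnotation(NamedTuple):
    """Hypothetical record for one flagged span in an essay."""
    error_type: str  # category name from the classification scheme
    start: int       # character offset where the flagged word begins
    end: int         # character offset just past the flagged word
    message: str     # short hint for the learner


# Hypothetical spelling-based rules: "a" before a vowel letter and
# "an" before a consonant letter. Group 1 captures the article itself.
_RULES = (
    (re.compile(r"\b(a)\s+[aeiou]\w*", re.IGNORECASE),
     "use 'an' before a vowel letter"),
    (re.compile(r"\b(an)\s+[b-df-hj-np-tv-z]\w*", re.IGNORECASE),
     "use 'a' before a consonant letter"),
)


def detect_article_errors(text: str) -> List[ErrorAnnotation]:
    """Return a/an mismatches found in text, ordered by position."""
    found = []
    for pattern, message in _RULES:
        for match in pattern.finditer(text):
            found.append(
                ErrorAnnotation("articles", match.start(1), match.end(1), message)
            )
    return sorted(found, key=lambda annotation: annotation.start)


for annotation in detect_article_errors("She ate a apple and an banana."):
    print(annotation.start, annotation.end, annotation.message)
```

Returning character offsets rather than corrected text keeps the detector separate from presentation, which would make it easier to embed in another system.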

The completed tool can either be used on its own

or be incorporated into other systems. For

example, this tool can be included in a

computer-assisted language learning package. Another option

is to incorporate the tool into the CMS (Coursework

Management System) that has already been

developed at UKM [17].

5 Conclusion

The results of the study show that Malaysian ESL

learners display a certain profile of errors in writing

that is unique to their SLA and their learning of a TL. An

error analysis marking tool that is developed

specifically for these learners should help

them to write better and more effective essays. In

developing an error analysis marking tool that is

going to be useful for Malaysian ESL learners, the

tool must be able to detect and analyze errors in

tenses, prepositions, articles, word choice,

mechanics and verb to be.

Acknowledgment:

This study is funded by MOSTI (Ministry of

Science, Technology and Innovation), Malaysia with

the following research code: e-ScienceFund 01-0102-SF0092

References:

[1] Darus, S. A prospect of automatic essay

marking. Paper presented at International

Seminar on language in the global context:

Implications for the language classroom,

RELC, Singapore, 19-21 April, 1999.

[2] Marshall, S. and Barron, C. MARC

Methodical Assessment of Reports by

Computer. System. Vol. 15, Issue 2, pp. 161-167. 1987.

[3] Holmes, M. Markin 32 version 1.2. (Online)

http://www.arts.monashedu.au/others/calico/re

view/markin.htm (12 June 2000).

[4] Moreale, E. and Vargas-Vera, M. Genre analysis

and the automated extraction of arguments

from student essays. Proceedings of the

Seventh International Computer Assisted

Assessment Conference, Loughborough

University, 8-9 July 2003. (Online)

http://www.caaconference.com/pastConference

s/2003/procedings/index.asp (17 August 2007).


[5] Burstein, J., Kukich, K., Wolff, S., Lu, C. and

Chodorow, M. Computer analysis of essays.

Proceedings of the NCME Symposium on

Automated Scoring, Montreal, Canada. 1998.

[6] Foltz, P. W., Kintsch, W. and Landauer, T. K.

The measurement of textual coherence with

latent semantic analysis. Discourse Processes,

Vol. 25, Issue 2 & 3, 1998, pp.285-307.

[7] Shermis, M. D., Mzumara, H. R., Olson, J. and

Harrington, S. On-line grading of student

essays: PEG goes on the world wide web.

Assessment & Evaluation in Higher Education,

Vol. 26, Issue 3, 2001, pp.247-259.

[8] Vantage Technologies Inc., (Online)

http://www.vantagelearning.com/intellimetric/

(17 August, 2007).

[9] Christie, J. R. Automated essay marking for

content ~ does it work? Proceedings of the

Seventh International Computer Assisted

Assessment Conference, Loughborough

University, 8-9 July 2003. (Online)

http://www.caaconference.com/pastConference

s/2003/procedings/index.asp (17 August 2007).

[10] Ming, P. Y., Mikhailov, A. and Kuan, T. L.

Intelligent essay marking system. 2000.

(Online) http://ipdweb.np.edu.sg/lt/feb00/intelli

gent_essay_marking.pdf (19 July 2003).

[11] Kaplan, R. B. Cultural thought patterns in

inter-cultural education. Language

Learning, Vol. 16, Issue 1&2, 1966, pp. 1-20.

[12] Ferris, D. Treatment of error in second

language student writing, Ann Arbor, The

University of Michigan Press, 2002.

[13] Truscott, J. The case against grammar

correction in L2 writing classes. Language

Learning, Vol. 46, 1996, pp. 327-369.

[14] James, C. Errors in language learning and use:

Exploring error analysis. Harlow, Essex:

Addison Wesley Longman Limited. 1998.

[15] Lim, H. P. An error analysis of English

compositions written by Malaysian-speaking

high school students, M.A. TESL thesis,

University of California. 1974.

[16] Holmes, M. Markin 3.1 Release 2 Build 7.

Creative Technology. 1996-2004. (Online)

http://www.cict.co.uk/software/markin/ (17

August 2007).

[17] Mohd Zin, A., Darus, S., Nordin, M. J. and Md.

Yusoff, A. M. Using a Coursework

Management System in Language Teaching.

Teaching English with Technology. 2003, Vol.

3, Issue 1. (Online):

http://www.iatefl.org.pl/call/j_soft12.htm (17

August 2007).
