International Journal of Computer Applications (0975 8887)

2nd National Conference on Future Computing February 2013

AI Technique in Diagnostics and Prognostics

A. Poongodai (Ph.D)

Pondicherry University, Karaikal Campus, Karaikal.

S. Bhuvaneswari, Reader,

Pondicherry University, Karaikal Campus, Karaikal.

ABSTRACT

Artificial Intelligence (AI) is the science and engineering of making intelligent machines, especially intelligent computer programs. Activities in AI include searching, recognizing patterns and making logical inferences. This paper discusses the AI techniques used for system diagnostics and prognostics. The three AI approaches to diagnostics and prognostics are (1) rule-based diagnostics, (2) model-based diagnostics and (3) data-driven approaches. System diagnosis is the process of inferring the cause of any abnormal or unexpected behavior. Prognostics is the prediction of the time at which a system or a component will no longer perform its intended function.

General Terms

AI based approach for Diagnostics and Prognostics

Keywords

Artificial Intelligence, Diagnostics, Prognostics, Rule based system, Model based AI approach, Data driven approach.

1. INTRODUCTION

Artificial Intelligence (AI) could be defined as the ability of computer software and hardware to do those things that we, as humans, recognize as intelligent behavior. Traditionally those things include such activities as:

1. Searching: finding the best solution in a large search space.

2. Recognizing patterns: finding items with similar characteristics, or identifying an entity when not all its characteristics are stated or available.

3. Making logical inferences: drawing conclusions based upon the given hypothesis.

The diverse approaches to diagnostic reasoning include rule-based systems, model-based diagnostics, learning systems, case-based reasoning systems and probabilistic reasoning systems. The first three approaches follow AI techniques. The goal of this paper is to describe how the various AI-based techniques are used in system diagnostics and prognostics.

2. SYSTEM DIAGNOSIS

System diagnosis is the process of inferring the cause of any abnormal or unexpected behavior. During system operation, indications of correct or incorrect functioning of a system may be available. In complex applications, the symptoms of incorrect (or correct) behavior are either observed directly or need to be inferred from other variables that are observable during system operation. Monitoring is a term that denotes observing system behavior. The capability for monitoring a system is a key prerequisite to diagnosing problems in the system.

Diagnostic applications make use of system information from the design phase, such as safety and mission assurance analysis, failure modes and effects analysis, hazards analysis, functional models, fault propagation models, and testability analysis.

In any system, given the complexity of the experimental data and the variety of failure modes, a diagnostic system based on AI techniques was designed. A comprehensive analysis of the data was carried out to extract a set of uncorrelated features that would not only detect the various fault modes but also specify the actionable knowledge to be applied in order to solve the problems.

The system must take as input sensor values and the command stream, and ideally performs the following tasks:

- Fault Detection (detecting that something is wrong)

- Fault Isolation (determining the location of the fault)

- Fault Identification (determining what is wrong, i.e., determining the fault mode)

- Fault Prognostics (determining when a failure will occur, conditional on anticipated future usage)

Diagnostics includes not only fault detection but also fault isolation and fault identification.

3. PROGNOSTICS

Prognostics is an engineering discipline focused on predicting the time at which a system or a component will no longer perform its intended function. Prognostics includes detecting the precursors of a failure and predicting how much time remains before a likely failure. Prognostics is the most difficult of these tasks (detection, isolation, identification and prognostics).

A simple form of prognostics, known as a life usage model, is widely in use. It gathers statistical information about the amount of time that a component lasts before failure, and uses these statistics, collected from a sample of components, to make remaining life predictions for individual components.
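A life usage model of this kind can be sketched in a few lines. The following is only an illustration under stated assumptions: the function name `remaining_life`, the sample lifetimes and the choice to average only over components that outlived the current age are hypothetical and are not taken from the paper.

```python
from statistics import mean

def remaining_life(failure_times_hours, current_age_hours):
    """Estimate remaining useful life from a sample of observed lifetimes.

    Assumption: only lifetimes longer than the current age are used,
    because the component has already outlived the shorter ones.
    """
    survivors = [t for t in failure_times_hours if t > current_age_hours]
    if not survivors:
        return 0.0  # component has outlived every sample; no data left
    return mean(survivors) - current_age_hours

# Hypothetical lifetimes (hours) gathered from a sample of components.
sample_lifetimes = [900.0, 1000.0, 1100.0, 1200.0]

print(remaining_life(sample_lifetimes, 0.0))    # 1050.0 (mean lifetime)
print(remaining_life(sample_lifetimes, 950.0))  # 150.0 (1100 - 950)
```

Such a model uses population statistics only; it does not look at the condition of the individual component.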

We must be able to detect faults before we can diagnose them.

Similarly, we must be able to diagnose them before we can

perform prognostics.

In addition to fault detection, diagnostics, and prognostics, the system should also include support for deciding what actions to take in response to a failure or a failure precursor.

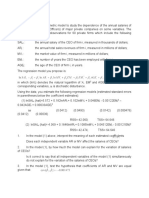

Table 1. Taxonomy of model-based and data-driven approaches

| Taxonomy | Description | Classification |
|---|---|---|
| Model Based Algorithms | Encode human knowledge via a hand-coded representation of the system | Physics based: systems of differential equations. Classical AI techniques: rule-based expert systems, finite-state machines, qualitative reasoning |
| Data Driven Approaches | Automatically fit a model of system behaviour to historical data, rather than hand-coding a model | Conventional numerical algorithms: linear regression, Kalman filters. Machine learning: neural networks, decision trees, support vector machines |

4. AI TECHNIQUES

The three AI approaches to diagnostics and prognostics are (1) the rule-based approach, (2) the model-based approach and (3) data-driven approaches.

4.1 Rule based approach

Rule-based expert systems have wide application for diagnostic tasks. The diagnostic procedures can be broken down into multiple steps and encoded into rules. A rule describes the action(s) that should be taken if a symptom is observed. A set of rules can be incorporated into a rule-based expert system, which can then be used to generate diagnostic solutions. This approach suits cases in which expertise and experience are available but deep understanding of the physical properties of the system is either unavailable or too costly to obtain.

Two primary reasoning methods may be employed for generating the diagnosis results:

1. Forward Chaining

2. Backward Chaining

In forward chaining, the process examines rules to see which ones match the observed evidence. If only one rule matches, the process is simple. However, if more than one rule matches, a conflict set is established and is examined using a pre-defined strategy that assigns priority to the applicable rules. Rules with higher priority are applied first to obtain diagnostic conclusions.

If the starting point is a conclusion (a hypothesis), a backward chaining algorithm collects or verifies evidence that supports the hypothesis. If the supporting evidence is verified, then the hypothesis is reported as the diagnostic result.

The advantages of rule-based systems include:

- increase in the availability and the reusability of expertise, but at high cost

- increased safety, if the expertise must be used in hazardous environments

- increased reliability for decision making

- fast and steady response

- consistent performance

- built-in explanation facility

The challenges are:

1. Deciding the order in which the rules should be matched

2. Determining the completeness, consistency and correctness of derived rules
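The two reasoning methods of Section 4.1 can be illustrated with a small sketch. Everything specific here is an assumption made for illustration: the rule base, the symptom names and the numeric priorities are hypothetical, the conflict resolution strategy is simply "highest priority first", and the rule base is assumed to be free of cycles.

```python
# Hypothetical rule base; each rule is (priority, premises, conclusion).
RULES = [
    (2, {"high_temperature", "low_coolant_level"}, "coolant_leak"),
    (1, {"high_temperature"}, "sensor_drift"),
    (3, {"coolant_leak", "pressure_drop"}, "pump_seal_failure"),
]

def forward_chain(observed):
    """Fire matching rules, highest priority first, until nothing new is derived."""
    facts = set(observed)
    fired = []
    while True:
        # Conflict set: rules whose premises hold and whose conclusion is new.
        conflict_set = [r for r in RULES if r[1] <= facts and r[2] not in facts]
        if not conflict_set:
            return facts, fired
        # Conflict resolution: apply the rule with the highest priority.
        _, _, conclusion = max(conflict_set, key=lambda r: r[0])
        facts.add(conclusion)
        fired.append(conclusion)

def backward_chain(hypothesis, observed):
    """Return True if the hypothesis is observed or supported by some rule."""
    if hypothesis in observed:
        return True
    for _, premises, conclusion in RULES:
        if conclusion == hypothesis and all(
            backward_chain(p, observed) for p in premises
        ):
            return True
    return False

observed = {"high_temperature", "low_coolant_level", "pressure_drop"}
facts, fired = forward_chain(observed)
print(fired)  # ['coolant_leak', 'pump_seal_failure', 'sensor_drift']
print(backward_chain("pump_seal_failure", observed))              # True
print(backward_chain("pump_seal_failure", {"high_temperature"}))  # False
```

Forward chaining starts from the evidence and derives every conclusion it can, while backward chaining starts from one hypothesis and only checks the evidence needed to support it.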

4.2 Model Based Techniques

Model-based reasoning systems refer to inference methods used in expert systems based on a model of the physical world. Models might be quantitative, i.e., based on mathematical equations, or qualitative, i.e., based on cause/effect models. They may include representation of uncertainty, behaviour over time and normal behaviour, or might only represent abnormal behaviour.

The main focus of application development is developing the model. Then, at run time, an engine combines this model knowledge with observed data to derive conclusions such as a diagnosis or a prediction.

Model-based algorithms encode human knowledge via a (more or less) hand-coded representation of the system. They are either physics based or AI based. In the AI-based case, the hand-coded model uses qualitative, rather than numerical, variables to describe the physics of the system. Model-based AI techniques include rule-based expert systems, finite-state machines and qualitative reasoning.

4.2.1 Rule Based Expert Systems

An Expert System is an intelligent computer program that

uses knowledge and inference procedures to solve problems

that are difficult enough to require significant human expertise

for their solution.

Early diagnostic expert systems were rule-based and used empirical reasoning, whereas newer model-based expert systems use functional reasoning. In rule-based systems, knowledge is represented in the form of production rules. The empirical association between premises and conclusions in the knowledge base is their main characteristic. These associations describe cause-effect relationships to determine logical event chains that are used to represent the propagation of complex phenomena. Heuristic-based expert systems can guide efficient diagnostic procedures but they lack generality.

The limitations of the early diagnostic expert systems are as follows:

- Inability to represent accurately time-varying and spatially varying phenomena

- Inability of the program to detect specific gaps in the knowledge base

- Difficulty for knowledge engineers to acquire knowledge from experts reliably

- Difficulty for knowledge engineers to ensure consistency in the knowledge base

- Inability of the program to learn from its errors

The rule-based approach has a number of weaknesses such as

lack of generality and poor handling of novel situations but it

also offers efficiency and effectiveness. The main limitations

of the early expert systems may be eliminated by using

model-based methods. Expert knowledge is contained

primarily in a model of the expert domain. Such models can

be used for simulation to explore hypothetical problems.

Model-based diagnosis uses knowledge about structure,

function and behaviour and provides device-independent

diagnostic procedures. These systems offer more robustness in

diagnosis because they can deal with unexpected cases that

are not covered by heuristic rules. The knowledge bases of

such systems are less expensive to develop because they do

not require field experience for their building. In addition,

they are more flexible in case of design changes. Model-based

diagnosis systems offer flexibility and accuracy but they are

also domain dependent.

4.2.2 Qualitative Simulation in Fault Detection

In this method, fault detection is performed by

comparing the predicted behaviour of a system based on

qualitative models with the actual observation. Qualitative

models of normal and faulty equipment are simulated to

describe the range of possible behaviours of the operation of a

system without numeric models. The modelling of physical

situations contains a set of qualitative equations derived from

a set of quantitative equations or from qualitative descriptions

about relationships among the process variables and contains

knowledge about structure, function and behaviour. Sensor

data from actual processes are used to select between the

different developed models. The fault diagnosis is realised by

matching between predicted and observed behaviour.
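The matching of predicted and observed behaviour can be sketched as follows. This is a minimal illustration only: the tank example, the three candidate models and their allowed qualitative trends are hypothetical assumptions, not models from the paper.

```python
# Qualitative value space: "-" (decreasing), "0" (steady), "+" (increasing).

def sign(x, tol=1e-6):
    """Map a number to a qualitative value."""
    if x > tol:
        return "+"
    if x < -tol:
        return "-"
    return "0"

# Hypothetical qualitative models of a tank. Each model maps the qualitative
# value of (inflow - outflow) to the set of level trends it allows.
MODELS = {
    "normal": {"+": {"+"}, "0": {"0"}, "-": {"-"}},
    # A leak removes extra liquid, so the level can only do worse than normal.
    "leak": {"+": {"+", "0", "-"}, "0": {"-"}, "-": {"-"}},
    # A stuck level sensor reports a steady level whatever the flows do.
    "sensor_stuck": {"+": {"0"}, "0": {"0"}, "-": {"0"}},
}

def consistent_models(observations):
    """Return the models whose predictions cover every observation.

    observations: list of (inflow, outflow, level_change) numeric samples.
    """
    candidates = []
    for name, model in MODELS.items():
        if all(sign(dl) in model[sign(fi - fo)] for fi, fo, dl in observations):
            candidates.append(name)
    return candidates

healthy = [(2.0, 1.0, 0.5), (1.0, 1.0, 0.0), (1.0, 2.0, -0.4)]
leaking = [(2.0, 1.0, 0.2), (1.0, 1.0, -0.3), (1.0, 2.0, -0.8)]
print(consistent_models(healthy))  # ['normal']
print(consistent_models(leaking))  # ['leak']
```

Only the signs of the quantities are used, which is why no accurate numerical model is required.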

The main advantage of this approach is that accurate

numerical knowledge and time consuming mathematical

models are not needed. On the other hand, this method only

offers solutions in cases where high numerical accuracy is not

needed.

4.3 Data Driven Approaches

Learning systems are data-driven approaches that are derived

directly from routinely monitored system operating data. They

rely on the assumption that the statistical characteristics of the

data are stable, unless a malfunctioning event occurs in the

system.
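The stability assumption can be turned into a very simple detector. The sketch below assumes a single sensor, a hypothetical fault-free baseline and a threshold of three standard deviations; none of these choices comes from the paper.

```python
from statistics import mean, stdev

def detect_faults(baseline, new_samples, k=3.0):
    """Flag samples more than k standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [abs(x - mu) > k * sigma for x in new_samples]

# Hypothetical readings recorded while the system was known to be healthy.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]

print(detect_faults(baseline, [10.1, 9.9, 12.5]))  # [False, False, True]
```

The baseline has mean 10.0 and sample standard deviation 0.2, so only the reading 12.5 falls outside the 3-sigma band.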

Data Driven Approaches automatically fit a model of system

behavior to historical data, rather than hand-coding a model.

Data-driven approaches can either use conventional

numerical algorithms, such as linear regression or Kalman

filters, or they can use algorithms from the machine learning

and data mining AI communities, such as neural networks,

decision trees, and support vector machines.
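As a minimal sketch of the conventional numerical route, a linear regression can be fitted to a degradation indicator and extrapolated to a failure threshold. The indicator values, the threshold of 5.0 and the assumption of a roughly linear trend are hypothetical.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit y = slope * t + intercept."""
    n = len(ts)
    t_mean = sum(ts) / n
    y_mean = sum(ys) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
    sxx = sum((t - t_mean) ** 2 for t in ts)
    slope = sxy / sxx
    return slope, y_mean - slope * t_mean

def time_to_threshold(ts, ys, threshold):
    """Extrapolate the fitted trend to the time it crosses the threshold."""
    slope, intercept = fit_line(ts, ys)
    if slope <= 0:
        return None  # no upward degradation trend, so no crossing predicted
    return (threshold - intercept) / slope

hours = [0.0, 100.0, 200.0, 300.0, 400.0]
vibration = [1.0, 1.5, 2.0, 2.5, 3.0]  # hypothetical health indicator
crossing = time_to_threshold(hours, vibration, threshold=5.0)
print(crossing, crossing - hours[-1])
```

With these values the fitted trend reaches the threshold at about 800 hours, which leaves about 400 hours of remaining life after the last measurement.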

The strengths of data-driven techniques are as follows:

- ability to transform the high-dimensional noisy data into lower-dimensional information for detection and diagnostic decisions

- ability to handle highly collinear data of high dimensionality

- substantially reduce the dimensionality of the monitoring problem

- compress the data for archiving purposes

- provide monitoring methods

- facilitate model building via identification of dynamic relationships among data elements

The main drawback of data-driven approaches is that their

efficacy is highly dependent on the quantity and quality of

system operational data.

The engineering processes needed to relate system malfunctioning events using a data-driven diagnosis approach typically involve the following steps:

- Determine the High-Impact Malfunctions

- Data Selection, Transformation, De-noising and Preparation

- Data Processing Techniques

- Testing and Validation

- Fusion

Table 2. Techniques used in the system

| | Model based AI system | M/c Learning system |
|---|---|---|
| Fault Detection | Expert system | Clustering |
| Diagnostics | Finite state machines | Decision Tree Induction |
| Prognostics | | Neural networks |

4.3.1 Decision tree Induction

Decision tree induction is a greedy approach which constructs the decision tree in a top-down, recursive, divide-and-conquer manner. The problem of constructing an optimal decision tree is NP-complete. Decision trees are built in two phases: a growth phase and a pruning phase.

In the growth phase, the training data set is recursively partitioned until all the records in a partition belong to the same class. For every partition, a new node is added to the decision tree. Initially, the tree has a single root node for the entire data set. For a set of records in a partition P, a test criterion T for further partitioning the set into P1, ..., Pm is first determined. New nodes for P1, ..., Pm are created and these are added to the decision tree as children of the node for P. Also, the node for P is labeled with test T, and partitions P1, ..., Pm are then recursively partitioned. A partition in which all the records have identical class labels is not partitioned further, and the leaf corresponding to it is labeled with the class. The growth phase constructs a perfect tree that accurately classifies every record from the training set.
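The growth phase can be sketched as a short recursive procedure. The sketch makes several assumptions that the paper does not state: the test criterion T is an equality test chosen by lowest weighted Gini impurity (as in CART), every split is binary (m = 2), and the records and labels are hypothetical. Pruning is not shown.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_test(records, labels):
    """Pick the (feature, value) equality test with lowest weighted impurity."""
    best = None
    for f in range(len(records[0])):
        for v in {r[f] for r in records}:
            left = [l for r, l in zip(records, labels) if r[f] == v]
            right = [l for r, l in zip(records, labels) if r[f] != v]
            if not left or not right:
                continue  # this test does not split the partition
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, v)
    return best

def grow(records, labels):
    """Recursively partition until every partition is pure."""
    if len(set(labels)) == 1:
        return labels[0]  # pure partition becomes a leaf labeled with the class
    test = best_test(records, labels)
    if test is None:  # identical records with mixed labels: majority leaf
        return Counter(labels).most_common(1)[0][0]
    _, f, v = test
    yes = [(r, l) for r, l in zip(records, labels) if r[f] == v]
    no = [(r, l) for r, l in zip(records, labels) if r[f] != v]
    return {
        "test": (f, v),
        "yes": grow([r for r, _ in yes], [l for _, l in yes]),
        "no": grow([r for r, _ in no], [l for _, l in no]),
    }

def classify(tree, record):
    """Follow tests from the root until a leaf (a class label) is reached."""
    while isinstance(tree, dict):
        f, v = tree["test"]
        tree = tree["yes"] if record[f] == v else tree["no"]
    return tree

# Hypothetical records: (temperature, vibration) with a class label.
records = [("high", "noisy"), ("high", "quiet"), ("normal", "noisy"), ("normal", "quiet")]
labels = ["fault", "fault", "fault", "ok"]
tree = grow(records, labels)
print([classify(tree, r) for r in records])  # reproduces the training labels
```

Because growth stops only at pure partitions, the resulting tree classifies every training record correctly, which is exactly the property that makes a later pruning phase useful.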

However, one often achieves greater accuracy in the classification of new objects by using an imperfect, smaller decision tree rather than one which perfectly classifies all known records. The reason is that a decision tree which is perfect for the known records may be overly sensitive to statistical irregularities and idiosyncrasies of the training set. Thus, most algorithms perform a pruning phase after the building phase in which nodes are iteratively pruned to prevent overfitting and to obtain a tree with higher accuracy.

A decision tree, or any learned hypothesis h, is said to overfit the training data if another hypothesis h2 exists that has a larger error than h when tested on the training data, but a smaller error than h when tested on the entire dataset. The various decision tree induction algorithms include Hunt's algorithm, CART, ID3, C4.5, SLIQ and SPRINT.

The classification accuracy of decision trees can be improved by constructing (1) new binary features with logical operators such as conjunction, negation and disjunction and (2) at-least M-of-N features, whose value is true if at least M of the N conditions are true of the instance and false otherwise. Gama and Brazdil (1999) combined a decision tree with a linear discriminant for constructing multivariate decision trees.
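An at-least M-of-N feature of the kind described above is easy to construct. In the sketch below the helper name `m_of_n`, the sensor fields and the limits are hypothetical and chosen only for illustration.

```python
def m_of_n(m, conditions):
    """Build a feature that is true when at least m of the conditions hold."""
    def feature(instance):
        return sum(1 for cond in conditions if cond(instance)) >= m
    return feature

# Hypothetical conditions on a sensor record.
conditions = [
    lambda r: r["temperature"] > 90,
    lambda r: r["vibration"] > 0.5,
    lambda r: r["pressure"] < 2.0,
]
at_least_2_of_3 = m_of_n(2, conditions)

print(at_least_2_of_3({"temperature": 95, "vibration": 0.7, "pressure": 3.0}))  # True
print(at_least_2_of_3({"temperature": 95, "vibration": 0.1, "pressure": 3.0}))  # False
```

The new boolean feature can then be offered to the tree learner alongside the original attributes.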

4.3.2 Support vector machine (SVM)

An SVM is a statistical classifier that analyzes data and recognizes patterns. The basic SVM is a non-probabilistic binary linear classifier: it takes a set of input data and predicts, for each given input, which of two possible classes the input belongs to. It is also used for regression analysis. SVMs can also be applied to multi-class classification. An SVM can classify successfully when combined with another technique that reduces the number of dimensions.
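The binary linear case can be sketched without any library. The training procedure used here, per-sample subgradient descent on the regularised hinge loss, is an assumption chosen for brevity (the paper does not name a training algorithm), and the two-dimensional data points are hypothetical, already centred features.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Minimise the regularised hinge loss by per-sample subgradient descent."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):  # y is +1 or -1
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # L2 regularisation shrinks w on every step.
            w = [wi - lr * lam * wi for wi in w]
            if margin < 1:  # inside the margin or misclassified
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b

def predict(w, b, x):
    """Return the predicted class, +1 or -1, for one input vector."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical two-class data: -1 = normal, +1 = faulty.
points = [(-2.0, -2.0), (-1.0, -2.0), (-2.0, -1.0),
          (2.0, 2.0), (1.0, 2.0), (2.0, 1.0)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
print([predict(w, b, x) for x in points])
```

The prediction depends only on which side of the hyperplane w·x + b = 0 the input falls, which is what makes the classifier binary, linear and non-probabilistic.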

4.3.3 Neural Network

The backpropagation (BP) algorithm is a neural network learning algorithm which works on a multilayer feed-forward network, a type of neural network. A neural network classifier is a network of units, where the input units usually represent terms and the output unit(s) represent the category. A BP neural network can improve the accuracy of classification. There are several theoretical advantages of BP neural networks that make them especially suitable for prediction tasks. A trained network can process a batch of test data and produce predictions quickly. The advantages of neural network techniques are their high tolerance to noisy data and their ability to classify new objects on which they have not been trained.

Neural networks find application in fault detection mainly because of their ability to recognise patterns. The network is trained to learn, from the presentation of examples, to form an internal representation of the problem. Diagnosis requires relating the sensor measurements to the causes of faults, and distinguishing between normal and abnormal states. Input vectors are introduced to the network and the weights of the connections are adjusted to achieve specific goals.
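The weight adjustment can be illustrated with a very small feed-forward network trained by backpropagation. This is a sketch under stated assumptions: the network size (two inputs, two hidden units, one output), the sigmoid activation, the squared-error loss, the initial weights, the learning rate and the four training samples are all hypothetical choices, not taken from the paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(net, x):
    """Return hidden activations and the output for one input vector."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(net["w_hidden"], net["b_hidden"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"])
    return hidden, out

def mean_squared_error(net, data):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in data) / len(data)

def train_step(net, data, lr):
    """One pass of per-sample backpropagation over the data set."""
    for x, t in data:
        hidden, out = forward(net, x)
        # Output delta: derivative of 0.5 * (out - t)^2 through the sigmoid.
        d_out = (out - t) * out * (1.0 - out)
        # Hidden deltas: output delta propagated back through w_out.
        d_hidden = [d_out * w * h * (1.0 - h)
                    for w, h in zip(net["w_out"], hidden)]
        # Adjust the connection weights against the gradient.
        net["w_out"] = [w - lr * d_out * h for w, h in zip(net["w_out"], hidden)]
        net["b_out"] -= lr * d_out
        for j, dh in enumerate(d_hidden):
            net["w_hidden"][j] = [w - lr * dh * xi
                                  for w, xi in zip(net["w_hidden"][j], x)]
            net["b_hidden"][j] -= lr * dh

# Hypothetical fixed starting weights (no random initialisation).
net = {
    "w_hidden": [[0.5, -0.4], [0.3, 0.8]],
    "b_hidden": [0.0, 0.0],
    "w_out": [0.7, -0.6],
    "b_out": 0.0,
}
# Hypothetical samples: (normalised temperature, vibration) -> 1.0 means fault.
data = [((0.1, 0.2), 0.0), ((0.2, 0.1), 0.0),
        ((0.8, 0.9), 1.0), ((0.9, 0.7), 1.0)]

initial_loss = mean_squared_error(net, data)
for _ in range(2000):
    train_step(net, data, lr=0.5)
final_loss = mean_squared_error(net, data)
print(initial_loss, final_loss)
```

The learned knowledge ends up entirely in the numeric weights of `net`, which is why it is hard to inspect or explain.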

One of their limitations in the on-line fault detection process is the high accuracy of the measurements needed in order to calculate the evolution of faults. Fault detection usually makes use of measurements taken by instruments that may not be sensitive enough or that may produce noisy data. In this case the neural network may not be successful in identifying faults. It is nearly always necessary to pre-process the data so that only meaningful parameters are presented to the net.

Neural networks are able to learn diagnostic knowledge from

process operation data. However, the learned knowledge is in

the form of weights which are difficult to comprehend.

Another limitation compared to expert systems is their

inability to explain the reasoning. This is because neural

networks do not actually know how they solve problems or

why in a given pattern recognition task they are able to

recognise some patterns but not others. They operate as black

boxes using unknown rules and are unable to explain the

results.

5. CONCLUSION

One of the biggest challenges for AI-based prognostics is verification and validation (V&V). The complexity of AI systems makes them very difficult to verify and validate before deployment. AI-based V&V may offer the potential to help solve this problem. Several biology-inspired AI techniques, including neural networks, are currently popular. Neural networks model a brain learning by example: given a set of right answers, the network learns the general patterns. Reinforcement learning models a brain learning by experience: given some set of actions and an eventual reward or punishment, it learns which actions are good or bad. Genetic algorithms model evolution by natural selection: given some set of agents, let the better ones live and the worse ones die. Typically, genetic algorithms do not allow agents to learn during their lifetimes, while neural networks allow agents to learn only during their lifetimes. Reinforcement learning allows agents to learn during their lifetimes and share knowledge with other agents.
