Combining Pattern Classification and Assumption-Based Techniques for Process Fault Diagnosis


Computers chem. Engng, Vol. 16, No. 4, pp. 299-312, 1992

Printed in Great Britain. All rights reserved

0098-1354/92 $5.00 + 0.00

Copyright © 1992 Pergamon Press Ltd

COMBINING PATTERN CLASSIFICATION AND ASSUMPTION-BASED TECHNIQUES FOR PROCESS FAULT DIAGNOSIS

S. N. KAVURI and V. VENKATASUBRAMANIAN†

Laboratory for Intelligent Process Systems, School of Chemical Engineering, Purdue University, West Lafayette, IN 47907, U.S.A.

(Received 19 October 1990; final revision received 18 February 1991; received for publication 24 May 1991)

Abstract-Assumption-based

approaches have been proposed in recent times for the diagnosis of process

malfunctions. These methods are systematic in the derivation of the knowledge base and are general in

approach. However, the quantitative approaches that have been proposed in the past are compiled,

difficult to develop and lack generality. Furthermore, the standard assumption-based approaches that use

Boolean logic have problems with the completeness and resolution requirements. To circumvent these

problems, and to improve upon pattern classification techniques, we propose the tuples method which

combines assumption-based approaches with the pattern recognition techniques, using neural networks

to perform real-time diagnosis. The tuples method is based on deep-level quantitative models of the

process and its knowledge base is developed with relative ease. The method is robust in the sense that

it allows for modeling inaccuracies. It is also general in that the process model and the diagnostic method

are separated. This integrated approach was found to successfully diagnose single and multiple faults

including sensor faults and parameter drifts. It has good real-time speed, and gives early diagnosis of

malfunctions. Our study also suggests that the generalization characteristics of a neural network can be

improved by using a fully-connected network.

1. INTRODUCTION

Process fault diagnosis using compiled rule-based systems has been proposed in recent literature. The main problems with these types of systems are that they often prove difficult to maintain and validate, contain a great deal of process-specific knowledge, and lack generality in approach, which makes it difficult to accommodate new changes in the plant. Assumption-based approaches, on the other hand, not only separate the search from the knowledge base but also effectively provide a general framework for the specification of the knowledge base. They allow qualitative and/or quantitative information about the process to be included. Because of this flexibility, it is easier to develop and include process-specific knowledge separately and to accommodate changes.

A diagnosis is a conjecture that certain units are malfunctioning and the rest functional. The problem is to specify which units we conjecture to be faulty. An assumption-based diagnostic algorithm searches for a diagnosis by making or dropping assumptions about a unit's function. Groups of assumptions together form invariant relationships about the process. These invariant relations can also be called constraints. Balance equations are an example of invariant relations. Assumptions in a balance equation may correspond to the various coefficient values, initial and boundary conditions. We shall henceforth refer to these as parameters. In the description of a reactor, for example, an assumption may be made that the reactor is not leaking. The governing equations of a reactor would thus involve some assumptions about the expected function of the reactor. This is an example of an assumption that governs the validity of an equation and would be referred to as a structural assumption. Furthermore, since the validity of a balance constraint can only be verified using sensor data, one should also consider the assumption of sensor accuracy. Validity of all these assumptions guarantees the constraint (balance) satisfaction. Assumption-based methods assume that a set of constraints, each with a distinct set of assumptions, is available and that these constraints can be evaluated based on the sensor information from the process. When an assumption corresponding to a constraint fails, the equation is no longer balanced, resulting in a residual. We shall refer to this residual as the constraint deviation due to an assumption failure. Constraints may deviate in the positive or negative direction (i.e. the residual is positive or negative) depending on the assumption failure (say, a coefficient changes from its nominal value to a higher or lower value).

†To whom all correspondence should be addressed.

One of the first reported assumption-based

approaches was by Davis (1984) in the name of

constraint suspension. Constraints correspond to the

structural equations of the system. Constraint

suspension is based on the idea that there is no way

for all the structural equations (constraints) to be

active (i.e. all units are functioning as expected) and

produce the observed outputs when the overall process is malfunctioning. If the set of constraints is not

consistent with the global behavior that has been

observed, some of the constraints are dropped. If

consistency is found on suspending a constraint, a

malfunction is suspected at the unit responsible for

that constraint. The advantage of this approach is

that it does not need to know how a unit could

malfunction, thus being able to detect faults not

known a priori. Its application, however, is limited to

an off-line analysis, as it requires the outputs of a unit

to be measured (by probes) when the constraints of

that unit are suspended. The notion of a conflict set,

due originally to de Kleer (1987), is used often in

assumption-based approaches for multiple fault diagnoses. A conflict set is a minimal set of assumptions

about the normal functioning of the process which is

inconsistent with the process observations. de Kleer

(1987) proposed an automated truth maintenance

system (ATMS) that solves the multiple fault diagnosis problem by considering the consistent and inconsistent relationships between the assumptions via the

use of conflict sets. Reiter (1987) introduced the

principle of parsimony which assumes that no more

than the faults necessary for consistency need be

considered in a diagnosis. According to this principle,

a diagnosis is a minimal set of assumptions about the

system that need to be retracted for the observations

to be consistent with the system. Reiter showed that

a diagnosis is a minimal hitting set (or a cut set) of

the collection of all the minimal conflict sets. Here,

diagnosis is a conjecture that some minimal set of

faults has occurred. Reiter suggested an efficient

search for deriving all possible multiple fault conjectures which are minimal in the above respect. The

above-mentioned papers refer to general search

methods and do not provide any information about

their practical applications. Moreover, the above

works are geared to a qualitative description of the

system and are not suitable where sensor faults occur.

Reiter (1987), for example, had to make an explicit

assumption about sensor accuracy.

Assumption-based approaches using quantitative

methods have also been proposed. Here, the

knowledge base consists of a set of governing

equations describing the process. Associated with

each model equation are tolerance limits which

indicate when the equation is no longer representative

of the process. The method uses the fact that violation of a model equation indicates that at least one

of its associated assumptions is invalid. By examining

the sign and magnitude of the residual of each

equation, and by considering the assumptions on

which they depend, the most likely failed assumption

can be deduced. Quantitative description of the system is used by FALCON (Dhurjati et al., 1987) and

its successor Diagnostic Model Processor (Petti et al.,

1989). FALCON represents its knowledge base in the

form of a rule-based system. As the knowledge base

includes a great deal of process-specific knowledge, it

is not easy to adapt it to accommodate new changes

in the process (Venkatasubramanian and Dhurjati,

1987). It is not suitable for multiple fault diagnoses,

including parameter drifts and was limited in its scope

due to its use of rigid thresholds in evaluating

constraints. Kramer (1987) suggested the use of non-Boolean logic to circumvent problems which arise

when Boolean judgements are used in validating

assumptions. Non-Boolean methods use sigmoid-like

functions for a graded judgement.
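The contrast between a rigid threshold and a graded judgement can be sketched as follows. This is only an illustration: the logistic form, the `steepness` value and the function names are assumptions made here, not the functions used by Kramer (1987) or by FALCON.

```python
import math

def boolean_violation(residual, tolerance):
    """Rigid-threshold judgement: 1 if |residual| exceeds the tolerance, else 0."""
    return 1 if abs(residual) > tolerance else 0

def graded_violation(residual, tolerance, steepness=4.0):
    """Sigmoid-like judgement in (0, 1); equals 0.5 exactly at the tolerance.

    The logistic form and the steepness value are illustrative assumptions.
    """
    scaled = abs(residual) / tolerance - 1.0
    return 1.0 / (1.0 + math.exp(-steepness * scaled))

if __name__ == "__main__":
    tol = 0.10  # hypothetical tolerance limit for one model equation
    for r in (0.02, 0.09, 0.11, 0.30):
        print(r, boolean_violation(r, tol), round(graded_violation(r, tol), 3))
```

Residuals of 0.09 and 0.11 fall on opposite sides of the rigid threshold and receive opposite Boolean verdicts, whereas their graded values stay close to each other.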

All the assumption-based approaches to diagnosis

are based on the following notions:

(a) if a conjunction of assumptions (constraint) is

not violated, all the individual assumptions in

the set are valid; and

(b) if a constraint is violated, at least one of the

assumptions is invalid. Stated differently, if a

constraint is violated, only its corresponding

assumptions can be the cause.

However, these notions may fail because the

procedure evaluating the constraints may become

inaccurate due to model inaccuracies and sensor

noise. By (a), a constraint which is not violated can

validate its assumptions without any information

from the other constraints. While FALCON

considers both the notions, Diagnostic Model Processor

does not consider (a).

In the Diagnostic Model Processor, an assumption

which is common to many violated constraints is

strongly suspected. Satisfaction of constraints, on the

other hand, is considered to provide evidence that the

associated assumptions are valid. All the constraints

in which an assumption occurs are considered to

provide partial evidence to validate/invalidate that

assumption. Thus, the satisfaction of a constraint

would not grant the validity of its assumptions but

only provides evidence that it is likely. Diagnostic

Model Processor assumes (b). When a constraint is

violated, it allows only its assumptions to be the

cause. It is possible, as will be shown in Section 4

(discussion in Table 3), that external assumptions

could result in the violation of some constraints. This

is due to modeling inaccuracies which may be, for

example, caused by the linearization of a model.

Unduly penalizing the assumptions of the constraint

in such cases may result in a large set of suspect faults

while failing to penalize the actual fault.

When a disturbance occurs in a process, one or

more assumptions are violated. The constraints in

which an assumption failed would have rapid changes

in the residuals while other constraints, whose

assumptions have not been violated, would have a

slower growth of their residuals. The way the

deviations grow for different constraints, for an

assumption violation, is different. In this paper, we

propose the tuples method which exploits these differences in the constraint sensitivities to different

assumption violations without limiting the method to

the notions (a) and (b) mentioned above. The number

of constraints needed for the diagnosis is smaller

than in Reiter's approach. The method can

also handle sensor failures and multiple faults.

2. ASSUMPTION-BASED APPROACHES TO DIAGNOSIS

Assumption-based methods require constraints to

be developed based on distinct groups of assumptions. Once the constraints are obtained, they may be

evaluated and checked for their satisfaction. The fault

set is then identified based on the following method

which assumes Boolean logic for the expression of the

satisfaction of a constraint.

2.1. General algorithm for assumption-based methods

Let W be a set of constraints, { Wi} i = 1, n. Each

constraint W, is a set of assumptions associated with

it. These assumptions correspond to the model parameters such as coefficients, initial and boundary

conditions. Assumptions on the structure (e.g. no

leak in a reactor) may also be made.

gets WF and W are defined as follows:

W =

set of constraints that are faulty (violated),

W7 = set of constraints that are normal,

Wu

W=

w,

The way the set P is treated further differs in different

approaches.

2.1.1. Sr?rgIe and multiple fault diagnosis. For

single fault identification, the next step is to consider

each p E P as a potential fault candidate. There are

two alternatives to multiple fault diagnosis. One is to

simply consider the entire set as the multiple fault

conjecture. The other identifies subsets of P which are

valid diagnoses. Here, the fault is defined as the

minimal cover$ of WF by P. Each such minimal cover

simultaneously accounts for the failure of all the

violated constraints and the validity of the satisfied

constraints and hence is a valid diagnosis. The fault

set so obtained is a set of possible multiple faults.

Taking the minimal cover as a fault set is familiar

under the name of parsimony principle (Reiter, 1987)

which assumes that no more than the needed faults

occur in causing the observed malfunction. This

allows one to do a diagnosis by considering only

the minimal constraints. Minimal constraints are

assumption sets, none of whose subsets can be valid

assumption sets.

Let us illustrate these ideas with the aid of

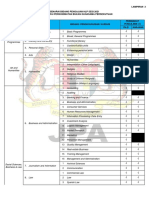

an example. Consider a nonisothermal reactor

shown in Fig. 1. The model description is given in

Appendix B.

Let A, be the set of the possible assumptions in the

process:

A, = {CA, K 6,. 7-7CAO, Tj, Tfl)-

w=nWF=Q,

The sensors for the process are:

where Q is the null set.

(i) Evaluate all the constraint deviations (residuals) and using available rigid thresholds, identify

sets WF and WT. If the constraint deviation exceeds

the rigid threshold value, the constraint is considered

faulty. Otherwise the constraint is considered normal.

(ii) Find the set ST which is the union of all the

assumptions occurring in the constraints in WT. Find

the set SF which is the union of all the assumptions

occurring in the constraints in WF:

ST = U(W,E

OBS = {C,,

w),

(iii) Define set P as the difference of sets? ST and

SF. get P consists of all the assumptions that occur

in the faulty constraints but not in the normal

constraints. Since only these assumptions can be

sources of violation of faulty constraint, P is the set

of assumptions which are the possible fault origins:

P=SF-ST,

set of possible fault origins.

W, = [C, VF,

F),

TC,,l,

W,=[TF,VTjCA

T,],

W, = [ Tj Fi T TJ,

W, = IT,, Fj TIP

W,=[V,,FV].

Each of these constraints could be checked for

consistency by evaluating an equation. For example,

the equation corresponding to the constraint W, is:

0.833c,,

I-Difference in two sets A and B is the set of all elements

belonging to A but not belonging to L?.

$A set C is said to cover a set of sets {S,

S,}, if the set

C has at least one element of each of sets S,. A cover C

is defined to be a minimal cover if there is no proper

subset of C that is a cover.

V, T, q.,r;j,

{T,, Tp, CAc,, F,} are system parameters. These are

variables whose dynamics are externally specified;

external disturbances enter in the form of a change in

these parameters. An assumption on a measured

(sensor) variable is that the sensor reading is accurate.

Failure in any one of these assumptions would make

the corresponding constraints to be violated. The

constraints are given below:

SF = u( w, E Wf).

P =

301

-- 1.7OlC, - 0.004V

+ O.O05F,

- 0.009T

+ 5.338 = 0.

This equation is obtained from the linearized

model of the process about the nominal steady state

values.
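As a rough illustration of how a constraint deviation arises, the sketch below evaluates the linearized $W_1$ equation quoted above and reports how its residual shifts when one assumption changes while the other readings are held fixed. The operating point is a hypothetical placeholder (Appendix B is not reproduced here), and in a closed-loop process the other variables would also move, so this shows only the direct contribution of the failed assumption.

```python
# Coefficients of the linearized W1 constraint quoted in the text.
W1_COEFFS = {"C_A0": 0.833, "C_A": -1.701, "V": -0.004, "F_0": 0.005, "T": -0.009}
W1_CONSTANT = 5.338

def w1_residual(values):
    """Left-hand side of the W1 equation for a dict of variable values."""
    return sum(c * values[name] for name, c in W1_COEFFS.items()) + W1_CONSTANT

def constraint_deviation(observed, reference):
    """Shift of the residual relative to a reference operating point."""
    return w1_residual(observed) - w1_residual(reference)

# Hypothetical operating point, used only to have numbers to evaluate.
reference = {"C_A0": 0.5, "C_A": 0.245, "V": 48.0, "F_0": 40.0, "T": 600.0}

# Assumption failure: C_A0 drifts up or down by 0.3, other values unchanged.
up = constraint_deviation(dict(reference, C_A0=reference["C_A0"] + 0.3), reference)
down = constraint_deviation(dict(reference, C_A0=reference["C_A0"] - 0.3), reference)

if __name__ == "__main__":
    print("deviation for C_A0 + 0.3:", up)    # positive direction
    print("deviation for C_A0 - 0.3:", down)  # negative direction
```

Because the equation is linear, the shift equals the coefficient times the change in the failed assumption, so its sign follows the direction of the drift.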

Fig. 1. Nonisothermal CSTR.

Let $W_1$ and $W_2$ be the violated constraints while the others remain satisfied:

$S^T = W_3 \cup W_4 \cup W_5$ = (V,,FVTjF,TTnFOCA TO),

$S^F = W_1 \cup W_2 = (C_A\ V\ F_0\ T\ C_{A0}\ T_j\ T_0)$,

$P = S^F - S^T = (C_{A0}\ T_0)$.

Subsets of $P$ = ( ), $(C_{A0})$, $(T_0)$, $(C_{A0}\ T_0)$:

$\{C_{A0}\}$, $\{T_0\}$ and $\{\ \}$ do not cover both $W_1$ and $W_2$,

$\{C_{A0}\ T_0\}$ covers $W_1$ and $W_2$.

Therefore, $\{C_{A0}\ T_0\}$ is a minimal cover.

Therefore, the diagnosis is $\{C_{A0}\ T_0\}$.

2.2. The problems of completeness and resolution

Assumption-based methods are subject to two requirements. One is completeness, which is the requirement that the information from all the theoretically possible minimal assumption sets be used. Failing to consider all minimal assumption sets may result in a wrong minimal cover and hence a wrong diagnosis. Resolution is the other requirement: that we have all and only the faulty constraints in set $T^F$. The absence of any faulty tuple in $T^F$ can result in a wrong minimal cover. The completeness and resolution problems arise in the following two ways: (a) Boolean logic uses a rigid threshold and it is possible that a faulty tuple is treated as normal when in the vicinity of a threshold; and (b) it may not be feasible to evaluate all of the required minimal tuples. We propose a pattern classification method that uses non-Boolean reasoning in considering the constraint violations and does not require a large set of constraints in order to make a diagnosis.
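For reference, the Boolean procedure of Section 2.1 (steps (i) to (iii), followed by the minimal-cover search of Section 2.1.1) can be sketched as below. The four constraints `c1` to `c4`, their assumptions `a` to `f`, the residuals and the thresholds are all hypothetical; they are not the reactor constraints. The brute-force subset search is chosen for clarity and is not Reiter's search.

```python
from itertools import combinations

def split_constraints(residuals, thresholds):
    """Step (i): rigid-threshold split into faulty and normal constraints."""
    faulty = {name for name, r in residuals.items() if abs(r) > thresholds[name]}
    return faulty, set(residuals) - faulty

def possible_fault_origins(constraints, faulty, normal):
    """Steps (ii)-(iii): P = S_F - S_T."""
    s_f = set().union(*(constraints[name] for name in faulty))
    s_t = set().union(*(constraints[name] for name in normal))
    return s_f - s_t

def minimal_covers(candidates, violated_sets):
    """All minimal subsets of candidates that intersect every violated set."""
    covers = []
    ordered = sorted(candidates)
    for size in range(1, len(ordered) + 1):
        for subset in combinations(ordered, size):
            chosen = set(subset)
            if any(cover <= chosen for cover in covers):
                continue  # contains a smaller cover, so it is not minimal
            if all(chosen & s for s in violated_sets):
                covers.append(chosen)
    return covers

def diagnose(constraints, residuals, thresholds):
    faulty, normal = split_constraints(residuals, thresholds)
    p = possible_fault_origins(constraints, faulty, normal)
    return p, minimal_covers(p, [constraints[name] for name in faulty])

# Hypothetical constraint system (names and numbers are illustrative only).
CONSTRAINTS = {"c1": {"a", "b", "c"}, "c2": {"b", "d", "e"},
               "c3": {"b", "c", "f"}, "c4": {"d", "f"}}
THRESHOLDS = {name: 0.1 for name in CONSTRAINTS}

clear = {"c1": 0.5, "c2": -0.4, "c3": 0.01, "c4": 0.02}
marginal = dict(clear, c2=-0.09)  # c2 now sits just inside its threshold

p_clear, covers_clear = diagnose(CONSTRAINTS, clear, THRESHOLDS)
p_marginal, covers_marginal = diagnose(CONSTRAINTS, marginal, THRESHOLDS)

if __name__ == "__main__":
    print(p_clear, covers_clear)
    print(p_marginal, covers_marginal)
```

In the second case the residual of `c2` falls just inside the rigid threshold, `c2` is treated as normal, and assumption `e` drops out of the diagnosis. This is the resolution problem described in (a).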

3. PATTERN CLASSIFICATION IN DIAGNOSIS

The success of any pattern recognition approach

depends on two factors: (a) separation of the classes

in the feature space; and (b) appropriate choice of

classification procedure.

We will consider the problem of improving class separability first. Let the training set $S$ be partitioned into subsets $S_1$ and $S_2$ such that $S_1$ belongs to Class I and $S_2$ belongs to Class II. If the sets $S_1$ and $S_2$ are not linearly separable in the $x$-space, they may be made linearly separable (Sklansky et al., 1981) in a $\xi$-space, where $\xi$ is a function of $x$ and the dimensionality of $\xi$ is larger than that of $x$. The separating hypersurface may be linear in the $\xi$-space but is nonlinear in the $x$-space. In the case of diagnosis, the raw sensor data as such may not provide the best space to classify the faults. The problem is to find a suitable transformation that allows the fault classes to be well classified. The aforementioned assumption-based techniques provide an answer to this. In the absence of any modeling errors or noise, distinct assumption sets provide an orthogonal set of patterns for the classes. In the event of modeling inaccuracies or noise, the patterns may not provide an orthogonal set for the classes but they may still provide a good classification as the assumption sets would be selectively sensitive to a few faults each.

Let $x$ be a feature vector of dimension $r$. Define $\xi = \Phi(x)$ where:

$$\Phi(x) = [\Phi_1(x), \ldots, \Phi_d(x)],$$

$\Phi_i(x)$ is a function mapping $x$ into $\xi_i$. $\Phi(x)$ maps the $r$-dimensional $x$-space into the $d$-dimensional $\xi$-space.

As an example, consider the XOR classification problem shown in Fig. 2a. XOR is a classical nonlinear classification problem where the classes are not linearly separable. The four corners of a unit square are to be classified into two classes such that the opposite corners are in the same class. Points (0, 0) and (1, 1) belong to Class I and points (0, 1) and (1, 0) belong to Class II. In the figure, points a and d belong to Class I and points b and c belong to Class II. In the 2-D form, in the $x$-space, the classes are not linearly separable.

Fig. 2a. XOR problem in $x$-space.

Fig. 2b. XOR problem in $\xi$-space.

Now, define a $\xi$-space as follows:

$$\xi_1 = x_1^2, \qquad \xi_2 = x_2^2, \qquad \xi_3 = x_1 x_2.$$

The points a, b, c and d in the new space are shown in Fig. 2b. Classes I and II are clearly linearly separable. A linear classifier can be given by the following linear function:

$$h(\xi) = -2\xi_1 - 2\xi_2 + 4\xi_3 + 1,$$

$h(\xi) = 0$ is a hyperplane in the $\xi$-space but would be a quadratic classifier in the $x$-space:

$h(\xi) > 0$: the point is in Class I,

$h(\xi) < 0$: the point is in Class II.
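The XOR mapping above is easy to check numerically. The short sketch below applies the feature map and the linear function $h$ to the four corners; the function names, and the labelling of (0, 1) as point c, are choices made here for illustration.

```python
def feature_map(x1, x2):
    """Map a point of the x-space to (xi1, xi2, xi3) = (x1^2, x2^2, x1*x2)."""
    return (x1 * x1, x2 * x2, x1 * x2)

def h(xi):
    """Linear discriminant in the xi-space quoted in the text."""
    xi1, xi2, xi3 = xi
    return -2 * xi1 - 2 * xi2 + 4 * xi3 + 1

# Corner labels follow the text: a and d in Class I, b and c in Class II.
points = {"a": (0, 0), "b": (1, 0), "c": (0, 1), "d": (1, 1)}
scores = {name: h(feature_map(*p)) for name, p in points.items()}
labels = {name: ("I" if s > 0 else "II") for name, s in scores.items()}

if __name__ == "__main__":
    for name in sorted(points):
        print(name, points[name], scores[name], labels[name])
```

Points a and d give $h = 1$ and points b and c give $h = -1$, so a single hyperplane in the $\xi$-space separates the two classes.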

Thus, the classes may be better separated in a space

of higher dimension. In the case of fault diagnosis,

the common practice is to use the sensor data directly

as the feature space (Hoskins and Himmelblau, 1988;

Venkatasubramanian et al., 1990). One could achieve

a better separation between the fault classes by

considering a mapping of the raw sensor values into

a feature vector of higher dimension. However, it is

to be understood that this mapping to higher

dimension is not suggested as a solution to improve

classification for regions with inherent overlap

(sensor information is insufficient to uniquely identify

a class) or overlap due to noise. The problem of

inherent class overlap is possible in process diagnosis

applications as the available sensor information may

not provide separability of all classes. We propose a

scheme that utilizes the process model information to

reduce or remove the inherent overlap between

classes.

Artificial neural networks have been of interest

lately for their ability to solve nonlinear classification

problems. We too adopt a neural network as the

classifier. Artificial neural networks

distributively store the information in a given set of

pairs of input-output patterns and have the ability to

recall the output pattern for a given input pattern.

The associative knowledge of the network is stored in

the weights (strengths) on the connections between

the basic computational units of the network called

the artificial neurons. A typical set of patterns corresponding to the various classes are used to train the

network. The network, upon repeated exposures to

these training patterns, updates the weights on the

connections until its outputs are close to the target

patterns. The backpropagation algorithm provides a

convenient tool for training the network, and has

already been used for process diagnosis (Hoskins and Himmelblau, 1988; Watanabe et al., 1989; Venkatasubramanian et al., 1990). Neural networks

are proposed as models of associative memory for

storing historical fault information in the form of

patterns of observable features. A general description

of the algorithm is found in these references.
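To make the training loop described above concrete, here is a minimal pure-Python sketch of backpropagation for a network with one hidden layer of sigmoid units. It is not the network or the data used in this study: the layer sizes, learning rate, random seed and the four training patterns are hypothetical, and full-batch gradient descent on half the squared error is one common variant assumed here.

```python
import math
import random

def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def init_network(n_in, n_hidden, n_out, rng):
    """Weights drawn uniformly from [-1, 1]; the last entry of each row is a bias."""
    hidden = [[rng.uniform(-1.0, 1.0) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    output = [[rng.uniform(-1.0, 1.0) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    return hidden, output

def forward(net, x):
    hidden, output = net
    h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in hidden]
    y = [sigmoid(sum(w * v for w, v in zip(row, h + [1.0]))) for row in output]
    return h, y

def mean_squared_error(net, patterns):
    total = 0.0
    for x, target in patterns:
        _, y = forward(net, x)
        total += sum((o - t) ** 2 for o, t in zip(y, target))
    return total / len(patterns)

def train_epoch(net, patterns, rate):
    """One full-batch gradient-descent step on half the squared error."""
    hidden, output = net
    grad_h = [[0.0] * len(row) for row in hidden]
    grad_o = [[0.0] * len(row) for row in output]
    for x, target in patterns:
        h, y = forward(net, x)
        # Error signals: output layer first, then propagated back to the hidden layer.
        delta_o = [(o - t) * o * (1.0 - o) for o, t in zip(y, target)]
        delta_h = [
            h[j] * (1.0 - h[j]) * sum(delta_o[k] * output[k][j] for k in range(len(output)))
            for j in range(len(hidden))
        ]
        for k, d in enumerate(delta_o):
            for j, v in enumerate(h + [1.0]):
                grad_o[k][j] += d * v
        for j, d in enumerate(delta_h):
            for i, v in enumerate(x + [1.0]):
                grad_h[j][i] += d * v
    for weights, grads in ((output, grad_o), (hidden, grad_h)):
        for row, grad_row in zip(weights, grads):
            for i in range(len(row)):
                row[i] -= rate * grad_row[i] / len(patterns)

# Hypothetical training set: 3 input features, one output node per fault class.
PATTERNS = [([1.0, 0.0, 0.1], [1.0, 0.0]), ([0.8, 0.2, 0.0], [1.0, 0.0]),
            ([0.0, 1.0, 0.1], [0.0, 1.0]), ([0.1, 0.9, 0.0], [0.0, 1.0])]

net = init_network(3, 3, 2, random.Random(0))
initial_error = mean_squared_error(net, PATTERNS)
for _ in range(2000):
    train_epoch(net, PATTERNS, rate=1.0)
final_error = mean_squared_error(net, PATTERNS)

if __name__ == "__main__":
    print("error before training:", initial_error)
    print("error after training:", final_error)
```

Repeated exposure to the training patterns moves the weights so that the outputs approach the targets, which is the behaviour the paragraph above describes.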

4. TUPLES-BASED APPROACH TO FAULT DIAGNOSIS

Every assumption-based approach uses a representation for assumption sets and the corresponding constraints. In this method that representation is the tuple, which is the basic entity of the tuples method. A k-tuple $\tau$ is defined as a partially ordered set of $k$ variables $[x_1, x_2, \ldots, x_k]$. The first $(k - 1)$ variables are unordered and constitute the assumptions made by the tuple. Each tuple is associated with a quantitative equation that is derived from the steady state model of the system linearized about the nominal steady state. The associated equation consists of only the $k$ variables mentioned in the tuple. The $k$th variable is estimated using this equation and the other $(k - 1)$ variables. A tuple is said to deviate when the estimated value of the $k$th variable deviates from the nominal value. The principle of the tuples method is to reduce the potential fault set by validating groups of assumptions based on the tuple deviations. The deviation in the tuple due to the violation of one of the assumption variables is a measure of the sensitivity of the $k$th variable to that assumption. No explicit characterization of the tuples is made as normal or faulty. Instead, the deviation from a tuple is used as a feature for classification.

Fig. 3. Tuple derivation.
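A tuple deviation can be sketched with the linearized equation given in Section 2.1.1 for constraint $W_1$. Treating $C_{A0}$ as the $k$th (estimated) variable of a tuple built on that equation is an assumption made here for illustration, and the operating point is a hypothetical placeholder for the Appendix B values. The nominal value is taken as the estimate at that operating point so that the fault-free deviation is zero.

```python
# Linearized equation from Section 2.1.1, read as a tuple [C_A, V, F_0, T, C_A0]:
# the first four variables are assumptions, the last one is estimated.
COEFFS = {"C_A0": 0.833, "C_A": -1.701, "V": -0.004, "F_0": 0.005, "T": -0.009}
CONSTANT = 5.338
ASSUMPTIONS = ("C_A", "V", "F_0", "T")

def estimate_c_a0(readings):
    """Solve the linear equation for C_A0 given the assumption variables."""
    rest = sum(COEFFS[name] * readings[name] for name in ASSUMPTIONS) + CONSTANT
    return -rest / COEFFS["C_A0"]

def tuple_deviation(readings, nominal_value):
    """Estimated value of the k-th variable minus its nominal value."""
    return estimate_c_a0(readings) - nominal_value

# Hypothetical fault-free readings (placeholders, not the paper's data).
reference = {"C_A": 0.245, "V": 48.0, "F_0": 40.0, "T": 600.0}
nominal_c_a0 = estimate_c_a0(reference)

# Sensor assumption failure: the V sensor reads 5% high.
biased = dict(reference, V=reference["V"] * 1.05)

no_fault = tuple_deviation(reference, nominal_c_a0)
sensor_fault = tuple_deviation(biased, nominal_c_a0)

if __name__ == "__main__":
    print("deviation without fault:", no_fault)
    print("deviation with V biased high by 5%:", sensor_fault)
```

The size of the deviation per unit change in an assumption variable is the sensitivity mentioned in the text. Here it is the ratio of the two coefficients involved, and the deviation is used as a continuous feature rather than being compared with a threshold.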

4.1. Types of assumptions

Each tuple is associated with a set of assumptions.

These assumptions are of three types:

(a) Assumptions about sensor variables: these are variables whose values are measured. Here, the assumption amounts to saying that the sensor is not faulty and that the sensor reading is the true value of the variable. A sensor assumption failure will occur when a sensor is biased high/low, when a sensor is out-of-range or when a sensor is stuck at a reading.

(b) Assumptions about system parameters: these are variables whose variations are externally defined, i.e. their dynamics are not specified in the process model. By parameters we mean model coefficients, initial and boundary conditions. Here, the assumption indicates that the parameter is unchanged at its steady state value. A parameter assumption fails when a parameter in the system drifts from its nominal value. Examples are drifts in heat transfer coefficient, increase in the reactant concentration of reactor feed etc.

(c) Assumptions about system structure: a process system/subsystem could be described by a set of equations depending on its functioning mode. The equations for a normal storage tank do not apply if there is a big leak. Here the assumption amounts to choosing a particular mode of function and hence the associated model equations.

4.2. Deriving the tuples

Let the linearized system model be described by the steady state equations:

$$Ax = d. \qquad (1)$$

Equation (1) is obtained by linearizing the process model with respect to the state variables and process coefficients, around the nominal steady state values.

In deriving the tuple equations, a set of assumptions has to be chosen. Assumptions about the functional mode of process units have already been made implicitly in choosing equation (1). For example, in writing the controller equations, the assumption is being made that it is functioning normally. Assumptions about sensors and parameters are made by identifying the corresponding variables $y$. The vector of variables $x$ can be partitioned into $y$ and $z$ for a given choice of assumption variables $y$ and the nonassumption variables $z$. The system matrix $A$ can be correspondingly partitioned into $B$ and $C$. $z$ can be derived from the steady state system equations (1) by rewriting them as:

$$Cz = By + d. \qquad (2)$$

For various choices of $y$, steady state equations are derived in the form of (2) using (1). Equations in (2) cannot be obtained for a set of assumptions $y$ if $C$ is singular. Using the maximum matching technique (Fig. 3 and Appendix A), one could derive sets of assumptions for which (2) exists. Each tuple equation chosen is a steady state equation of the form:

$$\hat{v}_i = \sum_j g_{ij}\, y_j + \bar{v}_i,$$

where

$\bar{v}_i$ = steady state value of the nonassumption variable in the $i$th tuple,

$y_j$ = $j$th assumption variable,

$G$ = matrix of coefficients $g_{ij}$,

$\hat{v}_i$ = estimate of the nonassumption variable in the $i$th tuple.

The overall system of tuple equations chosen is of the form:

$$\hat{v} = Gy + \bar{v},$$

$$\xi = \hat{v} - \bar{v},$$

where $G$ is a matrix that transforms the assumption data $y$ into the tuple deviations $\xi$.

If the assumption variable $y_i$ is a sensor variable, then its assumption value is the value that has been measured. If the assumption is about a system parameter, then the steady state value of the parameter is the assumed value. If the assumption is about the system structure, it specifies the particular equations that are considered to model a particular functional mode of the system. An assumption of controller failure would thus drop the corresponding controller equation from the process model and treat the corresponding manipulated variable as an exogenous variable, when deriving a tuple equation.

4.3. Application of the tuples approach: a reactor case study

Table 1a. Fault set for generating training patterns

| No. | Fault simulated |
|---|---|
| 1 | $F_0 + 15$ |
| 2 | $F_0 - 15$ |
| 3 | $T_0 + 30$ |
| 4 | $T_0 - 30$ |
| 5 | $T_{j0} + 30$ |
| 6 | $T_{j0} - 30$ |
| 7 | $C_{A0} + 0.3$ |
| 8 | $C_{A0} - 0.3$ |

Table 1b. Fault set for single fault generalization

| No. | Fault simulated |
|---|---|
| 1 | $F_0 + 8$ |
| 2 | $F_0 - 8$ |
| 3 | $T_0 + 60$ |
| 4 | $T_0 + 60$ |
| 5 | $T_{j0} + 60$ |
| 6 | $T_{j0} - 60$ |
| 7 | $C_{A0} + 0.15$ |
| 8 | $C_{A0} - 0.15$ |

Table 1c. Fault set for multiple fault generalization

| No. | Multiple faults simulated |
|---|---|
| 1 | $F_0 + 10$ and $T_0 + 30$ |
| 2 | $F_0 - 10$ and $T_0 + 30$ |
| 3 | $T_{j0} + 30$ and $T_0 + 30$ |
| 4 | $T_{j0} - 30$ and $T_0 + 30$ |
| 5 | $C_{A0} + 0.3$ and $T_0 + 30$ |
| 6 | $C_{A0} - 0.3$ and $T_0 + 30$ |

Table 1d. Sensor faults

| No. | Sensor fault simulated |
|---|---|
| 1 | $V$ biased low by 5% |
| 2 | $V$ biased high by 5% |
| 3 | $T_j$ biased low by 2% |
| 4 | $T_j$ biased high by 2% |
| 5 | $T$ biased low by 2% |
| 6 | $T$ biased high by 2% |

Table 1e. Multiple fault generalization including sensor failures

| No. | Multiple faults simulated |
|---|---|
| 1 | $V$ biased low by 5% and $T_0 + 30$ |
| 2 | $V$ biased high by 5% and $T_0 + 30$ |
| 3 | $T_j$ biased low by 2% and $T_0 + 30$ |
| 4 | $T_j$ biased high by 2% and $T_0 + 30$ |
| 5 | $T$ biased low by 2% and $T_0 + 30$ |
| 6 | $T$ biased high by 2% and $T_0 + 30$ |

For the purposes of illustration, a nonisothermal CSTR (Fig. 1) is considered. Appendix B gives the model equations and the steady state values of parameters and variables. There are two controllers in this configuration: a level controller which manipulates the outflow of the reactor and a reactor temperature controller which manipulates the coolant flowrate. Eight parameter faults and six sensor faults are considered for the diagnostic task. Table 1 shows the various faults that are being simulated for the purpose of illustration. Table 1a gives the faults simulated for generating the training

Table 2. Tuples and their assumptions (columns: Tuple No., Assumptions, Estimate)

fault has been initiated. The tuples T,, T2 and T, are

noticed

to be similar in their deviations.

If we

consider the difference in the column vectors, they

were seen to be very small. A variety of metrics could

be used to show the similarity between the tuples.

An inner product could be used to show the distance

between them. Tuples T,, T2 and T, are close in

this sense and are considered similar. They do not

provide distinct information and do not help much in

classifying the faults in the space they span.

4.3.2. Network performance in single fault classification. A process malfunction

is detected when the

observed symptoms

cross a certain threshold value.

The time at which the symptom reaches the threshold

value depends on the nature and magnitude of the

fault and on the magnitude of the threshold limits. It

would not

be possible to know the exact time at

which the fault occurred, and hence the time interval

after which the sensor readings were taken. In real-time diagnosis, the methodology

should not depend

on the availability of sensor data, after a specific time

interval from the inception of the fault. Similarly, it

is not feasible to maintain an extensive database for

various percentage

deviations

of the parameters.

Therefore, it is important that the diagnostic methodology be weakly dependent, if at all, on the time of

fault inception and the extent of the fault.

An S-4-8

network was found to perform optimally

in this study. As the number of cases investigated is

quite large to be described

at length, in all the

following sections, we present only the typical results

to illustrate the salient features and conclusions.

Fault patterns for a particular percentage deviation

(Table 1a), measured 15 min after the fault inception

were used as the training data. Appendix

B shows

how

the

tuples deviations

are normalized

before

inputting to the network. The output values of the

network are scaled between 0 and 1 by a sigmoid

function. A zero value at an output node means that

a particular fault is not present. A large or a growing

output value shows the occurrence of a fault. Since

each output node represents a particular fault, the

corresponding

output value is an indication of the

occurrence of that fault. Diagnosis

of the network

based on inputs taken over a period of 20min

for

fault deviations shown in Table la is shown in Figs 4a

and b. Each graph in these figures, labeled by the

particular single fault that has been introduced into

Table 2. Tuples, their assumption sets and the variable estimated by each tuple.

data for the networks.

In this table, for example, T0 + 30 is to be read as the fault corresponding to an increase of 30 R in the reactor inlet temperature T0.

A set of ten tuples is considered for this diagnostic

task. Each tuple corresponds

to a model equation

which establishes a variable with its assumptions.

Table 2 shows the tuples, their assumption

sets and

the corresponding

variable that is estimated using the

tuple.

Table 3 shows the deviations in the tuples due to various faults, 15 min after the occurrence of the fault. Tuple T4, which estimates CA0, is sensitive to changes in CA0. Since the estimate of CA0 is compared with its steady state value to obtain the tuple deviation, it is expected to be sensitive to a change in this value.

However,

the deviations

in these tuples are not

always sensitive to the corresponding

assumptions.

For example, tuple T2 is not sensitive to changes in

C,, though it is an assumption violation. Similarly,

the deviations in a tuple may not always be due to its

assumptions.

For example, tuple T, is sensitive to

changes in Tj0 though this is not one of its assumption

variables. While tuples may not guarantee orthogonal

data sets, they still provide

a feature space that

classifies the fault space well. For example (Table 3),

tuple Tp is less sensitive to TjO deviations while T, is

sensitive to T,, deviations.

Similarly, tuple T4 is sensitive to CA0 deviations, whereas tuple T6 is not. However,

the lack of orthogonality

will cause problems with

Boolean judgements

used for diagnosis.

A graded

judgement may be a better choice. Hence the problem

is most suitably treated as a pattern classification

problem using neural nets, as discussed in this work.

This avoids the use of ad hoc methods to generate

graded judgements.

4.3.1. Tuple selection. Table 3 shows the deviations in the tuples for various faults 15 min after the

Table 3. Tuple deviations for various faults at time = 15 min

Fault        T1       T2       T3       T4       T5       T6       T7       T8       T9       T10
F0+        0.7503  -0.7555  -0.7727   0.2418  -0.9047  -0.0031  -0.2630   0.9051  -0.9051  -0.1955
F0-       -0.7295   0.7353   0.7537  -0.3075   0.9051  -0.0015   0.2206  -0.9051   0.8958   0.1624
T0+       -0.4848   0.4849   0.4848   0.3255   0.4016  -0.0303   0.4850   0.0      0.5826   0.3624
T0-        0.5036  -0.5034  -0.5034  -0.3472  -0.3739  -0.0077  -0.5031   0.0     -0.5689  -0.3863
Tj0+      -0.9051   0.9051   0.9051   0.7521  -0.5906   0.9051   0.9051   0.0     -0.2891   0.9051
Tj0-       0.6348  -0.6348  -0.6348  -0.4480   0.1926  -0.5593  -0.6345   0.0     -0.0154  -0.6154
CA0+      -0.4639   0.4639   0.4639   0.9051  -0.4195  -0.0474   0.4640   0.0      0.5771   0.3411
CA0-       0.4287  -0.4286  -0.4286  -0.9027   0.4880   0.0102  -0.4283   0.0     -0.5059  -0.3209
No fault   0.0021  -0.0020  -0.0020  -0.0022  -0.0032   0.0017  -0.0017   0.0     -0.0058  -0.0008


Fig. 4a. Single fault diagnosis: positive disturbances (time in minutes).

characteristic of neural networks. The second requires a certain ability to interpolate between the

typical patterns of single faults in order to generalize

to multiple faults. Upon training with typical patterns

of single fault situations, certain network configurations are able to generalize to multiple faults. While

the standard architecture of the network shows generalization of the first kind, in the case of multiple fault

generalizations it can make wrong predictions.

The standard network is a multiple layered feedforward network with connections between the nodes

of adjacent layers. The standard network provides

information to the output layer only through the

hidden layer, which extracts an aggregate picture of

the feature vector. Generalizing to multiple faults

requires recognizing the dependence of various faults

on the individual feature values. The general backpropagation algorithm applies to any acyclic network

of nodes (feedforward) and is not limited to the

standard network. No cross connections, however,

are allowed within the input nodes, or the output

nodes. The general acyclic network allows connections between nodes in nonadjacent layers and

can, without a loss of generality, disallow cross

Fig. 4b. Single fault diagnosis: negative disturbances.

the process, shows the growth of the corresponding

output values. It is seen that the network has successfully diagnosed all the faults over the time interval.

The output values corresponding to the faults are

seen to gradually increase with time reaching high

values by 15 min. However, for smaller deviations of

5-10%, the network would take longer to diagnose as

the tuple deviations grow relatively slowly. The network has shown its ability to diagnose over time

while being trained on fault data at a particular time.

Next the network is tested on its ability to diagnose

faults at different extents (severity levels) and multiple

faults, while only being trained on single faults for a

particular extent.

4.3.3. Network performance in multiple fault generalization. One of the important features of fault detection by neural networks is their ability to generalize. Two kinds of generalization arise in diagnosis with neural networks: first, the ability to diagnose various magnitudes of a particular fault while being trained only on a typical magnitude; second, the ability to diagnose multiple fault scenarios while being trained only on typical patterns for single faults. The first is the commonly referred to noise-tolerant

Fig. 5a. Multiple fault generalization: standard network (time in minutes).

Fig. 5b. Multiple fault generalization: fully-connected network (time in minutes).

connections between the units in any layer. We will

henceforth refer to a three layered network, with

direct connections allowed between input and output

layers, as a fully-connected

network.

Any knowledge of the input patterns is related to

the output nodes through the hidden units. The

hidden units extract the overall pattern features. For

the purposes of diagnosis, the network needs to

generalize the relations between the individual input

nodes and output nodes. It can be achieved by

allowing direct connections from the input nodes to

the output nodes. These connections allow the network to bias a particular output node to a particular

input node when the training patterns show a correlation between the values corresponding to these

Table 4a. Multiple fault generalization: standard network. Real-time network behavior for multiple fault generalization (standard network with eight tuples). For each multiple fault case, the network outputs for F0+, F0-, T0+, T0-, Tj0+, Tj0-, CA0+ and CA0- are tabulated at 2.5, 5, 7.5, 10, 15 and 20 min.

nodes. Our experience with various multiple fault test

cases suggests that allowing for direct connections

between the inputs and outputs (in addition to the

connections to the hidden layer) improves the generalization characteristics. It appears that the weights

on direct connections derive relations which may exist

between particular pairs of input and output nodes,

and this ability results in the desired generalization

characteristics. The weight on the connection between an input node and output node could be

strong implying that there is a tendency for this

output unit to have high activation every time the

particular input unit has a strong activation. It would

not be possible to capture this information without

the direct connections.
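The role of the direct input-to-output connections can be sketched with a small forward pass. This is a minimal illustration, not the authors' implementation: the function `forward`, the weight names and all numerical values below are hypothetical, chosen only to show how a direct weight lets one input bias one output independently of the hidden layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_ih, b_h, w_ho, b_o, w_io=None):
    """Forward pass of a three-layer feedforward network.

    w_ih[h][i]: input-to-hidden weights, w_ho[k][h]: hidden-to-output weights,
    w_io[k][i]: optional direct input-to-output weights (the "fully-connected"
    variant). With w_io=None this reduces to the standard network.
    """
    hidden = [sigmoid(b + sum(w * xi for w, xi in zip(row, x)))
              for row, b in zip(w_ih, b_h)]
    outputs = []
    for k, bias in enumerate(b_o):
        z = bias + sum(w * h for w, h in zip(w_ho[k], hidden))
        if w_io is not None:
            z += sum(w * xi for w, xi in zip(w_io[k], x))  # direct contribution
        outputs.append(sigmoid(z))
    return outputs


# Hypothetical toy network: 2 inputs, 1 hidden unit, 2 outputs.
x = [1.0, 0.0]
w_ih, b_h = [[0.5, 0.5]], [0.0]
w_ho, b_o = [[1.0], [1.0]], [-1.0, -1.0]
w_io = [[3.0, 0.0], [0.0, 3.0]]  # input i is tied directly to output i

standard = forward(x, w_ih, b_h, w_ho, b_o)
fully_connected = forward(x, w_ih, b_h, w_ho, b_o, w_io)
print(standard, fully_connected)
```

In this toy case the hidden unit gives both outputs the same aggregate signal, whereas the direct weights raise only the output paired with the active input, which is the kind of input-output relation the text attributes to the direct connections.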

Table 4b. Multiple fault generalization: standard network. Real-time network behavior for multiple fault generalization (standard network with eight tuples). For each multiple fault case, the network outputs for F0+, F0-, T0+, T0-, Tj0+, Tj0-, CA0+ and CA0- are tabulated at 2.5, 5, 7.5, 10, 15 and 20 min.

4.3.4. Multiple fault generalization with a fully-connected network. Figures 5a and b compare the performance of the standard and fully-connected networks for a typical multiple fault case. For example, consider the case where simultaneous increases in the reactor feed inflow and feed temperature were introduced. The standard network not only failed to identify the increase in the feed temperature but also wrongly diagnosed an increase in the concentration of the feed. The fully-connected network, on the other hand, identified both faults correctly but in addition reported the increase in concentration as a fault. In general, the fully-connected network was seen to identify all the faults; however, it also identified some additional fault candidates. One may view this as a conservative diagnosis, preferable to the possibility of overlooking an actual fault. Tables 4a and b show the real-time diagnosis of the standard network for multiple fault situations. For each multiple fault situation, the table shows the network outputs at 2.5, 5, 7.5, 10, 15 and 20 min from the time of fault inception. Tables 5a and b show the real-time diagnosis of the fully-connected network. While the standard network succeeded in identifying only one of the two simultaneous faults, the fully-connected network succeeded in identifying both faults in most cases.

For any kind of correct generalization performance, a neural network needs to have seen similar situations during its training. In this sense, it is easier

Table 5a. Multiple fault generalization: fully-connected network. Real-time network behavior for multiple fault generalization (fully-connected network with eight tuples). For each multiple fault case, the network outputs for F0+, F0-, T0+, T0-, Tj0+, Tj0-, CA0+ and CA0- are tabulated at 2.5, 5, 7.5, 10, 15 and 20 min.

Table 5b. Multiple fault generalization: fully-connected network. Real-time network behavior for multiple fault generalization (fully-connected network with eight tuples), tabulated in the same way as Table 5a.


for a neural network to generalize to single faults than to multiple faults. Thus, the richness of the training data (i.e. how much of the fault space the training data cover) plays a crucial role in determining the ability of the neural network to generalize. From this perspective, one has a distinct advantage if a simulator can be used to train the network on a variety of faults and thus enrich the training. In real-life situations in the process industry, this might be a problem when such extensive sets of training data are not available. While the results for the fully-connected network are better than those of the standard network in this case study, it does miss faults in some cases. Thus, while fully-connected neural networks display some potential for multiple fault generalization, this issue needs to be examined carefully before one can arrive at definite conclusions.

4.3.5. Single fault generalization with a fully-connected network. This section deals with single fault generalization for different severities of faults. The fault data used in the network training considered large deviations in the faults. However, for diagnostic purposes, it is important that the network be able to diagnose smaller deviations of about 5-10%. Figures 6a and b show the diagnosis of the fully-connected network for low deviations of the fault. Faults with low deviations take longer to be diagnosed than the large deviations used in the training, as the tuple deviations grow relatively slowly. The process is sensitive to changes in the coolant inflow temperature Tj0, and even for a 5% deviation in Tj0 the tuples deviate considerably. This allows an early diagnosis of the fault. The process is relatively less sensitive to changes in CA0, and the deviations grow slowly. In Figs 6a and b, the outputs corresponding to CA0 faults grow slowly while the outputs corresponding to Tj0 faults grow fast.

4.3.6. Sensor fault detection. Sensor faults are among the faults frequently seen in the chemical industry. Sensors may fail by being stuck at some value or by showing a high or low bias. A gross error in a sensor value can lead to wrong diagnoses if proper care is not taken. Many of the statistical approaches developed for rectifying gross sensor errors (Romagnoli and Stephanopoulos, 1980; Mah and Tamhane, 1982) assume that no parameter drifts have occurred in the process. In this paper, we allow simultaneous occurrence of sensor and parameter faults.

The neural network was trained for the occurrence of single sensor faults (Table 1d), in addition to the other parameter faults (Table 1a), using only six tuples (T3-T8). The network was then tested for multiple fault generalization. The space of these six tuples does not classify these fault situations clearly enough for them to be generalized. This implies that additional input is necessary: more tuples can be generated and used as inputs if the present inputs do not classify the faults well. All eight tuples (T3-T10) were then considered for input. This resulted in a better performance of the network for multiple fault generalization with sensor faults (Table 1e). For example, multiple faults were introduced with the level sensor biased low and the temperature of the feed high. Figure 7 shows the diagnosis of the network. The network was successful in identifying both faults in all cases.

Fig. 6a. Single fault generalization: fully-connected network (time in minutes).

Fig. 6b. Single fault generalization: fully-connected network (time in minutes).

Fig. 7. Multiple fault generalization with sensor faults: fully-connected network (time in minutes).

5. CONCLUSIONS

Assumption-based methods, to be successful, need the conditions of resolution and completeness to be met. Pattern classification methods, on the other hand, require a proper selection of the feature space. The ideal choice of features should be such that each feature is selectively sensitive to some of the fault classes. This allows the classification method to exploit the differences in these sensitivities. The proposed tuples method uses these ideas to integrate the assumption-based approaches with neural network-based pattern classification techniques for fault diagnosis. Instead of directly using the raw data from the sensors to make the feature space, the process model is used to generate tuples which are selectively sensitive to changes in different groups of assumptions about the process functionality.

Examples which illustrate the feasibility of the proposed approach in classifying sensor and parameter faults and combinations thereof are presented. The proposed method was found to handle modeling inaccuracies quite well. Though we considered the linearized model of the process, this did not hamper the diagnostic reasoning. This is so because the method relies only on the fact that each of the assumption sets has a distinct signature in the face of a fault. The method is also able to handle parameter and sensor faults simultaneously. Furthermore, the network, while being trained only for a particular severity level of a fault, was also able to diagnose other levels. Finally, our experience suggests that a fully-connected network is more suitable for multiple fault generalization.

REFERENCES

Davis R., Diagnostic reasoning based on structure and behavior. Artif. Intell. 24, 347-410 (1984).

Dhurjati P. S., D. E. Lamb and D. L. Chester, Experience in the development of an expert system for fault diagnosis in a commercial scale chemical process. Presented at FOCAPO (1987).

Hoskins J. C. and D. M. Himmelblau, Neural network models of knowledge representation in process engineering. Computers chem. Engng 12, 881 (1988).

de Kleer J. and B. C. Williams, Diagnosing multiple faults. Artif. Intell. 32, 97-130 (1987).

Kramer M. A., Malfunction diagnosis using quantitative models and non-Boolean reasoning in expert systems. AIChE Jl 33, 130-140 (1987).

Mah R. S. H. and A. C. Tamhane, Detection of gross errors in process data. AIChE Jl 28, 828 (1982).

Petti T. F., J. Klein and P. S. Dhurjati, The Diagnostic Model Processor: an effective method of using deep knowledge for process fault diagnosis. Personal Communication (1989).

Reiter R., A theory of diagnosis from first principles. Artif. Intell. 32, 57-95 (1987).

Romagnoli J. A. and G. Stephanopoulos, Rectification of process measurements data in the presence of gross errors. Chem. Engng Sci. 36, 849 (1980).

Sklansky J. and G. N. Wassel, Pattern Classifiers and Trainable Machines. Springer-Verlag, New York (1981).

Venkatasubramanian V. and P. S. Dhurjati, An object-oriented knowledge base representation for the expert system FALCON. Proc. Conf. Foundations Computer-Aided Process Opers, Park City, UT (1987).

Venkatasubramanian V., R. Vaidyanathan and Y. Yamamoto, Process fault detection and diagnosis using neural networks-I. Steady state processes. Computers chem. Engng 14, 699-712 (1990).

Watanabe K., I. Matsuura, M. Abe, M. Kubota and D. M. Himmelblau, Incipient fault diagnosis of chemical processes via artificial neural networks. AIChE Jl 35, 1803-1812 (1989).

APPENDIX

The Necessary and Sufficient Conditions for the Existence of a Tuple

A given set of assumptions may not suffice to establish the endogenous (nonassumption) variables. It is therefore necessary to develop a means to establish the existence of a tuple for a given set of assumptions. The sufficient condition for the existence of tuples for a given set of assumption variables is that it be possible to assign a distinct equation in the system as an output equation for each of the endogenous variables. On choosing (n - k) equations as output equations for the (n - k) endogenous variables, if we find the rest of the equations to be unassigned, then the assignment has to be changed. A systematic way to search for the proper choice of output equation for each endogenous variable is to treat it as a matching problem. This is done by setting up a bipartite graph, i.e. a graph with arcs connecting two different sets of nodes. Let V be the set of endogenous variables and E the set of equations. An arc exists from a variable $v_i \in V$ to an equation $e_j \in E$ only if the variable $v_i$ occurs in equation $e_j$. The matching problem in this case assigns each endogenous variable a different equation. Maximum matching is the problem of maximizing the number of such assignments. In this notation, the sufficient condition can be stated as:

cardinality of maximum matching = No. of endogenous variables < No. of equations.

When the sufficient condition is satisfied, it is possible to establish tuples for all the endogenous variables. When the cardinality is less than the number of endogenous variables, it is not possible to assign a different equation to each of the endogenous variables. This means that a tuple cannot be determined for all the endogenous variables for the given choice of assumption variables.

Let

B = set of endogenous variables,
E = set of equations,
M = maximum matching,
$|B| < |E|$.

(a) IF the cardinality of M, $|M| = |B|$, THEN tuples can be determined.

(b) IF the cardinality of M, $|M| < |B|$, THEN a tuple can exist only if there exists a subset of the matched equations which has no endogenous variables other than the corresponding subset of matched endogenous variables. This constitutes the necessary condition. If such a subset does not exist then additional assumption variables are necessary for tuple generation.

Figure 3 shows a flowchart for the task of tuple derivation. The tasks in the shaded boxes are heuristic in nature. Choice of the subset of equations, for example, is made so that the equations describe the process in the same unit or adjacent units. The choice of equations together with the relevant sensor information must result in redundancy.
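The matching test in condition (a) can be sketched as follows. This is a minimal illustration under stated assumptions: the function names `max_matching` and `tuples_exist`, the dictionary representation of the bipartite graph and the example equations are all hypothetical, and the augmenting-path search used here is one standard way to compute a maximum bipartite matching, not necessarily the procedure the authors used. Only condition (a) is checked; the subset search of condition (b) is not implemented.

```python
def max_matching(occurs):
    """Maximum bipartite matching between endogenous variables and equations.

    occurs maps each endogenous variable to the set of equations in which it
    appears. Returns a dict variable -> assigned equation.
    """
    eq_to_var = {}  # equation -> variable currently assigned to it

    def try_assign(var, visited):
        # Try to give `var` an equation, re-assigning earlier variables if needed.
        for eq in occurs[var]:
            if eq in visited:
                continue
            visited.add(eq)
            if eq not in eq_to_var or try_assign(eq_to_var[eq], visited):
                eq_to_var[eq] = var
                return True
        return False

    for var in occurs:
        try_assign(var, set())
    return {var: eq for eq, var in eq_to_var.items()}


def tuples_exist(occurs):
    """Condition (a): every endogenous variable receives a distinct equation."""
    return len(max_matching(occurs)) == len(occurs)


# Hypothetical examples: variable -> equations that contain it.
ok = {"x": {"e1", "e2"}, "y": {"e1"}, "z": {"e2", "e3"}}
blocked = {"x": {"e1"}, "y": {"e1"}}
print(tuples_exist(ok), tuples_exist(blocked))
```

When `tuples_exist` returns False, the text's condition (b) applies: either a self-contained subset of matched equations is sought, or additional assumption variables are introduced.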

APPENDIX

Process Model

Continuous equations:

$$\frac{dV}{dt} = F_0 - F,$$

$$\frac{dC_A}{dt} = \frac{F_0}{V}\,(C_{A0} - C_A) - k\,C_A.$$

Control equations:

$$F_j = F_{js} - K_c\,(T_{set} - T),$$

$$F = F_s - K_L\,(V_{set} - V).$$

State variables (6): $V$, $C_A$, $T$, $T_j$, $F$, $F_j$.

Sensors (6): $V$, $C_A$, $T$, $T_j$, $F$, $F_j$.

Parameters (8): $F_0$, $C_{A0}$, $T_0$, $T_{j0}$, $K_c$, $K_L$, $V_{set}$, $T_{set}$.

Steady state values:

$C_A$ = 0.245 mol ft$^{-3}$, $T$ = 600 R, $V$ = 48 ft$^{3}$, $K_c$ = 4, $T_{j0}$ = 530 R, $C_{A0}$ = 0.5 mol ft$^{-3}$, $E$ = 30,000 B.t.u. mol$^{-1}$, $F = F_0$ = 40 ft$^{3}$ h$^{-1}$, $T_j$ = 594.6 R, $F_j$ = 50.316 ft$^{3}$ h$^{-1}$, $K_L$ = 10, $T_0$ = 530 R, $\rho$ = 50 lb$_m$ ft$^{-3}$, $R$ = 1.99 B.t.u. mol$^{-1}$ °R$^{-1}$, $U$ = 150 B.t.u. h$^{-1}$ ft$^{-2}$ °R$^{-1}$, $A$ = 250 ft$^{2}$, $C_p$ = 0.75 B.t.u. lb$_m^{-1}$ °R$^{-1}$, $C_j$ = 1 B.t.u. lb$_m^{-1}$ °R$^{-1}$, $\rho_j$ = 62.3 lb$_m$ ft$^{-3}$, $k_0$ = 7.08 x 10$^{10}$ h$^{-1}$.

APPENDIX B

A General Scheme for Normalizing Variable Inputs

A mapping function $f(x)$ is sought with the following desired features:

(1) as $x \to \infty$, $f(x) \to 1$;
(2) as $x \to -\infty$, $f(x) \to -1$;
(3) at $x = 0$, $f(x) = 0$; and
(4) for each variable, its maximum absolute deviation should be interpreted approximately as $\infty$.

Consider the general sigmoid function:

$$f(x) = \frac{a}{1 + e^{-bx + c}} + d.$$

Condition (1) gives $a + d = 1$ and condition (2) gives $d = -1$. Therefore, $a = 2$ and $d = -1$. Condition (3) gives $c = 0$, and condition (4) allows us to determine $b$.

Consider $e^{5} = 148.4$ and $e^{-5} = 0.0067$. If we choose $b \cdot \max(|x|) = 5$ then $f(x)$ ranges between -0.987 and +0.987, i.e. approximately between -1 and +1. Thus, the choice of $b$ based on the equation $b \cdot \max(|x|) = 5$ allows us to interpret the maximum absolute deviation as $\infty$.

The choice of sigmoid or linear normalization affects the dynamic range of the normalized quantity. A sigmoid function limits the dynamic range, i.e. the response of the sigmoid is contained between certain extreme values. If a 10% deviation in an input value is treated as a typical range and is linearly normalized to a value of 1, then a 30% deviation would give a three-fold increase in that input value after normalization. Use of the sigmoid leaves the normalized value around the same extreme value (say, 0.98). At the same time, a sigmoid is different from a Heaviside (hard limiter) threshold, which has no dynamic range. The sigmoid has a semilinear range where the nonlinearities can be ignored. This provides a gradation in the dynamic range of the sigmoid.

When a sensor failure occurs, the percentage deviation in a tuple can be much larger at very early stages of the transient. A linear normalization would result in a grossly large value at the input using this sensor value. The sigmoid limits these extreme values. Let $\max_i$ and $\min_i$ be the extreme deviations of the $i$th input node over all the typical fault patterns. Parameter $b$ is defined for this node through

$$b \cdot \max[\mathrm{abs}(\max_i), \mathrm{abs}(\min_i)] = 5.$$

In our illustrations, typical patterns for gross sensor faults are not included in the determination of the parameter $b$. This is because we want a full range for the typical parameter faults, and the typical gross sensor faults would normally be far outside this range. Thus, for a better spread in the normalized values, we choose not to include the gross sensor fault patterns in the determination of $b$.
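The normalization scheme can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `make_normalizer`, the `spread` argument and the sample deviations are hypothetical. It uses the choice stated in the text, b times the maximum absolute typical deviation equals 5, with a = 2, d = -1 and c = 0, and evaluates the sigmoid through the identity 2/(1 + exp(-bx)) - 1 = tanh(bx/2), which avoids overflow for very large deviations such as gross sensor faults.

```python
import math


def make_normalizer(typical_deviations, spread=5.0):
    """Build f(x) = 2 / (1 + exp(-b x)) - 1 for one input node.

    b is chosen so that b * max(|x|) = spread over the typical fault patterns
    (spread = 5 in the text). Gross sensor fault patterns are assumed to have
    been left out of `typical_deviations`, as described above.
    """
    b = spread / max(abs(d) for d in typical_deviations)

    def f(x):
        # tanh(b*x/2) is algebraically identical to 2/(1+exp(-b*x)) - 1.
        return math.tanh(0.5 * b * x)

    return f


# Hypothetical typical deviations of one tuple (as fractions of steady state).
f = make_normalizer([-0.20, 0.05, 0.12])
print(f(0.0), f(0.20), f(-0.20), f(2.0))
```

With these assumed numbers the largest typical deviation maps to about 0.987 in magnitude, while a much larger deviation saturates just below 1 instead of growing linearly.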


- 8 A Dynamic Integrated Fault Diagnosis Method For Power TransformersDocument8 pages8 A Dynamic Integrated Fault Diagnosis Method For Power TransformersMinh VũNo ratings yet

- Use and Interpretation of Common Statistical Tests in Method-Comparison StudiesDocument9 pagesUse and Interpretation of Common Statistical Tests in Method-Comparison StudiesJustino WaveleNo ratings yet

- 0909 Main PDFDocument13 pages0909 Main PDFAshish SharmaNo ratings yet

- Equifinality, Data Assimilation, and Uncertainty Estimation in Mechanistic Modelling of Complex Environmental Systems Using The GLUE MethodologyDocument19 pagesEquifinality, Data Assimilation, and Uncertainty Estimation in Mechanistic Modelling of Complex Environmental Systems Using The GLUE MethodologyJuan Felipe Zambrano BustilloNo ratings yet