The Visual Metacognition Questionnaire: A Measure of Intuitions about Vision

Author(s): Daniel T. Levin and Bonnie L. Angelone

Source: The American Journal of Psychology, Vol. 121, No. 3 (Fall, 2008), pp. 451-472

Published by: University of Illinois Press

Stable URL: http://www.jstor.org/stable/20445476

Accessed: 21-01-2016 09:30 UTC

This content downloaded from 209.175.73.10 on Thu, 21 Jan 2016 09:30:44 UTC

All use subject to JSTOR Terms and Conditions

The Visual Metacognition Questionnaire: A measure of intuitions about vision

DANIEL T. LEVIN
Vanderbilt University

BONNIE L. ANGELONE
Rowan University

Recent research has revealed a series of striking limits to visual perception. One important aspect of these demonstrations is the degree to which they conflict with intuition; people often believe that they will be able to see things that experiments demonstrate they cannot see. This metacognitive error has been explored with reference to a few specific visual limits, but no study has yet explored people's intuitions about vision more generally. In this article we present the results of a broad survey of these intuitions. Results replicate previous overestimates and underestimates of visual performance and document new misestimates of performance in tasks that assess inattention blindness and visual knowledge. We also completed an initial exploratory factor analysis of the items and found that estimates of visual performance for well-structured information tend to covary. These results represent an important initial step in organizing the intuitions that may prove important in a variety of settings, including performance of complex visual tasks, evaluation of other people's visual experience, and even the teaching of psychology.

AMERICAN JOURNAL OF PSYCHOLOGY
Fall 2008, Vol. 121, No. 3, pp. 451-472
© 2008 by the Board of Trustees of the University of Illinois

Recent research exploring visual perception has revealed a range of striking failures of visual awareness in which salient events often go entirely unnoticed (for a review, see Rensink, 2002). A key element of these failures is the degree to which they conflict with people's intuitions about their own visual capabilities. For example, a range of experiments have documented that people have considerable difficulty detecting between-view visual changes. This phenomenon, called change blindness, is extremely counterintuitive: Not only do people express disbelief when told about change blindness, but when they are asked to predict their ability to detect visual changes, they grossly overpredict their performance in a wide range of circumstances (see Levin & Beck, 2004, for a review). These mispredictions have a number of implications ranging from poor control of visual performance to misconstruals of other people's capabilities and experience. Although much of our research in this area has focused on predictions about change detection, little research has explored people's beliefs about visual perception more generally. In this article we broaden our exploration of intuitions about vision and report the results of an initial application of a broad questionnaire, the Visual Metacognition Questionnaire (VMQ), assessing people's understanding of a wide range of visual phenomena.

Visual limits and misunderstandings of limits

One of the main organizing principles for research on visual perception has been the exploration of visual limits. From the start of scientific psychology, researchers have noted that we are not aware of all possible stimuli in the external world and have hypothesized that the act of attending to a subset of that information is crucial to becoming aware of it. Recently, this kind of research has explored the perceptual limits imposed by attention in a particularly compelling set of tasks, many of which take advantage of recent advances in digital imaging that make possible the use of naturalistic stimuli (Hollingworth, 2003; Rensink, O'Regan, & Clark, 1997; Simons & Chabris, 1999). Not only do these developments allow for new tests of the role of attention in realistic settings, but they also bring research on attention into the realm of familiar experience and have the potential to directly challenge intuitions about vision. It is quite difficult to connect naive beliefs about vision to rarefied stimuli and tasks that subjects have little experience with and few expectations about. On the other hand, as stimuli and tasks become more realistic, people begin to have expectations about their performance, and these expectations might end up being wrong. Here, we review recent research exploring visual limits, with particular emphasis on paradigms that demonstrate these limits without the use of complex tasks and unfamiliar stimuli, and present a broad measure of visual metacognitions called the VMQ.

Several recently explored phenomena have demonstrated the limits imposed by visual attention. Change blindness, inattention blindness, repetition blindness, and the attentional blink all constitute large failures of visual awareness (Raymond, Shapiro, & Arnell, 1992; Simons & Levin, 1997; Rensink, 2002; Mack & Rock, 1998). We focus on change blindness and inattention blindness here because these are the most accessible to intuition. In the case of change blindness, subjects have great difficulty detecting the difference between two versions of a scene. Change blindness has been observed in a wide variety of situations, ranging from still images to movies to real-world interactions (for a review, see Simons & Levin, 1997, and Rensink, 2002). It can even occur while people are attending to the changing object. For example, Levin and Simons (1997) created short videos in which the sole actor changed into another person (wearing different clothing) across shots and found that up to two thirds of subjects missed the change (see also Angelone, Levin, & Simons, 2003).

A closely related phenomenon, inattention blindness, occurs when subjects' attention is directed toward one location, object, or event while another stimulus is presented. The first example of inattention blindness in vision was Neisser and Becklen (1975), who showed subjects short films and asked them to attend to a group of people playing a basketball-passing game. While subjects were attending to the game, they failed to detect a woman carrying an umbrella walking among the players (for more recent examples, see Simons & Chabris, 1999; Wayand, Levin, & Varakin, 2005). In another well-known paradigm, Mack and Rock (1998) asked subjects to discriminate the relative lengths of the two bars in a cross. While they were focused on this task, another stimulus appeared on one of the quadrants defined by the cross. Subjects often missed this stimulus, even when it appeared right at the fixation point (so they fixated gaze on the center of the screen while they attended to the cross, which was offset from center).

Both inattention blindness and change blindness represent situations in which attentional selection of a subset of objects within a scene, or a subset of features within an object, can leave us almost completely oblivious to other aspects of the scene. Although generally consistent with the scientific understanding of attention, these demonstrations are particularly compelling, and a large part of their value may be the degree to which they conflict with strong intuitions (Simons & Levin, 2003; Reisberg & McLean, 1985). Indeed, we have consistently observed that people vastly overpredict change detection, whether they are predicting their own or others' performance in a wide variety of situations (for a review, see Levin & Beck, 2004). For example, 90% of subjects predicted they would see the disappearance of a large red scarf that was actually detected by 0% of subjects in Levin and Simons's (1997) original experiment.

Although little research has explored adults' beliefs about vision (for an exception, see Harley, Carlsen, & Loftus, 2004), a number of studies have explored children's knowledge about their perceptual abilities (for a review, see Flavell, 2004). Although this research suggests a solid foundation for adult reasoning about vision, other studies suggest that children have misconceptions about vision that are retained into adulthood (Winer & Cottrell, 2004), leaving open the possibility that adults misconstrue a broad range of visual and cognitive phenomena.

Summary and hypotheses

To get a more general sense of people's intuitions about vision, we have been developing the VMQ. Three basic questions drove the development of the VMQ. First, we wanted to know whether it would be possible to observe overestimates of visual performance similar to those observed for change detection, especially for situations involving natural real-world stimuli. Therefore, we asked subjects to predict their performance in situations similar to several well-known experiments that illustrate limits in visual attention and memory. It is important to note that these performance-prediction comparisons necessarily involve between-experiment comparisons that do not equate specific methods or subject populations. Therefore, we generally focus on large differences (in the range of 30-80% divergence between performance and predictions).

Second, we were interested in correlations between estimates of visual and nonvisual performance. This question is motivated by two hypotheses. The first intercorrelation hypothesis is that overestimates of visual performance occur in part because the meaningful structure inherent to natural events, scenes, and objects leads subjects to believe that they will be able to countenance a large amount of visual information. Therefore, overestimates should be strongest for well-structured stimuli, and intercorrelations would be strongest among scenarios describing structured stimuli. A key assumption underlying this hypothesis is one's definition of structure. For individual scenes, we follow research exploring scene perception and define this structure in terms of meaningful interobject and scene-object relationships. So the meaningful thematic and causal relationships between objects constitute part of this definition, and scene-object relationships such as support, interposition, familiar location, familiar size, and semantic scene-object relationships constitute another part (see Biederman, Mezzanotte, & Rabinowitz, 1982). In addition, we will be exploring people's beliefs about their ability to remember sets of scenes, so our notion of structure should include the contrast between sets of scenes that are less structured because they are unrelated and sets that are more structured because they illustrate a single coherent narrative.

The second intercorrelation hypothesis is that these intercorrelations should be limited to questions about visual phenomena; it is important to know whether one set of beliefs drives reasoning about all structured information or whether these beliefs are specific to vision. Finally, we were interested in whether beliefs about visual capacity correlated with other kinds of visual knowledge and misconceptions. We therefore asked subjects about depth perception, the basic relationship between light and seeing, and visual search. In the section that follows, we describe each section of the VMQ.

The VMQ

Items on theVMQ ask subjects to predict their own visual performance

in 10 different task categories (see Appendix). Selection of these categories

was based on recent research in visual attention and awareness and on

This content downloaded from 209.175.73.10 on Thu, 21 Jan 2016 09:30:44 UTC

All use subject to JSTOR Terms and Conditions

VISUAL

METACOGNITION

QUESTIONNAIRE

455

texts in cognition and perception. One critical criterion for

selecting topics was that the experiments demonstrating them had to be

straightforward and easily explained. In addition, we avoided experiments

undergraduate

that produced

highly unnatural perceptual

that the situations

in the VMQ

experiences.

are all common

rather that they do not require subjects tomake

ences when making

predictions

about

This isnot to say

or entirely natural, but

a large number of infer

their performance

in the rarified

sometimes necessary for perception experiments. Many of

these questions are very closely based on actual experiments thatdocument

limits in visual perception and visual memory, but others are less directly

circumstances

based on specific research findings and are focused on more general no

tions such as attentional limits.We should therefore be clear thatwe do not

consider

theVMQ

to be an exhaustive

should be considered

test of visual intuitions. Instead, it

a broad sampling of visual intuition for situations in

which subjects might reasonably base their responses on everyday experi

ence.

Note

that the order of questions

order of questions

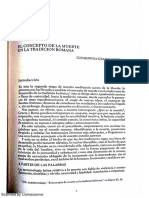

1. Change

in theVMQ

does not follow the precise

in theAppendix.

detection.

Five questions

ask subjects whether theywould

detect unexpected visual changes to complex scenes. Four of these questions ask about change detection in natural scenes, and a fifth asks about change detection for an array of individual objects. Three of these questions are associated with a known empirical base rate of actual change detection, and all are illustrated with color images depicting the changes. For each question, subjects first read a brief description of the circumstances under which they would encounter the change, then they see the change illustrated on the next page. For three of the questions (1.1, 1.2, and 1.3), subjects are told to imagine that they are watching a movie in which a change occurs between cuts (see Figure 1 for an example). Subjects are also told to assume that they are not on the lookout for changes. Subjects indicate on a 4-point scale whether they definitely or probably would notice the change or definitely or probably would not notice the change. Three additional questions are included in this section. One (1.4) asks whether subjects think they will see a change to an object array in an incidental task (as is the case for the first three), and two (1.5 and 1.6) ask whether subjects will detect a change for one normal and one jumbled scene under intentional circumstances (i.e., they are told to imagine they are looking for a change). One key feature of these questions, and most others on the VMQ, is that they are designed to ask about cognitive limits without allowing the subject to actually experience them. In this case subjects are told about the specific visual changes they will be encountering and therefore cannot actually experience change blindness.
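One way to set the 4-point responses against an empirical base rate is to collapse them into a predicted detection rate. The following is an illustrative sketch only: the numeric coding of the scale, the function name `predicted_detection_rate`, and the sample responses are assumptions made for the example, not the authors' scoring procedure.

```python
# Hypothetical coding of the 4-point scale (an assumption, not from the article):
# 1 = definitely would notice, 2 = probably would notice,
# 3 = probably would not notice, 4 = definitely would not notice.
NOTICE_CODES = {1, 2}


def predicted_detection_rate(responses):
    """Return the share of responses that predict noticing the change."""
    if not responses:
        raise ValueError("responses must not be empty")
    predicted = sum(1 for r in responses if r in NOTICE_CODES)
    return predicted / len(responses)


# Made-up responses from ten hypothetical subjects to one question.
sample = [1, 2, 2, 1, 3, 2, 1, 4, 2, 1]
rate = predicted_detection_rate(sample)
print(f"{rate:.0%} of subjects predict they would notice the change")
```

A rate computed this way could then be compared with the detection rate reported in the corresponding experiment, keeping in mind the caveat above about between-experiment comparisons.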


Figure 1. Example change detection question. Each panel represents one of three successively viewed slides

2. Inattention blindness. The questions in Section 2 assess subjects' beliefs about the degree to which people can detect specific objects and events when their attention is not focused on them. Question 2.1 describes the Simons and Chabris (1999) experiment and asks subjects whether they would be likely to notice the unexpected gorilla. Questions 2.2 and 2.3 ask subjects whether they would notice a pedestrian sign while they are driving and engaged in a complex verbal task (2.2) or a complex visual task (2.3). Finally, Question 2.4 asks what proportion of pilots would see an airplane on the runway they are about to land on while using a heads-up display (based on Haines, 1991, who showed that pilots sometimes miss such stimuli). This question departs from our usual technique of asking subjects whether they themselves would detect something because we assumed that most of our subjects are not pilots.

3. Visual attention. Three questions ask subjects about visual attention. The first two are based on developmental research arguing that young children falsely believe that looking at one thing in one location also allows them to see other things in other locations (Flavell, Green, & Flavell, 1995). Flavell et al. argue that young children believe attention is more like a lamp than a spotlight in that orienting one's attention to a scene illuminates all of it instead of some small part of it. In contrast to the implicit assumption that adults really do understand the spotlight model of visual attention, however, we have observed variability among adults in their commitment to the idea that looking at one thing precludes seeing another thing (Levin, 2001). The first visual attention question (3.1) asks subjects to imagine they are looking at a painting in a museum, then to indicate whether they would see the painting's frame while they are looking at the painting. The second (3.2) asks subjects whether they would see a fire hydrant 10 feet away from a friend they are looking at from across the street. A third visual attention question (3.3) asks subjects what proportion of the objects in a scene they typically attend to.

Subjects are also asked whether attention is generally necessary to detect changes (3.4) and for remembering information (3.5). These questions are based on research suggesting that children do not fully comprehend the necessity of attending to information for the purposes of remembering it (Miller & Weiss, 1982), and again we suspect that there may be diversity among adults despite the assumption in the developmental literature that they understand this. The question about the impact of attention on change detection is a generalization of this, and in the past we have observed variability in intuitions on this point (Levin, Drivdahl, Momen, & Beck, 2002; Levin, Momen, Drivdahl, & Simons, 2000).

4. Auditory attention. We included a pair of questions asking more generally about the influence of information outside the focus of attention by assessing intuitions about auditory attention. Although the focus of the VMQ is clearly visual, we have included this scenario to allow more general theoretical understanding of whether some beliefs about attention are specifically visual or are more general. These items describe a cocktail party in which subjects imagine they are conversing with one person while ignoring other nearby conversations. Subjects indicate whether they would understand the meaning of the ignored conversation (4.1) and whether they would hear their name if it were mentioned in the other conversation (4.2).

5. Visual memory. These questions ask subjects about their ability to remember visual information. One set of three questions is based on research demonstrating that people are surprisingly good at recognizing large numbers of pictures of real-world objects and scenes. This research used a typical recognition memory protocol in which subjects were first shown an inspection set consisting of a wide variety of pictures ranging from individual objects to natural scenes, then were shown a test set in which half of the pictures were repeats from the inspection set and half were new. Several experiments converge in demonstrating that recognition memory is good for inspection sets of up to 10,000 pictures (Standing, 1973; Standing, Conezio, & Haber, 1970). In the VMQ picture memory scenario, the task is described to subjects very concretely, and they are shown 3-item sample inspection and test sets. Subjects respond to three questions asking them to predict their performance with 20-, 50-, and 1,000-item inspection sets (5.1, 5.2, and 5.3). Three additional scenarios test beliefs about visual memory. Two of these ask subjects to estimate their success on a picture memory task characterized by a more organized inspection set: They are asked what proportion of the scenes in a movie they would remember if they were (5.5) or were not (5.4) intentionally trying to remember the information. A final question asks subjects to imagine that they had witnessed an automobile accident and that they were asked 1 month later whether some specific person had also been at the scene of the accident (5.6).

6. Visual knowledge. This section assesses subjects' beliefs about the degree to which they could reproduce in a drawing the details from a familiar object. Based on the work of Nickerson and Adams (1979), who showed that subjects have difficulty accurately reproducing the details of a Lincoln penny, these questions ask subjects to imagine that they were asked to draw a penny and to predict whether they would remember and correctly place a series of features on their drawing. We predict that subjects will overestimate their success. This is similar to overestimates of change detection in which well-structured visual information may lead subjects to overestimate the depth of their representation, and there is at least one anecdotal report that this will be the case (Keil, Rozenblit, & Mills, 2004). All questions are accompanied by a picture of a penny showing the correct placement of the features. Question 6.1 asks subjects to predict whether they would place the head facing in the correct direction, Question 6.2 asks whether they would remember to put the word Liberty on the coin, Question 6.3 asks whether they would remember to include and correctly place the phrase United States of America, and Question 6.4 asks whether they would place the date in the correct location.

7. Icon and subitization. These questions are based on research demonstrating that briefly presented visual information can persist momentarily in an afterimage, or "icon." In the most well-known demonstrations of this phenomenon, subjects view arrays containing 9-12 letters for 50 ms and then report as many of the letters as possible. Under these conditions, subjects can generally report only 4 or 5 letters. However, researchers noticed that subjects sometimes commented that they would have been able to report more letters were it not for the time it took to verbalize the first few; they could "see" all of the letters for a moment, and if only they could get the information into a more durable form, they would have been able to report many of them, a claim that has been empirically verified (see Averbach & Sperling, 1968, for a review). The icon is a fairly compelling phenomenal experience, and early authors such as James (1892) not only described it but also intuited its use as a short-term storage system.

Therefore, we have asked subjects to predict how they would perform under these circumstances. Question 7.1 asks subjects to estimate the number of items they could see in a 50-ms display, Question 7.2 asks whether they could briefly "see" the array after it had disappeared from the screen, and Question 7.3 asks subjects to predict whether they "can retain more information" than they could report.

8. Visual search. As children begin to understand other people's visual experience, they begin to understand at least some of the factors that make things easy and difficult to locate in a visual search task (Miller & Bigi, 1977). To explore these intuitions in adults, we included a series of questions asking subjects about the conditions that might produce a serial or parallel visual search (Treisman & Gelade, 1980). For the first of these questions, subjects are asked to imagine a search task in which their job is to locate a specific target (a green vertical line) among a number of other objects (red horizontal and vertical lines). In this case the target is unique in terms of a basic visual feature: color. Therefore, visual search should be very efficient, and the time necessary to locate the target should not increase if additional distractors are added to the display. In contrast, a second question asks about a more difficult search. In this case, the same target (red vertical line) must now be located among a mixed set of red horizontal and green horizontal and vertical lines. Color is no longer diagnostic of the target, and subjects must search for a conjunction of color and line orientation. In this situation, the search is more difficult, and reaction time to locate the target increases with added distractors. In both cases, subjects are first asked to judge whether the search will be "Very fast," "Somewhat fast," "Somewhat slow," or "Very slow" when the target is hidden among five distractors (Question 8.1 for the feature search and 8.2 for the conjunction search). Then, they are asked how much more difficult it will be when the target is hidden among 300 distractors (Question 8.3 for the feature search and 8.4 for the conjunction search).

9. Extramission beliefs. Research by Winer and colleagues demonstrated that people can misunderstand one of the most basic requirements for seeing (for a review, see Winer & Cottrell, 2004). These experiments consistently demonstrated that approximately 30% of adults endorse an extramissionist account of vision, assuming that something (such as "rays") comes out of the eyes to allow seeing. Therefore, the VMQ includes an extramission question modeled on the work of Winer, Cottrell, Karefilaki, and Chronister (1996). This item asks whether people see by emitting something from their eyes, by having something enter their eyes, or by both processes (9.1). This verbal description is followed by a simple illustration of these processes very similar to that used by Winer et al.

10. Depth perception. Although depth perception usually is considered to be automatic, recent research demonstrates that in at least some cases, accurate judgments of the size of distant objects depend on children's ability to verbalize the relationship between apparent size and distance (Granrud, 2004). Therefore, the VMQ includes several questions that assess people's understanding of depth. In one (10.2), subjects are asked why the moon looks larger on the horizon, and in another (10.1), they are asked how they would make a fish they caught look larger in a photograph. Finally, in another question (10.3) observers are asked to place a block on a picture of a set of railroad tracks that converge in the distance such that it appears very large.

Familiarity questions

Because we tested subjects in general psychology classes, and because much of the research included in the VMQ has received a fair amount of publicity, we asked subjects whether they had heard about research on each of the topic areas tested in class, on television, or elsewhere. In some cases, a topic area was not tested either because there was no research testing the relevant hypothesis or because the questions were general and not closely related to any specific research finding. The VMQ included questions about familiarity with research on change detection, inattention blindness, auditory attention, visual memory, visual knowledge, iconic memory, visual search, extramission, and depth perception.

Demographic and general intelligence measures

In the final section of the VMQ we collected basic demographic information such as age, sex, and level of education. In addition, we asked subjects to self-report academic test scores, including SAT and ACT scores, and academic performance in the form of current grade point averages. Finally, we asked subjects to list their current major and to list the psychology courses they have taken.

EXPERIMENT

METHOD

Subjects

A total of 108 Kent State University students (68 women, 40 men) completed the VMQ in exchange for course credit in their general psychology class. Their mean age was 21.48 years (range 18-44).

Procedure

The VMQ was administered on computer as a PowerPoint presentation to small groups of subjects. The experimenter read each question and gave subjects time to respond on Scantron bubble sheets before proceeding to the next slide. It took approximately 45 minutes to complete the questionnaire, after which subjects were debriefed.

RESULTS

This section is divided into three parts, corresponding to the specific hypotheses presented earlier. First, we describe a factor analysis of sections in the VMQ that assesses the intercorrelation hypotheses. As discussed earlier, we were interested in whether responses regarding well-structured stimuli would cohere and whether metacognitions about vision per se would cohere. Then, we discuss results from each of the sections on the VMQ, organized by the groupings suggested in the factor analysis. Within these sections, we pay particular attention to situations in which subjects appear to have overestimated or underestimated their performance.


Exploratory factor analysis

To test the degree to which different kinds of metacognitions were interrelated, 11 summary scores were generated for the different VMQ sections. Two of these represented the visual memory section, and the other sections were represented by one score each. We chose to use summary scores because entering each of the individual questions into the analysis would have produced too many items relative to the number of subjects who completed the questionnaire (see Bryant & Yarnold, 1995). The first summary score, labeled "visual memory 1," included responses to Questions 5.1-5.3 and was intended to reflect memory for unstructured materials. The other summary score, labeled "visual memory 2," included Questions 5.4-5.6 and was intended to reflect memory for well-structured, meaningful materials. The other nine scores reflected the mean response on each of the other nine sections.

The results of this analysis provide some preliminary support for both of the intercorrelation hypotheses; responses about structured stimuli correlate, as do responses about visual stimuli. The analysis extracted three factors using principal component analysis that collectively explained 48.75% of variance.

The firstfactor included five scores to explain 24.5% of variance. (Scores

to factors when they had a loading of .4 or above. In cases

were assigned

a score's loading was above .4 for rmore than one factor, itwas as

signed to the factor it loaded most highly orn.) It included change detection,

visual memory 2 (structured), visual attention, inattentional blindness,

and visual knowledge. This factor seems co represent subjects' estimates of

where

visual capacity for structured materials. Almost all of the tasks it includes

involve meaningful real-world stimuli, and they are all visual. Tasks that

involvedlessstructured

visualmaterials (such as thosedescribed in the

visual memory 1 scenarios) and those describing nonvisual attention (e.g.,

the cocktail party scenarios) did not load most strongly onto this factor.

The second and third factors were more difficult to interpret. The sec

ond factor explained 13.9% of variance by including responses to icon,

extramission, and depth perception

items, and therefore it appears to

more qualitativeknowledgeabout vision.The lastfactorincluded

reflect

1 (unstructured), auditory attention, and visual search to

explain a total of 10.3% of variance (see Table 1 for the component matrix

visual memory

forthesethreefactors).
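The assignment rule described above (a loading of .4 or above, with ties across factors resolved in favor of the highest loading) can be illustrated with a minimal Python sketch. It is not the authors' analysis code. The loadings are transcribed from Table 1, the names `LOADINGS`, `assign_factor`, and `scores_on` are hypothetical, and the sketch assumes that the rule is applied to the absolute value of each loading, which the text does not state explicitly.

```python
# Loadings transcribed from Table 1 (components 1, 2, 3).
# Assumption: the Extramission loading on component 1 is taken as -.399;
# its sign does not affect the assignment.
LOADINGS = {
    "Visual memory 1 (unstructured)": (0.379, 0.417, -0.616),
    "Change detection": (-0.701, 0.038, 0.026),
    "Visual memory 2 (structured)": (0.657, 0.150, 0.046),
    "Visual attention": (0.692, 0.193, 0.227),
    "Auditory attention": (0.252, 0.367, 0.475),
    "Icon and subitization": (0.376, 0.562, 0.136),
    "Visual search": (-0.416, 0.363, 0.451),
    "Extramission": (-0.399, 0.431, -0.027),
    "Inattention blindness": (0.568, -0.319, 0.350),
    "Visual knowledge": (0.539, -0.235, -0.266),
    "Depth perception": (-0.060, 0.606, -0.245),
}

THRESHOLD = 0.4  # minimum loading for a score to be assigned to a factor


def assign_factor(loadings, threshold=THRESHOLD):
    """Return the 1-based factor with the largest absolute loading,
    or None if no loading reaches the threshold (assumed |loading| rule)."""
    strongest = max(range(len(loadings)), key=lambda i: abs(loadings[i]))
    if abs(loadings[strongest]) >= threshold:
        return strongest + 1
    return None


ASSIGNMENTS = {name: assign_factor(vals) for name, vals in LOADINGS.items()}


def scores_on(factor):
    """List the summary scores assigned to a given factor, alphabetically."""
    return sorted(name for name, f in ASSIGNMENTS.items() if f == factor)


if __name__ == "__main__":
    for factor in (1, 2, 3):
        print(factor, scores_on(factor))
```

Under these assumptions the sketch reproduces the groupings reported in the text: five scores on the first factor and three each on the second and third. Visual memory 1 and visual search both exceed .4 in magnitude on two components and are assigned to the third, where their loadings are largest.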

Table 1. Component matrix for exploratory factor analysis

                                          Component
Variable                              1         2         3
Visual memory 1 (unstructured)       .379      .417     -.616a
Change detection                    -.701a     .038      .026
Visual memory 2 (structured)         .657a     .150      .046
Visual attention                     .692a     .193      .227
Auditory attention                   .252      .367      .475a
Icon and subitization                .376      .562a     .136
Visual search                       -.416      .363      .451a
Extramission                        -.399      .431a    -.027
Inattention blindness                .568a    -.319      .350
Visual knowledge                     .539a    -.235     -.266
Depth perception                    -.060      .606a    -.245

aScore assigned to factor represented in that column.

Item results: Factor 1

Change detection. As in previous research, subjects overestimated their ability to detect between-view visual changes. In the situation described in Question 1.1 (jersey and ball change), 12.3% of subjects detected the change (Angelone, Levin, & Simons, 2003), whereas 87% claimed they would definitely or probably detect the change, χ²(2) = 87.81, p < .001. For Questions 1.2 (actor change) and 1.3 (plate change) the known base rate is 0% (Levin & Simons, 1997), as compared with 58.3% and 37.9% predicting success in the VMQ, χ²(2) = 12.51, p < .001 and χ²(2) = 5.81, p < .025, respectively. There is no performance baseline for the stimulus in Question 1.4 (array change) but Beck, Levin, and Angelone (2007) observed approximately 10% change detection in an incidental task with 10-object scenes. This compares with 67.6% predicted success. There are no baselines for the scenes in Questions 1.5 and 1.6 (normal and jumbled scenes), but 87.9% and 83.3% of subjects predicted success, respectively. However, comparing the mean ratings on these questions, subjects predicted significantly less success on the jumbled scene, M = 3.157, than on the normal scenes, M = 3.444, t(107) = -4.120, p < .001, suggesting that they account for scene structure when it is made obvious.

Inattention blindness. As in the change detection questions, subjects overpredicted success in a situation in which they are attending to one thing while another unusual event occurs. Simons and Chabris (1999) found that 42% of subjects detected the unexpected gorilla in approximately the conditions we described, as compared with 87.9% of subjects predicting success in this experiment, χ²(2) = 16.67, p < .001. When thinking about verbal and spatial referents, respectively, 60.1% and 47.2% of subjects indicated that they would detect the pedestrian sign, giving mean ratings of 2.75 for the verbal condition and 2.50 for the spatial condition, t(107) = 4.73, p < .001. The pilot scenario (Question 2.4) produced a range of responses, 63.8% of which were 15% or less. In fact, 2 of 9 (22%) pilots failed to see the plane, so it appears that most subjects overestimate. However, because there were so few subjects in the original experiment, a test comparing subjects' estimates with baseline would be underpowered.

Visual attention. The two attentional breadth questions produced a range of intuitions. On Question 3.1, 62% of subjects indicated they would see the frame a painting is in, and 38.9% indicated they would see the fire hydrant (Question 3.2). On Question 3.3, subjects indicated they would look at an average of 58.1% of objects (SD = 19.5%). On Question 3.4, 65.7% of subjects indicated that visual attention "helps a lot" to see changes, and 23.1% indicated that it "helps a little." On Question 3.5, 29.6% indicated that attention is "absolutely necessary" to remember things, and 62.9% indicated that it "helps a lot." Generally, subjects rated attention as being more necessary for memory than for perception, t(107) = 6.086, p < .001. Even so, 40.9% of subjects indicated that attention was just as important for memory as for perception, and 9.3% indicated that attention was more important for change detection than for memory.

Visual memory 2 (structured). In contrast to the large underestimates of performance observed for unstructured picture sets, subjects appear to have predicted more success with movies, indicating they would remember an average of 57.2% of scenes when they were not trying to remember (Question 5.4) and 72.6% when they were trying to remember (Question 5.5), t(107) = 9.991, p < .001. In addition, only 16.6% of subjects indicated that they would remember equivalent amounts of information whether intending to remember or not. On Question 5.6, 34.3% of subjects indicated they would recognize a person in a crowd.

Visual knowledge. Subjects generally overestimated the degree to which they could reproduce the features of a penny on a recall test. On these questions, 85.2% of subjects predicted they could correctly reproduce the direction of Lincoln's head, whereas only 50% of Nickerson and Adams's (1979) subjects got this correct, χ²(2) = 12.90, p < .001. Furthermore, 39.8% indicated they could correctly reproduce Liberty (compared with 5% baseline), χ²(2) = 9.066, p < .01; 71.3% indicated they could correctly reproduce the phrase United States of America (compared with 20% baseline), χ²(2) = 19.10, p < .001; and 77.7% indicated they would place the date correctly (compared with 60% baseline), χ²(2) = 2.844, p < .10.

Item results: Factor 2

Icon and subitization. Subjects predicted they could see an average of 3.17 letters flashed on the screen for 1/20 s. This compares with an average of 4.5 letters for trained subjects (Averbach & Sperling, 1968). Furthermore, 69.4% of subjects indicated they would see an afterimage, and 61.1% indicated that they might be unable to report all the information they could see.

Extramission. Although only a few subjects gave the extramission-only response (3.7%), whereby seeing involves the eyes producing rays, many (48.1%) indicated that seeing involves both the intromission and the extramission of rays from the eye. This is consistent with the results of Cottrell and Winer (1994), who observed 4.2% endorsing the extramission-only response and 34% endorsing the mixed response.

Depth perception. Subjects varied widely in the degree to which they gave responses consistent with a correct understanding of depth perception. Most subjects (82.4%) correctly indicated that an object would appear larger if held closer to a camera (Question 10.1). The other two questions were more difficult. Only 45.4% of subjects correctly indicated that the moon appears large on the horizon because it appears to be near distant objects (Question 10.2), and only 48.1% indicated that the object would appear the largest if it was placed at the top of the railroad track picture (Question 10.3).

Item results: Factor 3

Auditory attention. More subjects indicated that it would be possible to hear one's name in a neighboring conversation than the meaning of the conversation. However, 49% indicated that they would probably or definitely hear the meaning of the other group's conversation. Clearly, this is nominally incorrect, but on some interpretations of the question, it might be reasonable if one assumes that in a typical setting one might briefly stop attending to the current conversation to quickly sample another.

Visual memory 1 (unstructured). As in previous experiments, subjects greatly underestimated their ability to remember large sets of unrelated pictures. If one assumes that 90% correct is a reasonable estimate based on previous research (the closest comparison is Nickerson, 1965), then 78.7% of subjects underestimated their performance for 20 pictures (Question 5.1), 94.4% underestimated their performance for 50 pictures (Question 5.2), and 94.4% underestimated their performance for 1,000 pictures (Question 5.3). In fact, 51.8% of subjects essentially indicated they would be guessing for 1,000 pictures.

Visual search. Most subjects recognized that the feature search would be easy and that the conjunction search would be more difficult: 88.9% indicated that the feature search would be "very fast," compared with 29.9% who thought so for the conjunction search. It is important to note that the 64.8% of subjects who indicated that the conjunction search would be "somewhat fast" were not necessarily incorrect because the example they were responding to had only 5 distractors. The key is that most subjects knew to differentiate the two. Furthermore, 78.7% of subjects indicated that the conjunction search would be slowed by distractors more than the feature search, and 0% of subjects indicated that the feature search would be more slowed. In addition, many subjects (67.5%) indicated that the feature search would be the same or faster with the addition of a large number of distractors, and 87.9% indicated that the conjunction search would be slower with more distractors. However, 68.4% of subjects who indicated that the conjunction search would be slowed gave the "somewhat slower" response.

Familiarity responses

On average, 53.5% of subjects reported having heard about the relevant research (range, 18-76%). Overall, responses to the familiarity questions were not associated with responses to the metacognitive questions. In one case, there was a trend for responses to Question 7.3 to be associated with having heard about research exploring perception of briefly presented displays. Subjects who had heard about this (72.2%) were slightly more likely to report that they would retain more information than they could report, t(106) = 1.801, p = .075.

Global correlations with demographic questions

To test whether global achievement or general experience in psychology affected responses on the VMQ, we ran a series of regressions using self-reported achievement test scores, grade point average, and number of psychology courses as predictors for each of the 11 section scores (summarized in Table 2). This analysis suggests that psychology classes reduced change detection overoptimism to a marginally nonsignificant degree. In addition, subjects with high grade point averages and achievement test scores endorsed less expansive views about visual attention. High achievement also predicted more optimistic estimates of visual knowledge and lower likelihood of giving an extramissionist response. More surprisingly, high achievement scores were associated with less accuracy on the visual search questions. Finally, subjects who had taken more psychology courses were more likely to endorse limits on auditory attention.

Table 2. Regressions using achievement, GPA, and coursework to predict VMQ subsection scores (n = 94)

Subsection                         Ach Test     GPA        NCourses
Change detection                                           -.184#
Visual memory 2 (structured)
Visual attention                   -.255*       -.213#
Inattention blindness
Visual knowledge                    .217*
Icon and subitization
Extramission                       -.241*
Depth perception
Visual memory 1 (unstructured)
Auditory attention                                         -.243*
Visual search                      -.261*

Note. Entries are beta values for each significant or near-significant predictor in multiple linear regressions predicting responses on each of the 11 VMQ sections. GPA = grade point average; VMQ = Visual Metacognition Questionnaire.
*p < .05. #p < .10.

DISCUSSION

This initial administration of the VMQ has produced a range of findings, and to focus the discussion we return to the three hypotheses mentioned earlier. First, we were interested in exploring whether it would be possible to observe other large overestimates of visual performance in addition to overestimates of change detection. In this we were successful for the inattention blindness and visual knowledge items. In the case of inattention blindness, the difference between estimated performance and the base rate was 45.9%, an overestimate of a magnitude similar to that observed here for change detection (which ranged from 37.9% to 74.7%). In the case of memory for a common object, subjects again overestimated by up to 50%.
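The overestimates quoted in this paragraph are differences between the percentage of subjects predicting success and the corresponding base rate. The short Python sketch below recomputes them from the figures given in the Results section. It is offered only as a worked check of the arithmetic, and the names `ITEMS`, `overestimate`, and `GAPS` are hypothetical.

```python
# (predicted %, baseline %) pairs as reported in the Results section.
ITEMS = {
    "1.1 jersey and ball change": (87.0, 12.3),
    "1.2 actor change": (58.3, 0.0),
    "1.3 plate change": (37.9, 0.0),
    "2.1 gorilla": (87.9, 42.0),
    "6.1 penny: head direction": (85.2, 50.0),
    "6.2 penny: Liberty": (39.8, 5.0),
    "6.3 penny: United States of America": (71.3, 20.0),
    "6.4 penny: date": (77.7, 60.0),
}


def overestimate(predicted, baseline):
    """Percentage-point gap between predicted success and the base rate."""
    return round(predicted - baseline, 1)


GAPS = {item: overestimate(p, b) for item, (p, b) in ITEMS.items()}

if __name__ == "__main__":
    for item, gap in GAPS.items():
        print(f"{item}: {gap:+.1f} percentage points")
```

On these figures the change detection gaps run from 37.9 (plate change) to 74.7 (jersey and ball change), the gorilla gap is 45.9, and the largest penny gap is 51.3 (United States of America), in line with the "up to 50%" summary.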

The factor analysis produced only one strong factor but was nonetheless interesting for two reasons. The first factor demonstrates a moderate relationship between the questions asking subjects about their ability to process well-structured visual information. It is interesting to note that this factor includes responses to questions about memory for the structured information in movies (in which all of the individual scenes are related by a narrative) but not the questions about memory for the less structured information. Thus it appears that the difference between processing of structured and unstructured information is more salient to subjects than the difference between long-term memory and the more immediate kinds of memory inherent to change detection. In addition, the fact that the inattention blindness and visual attention scenarios also loaded on this factor suggests that these beliefs are broad and might be summarized as a belief that people can effectively process and monitor meaningful visual information. This is consistent with previous findings (Levin et al., 2002) that subjects predict change detection success even when the prechange and postchange displays are described as being separated in time by up to an hour. One would think that such a scenario would clearly invoke the need for memory for visual properties, but it did not. Subjects not only demonstrated overestimates of change detection equivalent to no-delay scenarios but also rarely mentioned the concept of memory in response justifications (in contrast to a scenario asking about memory for unrelated digits).


Also consistent with the hypothesis that subjects draw on heuristics specifically related to vision is that the predictions about nonvisual attention (e.g., the cocktail party scenario) did not correlate strongly with Factor 1. Although the degree to which a cocktail party is truly structured is unclear, this is a potentially important finding because it suggests that intuitions about vision are distinct and may exclude intuitions related to other senses. This is important because many explanations of the counterintuitive nature of change blindness rest on arguments about the immediate accessibility of the visual world as opposed to a more general overestimate of awareness across all modalities (see Levin & Beck, 2004; Rensink, 2000). It is also important because it constitutes further evidence that overestimates of visual awareness cannot be attributed solely to more general cognitive heuristics such as a hindsight bias.

Limits and potential uses for the VMQ

It is important to note that at this point the VMQ should be considered an experimental survey, not a fully validated instrument. We have not tested the degree to which it has external validity and have not tested its test-retest reliability (in fact, we know of no case in which this has been tested for metacognitive judgments in adults).

Another important issue is that we tested the VMQ on a population that has had some academic experience with psychology. Therefore, parts of our current administration might reflect a combination of formal learning and intuition. However, it is important to note that reported psychology class experience and familiarity with specific sections had only a small impact on a few of the subsections. Therefore, intuitions about visual experience may resist change. For example, it has proven quite difficult to eliminate extramissionist responses with anything but the most direct and concrete educational interventions (see Winer & Cottrell, 2004, for a review), and at least some other misestimates are not affected by warnings (see Harley et al., 2004).

Our undergraduate population may not represent the general population in other ways as well. For example, we tested predominantly young adults, who may be more optimistic about their abilities than older adults. However, we collected some metacognitive data recently from a sample of a range of hospital employees. Most of these were not full-time students, and they were much older than the students (mean age, 42; n = 28). Although the specific test conditions were different, we did ask them variants of three change detection questions that were on the VMQ, and their responses were very similar to those of the college students in the current sample: 85% of the hospital employees indicated they would detect the shirt and basketball change (compared with 87% of the current college-age subjects), 58% indicated they would detect the actor change (compared with 53% of current subjects), and 32% indicated they would detect the plate change (compared with 38% of current subjects).

Potential applications for the VMQ

We envision uses for the VMQ ranging from research on the link between visual metacognition and visual performance, to research on the beliefs of jurors, to its use as a pedagogical tool. As a tool for assessing the relationship between metacognition and performance, the VMQ can provide an initial, broad test for a series of misunderstandings about vision and visual attention that might reveal performance-limiting blind spots. In addition, it might be possible to reveal individual limits on performance if the VMQ proves to tap a reasonably stable set of intuitions and knowledge. We suspect that the VMQ probably will reveal performance-metacognition links for highly complex visual tasks requiring deliberation and those for which calibration of performance based on short-term experience is difficult. For example, many visual tasks require monitoring a large amount of visual information for infrequent, vaguely defined targets. In other situations, visual tasks are performed only rarely, with little opportunity for practice. In such cases, a person's beliefs about the difficulty of detecting the particular target, or about detecting targets in general, could play a role in allocating visual resources.

However, there are more reasons to understand metacognition than searching for its impact on visual performance. At the beginning of this article we reviewed many situations that directly tap intuitions about human capabilities. In general, any time it is necessary to predict what another person will do, we need to understand not only general principles about representational states but also more specific limits on his or her ability to extract information from the visual world. For example, many legal cases, especially lawsuits, rest on assumptions about the capabilities of a reasonable person. So when a shopper sues a mall after breaking his or her ankle in a construction area, legal fact finders must determine whether a "reasonable person" would have seen the warning signs posted by the mall's management. This inevitably involves a judgment about the reasonable person's visual and cognitive capabilities, and if people have a mistaken impression of these, then poor assignment of blame may result (for a review, see Rachlinski, 2004). It is also possible that the VMQ will prove to be a valid and reliable test of individual differences in understandings about visual limits. If this is the case, then it may be useful to use it as a guide for juror selection and as a way of testing for particularly strong misconceptions among different populations who must make vision-based judgments.

Finally, results from the VMQ, in conjunction with other research on visual metacognition, may lay the foundations for expert testimony in visual cognition. Traditionally, expert testimony is allowed only when an expert can provide information that is beyond the ken of the ordinary juror, and we know of at least one case in which a psychologist was not allowed to testify because the judge determined that change blindness was consistent with intuition. The data reported here provide a sound refutation to this argument. More generally, the VMQ might be used as an educational tool. It is often difficult to convince people that their intuitions are wrong, and the VMQ might be administered, then reviewed as a means of confronting people with their own incorrect judgments and perhaps convincing them of the need to formally test hypotheses about visual functioning instead of simply considering the degree to which something is clearly visible to them.

Appendix. List of questions on the VMQ

1. Change detection
1.1 Jersey and ball
1.2 Person (actor)
1.3 Plate
1.4 Array: Monkey
1.5 Intent scene
1.6 Intent jumble scene

2. Inattention blindness
2.1 Gorilla
2.2 Think words
2.3 Think space
2.4 Pilot

3. Visual attention
3.1 Frame (breadth)
3.2 Friend (breadth)
3.3 Percentage look at (countenance)
3.4 To see change (necessity)
3.5 To remember (necessity)

4. Auditory attention
4.1 Meaning
4.2 Name

5. Visual memory
5.1-5.3 Inspection set: 20, 50, and 1,000
5.4 Percentage incidental (movie memory)
5.5 Percentage intent (movie memory)
5.6 Face memory

6. Visual knowledge
6.1 Head
6.2 Liberty
6.3 United States of America
6.4 Date

7. Icon and subitization
7.1 Number of letters
7.2 Afterimage
7.3 Partial report

8. Visual search
8.1 Speed feature
8.2 Speed conjunction
8.3 Slope feature
8.4 Slope conjunction

9. Extramission

10. Depth perception
10.1 Fish
10.2 Moon (horizon)
10.3 Tracks (horizon)

Notes

This material is based on work supported by the National Science Foundation under grant no. SES-0214969, awarded to Daniel T. Levin. Thanks to Gerald Winer, Jim Arlington, and Stephen Killingsworth for reading and commenting on previous versions of this manuscript.

Correspondence about this article should be addressed to Daniel T. Levin, Department of Psychology and Human Development, Vanderbilt University, Peabody College #512, 230 Appleton Place, Nashville, TN 37203-5701 (e-mail: daniel.t.levin@vanderbilt.edu). Received for publication May 5, 2006; revision received March 17, 2007.

References

B. L., Levin,

The

roles of representation

(2003).

D.J.

in change

blindness.

Perception, 32, 947-962.

comparison

in vision.

G. (1968).

Short-term

E., 8c Sperling,

Averbach,

storage of information

In R. N. Haber

research and theory in visual perception

(Ed.), Contemporary

(pp.

Angelone,

and

New

202-214).

Beck,

M.

D. T,

8c Simons,

failures

R., Levin,

Beliefs

about

York:

D. T,

Holt,

&

8cWinston.

Rinehart,

Angelone,

the roles of intention

B. L.

and

(2007).

scene

and Cognition,

16, 31-51.

R. J., 8c Rabinowitz,

Biederman,

I., Mezzanotte,

and judging

Detecting

objects

undergoing

14, 143-177.

Psychology,

Change

complexity

blindness

in

change

blindness:

detection.

Consciousness

Scene

(1982).

J. C.

perception:

relational

violations.

Cognitive

8cYarnold,

P. R. (1995).

and explor

Principal-components

analysis,

In

factor

L.

G.

Grimm

P.

R.

Yarnold

and

8c

(Eds.),

atory

analysis.

confirmatory

and understanding

multivariate

statistics (pp. 99-136).

DC:

Reading

Washington,

American

Association.

Psychological

Bryant,

Cottrell,

F. B.,

J. E.,

ception:

218-228.

8cWiner,

The

G. A.

decline

(1994).

Development

of extramission

beliefs.

in the understanding

Developmental

of per

Psychology,

This content downloaded from 209.175.73.10 on Thu, 21 Jan 2016 09:30:44 UTC

All use subject to JSTOR Terms and Conditions

30,

VISUAL

H.

Flavell,J.

(2004).

and

Thinking

of

Development

seeing: Visual

F. L.,

Green,

J. H.,

8c Flavell,

attentional

about

about

vision.

in adults

and

knowledge

metacognition

Cambridge, MA: MIT Press.

Flavell,

471

QUESTIONNAIRE

METACOGNITION

E. R.

(1995).

The

In D. T. Levin

children

of children's

development

focus.

(Ed.),

13-36).

(pp.

706-712.

Developmental

Psychology,

Visual

and the development

of size con

(2004).

metacognition

and seeing: Visual metacognition

in adults

In D. T. Levin

(Ed.), Thinking

knowledge

C. E.

Granrud,

stancy.

31(4),

and children(pp. 121-144). Cambridge, MA: MIT Press.

In

A breakdown

of simultaneous

information

(1991).

processing.

to

8c L. W. Stark

research: From molecular

(Eds.), Presbyopia

biology

visual adaptation. New York: Plenum.

K. A., 8c Loftus, G. R. (2004).

The

effect:

E. M., Carlsen,

"saw-it-all-along"

Harley,

R.

Haines,

F.

G. Obrecht

of visual

Demonstrations

and

Memory,

A.

(2003).

Learning,

Hollingworth,

detection

Cognition,

of retrieval

Failures

scenes.

in natural

Journal

and Performance,

29, 388-403.

F. C,

L.,

Rozenblit,

the

ing

and

of Experimental

constrain

comparison

ofExperimental

Psychology:

Psychology: Human

change

Perception

course.New York: Holt.

A briefer

(1892). Psychology:

James,W.

Keil,

bias. Journal

960-968.

30,

hindsight

C. M.

8cMills,

limits of understanding.

(2004). What

In D. T. Levin

lies beneath:

(Ed.),

Understand

and

Thinking

seeing:

Visual metacognition in adults and children (pp. 227-250). Cambridge, MA:

MIT

Press.

D.

T. (2001).

Visual metacognitions

underlying

change

at the Vision

estimates ofpicture memory. Poster presented

FL.

Sarasota,

Levin,

D. T., 8c Beck, M. R.

Levin,

between

(2004).

failure

metacognitive

blindness

blindness

Sciences

and

conference,

about seeing: Spanning

the difference

Thinking

In D. T. Levin

and success.

(Ed.), Thinking and

seeing:Visual metacognitionin adults and children(pp. 121-144). Cambridge,

MA:

Press.

MIT

D. T, Drivdahl,

Levin,

S. B., Momen,

N. M.,

8c Beck, M. R.

about

the detectability

of visual changes:

of attended

and the continuity

memory,

Consciousness

blindness.

D. T., Momen,

Levin,

ness

role of beliefs

objects

in causing

507-527.

N. M.,

error

in motion

Bulletin

Psychonomic

pictures.

I. (1998).

Inattentional

A., & Rock,

P. H.,

Miller,

sions

& Bigi, L. (1977).

affect performance.

P. H.,

& Weiss,

variables

affect

Miller,

U.,

Neisser,

M.

G.

R.

?f Review,

blindness.

Cambridge,

of how

understanding

Child Development,

48, 1712-1715.

MA:

R.

S.

(1965).

blindness

MIT

stimulus

objects

Press.

dimen

and adults'

about what

knowledge

Child Development,

543-549.

53,

to

Selective

looking: Attending

visually specified

Children's

attention.

(1975).

events. CognitivePsychology,7(4), 480-494.

Nickerson,

attention,

change

to attended

changes

501-506.

4,

Children's

(1982).

selective

8c Becklen,

predictions

about

D. J. (2000). Change

blind

of overestimating

change-detection

metacognitive

397-412.

7(1-3),

ability. Visual Cognition,

to detect

Failure

Levin, D. T., 8c Simons, D. J. (1997).

Mack,

False

and Cognition,

11(4:),

S. B., 8c Simons,

Drivdahl,

The

blindness:

(2002).

The

Short-term

memory

for complex

meaningful

visual

This content downloaded from 209.175.73.10 on Thu, 21 Jan 2016 09:30:44 UTC

All use subject to JSTOR Terms and Conditions

con

LEVIN

472

A demonstration

figurations:

155-160.

R. S., & Adams,

Nickerson,

of capacity.

(1979).

M.J.

Canadian

Journal

of Psychology,

for a common

memory

Long-term

ANGELONE

&

19,

object.

CognitivePsychology,11, 287-307.

Rachlinski, J.J. (2004). Misunderstanding ability,misallocating responsibility. In

D. T. Levin

(Ed.),

and

Thinking

seeing: Visual

metacognition

in adults

and

children

(pp. 251-276). Cambridge, MA: MIT Press.

K

J. E., Shapiro,

Raymond,

8cArnell,

L.,

K. M.

(1992).

Temporary

of

suppression

visual processing in an RSVP task:An attentional blink?Journal ofExperimental

Psychology: Human

D., 8cMcLean,

Reisberg,

Perception

J. (1985).

and Performance,

Meta-attention:

849-860.

18(3),

Do we

know when

we

are

distracted?Journal ofGeneralPsychology,112,291 -306.

R. A.

Rensink,

Visual

(2000).

tentional

search

Visual

processing.

for change:

A

probe

7, 345-376.

into

being

the nature

of at

Cognition,

Rensink, R. A. (2002). Change detection. Annual Review ofPsychology,53, 245

277.

see or not to see: The need

R. A., O'Regan,

J. K., 8c Clark, J. J. (1997). To

to

scenes.

in

for attention

Science, 8, 368-373.

perceive

changes

Psychological

in our midst:

C. F. (1999). Gorillas

Sustained

inattentional

Simons, D. J., & Chabris,

events.

blindness

for dynamic

28, 1059-1074.

Perception,

blindness.

Trends in Cognitive Science,

Simons, D. J., & Levin, D. T. (1997).

Change

Rensink,

1, 261-267.

Simons, D. J., & Levin, D. T. (2003). What makes change blindness interesting? In D. E. Irwin & B. H. Ross (Eds.), The psychology of learning and motivation: Advances in research and theory: Cognitive vision (Vol. 42, pp. 295-322). New York: Academic Press.

Standing, L. (1973). Learning 10,000 pictures. Journal of Experimental Psychology, 25, 207-222.

Standing, L., Conezio, J., & Haber, R. N. (1970). Perception and memory for pictures: Single-trial learning of 2500 visual stimuli. Psychonomic Science, 19, 73-74.

Treisman, A., & Gelade, G. (1980). A feature integration theory of attention. Cognitive Psychology, 12, 97-136.

Wayand, J. W., Levin, D. T., & Varakin, D. A. (2005). Inattentional blindness for a noxious multimodal stimulus. American Journal of Psychology, 118, 339-352.

Winer, G. A., & Cottrell, J. E. (2004). The odd belief that rays exit the eye during vision. In D. T. Levin (Ed.), Thinking and seeing: Visual metacognition in adults and children (pp. 97-120). Cambridge, MA: MIT Press.

Winer, G. A., Cottrell, J. E., Karefilaki, K. D., & Chronister, M. (1996). Images, words, and questions: Variables that influence beliefs about vision in children and adults. Journal of Experimental Child Psychology, 63, 499-525.
