
Basic Statistics For Doctors

Singapore Med J 2003 Vol 44(6) : 280-285

Biostatistics 101:

Data Presentation

Y H Chan

INTRODUCTION

Now we are at the last stage of the research process(1): Statistical Analysis & Reporting. In this article, we will discuss how to present the collected data; the forthcoming write-ups will highlight the appropriate statistical tests to be applied.

The terms Sample & Population; Parameter & Statistic; Descriptive & Inferential Statistics; Random variables; Sampling Distribution of the Mean; and Central Limit Theorem can be read up in the references indicated(2-11).

To be able to correctly present descriptive (and inferential) statistics, we have to understand the two data types (see Fig. 1) that are usually encountered in any research study.

Fig. 1 Data Types.

Quantitative (takes numerical values)

- discrete (whole numbers), e.g. Number of children
- continuous (takes decimal places), e.g. Height, Weight

Qualitative (takes coded numerical values)

- ordinal (ranking order exists), e.g. Pain severity
- nominal (no ranking order), e.g. Race, Gender

Clinical Trials and Epidemiology Research Unit, 226 Outram Road, Blk A #02-02, Singapore 169039. Y H Chan, PhD, Head of Biostatistics. Correspondence to: Y H Chan. Tel: (65) 6317 2121. Fax: (65) 6317 2122. Email: chanyh@cteru.gov.sg

There are many statistical software programs available for analysis (SPSS, SAS, S-plus, STATA, etc). SPSS 11.0 was used to generate the descriptive tables and charts presented in this article.

It is of utmost importance that data cleaning

needed to be carried out before analysis. For quantitative

variables, out-of-range numbers needed to be weeded

out. For qualitative variables, it is recommended

to use numerical-codes to represent the groups;

eg. 1 = male and 2 = female, this will also simplify the

data entry process. The danger of using string/text

is that a small male is different from a big Male,

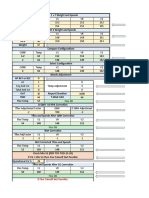

see Table I.

Table I. Using Strings/Text for Categorical variables.

| Valid | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|
| female | 38 | 50.0 | 50.0 | 50.0 |
| male | 13 | 17.1 | 17.1 | 67.1 |
| Male | 25 | 32.9 | 32.9 | 100.0 |
| Total | 76 | 100.0 | 100.0 | |
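The effect shown in Table I can be reproduced with a minimal Python sketch. The list `entries` is made-up data chosen only to match the counts in Table I, and `normalise` and `CODES` are hypothetical names used for illustration; the numeric codes follow the 1 = male, 2 = female convention suggested above.

```python
from collections import Counter

# Illustrative data only: counts chosen to match Table I.
entries = ["female"] * 38 + ["male"] * 13 + ["Male"] * 25

# Raw text is case-sensitive, so "male" and "Male" become separate groups.
raw_counts = Counter(entries)

# Numeric codes avoid the problem (1 = male, 2 = female).
CODES = {"male": 1, "female": 2}

def normalise(value):
    """Map a free-text entry to its numeric code, ignoring case and spaces."""
    return CODES[value.strip().lower()]

coded_counts = Counter(normalise(v) for v in entries)

print(len(raw_counts), "groups from raw text")
print(len(coded_counts), "groups from numeric codes")
```

Entering the codes directly at data entry, rather than repairing text afterwards, is the simpler route the article recommends.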

Researchers are encouraged to discuss the database set-up with a biostatistician before data entry, so that data analysis can proceed without much anguish (more for the biostatistician!). One common mistake is the systolic/diastolic blood pressure being entered as 120/80, which should be entered as two separate variables.
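If blood pressure has already been keyed in as a single text field, it can be split afterwards. The following is a small sketch only; `split_bp` is a hypothetical helper name and it assumes every reading has exactly the form "systolic/diastolic".

```python
def split_bp(reading):
    """Split a 'systolic/diastolic' string such as '120/80' into two integers."""
    systolic, diastolic = reading.split("/")
    return int(systolic), int(diastolic)

sbp, dbp = split_bp("120/80")
print(sbp, dbp)  # 120 80
```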

To do this data cleaning, we generate frequency tables (in SPSS: Analyse → Descriptive Statistics → Frequencies) and check that there are no strange values (see Table II).

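Table II. Height of subjects.

| Valid | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|
| 1.30 | 20 | 26.3 | 26.3 | 26.3 |
| 1.40 | 14 | 18.4 | 18.4 | 44.7 |
| 1.50 | 28 | 36.8 | 36.8 | 81.6 |
| 1.60 | 10 | 13.2 | 13.2 | 94.7 |
| 1.70 | 3 | 3.9 | 3.9 | 98.7 |
| 3.70 | 1 | 1.3 | 1.3 | 100.0 |
| Total | 76 | 100.0 | 100.0 | |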

Someone is 3.7 m tall! Note that statistics cannot check the correctness of plausible values, for example whether subject number 113, entered as 1.6 m, is actually 1.5 m in height. This can only be done manually by checking against the data on the clinical record forms (CRFs). (Take note: all subjects must be key-coded; the subject's name, i/c no, address and phone number should not be in the dataset; the researcher should keep a separate record for his/her eyes only.)
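The frequency-table check can also be sketched outside SPSS. In the Python below, `heights` is illustrative data built to match Table II (the frequencies 3 and 1 for the last two rows are inferred from the percentages), and the limits `LOW` and `HIGH` are an assumed plausible range, not values from the article.

```python
from collections import Counter

# Illustrative heights (m) with the same frequencies as Table II.
heights = [1.30] * 20 + [1.40] * 14 + [1.50] * 28 + [1.60] * 10 + [1.70] * 3 + [3.70]

def frequency_table(values):
    """Return rows of (value, frequency, percent, cumulative percent)."""
    counts = Counter(values)
    total = len(values)
    rows, cumulative = [], 0
    for value in sorted(counts):
        cumulative += counts[value]
        rows.append((value, counts[value],
                     round(100 * counts[value] / total, 1),
                     round(100 * cumulative / total, 1)))
    return rows

# Assumed plausible range for height in metres (an illustrative choice).
LOW, HIGH = 0.5, 2.5
out_of_range = [h for h in heights if not LOW <= h <= HIGH]

for row in frequency_table(heights):
    print(row)
print("Out of range:", out_of_range)
```

A range check of this kind flags the 3.7 m entry, but it cannot detect a plausible value that was simply mistyped.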


DESCRIPTIVE STATISTICS

Statistics are used to summarise a large set of data by a few meaningful numbers. We know that it is not possible to study the whole population (cost and time constraints), thus a sample (large enough(12)) is drawn. How do we describe the population from the sample data? We shall discuss only the descriptive statistics and graphs which are commonly presented in medical research.

Quantitative variables

Measures of Central Tendency

A simple point-estimate for the population mean is the sample mean, which is just the average of the data collected.

A second measure is the sample median, which is the ranked value that lies in the middle of the data. E.g. 3, 13, 20, 22, 25: median = 20; e.g. 3, 13, 13, 20, 22, 25: median = (13 + 20)/2 = 16.5. It is the point that divides a distribution of scores into two equal halves.

The last measure is the mode, which is the most frequently occurring number. E.g. 3, 13, 13, 20, 22, 25: mode = 13. It is usually more informative to quote the mode accompanied by the percentage of times it occurred; e.g. the mode is 13 with 33% of the occurrences.
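The three measures can be checked on the six-value example above with Python's standard `statistics` module. This is only a worked illustration of the arithmetic, not part of the original SPSS workflow.

```python
from statistics import mean, median, mode

data = [3, 13, 13, 20, 22, 25]

print("mean:", mean(data))
print("median:", median(data))  # (13 + 20) / 2 = 16.5
print("mode:", mode(data))      # 13

# Percentage of observations equal to the mode (2 out of 6).
share = 100 * data.count(mode(data)) / len(data)
print("mode share (%):", round(share))  # 33
```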

In medical research, mean and median are usually presented. Which measure of central tendency should we use? Fig. 2 shows the three types of distribution for quantitative data.

Fig. 2 Distributions of Quantitative Data.

It is obvious that if the distribution is normal, the mean will be the measure to be presented; otherwise the median would be more appropriate.

How do we check for normality?

It is important that we check the normality of the quantitative outcome variable, as this allows us not only to present the appropriate descriptive statistics but also to apply the correct statistical tests. There are three ways to do this, namely graphs, descriptive statistics using skewness and kurtosis, and formal statistical tests. We shall use three datasets (right skew, normal and left skew) on the ages of 76 subjects to illustrate.

Graphs

Histograms and Q-Q plots

The histogram is the easiest way to observe non-normality, i.e. if the shape is definitely skewed, we can confirm non-normality instantly (see Fig. 3). One command for generating histograms in SPSS is Graphs → Histogram (other ways are via Frequencies or Explore).

Another graphical aid to help us decide on normality is the Q-Q plot. Once again, it is easier to spot non-normality. In SPSS, use Explore or Graphs → Q-Q plots to produce the plot. This plot compares the quantiles of a data distribution with the quantiles of a standardised theoretical distribution from a specified family of distributions (in this case, the normal distribution). If the distributional shapes differ, then the points will plot along a curve instead of a line. Take note that the interest here is the central portion of the line: severe deviations mean non-normality. Deviations at the ends of the curve signify the existence of outliers. Fig. 3 shows the histograms and their corresponding Q-Q plots of the three datasets.
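To make the construction of a Q-Q plot concrete, the sketch below pairs each ordered observation with the quantile expected under a fitted normal distribution. The plotting position (i - 0.5)/n is one common convention and is an assumption here (SPSS may use a different one); `qq_points` is a hypothetical helper and `ages` is made-up data.

```python
from statistics import NormalDist, mean, stdev

def qq_points(sample):
    """Pair each ordered observation with its theoretical normal quantile."""
    ordered = sorted(sample)
    n = len(ordered)
    fitted = NormalDist(mean(ordered), stdev(ordered))
    # Plotting position (i - 0.5) / n: an assumed, commonly used convention.
    theoretical = [fitted.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return list(zip(theoretical, ordered))

ages = [21, 22, 22, 23, 23, 23, 24, 24, 25, 40]  # made-up, right-skewed
for expected, observed in qq_points(ages):
    print(f"{expected:6.2f}  {observed}")
```

If the data were normal, the two columns would agree closely; here the largest observation sits well above its expected quantile, which is the kind of deviation at the end of the line that the text associates with outliers.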

Descriptive statistics using skewness and kurtosis

Fig. 3 shows the three types of skewness (right: skew > 0, normal: skew ~ 0 and left: skew < 0). Skewness typically ranges from -3 to 3. The acceptable range for normality is skewness lying between -1 and 1. Normality should not be based on skewness alone; the kurtosis measures the peakedness of the bell-curve (see Fig. 4). Likewise, the acceptable range for normality is kurtosis lying between -1 and 1. The corresponding skewness and kurtosis values for the three illustrative datasets are shown in Fig. 3.

Fig. 3 Histograms and Q-Q plots of the three datasets.

- Right skew: Skew = 1.47, Kurtosis = 2.77
- Normal: Skew = 0.31, Kurtosis = -0.32
- Left skew: Skew = -0.71, Kurtosis = -0.47
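The -1 to 1 guideline can be applied with a short Python sketch. The formulas below are the sample-adjusted skewness and excess kurtosis used by many packages; that they match what SPSS reports is an assumption, and the function names are hypothetical.

```python
from statistics import mean, stdev

def skewness(x):
    """Sample-adjusted skewness (about 0 for symmetric data)."""
    n, m, s = len(x), mean(x), stdev(x)
    return n / ((n - 1) * (n - 2)) * sum(((v - m) / s) ** 3 for v in x)

def excess_kurtosis(x):
    """Sample-adjusted excess kurtosis (about 0 for a normal curve)."""
    n, m, s = len(x), mean(x), stdev(x)
    fourth = sum(((v - m) / s) ** 4 for v in x)
    return (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * fourth
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))

def within_normal_range(x, limit=1.0):
    """Apply the guideline: both values must lie between -limit and +limit."""
    return abs(skewness(x)) <= limit and abs(excess_kurtosis(x)) <= limit

sample = [1, 1, 1, 2, 10]  # made-up, right-skewed
print(round(skewness(sample), 2), round(excess_kurtosis(sample), 2))
print(within_normal_range(sample))
```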

Fig. 4 Kurtosis (curves with kurtosis > 0, kurtosis ~ 0 and kurtosis < 0).

Table IV. Flowchart for normality checking.

1. Small samples* (n<30): always assume not normal.
2. Moderate samples (30-100). If the formal test is significant, accept non-normality; otherwise double-check using graphs, skewness and kurtosis to confirm normality.
3. Large samples (n>100). If the formal test is not significant, accept normality; otherwise double-check using graphs, skewness and kurtosis to confirm non-normality.

* Reminder: not ethical to do small sized studies(12).
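The Table IV guidelines can be written as a small decision function. This is one possible reading of the flowchart, not the author's code: the function and parameter names are hypothetical, the 0.05 significance level is assumed, and the result of the graphical and skewness/kurtosis check is passed in as a yes/no judgement made by the analyst.

```python
def assess_normality(n, formal_test_p, checks_look_normal, alpha=0.05):
    """Return True if the data may be treated as normal under Table IV.

    n                  -- sample size
    formal_test_p      -- p-value from a formal normality test
    checks_look_normal -- analyst's judgement from graphs, skewness, kurtosis
    alpha              -- assumed significance level
    """
    significant = formal_test_p < alpha
    if n < 30:
        return False                  # small samples: always assume not normal
    if n <= 100:                      # moderate samples
        if significant:
            return False              # accept non-normality
        return checks_look_normal     # double-check to confirm normality
    if not significant:               # large samples
        return True                   # accept normality
    return checks_look_normal         # double-check to confirm non-normality

print(assess_normality(76, 0.325, True))   # True
print(assess_normality(76, 0.000, True))   # False
```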

Formal statistical tests

Kolmogorov-Smirnov one-sample test and Shapiro-Wilk test

Here the null hypothesis is: the data are normal.

Table III. Normality tests.

| | Kolmogorov-Smirnov Statistic | df | Sig. | Shapiro-Wilk Statistic | df | Sig. |
|---|---|---|---|---|---|---|
| Right skew | 0.187 | 76 | 0.000 | 0.884 | 76 | 0.000 |
| Normal | 0.079 | 76 | 0.200 | 0.981 | 76 | 0.325 |
| Left skew | 0.117 | 76 | 0.012 | 0.927 | 76 | 0.000 |

From the p-values (Sig.) in Table III, both Right skew and Left skew are not normal (as expected!). To test for normality in SPSS, use the Explore command (this will also generate the Q-Q plot). One caution in using the formal tests is that they are very sensitive to the sample size of the data.

For small samples (n<20, say), the likelihood of getting p<0.05 is low, and for large samples (n>100), a slight deviation from normality will result in the rejection of the null hypothesis! Urghh... I know this is so confusing! So, normal or not? Perhaps Table IV will shed some light on our checking for normality. Take note that the sample sizes suggested are only guidelines.
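For readers curious about what the Kolmogorov-Smirnov statistic measures, the sketch below computes it as the largest gap between the empirical distribution of the data and a normal curve fitted to the same data. It computes the statistic only; converting it to a p-value needs the appropriate reference distribution, which is not attempted here. `ks_statistic` is a hypothetical helper and both datasets are made up.

```python
from statistics import NormalDist, mean, stdev

def ks_statistic(sample):
    """Largest gap between the empirical CDF and a fitted normal CDF."""
    ordered = sorted(sample)
    n = len(ordered)
    fitted = NormalDist(mean(ordered), stdev(ordered))
    d = 0.0
    for i, value in enumerate(ordered, start=1):
        cdf = fitted.cdf(value)
        # Check the gap just after and just before each step of the empirical CDF.
        d = max(d, i / n - cdf, cdf - (i - 1) / n)
    return d

# Made-up data: evenly spaced normal quantiles versus squared integers.
symmetric = [NormalDist().inv_cdf((i - 0.5) / 50) for i in range(1, 51)]
skewed = [i ** 2 for i in range(1, 51)]

print(round(ks_statistic(symmetric), 3), round(ks_statistic(skewed), 3))
```

The skewed data give the larger statistic, in the same direction as the Right skew and Left skew rows of Table III.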

Measures of Spread

The measures of central tendency give us an indication of the typical score in a sample. Another important descriptive statistic to be presented for quantitative data is its variability: the spread of the data scores.

The simplest measure of variation is the range, which is given by the difference between the maximum and minimum scores of the data. However, this does not tell us what's happening in between these scores.

A popular and useful measure of spread is the standard deviation (sd), which tells us how closely the scores in a dataset cluster around the mean. Thus we would expect the sd of the age distribution of a primary one class of pupils to be zero (or at least a small number). A large sd is indicative of more varied data scores. Fig. 5 shows the spread of two distributions with the same mean.

Fig. 5 Measures of Spread: standard deviations (two curves with the same mean, sd = 1.0 and sd = 2.0).
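As a small illustration of range and sd, the Python below compares two made-up age lists: a primary one class where every pupil is the same age, and a hypothetical adult clinic sample. Both lists are assumptions for illustration only.

```python
from statistics import mean, stdev

class_ages = [7, 7, 7, 7, 7, 7]          # made-up: a primary one class
clinic_ages = [25, 31, 38, 44, 52, 60]   # made-up: adult outpatients

for label, ages in [("class", class_ages), ("clinic", clinic_ages)]:
    data_range = max(ages) - min(ages)   # maximum minus minimum
    print(label, "mean:", mean(ages), "range:", data_range,
          "sd:", round(stdev(ages), 2))
```

The class has a range and sd of zero, while the clinic sample shows a wide range and a correspondingly larger sd.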

For a normal distribution, the mean coupled with the sd should be presented. Fig. 6 gives us an indication of the percentage of data covered within one, two and three standard deviations respectively.

Fig. 6 Distribution of data for a normal curve (68%, 95% and 99% of the data within one, two and three standard deviations respectively).
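The percentages in Fig. 6 can be checked from the standard normal distribution. The sketch below uses Python's `statistics.NormalDist`; it gives roughly 68.3%, 95.4% and 99.7%, which the figure shows as rounded values.

```python
from statistics import NormalDist

z = NormalDist()  # standard normal: mean 0, sd 1

for k in (1, 2, 3):
    # Proportion of a normal distribution within k sd of the mean.
    coverage = z.cdf(k) - z.cdf(-k)
    print(k, "sd:", round(100 * coverage, 1), "%")
```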

Here comes the million dollar question: does a small sd imply good research data? I believe most of you (at least 90%) would say yes! Well, you are partly right: it depends.

For the age distribution of the subjects enrolled in your research study, you would not want the sd to be small, as this would imply that the results obtained could not be generalised to a larger age-range group.

On the other hand, you would hope that the sd of the difference in outcome response between two treatments (active vs control) is small. This shows the consistency of the superiority of the active over the control (hopefully in the right direction!).

Interval Estimates (Confidence Interval)

The accuracy of the above point estimates is dependent on the sampling plan of the study (the assumption that a representative sample is obtained). If we were allowed to repeat a study (with fixed sample size) many times, the mean and sd obtained for each study may be different, and from the theory of the Sampling Distribution of the Mean, the mean of all the means of the repeated samples will give us a more precise point estimate for the population mean.

In medical research, we do not have this luxury of doing repeated studies (ethical and budget constraints), but from the Central Limit Theorem, with a sample large enough(12), an interval estimate provides us with a range of scores within which we are confident, usually at the 95% level, that the population mean lies: the 95% Confidence Interval (CI).

Using the Explore command in SPSS, the CI at any percentage can be easily obtained. For a simple (large sample) 90% or 95% CI calculation for the population mean, use

$$\text{sample mean} \pm c \times \text{sem}$$

where $c = 1.645$ or $1.96$ for a 90% or 95% CI respectively, and sem (standard error of the mean) $= \text{sd}/\sqrt{n}$ (where $n$ is the sample size).
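The large-sample formula can be sketched in a few lines of Python. The function name `confidence_interval` and the example data are hypothetical; the multiplier c is taken from the standard normal distribution, which reproduces the 1.645 and 1.96 quoted above.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def confidence_interval(sample, level=0.95):
    """Large-sample CI for the mean: sample mean +/- c * sd / sqrt(n)."""
    c = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%, 1.645 for 90%
    sem = stdev(sample) / sqrt(len(sample))    # standard error of the mean
    centre = mean(sample)
    return centre - c * sem, centre + c * sem

ages = list(range(1, 101))  # made-up data, n = 100
low, high = confidence_interval(ages)
print(round(low, 1), round(high, 1))
```

For small samples a multiplier from the t distribution would normally be used instead; that case is outside this large-sample sketch.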

For example, the mean difference in BP reduction between an active treatment and control is 7.5 (95% CI 1.5 to 13.5) mmHg. It looks like the active is fantastic with a 7.5 mmHg reduction, but the wide confidence interval (width 12 = 13.5 - 1.5) suggests that the study was possibly conducted with a small sample size or that the variation of the difference was large. Thus from the CI, we are able to assess the quality of the results.
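The arithmetic behind this example can be worked backwards. Assuming the interval was built with the large-sample formula and c = 1.96 (an assumption, since the article does not say how it was computed), the half-width of the CI gives the implied standard error:

```python
lower, upper, estimate = 1.5, 13.5, 7.5  # mmHg, from the example above
c = 1.96                                 # assumed multiplier for a 95% CI

width = upper - lower          # 12.0
half_width = width / 2         # 6.0
implied_sem = half_width / c   # about 3.06 mmHg
print(width, half_width, round(implied_sem, 2))
```

Since sem = sd divided by the square root of n, a standard error this large relative to the 7.5 mmHg estimate can come from either a small n or a large sd, which is the point made in the text.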

When should the usual 95% CI be presented? Surely for treatment differences, it should be specified. How about variables like age? There's no need for age in demographics, but if we are presenting the age of risk of having a disease, for example, then a 95% CI would make sense.

The error bar plot is a convenient way to show the CI, see Fig. 7.

Fig. 7 Error bar plot (Age, for female and male subjects).

Qualitative variables

For categorical variables, frequency tables would suffice. For ordinal variables, the correct order of coding should be used (for example: no pain = 0, mild pain = 1, etc). Graphical presentations will be bar or pie charts (we will not show any examples as these plots are familiar to all of us).

CONCLUSIONS

The above discussion on the presentation of data is by no means exhaustive. Further readings(2-11) are encouraged. A recommended table for presenting demographics in an article for journal publication is:

| | Group A | Group B | p-value |
|---|---|---|---|
| Quantitative variable (e.g. age) | | | |
| Mean (sd) | | | |
| Range | | | |
| Median | | | |
| Qualitative variable (e.g. sex) | | | |
| Male | n1 (%) | n2 (%) | |
| Female | n3 (%) | n4 (%) | |


We shall discuss the statistical analysis of quantitative data in our next issue (Biostatistics 102: Quantitative Data - Parametric and Non-Parametric tests).

REFERENCES

1. Chan YH. Randomised controlled trials (RCTs) - Essentials. Singapore Medical Journal 2003; Vol 44(2):60-3.
2. Dawson-Saunders B, Trapp RG. Basic and clinical biostatistics. Prentice Hall International Inc, 1990.
3. Bowers D. Statistics from scratch for Health Care professionals. John Wiley and Sons, 1997.
4. Bowers D. Statistics further from scratch for Health Care professionals. John Wiley and Sons, 1997.
5. Pagano M, Gauvreau K. Principles of biostatistics. Duxbury Press, Wadsworth Publishing Company, 1993.
6. Fisher LD, Van Belle G. Biostatistics: a methodology for the health sciences. John Wiley & Sons, 1993.
7. Campbell MJ, Machin D. Medical statistics: a commonsense approach. John Wiley & Sons, 1999.
8. Bland M. An introduction to medical statistics. Oxford University Press, 1995.
9. Armitage P, Berry G. Statistical methods in medical research. 3rd edition, Blackwell Science, 1994.
10. Altman DG. Practical statistics for medical research. Chapman and Hall, 1991.
11. Gonick L, Smith W. The cartoon guide to statistics. HarperCollins Publishers, Inc, 1993.
12. Chan YH. Randomised controlled trials (RCTs) - Sample size: the magic number? Singapore Medical Journal 2003; Vol 44(4):172-4.
