Professional Documents

Culture Documents

Training Images-Based Stochastic Simulation

Uploaded by

PrashantDhoteCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Training Images-Based Stochastic Simulation

Uploaded by

PrashantDhoteCopyright:

Available Formats

PUBLISHED BY

World's largest Science,

Technology & Medicine

Open Access book publisher

2,850+

OPEN ACCESS BOOKS

BOOKS

DELIVERED TO

151 COUNTRIES

97,000+

INTERNATIONAL

AUTHORS AND EDITORS

AUTHORS AMONG

TOP 1%

MOST CITED SCIENTIST

91+ MILLION

DOWNLOADS

12.2%

AUTHORS AND EDITORS

FROM TOP 500 UNIVERSITIES

Selection of our books indexed in the

Book Citation Index in Web of Science

Core Collection (BKCI)

Chapter from the book Modeling and Simulation in Engineering Sciences

Downloaded from: http://www.intechopen.com/books/modeling-and-simulation-inengineering-sciences

Interested in publishing with InTechOpen?

Contact us at book.department@intechopen.com

Chapter 7

Training Images-Based Stochastic Simulation on

Many-Core Architectures

Tao Huang and Detang Lu

Additional information is available at the end of the chapter

http://dx.doi.org/10.5772/64276

Abstract

In the past decades, multiple-point geostatistical methods (MPS) are increasing in

popularity in various fields. Compared with the traditional techniques, MPS techni

ques have the ability to characterize geological reality that commonly has complex

structures such as curvilinear and long-range channels by using high-order statistics for

pattern reconstruction. As a result, the computational burden is heavy, and some

times, the current algorithms are unable to be applied to large-scale simulations. With

the continuous development of hardware architectures, the parallelism implementa

tion of MPS methods is an alternative to improve the performance. In this chapter, we

overview the basic elements for MPS methods and provide several parallel strategies

on many-core architectures. The GPU-based parallel implementation of two efficient

MPS methods known as SNESIM and Direct Sampling is detailed as examples.

Keywords: geostatistics, multiple point, stochastic simulation, training image, manycore architecture

1. Introduction

Geostatistical stochastic simulation is important for the research of geological phenomenon. In

the past several decades, a large number of geostatistical methods have been developed based

on the spatial covariance properties of the geological data. The traditional tool to quantify the

spatial covariance is known as variogram, which measures the covariance among any two points

separated by a certain distance [1]. Although variogram-based methods are successfully applied

to multi-Gaussian system, they have limitations for the characterization of complex systems

such as the curvilinear or long-range continuous facies [24]. An alternative known as multiple-

2016 The Author(s). Licensee InTech. This chapter is distributed under the terms of the Creative Commons

Attribution License (http://creativecommons.org/licenses/by/3.0), which permits unrestricted use, distribution,

and reproduction in any medium, provided the original work is properly cited.

142

Modeling and Simulation in Engineering Sciences

point geostatistical (MPS) simulation was proposed to produce geologically realistic structure

by using high-order spatial statistics based on conceptual training images [5, 6]. The concept of

training image is introduced from explicit to represent geological structures with a numeral

image generated from outcrops, expert sketching, or conditional simulations of variogrambased methods. Since the training images can incorporate additional information such as the

expert guesses, prior database, and physical models, besides the spatial features, the simula

tion using TIs is straightforward and smart [7].

Due to the ability of reconstructing geological realistic, MPS methods are gaining popularity,

and various algorithms have been proposed including pixel-based algorithms [5, 8, 9], patternbased algorithms [1013], and optimal algorithms [1416]. These algorithms have been applied

to broad fields such as oil and gas industry [1719], fluid prediction [20, 21], climate modeling

[22]. However, some application suffers from the computational burden routinely. Since MPS

methods need to scan the training image, abstract patterns, and reconstruct the patterns in the

simulation grid, physical memory and running time are challenging or even unusable for largescale or pattern-rich simulation models.

Many effects have been made to decrease the central processing units (CUP) and RAM expense.

Approaches such as multiple grid technique [23], optimization of data storage with a list

structure [24], hierarchical decomposing [25], Fourier space transform [26] have been intro

duced, while the computational burden remains heavy for very large grids and complex spatial

models.

With the development of hardware, utilization of multiple-core central processing units (CPU),

or graphic processing units (GPU), for parallel applications are increasing in popularity in

various fields including geostatistical simulation. In 2010, Mariethoz investigated the possi

bility to parallelize the MPS simulation process on realization level, path-level, or node-level

and proposed a general conflict management strategy [27]. This strategy has been implement

ed on a patch-based SIMPAT method [28]. Parallel implements for other geostatistical

algorithms, such as the parallel two-point geostatistical simulation [29], parallel pixel-based

algorithms [30], and parallel optimal algorithms [31] have been proposed constantly.

In this article, we will present the parallel several schemes of MPS simulation on many-core

and GPU architectures. The Compute Unified Device Architecture (CUDA) that provides

access between CUPs and GPUs is used to illustrate the parallel strategies [32, 33]. Examples

of the two general MPS algorithms known as SENSIM and DS are implemented and compared

[34, 35] with the original algorithms to present the ability of pattern reproduction and the

improvement of computational performance.

2. Methodology

Currently, geostatistical simulations are processed with a large number of MPS algorithms

using various techniques. Besides the difference in process definition and algorithmic imple

mentation, these algorithms share similarities of the fundamental elements [36].

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

2.1. General framework

Generally, the overall framework of these algorithms is constructed as follows:

1.

Migrate conditioning points to the corresponding grid nodes.

2.

Define data template to abstract or clustering patterns from the training image.

3.

Define a simulation path for the nodes to be simulated.

4.

Using the conditioning data and previous simulated nodes as priori data to sample from

the training image.

5.

Place the sample to the simulation grid.

6.

Repeat threefive until meeting the stopping criterion.

For each algorithm, one or some of the basic steps may have some identity. In this section, we

will focus on the two algorithms following different theory, single normal equation simulation

(SNESIM) and DS, to illustrate the application of parallel strategies on MPS simulations.

2.2. SNESIM review

The methodology of single normal equation simulation (SNESIM) is a sequential paradigm

developed from the random concepts theory with the property of generating conditional

distribution with local conditioning instead of global conditioning. The local conditional

distribution is aggregated with the local data event. Considering a category property S with

K possible states {s(k), k = 1, , K}, a data template n is comprised of a geometry of n vectors

{h, = 1, , n}. The data event dn centered at u is defined with the template and the corre

sponding n values {s(u + h), = 1, , n}. Consequently, the conditional probability of the

occurrence of state sk denoted as Prob{S(u) = sk|S(u), = 1 n} or Prob{S(u) = sk|dn} is defined

following Bayes theorem:

Prob{S(u)=s k |d n }=

Prob{S(u)=s k and d n }

Prob{d n }

(1)

Where Prob{dn} and Prob{S(u) = sk and dn} are the probability of the occurrence of dn and the

associated to a central value S(u) = sk respectively. In practice, this probability can be obtained

by counting the number of replicates of the training image, which is calculated by:

Prob{S(u)=s k |d n }=

c k (d n )

c(d n )

(2)

c{dn} is the number of replicates of the conditioning data dn, and ck{dn} is the number of

replicates inferred from ck(dn) of which the central node S{u} has a value of s(k).

143

144

Modeling and Simulation in Engineering Sciences

To reduce the computational burden of scanning the training image, SNESIM analyzes the

training image and constructs a search tree to store all the patterns before sequential simula

tion. Then the same data template is used to aggregate the local distribution of the simulation

grid for sampling values from the data base to the simulation grid.

The implementation of SNESIM algorithm is shown in Figure 1.

Figure 1. Flowchart for SNESIM process.

As described, the memory consumption is largely related to the size of data template and the

pattern richness of the training image. At the same time, the pattern retrieving time also

increases for the complex cases. To address this problem, a GPU-based parallel method is

proposed in the following section.

2.3. Direct Sampling review

Direct Sampling is a novel MPS technique that borrows ideas from a sampling method

introduced by Shannon totally abandoning the conditional probability approach. Instead of

the conditional distribution, a measurement distance is used to calculate the similarity between

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

the local conditioning and the data event got from the training image along a random path.

The distance method enables the application to both categorical and continuous variables.

During the sequential simulation, for each node to be simulated in the simulation grid, the

training image is scanned along a circular unilateral path [37] and the distance is calculated.

As long as the distance is smaller than the defined threshold, the current data event in the

training image is sampled, and the central value is assigned to the node to be simulated in the

simulation grid directly. The flowchart of Direct Sampling is shown in Figure 2.

Figure 2. Flowchart for Direct Sampling process.

145

146

Modeling and Simulation in Engineering Sciences

Since this method does not need to store patterns, it releases the memory intensity; on the other

hand, parameter is the key factor for Direct Sampling.

There are three main input parameters:

1.

Maximum number of closest neighbors n, namely, the size of data template of SNESIM.

2.

The distance threshold t. A value of t = 0 means a trend of verbatim copy of the training

and the increasing value introduce more variabilities between realizations in pattern

reproduction.

3.

The fraction of scanned TI f. The fraction is defined to stop the process of scanning the

training image while no distances are under the threshold. If the percentage of nodes in

the training image reach f, the scanning stops and the pattern with lowest distance is

sampled.

Sensitivity analysis of parameters [38] shows trade-offs between the quality of realizations and

the CPU times.

3. Many-core architectures

3.1. Overview

A computing component that featured with two or more processing units to execute program

instructions independently is known as a multicore processor. With the ability of running

multiple instructions at the same time, multicore processors increase overall speed for many

general-purpose computing. Currently, adding support for more execution threads is the norm

avenue to improve the performance of high-end processors. The many-core architectures are

formed by manufacturing massive multicores on a single component. For general-purpose

parallel computing, many-core architectures on both the central processing unit (CPU) and the

graphics processing unit (GPU) are available for different tasks.

Compared with a many-core CPU architecture known as a supercomputer, the general GPU

has many more cores, which are constructively cheap and suitable for intensive computing.

3.2. GPU and CUDA

Originally, a GPU is a graphic card attached with a cluster of streaming processors aimed at

graphic-oriented details that needs the ability of extremely fast processing of large-volume

data sets. To apply the special-purpose GPU to general-purpose application, NVIDIA provides

a user-friendly development environment that is Compute Unified Device Architecture

(CUDA). The CUDA platform enables the generation of parallel codes on GPUs by driving the

process from the CPU to GPU. A CUDA program is a unified code that consists of executions

on both the host (CUP) and the device (GPU) by CUDA kernel functions that are called out

from the host to the device for asynchronously execution. The massive parallelism is carried

out in each kernel on CUDA threads that are the basic executing units on the GPU. The CUDA

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

provides an interface named Peripheral Component Interconnect Express (PCI-e) for the

intercommunication between host and device and shared memory for synchronization among

the parallel threads. The general architecture of CUDA and CUDA memory is illustrated in

Figures 3 and 4. More details could be referred from the Guides of NVIDA.

Figure 3. The general architecture of CUDA.

Figure 4. CUDA device memory organization.

147

148

Modeling and Simulation in Engineering Sciences

4. Parallel strategies

Generally, there are three strategies to implement the parallelism of MPS algorithms, that is,

realization-level, path-level, and the node-level parallelization. The bottleneck for large-scale

application focuses on the millions of nodes in the training image and simulation grids. So we

present two parallel implementations on node-level for SNESIM and on both node-level and

path-level for Direct Sampling in this section.

4.1. GPU-based SNESIM implementation

In 2013, a node-level parallelization was applied to SNESIM algorithm and achieved significant

performance improvement [34]. The overall parallel scheme is illustrated in Figure 5.

Figure 5. Procedure of GPU-based SNESIM implementation.

By transferring the training image and simulation grid from the CPU to GPU, each node is

assigned to a CUDA thread. For each unsimulated node along the random simulation path,

Kernel 1 compares each value of the given data template dn with every central nodes in the

training image simultaneously and returns the number of replicates ck(dn) for each stage k.

With these outputs, Kernel 2 calculates the conditional cumulative distribution function

(CCDF) and uses Monte Carlo sampling to draw a value to this node. Repeat the kernels along

a random path until all the nodes in the simulation grid are simulated. Transfer the results

from GPU to CPU.

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

Since the most time-consuming part, that is getting data event for each node, is parallelized in

Kernel 1, this GPU-based implementation gains significant speedup. Moreover, in contrast

with the increasing physical memory demanding along the template sizes of original imple

mentation, the proposed GPU implementation fixes the amount of memory.

4.2. GPU-based Direct Sampling implementation

Similar parallelization could also be applied to the Direct Sampling algorithm. Besides the

parallelism of the data template, the searching fraction could also be parallelized. The twostage parallel scheme was implemented in 2013 [35]. The procedure of the GPU-based

implementation is illustrated in Figure 6.

Figure 6. Procedure of GPU-based Direct Sampling implementation.

149

150

Modeling and Simulation in Engineering Sciences

As described, there are three important parameters controlling the simulation of Direct

Sampling, that is t, n, and f. This scheme implements the parallelism on two of the three

parameters that is n and f.

4.2.1. Parallelism of n

The number of neighbor is the n closest previously simulated nodes to define a data template.

Different from the data template used in SNESIM that is controlled by the predefined geom

etry, the data templates consisted of the n closest simulation nodes and have the flexibility of

adaption shape and searching range. Due to these flexibilities, this approach can directly catch

large-scale structures and easily condition to hard data. On the other hand, the searching for

the n neighbors is also the most time-consuming part in the serial implementation.

The parallelism of n is achieved in Kernel 2 and Kernel 3. In Kernel 2, each previously simulated

node is allocated to a CUDA thread and calculates the Euclidean distance to the node to be

simulated simultaneously. These distances are transferred to Kernel 3 and sorted using a

parallel sorting algorithm.

4.2.2. Parallelism of f

In the serial program if the similarity-distance between data events of the simulation grid and

the one sampled from the current training image node is higher than the threshold, a new

central node will be sampled from the training image along a random path. Most nodes of the

training image that may be visited is defined as f NTI, as a result, large amount of data event

will be sampled in large-scale 3-days simulations.

The parallelization of f is implemented in Kernel 4 that allocates f NTI threads to f NTI central

nodes in the training image using a unique random path denoting each node. Thus these

similarity distances are calculated simultaneously. There are two possibilities for the similarity

distance and the data sampling strategy is shown as follows:

1.

There are values lower than the threshold t, choose the one that has the smallest path index

from the data events with a lower distance.

2.

There are no values lower than the threshold t, choose the one that has the smallest path

index from the data events with the lowest distance.

Finally, the central value of the chosen data event is assigned to the simulation grid by Kernel

6. Repeat all these kernels until all the nodes in the simulation grid are simulated.

5. Experiments

In this section, the performance of the GPU-based implementations is compared with that

obtained using SGeMS software [39]. A computer with 4 GB main memory, Interal Core i3540

3.07 GHz CPU, and NVIDIA GeForce GTX 680 GPU that contains eight streaming multiproc

essors with 192 CUDA Cores/MP and 2 GB device memory is used for the simulation. The

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

programming platform is the NVIDIA driver version 301.42 with CUDA version 4.2 imple

mented on VS2010 using C++.

To test the performance of the GPU-based SNESIM algorithm, a 2D porous slice image

obtained by CT scanning is used as the training image. The 200 200 pixels training image and

the corresponding histogram for background and the pore are shown in Figure 7.

Figure 7. Training image of porous slice and the Histogram.

Figure 8. SNESIM realization using CUP and GPU. (a)(d) CPU-based realizations with the number template equals

50, 120, 200 and 350, respectively; (e)(h) GPU-based realizations with the number template equals 50, 120, 200 and

350, respectively.

Realizations of the same size as the training image using data template of 50, 120, 200, and 350

nodes are generated for each simulation. The realizations generated with CPU and GPU are

shown in Figure 8. The average variograms for each simulation are shown in Figure 9a, and

151

152

Modeling and Simulation in Engineering Sciences

the performance is shown in Figure 9b. The results show that the proposed GPU-based

algorithm can generate similar realizations as the original algorithm, whereas significantly

increases the performance. The speedup ranges from six to 24 times depending on the template

size than a larger template size resulting in a larger speedup.

Figure 9. Results and performance comparison between the CPU and GPU implementation. (a) Variogram of the train

ing image and the average variogram of the realizations of Figure 8 and (b) speedup obtained by using the GPU-based

parallel scheme.

The performance of the GPU-based Direct Sampling algorithm is also compared with the

original algorithm on a 100 130 by 20 fluvial reservoir training image as shown in Figure 10.

Figure 10. A 100 130 by 20 fluvial reservoir training image.

Parameter sensitivities are analyzed on n = 30, 50, 100, 200, f = 0.005, 0.01, 0.02, 0.05, and t = 0.01,

0.02, 0.05, 0.1, respectively, with which reasonable realizations are generated. The performance

times are shown in Figure 11.

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

Figure 11. Performance comparison. (a) Performance with fixed t = 0.2, f = 0.005 and varying n = 30, 50, 100, and 200; (b)

performance with fixed n = 30, t = 0.02 and varying f = 0.005, 0.01, 0.02, and 0.05; (c) performance with fixed n = 30,

f = 0.005 and varying t = 0.01, 0.02, 0.05, and 0.1.

The results show that GPU-based implementation significantly improves the performance for

all the tests. Moreover, the sensitivity of parameters to performance is alleviated with the

parallel scheme. The time difference is around 200 s for n and f and almost none for t for the

GPU-based implementation, whereas it can be as large as several magnitudes for the CPUbased implementation.

In summary, both the presented GPU-based parallel schemes for SNESIM and Direct Sampling

achieve significant speedups, especially for large-scale simulations with their node-level

parallelism strategy. Moreover, the parallel implementations are insensitive to parameters that

are the key points not only for performance but also for simulation results implying for better

results in application. These strategies could be further improved with other parallel optimi

zation methods as well. In fact, besides the node-level parallel schemes for SNESIM and Direct

Sampling, various parallelisms have been proposed and new optimizations are keeping

introduced aimed at further improvements. Up to now, almost all kinds of MPS algorithms

could be implemented on a parallel scheme. Many-core architectures are the current main

streams to improve the performance of extremely massive computing tasks. The developing

of computer hardware and parallel interface techniques promise the wider and wider utiliza

tion of high-performance parallelization. These parallel schemes of training images-based

stochastic simulations approve the application to high-resolution and large-scale simulations.

153

154

Modeling and Simulation in Engineering Sciences

Acknowledgements

This work was financially supported by the CAS Strategic Priority Research Program under

Grant XDB10030402 and the CNPC-CAS Strategic cooperation Research Program

(2015A-4812) and the Fundamental Research Funds for the Central Universities

(WK2090050038).

Author details

Tao Huang and Detang Lu

*Address all correspondence to: thwang@ustc.edu.cn

University of Science and Technology of China, Hefei, Anhui, China

References

[1] Goovaerts, P. Geostatistical tools for characterizing the spatial variability of microbio

logical and physico-chemical soil properties. Biology and Fertility of Soils. 1998; 27(4):

315334.

[2] Journel, A.G., Geostatistics: Roadblocks and Challenges, in Geostatistics Tria 92:

Volume 1, A. Soares, Editor. 1993, Springer Netherlands: Dordrecht. p. 213224.

[3] Krishnan, S., Journel, A.G. Spatial connectivity: from variograms to multiple-point

measures. Mathematical Geology. 2003;35(8):915925.

[4] Journel, A.G., Beyond Covariance: The Advent of Multiple-Point Geostatistics, in

Geostatistics Banff 2004, O. Leuangthong and C.V. Deutsch, Editors. 2005, Springer

Netherlands: Dordrecht. p. 225233.

[5] Strebelle, S., Journel, A.G. Sequential simulation drawing structures from training

images. Geostatistics Capetown 2000. pp. 381392.

[6] Strebelle, S., Conditional simulation of complex geological structures using multiple

point statistics. Mathematical Geology. 2002;34(1):121.

[7] Hu, L., Chugunova, T. Multiple-point geostatistics for modeling subsurface heteroge

neity: a comprehensive review. Water Resources Research. 2008;44(11):W11413.

[8] Mariethoz, G., Renard, P., Straubhaar, J., The Direct Sampling method to perform

multiplepoint geostatistical simulations. Water Resources Research. 2010;46(11):114.

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

[9] Mustapha, H., Dimitrakopoulos, R., HOSIM: a high-order stochastic simulation

algorithm for generating three-dimensional complex geological patterns. Computers

& Geosciences. 2011;37(9):12421253.

[10] Arpat, G.B. Sequential Simulation with Patterns. Ph.D. Dissertation. Stanford [disser

tation]. Stanford, CA; 2005. 166 p.

[11] Zhang, T., Switzer, P., Journel, A. Filter-based classification of training image patterns

for spatial simulation. Mathematical Geology. 2006;38(1):6380.

[12] Tahmasebi, P., Hezarkhani, A., Sahimi, M. Multiple-point geostatistical modeling

based on the cross-correlation functions. Computational Geosciences. 2012;16(3):779

797.

[13] Mahmud, K., Mariethoz, G., Caers, J., Tahmasebi, P., Baker, A. Simulation of Earth

textures by conditional image quilting. Water Resources Research. 2014;50(4):3088

3107.

[14] Deutsch, C., Wen, X. Integrating large-scale soft data by simulated annealing. Mathe

matical Geology. 2000;32(1):4967.

[15] Caers, J.K., Srinivasan, S., Journel, A.G. Geostatistical quantification of geological

information for a fluvial-type North Sea reservoir. SPE Reservoir Evaluation &

Engineering. 2000;3(5):457467.

[16] Srivastava, M. An overview of stochastic methods of reservoir characterization. AAPG

Computer Applications in Geology. 1995; Tulsa:316.

[17] Okabe, H., Blunt, M.J. Pore space reconstruction using multiple-point statistics. Journal

of Petroleum Science and Engineering. 2005;46(1):121137.

[18] Zhang, T., Bombarde, S., Strebelle, S., Oatney, E. 3D porosity modeling of a carbonate

reservoir using continuous multiple-point statistics simulation. SPE Journal. 2006;11(3):

375379.

[19] Falivene, O., Arbus, P., Gardiner, A., Pickup, G., Muoz, J.A., Cabrera, L. Best practice

stochastic facies modeling from a channel-fill turbidite sandstone analog (the Quarry

outcrop, Eocene Ainsa basin, northeast Spain). AAPG Bulletin. 2006;90(7):10031029.

[20] Hiroshi O, Blunt M J. Pore space reconstruction of vuggy carbonates using microto

mography and multiple-point statistics[J]. Water Resources Research, 2007, 431(12):

179183.

[21] Tahmasebi P., Sahimi M. Cross-correlation function for accurate reconstruction of

heterogeneous media. Physical Review Letters. 2013;110(7):078002

[22] Jha, S.K., Mariethoz, G., Evans, J.P., McCabe, M.F. Demonstration of a geostatistical

approach to physically consistent downscaling of climate modeling simulations. Water

Resources Research. 2013;49(1):245259.

155

156

Modeling and Simulation in Engineering Sciences

[23] Strebelle, S. New multiple-point statistics simulation implementation to reduce

memory and cpu-time demand. In: Conference of the international association for

mathematical geology. September; Portsmouth, UK. 2003.

[24] Straubhaar, J., Renard, P., Mariethoz, G., Froidevaux, R., Besson, O. An improved

parallel multiple-point algorithm using a list approach. Mathematical Geosciences.

2011;43(3):305328.

[25] Maharaja, A., Journel, A.G. Hierarchical Simulation of Multiple-Facies Reservoirs

Using Multiple-Point Geostatistics. SPE Annual Technical Conference and Exhibition.

Society of Petroleum Engineers, Dallas, Texas. 2005

[26] Tahmasebi, P., Sahimi, M., Caers, J. MS-CCSIM: accelerating pattern-based geostatis

tical simulation of categorical variables using a multi-scale search in Fourier space.

Computers & Geosciences. 2014;67:7588.

[27] Mariethoz, G. A general parallelization strategy for random path based geostatistical

simulation methods. Computers and Geosciences. 2010;36(7):953958.

[28] Tahmasebi, P., Sahimi, M., Mariethoz, G., Hezarkhani, A. Accelerating geostatistical

simulations using graphics processing units (GPU). Computers & Geosciences.

2012;46:5159.

[29] Nunes, R., Almeida, J.A. Parallelization of sequential Gaussian, indicator and direct

simulation algorithms. Computers and Geosciences. 2010;36(8):10421052.

[30] Straubhaar, J., Renard, P., Mariethoz, G., Froidevaux, R., Besson, O. An improved

parallel multiple-point algorithm using a list approach. Mathematical Geoscience.

2011;43(3):305328.

[31] Peredo, O., Ortiz, J.M., Parallel implementation of simulated annealing to reproduce

multiple-point statistics. Computers and Geosciences. 2011;37(8):11101121.

[32] Nvidia, C. C Programming Guide v 4.0. Santa Clara, CA, USA: NVIDIA Corporation;

2011.

[33] Nvidia, CUDA C Best Practices Guide Version 4.0. Technical report. Santa Clara, CA,

USA: NVIDIA Corporation; 2009.

[34] Huang, T., Lu, D.T., Li, X., Wang, L. GPU-based SNESIM implementation for multiplepoint statistical simulation. Computers & Geosciences. 2013;54:7587.

[35] Huang, T., Li, X., Zhang, T., Lu, D.T. GPU-accelerated Direct Sampling method for

multiple-point statistical simulation. Computers & Geosciences. 2013;57:1323.

[36] Mariethoz, G., Caers, J. Multiple-point geostatistics: stochastic modeling with training

images. John Wiley & Sons, Ltd, Chichester, UK; 2014.

Training Images-Based Stochastic Simulation on Many-Core Architectures

http://dx.doi.org/10.5772/64276

[37] Daly, C. Higher order models using entropy, Markov random fields and sequential

simulation. In: Leuangthong, O., Deutsch, C. (Eds). In: Geostatistics Banff 2004;

Springer Netherlands; 2005. p. 215224.

[38] Pirot, G., Meerschman, E., Mariethoz, G., Straubhaar, J., Van Meirvenne, M, Renard, P.

Optimizing Direct Sampling algorithm's parameters to performing multiple-points

geostatistical simulations. AGU Fall Meeting Abstracts. 2011;1:1475.

[39] Remy, N., Boucher, A., Wu, J., editors. Applied Geostatistics with SGeMS:A Users

Guide. Cambridge University Press, Cambridge, UK; 2008.

157

You might also like

- Rapid design space exploration with IGADocument42 pagesRapid design space exploration with IGAJorge Luis Garcia ZuñigaNo ratings yet

- Research Collection: Parametric Associative CAE Methods in Preliminary Aircraft DesignDocument6 pagesResearch Collection: Parametric Associative CAE Methods in Preliminary Aircraft DesignroloNo ratings yet

- Banchar Arnonkijpanich, Barbara Hammer and Alexander Hasenfuss - Local Matrix Adaptation in Topographic Neural MapsDocument34 pagesBanchar Arnonkijpanich, Barbara Hammer and Alexander Hasenfuss - Local Matrix Adaptation in Topographic Neural MapsTuhmaNo ratings yet

- A Data Parallel Approach To Modelling and Simulation of Large CrowdDocument11 pagesA Data Parallel Approach To Modelling and Simulation of Large CrowdLayiNo ratings yet

- SGEMS-UQ: An Uncertainty Quantification Toolkit For SgemsDocument60 pagesSGEMS-UQ: An Uncertainty Quantification Toolkit For SgemsSoporte CGeoMinNo ratings yet

- Barbara Hammer and Alexander Hasenfuss - Topographic Mapping of Large Dissimilarity Data SetsDocument58 pagesBarbara Hammer and Alexander Hasenfuss - Topographic Mapping of Large Dissimilarity Data SetsTuhmaNo ratings yet

- Mi 150105Document8 pagesMi 150105古鹏飞No ratings yet

- Barrera Et Al (2005)Document10 pagesBarrera Et Al (2005)Ulisses Miguel CorreiaNo ratings yet

- Bayesian System IdentificationDocument14 pagesBayesian System IdentificationprawinpsgNo ratings yet

- Distributed Graph Neural Network Training: A SurveyDocument37 pagesDistributed Graph Neural Network Training: A Surveytung2303.gradNo ratings yet

- An End-To-End Three-Dimensional Reconstruction Framework of Porous Media From A Single Two-Dimensional Image Based On Deep LearningDocument20 pagesAn End-To-End Three-Dimensional Reconstruction Framework of Porous Media From A Single Two-Dimensional Image Based On Deep Learningpcacais3No ratings yet

- Generalized-ICP: Aleksandr V. Segal Dirk Haehnel Sebastian ThrunDocument8 pagesGeneralized-ICP: Aleksandr V. Segal Dirk Haehnel Sebastian ThrunChuanjiang LuoNo ratings yet

- Bayesian Inference With Subset Simulation: Strategies and ImprovementsDocument22 pagesBayesian Inference With Subset Simulation: Strategies and ImprovementsJimmy PhoenixNo ratings yet

- Rombach High-Resolution Image Synthesis With Latent Diffusion Models CVPR 2022 Paper-2Document12 pagesRombach High-Resolution Image Synthesis With Latent Diffusion Models CVPR 2022 Paper-2Dinuo LiaoNo ratings yet

- tmp5573 TMPDocument11 pagestmp5573 TMPFrontiersNo ratings yet

- Zhong - 2005 - Efficient Online Spherical K-Means ClusteringDocument6 pagesZhong - 2005 - Efficient Online Spherical K-Means ClusteringswarmbeesNo ratings yet

- Comparison of Systems Using Diffusion MapsDocument6 pagesComparison of Systems Using Diffusion MapsAndrew FongNo ratings yet

- Cerra SCC10 FinalDocument6 pagesCerra SCC10 FinaljayashreeraoNo ratings yet

- Generalized-ICP improves scan matchingDocument8 pagesGeneralized-ICP improves scan matchingJasNo ratings yet

- Engineering Structures: Jacob P. Waldbjoern, Amin Maghareh, Ge Ou, Shirley J. Dyke, Henrik StangDocument12 pagesEngineering Structures: Jacob P. Waldbjoern, Amin Maghareh, Ge Ou, Shirley J. Dyke, Henrik StangSachin GuptaNo ratings yet

- A Heuristics-Based Cost Model For Scientific Workflow Scheduling in CloudDocument19 pagesA Heuristics-Based Cost Model For Scientific Workflow Scheduling in CloudsranilNo ratings yet

- Bayseian Time DomainDocument17 pagesBayseian Time DomainJimmy PhoenixNo ratings yet

- Gocheva-Ilieva S. Statistical Data Modeling... Applications 2021Document186 pagesGocheva-Ilieva S. Statistical Data Modeling... Applications 2021Bhagavan BangaloreNo ratings yet

- Rainfall PredictionDocument4 pagesRainfall PredictionoutriderNo ratings yet

- One-Dimensional Vector Based PatternDocument12 pagesOne-Dimensional Vector Based PatternAnonymous Gl4IRRjzNNo ratings yet

- Multi-Objective Workflow Scheduling in Cloud System Based On Cooperative Multi-Swarm Optimization AlgorithmDocument13 pagesMulti-Objective Workflow Scheduling in Cloud System Based On Cooperative Multi-Swarm Optimization AlgorithmOrlando BoNo ratings yet

- Surrogate Modeling of Parametrized Finite Element Simulations With V 2022 ArDocument14 pagesSurrogate Modeling of Parametrized Finite Element Simulations With V 2022 ArMetro Electronics ScaleNo ratings yet

- Model-Reduction Techniques For Bayesian Finite Element ModelDocument24 pagesModel-Reduction Techniques For Bayesian Finite Element Modelsumatrablackcoffee453No ratings yet

- A Review of Surrogate Assisted Multiobjective EADocument15 pagesA Review of Surrogate Assisted Multiobjective EASreya BanerjeeNo ratings yet

- Parallel Ray Tracing Using Mpi and Openmp: January 2008Document18 pagesParallel Ray Tracing Using Mpi and Openmp: January 2008Chandan SinghNo ratings yet

- Remotesensing 12 01257Document26 pagesRemotesensing 12 01257dNo ratings yet

- Landmark Transferencia Con El Gráfico MinimalDocument14 pagesLandmark Transferencia Con El Gráfico MinimalBen Mur MarNo ratings yet

- Spatial Analysis Model For Traffic Accident-Prone Roads Classification: A Proposed FrameworkDocument9 pagesSpatial Analysis Model For Traffic Accident-Prone Roads Classification: A Proposed FrameworkIAES IJAINo ratings yet

- Luo Etal Cvpr16Document9 pagesLuo Etal Cvpr16Chulbul PandeyNo ratings yet

- Weather and Climate Forecasting With Neural Networks: Using General Circulation Models (GCMS) With Different Complexity As A Study GroundDocument13 pagesWeather and Climate Forecasting With Neural Networks: Using General Circulation Models (GCMS) With Different Complexity As A Study GroundtonyNo ratings yet

- 2019 TASEA Two-Layer Adaptive Surrogate-Assisted Evolutionary Algorithm For High-Dimensional Computationally Expensive ProblemsDocument33 pages2019 TASEA Two-Layer Adaptive Surrogate-Assisted Evolutionary Algorithm For High-Dimensional Computationally Expensive ProblemsZhangming WuNo ratings yet

- Aircraft Mechanical SystemsDocument179 pagesAircraft Mechanical SystemsScott TrainorNo ratings yet

- Big Bang - Big Crunch Learning Method For Fuzzy Cognitive MapsDocument10 pagesBig Bang - Big Crunch Learning Method For Fuzzy Cognitive MapsenginyesilNo ratings yet

- Hasan 2019 IOP Conf. Ser. Earth Environ. Sci. 357 012035Document11 pagesHasan 2019 IOP Conf. Ser. Earth Environ. Sci. 357 012035Minh Đinh NhậtNo ratings yet

- Puspita 2019 J. Phys. Conf. Ser. 1196 012073Document8 pagesPuspita 2019 J. Phys. Conf. Ser. 1196 012073Pokerto JavaNo ratings yet

- Multiview Fuzzy Clustering Based On Anchor GraphDocument12 pagesMultiview Fuzzy Clustering Based On Anchor Graphpakardizavand.naserNo ratings yet

- Bayesian Modeling Using The MCMC ProcedureDocument22 pagesBayesian Modeling Using The MCMC ProcedureKian JahromiNo ratings yet

- Sensors: Metaheuristic Based Scheduling Meta-Tasks in Distributed Heterogeneous Computing SystemsDocument12 pagesSensors: Metaheuristic Based Scheduling Meta-Tasks in Distributed Heterogeneous Computing SystemsalkimistuNo ratings yet

- Use Nko 17 Re PlanningDocument8 pagesUse Nko 17 Re Planningageoir goeNo ratings yet

- Axioms 11 00112Document23 pagesAxioms 11 00112nach456No ratings yet

- Remote Sensing Image Fusion Based On Adaptively Weighted Joint Detail InjectionDocument16 pagesRemote Sensing Image Fusion Based On Adaptively Weighted Joint Detail InjectionSR ProjectsNo ratings yet

- Surrogate ModelDocument5 pagesSurrogate ModelBOBBY212No ratings yet

- Local-Global Fuzzy Clustering With Anchor GraphDocument15 pagesLocal-Global Fuzzy Clustering With Anchor Graphpakardizavand.naserNo ratings yet

- Model Reduction High DimensionDocument20 pagesModel Reduction High DimensionLei TuNo ratings yet

- Cloud Computing For Climate Modelling Evaluation CDocument19 pagesCloud Computing For Climate Modelling Evaluation CfmuigaiNo ratings yet

- Interactive Deformation and Visualization of Level Set Surfaces Using Graphics HardwareDocument18 pagesInteractive Deformation and Visualization of Level Set Surfaces Using Graphics Hardwaremilito411No ratings yet

- Target Detection Based On Normalized Cross Co-Relation: Niladri Shekhar Das, S. Arunvinthan, E. GiriprasadDocument7 pagesTarget Detection Based On Normalized Cross Co-Relation: Niladri Shekhar Das, S. Arunvinthan, E. GiriprasadinventionjournalsNo ratings yet

- A Systematic Approach To Composing and Optimizing Application WorkflowsDocument9 pagesA Systematic Approach To Composing and Optimizing Application WorkflowsLeo Kwee WahNo ratings yet

- Accepted Manuscript: Aerospace Science and TechnologyDocument26 pagesAccepted Manuscript: Aerospace Science and TechnologyNajah NurhasanahNo ratings yet

- Two-layer Surrogate Model for Expensive PSODocument22 pagesTwo-layer Surrogate Model for Expensive PSOAndy SyaputraNo ratings yet

- Adaptive TimeDomainDocument29 pagesAdaptive TimeDomainJimmy PhoenixNo ratings yet

- Run-Time MPSOC Management and Mapping of Dynamic Embedded SoftwareDocument3 pagesRun-Time MPSOC Management and Mapping of Dynamic Embedded SoftwareRakeshconclaveNo ratings yet

- Na BIC20122Document8 pagesNa BIC20122jefferyleclercNo ratings yet

- Novel CSID Algorithm Enhances Clinical Diagnosis with Multimodal Image FusionDocument22 pagesNovel CSID Algorithm Enhances Clinical Diagnosis with Multimodal Image FusionDevendra BairagiNo ratings yet

- Multipoint GeostatisticsDocument10 pagesMultipoint GeostatisticsPrashantDhoteNo ratings yet

- Manual For Financial Management and ControlDocument167 pagesManual For Financial Management and ControlPrashantDhoteNo ratings yet

- Instruction: View Full Screen Images in The Slide Show Mode of Display. Read The Explanatory Notes in The Normal View Mode of DisplayDocument57 pagesInstruction: View Full Screen Images in The Slide Show Mode of Display. Read The Explanatory Notes in The Normal View Mode of DisplayPrashantDhoteNo ratings yet

- Gaussian Algorithm Petrel 2010Document12 pagesGaussian Algorithm Petrel 2010PrashantDhoteNo ratings yet

- Part Time PHD FormDocument4 pagesPart Time PHD FormPrashantDhoteNo ratings yet

- A Unfc FinalDocument25 pagesA Unfc FinalPrashantDhoteNo ratings yet

- Online Exam RequirementsDocument4 pagesOnline Exam RequirementsPrashantDhoteNo ratings yet

- Joe - Reservoir Surveillance W Ofm - Fall 2011Document48 pagesJoe - Reservoir Surveillance W Ofm - Fall 2011Diego TenorioNo ratings yet

- Online Exam RequirementsDocument4 pagesOnline Exam RequirementsPrashantDhoteNo ratings yet

- Exploiting TechnosphereDocument25 pagesExploiting TechnospherePrashantDhoteNo ratings yet

- ViewBenefitDetails Arogya PremierDocument1 pageViewBenefitDetails Arogya PremierPrashantDhoteNo ratings yet

- OGR MappingDocument126 pagesOGR MappingarthurvictorNo ratings yet

- Chapter 01 - GeomodelingDocument10 pagesChapter 01 - Geomodelingbella_dsNo ratings yet

- SyllabusDocument2 pagesSyllabusPrashantDhote100% (1)

- SCUA-GOCAD Import-Export PDFDocument230 pagesSCUA-GOCAD Import-Export PDFPrashantDhoteNo ratings yet

- How To Use Excel Unit ConversionDocument4 pagesHow To Use Excel Unit ConversionPrashantDhoteNo ratings yet

- Introduction to Reservoir Simulation in 40 CharactersDocument222 pagesIntroduction to Reservoir Simulation in 40 Charactersshantanuril100% (2)

- Example Calculations X Y Lowrance "Metric Mercator" - 7728720 5534604 Units, Meters, WGS84Document5 pagesExample Calculations X Y Lowrance "Metric Mercator" - 7728720 5534604 Units, Meters, WGS84PrashantDhoteNo ratings yet

- World's Largest Science, Technology & Medicine Open Access Book PublisherDocument19 pagesWorld's Largest Science, Technology & Medicine Open Access Book PublisherPrashantDhoteNo ratings yet

- Presenter Biography - Conference TemplateDocument1 pagePresenter Biography - Conference TemplatePrashantDhoteNo ratings yet

- geoSTATE 1.1 Ocean For Petrel - Installation Guide PDFDocument7 pagesgeoSTATE 1.1 Ocean For Petrel - Installation Guide PDFPrashantDhoteNo ratings yet

- Cs Petrel Ongc123Document2 pagesCs Petrel Ongc123PrashantDhoteNo ratings yet

- Vacciantion FormatDocument1 pageVacciantion FormatPrashantDhoteNo ratings yet

- DFN Case StudyDocument4 pagesDFN Case StudyPrashantDhoteNo ratings yet

- Ree Note - For Gsi PortalDocument3 pagesRee Note - For Gsi PortalPrashantDhoteNo ratings yet

- Unit 5 Machine Learning With PU SolutionDocument68 pagesUnit 5 Machine Learning With PU SolutionKavi Raj AwasthiNo ratings yet

- Digital Communication Systems by Simon Haykin-113Document6 pagesDigital Communication Systems by Simon Haykin-113matildaNo ratings yet

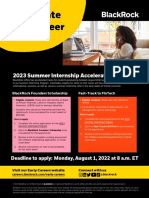

- 2023 BlackRock Summer Analyst Program AcceleratorDocument1 page2023 BlackRock Summer Analyst Program AcceleratorOlaoluwa OgunleyeNo ratings yet

- Quality Council of Indiana document details versions of certification materialsDocument47 pagesQuality Council of Indiana document details versions of certification materialsMelissa MurrayNo ratings yet

- Projector Management Application Instruction Guide: Maxell, LTDDocument42 pagesProjector Management Application Instruction Guide: Maxell, LTDLeonardo TafurNo ratings yet

- Compu CutterDocument2 pagesCompu CutterM YasinNo ratings yet

- Develop Web Services using WSDL and UDDIDocument13 pagesDevelop Web Services using WSDL and UDDIMahamud elmogeNo ratings yet

- Apache Kafka InstallationDocument3 pagesApache Kafka Installationsurendra yandraNo ratings yet

- Check Lagarconne - Coms SEODocument13 pagesCheck Lagarconne - Coms SEOUmar HanifNo ratings yet

- Traditional Vs Agile Project ManagementDocument6 pagesTraditional Vs Agile Project ManagementSaurav Panda100% (1)

- 3022Document2 pages3022markjparrNo ratings yet

- Computing Essentials EsaimenDocument12 pagesComputing Essentials EsaimenRyan MillerNo ratings yet

- 3D Animator User GuideDocument85 pages3D Animator User GuidecarlcoxNo ratings yet

- Crew Visas (C1D) : Step 1: PrepareDocument10 pagesCrew Visas (C1D) : Step 1: PrepareLýMinhTiếnNo ratings yet

- Array and StringDocument35 pagesArray and StringAmanuel DereseNo ratings yet

- Simatic Net: Step by Step: Ethernet Communication Between OPC Server and S7-200 Incl. CP243-1Document45 pagesSimatic Net: Step by Step: Ethernet Communication Between OPC Server and S7-200 Incl. CP243-1Franco SotoNo ratings yet

- HTTP Boot Console LogDocument31 pagesHTTP Boot Console Logdhiraj.testingNo ratings yet

- Ai Scratch NotesDocument25 pagesAi Scratch NotesShivkant Singh100% (4)

- SMR Online Users Guide PDFDocument48 pagesSMR Online Users Guide PDFniku100% (1)

- T05 3-4 2 PDFDocument201 pagesT05 3-4 2 PDFKhyss Ziah HerscheNo ratings yet

- Best Free Antivirus For Windows 10Document10 pagesBest Free Antivirus For Windows 10Kirtan DaveNo ratings yet

- E17040 ZenWiFi AX XT8 UM PDFDocument151 pagesE17040 ZenWiFi AX XT8 UM PDFEP TANNo ratings yet

- HTML Cheatsheet GuideDocument6 pagesHTML Cheatsheet Guidearronstack100% (1)

- License Company Brochure Mockup 2560900Document2 pagesLicense Company Brochure Mockup 2560900Anonymous rPV6LRE1No ratings yet

- Spse01546 S 1050 - enDocument232 pagesSpse01546 S 1050 - engocoolonNo ratings yet

- Puter Vision With TensorFlow 2 X 1838827064Document419 pagesPuter Vision With TensorFlow 2 X 1838827064超揚林100% (2)

- System Requirements For DS Icem Surf 2017Document3 pagesSystem Requirements For DS Icem Surf 2017Hatim EddibNo ratings yet

- Burner Logic System PDFDocument5 pagesBurner Logic System PDFshiviitd02No ratings yet

- Apply for Allahabad Bank Internet BankingDocument6 pagesApply for Allahabad Bank Internet Bankingnijju2009No ratings yet

- TLE 7 1st Quarter Exam SY 2022-2023Document3 pagesTLE 7 1st Quarter Exam SY 2022-2023dodongmakattigbasNo ratings yet

- From Vision to Version - Step by step guide for crafting and aligning your product vision, strategy and roadmap: Strategy Framework for Digital Product Management RockstarsFrom EverandFrom Vision to Version - Step by step guide for crafting and aligning your product vision, strategy and roadmap: Strategy Framework for Digital Product Management RockstarsNo ratings yet

- Certified Solidworks Professional Advanced Weldments Exam PreparationFrom EverandCertified Solidworks Professional Advanced Weldments Exam PreparationRating: 5 out of 5 stars5/5 (1)

- Autodesk Fusion 360: A Power Guide for Beginners and Intermediate Users (3rd Edition)From EverandAutodesk Fusion 360: A Power Guide for Beginners and Intermediate Users (3rd Edition)Rating: 5 out of 5 stars5/5 (2)

- Fusion 360 | Step by Step: CAD Design, FEM Simulation & CAM for Beginners.From EverandFusion 360 | Step by Step: CAD Design, FEM Simulation & CAM for Beginners.No ratings yet

- SolidWorks 2015 Learn by doing-Part 2 (Surface Design, Mold Tools, and Weldments)From EverandSolidWorks 2015 Learn by doing-Part 2 (Surface Design, Mold Tools, and Weldments)Rating: 4.5 out of 5 stars4.5/5 (5)

- Mastering Autodesk Inventor 2014 and Autodesk Inventor LT 2014: Autodesk Official PressFrom EverandMastering Autodesk Inventor 2014 and Autodesk Inventor LT 2014: Autodesk Official PressRating: 5 out of 5 stars5/5 (1)

- Autodesk Inventor 2020: A Power Guide for Beginners and Intermediate UsersFrom EverandAutodesk Inventor 2020: A Power Guide for Beginners and Intermediate UsersNo ratings yet

- Beginning AutoCAD® 2020 Exercise WorkbookFrom EverandBeginning AutoCAD® 2020 Exercise WorkbookRating: 2.5 out of 5 stars2.5/5 (3)