
Volume 2, Issue 4, April 2017 International Journal of Innovative Science and Research Technology

ISSN No: - 2456 - 2165

Bidirectional Data Import to Hive Using SQOOP

MD. Sirajul Huque1, D. Naveen Reddy2, G. Uttej3, K. Rajesh Kumar4, K. Ranjith Reddy5

1 Assistant Professor, Computer Science & Engineering, Guru Nanak Institution Technical Campus, Hyderabad, India, mailtosirajul@gmail.com

2 B.Tech Student, Computer Science & Engineering, Guru Nanak Institution Technical Campus, Hyderabad, India, dhondapatinaveenreddy@gmail.com

3 B.Tech Student, Computer Science & Engineering, Guru Nanak Institution Technical Campus, Hyderabad, India, uttejgumti97@gmail.com

4 B.Tech Student, Computer Science & Engineering, Guru Nanak Institution Technical Campus, Hyderabad, India, karka.rajeshreddy@gmail.com

5 B.Tech Student, Computer Science & Engineering, Guru Nanak Institution Technical Campus, Hyderabad, India, kranjit.iiit@gmail.com

Abstract: Using Hadoop for analytics and data processing requires loading data into clusters and processing it in conjunction with other data that often resides in production databases across the enterprise. Loading bulk data into Hadoop from production systems, or accessing it from MapReduce applications running on large clusters, can be a challenging task. Users must consider details like ensuring consistency of data, the consumption of production system resources, and data preparation for provisioning the downstream pipeline. Transferring data using scripts is inefficient and time consuming. Directly accessing data residing on external systems from within MapReduce applications complicates the applications and exposes the production system to the risk of excessive load originating from cluster nodes. This is where Apache Sqoop fits in. Apache Sqoop is currently undergoing incubation at Apache Software Foundation. More information on this project can be found at http://incubator.apache.org/sqoop. Sqoop allows easy import and export of data from structured data stores such as relational databases, enterprise data warehouses, and NoSQL systems. Using Sqoop, you can provision data from an external system onto HDFS and populate tables in Hive and HBase.

Keywords: Apache Hadoop 1.2.1, Apache Hive, Sqoop, MySQL.

I. INTRODUCTION

Big data exceeds the reach of commonly used hardware environments and software tools to capture, manage, and process it within a tolerable elapsed time for its user population. It refers to data sets whose size is beyond the ability of typical database software tools to capture, store, manage and analyze. It is a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools.

For the past two decades most business analytics have been created using structured data extracted from operational systems and consolidated into a data warehouse. Big data dramatically increases both the number of data sources and the variety and volume of data that is useful for analysis. A high percentage of this data is often described as multi-structured to distinguish it from the structured operational data used to populate a data warehouse. In most organizations, multi-structured data is growing at a considerably faster rate than structured data. Two important data management trends for processing big data are relational DBMS products optimized for analytical workloads (often called analytic RDBMSs, or ADBMSs) and non-relational systems for processing multi-structured data.

The three Vs of big data describe its behavior and efficiency. In general, big data is a problem of unhandled data. Here we propose a solution to it using Hadoop and its HDFS architecture; everything is done using Hadoop only. The three Vs are as follows:

1. Volume
2. Velocity
3. Variety

A. Apache Hadoop

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware

IJISRT17AP67 www.ijisrt.com 158


to deliver high availability, the library itself is designed to detect and handle failures at the application layer, thereby delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.

B. Apache Oozie

Apache Oozie is a server-based workflow scheduling system to manage Hadoop jobs.

Workflows in Oozie are defined as a collection of control flow and action nodes in a directed acyclic graph. Control flow nodes define the beginning and the end of a workflow (start, end and failure nodes) as well as a mechanism to control the workflow execution path (decision, fork and join nodes). Action nodes are the mechanism by which a workflow triggers the execution of a computation/processing task. Oozie provides support for different types of actions including Hadoop MapReduce, Hadoop distributed file system operations, Pig, SSH, and email. Oozie can also be extended to support additional types of actions.

C. Apache Hive

Apache Hive is a data warehouse system for Hadoop that facilitates easy data summarization, ad-hoc queries, and the analysis of large datasets stored in Hadoop-compatible file systems, such as the MapR Data Platform (MDP). Hive provides a mechanism to project structure onto this data and query the data using a SQL-like language called HiveQL. At the same time this language also allows traditional map/reduce programmers to plug in their custom mappers and reducers when it is inconvenient or inefficient to express this logic in HiveQL.

D. Apache Sqoop

Apache Sqoop efficiently transfers bulk data between Apache Hadoop and structured datastores such as relational databases. Sqoop helps offload certain tasks (such as ETL processing) from the EDW to Hadoop for efficient execution at a much lower cost. Sqoop can also be used to extract data from Hadoop and export it into external structured data stores.

II. EXISTING TECHNOLOGY

In the existing technology, the datasets are stored in the HBase database in an unstructured form, with high latency but less integrity. Here the data is sparse and unclear to analyze. Therefore there is a need to provide a solution to analyze the datasets easily.

III. PROPOSED TECHNOLOGY

In the proposed technology, Sqoop works well for loading data back into database systems without the overhead mentioned above. Sqoop exports data from the distributed file system to the database system efficiently. It provides a simple command-line interface through which we can fetch data from different database systems by writing a simple Sqoop command.

IV. SQOOP

Using Hadoop for analytics and data processing requires loading data into clusters. Loading bulk data into Hadoop from production systems, or accessing it from MapReduce applications running on large clusters, can be a challenging task. Transferring data using scripts is inefficient and time consuming. Sqoop allows easy import and export of data from structured data stores such as relational databases, enterprise data warehouses, and NoSQL systems. Using Sqoop, you can provision data from an external system onto HDFS and populate tables in Hive and HBase. Sqoop integrates with Oozie, allowing you to schedule and automate import and export tasks.

A. Importing Data

The following command is used to import all data from a table called ORDERS from a MySQL database:

$ sqoop import --connect jdbc:mysql://localhost/acmedb \
  --table ORDERS --username test --password ****

In this command the various options specified are as follows:

import: This is the sub-command that instructs Sqoop to initiate an import.

--connect <connect string>, --username <user name>, --password <password>: These are connection parameters that are used to connect with the database. This is no different from the connection parameters that you use when connecting to the database via a JDBC connection.

--table <table name>: This parameter specifies the table which will be imported.

The import proceeds in two steps. In the first step Sqoop introspects the database to gather the necessary metadata for the data being imported. The second step is a map-only Hadoop job that Sqoop submits to the cluster. It is this job that does the actual data transfer using the metadata captured in the previous step.

The imported data is saved in a directory on HDFS based on the table being imported. As is the case with most aspects of Sqoop operation, the user can specify any alternative directory where the files should be populated.

B. Exporting Data

In some cases data processed by Hadoop pipelines may be needed in production systems to help run additional critical business functions. Sqoop can be used to export such data into external datastores as necessary. Continuing our example from above, if data generated by the pipeline on Hadoop corresponded to the ORDERS table in a database somewhere, you could populate it using the following command:

$ sqoop export --connect jdbc:mysql://localhost/acmedb \
  --table ORDERS --username test --password **** \
  --export-dir /user/arvind/ORDERS

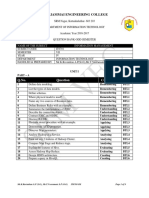

Fig 1: Architecture of SQOOP

In this command the various options specified are as follows:

C. Working of SQOOP

export: This is the sub-command that instructs Sqoop to Before you begin

initiate an export.

--connect <connect string>, --username <user name>, -- The JDBC driver should be compatible with the version of the

password <password>: These are connection parameters that database you are connecting to. Most JDBC drivers are

are used to connect with the database. This is no different backward compatible, so if you are connecting to databases of

from the connection parameters that you use when connecting different versions you can use the higher version of the JDBC

to the database via a JDBC connection. driver. However, for the Oracle JDBC driver, Sqoop does not

--table <table name>: This parameter specifies the table work unless you are using at least version 11g r2 or later

which will be populated. because older version of the Oracle JDBC driver is not

--export-dir <directory path>: This is the directory from supported by Sqoop.

which data will be exported.

About this task

The first step is to introspect the database for metadata,

followed by the second step of transferring the data. Sqoop Sqoop is a set of high-performance open source connectors

divides the input dataset into splits and then uses individual that can be customized for your specific external connections.

map tasks to push the splits to the database. Each map task Sqoop also offers specific connector modules that are

performs this transfer over many transactions in order to designed for different product types. Large amounts of data

ensure optimal throughput and minimal resource utilization. can be imported from various relational database sources into

a InfoSphere BigInsights cluster by using Sqoop.

Fig 2: working of sqoop
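As a hedged illustration of two points from this section (checking that the JDBC connection works before starting, and the map tasks that push splits to the database during an export), the sketch below prints two Sqoop command lines rather than running them. It reuses the connection string, user, table, and export directory from the ORDERS example in Section IV. The helper names `list_tables_cmd` and `export_cmd` and the mapper count of 4 are assumptions made only for illustration. `-P` asks Sqoop to prompt for the password instead of taking it on the command line.

```shell
# Dry-run sketch: each helper only prints a Sqoop command line.
# Helper names and the mapper count are illustrative assumptions.

# Prints a command that lists the tables visible through the JDBC connection,
# a quick check that the driver and connect string work.
list_tables_cmd() {
  printf 'sqoop list-tables --connect %s --username %s -P\n' "$1" "$2"
}

# Prints an export command; --num-mappers sets how many map tasks
# push splits of the export directory to the database in parallel.
export_cmd() {
  printf 'sqoop export --connect %s --username %s -P --table %s --export-dir %s --num-mappers %s\n' \
    "$1" "$2" "$3" "$4" "$5"
}

list_tables_cmd jdbc:mysql://localhost/acmedb test
export_cmd jdbc:mysql://localhost/acmedb test ORDERS /user/arvind/ORDERS 4
```

Pasting either printed line into a terminal runs it, which requires a Sqoop installation and a reachable database.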


V. SYSTEM ARCHITECTURE

The systems architect establishes the basic structure of the system, defining the essential core design features and elements that provide the framework for all that follows and are the hardest to change later. The systems architect provides the architect's view of the users' vision for what the system needs to be and do, and the paths along which it must be able to evolve, and strives to maintain the integrity of that vision as it evolves during detailed design and implementation.

[Figure labels: Request, Sqoop, Launching MR Jobs, MapReduce, Temporary data, HDFS File System, HDFS tmp Data, Data, Result]

Fig 3: System Architecture
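Since the goal of the system is to populate Hive, a hedged sketch of an import that goes straight into a Hive table may help here. With `--hive-import`, Sqoop first writes the table to HDFS and then loads those files into Hive. The helper name `hive_import_cmd`, the Hive table name `orders`, and the single mapper are assumptions for illustration; the connection values reuse the ORDERS example from Section IV, and the command is only printed, not run.

```shell
# Dry-run sketch: prints a Sqoop command that imports one table into Hive.
# The helper name, Hive table name, and mapper count are illustrative assumptions.
hive_import_cmd() {
  connect=$1     # JDBC connect string
  db_user=$2     # database user; -P prompts for the password
  src_table=$3   # source table in the relational database
  hive_table=$4  # destination table in Hive
  # One mapper avoids the need for a split column on the source table.
  printf 'sqoop import --connect %s --username %s -P --table %s --hive-import --hive-table %s --num-mappers 1\n' \
    "$connect" "$db_user" "$src_table" "$hive_table"
}

hive_import_cmd jdbc:mysql://localhost/acmedb test ORDERS orders
```

Raising the mapper count parallelizes the transfer, but Sqoop then needs a primary key or an explicit `--split-by` column to divide the rows among map tasks.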


VI. IMPLEMENTATION

1. Open a terminal and mount or open Hadoop. Then start it by entering bin/start-all.sh

2. Open a new terminal to run Sqoop, using the command cd sqoop-1.4.4

3. Enter the Sqoop command to export from HDFS to MySQL.

VII. FUTURE ENHANCEMENTS

Hadoop is uniquely capable of storing, aggregating, and refining multi-structured data sources into formats that fuel new business insights. Apache Hadoop is fast becoming the de facto platform for processing Big Data.

Hadoop started from a relatively humble beginning as a point solution for small search systems. Its growth into an important technology to the broader enterprise community dates back to Yahoo's 2006 decision to evolve Hadoop into a system for solving its internet scale big data problems. Eric will discuss the current state of Hadoop and what is coming from a development standpoint as Hadoop evolves to meet more workloads.

Improvements and other components based on Sqoop Unit could be built later. For example, we could build a SqoopTestCase and SqoopTestSuite on top of SqoopTest to:

1. Add the notion of workspaces for each test.
2. Remove the boilerplate code that appears when there is more than one test method.

VIII. CONCLUSION

While this tutorial focused on one set of data in one Hadoop cluster, you can configure the Hadoop cluster to be one unit of a larger system, connecting it to other systems of Hadoop clusters or even connecting it to the cloud for broader interactions and greater data access. With this big data infrastructure, and its data analytics application, the learning rate of the implemented algorithms will increase with more access to data at rest or to streaming data, which will allow you to make better, quicker, and more accurate business decisions.

With the rise of Apache Hadoop, a next-generation enterprise data architecture is emerging that connects the systems powering business transactions and business intelligence. Hadoop is uniquely capable of storing, aggregating, and refining multi-structured data sources into formats that fuel new business insights. Apache Hadoop is fast becoming the de facto platform for processing Big Data.
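The three implementation steps in Section VI can be sketched as a small script. This is only an outline under stated assumptions: the variables `HADOOP_DIR` and `SQOOP_DIR` and their default values are hypothetical, the export arguments reuse the ORDERS example from Section IV because the command actually used is not reproduced in the text, and each step is printed rather than executed.

```shell
# Dry-run sketch of the implementation steps; nothing is executed.
# HADOOP_DIR and SQOOP_DIR are hypothetical locations, adjust to your setup.
HADOOP_DIR=${HADOOP_DIR:-hadoop-1.2.1}
SQOOP_DIR=${SQOOP_DIR:-sqoop-1.4.4}

print_steps() {
  # Step 1: start the Hadoop daemons from the Hadoop directory.
  printf 'cd %s && bin/start-all.sh\n' "$HADOOP_DIR"
  # Step 2: switch to the Sqoop directory in a new terminal.
  printf 'cd %s\n' "$SQOOP_DIR"
  # Step 3: export from HDFS to MySQL (values reuse the ORDERS example).
  printf 'bin/sqoop export --connect %s --username %s -P --table %s --export-dir %s\n' \
    jdbc:mysql://localhost/acmedb test ORDERS /user/arvind/ORDERS
}

print_steps
```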


