Fakultät für Elektrotechnik und Informatik

Institut für Verteilte Systeme

Fachgebiet Wissensbasierte Systeme (KBS)

Data Mining I

Summer semester 2017

Lecture 3: Frequent Itemsets and Association Rules Mining

Lectures: Prof. Dr. Eirini Ntoutsi

Exercises: Tai Le Quy and Damianos Melidis

Recap from previous lecture

Dataset = Instances and features

Basic feature types

Binary, categorical (nominal, ordinal), numeric (interval-scale, ratio-scale)

Feature extraction and feature-vector representation

(i) Feature spaces and (ii) Metric spaces

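(i) $\mathrm{dist}(x,y) \ge 0$ (non-negativity)

(i) $\mathrm{dist}(x,x) = 0$ (reflexivity)

(ii) $\mathrm{dist}(x,y) = 0 \Rightarrow x = y$ (strictness)

(ii) $\forall x,y \in Dom: \mathrm{dist}(x,y) = \mathrm{dist}(y,x)$ (symmetry)

(ii) $\forall x,y,z \in Dom: \mathrm{dist}(x,z) \le \mathrm{dist}(x,y) + \mathrm{dist}(y,z)$ (triangle inequality)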

Proximity (similarity, distance) measures for different feature types

When do we need normalization?

Data Mining I: Frequent Itemsets and Association Rules Mining 2

Outline

Introduction

Basic concepts

Frequent Itemsets Mining (FIM) Apriori

Association Rules Mining

Apriori improvements

Closed frequent itemsets (CFI) & Maximal frequent itemsets (MFI)

Homework/tutorial

Things you should know from this lecture


Introduction

Frequent patterns are patterns that appear frequently in a dataset.

Patterns: items, substructures, subsequences

Typical example: Market basket analysis (transactions, items)

Customer transactions

Tid Transaction items

1 Butter, Bread, Milk, Sugar

2 Butter, Flour, Milk, Sugar

3 Butter, Eggs, Milk, Salt

4 Eggs

5 Butter, Flour, Milk, Salt, Sugar

We want to know: What products were often purchased together?

e.g.: beer and diapers? The parable of the beer and diapers:

http://www.theregister.co.uk/2006/08/15/beer_diapers/

Applications:

Improving store layout, Sales campaigns, Cross-marketing, Advertising

Applications beyond market basket data

Market basket analysis

Items are the products, transactions are the products bought by a customer during a supermarket visit

Example: {Diapers} → {Beer} (0.5%, 60%)

Similarly in an online shop, e.g. Amazon

Example: {Computer} → {MS office} (50%, 80%)

University library

Items are the books, transactions are the books borrowed by a student during the semester

Example: {Kumar book} → {Weka book} (60%, 70%)

University

Items are the courses, transactions are the courses that are chosen by a student

Example: {CS} ∧ {DB} → {Grade A} (1%, 75%)

and many other applications.

Also, frequent pattern mining is fundamental in other DM tasks.


Basic concepts: Items, itemsets and transactions 1/2

Items I: the set of items I = {i1, ..., im}

e.g. products in a supermarket, books in a bookstore

Itemset X: a set of items $X \subseteq I$

Itemset size: the number of items in the itemset

k-Itemset: an itemset of size k

e.g. {Butter, Bread, Milk, Sugar} is a 4-itemset, {Butter, Bread} is a 2-itemset

Transaction T: $T = (tid, X_T)$

e.g. products bought during a customer visit to the supermarket

Database DB: a set of transactions T

e.g. customers' purchases in a supermarket during the last week

Items in transactions or itemsets are lexicographically ordered

Itemset $X = (x_1, x_2, ..., x_k)$, such that $x_1 \le x_2 \le ... \le x_k$

Tid Transaction items

1 Butter, Bread, Milk, Sugar

2 Butter, Flour, Milk, Sugar

3 Butter, Eggs, Milk, Salt

4 Eggs

5 Butter, Flour, Milk, Salt, Sugar

Basic concepts: Items, itemsets and transactions 2/2

Let X be an itemset.

Itemset cover: the set of transactions containing X:

$cover(X) = \{tid \mid (tid, X_T) \in DB, X \subseteq X_T\}$

(absolute) Support / support count of X: number of transactions containing X

supportCount(X) = |cover(X)|

(relative) Support of X: fraction of transactions containing X (or the probability that a transaction contains X)

support(X) = P(X) = supportCount(X) / |DB|

Frequent itemset: An itemset X is frequent in DB if its support is no less than a minSupport threshold s:

$support(X) \ge s$

Lk: the set of frequent k-itemsets

L comes from Large (large itemsets), another term for frequent itemsets

Tid Transaction items

1 Butter, Bread, Milk, Sugar

2 Butter, Flour, Milk, Sugar

3 Butter, Eggs, Milk, Salt

4 Eggs

5 Butter, Flour, Milk, Salt, Sugar

Example: Itemsets

I = {Butter, Bread, Eggs, Flour, Milk, Salt, Sugar}

Tid Transaction items

1 Butter, Bread, Milk, Sugar

2 Butter, Flour, Milk, Sugar

3 Butter, Eggs, Milk, Salt

4 Eggs

5 Butter, Flour, Milk, Salt, Sugar

support(Butter) = 4/5=80%

cover(Butter) = {1,2,3,5}

support(Butter, Bread) = 1/5=20%

cover(Butter, Bread) = .

support(Butter, Flour) = 2/5=40%

cover(Butter, Flour) = .

support(Butter, Milk, Sugar) = 3/5=60%

Cover(Butter, Milk, Sugar)= .
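The cover and support definitions can be checked mechanically on the five-transaction table. The following is a minimal Python sketch; the names `DB`, `cover`, `support_count` and `support` are illustrative choices, not part of the lecture.

```python
# Transaction database from the example table: tid -> set of items.
DB = {
    1: {"Butter", "Bread", "Milk", "Sugar"},
    2: {"Butter", "Flour", "Milk", "Sugar"},
    3: {"Butter", "Eggs", "Milk", "Salt"},
    4: {"Eggs"},
    5: {"Butter", "Flour", "Milk", "Salt", "Sugar"},
}


def cover(itemset, db):
    """Tids of all transactions that contain every item of `itemset`."""
    items = set(itemset)
    return {tid for tid, transaction in db.items() if items <= transaction}


def support_count(itemset, db):
    """Absolute support: the size of the cover."""
    return len(cover(itemset, db))


def support(itemset, db):
    """Relative support: fraction of transactions containing `itemset`."""
    return support_count(itemset, db) / len(db)


for itemset in [{"Butter"}, {"Butter", "Bread"}, {"Butter", "Flour"},
                {"Butter", "Milk", "Sugar"}]:
    print(sorted(itemset), sorted(cover(itemset, DB)), support(itemset, DB))
```

Running the loop prints the cover and the relative support of each itemset from the example, which is a quick way to verify hand-computed values.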


The Frequent Itemsets Mining (FIM) problem

Problem 1: Frequent Itemsets Mining (FIM)

Given:

A set of items I

A transactions database DB over I

A minSupport threshold s

Goal: Find all frequent itemsets in DB, i.e.:

$\{X \subseteq I \mid support(X) \ge s\}$

transactionID items

2000 A,B,C

1000 A,C

4000 A,D

5000 B,E,F

Support of 1-Itemsets: (A): 75%, (B), (C): 50%, (D), (E), (F): 25%

Support of 2-Itemsets: (A, C): 50%, (A, B), (A, D), (B, C), (B, E), (B, F), (E, F): 25%

Support of 3-Itemsets: (A, B, C), (B, E, F): 25%

Support of 4-Itemsets: -

Support of 5-Itemsets: -

Support of 6-Itemsets: -
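The FIM problem statement can be turned directly into a brute-force enumeration. The sketch below is only an illustration of the definition on the four-transaction database (it is the naive approach, not Apriori); the function name `frequent_itemsets_naive` and the threshold 0.5 are assumptions made for this example.

```python
from itertools import combinations

# Four-transaction database from the slide: transactionID -> items.
DB = {
    2000: {"A", "B", "C"},
    1000: {"A", "C"},
    4000: {"A", "D"},
    5000: {"B", "E", "F"},
}


def frequent_itemsets_naive(db, min_support):
    """Enumerate every non-empty itemset over I and keep those with
    relative support >= min_support. Exponential in |I|."""
    items = sorted(set().union(*db.values()))
    n = len(db)
    result = {}
    for k in range(1, len(items) + 1):
        for candidate in combinations(items, k):
            count = sum(1 for t in db.values() if set(candidate) <= t)
            if count / n >= min_support:
                result[candidate] = count / n
    return result


# min_support = 0.5 is an assumed threshold; the slide leaves s open.
frequent = frequent_itemsets_naive(DB, 0.5)
for itemset, s in frequent.items():
    print(itemset, s)
```

With a threshold of 25% every itemset that occurs in at least one transaction is frequent, which matches the support listing on the slide.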

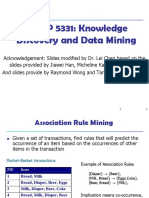

Basic concepts: association rules, support, confidence

Let X, Y be two itemsets: $X, Y \subseteq I$ and $X \cap Y = \emptyset$.

Association rules: rules of the form

X → Y

X: head or LHS (left-hand side) or antecedent of the rule; Y: body or RHS (right-hand side) or consequent of the rule

Support s of a rule: the percentage of transactions containing $X \cup Y$ in the DB, or the probability $P(X \cup Y)$

$support(X \rightarrow Y) = P(X \cup Y) = support(X \cup Y)$

Confidence c of a rule: the percentage of transactions containing $X \cup Y$ in the set of transactions containing X.

Or, in other words, the conditional probability that a transaction containing X also contains Y

$confidence(X \rightarrow Y) = P(Y \mid X) = \frac{P(X \cup Y)}{P(X)} = \frac{support(X \cup Y)}{support(X)}$

Support and confidence are measures of a rule's interestingness.

Rules are usually written as follows: X → Y (support, confidence)

Explain the rules:

{Diapers} → {Beer} (0.5%, 60%)

{Toast bread} → {Toast cheese} (50%, 90%)

Example: association rules

I = {Butter, Bread, Eggs, Flour, Milk, Salt, Sugar}

Tid Transaction items

1 Butter, Bread, Milk, Sugar

2 Butter, Flour, Milk, Sugar

3 Butter, Eggs, Milk, Salt

4 Eggs

5 Butter, Flour, Milk, Salt, Sugar

Sample rules:

{Butter} → {Bread} (20%, 25%)

support({Butter} ∪ {Bread}) = 1/5 = 20%

support({Butter}) = 4/5 = 80%

Confidence = 20%/80% = 1/4 = 25%

{Butter, Milk} → {Sugar} (60%, 75%)

support({Butter, Milk} ∪ {Sugar}) = 3/5 = 60%

support({Butter, Milk}) = 4/5 = 80%

Confidence = 60%/80% = 3/4 = 75%
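The two sample rules can be recomputed from the transaction table. This is a small Python sketch under the definitions given earlier (support of a rule is the support of the union, confidence is the ratio of support counts); `rule_metrics` is a hypothetical helper name.

```python
# The five transactions of the example table.
DB = [
    {"Butter", "Bread", "Milk", "Sugar"},
    {"Butter", "Flour", "Milk", "Sugar"},
    {"Butter", "Eggs", "Milk", "Salt"},
    {"Eggs"},
    {"Butter", "Flour", "Milk", "Salt", "Sugar"},
]


def support_count(itemset, db):
    """Number of transactions that contain all items of `itemset`."""
    items = set(itemset)
    return sum(1 for transaction in db if items <= transaction)


def rule_metrics(antecedent, consequent, db):
    """Return (support, confidence) of the rule antecedent -> consequent."""
    x, y = set(antecedent), set(consequent)
    if x & y:
        raise ValueError("antecedent and consequent must be disjoint")
    both = support_count(x | y, db)
    # Confidence is undefined if the antecedent never occurs.
    return both / len(db), both / support_count(x, db)


print(rule_metrics({"Butter"}, {"Bread"}, DB))
print(rule_metrics({"Butter", "Milk"}, {"Sugar"}, DB))
```

Working with support counts rather than percentages avoids rounding issues when the confidence is formed as a ratio.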

The Association Rules Mining (ARM) problem

Problem 2: Association Rules Mining (ARM)

Given:

A set of items I

A transactions database DB over I

A minSupport threshold s and a minConfidence threshold c

Goal: Find all association rules X → Y in DB w.r.t. minimum support s and minimum confidence c, i.e.:

$\{X \rightarrow Y \mid support(X \cup Y) \ge s, confidence(X \rightarrow Y) \ge c\}$

These rules are called strong.

transactionID items

2000 A,B,C

1000 A,C

4000 A,D

5000 B,E,F

Association rules:

A → C (Support = 50%, Confidence = 66.6%)

C → A (Support = 50%, Confidence = 100%)

Solving the problems

Problem 1 (FIM): Find all frequent itemsets in DB, i.e.: $\{X \subseteq I \mid support(X) \ge s\}$

Problem 2 (ARM): Find all association rules X → Y in DB, w.r.t. min support s and min confidence c, i.e.: $\{X \rightarrow Y \mid support(X \cup Y) \ge s, confidence(X \rightarrow Y) \ge c, X, Y \subseteq I \text{ and } X \cap Y = \emptyset\}$

Problem 1 is part of Problem 2:

Once we have support(X ∪ Y) and support(X), we can check if X → Y is strong.

2-step method to extract the association rules:

Step 1: Determine the frequent itemsets w.r.t. min support s (FIM problem):

Naïve algorithm: count the frequencies for all k-itemsets

Inefficient! There are $\binom{|I|}{k}$ such subsets

Total cost: $O(2^{|I|})$

=> Apriori algorithm and variants

Step 2: Generate the association rules w.r.t. min confidence c:

from frequent itemsets X, generate Y → (X - Y), $Y \subset X$, $Y \neq \emptyset$, $Y \neq X$

Step 1 (FIM) is the most costly, so the overall performance of an association rules mining algorithm is determined by this step.

Itemset lattice complexity

The number of itemsets can be really huge. Let us consider a small set of items: I = {A,B,C,D}

Number of 1-itemsets: $\binom{4}{1} = \frac{4!}{(4-1)! \cdot 1!} = \frac{4!}{3!} = 4$

Number of 2-itemsets: $\binom{4}{2} = \frac{4!}{(4-2)! \cdot 2!} = \frac{4!}{2! \cdot 2!} = 6$

Number of 3-itemsets: $\binom{4}{3} = \frac{4!}{(4-3)! \cdot 3!} = \frac{4!}{3!} = 4$

Number of 4-itemsets: $\binom{4}{4} = \frac{4!}{(4-4)! \cdot 4!} = 1$

Itemset lattice:

ABCD

ABC ABD ACD BCD

AB AC BC AD BD CD

A B C D

{}

In the general case, for |I| items, there exist:

$\binom{|I|}{1} + \binom{|I|}{2} + \dots + \binom{|I|}{|I|} = \sum_{k=1}^{|I|} \binom{|I|}{k} = 2^{|I|} - 1$

So, generating all possible combinations and computing their support is inefficient!
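The counting argument can be confirmed numerically. The sketch below uses Python's `math.comb` for the binomial coefficients and `itertools.combinations` to enumerate the lattice for I = {A, B, C, D}; the helper name `count_itemsets` is an illustrative choice.

```python
from itertools import combinations
from math import comb


def count_itemsets(items):
    """Number of k-itemsets for each k >= 1, and their total."""
    n = len(items)
    per_size = {k: comb(n, k) for k in range(1, n + 1)}
    return per_size, sum(per_size.values())


items = "ABCD"
per_size, total = count_itemsets(items)

# Explicit enumeration of all non-empty itemsets of the lattice.
all_itemsets = [
    "".join(c)
    for k in range(1, len(items) + 1)
    for c in combinations(items, k)
]

print(per_size, total, 2 ** len(items) - 1)
print(all_itemsets)
```

The total grows exponentially: for 20 items there are already `2 ** 20 - 1` (over a million) non-empty itemsets.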


Apriori algorithm [Agrawal & Srikant @VLDB94]

Idea: First determine frequent 1-itemsets, then frequent 2-itemsets and so on

Level-wise search (breadth-first search) over the itemset lattice:

ABCD

ABC ABD ACD BCD

AB AC BC AD BD CD

A B C D

{}

Method overview:

Initially, scan DB once to get the frequent 1-itemsets

Generate length (k+1) candidate itemsets from length k frequent itemsets

Test the candidates against DB (one scan)

Terminate when no frequent or candidate set can be generated

Apriori property

Naïve approach: Count the frequency of all k-itemsets X from I

generate $\sum_{k=1}^{|I|} \binom{|I|}{k} = 2^{|I|} - 1$ itemsets, i.e., $O(2^{|I|})$.

for each candidate itemset X, the algorithm evaluates whether X is frequent

To reduce complexity, the set of candidates should be as small as possible!

Downward closure property / Monotonic property / Apriori property of frequent itemsets:

If X is frequent, all its subsets $Y \subseteq X$ are also frequent.

e.g., if {beer, diaper, nuts} is frequent, so is {beer, diaper}

i.e., every transaction having {beer, diaper, nuts} also contains {beer, diaper}

similarly for {diaper, nuts}, {beer, nuts}

With minSupport = 2: supportCount({beer, diaper, nuts}) ≥ 2 implies supportCount({beer, diaper}) ≥ 2

On the contrary: When X is not frequent, all its supersets are not frequent and thus they should not be generated or tested. This reduces the set of candidate itemsets.

e.g., if {beer, diaper} is not frequent, {beer, diaper, nuts} cannot be frequent either

With minSupport = 2: supportCount({beer, diaper}) ≤ 1 implies supportCount({beer, diaper, nuts}) ≤ 1
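The downward closure property follows from the fact that every transaction containing X also contains each subset of X. A short Python sketch can make this concrete; the transactions below are hypothetical and chosen only for illustration, as is the helper name `subsets_at_least_as_frequent`.

```python
from itertools import combinations

# Hypothetical transactions (not from the lecture), for illustration only.
DB = [
    {"beer", "diaper", "nuts"},
    {"beer", "diaper", "nuts", "milk"},
    {"beer", "milk"},
    {"diaper"},
]


def support_count(itemset, db):
    """Number of transactions that contain all items of `itemset`."""
    items = set(itemset)
    return sum(1 for transaction in db if items <= transaction)


def subsets_at_least_as_frequent(itemset, db):
    """Check that no non-empty proper subset is rarer than the itemset."""
    n = support_count(itemset, db)
    return all(
        support_count(subset, db) >= n
        for size in range(1, len(itemset))
        for subset in combinations(sorted(itemset), size)
    )


x = {"beer", "diaper", "nuts"}
print(support_count(x, DB), support_count({"beer", "diaper"}, DB))
print(subsets_at_least_as_frequent(x, DB))
```

Read in the other direction, the same inequality justifies pruning: once a subset falls below minSupport, no superset can reach it.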

Illustration of the Apriori property


Search space and pruning

Let us consider the following transaction database

Transaction Database

{Chips, Pizza}

{Beer, Chips}

{Chips, Pizza, Wine}

{Wine}

and a minSupport threshold minSupp = 2

Search space and pruning: level-wise traversal

The itemset lattice is traversed level by level; support counts are filled in as they are computed ("?" marks itemsets not yet counted). Transaction Database: {Chips, Pizza}, {Beer, Chips}, {Chips, Pizza, Wine}, {Wine}; minSupp = 2.

Initial search space:

{}:?

{Beer}:? {Chips}:? {Pizza}:? {Wine}:?

{Beer,Chips}:? {Beer,Pizza}:? {Beer,Wine}:? {Chips,Pizza}:? {Chips,Wine}:? {Pizza,Wine}:?

{Beer,Chips,Pizza}:? {Beer,Chips,Wine}:? {Beer,Pizza,Wine}:? {Chips,Pizza,Wine}:?

{Beer,Chips,Pizza,Wine}:?

Step 1: {}:4

Step 2: {Chips}:3

Step 3: {Beer}:1, which is below minSupp, so all supersets of {Beer} are pruned from the search space.

Pruned search space:

{}:4

{Beer}:1 {Chips}:3 {Pizza}:? {Wine}:?

{Chips,Pizza}:? {Chips,Wine}:? {Pizza,Wine}:?

{Chips,Pizza,Wine}:?

Step 4: {Pizza}:2

Step 5: {Wine}:2

Step 6: {Chips,Pizza}:2

Step 7: {Chips,Wine}:1, which is below minSupp, so its superset {Chips,Pizza,Wine} is pruned from the search space.

Step 8: {Pizza,Wine}:1

Final result:

{}:4

{Beer}:1 {Chips}:3 {Pizza}:2 {Wine}:2

{Chips,Pizza}:2 {Chips,Wine}:1 {Pizza,Wine}:1

Search space and pruning

Border itemsets X: all proper subsets $Y \subset X$ are frequent, all proper supersets $Z \supset X$ are not frequent

Positive border: X is also frequent

Negative border: X is not frequent

Transaction Database: {Chips, Pizza}, {Beer, Chips}, {Chips, Pizza, Wine}, {Wine}

Full lattice with support counts:

{}:4

{Beer}:1 {Chips}:3 {Pizza}:2 {Wine}:2

{Beer,Chips}:1 {Beer,Pizza}:0 {Beer,Wine}:1 {Chips,Pizza}:2 {Chips,Wine}:1 {Pizza,Wine}:1

{Beer,Chips,Pizza}:0 {Beer,Chips,Wine}:0 {Beer,Pizza,Wine}:0 {Chips,Pizza,Wine}:1

{Beer,Chips,Pizza,Wine}:0

The border between frequent and non-frequent itemsets depends on the threshold; the slides show it for minSupport s = 2 and for minSupport s = 1.
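The positive and negative border can be computed directly from the definition. The following Python sketch does so for the four-transaction database with minSupport 2; it relies on downward closure, so only immediate subsets and supersets need to be inspected. All names (`lattice`, `positive_border`, and so on) are illustrative.

```python
from itertools import combinations

DB = [{"Chips", "Pizza"}, {"Beer", "Chips"}, {"Chips", "Pizza", "Wine"}, {"Wine"}]
MIN_SUPP = 2
ITEMS = sorted(set().union(*DB))


def support_count(itemset):
    """Number of transactions containing all items of `itemset`."""
    return sum(1 for transaction in DB if itemset <= transaction)


# Every node of the lattice, from {} up to the full item set.
lattice = [frozenset(c) for k in range(len(ITEMS) + 1)
           for c in combinations(ITEMS, k)]
frequent = {x for x in lattice if support_count(x) >= MIN_SUPP}


def immediate_subsets(x):
    return [x - {item} for item in x]


def immediate_supersets(x):
    return [x | {item} for item in ITEMS if item not in x]


# Frequent itemsets whose supersets are all non-frequent.
positive_border = {x for x in frequent
                   if all(s not in frequent for s in immediate_supersets(x))}
# Non-frequent itemsets whose subsets are all frequent.
negative_border = {x for x in lattice if x not in frequent
                   and all(s in frequent for s in immediate_subsets(x))}

print(sorted(sorted(x) for x in positive_border))
print(sorted(sorted(x) for x in negative_border))
```

Changing `MIN_SUPP` to 1 moves both borders, which mirrors the comparison of the two thresholds.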

Frequent itemsets generation: From Lk-1 to Ck to Lk

Lk: frequent itemsets of size k; Ck: candidate itemsets of size k

A 2-step process:

Join step: generate candidates Ck

Ck is generated by self-joining Lk-1: Ck = Lk-1 * Lk-1

Two (k-1)-itemsets p, q are joined if they agree in the first (k-2) items

Prune step: prune Ck and return Lk

Ck is a superset of Lk

Naïve idea: count the support for all candidate itemsets in Ck. |Ck| might be large!

Use Apriori property: a candidate k-itemset that has some non-frequent (k-1)-subset cannot be frequent

Prune all those k-itemsets that have some (k-1)-subset that is not frequent (i.e. does not belong to Lk-1)

Due to the level-wise approach of Apriori, we only need to check (k-1)-subsets

For the remaining itemsets in Ck, prune by support count (DB)

Example:

Let L3 = {abc, abd, acd, ace, bcd}

- Join step: C4 = L3 * L3

C4 = {abc*abd = abcd; acd*ace = acde}

- Prune step (apriori-based): acde is pruned since cde is not frequent

- Prune step (DB-based): check abcd support in the DB
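The join and apriori-based prune steps can be sketched in a few lines of Python. The function below is a minimal illustration, assuming itemsets are represented as sorted tuples; `apriori_gen` is a name chosen here, and the DB-based prune (support counting) is deliberately left out.

```python
from itertools import combinations


def apriori_gen(prev_frequent):
    """Generate candidate k-itemsets from the frequent (k-1)-itemsets.

    `prev_frequent` is a collection of sorted tuples of equal length.
    Returns (joined, candidates): the result of the self-join, and what
    remains after the apriori-based prune.
    """
    prev = sorted(prev_frequent)
    if not prev:
        return [], []
    prev_set = set(prev)
    k = len(prev[0]) + 1

    # Join step: p and q are joined if they agree on the first k-2 items.
    joined = []
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            if p[:-1] == q[:-1]:
                joined.append(p + (q[-1],))

    # Prune step: every (k-1)-subset must itself be frequent.
    candidates = [
        c for c in joined
        if all(sub in prev_set for sub in combinations(c, k - 1))
    ]
    return joined, candidates


L3 = [tuple("abc"), tuple("abd"), tuple("acd"), tuple("ace"), tuple("bcd")]
joined, C4 = apriori_gen(L3)
print(["".join(c) for c in joined])
print(["".join(c) for c in C4])
```

On the example L3, the join yields abcd and acde, and the prune removes acde because not all of its 3-subsets are in L3; abcd still has to be checked against the database.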

Apriori algorithm (pseudo-code)

Ck: candidate itemsets of size k

Lk: frequent itemsets of size k

L1 = {frequent items};
for (k = 1; Lk != ∅; k++) do begin
    Ck+1 = candidates generated from Lk;    // candidate generation (self-join, apriori property)
    for each transaction t in database do    // DB scan
        increment the count of all candidates in Ck+1 that are contained in t    // subset function
    Lk+1 = candidates in Ck+1 with min_support    // prune by support count (ask DB)
end
return ∪k Lk;

Subset function:

- For each transaction T in DB, the subset function must check for all candidates in the candidate set Ck whether they are part of the transaction T

- Organize candidates Ck in a hash tree

Example

Database TDB (Supmin = 2):

Tid Items

10 A, C, D

20 B, C, E

30 A, B, C, E

40 B, E

1st scan, C1: {A}:2 {B}:3 {C}:3 {D}:1 {E}:3

L1: {A}:2 {B}:3 {C}:3 {E}:3

C2 (candidates generated from L1): {A, B} {A, C} {A, E} {B, C} {B, E} {C, E}

2nd scan, C2 with counts: {A, B}:1 {A, C}:2 {A, E}:1 {B, C}:2 {B, E}:3 {C, E}:2

L2: {A, C}:2 {B, C}:2 {B, E}:3 {C, E}:2

C3: {B, C, E}

3rd scan, L3: {B, C, E}:2
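The worked example can be reproduced with a compact Python version of the level-wise loop. This is a sketch rather than a tuned implementation: candidates are matched against each transaction with a plain subset test instead of a hash tree, and the function name `apriori` and its parameters are choices made for this example.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Return {itemset (sorted tuple): support count} for all frequent itemsets."""
    transactions = [frozenset(t) for t in transactions]

    # 1st scan: count single items and keep the frequent ones (L1).
    counts = {}
    for t in transactions:
        for item in t:
            counts[(item,)] = counts.get((item,), 0) + 1
    level = {c: n for c, n in counts.items() if n >= min_count}
    frequent = dict(level)

    while level:
        prev = sorted(level)
        k = len(prev[0]) + 1
        # Candidate generation: self-join plus apriori-based prune.
        candidates = []
        for i, p in enumerate(prev):
            for q in prev[i + 1:]:
                if p[:-1] == q[:-1]:
                    cand = p + (q[-1],)
                    if all(sub in level for sub in combinations(cand, k - 1)):
                        candidates.append(cand)
        # One DB scan per level: count the surviving candidates.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if t.issuperset(c):
                    counts[c] += 1
        # Prune by support count to obtain the next level.
        level = {c: n for c, n in counts.items() if n >= min_count}
        frequent.update(level)
    return frequent


TDB = [{"A", "C", "D"}, {"B", "C", "E"}, {"A", "B", "C", "E"}, {"B", "E"}]
result = apriori(TDB, min_count=2)
for itemset in sorted(result, key=lambda c: (len(c), c)):
    print(itemset, result[itemset])
```

The loop stops after the third level because {B, C, E} alone cannot be joined with anything, so no 4-item candidates are generated.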

Apriori overview

Advantages:

Apriori property

Easy implementation (in parallel also)

Disadvantages:

It requires up to |I| database scans

It assumes that the DB is in memory

Complexity depends on

minSupport threshold

Number of items (dimensionality)

Number of transactions

Average transaction length


Association Rules Mining

(Recall the) 2-step method to extract the association rules:

Step 1: Determine the frequent itemsets w.r.t. min support s: FIM problem (Apriori)

Step 2: Generate the association rules w.r.t. min confidence c.

Regarding step 2, the following method is followed:

For every frequent itemset X

for every subset Y of X with $Y \neq \emptyset$, $Y \neq X$, the rule Y → (X - Y) is formed

Remove rules that violate min confidence c

$confidence(Y \rightarrow (X - Y)) = \frac{support\_count(X)}{support\_count(Y)}$

Store the frequent itemsets and their supports in a hash table: no database access!

Example: Let X = {1,2,3} be frequent. There are 6 candidate rules that can be generated from X:

{1,2} → 3

{1,3} → 2

{2,3} → 1

{1} → {2,3}

{2} → {1,3}

{3} → {1,2}

We can decide if they are strong using the support counts (already computed during the FIM step)
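Rule generation needs only the table of frequent itemsets and their support counts. The Python sketch below illustrates this with the frequent itemsets of the Apriori worked example (database TDB, Supmin = 2); the function name `generate_rules` and the minimum confidence of 0.8 are assumptions made for the illustration.

```python
from itertools import combinations

# Frequent itemsets and support counts from the Apriori worked example.
SUPPORT_COUNT = {
    frozenset("A"): 2, frozenset("B"): 3, frozenset("C"): 3, frozenset("E"): 3,
    frozenset("AC"): 2, frozenset("BC"): 2, frozenset("BE"): 3, frozenset("CE"): 2,
    frozenset("BCE"): 2,
}


def generate_rules(support_counts, min_conf):
    """Form Y -> (X - Y) for every frequent X and non-empty proper subset Y,
    keeping the rules whose confidence reaches min_conf."""
    rules = []
    for itemset, count in support_counts.items():
        for size in range(1, len(itemset)):
            for antecedent in combinations(sorted(itemset), size):
                y = frozenset(antecedent)
                # Y is frequent by downward closure, so the lookup succeeds
                # without touching the database.
                confidence = count / support_counts[y]
                if confidence >= min_conf:
                    rules.append((y, itemset - y, confidence))
    return rules


rules = generate_rules(SUPPORT_COUNT, min_conf=0.8)  # assumed threshold
for lhs, rhs, conf in rules:
    print(sorted(lhs), "->", sorted(rhs), round(conf, 2))
```

For the 3-itemset {B, C, E} this inspects exactly six candidate rules, matching the count given for X = {1,2,3}.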

Pseudocode


Example

Transaction database

| tid | XT |
|---|---|
| 1 | {Beer, Chips, Wine} |
| 2 | {Beer, Chips} |
| 3 | {Pizza, Wine} |
| 4 | {Chips, Pizza} |

I = {Beer, Chips, Pizza, Wine}

| Itemset | Cover | Sup. | Freq. |
|---|---|---|---|
| {} | {1,2,3,4} | 4 | 100 % |
| {Beer} | {1,2} | 2 | 50 % |
| {Chips} | {1,2,4} | 3 | 75 % |
| {Pizza} | {3,4} | 2 | 50 % |
| {Wine} | {1,3} | 2 | 50 % |
| {Beer, Chips} | {1,2} | 2 | 50 % |
| {Beer, Wine} | {1} | 1 | 25 % |
| {Chips, Pizza} | {4} | 1 | 25 % |
| {Chips, Wine} | {1} | 1 | 25 % |
| {Pizza, Wine} | {3} | 1 | 25 % |
| {Beer, Chips, Wine} | {1} | 1 | 25 % |

| Rule | Sup. | Freq. | Conf. |
|---|---|---|---|
| {Beer} → {Chips} | 2 | 50 % | 100 % |
| {Beer} → {Wine} | 1 | 25 % | 50 % |
| {Chips} → {Beer} | 2 | 50 % | 67 % |
| {Pizza} → {Chips} | 1 | 25 % | 50 % |
| {Pizza} → {Wine} | 1 | 25 % | 50 % |
| {Wine} → {Beer} | 1 | 25 % | 50 % |
| {Wine} → {Chips} | 1 | 25 % | 50 % |
| {Wine} → {Pizza} | 1 | 25 % | 50 % |
| {Beer, Chips} → {Wine} | 1 | 25 % | 50 % |
| {Beer, Wine} → {Chips} | 1 | 25 % | 100 % |
| {Chips, Wine} → {Beer} | 1 | 25 % | 100 % |
| {Beer} → {Chips, Wine} | 1 | 25 % | 50 % |
| {Wine} → {Beer, Chips} | 1 | 25 % | 50 % |

Evaluating Association Rules 1/2

Interesting and misleading association rules

Example:

Database on the behavior of students in a school with 5,000 students

Itemsets:

60% of the students play Soccer,

75% of the students eat chocolate bars

40% of the students play Soccer and eat chocolate bars

Association rules: {Play Soccer} → {Eat chocolate bars}, confidence = 40%/60% = 67%

The rule has a high confidence, however:

{Eat chocolate bars}, support = 75%, regardless of whether they play soccer.

Thus, knowing that one is playing soccer decreases his/her probability of eating chocolate (from 75% to 67%)

Therefore, the rule {Play Soccer} → {Eat chocolate bars} is misleading despite its high confidence

Evaluating Association Rules 2/2

Task: Filter out misleading rules

Let {A} → {B}

Measure of interestingness of a rule: interest

$interest = \frac{P(A \cup B)}{P(A)} - P(B)$

the higher the value, the more interesting the rule is

Measure of dependent/correlated events: lift

$lift = \frac{P(A \cup B)}{P(A) P(B)} = \frac{support(A \cup B)}{support(A) \cdot support(B)}$

the ratio of the observed support to that expected if A and B were independent.

Measuring Quality of Association Rules

For a rule A → B

Support: $P(A \cup B)$

e.g. support(milk, bread, butter) = 20%, i.e. 20% of the transactions contain these

Confidence: $\frac{P(A \cup B)}{P(A)}$

e.g. confidence(milk, bread → butter) = 50%, i.e. 50% of the times a customer buys milk and bread, butter is bought as well.

Lift: $\frac{P(A \cup B)}{P(A) P(B)}$

e.g. lift(milk, bread → butter) = 20%/(40%*40%) = 1.25. The observed support is 20%, the expected (if they were independent) is 16%.
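The quality measures are simple ratios of supports, so the two numeric examples from this part of the lecture can be recomputed in a few lines. The Python sketch below takes the supports as plain probabilities; the `interest` function assumes the common definition P(A ∪ B)/P(A) − P(B), and all function names are illustrative.

```python
def confidence(p_ab, p_a):
    """P(A and B in the same transaction) / P(A)."""
    return p_ab / p_a


def interest(p_ab, p_a, p_b):
    """Confidence minus the baseline P(B); assumed definition."""
    return p_ab / p_a - p_b


def lift(p_ab, p_a, p_b):
    """Observed joint support over the support expected under independence."""
    return p_ab / (p_a * p_b)


# Soccer / chocolate example: P(A) = 60%, P(B) = 75%, P(A, B) = 40%.
soccer = dict(p_ab=0.40, p_a=0.60, p_b=0.75)
print(confidence(soccer["p_ab"], soccer["p_a"]))
print(interest(**soccer))
print(lift(**soccer))

# Milk, bread -> butter example: P(A) = 40%, P(B) = 40%, P(A, B) = 20%.
print(lift(p_ab=0.20, p_a=0.40, p_b=0.40))
```

A lift below 1 (or a negative interest) signals that the antecedent makes the consequent less likely, which is the case for the soccer rule despite its 67% confidence.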


Homework/ tutorial

Try Apriori and association rules mining in Weka

E.g., use weka installation folder/data/weather.nominal.arff or

For other datasets to try (including a chess dataset)

http://fimi.ua.ac.be/data/

You need to convert them to .arff if you want to use them for Weka

2nd tutorial follows

Readings:

Tan P.-N., Steinbach M., Kumar V., Introduction to Data Mining, Chapter 6.

Han J., Kamber M., Pei J., Data Mining: Concepts and Techniques, 3rd ed., Morgan Kaufmann, 2011 (Chapter 6)

Apriori algorithm: Rakesh Agrawal and R. Srikant, Fast Algorithms for Mining Association Rules, VLDB'94.


Things you should know from this lecture

Frequent Itemsets, support, minSupport, itemsets lattice

Association Rules, support, minSupport, confidence, minConfidence, strong rules

Frequent Itemsets Mining: computation cost, negative border, downward closure property

Apriori: join step, prune step, DB scans

Association rules extraction from frequent itemsets

Quality measures for association rules


Acknowledgement

The slides are based on

KDD I lecture at LMU Munich (Johannes Aßfalg, Christian Böhm, Karsten Borgwardt, Martin Ester, Eshref Januzaj, Karin Kailing, Peer Kröger, Eirini Ntoutsi, Jörg Sander, Matthias Schubert, Arthur Zimek, Andreas Züfle)

Introduction to Data Mining book slides at http://www-users.cs.umn.edu/~kumar/dmbook/

Pedro Domingos' Machine Learning course slides at the University of Washington

Machine Learning book by T. Mitchell, slides at http://www.cs.cmu.edu/~tom/mlbook-chapter-slides.html

Old Data Mining course slides at LUH by Prof. Udo Lipeck


- Concepts and Techniques: Data MiningDocument65 pagesConcepts and Techniques: Data MiningLinh DinhNo ratings yet

- Association Analysis Basic Concepts Introduction To Data Mining, 2 Edition by Tan, Steinbach, Karpatne, KumarDocument104 pagesAssociation Analysis Basic Concepts Introduction To Data Mining, 2 Edition by Tan, Steinbach, Karpatne, KumarChristine CheongNo ratings yet

- Final - Association and CorelationDocument24 pagesFinal - Association and CorelationNEEL GHADIYANo ratings yet

- M2S2Document7 pagesM2S2Atharva PatilNo ratings yet

- Datamining Lect2 FrequentDocument59 pagesDatamining Lect2 FrequentNguyễn Mạnh HùngNo ratings yet

- Tutorial 04Document29 pagesTutorial 04Nehal PatodiNo ratings yet

- AssociationDocument37 pagesAssociationPrashast SinghNo ratings yet

- Unit-5: Concept Description and Association Rule MiningDocument39 pagesUnit-5: Concept Description and Association Rule MiningNayan PatelNo ratings yet

- Investment Guarantees: Modeling and Risk Management for Equity-Linked Life InsuranceFrom EverandInvestment Guarantees: Modeling and Risk Management for Equity-Linked Life InsuranceRating: 3.5 out of 5 stars3.5/5 (2)
