MSC INTERNET SYSTEMS ENGINEERING

COMPARATIVE STUDIES OF DATA MINING TECHNIQUES

BY

MAREPALLY ARUNKUMAR REDDY

0853756

SUBMITTED TO

UNIVERSITY OF EAST LONDON

0853756 1 DATA MINING

Table of contents

1. Abstract

2. Introduction

2.1 Introduction to WEKA

2.2 Data Mining

2.3 Implementation of Algorithms

2.3.1 Dataset Introduction: Habermans Survival Data

2.3.2 Data Cleaning

2.3.3 Dataset Transformation

2.3.4 Attribute Description

2.3.5 Class Description

2.3.6 Input Encoding/Input Representation

2.3.7 Input Type

2.4 Implementing the Algorithms

2.4.1 Reasons why WEKA is chosen

2.4.1.1 Implementation of CRUISE

2.4.2 Implementing Algorithms in WEKA

2.4.3 In-depth Analysis of Results of three Algorithms J48, Multilayer

Perceptron and Naïve Bayes

2.4.3.1 Evaluation of J48:

2.4.3.2 Calculation of Accuracy & Confusion Matrix in J48

2.4.3.3 Evaluation of Multi Layer Perceptron

2.4.3.4 Evaluation of Naïve Bayes

2.5 Overall Accuracy rate of the algorithms implemented

2.6 Difficulties faced while using WEKA

3. Research analysis

3.1 Introduction

3.2 Decision Tree

3.3 Neural Networks

3.4 Comparison of J48 Decision Tree and Neural Networks-Multilayer

Perceptron

3.5 Results based on J48 and Multilayer Perceptron

3.6 Conclusion

Comparative Studies of Data Mining Techniques

1. Abstract:

This paper discusses the implementation of data mining techniques using WEKA. It is divided into two parts. The first part describes how three algorithms, J48 (decision tree), Naïve Bayes and Multilayer Perceptron (neural network), are applied to a dataset. The algorithms are compared on accuracy rate, correctly classified instances and results before and after pruning. The first part also includes tree visualizations, step-by-step screenshots of the algorithms and graphs.

The second part compares and evaluates the J48 classifier, the Naïve Bayes classifier and the Multilayer Perceptron neural network. The paper also briefly describes how the accuracy rate is calculated for the algorithms.

2. Introduction:

The main objective of this paper is to examine tools and techniques in data mining by classifying a test dataset. The results are reported in terms of accuracy and the numbers of correctly and incorrectly classified instances for that dataset.

2.1 Introduction to WEKA:

WEKA is a software tool for applying data mining techniques. It was developed at the University of Waikato, New Zealand. I have used it to test the dataset with three classifiers: the J48 decision tree, the Multilayer Perceptron (a neural network) and Naïve Bayes (a probability-based algorithm).

2.2 Data Mining:

Data mining is defined as acquiring information or knowledge from large amounts of data. In data mining, algorithms that are often referred to as intelligent algorithms are applied to the data in order to find patterns.

Keywords: Data mining, Multilayer Perceptron (Neural Networks), J48 (Decision Trees)

2.3 Implementation of Algorithms:

This section describes how the decision tree, Naïve Bayes and neural network algorithms are applied to the dataset and how their results compare with each other.

2.3.1 Dataset Introduction: Haberman's Survival Data

The Haberman's Survival Data dataset was chosen from the archives of the UCI Machine Learning Repository [2]. It is a multivariate dataset whose default task is classification. It consists of 306 instances, each with 3 attributes and one class attribute. All instances, attributes and class values are stored in CSV (comma-separated values) format.

2.3.2 Dataset Cleaning:

According to the UCI archives, some datasets contain missing or inconsistent values, but Haberman's Survival Data has no missing values. Where a dataset does have missing values, they are represented as '?' in the data.

2.3.3 Dataset Transformation:

The downloaded dataset is first stored in a text document. The data is saved in the following format:

@Relation 'Dataset name'
@Attribute '------'
@Attribute '------'
@data
'Downloaded data'

The file is then saved in ARFF (.arff, Attribute-Relation File Format) format so that it can be used in WEKA, and is opened in WEKA in order to find the patterns.
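The manual conversion described above can also be sketched in code. The following is a minimal illustration only, not the procedure used in this coursework: the function name `csv_to_arff` is hypothetical, and the relation and attribute names are assumed to match the ARFF header used for this dataset.

```python
def csv_to_arff(csv_text, relation, attributes):
    """Return ARFF text for comma-separated rows.

    attributes is a list of (name, type) pairs, e.g. ("PatientAge", "NUMERIC").
    """
    lines = [f"@RELATION {relation}", ""]
    for name, arff_type in attributes:
        lines.append(f"@ATTRIBUTE {name} {arff_type}")
    lines += ["", "@DATA"]
    for row in csv_text.splitlines():
        if not row.strip():
            continue  # skip blank lines in the downloaded file
        values = [v.strip() for v in row.split(",")]
        if len(values) != len(attributes):
            raise ValueError(f"expected {len(attributes)} values, got {len(values)}: {row!r}")
        lines.append(",".join(values))
    return "\n".join(lines) + "\n"


# Assumed attribute names and types for Haberman's Survival Data.
ATTRIBUTES = [
    ("PatientAge", "NUMERIC"),
    ("operationYear", "NUMERIC"),
    ("NumberOfNodes", "NUMERIC"),
    ("class", "{1,2}"),
]

sample_csv = "30,64,1,1\n30,62,3,1\n"  # first two rows of the dataset
arff_text = csv_to_arff(sample_csv, "HabermansSurvivalData", ATTRIBUTES)
print(arff_text)
```

Writing `arff_text` to a file with the .arff extension would give a file that can be opened in WEKA, assuming the header matches the data columns.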

2.3.4 Attribute Description:

Attribute        Description                                   Type
Patient age      Patient's age at the time of operation.       Continuous
Operation year   Patient's year of operation (1958-1970).      Discrete
Number of nodes  Number of positive axillary nodes detected.   Discrete

2.3.5 Class Description:

Class Description

Survival status Survival status of the patient.

2.3.6 Input Encoding/ Input Representation:

The attributes in the dataset include both discrete and continuous data types. Put simply, data is continuous if it can take any value within a range, for example age or experience. Data is discrete if it takes one of a limited set of distinct values, such as gender or id. The chosen dataset has a continuous attribute, which can affect the accuracy and performance of the classifiers. Continuous data can be converted into discrete data by applying the supervised Discretize filter in WEKA.
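To illustrate what converting continuous data into discrete data means, here is a minimal sketch of equal-width binning. This is a simpler, unsupervised technique and is not the supervised method that WEKA applies; the function name and the sample ages are hypothetical.

```python
def equal_width_bins(values, n_bins):
    """Map each numeric value to a bin index in 0..n_bins-1 using equal-width intervals."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)  # all values identical: a single bin
    width = (hi - lo) / n_bins
    # The maximum value would fall just past the last interval, so clamp it.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


ages = [30, 34, 41, 52, 60, 83]  # hypothetical patient ages
print(equal_width_bins(ages, 3))
```

Each age is replaced by the index of the interval it falls into, so a classifier then sees a small set of categories instead of a continuous range.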

2.3.7 Input Type:

The input file opened in WEKA should be in .arff (Attribute-Relation File Format) format.

Fig: ARFF viewer of the dataset.

2.4 Implementing the Algorithms:

2.4.1 Reasons why WEKA is chosen:

In this coursework I have applied the algorithms to a particular dataset using the WEKA software. The main reason for choosing it is that it is user friendly for beginners. It provides ready-made classification, clustering and association algorithms, which are run on an input file that must be in .arff format. Most data mining tools operate on CSV files, but for WEKA the file has to be modified by adding a description of the dataset.

Data format for WEKA: input file

@RELATION HabermansSurvivalData
@ATTRIBUTE PatientAge NUMERIC
@ATTRIBUTE operationYear NUMERIC
@ATTRIBUTE NumberOfNodes NUMERIC
@ATTRIBUTE class {1, 2}
@DATA
30, 64, 1, 1
30, 62, 3, 1
(and so on)

Another tool that I have compared with WEKA is CRUISE.

2.4.1.1 Implementation of CRUISE:

CRUISE is a data mining tool mainly used for building decision tree classifiers. CRUISE takes its input as two files, whereas WEKA needs just one. The two files are a description file and a data file, as shown in Fig 2 below.

Data format for CRUISE: 1st input file (description file)

Habermans.txt
? (If there are any missing values?)
Column, varname, vartype
1, PatientAge, n
2, operationYear, n
3, NumberOfNodes, n
4, class, d

2nd input file (data file)

30, 64, 1, 1
30, 62, 3, 1
(and so on)

Fig 2: CRUISE input format.

2.4.2 Implementing algorithms in WEKA:

The dataset saved in .arff format is opened in WEKA in order to apply the classification algorithms.

1. After opening the file, if there are too many continuous attributes, apply the supervised Discretize filter found under filters.

Fig: Discretize procedure for the dataset.

2. After applying the filter, click on the Classify tab to choose the algorithm. Click the 'Choose' button under Classifier, select J48 under trees and click Start.

Fig: choosing J48 Algorithm

3. Three algorithms are used in this coursework: J48 (decision tree), Naïve Bayes and Multilayer Perceptron (neural network).

4. After J48, the Multilayer Perceptron is run. The Multilayer Perceptron is found under functions.

Fig: Implementation of Multi layer Perceptron.

5. After the Multilayer Perceptron, the Naïve Bayes algorithm is applied to the dataset.

Fig: Implementation of Naïve Bayes algorithm

2.4.3 In-depth analysis of Results of three algorithms J48, Multi Layer Perceptron and Naïve Bayes:

After an algorithm is run, WEKA produces output describing its accuracy and performance on the dataset. The results are evaluated on the basis of correctly classified instances and incorrectly classified (misclassified) instances.

2.4.3.1 Evaluation of J48:

The J48 results are compared across the 'Test Options' with which the dataset can be tested. The accuracy differs depending on the 'Test Option' used. The evaluation is also done on both raw and discrete data.

Data type      J48 (binary split = False)   J48 (binary split = True)
Raw data       71.8954%                     71.8954%
Discrete data  72.2222%                     72.2222%

Table: Accuracy Rate in J48.

Algorithm  Test option                  Result
J48        Use training set             73.5294%
J48        Cross-validation (10 folds)  72.2222%
J48        Percentage split (66%)       76.9231%

Table: Results based on Test options

Algorithm  Test option                  Confusion matrix
J48        Use training set             225    0
                                         81    0
J48        Cross-validation (10 folds)  210   15
                                         70   11
J48        Percentage split (66%)        71   12
                                         12    9

Table: Confusion Matrix based on Test Options
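The cross-validation test option divides the 306 instances into 10 folds and tests on each fold in turn while training on the rest. The sketch below shows only the basic splitting idea, under the assumption of contiguous folds with no shuffling. WEKA additionally randomizes the data and stratifies the folds by class, which this sketch does not attempt; the function name is hypothetical.

```python
def k_fold_indices(n_instances, k):
    """Yield (train_indices, test_indices) for k contiguous, nearly equal folds."""
    # The first (n_instances % k) folds receive one extra instance.
    fold_sizes = [n_instances // k + (1 if i < n_instances % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_instances))
        yield train, test
        start += size


folds = list(k_fold_indices(306, 10))
print([len(test) for _, test in folds])
```

Every instance is used for testing exactly once, which is why the cross-validation confusion matrix covers all 306 instances.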

2.4.3.2 Calculation of Accuracy and Confusion Matrix in J48:

Total number of instances: 306

Accuracy rate = number of correctly classified instances / number of instances

Accuracy rate of J48 (test option: use training set) = 225/306 = 0.735294 = 73.5294% (as shown in the table)

The accuracy rate of J48 with cross-validation is calculated in the same way: (210 + 11)/306 = 221/306 = 0.722222 = 72.2222%.

With Percentage Split (66%), the accuracy rate is calculated on the instances that remain after 66% have been used for training. The remaining 34% of the total number of instances (306) is 104, so the number of instances used is 104:

Accuracy rate = 80/104 = 0.769231 = 76.9231% (as shown in the table)

The rows of the J48 confusion matrix (cross-validation) add up to the number of instances in each class:

  a     b
210    15
 70    11

  A      B    Result
210  +  15    225
 70  +  11     81
              306 (total number of instances)
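The calculations above can be checked with a short script. This is a minimal sketch: the function names are hypothetical, the matrices are copied from the confusion matrix table, and the rounding rule used for the 66% split is an assumption about how the training size is obtained.

```python
def accuracy(matrix):
    """Accuracy = correctly classified instances (the diagonal) / all instances."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def row_totals(matrix):
    """Number of instances that actually belong to each class."""
    return [sum(row) for row in matrix]


# Confusion matrices for J48 under each test option (rows are the actual classes).
training_set = [[225, 0], [81, 0]]
cross_validation = [[210, 15], [70, 11]]
percentage_split = [[71, 12], [12, 9]]

for name, matrix in [
    ("Use training set", training_set),
    ("Cross-validation (10 folds)", cross_validation),
    ("Percentage split (66%)", percentage_split),
]:
    print(f"{name}: {accuracy(matrix) * 100:.4f}% of {sum(row_totals(matrix))} instances")

# Size of the test portion for a 66% split, assuming the training size is
# rounded to the nearest whole instance.
total_instances = 306
train_size = round(total_instances * 66 / 100)
test_size = total_instances - train_size
print(train_size, test_size)
```

The three printed percentages match the values in the test options table, and the test portion of the 66% split comes to 104 instances.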

2.4.3.3 Evaluation of Multi Layer Perceptron:

The Multilayer Perceptron is evaluated on both raw and discrete data, and the results are compared on the basis of accuracy and the time taken to build the model.

Data type      Multilayer Perceptron accuracy   Time taken to build
Raw data       72.8758%                         0.47 seconds
Discrete data  73.5294%                         0.42 seconds

Table: Accuracy of Multi Layer Perceptron.

After the accuracy has been calculated and compared, the classifier errors are examined. The accuracy rate is higher for discrete data than for raw data, because supervised discretization makes use of the known class labels. The time taken to build the model also improves.

The classifier error visualization gives a graph that shows the wrongly classified instances. Clicking on an instance in the graph displays "instance info", which shows the number of the wrongly classified instance and its predicted class. Changing the class of such instances in the data could increase the accuracy by a certain amount.

2.4.3.4 Evaluation of Naïve Bayes:

The Naïve Bayes algorithm is run on both the raw and the discrete data. The raw data is converted to discrete data with the supervised filter.

Data type  Supervised discretization = False   Supervised discretization = True
Raw        74.8366%                            72.8758%
Discrete   72.8758%                            72.8758%

Table: accuracy rate of Naïve Bayes.

With Naïve Bayes, the accuracy rate decreases when the data is discretized with the supervised filter. When the data is discretized with the unsupervised filter, the accuracy rate increases (76.7974%).

2.5 Overall Accuracy rate of the algorithms implemented:

The table here shows the accuracy rates of the algorithms chosen to evaluate the dataset (bs = binary split, disc = supervised discretization).

Data type                      J48 (bs=F)  Multilayer Perceptron  Naïve Bayes (disc=F)  J48 (bs=T)  Naïve Bayes (disc=T)
Raw                            71.8954%    72.8758%               74.8366%              71.8954%    72.8758%
Discrete                       72.2222%    73.5294%               72.8758%              72.2222%    72.8758%
Time to build (raw data)       0 sec       0.47 sec               0 sec                 0.02 sec    0 sec
Time to build (discrete data)  0 sec       0.42 sec               0 sec                 0.02 sec    0 sec

Table: percentage of instances classified correctly.

The table shows that, for raw data, Naïve Bayes with supervised discretization set to false has the highest accuracy rate (74.8366%). When the raw data is discretized, the highest accuracy rate is shown by the Multilayer Perceptron (73.5294%). Overall there is not much difference between the accuracy rates of the algorithms. Discretizing the raw data gives only a small increase, and only for J48 and the Multilayer Perceptron.
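The comparison can be reproduced from the figures in the table. The sketch below is illustrative only: the dictionary layout and the function name are hypothetical, and the values are the accuracy percentages reported above.

```python
# Accuracy (%) per data type and classifier, copied from the overall table.
ACCURACY_PCT = {
    "Raw": {
        "J48 (bs=F)": 71.8954,
        "Multilayer Perceptron": 72.8758,
        "Naive Bayes (disc=F)": 74.8366,
        "J48 (bs=T)": 71.8954,
        "Naive Bayes (disc=T)": 72.8758,
    },
    "Discrete": {
        "J48 (bs=F)": 72.2222,
        "Multilayer Perceptron": 73.5294,
        "Naive Bayes (disc=F)": 72.8758,
        "J48 (bs=T)": 72.2222,
        "Naive Bayes (disc=T)": 72.8758,
    },
}


def best_classifier(scores):
    """Return (name, accuracy) of the highest-scoring classifier."""
    name = max(scores, key=scores.get)
    return name, scores[name]


for data_type, scores in ACCURACY_PCT.items():
    name, value = best_classifier(scores)
    spread = max(scores.values()) - min(scores.values())
    print(f"{data_type}: best = {name} ({value}%), spread = {spread:.4f} points")
```

The spread between the best and worst classifier is about 2.9 percentage points for raw data and about 1.3 for discrete data, which supports the observation that the algorithms differ only slightly.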

2.6 Difficulties faced while using WEKA:

• Initially, saving the downloaded file as a text document and then saving it in .arff format produced some errors.

• The errors were resolved by saving the data into an existing .arff file.

• WEKA is easier to use than CRUISE, because WEKA needs only one input file whereas CRUISE needs two (a data file and a description file).

3. Research analysis:

This section gives a detailed analysis of the two data mining techniques, based on the results obtained.

3.1 Introduction:

My chosen dataset comes from the medical and health care industry, which is growing day by day along with patients' electronic health records. In this industry, data mining can be described as continuous research into new diseases and their cures. Here research means acquiring, retrieving and gaining knowledge from small to large amounts of available data.

To find patterns in the data I have used two data mining techniques, decision trees and neural networks. This section compares and evaluates the J48 algorithm and the Multilayer Perceptron algorithm, which is based on neural networks.

3.2 Decision Tree:

From my past learning in Unified Modelling Language (UML), a decision tree can be defined as a visual representation of the data that makes it understandable to humans. A decision tree has a root, nodes and sub-nodes.

Various approaches are used to find patterns in large amounts of available data. The decision tree is one of the methods adopted in areas such as science and technology, knowledge discovery in databases and data mining. [1]

A decision tree makes predictions from the given input, which can be numeric or text. It handles the input easily and can generate a tree even when there are missing values, unclassified instances or wrongly classified instances. If the input data has a very large number of instances, the decision tree can be difficult to understand, because it is hard to see which node is connected to which decision node.

Fig: Decision Tree
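To make the structure of root, decision nodes and leaves concrete, here is a minimal sketch of how a decision tree classifies one instance. The tree is entirely hypothetical: the thresholds are invented for illustration and are not the tree that J48 produced for this dataset. Only the attribute names are taken from the dataset.

```python
# Hypothetical tree. A decision node tests one attribute against a threshold;
# a leaf holds the predicted class label.
TREE = {
    "attribute": "NumberOfNodes",
    "threshold": 4,
    "left": {"label": 1},            # followed when NumberOfNodes <= 4
    "right": {                       # followed when NumberOfNodes > 4
        "attribute": "PatientAge",
        "threshold": 50,
        "left": {"label": 1},
        "right": {"label": 2},
    },
}


def classify(node, instance):
    """Walk from the root to a leaf, following the branch chosen by each test."""
    while "label" not in node:
        go_left = instance[node["attribute"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["label"]


patient = {"PatientAge": 30, "operationYear": 64, "NumberOfNodes": 1}
print(classify(TREE, patient))
```

Each decision node asks one question about an attribute, so the path from the root to a leaf can be read as a rule, which is what makes small trees easy for humans to follow.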

3.3 Neural Networks:

In data mining tools, a neural network can be used to model patterns and relations between input and output data that are complex in nature. Using neural networks, information can be gained from large databases. Neural networks can be used for clustering, classification, pattern recognition, function approximation and prediction. A neural network is trained for one specific task.

A simple artificial neuron has inputs and an output based on the data, whereas the human brain contains more than 10 billion nerve cells, or neurons. Neural networks are used in face recognition, where the pixels of an image are encoded as binary inputs. When a neural network is recognising 10 faces, it first looks for 10 common features.

In data mining, the Multilayer Perceptron is a neural network algorithm that has input values, hidden values and output values. The model generated in WEKA shows the weights that are assigned to the inputs.

Fig: Neural Network for the chosen dataset.
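The way input values pass through hidden values to output values can be sketched as a forward pass through a small network. This is an illustration under stated assumptions, not the model that WEKA built: the layer sizes and all weights are hypothetical, sigmoid units are assumed, and the inputs are assumed to be already scaled to the range 0 to 1.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: each unit outputs sigmoid(weights . inputs + bias)."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Input values -> hidden values -> output values."""
    hidden = layer(inputs, hidden_w, hidden_b)
    return layer(hidden, output_w, output_b)


# Hypothetical weights: 3 inputs -> 2 hidden units -> 2 output units (one per class).
HIDDEN_W = [[0.5, -0.2, 0.8], [-0.3, 0.1, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-1.0, 1.0]]
OUTPUT_B = [0.0, 0.0]

instance = [0.2, 0.5, 0.1]  # scaled age, operation year and number of nodes (made up)
scores = forward(instance, HIDDEN_W, HIDDEN_B, OUTPUT_W, OUTPUT_B)
predicted_class = scores.index(max(scores)) + 1  # classes are labelled 1 and 2
print(scores, predicted_class)
```

Training a network means adjusting these weights until the outputs match the known classes; the sketch shows only how a trained network turns one instance into a prediction.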

3.4 Comparison of J48 (Decision Tree) and Neural Networks (Multilayer Perceptron):

A decision tree has leaf nodes and decision nodes, which are similar to use cases in UML where the actor has use cases represented in tree fashion. Leaf nodes give the classification of the instances, and decision nodes test an attribute to decide which branch an instance follows. In neural networks, neurons are used for classification.

J48 is the decision tree algorithm and Multilayer Perceptron is the neural network algorithm. Applying J48 to a dataset allows the decision tree to be visualised. Applying Multilayer Perceptron with the GUI (Graphical User Interface) property set to true displays the neural network for the chosen dataset.

Multilayer Perceptron has no binary split option, whereas in J48 the binary splits option can be set to 'true', which can increase its accuracy rate (for this dataset the accuracy was the same with either setting). The accuracy rate of Multilayer Perceptron is slightly higher than that of the J48 decision tree.

3.5 Results based on J48 and Multilayer Perceptron:

The analysis above shows clearly that Multilayer Perceptron has a higher accuracy rate than J48 for both the raw data (72.8758% against 71.8954%) and the discrete data (73.5294% against 72.2222%). Neural networks take more time to build than the J48 decision tree because of the network computation involved. The time taken to build the model is 0.47 seconds for Multilayer Perceptron, while for J48 WEKA reports 0 seconds. Multilayer Perceptron gives output that is more easily understood by users, whereas decision trees can sometimes be very large and complex.

3.6 Conclusion:

The dataset used in this coursework comes from the medical and health care area, where databases hold large amounts of information. Data mining in this area means applying different kinds of algorithms to derive patterns from the data. Applying algorithms such as J48 and Multilayer Perceptron gave consistent results across the raw and the discretized data. Although the WEKA results show some differences in accuracy between the algorithms applied, each algorithm plays a vital role in building decision trees or neural networks.

References:

1. Rokach, L. and O. Maimon (2008). Data mining with decision trees: theory and applications, World Scientific Pub Co Inc.

2. Li, Y. and E. Hung. "Building a Decision Cluster Forest Model to Classify High Dimensional Data with Multi-classes." Advances in Machine Learning: 263-277.

3. http://archive.ics.uci.edu/ml/datasets.html

4. Han, J. (1996). Data mining techniques, ACM.

5. Berkhin, P. (2006). "A survey of clustering data mining techniques." Grouping Multidimensional Data: 25-71.

6. Gardner, M. and S. Dorling (1998). "Artificial neural networks (the multilayer perceptron)—A review of applications in the atmospheric sciences." Atmospheric Environment 32(14-15): 2627-2636.

7. Suter, B. (1990). "The multilayer perceptron as an approximation to a Bayes optimal discriminant function." IEEE Transactions on Neural Networks 1(4): 291.

8. Mitchell, T. (1997). "Decision tree learning." Machine Learning 414.

9. Lewis, D. (1998). "Naive (Bayes) at forty: The independence assumption in information retrieval." Machine Learning: ECML-98: 4-15.

0853756 20 DATA MINING

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Security Issues in Clinical InformaticsDocument15 pagesSecurity Issues in Clinical InformaticsRuna ReddyNo ratings yet

- Estate Agent Internet SystemsDocument27 pagesEstate Agent Internet SystemsRuna ReddyNo ratings yet

- Middleware and Bugs in Windows: Current Case Studies Marepally Arunkumar Reddy 0853756Document22 pagesMiddleware and Bugs in Windows: Current Case Studies Marepally Arunkumar Reddy 0853756Runa ReddyNo ratings yet

- Comparison of Middleware Technologies in Windows XP and Windows VistaDocument15 pagesComparison of Middleware Technologies in Windows XP and Windows VistaRuna ReddyNo ratings yet

- Web Services Current Trends and Future OpportunitiesDocument28 pagesWeb Services Current Trends and Future OpportunitiesRuna Reddy100% (1)

- Data Mining With RattleDocument161 pagesData Mining With Rattleelz0rr0No ratings yet

- Data Mining Research Papers PDFDocument5 pagesData Mining Research Papers PDFnekynek1buw3100% (3)

- Lecture2 DataMiningFunctionalitiesDocument18 pagesLecture2 DataMiningFunctionalitiesinsaanNo ratings yet

- FRS MRS Report PDFDocument55 pagesFRS MRS Report PDFYshi Mae SantosNo ratings yet

- Data Mining and Warehousing - Christopher Estonilo DIT - LatestDocument6 pagesData Mining and Warehousing - Christopher Estonilo DIT - LatestMultiple Criteria DssNo ratings yet

- Intro To DM2Document92 pagesIntro To DM2Alfin Rahman HamadaNo ratings yet

- Neural - N - Problems - MLPDocument15 pagesNeural - N - Problems - MLPAbdou AbdelaliNo ratings yet

- Managing Cyber ThreatsDocument334 pagesManaging Cyber ThreatssvsivagamiNo ratings yet

- The Role of Data Mining Techniques in Relationship Marketing Neagoe CristinaDocument5 pagesThe Role of Data Mining Techniques in Relationship Marketing Neagoe CristinaMohd Shah RizalNo ratings yet

- 2 Buss Intel AnalyticsDocument43 pages2 Buss Intel AnalyticsNisa SoniaNo ratings yet

- Prediction of Heart Disease Using A Hybrid Technique in Data Mining ClassificationDocument3 pagesPrediction of Heart Disease Using A Hybrid Technique in Data Mining ClassificationGunjan PatelNo ratings yet

- MonashOnline Graduate Diploma in Data ScienceDocument4 pagesMonashOnline Graduate Diploma in Data Sciencebluebird1969No ratings yet

- D1T3 - Clarence Chio and Anto Joseph - Practical Machine Learning in InfosecurityDocument33 pagesD1T3 - Clarence Chio and Anto Joseph - Practical Machine Learning in InfosecuritypmjoshirNo ratings yet

- 2011 PG Elecive-Courses Ver 1-10Document31 pages2011 PG Elecive-Courses Ver 1-10aftabhyderNo ratings yet

- Materi 4 - Analisis Big DataDocument30 pagesMateri 4 - Analisis Big DataGerlan NakeciltauNo ratings yet

- Tut 4 - FromStats2DM - ClusteringDocument20 pagesTut 4 - FromStats2DM - ClusteringTareq NushratNo ratings yet

- Studio RM 2.0 Release NotesDocument39 pagesStudio RM 2.0 Release Notesthomas.edwardsNo ratings yet

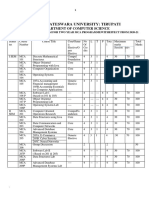

- Sri Venkateswara University: Tirupati: Department of Computer ScienceDocument24 pagesSri Venkateswara University: Tirupati: Department of Computer ScienceANKISETTI BHARATHKUMARNo ratings yet

- Topic 1 ISP565Document58 pagesTopic 1 ISP565Nurizzati Md NizamNo ratings yet

- Data Mining Applications and Feature Scope SurveyDocument5 pagesData Mining Applications and Feature Scope SurveyNIET Journal of Engineering & Technology(NIETJET)No ratings yet

- TextMining PDFDocument47 pagesTextMining PDFWilson VerardiNo ratings yet

- The Structure of ERPDocument27 pagesThe Structure of ERPSumit Gupta50% (2)

- Published IEEE FormatDocument5 pagesPublished IEEE FormatRashmika VarudharajuluNo ratings yet

- DMBI SimplifiedDocument28 pagesDMBI SimplifiedRecaroNo ratings yet

- PHI320 Ch01 PDFDocument12 pagesPHI320 Ch01 PDFViqar AhmedNo ratings yet

- Machine LearningDocument3 pagesMachine LearningMáňòj M ManuNo ratings yet

- Thesis Mining CareersDocument7 pagesThesis Mining CareersBuyingPaperErie100% (1)

- Data Mining Using Sas Enterprise Miner: Mahesh Bommireddy. Chaithanya KadiyalaDocument40 pagesData Mining Using Sas Enterprise Miner: Mahesh Bommireddy. Chaithanya KadiyalaEllisya AnandyaNo ratings yet

- Data Warehousing and Data Mining - HandbookDocument27 pagesData Warehousing and Data Mining - Handbookmannanabdulsattar0% (2)

- An Internet Based Student Admission Screening System Utilizing Data MiningDocument7 pagesAn Internet Based Student Admission Screening System Utilizing Data MiningcharlesNo ratings yet