
The Past, Present, and Future of Neural Networks for Signal Processing


Anniversary Guest Editor's Message

In this issue, the Signal Processing Society's Neural Networks for Signal Processing Technical Committee (NNSP-TC) provides the third article in our 50th Anniversary series. Compared to the [...] number of submissions to ICASSP in the neural-networks area, the well-attended annual Neural Networks for Signal Processing workshops, and a number of special journal issues organized by the Technical Committee. Furthermore, the Technical Committee is special in that its impact has gone beyond the SPS's boundary, because neural networks really form a core technology of many other societies of IEEE. The IEEE Neural Networks Council represents the technology's interests across a dozen IEEE societies, with strong participation from members of the Neural Networks for Signal Processing Technical Committee in Council conferences and publications.

In this article, members of the NNSP-TC review, with great insight, the fundamentals of neural networks and report recent progress. Topics covered include dynamic modeling, model-based neural networks, statistical learning, eigen-structure-based processing, active learning, and generalization capability. Current and po[...]cal applications. Essentially, neural networks have become a very effective tool in signal processing, particularly in various recognition tasks.

Many of you may recall that, during the infancy of neural-network technology, one thing that excited people's interest was its analogy to biological systems. Even though there is still a lot to be understood about the learning processes of human neural systems, artificial neural networks have, without a doubt, provided solutions to many problems in the signal-processing area. As you read this article, you will understand how that happened. Enjoy!

Tsuhan Chen
Guest Editor

28 IEEE SIGNAL PROCESSING MAGAZINE NOVEMBER 1997

Tülay Adalı, Univ. of Maryland, Baltimore County
Charles Bachmann, Naval Research Laboratory
Andrew Back, Laboratory for Artificial Brain Systems, [...]
Andrzej Cichocki, Laboratory for Artificial Brain Systems, [...]
Anthony G. Constantinides, Imperial College
Bert de Vries, David Sarnoff Research Center
Kevin R. Farrell, T-NETIX, Inc.
Horacio Franco, SRI International
Hsin Chia Fu, National Chiao-Tung University
Ling Guan, University of Sydney
Lars Kai Hansen, Technical University of Denmark
Shigeru Katagiri, ATR (TC Secretary)
Jan Larsen, Technical University of Denmark
Fa-Long Luo, Universitaet Erlangen-Nuernberg
Elias S. Manolakos, Northeastern University
Nelson H. Morgan, International Computer Science Institute
Mahesan Niranjan, Cambridge University
Ananth Sankar, SRI International
Volker Tresp, Siemens AG, Central Research
Marc M. Van Hulle, Laboratorium voor Neurofysiologie of Belgium
Elizabeth J. Wilson, Raytheon Company


[...] and shared weights. A neural-network classifier is also used to provide ro[...] hand-printed English text in new models of Apple Computer's Newton MessagePad [...].

- Automobile Control: E[...] firing controls are being developed by [3] using a recurrent neural network trained with multistreaming, which is an adaptation of Decoupled Extended Kalman Filter training. Ford researchers believe that recurrent networks are the most promising technology to help meet the new, stringent emission levels of the Clean Air Act.

- Self-Organizing Feature Map: The SOM converts complex, nonlinear statistical relationships between high-dimensional data items into simple geometric relationships on a low-dimensional display. It thereby compresses information while preserving the most important topological and metric relationships of the primary data elements. SOMs have been applied to hundreds of practical systems [4].

- Aircraft Control: Neural controllers for aircraft are being developed by several groups [5], both by extending optimal control ideas to nonlinear systems and by using ideas based on dynamic programming (adaptive critics). The LoFLYTE hypersonic waverider is a joint project of NASA and the US Air Force to design experimental aircraft reaching Mach 5 speeds. [...] critics) are being developed for [...] Accurate Automation Inc.

- Chaotic Dynamic Reconstruction: Chaotic dynamic reconstruction has been applied to model complex natural phenomena generated by deterministic nonlinear phenomena such as sea clutter [...]. The universal approximation properties of neural networks (either MLPs or radial-basis functions), coupled with regularization, have been shown to identify the nonlinear system that produced the time series [7]. The section titled Neural Networks for Dynamic Modeling, written by Jose C. Principe, offers a clear perspective on creating a nonlinear model of a time series using only its available samples.

- Cocktail-Party Problem: Separation of blindly mixed signals (such as voices in a [...] party effect), where neither knowledge [...] of the mixing process is available, [...] problem in inverse modeling and [...] equalization, teleconferencing, [...]ony). It has been shown that [...] the mixing-system parameters [...] beyond second-order statistics [...] shown to be naturally a[...] their nonlinear activation [...] example capabilities. Bell and Sejnowski [8], among many others, exemplified such a solution recently, and much of the work in the area is being propelled by the use of nonlinear systems.

- Natural Speech Synthesizer: A text-to-speech synthesizer including five cooperating neural networks, each specializing in a particular area of human natural language [...], represents a significant advance in naturalness and adaptability to different voice qualities over [...] and concatenative approaches [9].

- Neural Vision System: By integrating a model-based network as a low-level vision subsystem and a hierarchically structured neural array for higher-level analysis and recognition tasks, the neural vision system has been used for a number of complex real-world image-processing applications, such as computer-assisted diagnosis of breast cancers in digital mammograms; biomedical vision to [...] neurological disorders; and identifying and classifying underwater mines through 3-D sonar image processing and visualization. For more details, please refer to the section titled Model-Based Neural Networks for Image Processing, contributed by Ling Guan and Sun-Yuan Kung.

- 3-D Heart Contour Delineation: Neural networks have also been used to represent 3-D objects. For example, a neural-network architecture is used to represent 3-D endocardial (inner) and epicardial (outer) heart contours and to quantitatively estimate the motion of the left ventricle of human hearts from ultrasound images acquired using ultrasonic echocardiography. The measured absolute error compares favorably with the human interobserver vari[...] distances. With this unique [...]ble to systematically and effectively measure the amount of 3-D heart motion [10].

The great advantage of MLPs for classification is that they carve the input space through the use of saturating nonlinearities in the (neural) processing elements (PEs), using directly the information contained in the data and a well-established learning algorithm (back-propagation) [11]. Multilayer perceptrons are universal approximators [12], and an important theorem by Barron [13] shows that MLPs are very efficient for function approximation in high-dimensional spaces. Unlike polynomial approximators, where the rate of convergence of the error decreases with the dimension of the input space, the rate of convergence of MLPs is independent of the input-space dimensionality. This may explain the reason [...] better than other statistical pro[...] [...] complexity of O(N^2), where N is the number of processing elements (neurons). This saving is due to the clever use of the topology to help compute the sensitivities. The importance of the back-propagation [...]tive systems is comparable [...] transform (FFT) algorithm [...]sonably well the problems and applications of static nonlinear mappings (e.g., system identification and pattern [...]). Neural networks were very important in this arena since they have injected new blood in applied


statistics. The section written by Jenq-Neng Hwang, titled A Unified Perspective of Statistical Learning Networks, bridges the gap between model-free statistical regression/classification and nonparametric neural-network learning.

Besides least-squares methods for linear systems, eigen-decomposition (ED) and singular-value decomposition (SVD) methods have been the most powerful tools in most fields of signal processing. Despite many proposals of recursive and adaptive algorithms to perform ED and SVD, a good compromise among performance, implementation, and computational complexity has not yet been reached. As an alternative, neural-network approaches have recently attracted much attention, as reviewed in the section titled Neural Networks for Eigen-Structure Based Signal Processing, written by Fa-Long Luo and Rolf Unbehauen.

The other big challenge is how to extract more information from the data beyond second-order statistics by incorporating more domain knowledge, instead of relying on purely data-driven training. As described in the section titled From Pattern Classification to Active Learning, written by Yu-Hen Hu, one such technique is known as importance sampling. Based on one's knowledge of the underlying system, the importance-sampling method samples the data from a twisted distribution so that the variance of the parameter estimate can be made significantly smaller than that obtained using random sampling. The trick here is to find this twisted distribution. In the machine-learning literature, another variance-reduction technique, called active learning, has recently received much attention. In active learning, the learning algorithm dictates which point to sample. It does so by selecting the most informative sample, where the most knowledge can be gained.

Doing well on unseen data may at first seem unattainable, but the ability to generalize in very complex environments is nevertheless one of the most striking properties of neural systems, and indeed one of the reasons that neural networks have been shown useful in practical time-series applications. However, neither training with the mean-square error (MSE) criterion nor the MLP topology directly controls the generalization ability, so the designer has to deal with this issue explicitly. A rigorous study of the generalization capability of neural networks for time-series prediction is given in the section titled Generalization: The Hidden Agenda of Learning, written by Jan Larsen and Lars Hansen.

Future Challenges of NNSP

Time processing is probably the greatest challenge for neural networks, although others exist, too. There has not been a genuine attempt to solve time-varying problems with nonlinear structures. Time is considered an extra dimension for the representation of information instead of being utilized directly for information processing.

In most signal-processing problems, data arrive sequentially, and the underlying systems generating the data tend to be nonstationary. For these problems, one needs sequential (adaptive) training algorithms (such as the extended Kalman filter) as well as clever use of prior knowledge for model selection. The section titled Step Size Selection for On-Line Training of Adaptive Systems, written by Scott C. Douglas and Andrzej Cichocki, reviews learning techniques (especially the learning step-size parameters that control the degree of averaging) used in the sequential training of adaptive systems, which continually adapt their internal states as new signal measurements become available.

Multilayer perceptrons are purely static and are incapable of processing time information. One way to extend MLPs to time processing is to create a time window over the data that serves as a memory of the past, as first done in the time-delay neural network (TDNN) [14]. Alternatively, dynamic neural networks bring the memory inside the neural-network topology. There are basically two ways to create a dynamic neural network: either the PEs are extended with local memory structures, or the network topology becomes recurrent. The first type is simpler to study than the second with respect to stability. Moreover, the memory structures are generally linear, which means that the concepts of filtering and linear modeling can be brought to bear in their study [15]. The gamma model [16] is a good example of the use of linear filtering concepts to study dynamic networks, and it shows that dynamic networks are prewired neural networks for the processing of time information. Recently, Sandberg [17] proved that MLPs extended with short-term memories are universal approximators for a large class of functional mappings. Sontag showed that, unlike linear systems, a nonlinear system provides a unique model for system identification [18]. These works establish the foundation for using dynamic neural networks for nonlinear system identification.

Recurrent connections across the topology provide very powerful mappings but bring two problems: the networks are not guaranteed to be stable, and they cannot be trained with standard back-propagation. Clever but only partially recurrent topologies have been proposed to avoid these problems. A simple extension of the existing feed-forward structure to deal with temporal sequence data is the partially recurrent network, also called the simple recurrent network (SRN). An SRN has connections that are mainly feed-forward but include a carefully chosen set of feedback connections. In most cases, the feedback connections are fixed and not trainable. Because of its recurrence, an SRN remembers cues from the past, yet it does not appreciably complicate the training procedure. The most widely used SRNs are Elman's network [19] and Jordan's network [20]. In general, researchers have to face some approximation deficiencies when using SRNs. Narendra extended the ARMA models with nonlinearities [21], and recently the same group provided observability and controllability conditions for recurrent networks around equilibrium


points. Model stability has to be studied using Lyapunov stability theory, but progress in this area has been slow, with some results provided by Sontag [22].

In terms of learning algorithms, there are two basic approaches to training dynamic networks: real-time recurrent learning (RTRL) [23] and back-propagation through time (BPTT) [24]. They produce equivalent gradient updates (provided the initial conditions are identical), but they chain the computations very differently and therefore have different properties. RTRL is global in the topology and local in time, while BPTT is local in the topology and global in time (anticipatory). So if on-line learning is required, RTRL should be used (BPTT is computationally more efficient, but its storage requirements depend on the trajectory length). The recent development of decoupled extended Kalman filter (DEKF) [25] learning provides a powerful alternative to these two procedures.

A hidden Markov model (HMM) is a doubly stochastic process with an underlying stochastic process that is not observable (i.e., hidden) but can only be observed through another set of stochastic processes that produce the sequence of observed symbols [26]. The trellis-diagram realization of an HMM can be considered a BPTT network expanding in time, since its connections (transition probabilities) carry the information about the environment, and it consists of a multilayer network of simple units activated by the weighted sum of the unit activations at the previous iteration. In addition, the learning technique used in HMMs has a close algorithmic analogy with that used in BPTT networks [27].

Neural networks have not surpassed the power of HMMs for speech recognition, even though practitioners accept that HMMs do not map well to the speech-recognition problem. Ingenuity is needed to propose new ways of looking at time processing, and biology may again be a motivating factor, since humans excel at extracting information from time signals. The section titled Applications of Neural Networks to Speech Recognition, contributed by Nelson Morgan and Horacio Franco, specifically discusses the issues of integrating NN techniques into HMMs. In these speech-recognition systems, neural networks trained to classify the state types can be shown (under some simple assumptions) to estimate the posterior probability of the state types given the acoustic observations. These posterior probabilities can be converted to observation probabilities (using Bayes' rule) and used within the classical HMM framework. Networks used in this way have been shown to be comparable in performance to larger and more complex probabilistic estimators for large-vocabulary, continuous-speech recognition.

Neural networks have recently been shown to outperform linear models in the task of time-series prediction [28], which opens up many applications in engineering as well as in the financial and service industries. Dynamic neural networks have also recently been applied to the closely related problem of chaotic dynamic reconstruction, also known as dynamic modeling. Dynamic modeling attempts to identify the nonlinear system that generates the observed time series. This method is very important in modeling natural phenomena, developing synthetic models for [...]ta, and producing improved [...]

[...]rs a number of challenging [...]al networks for signal processing. Many technical challenges, such as principled methods of dealing with missing data, fusion of information from different sources, dealing with the large variability in the way experts treat information, and thorough validation of neural-network solutions, are likely to be addressed in the near future. Mahesan Niranjan provides the section titled Examples in Medical Applications, which describes two studies and discusses lessons to be learned from them.

[...]logies represent a new opportunity [...]ong a variety of media such as video, text, and graphics. The tech[...] change the way we access infor[...]s, communicate, educate, learn, and entertain [29, 30]. Future multimedia [...] will need to handle information with an increasing level of intelligence, i.e., automatic recognition and interpretation of multimodal signals. The key attributes of neural processing essential to intelligent multimedia processing were already discussed in a section in [30] earlier this year. In that article, it was argued that adap[...]ork technology offers a promising and [...] to a broad spectrum of multimedia ap[...]. The reason why neural networks should be [...] technology for intelligent multimedia [...]ve learning capability. [...] why neural networks [...]ral vital multimedia [...] [...]erest in neural net[...]

References

1. Y. LeCun et al., "Comparison of Learning Algorithms for Handwritten Digit Recognition," International Conference on Artificial Neural Networks, pp. 53-60, Paris, 1995.


2. L. Yaeger, R. Lyon, B. Webb, "Effective Training of a Neural Network Character Classifier for Word Recognition," Advances in Neural Information Processing Systems 9, pp. 807-813, M[...].
3. G. Puskorius, L. Feldkamp, "Neurocontrol [...] with Kalman Filter Trained Recurrent Networks," [...]works, 5(2):279-297, 1994.
4. T. Kohonen, E. Oja, O. Simula, A. Visa, [...] "[...]tions of the Self-Organizing Map," Proc. [...]1384, October 1996.
5. P. Werbos, "Approximate Dynamic Programming [...]," [...] Editors: [...]
8. T. Bell, T. Sejnowski, "[...] Source Deconvolution[...]," [...]
[...] "[...]sion with Neural Networks: A Recurrent [...]," Eurospeech 1997.
[...] on Pattern Recognition, Vol. 8, No. 1, pp. 141-[...]
11. D.E. Rumelhart, G.E. Hinton, and R.J. Williams, "[...]sentation by error propagation," in Parallel Distributed Processing [...]
12. G. Cybenko, "Approximation by Superpositions [...]," Mathematics of Control, Signals, and Systems, [...]
13. A. Barron, "Complexity Regularization [...] Neural Networks," in Nonparametric Functional [...] (Ed. Roussas), pp. 561-576, 1991.
14. [...] "[...] Recognition Using Time-Delay Neural [...]," [...] Speech, and Signal Processing, 37(3):[...]
15. J.C. Principe, B. de Vries, P. Oliveira, "Th[...] Adaptive IIR Filters with Restricted Feedback," [...] Proc., 41(2):649-656, Feb. 1993.
16. B. de Vries and J.C. Principe, "The Gamma Model: A [...]"
17. I.W. Sandberg and L. Xu, "Uniform Ap[...]works," Neural Networks, 10:781-784, [...]
18. E. Sontag, H. Siegelmann, "On the [...]works," J. Comp. Syst. Sci., 50:132-15[...]
19. [...] 179-211, 1990.
20. M.I. Jordan, R.A. Jacobs, "Learning to [...] Forward Modeling," Advances in NIPS [...]
21. K. Narendra and A. Annaswamy, Stable [...], 1989.
22. E. Sontag, "Recurrent Neural Networks: S[...]," in Dealing with Complexity: The Neural [...] (Eds. [...], Warwick, and Kurkova), Springer Verlag, [...] 280, 1994.
[...] "[...] do it," Proc. of IEEE, 78:1550-1560, 1990.
[...] "[...] Hidden Markov Models," [...]
[...] "[...] Systolic Neural Network Archi[...]," Trans. on ASSP, Vol. 37, No. [...]
[...] "[...] Future of Time Series," [...]
[...] March 1996.
[...] Magazine, 13(2):24-49, [...]
30. T. Chen, A. Katsaggelos, S.Y. Kung, Editors, "The Past, Present, and [...]," IEEE Signal Processing Magazine, [...]


You might also like

- Python Cheat Sheet PDFDocument26 pagesPython Cheat Sheet PDFharishrnjic100% (2)

- Languages, Compilers and Run-time Environments for Distributed Memory MachinesFrom EverandLanguages, Compilers and Run-time Environments for Distributed Memory MachinesNo ratings yet

- Fundamentals of Neural Networks PDFDocument476 pagesFundamentals of Neural Networks PDFShivaPrasad100% (1)

- LAC Reflection JournalDocument6 pagesLAC Reflection JournalEDITHA QUITO100% (1)

- FANUC Series 16 Trouble Diagnosis Specifications: i/18i/21i-MB/TBDocument17 pagesFANUC Series 16 Trouble Diagnosis Specifications: i/18i/21i-MB/TBmikeNo ratings yet

- Editorial Artificial Neural Networks To Systems, Man, and Cybernetics: Characteristics, Structures, and ApplicationsDocument7 pagesEditorial Artificial Neural Networks To Systems, Man, and Cybernetics: Characteristics, Structures, and Applicationsizzul_125z1419No ratings yet

- Rana 2018Document6 pagesRana 2018smritii bansalNo ratings yet

- Echo State NetworkDocument4 pagesEcho State Networknigel989No ratings yet

- Classification of Lung Sounds Using CNNDocument10 pagesClassification of Lung Sounds Using CNNHan ViceroyNo ratings yet

- 9,10 PerceptronDocument46 pages9,10 PerceptronRizky RitongaNo ratings yet

- A Review Paper On Artificial Neural Network in Cognitive ScienceDocument6 pagesA Review Paper On Artificial Neural Network in Cognitive ScienceInternational Journal of Engineering and TechniquesNo ratings yet

- Cortex 00939813 PDFDocument22 pagesCortex 00939813 PDFKurtNo ratings yet

- Recognition of Handwritten Digit Using Convolutional Neural Network in Python With Tensorflow and Comparison of Performance For Various Hidden LayersDocument6 pagesRecognition of Handwritten Digit Using Convolutional Neural Network in Python With Tensorflow and Comparison of Performance For Various Hidden Layersjaime yelsin rosales malpartidaNo ratings yet

- 2019 Spiking Neural P Systems With Learning FunctionsDocument17 pages2019 Spiking Neural P Systems With Learning FunctionsLuis GarcíaNo ratings yet

- Neural NetworksDocument36 pagesNeural NetworksSivaNo ratings yet

- 1 - IntroductionDocument34 pages1 - IntroductionRaj SahaNo ratings yet

- Recent Advances in Transistor-Based Arti Cial SynapsesDocument22 pagesRecent Advances in Transistor-Based Arti Cial SynapsesMinh Duc TranNo ratings yet

- Data-Mining and Knowledge Discovery, Neural Networks inDocument15 pagesData-Mining and Knowledge Discovery, Neural Networks inElena Galeote LopezNo ratings yet

- Paper 8594Document5 pagesPaper 8594IJARSCT JournalNo ratings yet

- Neural Networks Applications in TextileDocument34 pagesNeural Networks Applications in TextileSatyanarayana PulkamNo ratings yet

- Gesture Recognition System: Submitted in Partial Fulfillment of Requirement For The Award of The Degree ofDocument37 pagesGesture Recognition System: Submitted in Partial Fulfillment of Requirement For The Award of The Degree ofRockRaghaNo ratings yet

- Seminar Report ANNDocument21 pagesSeminar Report ANNkartik143100% (2)

- Artificial Neural Networks and Their Applications: June 2005Document6 pagesArtificial Neural Networks and Their Applications: June 2005sadhana mmNo ratings yet

- A Digital Liquid State Machine With Biologically Inspired Learning and Its Application To Speech RecognitionDocument15 pagesA Digital Liquid State Machine With Biologically Inspired Learning and Its Application To Speech RecognitionVangala PorusNo ratings yet

- Analyzing Biological and Artificial Neural Networks - 2019 - Current Opinion IDocument10 pagesAnalyzing Biological and Artificial Neural Networks - 2019 - Current Opinion IShayan ShafquatNo ratings yet

- Neuromorphic Computing - From Robust Hardware Architectures To Testing StrategiesDocument4 pagesNeuromorphic Computing - From Robust Hardware Architectures To Testing StrategieslidhisijuNo ratings yet

- Hand Written Character Recognition Using Back Propagation NetworkDocument13 pagesHand Written Character Recognition Using Back Propagation NetworkMegan TaylorNo ratings yet

- An Introduction To Convolutional Neural Networks: November 2015Document12 pagesAn Introduction To Convolutional Neural Networks: November 2015Alimatu AgongoNo ratings yet

- Transfer Functions in Artificial Neural NetworksDocument4 pagesTransfer Functions in Artificial Neural NetworksDana AbkhNo ratings yet

- Neuromimetic Ics With Analog Cores: An Alternative For Simulating Spiking Neural NetworksDocument5 pagesNeuromimetic Ics With Analog Cores: An Alternative For Simulating Spiking Neural NetworksManjusha SreedharanNo ratings yet

- Chartrand-Deep Learning - A Primer For Radiologists - 2017-Radiographics - A Review Publication of The Radiological Society of North America, IncDocument19 pagesChartrand-Deep Learning - A Primer For Radiologists - 2017-Radiographics - A Review Publication of The Radiological Society of North America, IncAsik AliNo ratings yet

- Ieee Research Paper On Neural NetworkDocument8 pagesIeee Research Paper On Neural Networkafeawqynw100% (1)

- Soft Computing: Project ReportDocument54 pagesSoft Computing: Project ReportShūbhåm ÑagayaÇhNo ratings yet

- Gaoningning 2010Document4 pagesGaoningning 2010smritii bansalNo ratings yet

- International Journal of Computational Engineering Research (IJCER)Document6 pagesInternational Journal of Computational Engineering Research (IJCER)International Journal of computational Engineering research (IJCER)No ratings yet

- Artificial Neural Networks Bidirectional Associative Memory: Computer Science and EngineeringDocument3 pagesArtificial Neural Networks Bidirectional Associative Memory: Computer Science and EngineeringSohail AnsariNo ratings yet

- 2017 Mirko Hansen - Double Barrier Memristive Devices For Unsupervised (Retrieved-2022!04!18)Document11 pages2017 Mirko Hansen - Double Barrier Memristive Devices For Unsupervised (Retrieved-2022!04!18)The great PNo ratings yet

- Artificial Neural Network: 1 BackgroundDocument13 pagesArtificial Neural Network: 1 Backgroundyua_ntNo ratings yet

- An Introduction To Convolutional Neural Networks: November 2015Document12 pagesAn Introduction To Convolutional Neural Networks: November 2015Tehreem AnsariNo ratings yet

- Deep Learning With Spiking NeuronsDocument18 pagesDeep Learning With Spiking NeuronsMohaddeseh MozaffariNo ratings yet

- Artificial Neural NetworkDocument14 pagesArtificial Neural NetworkAzureNo ratings yet

- The SpiNNaker ProjectDocument14 pagesThe SpiNNaker ProjectVeronica MisiedjanNo ratings yet

- Neural Network Approaches To Image Compression: Robert D. Dony, Student, IEEE Simon Haykin, Fellow, IEEEDocument16 pagesNeural Network Approaches To Image Compression: Robert D. Dony, Student, IEEE Simon Haykin, Fellow, IEEEdanakibreNo ratings yet

- Project Assignment TBWDocument6 pagesProject Assignment TBWMuzaffar IqbalNo ratings yet

- Design and Implementation of A Convolutional Neural Network On An Edge Computing Smartphone For Human Activity RecognitionDocument12 pagesDesign and Implementation of A Convolutional Neural Network On An Edge Computing Smartphone For Human Activity Recognitionsdjnsj jnjcdejnewNo ratings yet

- A Review of Recurrent Neural Network-Based Methods in Computational PhysiologyDocument21 pagesA Review of Recurrent Neural Network-Based Methods in Computational PhysiologyatewogboNo ratings yet

- Audio Signal Processing by Neural NetworksDocument34 pagesAudio Signal Processing by Neural NetworksLon donNo ratings yet

- Neural NetworksDocument104 pagesNeural NetworksSingam Giridhar Kumar ReddyNo ratings yet

- Artificial Neural Networks: Fundamentals, Computing, Design, and ApplicationDocument29 pagesArtificial Neural Networks: Fundamentals, Computing, Design, and ApplicationRusty AFNo ratings yet

- Final 1st LectureDocument18 pagesFinal 1st LectureshilpaNo ratings yet

- BackprpDocument6 pagesBackprpAnay ChowdharyNo ratings yet

- Deep Learning with Python: A Comprehensive Guide to Deep Learning with PythonFrom EverandDeep Learning with Python: A Comprehensive Guide to Deep Learning with PythonNo ratings yet

- Advanced Neural ComputersFrom EverandAdvanced Neural ComputersR. EckmillerNo ratings yet

- Hybrid Neural Networks: Fundamentals and Applications for Interacting Biological Neural Networks with Artificial Neuronal ModelsFrom EverandHybrid Neural Networks: Fundamentals and Applications for Interacting Biological Neural Networks with Artificial Neuronal ModelsNo ratings yet

- Evolutionary Algorithms and Neural Networks: Theory and ApplicationsFrom EverandEvolutionary Algorithms and Neural Networks: Theory and ApplicationsNo ratings yet

- Parallel Processing for Artificial Intelligence 1From EverandParallel Processing for Artificial Intelligence 1Rating: 5 out of 5 stars5/5 (1)

- Long Short Term Memory: Fundamentals and Applications for Sequence PredictionFrom EverandLong Short Term Memory: Fundamentals and Applications for Sequence PredictionNo ratings yet

- Neuroevolution: Fundamentals and Applications for Surpassing Human Intelligence with NeuroevolutionFrom EverandNeuroevolution: Fundamentals and Applications for Surpassing Human Intelligence with NeuroevolutionNo ratings yet

- Feedforward Neural Networks: Fundamentals and Applications for The Architecture of Thinking Machines and Neural WebsFrom EverandFeedforward Neural Networks: Fundamentals and Applications for The Architecture of Thinking Machines and Neural WebsNo ratings yet

- Structural Analysis Systems: Software — Hardware Capability — Compatibility — ApplicationsFrom EverandStructural Analysis Systems: Software — Hardware Capability — Compatibility — ApplicationsRating: 5 out of 5 stars5/5 (1)

- Energy Strategy Reviews: SciencedirectDocument9 pagesEnergy Strategy Reviews: SciencedirectpaulNo ratings yet

- Ghauri - The Book - Final Drafte PDFDocument475 pagesGhauri - The Book - Final Drafte PDFMusadiq IslamNo ratings yet

- Animals: Automatic Classification of Cat Vocalizations Emitted in Different ContextsDocument14 pagesAnimals: Automatic Classification of Cat Vocalizations Emitted in Different ContextsmuhammadrizNo ratings yet

- The Data Culture Playbook: A Guide To Building Business Resilience With DataDocument10 pagesThe Data Culture Playbook: A Guide To Building Business Resilience With DatamuhammadrizNo ratings yet

- 305 WRKDOC Sandee Panel Session ReportDocument11 pages305 WRKDOC Sandee Panel Session ReportmuhammadrizNo ratings yet

- Learning Polynomials With Neural NetworksDocument9 pagesLearning Polynomials With Neural NetworksjoscribdNo ratings yet

- P 33Document6 pagesP 33muhammadrizNo ratings yet

- Lab 4 DecisionsDocument6 pagesLab 4 DecisionsmuhammadrizNo ratings yet

- CS 100 - Computational Problem Solving: Fall 2017-2018 Quiz 1Document2 pagesCS 100 - Computational Problem Solving: Fall 2017-2018 Quiz 1muhammadrizNo ratings yet

- SWOT Analysis SampleDocument6 pagesSWOT Analysis Samplehasan_netNo ratings yet

- CH03 - Example CodesDocument7 pagesCH03 - Example CodesmuhammadrizNo ratings yet

- Computational Health Informatics in The Big Data Age - A SurveyDocument36 pagesComputational Health Informatics in The Big Data Age - A SurveymuhammadrizNo ratings yet

- P 33Document6 pagesP 33muhammadrizNo ratings yet

- Practical C++ Programming Teacher's GuideDocument79 pagesPractical C++ Programming Teacher's GuidesomeguyinozNo ratings yet

- Scha PireDocument182 pagesScha PiremuhammadrizNo ratings yet

- BS CS Fall 2017Document2 pagesBS CS Fall 2017muhammadrizNo ratings yet

- LUMS Prayer Times (1 Page)Document1 pageLUMS Prayer Times (1 Page)eternaltechNo ratings yet

- Sparse RepDocument67 pagesSparse RepmuhammadrizNo ratings yet

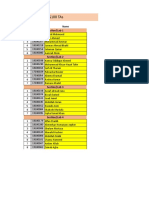

- CS100 TA's ListDocument1 pageCS100 TA's ListmuhammadrizNo ratings yet

- DeepLearning TensorflowTraining CurriculumDocument3 pagesDeepLearning TensorflowTraining CurriculummuhammadrizNo ratings yet

- PHD OpeningsDocument1 pagePHD OpeningsmuhammadrizNo ratings yet

- CS 100 Outline 02Document4 pagesCS 100 Outline 02muhammadrizNo ratings yet

- Advertisement July2017Document1 pageAdvertisement July2017muhammadrizNo ratings yet

- Suggested Welcome AnnouncementDocument3 pagesSuggested Welcome AnnouncementmuhammadrizNo ratings yet

- BSEE Fall-17 Finalterm 22th Batch (F2017019) Datesheet 03.01.18Document3 pagesBSEE Fall-17 Finalterm 22th Batch (F2017019) Datesheet 03.01.18muhammadrizNo ratings yet

- S. No GP Cat Course Code Course - Name CRH Pre Req Sec Batc H Lab/T HDocument9 pagesS. No GP Cat Course Code Course - Name CRH Pre Req Sec Batc H Lab/T HmuhammadrizNo ratings yet

- TH Course - Name CRH Sec Resource Person Monday Tuesday Wednesday Thurday Friday Saturday S. No Course CodeDocument1 pageTH Course - Name CRH Sec Resource Person Monday Tuesday Wednesday Thurday Friday Saturday S. No Course CodemuhammadrizNo ratings yet

- Tasks 9Document2 pagesTasks 9muhammadrizNo ratings yet

- Publication Detail Performa 2016-17Document1 pagePublication Detail Performa 2016-17muhammadrizNo ratings yet

- Feature WritingDocument27 pagesFeature WritingRhea Bercasio-GarciaNo ratings yet

- Opinion Paper AssignmentDocument2 pagesOpinion Paper Assignmentsiti nuraisyah aminiNo ratings yet

- Instar Vibration Testing of Small Satellites Part 3Document9 pagesInstar Vibration Testing of Small Satellites Part 3Youssef wagdyNo ratings yet

- TimelineDocument2 pagesTimelineapi-240600220No ratings yet

- KG 2 1Document10 pagesKG 2 1prashantkumar1lifeNo ratings yet

- Film Art Study Guide Key TermsDocument8 pagesFilm Art Study Guide Key TermsnormanchamorroNo ratings yet

- Factoring Using Common Monomial FactorDocument26 pagesFactoring Using Common Monomial FactorHappyNo ratings yet

- Response of Buried Pipes Taking Into Account Seismic and Soil Spatial VariabilitiesDocument8 pagesResponse of Buried Pipes Taking Into Account Seismic and Soil Spatial VariabilitiesBeh RangNo ratings yet

- Lec29 PDFDocument32 pagesLec29 PDFVignesh Raja PNo ratings yet

- Introduction: Digital Controller Design: SystemDocument13 pagesIntroduction: Digital Controller Design: Systembala_aeroNo ratings yet

- Technical Publication: Logiq V2/Logiq V1Document325 pagesTechnical Publication: Logiq V2/Logiq V1Владислав ВасильєвNo ratings yet

- Resonant Column Testing ChallengesDocument9 pagesResonant Column Testing ChallengesMarina Bellaver CorteNo ratings yet

- The Rectangular Coordinate SystemDocument57 pagesThe Rectangular Coordinate SystemSAS Math-RoboticsNo ratings yet

- Iec TR 62343-6-8-2011Document14 pagesIec TR 62343-6-8-2011Amer AmeryNo ratings yet

- ObjectScript ReferenceDocument4 pagesObjectScript ReferenceRodolfoNo ratings yet

- Bevington Buku Teks Pengolahan Data Experimen - Bab 3Document17 pagesBevington Buku Teks Pengolahan Data Experimen - Bab 3Erlanda SimamoraNo ratings yet

- Lesson 1 - Environmental PrinciplesDocument26 pagesLesson 1 - Environmental PrinciplesGeroline SaycoNo ratings yet

- Chandramohan (2016) Duration (Stanford) PDFDocument448 pagesChandramohan (2016) Duration (Stanford) PDFmgrubisicNo ratings yet

- Email EnglishDocument65 pagesEmail EnglishNghi NguyễnNo ratings yet

- Water Tank SizeDocument1 pageWater Tank SizeTerence LeongNo ratings yet

- Tps 22967Document29 pagesTps 22967Thanh LeeNo ratings yet

- Sample Case StudyDocument23 pagesSample Case StudyDELFIN, Kristalyn JaneNo ratings yet

- CrashUp Surface TensionDocument33 pagesCrashUp Surface TensionSameer ChakrawartiNo ratings yet

- The Complete Strategy LandscapeDocument1 pageThe Complete Strategy LandscapeHmoud AMNo ratings yet

- Lesson 1-Land and Resources of Africa Answer KeyDocument3 pagesLesson 1-Land and Resources of Africa Answer Keyheehee heeheeNo ratings yet

- Ce121 Lec5 BuoyancyDocument18 pagesCe121 Lec5 BuoyancyPetForest Ni JohannNo ratings yet

- Adorna Tm201 01 Worksheet 1 - ArgDocument2 pagesAdorna Tm201 01 Worksheet 1 - ArgJhay CeeNo ratings yet

- 2 Story Telling Templetes 2023Document1 page2 Story Telling Templetes 2023serkovanastya12No ratings yet