Fixing your supply chain with big data

Distilling millions of granules of information into operational decisions will separate tomorrow's winners and losers

By Alexander Klein

September 2017 | ISE Magazine 39


What is big data and why is it so important in supply chain management today? How do we get past the mere buzzword appeal in capturing an audience's attention with trendy aphorisms? The term "big data" is being applied to situations where analysts, managers and continuous improvement teams need to weed through massive amounts to support decision-making. But on the quality side of the fence, it is important to make good quality data available to decision-makers in a format that is ready and available for them to use. Wikipedia defines big data as "a term for data sets that are so large or complex that traditional data processing application software is inadequate to deal with them."

Both the quantity and quality aspects of big data are important, and real-world examples can show where it plays a key role in the efficient management of typical supply chains. With respect to quantity of data, our enterprises are now collecting more data than ever before. Why? Because we can. The evolution of data retrieval, storage and analysis allows us to quickly but maybe not very efficiently collect any amount of data with extraordinary amounts of granularity. The trick is knowing where this data is and how to get at the information when it is needed.

Data quality/quantity

The quality end of the spectrum offers a completely different view. Trust and diligence must be placed both in the originator of the data and the processing and storage of the information. Even in this advanced age of data manipulation, a lot of work must happen to ensure stable and accurate data. It's not uncommon to experience situations where you need to remove more than 70 percent of a massive data set due to missing or corrupt data elements within the population.

Is that data really useable? In some situations, you might have no other choice and will forge ahead with heavy disclaimers. In other cases, you would merely have to wait out another cycle of activity using the data set analysis to direct improvements in the collection, storage and manipulation process.

As an example of the due diligence required to harness big data into useful information, consider the example of a large international active wear retailer interested in converting reams of columnar data into a comprehensive monthly executive-level report. The raw data are provided by all of its vendors and supply chain managers. The scope included measuring the timeliness of the retailer's merchandise from the original purchase order to the store shelf.

It sounds simple enough, but consider that the project might involve many factories with several ocean carriers for millions of units shipping on thousands of containers through multiple destination ports and distribution centers with many domestic carriers. Each one of those stakeholders at each point in time must send information to a singular system or data repository for analysts to capture the performance. That repository is simultaneously capturing other data elements for performance reporting of their distinct components of the supply chain. Each one of those stakeholders most assuredly has a different level of technological ability when it comes to sharing information.

The result in some cases is the occurrence of bad or missing data that skews the results and leads to poor decision-making. Aligning the stakeholders with the expectations of the shipper and the capabilities of the data repository made the management of this big data reliable, predictable and useable.

Technology

After addressing the quality and quantity aspect of big data as it relates to the raw data, our next challenge is presenting this information so it can help make quick decisions with high levels of confidence.

Imagine having a year's worth of Target's, Walmart's, Costco's or any other big box retailer's point of sale (POS) data at the purchased item level and needing to make a forecasted buy decision or any other budgetary decision in a short period of time. Imagine the millions of data records detailing every aspect of every consumer purchase.

As recently as 10 years ago, even if that detail was available, statisticians would be called in to help define a reasonable and meaningful sample to analyze, followed by analysts using rulers and highlighters against greenbar paper. And in the end, all that effort and personnel would only come up with a guess. In the past, there was a higher probability that the immediate future would closely represent the immediate preceding months or years, so number crunchers could get away with rolling averages of high-level data, and the decisions made were typically close to meeting expectations or at least backed into through creative data manipulation.

That doesn't cut it any more. Because data are available and we are more comfortable with how to store and retrieve those numbers and because consumerism is more fickle and demanding than ever before, we want to use all of the data we can get our hands on and be confident in its quality.

Enter the operations research field, which appears to be laser-focused on querying and using data. Advanced tools and knowledge of database management can harness the power of analytics at the appropriate level. At the same time, enter the newly marketed presentation methods like dashboarding and live views of activities taking place in the supply chain, and we find ourselves meeting the definition of big data head on. Over the past several years, reporting capabilities in Excel have developed almost to the point of a maximum threshold that was screaming for more. Along came dashboard tools like Xcelsius and Tableau that understood the shortcomings of traditional workbook data reporting and offered not only an alternate but an unbeatable capability. No more static reports that would need to be generated daily, weekly, monthly, quarterly or annually; instead, now we have live views of the actual data in any format the user or decision-maker wanted.

While available technology has brought the idea of a dashboard to the forefront of corporate technology, the concept has been around for a long time. Dashboards have been used expertly by some of our example-setting corporate entities in the guise of tools like the A3. Isn't an A3 report, a format used heavily in the 1990s and 2000s, a kind of dashboard?

A3 reporting is a systematic approach to problem-solving developed by Toyota Motor Corp. Using a step-by-step process, this system examines how a problem came to be, identifies what its effects are and then proposes ways to address the problem. Ideally, that's what we want a dashboard to do, but its effectiveness is controlled by the ability to harness big data. We need to take advantage of what we know works and the tools and advances in technology that allow us to get to this point of decision-making excellence.

The most common use of big data in third-party logistics freight management is found in generating the data and reporting performance.

Typically, the two entities agree upon performance measurements and levels at the outset of their relationship. You could say that measurement and performance levels feed on each other, and, as the old saying goes, you cannot fix what you cannot measure. So the idea is to collect all of the data regarding everything: every movement, every time stamp, every dimension, every reason code, etc. The data needs to be collected in a systemic and systematic way to permit easy retrieval and manipulation. In short, a lot of thought needs to go into the upfront work.

Once that is completed, then you turn your focus to how you present the output. You need to understand how the customer wants to view the data to make decisions or improvements. Lessons learned from the old A3 format tell us to put as much information as possible on one page. This permits decision-makers to view trends that normally wouldn't be seen and pick out how certain performance indicators correlate to others.

More specifically, the decision-makers, supported by keen data managers and capable vendors, are gaining access to cycle time information, facility utilization, conveyance performance and most every type of documentation efforts that allow them to manage the bottom line. Any manager who can do this expertly and consistently will lead the way.

Sidebar: Don't go hungry

Big data analytics can be a useful tool in curbing food waste and meeting regulatory requirements, according to Quantzig, a global analytics and advisory company.

The United Nations Food and Agriculture Organization recently reported that approximately one-third of the food produced in the world gets lost or wasted, which affects not only world hunger but carbon emissions, water and land use. And European countries Italy and France have passed laws that require supermarkets and grocery stores to donate unsold food to food banks and charities.

But analyzing a business's waste stream by combining what goes out (waste) and what comes in (supply chain) can provide a clearer picture of sustainability and profitability. And big data can help manufacturers use sales information, weather forecasts and seasonal trends to determine optimum inventory, predict consumer demand and aggressively promote products that are close to their sell-by and expiration dates, the company reported.

Operationalize that data

As a comprehensive example of how to corral a big data effort, let's examine a customized program that stands in place today only due to the commitment of resources and planning efforts. The business needed to develop a key performance indicators (KPIs) program and operationalize it for monthly reporting and review. Bulk data are to be delivered to the core team by the fifth working day of each month. The core team would be held responsible for creating a consolidated KPI presentation for monthly management meetings.

Data analysts worked with the shipper to orient themselves with the specific processes in the supply chain, to define the KPIs and to identify the data sources that contained the necessary data elements that would be used to populate the KPIs. Data analysts then turned to information systems and key stakeholders to confirm that those data elements were available and could be extracted into a database management system that could generate reports, either with the appropriate programming or directly into specific reports.

Information systems determined that extracting data from multiple operating systems, including enterprise resource planning, warehouse management systems, land/logistics transportation systems, etc., would not provide the most efficient means for a repetitive and replicable process that could collect KPI information in the future. Instead, that department recommended pulling as much data as possible from the purchase order management system (more of a planning system) and then filling in the gaps afterward from operations. This would require writing queries to pull data from the various systems mentioned above and cross-referencing using common key data elements that occurred in each of the systems.

A test of the designed data extraction process was conducted using a 90-day shipment cycle population. This determined that in two of the inherent systems, all the data could be retrieved automatically. In addition, data required to complete the report that was not available in those systems could be collected in an ad hoc manner and connected by existing key fields data.

Next, a template for presenting the KPIs was created and gaps between the connected data and the reporting data were identified. The final step was creating a standard operating procedure document to complete the report generation work on an ongoing basis in as automated fashion as possible.

Here are some of the issues that the team encountered during this initial process:

- Freeform comment fields containing pertinent information on container load planning decisions required extensive data cleansing work. This information was critical in reporting the cycle time performance.
- Specific routings created double counting and missed events based on how the shipments were entered into the system manually and systemically.
- Some of the time stamps were available for certain routings, while others were not just by the nature of the processes involved.
- Several key time stamps were being overwritten by purchase order changes, and the automated data extraction was only picking up the latest change, losing sight of the original data, which was critical to capturing true time events.
- Some data required manual collection. This created a burden on resources and had the potential to make the data collection process lack standardization from month to month.
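Two of the issues above, double counting and time stamps overwritten by purchase order changes, lend themselves to a small illustration. The sketch below is only one possible approach and is not the team's actual extraction process. It assumes a hypothetical change log in which every update to an event is kept as its own row, and all field names (`po`, `event`, `event_date`, `logged_on`) and sample values are invented for the example. Exact duplicate rows are dropped, the first-logged value of each event is treated as the original, and purchase orders with a missing event are reported separately.

```python
from datetime import datetime

# Hypothetical change log: one row per update. Field names and values are
# illustrative assumptions, not taken from the article.
CHANGE_LOG = [
    {"po": "PO-1001", "event": "po_created", "event_date": "2017-03-01", "logged_on": "2017-03-01"},
    # A later purchase order change that would overwrite the original date:
    {"po": "PO-1001", "event": "po_created", "event_date": "2017-03-09", "logged_on": "2017-03-09"},
    {"po": "PO-1001", "event": "on_shelf", "event_date": "2017-04-20", "logged_on": "2017-04-20"},
    # The same event entered twice (double counting):
    {"po": "PO-1001", "event": "on_shelf", "event_date": "2017-04-20", "logged_on": "2017-04-20"},
    # A shipment whose shelf date was never captured:
    {"po": "PO-1002", "event": "po_created", "event_date": "2017-03-05", "logged_on": "2017-03-05"},
]


def parse_day(text):
    """Convert a YYYY-MM-DD string to a date."""
    return datetime.strptime(text, "%Y-%m-%d").date()


def drop_exact_duplicates(rows):
    """Return the distinct rows as sorted tuples (po, event, event_date, logged_on)."""
    return sorted({(r["po"], r["event"], r["event_date"], r["logged_on"]) for r in rows})


def original_events(rows):
    """Map (po, event) to the event date that was logged first."""
    first_seen = {}
    for po, event, event_date, logged_on in drop_exact_duplicates(rows):
        key = (po, event)
        logged = parse_day(logged_on)
        if key not in first_seen or logged < first_seen[key][0]:
            first_seen[key] = (logged, parse_day(event_date))
    return {key: value[1] for key, value in first_seen.items()}


def cycle_times(events, start="po_created", end="on_shelf"):
    """Return ({po: days from start to end}, [POs missing either event])."""
    days, incomplete = {}, []
    for po in sorted({po for po, _ in events}):
        began, finished = events.get((po, start)), events.get((po, end))
        if began is None or finished is None:
            incomplete.append(po)
        else:
            days[po] = (finished - began).days
    return days, incomplete


if __name__ == "__main__":
    days, incomplete = cycle_times(original_events(CHANGE_LOG))
    print(days)        # cycle time per complete purchase order
    print(incomplete)  # purchase orders that need manual follow-up
```

Listing the incomplete purchase orders rather than silently dropping them keeps the missing time stamps visible, so they can be chased through manual collection instead of quietly skewing the cycle time figure.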

For the first five years, this effort was actively managed. Then the process improvement team was able to transition the process to a dashboard, which permitted a more automated data extraction and cleansing process and nearly on-demand performance measurement availability, all based entirely on the original process.

Reading the granules for better decisions

Consider the hundreds of thousands and perhaps even millions of lines of related data that are generated. Some of this information is generated weekly, some monthly and some annually. Being able to turn the most granular data into meaningful and useful reports is powerful. Third-party logistics providers and other enterprises must focus on supporting their customers' needs for big data analysis with dashboards that feature any required or requested performance indicator, landed cost calculations, scorecards, inventory management, vendor performance, carbon dioxide emissions and even peer analysis, to name a few measurable activities.
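As a rough illustration of turning granular lines into a report-level indicator, the sketch below rolls shipment lines up into a monthly vendor scorecard. It is a minimal example under stated assumptions: the record layout, the vendor names and the 45-day target are all hypothetical, and a real dashboard tool would do this aggregation on its own data model. Lines with a missing cycle time are counted but excluded from the on-time figure, so the share of usable data is reported alongside the KPI.

```python
from collections import defaultdict

# Hypothetical shipment lines. Fields, values and the 45-day target are
# illustrative assumptions, not taken from the article.
SHIPMENT_LINES = [
    {"month": "2017-06", "vendor": "A", "cycle_days": 41},
    {"month": "2017-06", "vendor": "A", "cycle_days": 52},
    {"month": "2017-06", "vendor": "B", "cycle_days": 44},
    {"month": "2017-07", "vendor": "A", "cycle_days": None},  # missing time stamp
    {"month": "2017-07", "vendor": "B", "cycle_days": 39},
]


def monthly_scorecard(lines, target_days=45):
    """Summarize lines per (month, vendor): line count, usable share, on-time share."""
    buckets = defaultdict(lambda: {"lines": 0, "measured": 0, "on_time": 0})
    for line in lines:
        bucket = buckets[(line["month"], line["vendor"])]
        bucket["lines"] += 1
        if line["cycle_days"] is None:
            continue  # counted as a line, but not usable for the KPI
        bucket["measured"] += 1
        if line["cycle_days"] <= target_days:
            bucket["on_time"] += 1

    scorecard = {}
    for key, bucket in sorted(buckets.items()):
        measured = bucket["measured"]
        scorecard[key] = {
            "lines": bucket["lines"],
            "usable_pct": round(100 * measured / bucket["lines"], 1),
            # No on-time figure is reported when nothing could be measured.
            "on_time_pct": round(100 * bucket["on_time"] / measured, 1) if measured else None,
        }
    return scorecard


if __name__ == "__main__":
    for key, row in monthly_scorecard(SHIPMENT_LINES).items():
        print(key, row)
```

Reporting the usable share next to the on-time share is a design choice that echoes the quality discussion earlier in the article: a strong on-time percentage means little if most of the underlying lines had to be discarded.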

All this information exists, and we should understand how to use it, making it invaluable not only to us in shaping our future, but to shippers who can continue to focus on shaping their own future with high-quality big data management.

Alexander Klein is the senior manager in the supply chain solutions and engineering group of APL Logistics, a global provider of logistics services. His efforts include working with sales and business development to create supply chain solutions. He also works with the client base, analyzing supply chain processes and making recommendations for improvement. His bachelor's degree in logistics, operations and materials management is from George Washington University. His MBA in general and strategic management is from Temple University. Klein was certified by the International Import-Export Institute as an international trade logistics specialist in 2001. He previously worked in warehousing and transportation management for a small privately owned public warehousing company in New York and Chicago as well as in corporate strategic management for Conrail, a U.S. domestic freight railroad.


Copyright of Industrial Engineer: IE is the property of Institute of Industrial Engineers and its content may not be copied or emailed to multiple sites or posted to a listserv without the copyright holder's express written permission. However, users may print, download, or email articles for individual use.

You might also like

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Anushree Vinu: Education Technical SkillsDocument1 pageAnushree Vinu: Education Technical SkillsAnushree VinuNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- IMP Q For End Sem ExaminationDocument2 pagesIMP Q For End Sem Examinationzk8745817No ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5795)

- Humidity Ebook 2017 B211616ENDocument17 pagesHumidity Ebook 2017 B211616ENgustavohdez2No ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Default Router PasswordsDocument26 pagesDefault Router Passwordszamans98No ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Last Bill This Bill Total Amount DueDocument7 pagesLast Bill This Bill Total Amount DueDjibzlae100% (1)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Communication Electronic 2 Edition - Frenzel ©2008 Created by Kai Raimi - BHCDocument24 pagesCommunication Electronic 2 Edition - Frenzel ©2008 Created by Kai Raimi - BHCGmae FampulmeNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- CV Alexandru PaturanDocument1 pageCV Alexandru PaturanAlexreferatNo ratings yet

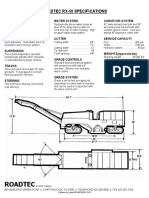

- RX 50 SpecDocument1 pageRX 50 SpecFelipe HernándezNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Summer Training: Submitted ByDocument29 pagesSummer Training: Submitted ByBadd ManNo ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Group Chat Project ReportDocument18 pagesGroup Chat Project ReportSoham JainNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Select Business and Technology CollegeDocument1 pageSelect Business and Technology CollegePink WorkuNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- APL V80 SP2-Readme enDocument16 pagesAPL V80 SP2-Readme enGrant DouglasNo ratings yet

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Brochure South DL202Document2 pagesBrochure South DL202jimmyNo ratings yet

- Plenty of Room - Nnano.2009.356Document1 pagePlenty of Room - Nnano.2009.356Mario PgNo ratings yet

- Integrated Language Environment: AS/400 E-Series I-SeriesDocument15 pagesIntegrated Language Environment: AS/400 E-Series I-SeriesrajuNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- NTT Data Ai Governance v04Document23 pagesNTT Data Ai Governance v04radhikaseelam1No ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Outokumpu Stainless Steel For Automotive IndustryDocument20 pagesOutokumpu Stainless Steel For Automotive IndustrychristopherNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Multi Billion Dollar Industry You Can Make Money From EasilyDocument34 pagesThe Multi Billion Dollar Industry You Can Make Money From EasilyJoshuaNo ratings yet

- PHP Unit-4Document11 pagesPHP Unit-4Nidhi BhatiNo ratings yet

- OSI ModelDocument18 pagesOSI ModelruletriplexNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Wheel Type Incremental Rotary Encoders: ENC SeriesDocument2 pagesWheel Type Incremental Rotary Encoders: ENC SeriesRoberto RizaldyNo ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- Nokia.Document1 pageNokia.kshiti ahireNo ratings yet

- PVV Solar Cell US0123 PDFDocument2 pagesPVV Solar Cell US0123 PDFNguyễn Anh DanhNo ratings yet

- ODLI20161209 004 UPD en MY Inside Innovation Backgrounder HueDocument4 pagesODLI20161209 004 UPD en MY Inside Innovation Backgrounder HueRizki Fadly MatondangNo ratings yet

- Installation Instructions MODELS TS-2T, TS-3T, TS-4T, TS-5T: Rear View of PlateDocument2 pagesInstallation Instructions MODELS TS-2T, TS-3T, TS-4T, TS-5T: Rear View of PlateXavier TamashiiNo ratings yet

- Create Report Net Content StoreDocument26 pagesCreate Report Net Content StoreJi RedNo ratings yet

- Prediction of Overshoot and Crosstalk of Low Voltage GaN HEMTDocument27 pagesPrediction of Overshoot and Crosstalk of Low Voltage GaN HEMTfirststudentNo ratings yet

- Mg375 ExamDocument12 pagesMg375 ExamdasheNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- WQR XQ Jhs Mu NW 4 Opj AJ6 N 1643617492Document16 pagesWQR XQ Jhs Mu NW 4 Opj AJ6 N 1643617492Heamnath HeamnathNo ratings yet

- U-BOOTS (1) Technical SeminarDocument18 pagesU-BOOTS (1) Technical SeminarBasavaraj M PatilNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)