2011 Data Compression Conference: 1068-0314/11 $26.00 © 2011 IEEE DOI 10.1109/DCC.2011.95 479

2011 Data Compression Conference

PARALLEL PROCESSING OF DCT ON GPU

Serpil Tokdemir and S. Belkasim

Department of Computer Science, Georgia State University, Atlanta, GA 30303, USA

The Graphics Processing Unit (GPU) is becoming an increasingly important alternative for many applications that require real-time processing. More interestingly, digital image processing applications such as image compression are closer than ever to being processed in real time. In this paper we explore the implementation of the discrete cosine transform (DCT) on GPUs. Our study indicates a clear superiority of the GPU over the CPU as a parallel processor for DCT-based image compression. It also indicates that increasing the image size considerably slowed the CPU but did not noticeably affect the GPU.

Digital multimedia image compression techniques require real-time streaming. To achieve this, parallel processing of both the compression and decompression stages is an absolute necessity. Many image compression techniques contain sections that apply the same computation to many pixels, which makes image compression a prime target for acceleration on the GPU [1]. GPU acceleration of DCT computation is made possible by the emergence of GPU programming languages such as Cg and CUDA (Compute Unified Device Architecture), which use a C-like syntax [2].
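To make the "same computation over many pixels" point concrete, here is a minimal CPU-side sketch in Python rather than Cg or CUDA. It is not the authors' implementation. The 8 x 8 block size (as in JPEG), the orthonormal DCT-II scaling, and the names `dct_block`, `split_blocks` and `dct_image` are assumptions made for illustration. The thread pool only mimics the dispatch pattern of handing each block to an independent worker; in CPython it does not speed up this CPU-bound loop.

```python
import math
from concurrent.futures import ThreadPoolExecutor

N = 8  # assumed block size (as in JPEG); the paper does not state one

# Orthonormal DCT-II basis: C[u][x] = a(u) * cos((2x + 1) * u * pi / (2N))
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]


def dct_block(block):
    """2-D DCT-II of one N x N block, computed as C * block * C^T."""
    # First pass: tmp = C * block
    tmp = [[sum(C[u][x] * block[x][y] for x in range(N)) for y in range(N)]
           for u in range(N)]
    # Second pass: out = tmp * C^T
    return [[sum(tmp[u][y] * C[v][y] for y in range(N)) for v in range(N)]
            for u in range(N)]


def split_blocks(image):
    """Cut an image (list of rows, sides divisible by N) into N x N blocks."""
    h, w = len(image), len(image[0])
    return [[row[c:c + N] for row in image[r:r + N]]
            for r in range(0, h, N) for c in range(0, w, N)]


def dct_image(image, workers=4):
    """Apply the same transform to every block; no block depends on another."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(dct_block, split_blocks(image)))


if __name__ == "__main__":
    flat = [[128] * 16 for _ in range(16)]  # constant 16 x 16 test image
    coeffs = dct_image(flat)
    # A constant block has all of its energy in the DC coefficient.
    print(len(coeffs), round(coeffs[0][0][0], 3))
```

Because every block is transformed with the same basis matrix and reads no data from its neighbours, the blocks can be assigned to GPU threads or thread groups in any order.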

DCT compression and decompression were implemented on both the GPU and the CPU for images ranging in size from 90 Kb to 300 Kb.
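The compression and decompression stages can be sketched for a single block as follows. This is an illustrative Python outline under stated assumptions, not the paper's code: the 8 x 8 block size, the rule of keeping only the top-left `keep` x `keep` low-frequency coefficients, and the helper names `dct2`, `idct2`, `compress` and `decompress` are all hypothetical choices. The paper does not describe its quantization step.

```python
import math

N = 8  # assumed block size; not stated in the paper

# Orthonormal DCT-II basis matrix and its transpose
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]
CT = [list(col) for col in zip(*C)]


def matmul(a, b):
    """Plain N x N matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(N)) for j in range(N)]
            for i in range(N)]


def dct2(block):
    """Forward 2-D DCT: C * block * C^T."""
    return matmul(matmul(C, block), CT)


def idct2(coeffs):
    """Inverse 2-D DCT: C^T * coeffs * C (C is orthonormal)."""
    return matmul(matmul(CT, coeffs), C)


def compress(block, keep=4):
    """Transform, then zero every coefficient outside the low-frequency corner."""
    coeffs = dct2(block)
    return [[coeffs[u][v] if u < keep and v < keep else 0.0
             for v in range(N)] for u in range(N)]


def decompress(coeffs):
    """Inverse transform and round back to integer pixel values."""
    return [[round(p) for p in row] for row in idct2(coeffs)]


if __name__ == "__main__":
    # Smooth synthetic block: a gentle gradient in both directions
    block = [[100 + 2 * x + 3 * y for y in range(N)] for x in range(N)]
    restored = decompress(compress(block, keep=4))
    err = max(abs(a - b) for ra, rb in zip(block, restored)
              for a, b in zip(ra, rb))
    print("max pixel error with 16 of 64 coefficients kept:", err)
```

With `keep=N` nothing is discarded and the round trip returns the original block; smaller values of `keep` trade reconstruction accuracy for fewer stored coefficients.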

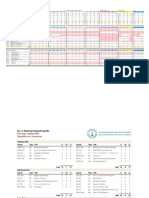

Figure 1. Original image.

Figure 2. IDCT.

Figure 3. Processing time versus image size. [Chart "DCT Performance": GPU Time and CPU Time plotted against image size; y-axis Time (sn), 0 to 0.09; x-axis Size (Kb).]

A sample image and its inverse DCT version are shown in Figure 1 and Figure 2. As the chart in Figure 3 shows, the CPU time increases as the image size increases. The increase in image size, on the other hand, results in a negligible increase in GPU processing time. We noticed that uploading the image from the CPU to the GPU takes more time than the entire GPU computation. In light of these results, we conclude that implementing DCT image compression on the GPU is far superior to computing it solely on the CPU.
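The CPU-side trend can be explored with a small timing harness such as the sketch below. It is a hypothetical Python outline, not the benchmark used in the paper: the synthetic test images, the 8 x 8 block size, and the function `time_cpu_dct` are assumptions, and the GPU side (including the upload cost noted above) is not measured. The number of blocks grows with the image area, so a sequential CPU loop should take roughly proportionally longer.

```python
import math
import time

N = 8  # assumed block size; not stated in the paper

# Orthonormal DCT-II basis matrix
C = [[(math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N))
      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
      for x in range(N)] for u in range(N)]


def dct_block(block):
    """2-D DCT-II of one N x N block, computed as C * block * C^T."""
    tmp = [[sum(C[u][x] * block[x][y] for x in range(N)) for y in range(N)]
           for u in range(N)]
    return [[sum(tmp[u][y] * C[v][y] for y in range(N)) for v in range(N)]
            for u in range(N)]


def dct_image(image):
    """Sequential CPU baseline: transform the blocks one after another."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(0, h, N):
        for c in range(0, w, N):
            block = [row[c:c + N] for row in image[r:r + N]]
            out.append(dct_block(block))
    return out


def time_cpu_dct(side):
    """Return (block count, elapsed seconds) for a synthetic side x side image."""
    image = [[(x * y) % 256 for x in range(side)] for y in range(side)]
    start = time.perf_counter()
    blocks = dct_image(image)
    return len(blocks), time.perf_counter() - start


if __name__ == "__main__":
    for side in (32, 64, 128):
        n_blocks, seconds = time_cpu_dct(side)
        print(f"{side}x{side}: {n_blocks} blocks, {seconds:.4f} s")
```

The absolute timings depend on the machine and on the interpreter, so only the growth with image size is meaningful here.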

Our experimental results clearly indicate that the GPU is a much more efficient parallel processor for DCT image compression than the CPU. They also indicate that increasing the image size slows the CPU while leaving the GPU essentially unaffected. Although we gained considerable time by processing the DCT blocks in parallel on the GPU, we encountered some limitations in Cg, because it was not designed for general-purpose programming on the GPU.

References

[1] J. D. Owens, M. Houston, D. Luebke, S. Green, J. E. Stone, and J. C. Phillips, "GPU Computing," Proceedings of the IEEE, vol. 96, no. 5, pp. 879-899, May 2008.

[2] Vaclav Simek and Ram Rakesh ASN, "GPU Acceleration of 2D-DWT Image Compression in MATLAB with CUDA," Proceedings of the 2nd UKSim European Symposium on Computer Modelling and Simulation, Liverpool, GB, IEEE CS, pp. 274-277, 2008.

1068-0314/11 $26.00 2011 IEEE 479

DOI 10.1109/DCC.2011.95
