International Journal of Computer Science Engineering Techniques, Volume 2 Issue 2, Jan - Feb 2017

RESEARCH ARTICLE - OPEN ACCESS

An Improved Color Image Compression Approach based on Run Length Encoding

Uqba bn Naffa

Computer Science Department, University of Mustansiriyah, Baghdad, Iraq

Abstract:

Image compression is an essential requirement of modern digital image processing, since it allows large digital images to be stored in a smaller form. For this reason, we need image compression algorithms that achieve the best possible compression without losing the visual quality of the image. This paper presents an improved approach for compressing color images. The proposed approach divides the color image into its RGB bands, and each band is divided into non-overlapping blocks according to specific criteria. A set of operations is then applied to each block. A particular implementation of this approach was tested, and its performance was quantified using the peak signal-to-noise ratio and the similarity index. Numerical results indicated general improvements in visual quality for color image coding.

Keywords — Image compression, Color image, DCT transforms, Image coding.

I. INTRODUCTION

Compression is any method that reduces an original amount of data to a smaller quantity. Existing algorithms can be categorized as lossless or lossy techniques. In the first case, the quality is totally preserved and the aim is to reduce storage or transmission cost. Lossy algorithms, in contrast, seek a balance in the well-known rate-distortion tradeoff. This means that the quality of the decompressed data must stay within the tolerable bounds defined by each specific application, at the maximum compression ratio reachable [1].

In recent years there has been an astronomical increase in the usage of computers for a variety of tasks. One of the most common uses has been the storage, manipulation, and transfer of digital images. The files that comprise these images, however, can be quite large and can quickly take up precious memory space on the computer’s hard drive. In multimedia applications, most of the images are in color. Color images contain a lot of data redundancy and require a large amount of storage space. Image compression refers to the reduction of the size of the data that images contain. Generally, the image compression schemes in [2, 3] exploit certain data redundancies to convert the image to a smaller form.

For compression, a luminance-chrominance representation is considered superior to the RGB representation. Therefore, RGB images are transformed to one of the luminance-chrominance models, the compression process is performed, and the result is then transformed back to the RGB model, because displays most often require the output image directly in the RGB model. The luminance component represents the intensity of the image and looks like a gray scale version. The chrominance components represent the color information in the image [4].

Douak et al. [5] have proposed a new algorithm for color image compression. Mohamed et al. [6] proposed a hybrid image compression method in which the background of the image is compressed using lossy compression and the rest of the image is compressed using lossless compression. In this hybrid compression of color images with a larger trivial background by histogram segmentation, the input color image is subjected to binary segmentation using its histogram to detect the background. The color image is compressed by a standard lossy compression method. The difference between the lossy image and the original image is computed and is called the residue. The residue in the background area is dropped and the rest of the area is compressed by a standard lossless compression method. This method gives a lower bit rate than lossless compression methods and is well suited to any color image with a larger trivial background.

Fig. 1 DCT-Based Encoder Processing Steps
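The RGB to luminance-chrominance transformation mentioned in the Introduction can be illustrated with a minimal sketch. The paper does not say which luminance-chrominance model is meant, so the code below assumes the full-range YCbCr model with ITU-R BT.601 weights (as used by JFIF). The function names `rgb_to_ycbcr` and `ycbcr_to_rgb` are hypothetical and values are kept as floats without rounding or clamping.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (assumed BT.601 weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b                # luminance
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b     # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b     # red-difference chroma
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, back to the RGB model used by displays."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b


# A neutral gray pixel carries no color information: both chroma values are 128.
print(rgb_to_ycbcr(128, 128, 128))
```

Because the luminance channel alone resembles a gray scale image, a coder can treat it separately from the two chrominance channels and convert back to RGB only for display.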

II. ARCHITECTURE FOR THE COMPRESSION TECHNIQUE STANDARD

The compression technique contains four “modes of operation”. For each mode, one or more distinct codecs are specified. Codecs within a mode differ according to the precision of source image samples they can handle or the entropy coding method they use. Although the word codec (encoder/decoder) is used frequently in this paper, there is no requirement that implementations must include both an encoder and a decoder. Many applications will have systems or devices which require only one or the other [7].

The four modes of operation and their various codecs have resulted from JPEG’s goal of being generic and from the diversity of image formats across applications. The multiple pieces can give the impression of undesirable complexity, but they should actually be regarded as a comprehensive “toolkit” which can span a wide range of continuous-tone image applications. It is unlikely that many implementations will utilize every tool; indeed, most of the early implementations now on the market (even before final ISO approval) have implemented only the Baseline sequential codec.

Figures (1) and (2) show the key processing steps which are the heart of the DCT-based modes of operation. These figures illustrate the special case of single-component (gray scale) image compression [8].

Fig. 2 DCT-Based Decoder Processing Steps

A. The 8x8 FDCT and IDCT

At the input to the encoder, source image samples are grouped into 8x8 blocks, and each block, processed from left to right and top to bottom, is input to the Forward DCT (FDCT). At the output from the decoder, the Inverse DCT (IDCT) outputs 8x8 sample blocks to form the reconstructed image. The following equations are the idealized mathematical definitions of the 8x8 FDCT and 8x8 IDCT [8]:

$$F(u,v) = \frac{1}{4} C(u) C(v) \sum_{x=0}^{7} \sum_{y=0}^{7} f(x,y) \cos\frac{(2x+1)u\pi}{16} \cos\frac{(2y+1)v\pi}{16}$$

$$f(x,y) = \frac{1}{4} \sum_{u=0}^{7} \sum_{v=0}^{7} C(u) C(v) F(u,v) \cos\frac{(2x+1)u\pi}{16} \cos\frac{(2y+1)v\pi}{16}$$

where $C(u), C(v) = 1/\sqrt{2}$ for $u, v = 0$ and $C(u), C(v) = 1$ otherwise. The JPEG compression algorithm works in three steps: the image is first transformed, then the coefficients are quantized, and finally they are encoded with a variable-length lossless code.
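As an illustration of subsection A, the following is a minimal sketch that evaluates the standard JPEG definition of the 8x8 FDCT and IDCT directly. It is a slow reference form for checking values, not the fast transform a real codec would use, and it omits the level shift that JPEG applies to samples before the FDCT. The function names `fdct_8x8` and `idct_8x8` are hypothetical.

```python
import math

N = 8  # JPEG block size


def c(k):
    """Normalization factor C(k): 1/sqrt(2) for k = 0, otherwise 1."""
    return 1 / math.sqrt(2) if k == 0 else 1.0


def cos_term(i, k):
    """Cosine basis value cos((2i + 1) * k * pi / 16)."""
    return math.cos((2 * i + 1) * k * math.pi / 16)


def fdct_8x8(block):
    """Forward DCT of one block given as 8 rows of 8 samples f(x, y)."""
    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = sum(block[x][y] * cos_term(x, u) * cos_term(y, v)
                        for x in range(N) for y in range(N))
            coeffs[u][v] = 0.25 * c(u) * c(v) * total
    return coeffs


def idct_8x8(coeffs):
    """Inverse DCT: rebuild the 8x8 samples f(x, y) from coefficients F(u, v)."""
    block = [[0.0] * N for _ in range(N)]
    for x in range(N):
        for y in range(N):
            total = sum(c(u) * c(v) * coeffs[u][v] * cos_term(x, u) * cos_term(y, v)
                        for u in range(N) for v in range(N))
            block[x][y] = 0.25 * total
    return block


# A flat block has all of its energy in the DC coefficient F(0, 0).
flat = [[100] * N for _ in range(N)]
print(round(fdct_8x8(flat)[0][0], 6))  # 800.0
```

The flat-block case shows why smooth image regions compress well: after the transform, 63 of the 64 coefficients are zero up to floating-point error.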

B. Quantization

After output from the FDCT, each of the 64 DCT coefficients is uniformly quantized in conjunction with a 64-element Quantization Table, which must be specified by the application (or user) as an input to the encoder. Each element can be any integer value from 1 to 255, which specifies the step size of the quantizer for its corresponding DCT coefficient. The purpose of quantization is to achieve further compression by representing DCT coefficients with no greater precision than is necessary to achieve the desired image quality. Stated another way, the goal of this processing step is to discard information which is not visually significant. Quantization is a many-to-one mapping, and therefore is fundamentally lossy. It is the principal source of lossiness in DCT-based encoders.

Quantization is defined as division of each DCT coefficient by its corresponding quantizer step size, followed by rounding to the nearest integer [8]:

$$F^{Q}(u,v) = \mathrm{round}\left(\frac{F(u,v)}{Q(u,v)}\right)$$

where $F(u,v)$ is a DCT coefficient and $Q(u,v)$ is its quantizer step size. This output value is normalized by the quantizer step size. Dequantization is the inverse function, which in this case means simply that the normalization is removed by multiplying by the step size, which returns the result to a representation appropriate for input to the IDCT:

$$F^{Q'}(u,v) = F^{Q}(u,v) \cdot Q(u,v)$$

The quantized values are then reordered according to a zigzag pattern shown in Figures (3) and (4).

Fig. 3 The Quantization Table.

To take advantage of the slowly varying nature of most natural images, the DC coefficient is predicted from the DC coefficient of the previous block and differentially encoded with a variable-length code. The rest of the coefficients (referred to as AC coefficients) are run-length coded. JPEG produces relatively good performance at medium to high coding rates, but suffers from blocking artifacts at lower rates because of the block-based encoding. At low rates, in order to meet the target coding rate, the quantization table chosen is so coarse that only the DC coefficient is encoded, without the higher frequency details. At the decoder this creates visually noticeable discontinuities at the block boundaries [7].

Fig. 4 Zigzag ordering of coefficients of the 8 x 8 blocks in the JPEG algorithm.

C. Entropy Coding

The final DCT-based encoder processing step is entropy coding. This step achieves additional compression losslessly by encoding the quantized DCT coefficients more compactly based on their statistical characteristics. The JPEG proposal specifies two entropy coding methods, Huffman coding [7] and arithmetic coding; this paper is concerned with run-length coding of the quantized coefficients [5].

D. Run-length encoding

Run-length encoding (RLE) is a data compression algorithm that encodes long runs of repeating items by sending only one item from the run and a counter showing how many times this item is repeated. Unfortunately, this technique is of little use for compressing natural language texts, because they do not have long runs of repeating elements. On the other hand, RLE is useful for image compression, because images tend to have long runs of pixels with identical color. Consecutive pixels can be compressed by replacing each run with one pixel from it and a counter showing how many items it contains [9].
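The zigzag reordering and the run-length step described in this section can be sketched together as follows. This is an illustrative example under stated assumptions, not the author's coder: it uses a generic (value, count) form of RLE rather than the (run, size) symbols of baseline JPEG, and the names `zigzag_order`, `rle_encode` and `rle_decode` are hypothetical.

```python
from itertools import groupby


def zigzag_order(n=8):
    """Return the (row, col) positions of an n x n block in zigzag order."""
    # Cells on one anti-diagonal share row + col. Odd diagonals are walked
    # with the row increasing, even diagonals with the column increasing.
    return sorted(
        ((r, c) for r in range(n) for c in range(n)),
        key=lambda rc: (rc[0] + rc[1], rc[0] if (rc[0] + rc[1]) % 2 else rc[1]),
    )


def rle_encode(values):
    """Collapse each run of equal items into a (value, run_length) pair."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def rle_decode(pairs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for value, count in pairs:
        out.extend([value] * count)
    return out


# A quantized block typically keeps a few low-frequency values and many zeros.
block = [[0] * 8 for _ in range(8)]
block[0][0], block[0][1], block[1][0] = 12, 5, -3

scanned = [block[r][c] for r, c in zigzag_order()]
encoded = rle_encode(scanned)
print(encoded)  # [(12, 1), (5, 1), (-3, 1), (0, 61)]
```

The zigzag scan groups the low-frequency coefficients at the front, so the zeros produced by quantization form one long run that RLE stores as a single pair.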


performed the testing on a standard color test

images Lena, Fruit and Airplane of size 256×256

(see Figure 6). We analyze the results obtained with

III. THE PROPOSED IMAGE COMPRESSION the first, second and finally, the proposed algorithm.

APPROACH All images and tables from the experiments are

In order to obtain the best possible compression given. Standard measures for image compression

ratio (CR), Discrete cosine transform (DCT) has [9], like compression ratio (CR) and peak signal to

been widely used in image and video coding noise ratio (PSNR) and structural similarity (SSIM)

systems, where zigzag scan is usually employed for were used, which are calculated for comparing the

DCT coefficient organization and it is the last stage performance of the proposed approach as per the

of processing a compressed image in a transform

coder, before it is fed to final entropy encoding

stage. The basic idea of the new approach is to

divide the image into 8×8 blocks and then extract

the consecutive non-zero coefficients preceding the

zero coefficients in each block. The decompression

process can be performed systematically and the

number of zero coefficients can be computed by

subtracting the number of non-zero coefficients

from 64 for each block. The block diagram of

proposed image compression approach is shown in

Fig.5.

following representations:

Fig 6. The results of proposed approach, left column represent the original

images, right column represent the compressed images.

Fig 5.The block diagram of proposed image compression approach

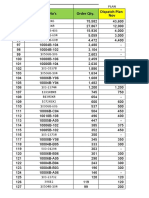

From the results listed in Table (1), the proposed

IV. EXPERIMENTAL RESULTS codec achieves a high performance.

For the implementation and evaluation of the TABLE I

approach we developed a visual basic 6 code and NUMERICAL RESULTS FOR PROPOSED METHOD.


Image      PSNR (dB)   SSIM    CR
Lena       44.67       0.903   10.5
Fruit      42.32       0.879   16.7
Airplane   43.88       0.894   14.5

V. CONCLUSIONS

In this paper an improved color image compression approach was proposed, along with its application to compressing color images. The obtained results show the improvement of the proposed method over recently published work, both in quantitative PSNR terms and, very particularly, in the visual quality of the reconstructed images. Furthermore, it increased the compression rate.

REFERENCES

[1]. Pallavi N. Save and Vishakha Kelkar, “An Improved Image Compression Method using LBG with DCT”, IJERT Journal, Volume-3, Issue-06, June-2014.

[2]. X. O. Zhao and Z. H. He, “Lossless image compression using super-spatial structure prediction,” Signal Processing Letters, IEEE, vol. 17, no. 4, pp. 383–386, 2010.

[3]. W.M. Abd-Elhafiez, “Image compression algorithm using a fast curvelet transform,” International Journal of Computer Science and Telecommunications, vol. 3, no. 4, pp. 43–47, 2012.

[4]. M. Sonka, V. Halva, and T. Boyle, “Image Processing Analysis and Machine Vision”, Brooks/Cole Publishing Company, 2nd Ed., 1999.

[5]. F. Douak, Redha Benzid, Nabil Benoudjit, “Color image compression algorithm based on the DCT transform combined to an adaptive block scanning,” Int. J. Electron. Commun. (AEU), vol. 65, pp. 16–26, 2011.

[6]. M. Mohamed Sathik, K. Senthamarai Kannan and Y. Jacob Vetha Raj, “Hybrid Compression of Color Images with Larger Trivial Background by Histogram Segmentation”, (IJCSIS) International Journal of Compute.

[7]. Makrogiannis S, Economou G, Fotopoulos S. “Region oriented compression of color images using fuzzy inference and fast merging,” Pattern Recognition, 35:1807–20, 2002.

[8]. Alkholidi A, Alfalou A, Hamam H. “A new approach for optical colored image compression using the jpeg standards,” Signal Processing, 87:569–83, 2007.

[9]. G. Sreelekha, P.S. Sathidevi, “An HVS based adaptive quantization scheme for the compression of color images”, Digital Signal Processing, Vol. 20(4), pp. 1129-1149, 2010.

ISSN: 2455-135X http://www.ijcsejournal.org Page 5
