Professional Documents

Culture Documents

Two Dimensional Random Variable

Uploaded by

RajaRaman.GCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Two Dimensional Random Variable

Uploaded by

RajaRaman.GCopyright:

Available Formats

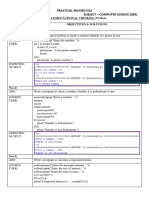

Two Dimensional Random Variables

Two dimensional random variable

Let S be the sample space of a random experiment. Let X and Y be two random variables defined on S. Then the pair (X, Y) is called a two-dimensional random variable.

Discrete bivariate random variable

If both the random variables X and Y are discrete, then (X, Y) is called a discrete bivariate (two-dimensional) random variable.

Joint Probability mass function

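Let X take the values $\{x_1, x_2, \dots, x_n\}$ and Y take the values $\{y_1, y_2, \dots, y_m\}$. Then

$$p(x_i, y_j) = P(X = x_i, Y = y_j) = P(\{X = x_i\} \cap \{Y = y_j\}).$$

The set $\{(x_i, y_j, p(x_i, y_j))\}$ is called the joint probability mass function of (X, Y), provided (i) $p(x_i, y_j) \ge 0$ for all $i, j$ and (ii) $\sum_{i=1}^{n} \sum_{j=1}^{m} p(x_i, y_j) = 1$.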
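As a minimal sketch, a joint PMF over finitely many values can be stored as a dictionary keyed by the pairs $(x_i, y_j)$. The table below is a made-up example (hypothetical values), and `is_valid_joint_pmf` is an illustrative helper name, not part of the notes; exact fractions keep the "sums to 1" check free of rounding error.

```python
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def is_valid_joint_pmf(p):
    """Check p(x_i, y_j) >= 0 for every pair and that all probabilities sum to 1."""
    return all(v >= 0 for v in p.values()) and sum(p.values()) == 1

# P(X = 0, Y = 1) is read directly from the table.
print(joint[(0, 1)])              # 1/4
print(is_valid_joint_pmf(joint))  # True
```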

Marginal Probability Mass Function of X

$\{(x_i, p_X(x_i))\}$ is called the marginal probability mass function of X, where

$$p_X(x_i) = \sum_{j=1}^{m} p(x_i, y_j).$$

Marginal Probability Mass Function of Y

$\{(y_j, p_Y(y_j))\}$ is called the marginal probability mass function of Y, where

$$p_Y(y_j) = \sum_{i=1}^{n} p(x_i, y_j).$$
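The two marginal sums amount to adding the joint table along its rows and its columns. A small sketch, using a hypothetical joint table and an illustrative helper `marginals` (not from the notes):

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def marginals(p):
    """Return (p_X, p_Y): p_X(x_i) sums p(x_i, y_j) over j, p_Y(y_j) sums over i."""
    p_x = defaultdict(Fraction)  # Fraction() is 0
    p_y = defaultdict(Fraction)
    for (x, y), prob in p.items():
        p_x[x] += prob
        p_y[y] += prob
    return dict(p_x), dict(p_y)

p_x, p_y = marginals(joint)
print(p_x)  # {0: Fraction(1, 2), 1: Fraction(1, 2)}
print(p_y)  # {0: Fraction(1, 4), 1: Fraction(3, 8), 2: Fraction(3, 8)}
```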

Conditional Probability Mass Function

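• Of X given $Y = y_j$: $$P_{X/Y}(x_i / y_j) = \frac{p(x_i, y_j)}{p_Y(y_j)}, \quad i = 1, 2, \dots, n \quad (\text{defined when } p_Y(y_j) > 0)$$

• Of Y given $X = x_i$: $$P_{Y/X}(y_j / x_i) = \frac{p(x_i, y_j)}{p_X(x_i)}, \quad j = 1, 2, \dots, m \quad (\text{defined when } p_X(x_i) > 0)$$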
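Each conditional PMF is one column (or row) of the joint table divided by its total. A sketch on a hypothetical table; the helper names `conditional_x_given_y` and `conditional_y_given_x` are illustrative only:

```python
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def conditional_x_given_y(p, y0):
    """P_{X/Y}(x_i / y0) = p(x_i, y0) / p_Y(y0); requires p_Y(y0) > 0."""
    p_y0 = sum(prob for (x, y), prob in p.items() if y == y0)
    if p_y0 == 0:
        raise ValueError("p_Y(y0) must be positive")
    return {x: prob / p_y0 for (x, y), prob in p.items() if y == y0}

def conditional_y_given_x(p, x0):
    """P_{Y/X}(y_j / x0) = p(x0, y_j) / p_X(x0); requires p_X(x0) > 0."""
    p_x0 = sum(prob for (x, y), prob in p.items() if x == x0)
    if p_x0 == 0:
        raise ValueError("p_X(x0) must be positive")
    return {y: prob / p_x0 for (x, y), prob in p.items() if x == x0}

print(conditional_x_given_y(joint, 1))  # {0: Fraction(2, 3), 1: Fraction(1, 3)}
print(conditional_y_given_x(joint, 0))  # {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
```

Each conditional distribution sums to 1, as any PMF must.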

Independent Random Variables

Two random variables X and Y are said to be independent if

$$p(x_i, y_j) = p_X(x_i)\, p_Y(y_j), \quad i = 1, 2, \dots, n;\; j = 1, 2, \dots, m.$$
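Independence has to hold for every pair $(x_i, y_j)$, so a single failing cell is enough to rule it out. A sketch with a hypothetical table and an illustrative helper `is_independent`:

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def is_independent(p):
    """True iff p(x_i, y_j) == p_X(x_i) * p_Y(y_j) for every pair (x_i, y_j)."""
    xs = {x for x, _ in p}
    ys = {y for _, y in p}
    p_x = {x: sum(p.get((x, y), 0) for y in ys) for x in xs}
    p_y = {y: sum(p.get((x, y), 0) for x in xs) for y in ys}
    # Pairs missing from the table have probability 0 and must still factor.
    return all(p.get((x, y), 0) == p_x[x] * p_y[y] for x, y in product(xs, ys))

print(is_independent(joint))  # False: p(0, 1) = 1/4 but p_X(0) p_Y(1) = 3/16

# A table built as p_X(x) * p_Y(y) is independent by construction.
indep = {(x, y): Fraction(1, 2) * q
         for x in (0, 1)
         for y, q in ((0, Fraction(1, 4)), (1, Fraction(3, 4)))}
print(is_independent(indep))  # True
```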

Dr. R. Sujatha / Dr. B. Praba, Maths Dept., SSNCE.

Continuous bivariate random variable

If X and Y are both continuous then (X,Y) is a continuous bivariate random variable.

Joint Probability Density Function

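If (X, Y) is a two-dimensional continuous random variable such that

$$P\left(\left\{x - \tfrac{dx}{2} \le X \le x + \tfrac{dx}{2}\right\} \cap \left\{y - \tfrac{dy}{2} \le Y \le y + \tfrac{dy}{2}\right\}\right) = f_{XY}(x, y)\,dx\,dy,$$

then $f_{XY}(x, y)$ is called the joint pdf of (X, Y), provided (i) $f(x, y) \ge 0$ for all $(x, y) \in R_{XY}$ and (ii) $\iint_{R_{XY}} f(x, y)\,dx\,dy = 1$.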
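Conditions (i) and (ii) can be spot-checked numerically for a candidate density. This sketch assumes the hypothetical pdf $f(x, y) = x + y$ on the unit square (0 elsewhere) and uses a plain midpoint rule; `midpoint_double_integral` is an illustrative helper, and checking (i) on a grid is only a spot check, not a proof.

```python
# Hypothetical joint pdf: f(x, y) = x + y on the unit square, 0 elsewhere.
def f(x, y):
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0

def midpoint_double_integral(g, a, b, c, d, n=200):
    """Approximate the integral of g over [a, b] x [c, d] with an n x n midpoint rule."""
    hx = (b - a) / n
    hy = (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx
        for j in range(n):
            y = c + (j + 0.5) * hy
            total += g(x, y)
    return total * hx * hy

# (i) non-negativity, spot-checked on a 50 x 50 grid; (ii) total probability 1.
grid_min = min(f((i + 0.5) / 50, (j + 0.5) / 50) for i in range(50) for j in range(50))
total = midpoint_double_integral(f, 0.0, 1.0, 0.0, 1.0)
print(grid_min >= 0, round(total, 6))  # True 1.0
```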

Joint Distribution Function

$$F_{XY}(x, y) = P(X \le x, Y \le y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f(u, v)\,dv\,du$$

Note: $f(x, y) = \dfrac{\partial^2 F(x, y)}{\partial x\, \partial y}$
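The relation $f = \partial^2 F / \partial x\,\partial y$ can be checked with a central finite difference. For the hypothetical pdf $f(x, y) = x + y$ on the unit square, integrating gives $F(x, y) = xy(x + y)/2$ there; the helper `mixed_partial` and the step size `h` are illustrative choices.

```python
# Hypothetical joint CDF on the unit square for the pdf f(x, y) = x + y:
# F(x, y) = integral_0^x integral_0^y (u + v) dv du = x * y * (x + y) / 2.
def F(x, y):
    return x * y * (x + y) / 2

def mixed_partial(G, x, y, h=1e-3):
    """Central finite-difference estimate of d^2 G / (dx dy) at (x, y)."""
    return (G(x + h, y + h) - G(x + h, y - h)
            - G(x - h, y + h) + G(x - h, y - h)) / (4 * h * h)

est = mixed_partial(F, 0.3, 0.6)
print(round(est, 6))  # 0.9, matching f(0.3, 0.6) = 0.3 + 0.6
```

Because this F is a cubic polynomial, the central difference is exact up to floating-point rounding.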

Marginal Probability Density Function

• Of X: $f_X(x) = \displaystyle\int_{-\infty}^{\infty} f(x, y)\,dy$

• Of Y: $f_Y(y) = \displaystyle\int_{-\infty}^{\infty} f(x, y)\,dx$
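A numerical sketch of "integrating out" the other variable, again assuming the hypothetical pdf $f(x, y) = x + y$ on the unit square, whose marginals are $x + \tfrac12$ and $y + \tfrac12$. Since f vanishes outside the square, integrating over [0, 1] covers the whole real line; `integrate` is an illustrative midpoint-rule helper.

```python
# Hypothetical joint pdf f(x, y) = x + y on the unit square (0 elsewhere).
def f(x, y):
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0

def integrate(g, a, b, n=1000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (k + 0.5) * h) for k in range(n))

def f_X(x):
    # Integrate out y; outside [0, 1] the integrand is 0.
    return integrate(lambda y: f(x, y), 0.0, 1.0)

def f_Y(y):
    # Integrate out x; outside [0, 1] the integrand is 0.
    return integrate(lambda x: f(x, y), 0.0, 1.0)

print(round(f_X(0.25), 6), round(f_Y(0.8), 6))  # 0.75 1.3  (exact: x + 1/2 and y + 1/2)
```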

Marginal Probability Distribution Function

• Of X: $F_X(x) = \displaystyle\int_{-\infty}^{x} f_X(t)\,dt$

• Of Y: $F_Y(y) = \displaystyle\int_{-\infty}^{y} f_Y(t)\,dt$
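A sketch of the marginal distribution function, assuming the hypothetical marginal density $f_X(t) = t + \tfrac12$ on [0, 1] (the marginal of $f(x, y) = x + y$ on the unit square). On [0, 1] the exact answer is $F_X(x) = x^2/2 + x/2$; the truncation of the integration range is an implementation choice that relies on $f_X$ being 0 outside [0, 1].

```python
# Hypothetical marginal pdf of X derived from f(x, y) = x + y on the unit square.
def f_X(t):
    return t + 0.5 if 0 <= t <= 1 else 0.0

def F_X(x, n=1000):
    """F_X(x) = integral of f_X(t) dt from -inf to x, via the midpoint rule."""
    if x <= 0:
        return 0.0  # f_X is 0 below 0
    upper = min(x, 1.0)  # beyond 1 the density is 0, so F_X stays at 1
    h = upper / n
    return h * sum(f_X((k + 0.5) * h) for k in range(n))

print(round(F_X(0.5), 6), round(F_X(2.0), 6))  # 0.375 1.0
```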

Conditional Probability Density Function

• Of Y given X = x: $f_{Y/X}(y / x) = \dfrac{f(x, y)}{f_X(x)}$, provided $f_X(x) > 0$

• Of X given Y = y: $f_{X/Y}(x / y) = \dfrac{f(x, y)}{f_Y(y)}$, provided $f_Y(y) > 0$
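For a fixed x, the conditional density is a genuine pdf in y, so it should integrate to 1. A sketch with the hypothetical pdf $f(x, y) = x + y$ on the unit square, whose marginal is $f_X(x) = x + \tfrac12$; `f_Y_given_X` is an illustrative name.

```python
# Hypothetical joint pdf f(x, y) = x + y on the unit square (0 elsewhere).
def f(x, y):
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0

def f_X(x):
    # Exact marginal of the pdf above: integral_0^1 (x + y) dy = x + 1/2.
    return x + 0.5 if 0 <= x <= 1 else 0.0

def f_Y_given_X(y, x):
    """f_{Y/X}(y / x) = f(x, y) / f_X(x), defined only where f_X(x) > 0."""
    fx = f_X(x)
    if fx <= 0:
        raise ValueError("f_X(x) must be positive")
    return f(x, y) / fx

# Midpoint-rule integral over y in [0, 1] for the fixed value x = 0.3.
n = 1000
area = sum(f_Y_given_X((k + 0.5) / n, 0.3) for k in range(n)) / n
print(round(f_Y_given_X(0.2, 0.3), 6), round(area, 6))  # 0.625 1.0
```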

Independent random variables

X and Y are said to be independent if $f(x, y) = f_X(x)\, f_Y(y)$ for all x and y, or equivalently $F(x, y) = F_X(x)\, F_Y(y)$.
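A numerical spot check of the factorization $f(x, y) = f_X(x) f_Y(y)$, assuming densities supported on the unit square. The two example pdfs are hypothetical: $4xy = (2x)(2y)$ factors, while $x + y$ does not. Passing a grid check is evidence, not a proof; `factorizes` and `integrate` are illustrative helpers.

```python
def integrate(g, a, b, n=400):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (k + 0.5) * h) for k in range(n))

def factorizes(f, n_grid=20, tol=1e-6):
    """Spot-check f(x, y) == f_X(x) f_Y(y) on a grid (support assumed to be [0, 1]^2)."""
    pts = [(i + 0.5) / n_grid for i in range(n_grid)]
    # Marginals by integrating out the other variable at each grid point.
    f_X = {x: integrate(lambda y: f(x, y), 0.0, 1.0) for x in pts}
    f_Y = {y: integrate(lambda x: f(x, y), 0.0, 1.0) for y in pts}
    return all(abs(f(x, y) - f_X[x] * f_Y[y]) < tol for x in pts for y in pts)

print(factorizes(lambda x, y: 4 * x * y))  # True: 4xy = (2x)(2y)
print(factorizes(lambda x, y: x + y))      # False
```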


Moments of two dimensional random variable

The (m, n)th moment of a two-dimensional random variable (X, Y) is

$$\mu_{mn} = E(X^m Y^n) = \sum_i \sum_j x_i^m y_j^n\, p(x_i, y_j) \quad \text{(discrete case)}$$

$$\phantom{\mu_{mn}} = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x^m y^n f(x, y)\,dx\,dy \quad \text{(continuous case)}$$

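Note: (1) When m = 1, n = 1, we have E(XY).

(2) When m = 1, n = 0, we have

$$E(X) = \sum_i \sum_j x_i\, p(x_i, y_j) = \sum_i x_i\, p_X(x_i) \quad \text{(discrete)}$$

$$E(X) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} x f_X(x)\,dx \quad \text{(continuous)}$$

(3) When m = 0, n = 1, we have

$$E(Y) = \sum_i \sum_j y_j\, p(x_i, y_j) = \sum_j y_j\, p_Y(y_j) \quad \text{(discrete)}$$

$$E(Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} y f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} y f_Y(y)\,dy \quad \text{(continuous)}$$

(4) $$E(X^2) = \sum_i x_i^2\, p_X(x_i) \quad \text{(discrete)}, \qquad E(X^2) = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx \quad \text{(continuous)}$$

(5) $$E(Y^2) = \sum_j y_j^2\, p_Y(y_j) \quad \text{(discrete)}, \qquad E(Y^2) = \int_{-\infty}^{\infty} y^2 f_Y(y)\,dy \quad \text{(continuous)}$$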
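In the discrete case every moment above is a single weighted sum over the joint table. A sketch with a hypothetical table; `moment(p, m, n)` is an illustrative helper computing $E(X^m Y^n)$ (note that Python evaluates `0**0` as 1, matching the convention needed when m or n is 0).

```python
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def moment(p, m, n):
    """(m, n)th moment E(X^m Y^n) = sum_i sum_j x_i^m y_j^n p(x_i, y_j)."""
    return sum(x**m * y**n * prob for (x, y), prob in p.items())

print(moment(joint, 1, 1))  # E(XY):  5/8
print(moment(joint, 1, 0))  # E(X):   1/2
print(moment(joint, 0, 1))  # E(Y):   9/8
print(moment(joint, 2, 0))  # E(X^2): 1/2
print(moment(joint, 0, 2))  # E(Y^2): 15/8
```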

Covariance

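$$\mathrm{Cov}(X, Y) = E[(X - \bar X)(Y - \bar Y)] = E(XY) - \bar X\, \bar Y,$$

where $\bar X = E(X)$ and $\bar Y = E(Y)$.

Note: (1) If X and Y are independent, then E(XY) = E(X)E(Y) (multiplication theorem for expectation). Hence Cov(X, Y) = 0.

(2) $\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{Cov}(X, Y)$.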
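Both the shortcut $E(XY) - \bar X \bar Y$ and Note (2) can be verified exactly on a small table. The table and the constants a = 2, b = 3 are arbitrary hypothetical choices, and `E` is an illustrative expectation helper.

```python
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def E(p, g):
    """Expectation of g(X, Y) under the joint PMF p."""
    return sum(g(x, y) * prob for (x, y), prob in p.items())

mean_x = E(joint, lambda x, y: x)
mean_y = E(joint, lambda x, y: y)
cov = E(joint, lambda x, y: x * y) - mean_x * mean_y  # E(XY) - E(X)E(Y)
var_x = E(joint, lambda x, y: x * x) - mean_x**2
var_y = E(joint, lambda x, y: y * y) - mean_y**2

# Var(aX + bY) computed directly and from the formula in Note (2).
a, b = 2, 3
direct = E(joint, lambda x, y: (a * x + b * y) ** 2) - E(joint, lambda x, y: a * x + b * y) ** 2
formula = a**2 * var_x + b**2 * var_y + 2 * a * b * cov
print(cov, direct, formula)  # 1/16 463/64 463/64
```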

Correlation Coefficient

This is a measure of the strength of the linear relationship between two random variables X and Y.

Karl Pearson's correlation coefficient is


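$$\rho = r(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y},$$

where $\sigma_X$ and $\sigma_Y$ are the standard deviations of X and Y.

Note: The correlation coefficient lies between -1 and 1, that is, $-1 \le \rho \le 1$.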
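A sketch computing ρ for a hypothetical joint table; for this table the exact value works out to $1/\sqrt{39}$, a weak positive linear relationship. The helper `E` is illustrative.

```python
import math
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def E(p, g):
    """Expectation of g(X, Y) under the joint PMF p."""
    return sum(g(x, y) * prob for (x, y), prob in p.items())

mx, my = E(joint, lambda x, y: x), E(joint, lambda x, y: y)
cov = E(joint, lambda x, y: x * y) - mx * my
sx = math.sqrt(E(joint, lambda x, y: x * x) - mx**2)  # sigma_X
sy = math.sqrt(E(joint, lambda x, y: y * y) - my**2)  # sigma_Y
rho = float(cov) / (sx * sy)
print(round(rho, 4))  # 0.1601, inside [-1, 1]
```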

Regression lines

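The regression line of X on Y: $X - \bar X = r \dfrac{\sigma_X}{\sigma_Y} (Y - \bar Y)$

The regression line of Y on X: $Y - \bar Y = r \dfrac{\sigma_Y}{\sigma_X} (X - \bar X)$

Here r is the correlation coefficient of X and Y, and $\bar X = E(X)$, $\bar Y = E(Y)$.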
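Since $r\,\sigma_Y/\sigma_X = \mathrm{Cov}(X, Y)/\mathrm{Var}(X)$ (and symmetrically for the other line), the slopes can be kept exact. A sketch on a hypothetical table; the product of the two slopes equals $r^2$. Names such as `b_yx` and `y_on_x` are illustrative.

```python
from fractions import Fraction

# Hypothetical joint PMF p(x_i, y_j) with X in {0, 1} and Y in {0, 1, 2}.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 4),
}

def E(p, g):
    """Expectation of g(X, Y) under the joint PMF p."""
    return sum(g(x, y) * prob for (x, y), prob in p.items())

mx, my = E(joint, lambda x, y: x), E(joint, lambda x, y: y)
cov = E(joint, lambda x, y: x * y) - mx * my
var_x = E(joint, lambda x, y: x * x) - mx**2
var_y = E(joint, lambda x, y: y * y) - my**2

b_yx = cov / var_x  # r * sigma_Y / sigma_X: slope of the line of Y on X
b_xy = cov / var_y  # r * sigma_X / sigma_Y: slope of the line of X on Y

def y_on_x(x):
    """Regression line of Y on X: Y = mean(Y) + b_yx * (X - mean(X))."""
    return my + b_yx * (x - mx)

def x_on_y(y):
    """Regression line of X on Y: X = mean(X) + b_xy * (Y - mean(Y))."""
    return mx + b_xy * (y - my)

print(b_yx, b_xy, b_yx * b_xy)  # 1/4 4/39 1/39  (the product is r^2)
print(y_on_x(1))                # 5/4
```

Both lines pass through the point of means $(\bar X, \bar Y)$.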

Transformation of random variables

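Let X, Y be random variables with joint pdf $f_{XY}(x, y)$, and let u(x, y) and v(x, y) be two continuously differentiable functions. Then U = u(X, Y) and V = v(X, Y) are random variables. In other words, the random variables (X, Y) are transformed to random variables (U, V) by the transformation u = u(x, y), v = v(x, y). The transformation is assumed to be one-to-one, so that x and y can be written as functions of u and v.

The joint pdf $g_{UV}(u, v)$ of the transformed variables U and V is given by

$$g_{UV}(u, v) = f_{XY}(x, y)\, |J|,$$

where

$$|J| = \left| \frac{\partial(x, y)}{\partial(u, v)} \right| = \left| \begin{vmatrix} \dfrac{\partial x}{\partial u} & \dfrac{\partial y}{\partial u} \\[4pt] \dfrac{\partial x}{\partial v} & \dfrac{\partial y}{\partial v} \end{vmatrix} \right|,$$

i.e., it is the modulus (absolute value) of the Jacobian of the transformation, and $f_{XY}(x, y)$ is expressed in terms of u and v.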
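A short worked example of the Jacobian method, with distributions chosen purely for illustration: let X and Y be independent and uniform on (0, 1), so $f_{XY}(x, y) = 1$ on the unit square, and take $U = X + Y$, $V = X - Y$.

```latex
\begin{aligned}
&x = \tfrac{u+v}{2}, \qquad y = \tfrac{u-v}{2},\\[4pt]
&J = \frac{\partial(x,y)}{\partial(u,v)}
   = \begin{vmatrix} \partial x/\partial u & \partial y/\partial u \\ \partial x/\partial v & \partial y/\partial v \end{vmatrix}
   = \begin{vmatrix} 1/2 & 1/2 \\ 1/2 & -1/2 \end{vmatrix}
   = -\tfrac14 - \tfrac14 = -\tfrac12, \qquad |J| = \tfrac12,\\[4pt]
&g_{UV}(u,v) = f_{XY}\!\left(\tfrac{u+v}{2}, \tfrac{u-v}{2}\right)|J| = \tfrac12
  \quad \text{for } 0 < u+v < 2,\; 0 < u-v < 2, \text{ and } 0 \text{ otherwise.}
\end{aligned}
```

As a check, that region is a square of area 2 in the (u, v) plane, so $g_{UV}$ integrates to $\tfrac12 \cdot 2 = 1$.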

