SAN Protocols and Other Notes

Uploaded by Srinivas Gollanapalli

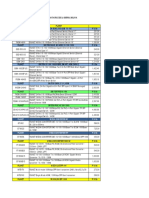

STORAGE PROTOCOLS

FC/FC-AL/FC-SW

ESCON/FICON

InfiniBand

CIFS

NFS*

FCoE

FCoTR

---------------------------------

InfiniBand (abbreviated IB) is a computer-networking communications standard used
in high-performance computing that features very high throughput and very low
latency. It is used for data interconnect both among and within computers.
InfiniBand is also used as either a direct or switched interconnect between
servers and storage systems, as well as an interconnect between storage systems.

As of 2014 it was the most commonly used interconnect in supercomputers. Mellanox
and Intel manufacture InfiniBand host bus adapters and network switches, and in
February 2016 it was reported[2] that Oracle Corporation had engineered its own
InfiniBand switch units and server adapter chips for use in its own product lines
and by third parties. Mellanox IB cards are available for Solaris, RHEL, SLES,
Windows, HP-UX, VMware ESX,[3] and AIX.[4]

It is designed to be scalable and uses a switched fabric network topology. As an
interconnect, IB competes with Ethernet, Fibre Channel, and proprietary
technologies[5] such as Intel Omni-Path.

InfiniBand is a type of communications link for data flow between processors and
I/O devices. In its original single data rate (SDR) form it signals at 2.5
gigabits per second per lane, and it supports up to 64,000 addressable devices.
Because it is also scalable and supports quality of service (QoS) and failover,
InfiniBand is often used as a server interconnect in high-performance computing
(HPC) environments.
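As a rough worked example of link throughput, the sketch below assumes figures
that are not stated in these notes: an SDR signaling rate of 2.5 Gbit/s per lane,
8b/10b line encoding (8 data bits in every 10 transmitted bits), and the common
link widths of 1, 4, and 12 lanes. The function names are illustrative only.

```python
# Assumptions (not from the notes): SDR signals at 2.5 Gbit/s per lane and
# uses 8b/10b encoding, so only 8 of every 10 bits on the wire carry data.
SDR_SIGNALING_GBPS = 2.5
ENCODING_EFFICIENCY = 8 / 10
LINK_WIDTHS = (1, 4, 12)  # lanes per link: 1x, 4x, 12x


def data_rate_gbps(lanes, signaling_gbps=SDR_SIGNALING_GBPS,
                   efficiency=ENCODING_EFFICIENCY):
    """Usable data rate of a link in gigabits per second."""
    return lanes * signaling_gbps * efficiency


def data_rate_gbytes(lanes):
    """Usable data rate of a link in gigabytes per second."""
    return data_rate_gbps(lanes) / 8


for lanes in LINK_WIDTHS:
    print(f"{lanes}x SDR: {data_rate_gbps(lanes):.1f} Gbit/s "
          f"= {data_rate_gbytes(lanes):.2f} GB/s")
```

Under these assumptions a single lane carries about 2 Gbit/s of data, so
multi-gigabyte-per-second figures only appear on wider links or later generations.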

The internal data flow system in most PCs and server systems is inflexible and
relatively slow. As the amount of data coming into and flowing between components
in the computer increases, the existing bus system becomes a bottleneck.

Instead of sending data in parallel (typically 32 bits at a time, but in some
computers 64 bits) across the backplane bus, InfiniBand specifies a serial
(bit-at-a-time) bus. Fewer pins and other electrical connections are required,
saving manufacturing cost and improving reliability. The serial bus can carry
multiple channels of data at the same time in a multiplexed signal. InfiniBand
also supports multiple memory areas, each of which can be addressed by both
processors and storage devices.

The InfiniBand Trade Association views the bus itself as a switch because control
information determines the route a given message follows in getting to its
destination address. InfiniBand uses 128-bit global addresses in the Internet
Protocol Version 6 (IPv6) format, which enables an almost limitless amount of
device expansion.
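To illustrate the 128-bit, IPv6-style addressing mentioned above, here is a
minimal sketch. It assumes that a global identifier (GID) is formed from a 64-bit
subnet prefix followed by a 64-bit port GUID, and that the default subnet prefix
is the link-local fe80::/64. The GUID value and the function name `make_gid` are
hypothetical and chosen only for the example.

```python
import ipaddress

# Assumption: default subnet prefix is the IPv6 link-local prefix fe80::/64.
DEFAULT_SUBNET_PREFIX = 0xFE80000000000000


def make_gid(port_guid, subnet_prefix=DEFAULT_SUBNET_PREFIX):
    """Combine a 64-bit subnet prefix and a 64-bit GUID into a 128-bit GID."""
    if not 0 <= port_guid < 2**64:
        raise ValueError("port GUID must fit in 64 bits")
    if not 0 <= subnet_prefix < 2**64:
        raise ValueError("subnet prefix must fit in 64 bits")
    return ipaddress.IPv6Address((subnet_prefix << 64) | port_guid)


example_guid = 0x0002C90300A1B2C3  # hypothetical port GUID
print(make_gid(example_guid))      # fe80::2:c903:a1:b2c3
```

Printing the GID through `ipaddress.IPv6Address` shows why it reads like an IPv6
address even though no IP traffic is necessarily involved.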

With InfiniBand, data is transmitted in packets that together form a communication
called a message. A message can be a remote direct memory access (RDMA) read or
write operation, a channel send or receive message, a reversible transaction-based
operation, or a multicast transmission. Like the channel model many mainframe
users are familiar with, all transmission begins or ends with a channel adapter.
Each processor (your PC or a data center server, for example) has what is called
a host channel adapter (HCA), and each peripheral device has a target channel
adapter (TCA). These adapters can potentially exchange information that ensures
security or work with a given quality of service level.
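The packet-and-message relationship described above can be sketched as follows.
This is a simplified model, not the InfiniBand wire format: the `Packet` fields
and the `segment` and `reassemble` helpers are hypothetical, and the list of
permitted MTU sizes (256 to 4096 bytes) is an assumption not stated in these
notes.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Assumption: payload MTU is one of these sizes, in bytes.
VALID_MTUS = (256, 512, 1024, 2048, 4096)


@dataclass(frozen=True)
class Packet:
    sequence: int   # position of this packet within the message
    last: bool      # True for the final packet of the message
    payload: bytes


def segment(message: bytes, mtu: int = 2048) -> List[Packet]:
    """Split one message into packets of at most `mtu` payload bytes."""
    if mtu not in VALID_MTUS:
        raise ValueError(f"unsupported MTU: {mtu}")
    # An empty message still produces one (empty) packet.
    chunks = [message[i:i + mtu] for i in range(0, len(message), mtu)] or [b""]
    return [Packet(i, i == len(chunks) - 1, c) for i, c in enumerate(chunks)]


def reassemble(packets: Iterable[Packet]) -> bytes:
    """Rebuild the message, tolerating out-of-order arrival."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    if not ordered or not ordered[-1].last:
        raise ValueError("final packet is missing")
    if [p.sequence for p in ordered] != list(range(len(ordered))):
        raise ValueError("packet sequence has gaps or duplicates")
    return b"".join(p.payload for p in ordered)


message = bytes(range(256)) * 20           # 5120-byte example message
packets = segment(message, mtu=2048)
print([len(p.payload) for p in packets])   # [2048, 2048, 1024]
print(reassemble(reversed(packets)) == message)  # True
```

In this model the sending channel adapter would call `segment` and the receiving
adapter would call `reassemble`; real adapters do this in hardware.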

The InfiniBand specification was developed by merging two competing designs:
Future I/O, developed by Compaq, IBM, and Hewlett-Packard, and Next Generation
I/O, developed by Intel, Microsoft, and Sun Microsystems.

----------------------------------------------------
