Psychology will be a Much Better Science When We Change the Way We Analyze Data

Geoffrey R. Loftus

University of Washington

Current Directions in Psychological Science (1996, 161-171)

Author note: The writing of this manuscript was supported by an NIMH grant to the author. I thank Nelson Cowan, David Irwin, Gerd Gigerenzer, Elizabeth Loftus, Michele Nathan, and especially Emanuel Donchin for their very insightful and helpful comments on early versions of this manuscript. Correspondence should be addressed to: gloftus@u.washington.edu.

In 1964, I entered the field of Psychology because I believed that within it dwelt some of the most fundamental and challenging problems of the extant sciences. Who could not be intrigued, for example, by the relation between consciousness and behavior, or the rules guiding interactions in social situations, or the processes that underlie development from infancy to maturity?

Today, in 1996, my fascination with these problems is undiminished. But I've developed a certain angst over the intervening thirty-something years: a constant, nagging feeling that our field spends a lot of time spinning its wheels without really making all that much progress. This problem shows up in obvious ways, for instance in the regularity with which findings seem not to replicate. It also shows up in subtler ways; for instance, one doesn't often hear Psychologists saying, "Well this problem is solved now; let's move on to the next one" (as, for example, Johannes Kepler must have said over three centuries ago, after he had cracked the problem of describing planetary motion).

I've come to believe that at least part of this problem revolves around our tools, particularly the tools that we use in the critical domains of data analysis and data interpretation. What we do, I sometimes feel, is akin to trying to build a violin using a stone mallet and a chain-saw. The tool-to-task fit is not all that good, and as a result, we wind up building a lot of poor-quality violins.

My purpose here is to elaborate on these issues. In what follows, I will summarize our major data-analysis and data-interpretation tools, and describe what I believe to be amiss with them. I will then offer some suggestions for change.

The Universality of Null Hypothesis Significance Testing

The vast bulk of data analysis and data interpretation in the social and behavioral sciences is carried out using a set of techniques collectively known as null hypothesis significance testing (NHST). The logic of NHST goes as follows.

1. One begins with the hypothesis that some independent variable will have an effect on some dependent variable. At its most general level, the hypothesis can be expressed as:

$\text{not}\ (\mu_1 = \mu_2 = \dots = \mu_J)$ (Eq. 1)

where $\mu_1 \dots \mu_J$ are the means[1] of J population distributions of the dependent variable that correspond to J levels of the independent variable. Equation 1 is generically referred to as an "alternative hypothesis," or $H_1$.

2. An experiment is conducted in which J random samples of the dependent variable are obtained, one sample for each level of the independent variable. This yields the observed sample means $M_1 \dots M_J$, which are estimates of the population means $\mu_1 \dots \mu_J$. Generally it is not true that $M_1 = M_2 = \dots = M_J$; i.e., there will always be some differences among the sample means. What needs to be determined is whether the observed differences among the sample means are due only to errors in measurement, and/or to noise, or whether they are due, at least in part, to corresponding differences among the population means. Informally, the investigator needs to provide a convincing argument that the observed effect is "real."

3. To this end, the investigator endeavors to compute the probability (known as p)[2] of observing differences among the M's as great as those that were actually observed given that, in fact, the J population means are all equal, i.e., that

$\mu_1 = \mu_2 = \dots = \mu_J$ (Eq. 2)

This hypothesis that the population means are equal is referred to as the "null hypothesis," or $H_0$.

4. Based on a comparison of the computed value of p with a criterion value of p known as α (α is usually set at 0.05), the investigator makes a binary decision. If p is less than α, then the investigator makes a strong decision to "reject $H_0$" in favor of $H_1$ (a decision which is usually phrased as, "the observed effect is statistically significant"). If p is greater than α, then the investigator makes a weak decision to "fail to reject $H_0$."

Two types of errors can be committed in this setting. One commits a Type-I error when one incorrectly rejects a true null hypothesis. If the null hypothesis is true, then the probability of a Type-I error is, by definition, equal to α (typically .05). One commits a Type-II error when one incorrectly fails to reject a false null hypothesis. If the null hypothesis is false, a Type-II error is committed with probability β. In general, we do not know the value of β because we have no information, and no assumptions, regarding the values of the actual µ's, given that the null hypothesis is false. Statistical power is defined to be (1 - β), which is interpreted as the probability of correctly rejecting a false null hypothesis. Because β is not generally known, neither is power.

5. Finally, based on a series of such decisions (that is, rejecting, or failing to reject, a series of null hypotheses), the investigator tries to make sense of the data set, no matter how complex the data set might be.

[1] Actually NHST can be applied to any population parameter. I use means here because means are by far the parameter of greatest concern in social science experiments.

[2] A brief note of clarification is in order here. The entity I have referred to as "p", which is computed from the data, is Fisher's exact level of significance (Fisher, 1925; 1935). The related entity known as "α", described below, is the Neyman-Pearson probability of a Type-I error, which is decided upon before the data are collected. The Fisher and Neyman-Pearson approaches to statistics are quite different but, as splendidly described by Gigerenzer, Swijtink, Porter, Daston, Beatty, & Kruger (1989, pp. 78-109), they have been "hybridized" over the years and it is the resulting mish-mash that has been almost universally taught as "the statistical method" over the past half century. A detailed analysis of this issue is beyond the scope of this article, but Gigerenzer et al. make a convincing case that the confusion of the two approaches is responsible for much of what has gone astray with modern statistical practice. The description of NHST that I provide here, and which I will inveigh against, is essentially a description of this commonly used hybridized approach.

Although variants of this procedure constitute the primary means of making conclusions from the vast majority of psychology experiments, I do not believe that it is a fruitful way of interpreting data or understanding psychological phenomena. On the contrary, I believe that reliance on NHST has channeled our field into a series of methodological cul-de-sacs, and it's been my observation over the years (particularly over my four years as editor of Memory & Cognition) that conclusions made entirely or even primarily based on NHST are at best severely limited, and at worst highly misleading. Below I will articulate the reasons for these beliefs.

I'm by no means the first to issue such charges. Periodically a book or an article will appear, decrying the enormous reliance we place on NHST[3]. Sadly, however, although such airings of the issues occasionally attract attention, they have not (up until now, anyway) impelled widespread action[4]. They have been carefully crafted and put forth for consideration, only to just kind of dissolve away in the vast acid bath of existing methodological orthodoxy.

[3] A sample of these writings is, in chronological order: Tyler (1931); Jones (1955); Nunnally (1960); Rozeboom (1960); Grant (1962); Bakan (1966); Meehl (1967); Lykken (1968); Carver (1978); Meehl (1978); Berger & Berry (1988); Hunter & Schmidt (1989); Gigerenzer, Swijtink, Porter, Daston, Beatty, & Kruger (1989); Rosnow & Rosenthal (1989); Cohen (1990); Meehl (1990); Loftus (1991); Carver (1994); Cohen (1994); Loftus & Masson (1994); Maltz (1994); Schmidt (1996); and Schmidt & Jones (1997).

[4] This situation may finally be changing. Symposia at both the 1996 APS convention and the 1996 APA convention have aired the shortcomings of NHST as the primary data-analysis technique in the social sciences; see also Shea (1996). An APA task force has been set up to study the value of NHST. At least one journal editor has tried to discourage NHST (Loftus, 1993a, which provoked the caustic observation by Greenwald, Gonzalez, Harris, & Guthrie, 1996, that every recent empirical article in Loftus's journal, Memory & Cognition, has used NHST nonetheless).

I have two goals in this article: first to summarize some of the major problems with NHST, and second to suggest some alternative techniques for extracting more insight and understanding from a data set.
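To make the NHST procedure described above concrete, here is a minimal Python sketch for the simplest case of J = 2 conditions. It is an illustration under assumptions that are not in the article: normally distributed scores, a known common standard deviation `sigma` (so that a z-test applies), and hypothetical values for the group size `n` and the true difference `delta`. The function names are likewise made up for this example. The sketch simulates many experiments and counts how often the null hypothesis is rejected. With `delta = 0` the rejection rate estimates the Type-I error rate, which should sit near α. Power, by contrast, can only be computed once a specific quantitative alternative (a value of `delta`) has been assumed.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for p-values and power


def two_sided_p(sample1, sample2, sigma):
    """p-value for H0: mu1 == mu2, assuming a known common sigma (z-test)."""
    n1, n2 = len(sample1), len(sample2)
    standard_error = sigma * (1 / n1 + 1 / n2) ** 0.5
    mean_difference = sum(sample2) / n2 - sum(sample1) / n1
    z = mean_difference / standard_error
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def rejection_rate(delta, n=10, sigma=1.0, alpha=0.05, n_experiments=2000, seed=1):
    """Fraction of simulated experiments in which H0 is rejected (p < alpha).

    delta is the assumed true difference mu2 - mu1 (hypothetical value).
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        group1 = [rng.gauss(0.0, sigma) for _ in range(n)]
        group2 = [rng.gauss(delta, sigma) for _ in range(n)]
        if two_sided_p(group1, group2, sigma) < alpha:
            rejections += 1
    return rejections / n_experiments


def analytic_power(delta, n=10, sigma=1.0, alpha=0.05):
    """Power (1 - beta) of the same z-test for a specified delta."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = delta / (sigma * (2 / n) ** 0.5)
    return (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)


if __name__ == "__main__":
    print("Rejection rate when H0 is true:", rejection_rate(delta=0.0))
    print("Simulated power for delta = 1: ", rejection_rate(delta=1.0))
    print("Analytic power for delta = 1:  ", round(analytic_power(1.0), 3))
```

Note that `analytic_power` needs `delta` as an input. Without a quantitative alternative hypothesis there is nothing to plug in, which is why β and power are usually unknown in practice.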


Six Things to not Like about NHST

In this section, I will articulate six major problems with NHST. As I have indicated, they have been described before. But they bear repeating, and it is useful to consider them in concert.

(1) The Usual Impossibility of a Typical Null Hypothesis

NHST usually revolves around the testing of a null hypothesis that couldn't really be true to begin with. To illustrate, suppose one presented subjects with digits on a computer screen and were interested in effects of stimulus duration on the subsequent recall of the digits. One might run an experiment in which digit strings were shown for one of five exposure durations ranging from, say, 10 to 100 ms, measuring the proportion of correctly recalled digits in each condition. In the usual hypothesis-testing framework, one would establish the following null hypothesis:

$\mu_1 = \mu_2 = \mu_3 = \mu_4 = \mu_5$ (Eq. 3)

where the µ's refer to the population means of percent digits recalled for each of the five exposure durations. Note that the logic of NHST demands that the equal signs in Equation 3 mean equal to an infinite number of decimal places. If one weakens this requirement such that "=" means "pretty much equal," then one must add additional assumptions specifying what is meant by "pretty much." Although the mathematical machinery for doing this has been worked out (e.g., Greenwald, 1975; Hays, 1973; Serlin, 1993), it is rarely (if ever) implemented in practice.

This null hypothesis of identical population means cannot be literally correct. As Paul Meehl (1967) has pointed out, "Considering...that everything in the brain is connected with everything else, and that there exist several 'general state-variables' (such as arousal, attention, anxiety and the like) which are known to be at least slightly influenceable by practically any kind of stimulus input, it is highly unlikely that any psychologically discriminable situation which we apply to an experimental subject would exert literally zero effect on any aspect of performance." Alternatively, the µ's can be viewed as measurable values on the real-number line. Any two of them being identical implies that their difference (also a measurable value on the real-number line) is exactly zero, which has a probability of zero[5].

[5] One caveat is in order here. There are some experiments in which a null hypothesis could genuinely be true (see, e.g., Frick, 1995, for a discussion of this topic). A good example (attributable to Greenwald, et al., 1996) is a qualitative null hypothesis such as that a defendant in a murder case is actually the murderer. In such a case, the null hypothesis could certainly be true, and rejecting it (say based on DNA matches) would be a meaningful conclusion. However, these kinds of experiments are the exception rather than the rule in the social sciences.

Accordingly, differences in exposure duration must lead to performance differences even if such differences are small, and the relevant question is not really, "Are there any differences among the population means?" Rather, the relevant questions are: (1) how big are the differences; (2) are they big enough for the investigator to care about; and if so, (3) what pattern do they form? In short, testing the null hypothesis of Equation 2 can't provide new information. All it can do is to indicate whether there is enough statistical power to detect whatever differences among the population means must be there to begin with. As I have noted elsewhere (Loftus, 1995), rejecting a typical null hypothesis is like rejecting the proposition that the moon is made of green cheese. The appropriate response would be: "Well yes, ok...but so what?"

(2) "Significance" vs the Underlying Pattern of Population Means

A finding of "statistical significance" only constitutes evidence (and vague evidence at that, as we shall see) that a null hypothesis of the sort embodied in Equation 2 is false. Such a finding provides no information about the form of the underlying pattern of population means, which is presumably what's important for making scientific conclusions.

There are several ways of dealing with this problem. First, one can address it using post hoc tests, whereby reject/fail-to-reject decisions are made about particular pairs of means. But post hoc tests have problems. First, within the hypothesis-testing framework, the more such tests are carried out, the greater is the probability of committing at least one Type-I error. It is possible to adjust for this problem, but only at the expense of raising the probability of committing a Type-II error. Second, and more generally, post hoc tests focus on specific pairs of means,


which, when there are more than two conditions, is only an indirect way of assessing the entire pattern of means.

A second means of addressing the problem is via planned comparisons. Use of planned comparisons entails first generating a pattern of weights (one weight per experimental condition) that constitute the prediction of some experimental hypothesis about the overall pattern of population means. The correlation between the weights and the observed sample means then constitutes (essentially) a measure of how good the hypothesis is. Use of planned comparisons can be an informative and efficient process. The problem with planned comparisons, however, is that in practice, they are rarely used. I will have more to say about planned comparisons in a later section.

(3) Power

The third problem has to do with lack of attention to statistical power. NHST has come to revolve critically around the avoidance of Type-I errors. This occurs mainly because the probability of a Type-I error (α) can be computed. In contrast, the probability of a Type-II error (β) and concomitantly power (1 - β) usually cannot be computed. This is because computation of β and power requires a specific, quantitative hypothesis (e.g., $\mu_2 = \mu_1 + 10$ in a two-condition experiment), and such quantitative hypotheses are exceedingly rare in the social sciences.

Lack of power analysis is particularly troublesome when an investigator bases a conclusion on the proposition that some null hypothesis is true (rather than making the logically correct decision of "failing to reject the null hypothesis"). In such a case one cannot be sure whether there are in fact relatively small differences among the population means (a conclusion that can only be made when there is high power) or whether there may be large differences among population means that are undetected (a conclusion that is implied by low power). With high power, an investigator may be justified in accepting the null hypothesis "for all intents and purposes." The lower the power, the less acceptable is such a conclusion.

As noted, lack of power analysis often stems from the lack of quantifiable alternative hypotheses that characterizes the social sciences in general, and Psychology in particular. Nonetheless, there are ways of conveying the overall state of statistical power in some experiment (particularly through use of confidence intervals, as will be illustrated in an example below).

(4) The Artificial "Effects/Non-Effects" Dichotomy

A related problem is not, strictly speaking, a problem in the logic of NHST. Rather, it is a problem that arises because investigators, like all humans, are averse to making decisions that are both complicated and weak, such as, "we fail to conclude that the null hypothesis is false." Rather, people prefer simple strong decisions such as, "the null hypothesis is true." This fact of human nature fosters an artificial dichotomy that revolves around the arbitrary nature of the .05 criterion α level.

Most people, if pressed, will agree that there is no essential difference between finding, say, that p = .050 and finding that p = .051. However, investigators, journal editors, reviewers, and scientific consumers often forget this and behave as if the .05 cutoff were somehow real rather than arbitrary. Accordingly, the world of perceived psychological reality tends to get divided into "real effects" (p ≤ .05) and "non-effects" (p > .05). Statistical conclusions about such "real effects" and "non-effects" made in Results sections then somehow get sanctified and transmuted into conclusions that endure into Discussion sections and beyond, where they insidiously settle in and become part of our discipline's general knowledge structure[6]. The mischief thereby stirred up is incalculable. For instance, when one experiment shows a significant effect (p ≤ .05), and an attempted replication shows no significant effect (p > .05), a "failure to replicate" is proclaimed. Feverish activity ensues, as method sections are scoured and new experiments run, in an effort to understand the circumstances under which the effect does or does not show up, and all because of an arbitrary cutoff at the .05 α level. No wonder there is an epidemic of "conflicting" results in psychological research! This state of affairs is analogous to a chaotic phenomenon in which small initial differences lead to enormous differences in the eventual outcomes. In the case of data analysis, chaos is inimical to understanding, and it is more appropriate that similar results (e.g., p = 0.050 and p = 0.051) yield similar conclusions rather than entirely different conclusions.

[6] See Rosenthal & Gaito, 1963, for an interesting empirical demonstration of this assertion.

(5) The Hypothesis-Testing Tail Wags the Theory-Construction Dog

Central to NHST is computation of p, the probability of a Type-I error. However, this


probability is difficult or impossible to compute unless routine, simplifying assumptions are made about the nature of the psychological processes under consideration. These assumptions then insinuate themselves into, and become integral to, much of psychological theory. Some of the most common such assumptions are these:

1. The dependent variable is a linear combination of a collection of "effects": effects due to the independent variables, to the interactions among the independent variables, and to various sources of "error."

2. The errors are distributed normally.

3. The variances of the error distributions are equal across various conditions.

Thus, the nature of the data-analysis technique generally dictates the nature of psychological theory which, in turn, engenders strong biases against formulating theories incorporating other perhaps more realistic and/or interesting assumptions. Accordingly, psychological theory becomes generic linear-model theory and a lot of potential for insight is lost. The situation might be summarized as, "Off the shelf assumptions produce off the shelf conclusions."

To illustrate this problem, suppose an experiment is designed to investigate whether the rate of forgetting depends on degree of original learning. In this experiment, word lists are taught to subjects whose recall is tested following intervals that vary from zero to five days. There are two groups of subjects. The high-learning subjects are allowed to learn the lists to a criterion level of 100% correct, while low-learning subjects learn the lists to a lower criterion level. The major data from this experiment are forgetting curves of the sort shown in Figure 1: here, memory performance is plotted as a function of retention interval.

[Figure 1. Forgetting curves following high and low learning. Performance (p) is plotted against retention interval t (days), with one curve for high original learning and one for low original learning. Horizontal lines are meant to indicate that the two curves are horizontally parallel.]

The default data-analysis procedure in such an experiment would be to carry out a two-way analysis of variance (ANOVA). Let us suppose that the experimental power is sufficiently great that the ANOVA reveals statistically significant main effects of both learning level and retention interval, along with a significant interaction. It is evident in Figure 1 that the form of the interaction indicates a shallower "slope" for the low-learning than for the high-learning condition (i.e., the vertical difference between the curves becomes smaller with increasing retention interval). The typical conclusion issuing from these observations would be that forgetting is slower following low learning than following high learning (this logic was used by Slamecka & McElree, 1983[7]).

[7] Actually Slamecka & McElree found no significant interaction between degree of original learning and retention interval, and hence concluded that forgetting rate did not depend on degree of original learning. However, different data sets using the same general paradigm show different forms of interactions that would lead variously to the conclusion that higher-learning forgetting is faster, that higher-learning forgetting is slower, and that high- and low-learning forgetting do not differ (a meta-finding that should, in and of itself, provoke suspicion that something is fundamentally amiss). In any event, it is the logic of the data-analysis technique, not the conclusion, that is primarily at issue here.

This standard data-analysis procedure, along with the concomitant conclusion, would mask a very interesting regularity in the data: As is indicated by the horizontal lines on the figure, the horizontal difference between the high-learning and low-learning forgetting curves is constant. As shown by Loftus (1985a; 1985b; see also Loftus & Bamber, 1990) such horizontal equality is, under very general assumptions, a necessary and sufficient condition to infer equal high- and low-learning forgetting rates. Indeed, the Figure-1 curves were generated from exponential decay equations of the form:

High learning: $p = e^{-0.3t}$

Low learning: $p = e^{-0.3(t+2)}$

where p is performance and t is forgetting time (in days). The high-learning curve is the one that starts at p = 1 when t = 0, matching the 100% learning criterion. Here, the equal forgetting rates are expressed by the same exponential decay parameter (0.3) in both equations.
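The contrast between vertical and horizontal differences can be checked numerically. The short Python sketch below takes the two Figure-1 curves, exp(-0.3 t) and exp(-0.3 (t + 2)), and computes both kinds of difference. The function names, the choice of retention intervals and the choice of performance levels are illustrative assumptions, not part of the original analysis.

```python
import math

DECAY_RATE = 0.3   # exponential decay parameter shared by both curves
TIME_SHIFT = 2.0   # horizontal offset (days) between the two curves


def upper_curve(t):
    """Performance on the curve that starts at 1.0: p = exp(-0.3 t)."""
    return math.exp(-DECAY_RATE * t)


def lower_curve(t):
    """Performance on the shifted curve: p = exp(-0.3 (t + 2))."""
    return math.exp(-DECAY_RATE * (t + TIME_SHIFT))


def time_to_reach(p, shift=0.0):
    """Retention interval at which a curve with the given shift falls to level p."""
    return -math.log(p) / DECAY_RATE - shift


def vertical_differences(times):
    """Difference in performance between the curves at fixed retention intervals."""
    return [upper_curve(t) - lower_curve(t) for t in times]


def horizontal_differences(levels):
    """Difference in retention interval between the curves at fixed performance."""
    return [time_to_reach(p) - time_to_reach(p, TIME_SHIFT) for p in levels]


if __name__ == "__main__":
    times = [0, 1, 2, 3, 4, 5]
    levels = [0.5, 0.4, 0.3, 0.2]
    print("vertical:  ", [round(d, 3) for d in vertical_differences(times)])
    print("horizontal:", [round(d, 3) for d in horizontal_differences(levels)])
```

The vertical differences shrink as the retention interval grows, which is what a linear-model interaction test would pick up. The horizontal differences stay at 2 days for every performance level, reflecting the identical decay parameter.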


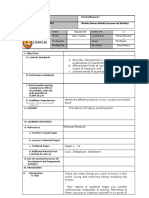

Table 1. Performance as a function of exposure duration (ms) for four conditions

| Condition | 20 | 50 | 80 | 110 | 140 | 170 | 200 | 230 |
|-----------|-------|-------|-------|-------|-------|-------|-------|-------|
| NVE/HU | 0.287 | 0.503 | 0.843 | 1.005 | 1.468 | 1.664 | 2.102 | 2.257 |
| VE/HU | 0.461 | 1.192 | 1.399 | 2.360 | 3.008 | 3.236 | 3.908 | 4.649 |
| NVE/LU | 0.099 | 0.536 | 1.192 | 1.461 | 1.626 | 2.048 | 2.657 | 2.874 |
| VE/LU | 0.683 | 1.475 | 2.822 | 3.747 | 4.863 | 5.397 | 6.861 | 7.849 |

Notes: NVE: No Verbal Encoding. VE: Verbal Encoding. HU: High Uncertainty. LU: Low Uncertainty.
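As a rough illustration of how the values in Table 1 relate to the three predictions described for this data set (linearity, a steeper slope with verbal encoding, and a steeper slope with low uncertainty), the following Python sketch fits an ordinary least-squares line to each condition. The data are copied from Table 1. The helper `least_squares_fit` and the dictionary layout are assumptions made for this example, and the fit treats the tabled values as simple points rather than reproducing any analysis from the article.

```python
DURATIONS_MS = [20, 50, 80, 110, 140, 170, 200, 230]

# Performance values copied from Table 1 (one list per condition).
TABLE_1 = {
    "NVE/HU": [0.287, 0.503, 0.843, 1.005, 1.468, 1.664, 2.102, 2.257],
    "VE/HU": [0.461, 1.192, 1.399, 2.360, 3.008, 3.236, 3.908, 4.649],
    "NVE/LU": [0.099, 0.536, 1.192, 1.461, 1.626, 2.048, 2.657, 2.874],
    "VE/LU": [0.683, 1.475, 2.822, 3.747, 4.863, 5.397, 6.861, 7.849],
}


def least_squares_fit(xs, ys):
    """Return (slope, intercept, r_squared) of the best-fitting straight line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


FITS = {name: least_squares_fit(DURATIONS_MS, ys) for name, ys in TABLE_1.items()}

if __name__ == "__main__":
    for name, (slope, intercept, r_squared) in FITS.items():
        print(f"{name}: slope per ms = {slope:.4f}, "
              f"intercept = {intercept:.3f}, r^2 = {r_squared:.3f}")
```

Comparing the four fitted slopes is a compact numerical counterpart to looking at a plot of the same data. It summarizes the pattern of means directly instead of issuing a series of reject or fail-to-reject decisions.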

In this example, the principal finding is (or should be) that the horizontal difference between the two curves is constant. It is this finding that would imply no difference between high- and low-learning forgetting rates. However, investigators working within the hypothesis-testing framework would tend to miss this critical regularity, or would dismiss it, for at least two reasons. First, it is not immediately obvious how a standard significance test could be carried out that is relevant to the finding[8], and without a significance test a finding is not generally deemed "valid." Second, the logic of the linear model within which standard ANOVA is couched focuses on differences between the dependent variable at a fixed level of the independent variable (vertical differences), rather than differences between the independent variable at a fixed level of the dependent variable (horizontal differences).

[8] Which isn't to say such a test couldn't be invented; it simply isn't part of general statistical knowledge or (what is probably more important) part of present statistics computer packages.

(6) NHST Provides Only Imprecise Information about the Validity of the Null Hypothesis

The final problem, which has been hammered at by Bayesian statisticians[9] for decades, is this. By convention, one rejects some null hypothesis when

(1) p(observed data | null hypothesis) < 0.05,

while rejecting the null hypothesis implies the conclusion that

(2) p(null hypothesis | observed data) is small.

(What else could be meant by the phrase "reject the null hypothesis"?) But without additional information, there is no logical basis for concluding the validity of (2) given the finding embodied in (1). Indeed, the probability of the null hypothesis given the data could be shown to be anything given suitable assumptions about the prior (pre-data) probability that the null hypothesis is true. Without specific assumptions about this prior probability, the exact probability of the null hypothesis given the observed data is unknown. In short, the common belief that the precise quantity, ".05", refers to anything meaningful or interesting is illusory.

[9] e.g., Berger & Berry (1988); Winkler (1993); see also Cohen (1994).

Alternatives

I now suggest four (by no means mutually exclusive) alternatives to traditional NHST. My major goal in making each of these suggestions is simple and modest: it is to increase our ability to understand what a data set is trying to tell us. These techniques are not fancy or esoteric. They're just sensible.

(1) Plot Data Rather than Presenting Them as Tables-Plus-F-and-p-Values

In a previous article (Loftus, 1993b) I described some fictional data collected by a fictional psychologist named Jennifer Loeb. The story went as follows. Loeb was interested in memory for visual material and carried out a task in which visual stimuli were displayed and then recalled. There were three independent variables in Loeb's experiment, all varied at the time of stimulus presentation, which were: stimulus exposure duration (eight values, ranging from 20 to 230 ms), verbal encoding (prohibited or required), and stimulus spatial uncertainty (high or low).

Based on a specific theory, Loeb had three predictions. First, she predicted her performance measure to be a linear function of stimulus exposure duration. Second, she predicted the slope of this function to be higher with verbal encoding. Third, she predicted the slope to be higher with lower spatial uncertainty.

Table 1, which shows a common way of presenting data like Loeb's, lists the values of her performance measure for all 8 x 2 x 2 = 32 conditions. Accompanying NHST results (long compendia of F-ratios and p-values corresponding to the main ANOVA plus subsidiary tests) are generally provided as part of the text (often spanning many tedious pages).

Most people find such tabular-cum-text data presentation difficult to assimilate. That isn't surprising. Decades of cognitive research, plus millennia of common sense, teach us that the human mind isn't designed to integrate information that is presented in this form. There's too much of it, and it can't be processed in parallel[10].

[10] One might argue that tables are useful when a reader needs exact values of the data points. However, such situations are quite rare, and when they do occur, exact values are obtainable from the investigators, a process that is particularly easy in these days of electronic communication.

An alternative way of presenting Loeb's data is shown in Figure 2. Here, performance is plotted as a function of exposure duration for the verbal conditions (top panel) and the non-verbal conditions (bottom panel). The two curves within each panel are for the low-uncertainty and high-uncertainty conditions. Best fitting linear functions are drawn through the data points. With the data presented like this, one can acquire in a glance (or at most, a couple of glances) the same information that it would have taken practically forever to get out of Table 1. A picture really is worth 1000 words.

[Figure 2. Loeb's data. Two panels, Verbal Encoding (top) and No Verbal Encoding (bottom), each plotting memory performance against exposure duration (ms), with separate curves for low and high uncertainty.]

(2) Provide Confidence Intervals

The Figure-2 plot indicates that Loeb's obtained pattern of sample means confirms her predictions pretty well. The curves are generally linear, verbal encoding yields a higher slope than no verbal encoding, and low uncertainty yields a higher slope than high uncertainty.

However, this plot provides no indication of the sort of error variance that is typically included as part of an ANOVA. Figure 3 remedies this deficiency: It shows the same data along with 95% confidence intervals placed around the sample means (computed, in this within-subjects design, as described by Loftus & Masson, 1994).

[Figure 3. Loeb's data (plotted with 95% confidence intervals). Same panels and axes as Figure 2.]

Figure 3 illustrates my second suggestion, which is to put confidence intervals around all sample statistics that are important for making


conclusions. Figure 3 provides most of the crucial information about Loeb's data. The observed pattern of sample means provides the best estimate of the underlying pattern of population means upon which conclusions should be based. The provision of confidence intervals allows the reader to assess the degree of statistical power: the smaller the confidence intervals, the greater the power. Power, in contrast to its profoundly convoluted interpretation within the hypothesis-testing framework, can here be simply interpreted as an indication of how seriously the observed pattern of sample means should be taken as a reflection of the underlying pattern of population means.

One could further analyze these data by, for instance, computing the slope for each of the four verbal encoding x uncertainty conditions for each subject. These four slopes could then be plotted along with their confidence intervals. One could go still further by, for instance, computing mean slope differences along with their confidence intervals. Such procedures correspond to graphic illustrations of various kinds of interactions. Creative use of such procedures allows one to jettison NHST entirely.

[Figure 4. Two possible outcomes of Sanders's experiment, illustrating high and low power. Each panel plots agoraphobia rating (0 to 10) for the Standard and Sanders treatments; one panel is labeled "High Power: Small Standard Errors" and the other "Low Power: Large Standard Errors".]

Confidence intervals as a guide to "accepting the null hypothesis"

The provision of confidence intervals is particularly useful when one wants to accept

some null hypothesis “for all intents and pur-

poses.” Another fictional data set 11 involves a Sanders technique was therefore the preferred

clinician whom I will call “Christopher Sand- one.

ers.” In this account, Sanders developed a clini- What is implied by the phrase “not signifi-

cal technique to decrease agoraphobia that is cantly different” in Sanders's report? We can't

cheaper than the generally used “Standard tell, because Sanders provided no indication of

technique.” Sanders ran a simple experiment to statistical power, evaluation of which would be

compare his technique with the Standard tech- critical for justifying his accepting the null hy-

nique. In this experiment, forty agoraphobic pothesis of no treatment difference.

individuals were randomly assigned to be ad-

ministered either the Standard or the Sanders Sanders’ report is consistent with many

treatment. A year later, each individual’s ago- possibilities, two of which are presented in Fig-

raphobia was assessed on a 10-point scale. Sand- ure 4. Given the top panel outcome, where small

ers’ hope was that there would be no difference confidence intervals reflect high experimental

between the two treatments, in which case his power, Sanders’ acceptance of the null hypothe-

treatment, being cheaper, would presumably be sis would be reasonable: Here it is readily appar-

preferred to the Standard treatment. ent that the actual difference between the Sand-

ers and Standard population means must be

Sanders got his hoped-for result, and re- quite small. In contrast, given the bottom panel

ported it thusly: “The mean agoraphobia scores outcome, where large confidence intervals reflect

of the Standard and the Sanders groups were low statistical power, Sanders’ acceptance of the

5.05 and 5.03. The difference between the two null hypothesis would be unconvincing: the ac-

groups was not statistically significant, p > .05.” tual population mean difference between the two

Sanders went on to conclude that the cheaper treatments could vary widely.

There is a noteworthy epilogue to this story:

11 Also introduced by Loftus (1993b). When pressed, over drinks, at a professional con-

ference, Sanders further defended his acceptance


of the null hypothesis by pointing out that the actual t-value he obtained in his t-test was very small—"Practically zero!" he declared proudly. And so it was that Sanders committed the common error of equating smallness of the test statistic with permissibility of accepting the null hypothesis. Ironically, it can be easily shown that, given a particular mean difference, the smaller the t-value, the lower is power—and hence, the less appropriate it is to accept the null hypothesis.

Plus ça change, plus c’est la même chose.

The notion of using confidence intervals in this way—as a guide to accepting a null hypothesis "for all intents and purposes"—is not new. Thirty-four years ago the following suggestion appeared in the pages of the Psychological Review.

“In view of our long-term strategy of improving our theories, our statistical tactics can be greatly improved by shifting emphasis away from overall NHST in the direction of statistical estimation. For example [when testing a presumed null pre-treatment difference between two groups, an investigator] would do better to obtain a...confidence interval for the pre-treatment difference. If the interval is small and includes zero, [the investigator] is on fairly safe ground; but if the interval is large, even though it includes zero, it is immediately apparent that the situation is more serious. In both cases, H0 would have been accepted” (Grant, 1962).

Confidence intervals and statistical significance

A section on confidence intervals would be incomplete without a discussion of the question: "How do you make a decision about whether some variable has an effect by just looking at confidence intervals? 12"

The answer is: You can't 13. This, I would assert, is an advantage rather than a disadvantage of using plots plus confidence intervals rather than depending on NHST. I have argued above that a major difficulty with NHST is that it reduces data sets into a series of effect/no effect decisions and that this process, artificial as it is, leads the field astray in many ways. It imposes the illusion of certainty on a domain that is inherently ambiguous. Simply showing data, with confidence intervals, provides a superset of the quantitative information that is provided by a hypothesis-testing procedure, but it does not foster the false security embodied in a concrete decision that is based on a foundation of sand.

12 In numerous statistics classes and in other forums in which I have discussed this issue, a question that I can absolutely count on is: In a two-condition situation, what is the relation between error-bar overlap, and the reject/fail to reject decision?

13 At least not usually. In a two group design, nonoverlapping 95% confidence intervals imply that a two-tailed, α = .05 t-test would lead to a conclusion of "statistically significant." However, if the error bars do overlap, and/or if there are more than two conditions in the experiment, the relation between confidence interval pattern and statistical significance is not immediately apparent.

(3) Meta-Analysis and Effect Size

An increasingly popular technique is that of meta-analysis (e.g., Schmidt, 1992, 1996; Rosenthal, 1995). Meta-analysis entails considering a large number of independent studies of some phenomenon (for example, gender differences in spatial ability) and (essentially) averaging the observed effects across studies to arrive at an overall effect. This technique is particularly useful when two conditions are being compared, but trickier when the question under investigation involves more than two conditions (see Richardson, 1996, for a discussion of this issue).

To illustrate, consider the question of gender differences in spatial ability. Suppose that, in fact, there is some difference in spatial ability between the population of males and the population of females. The direction and magnitude of this difference might be investigated in many (say 25) separate studies. Each individual study produces some observed gender difference (presumably the mean male spatial score minus the mean female spatial score) that constitutes that experiment's estimate of the population mean difference. Meta-analysis would entail averaging all 25 reported differences, thereby arriving at a single estimated difference that is (roughly speaking) five times as accurate as any of the individual estimates.

One problem is: What actual number should be extracted from each of the individual studies? One could simply use the raw difference, whatever it might be. The problem with this is that different studies presumably use somewhat different measures of spatial ability, different ways of carrying out the experiment, different populations of subjects, and so on; thus the raw measures wouldn't be comparable across the studies.
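The "five times as accurate" figure quoted for 25 studies can be checked with a short sketch. It assumes, purely for illustration, that every study estimates the same population difference with the same standard error, that the studies are independent, and that the meta-analytic estimate is their unweighted mean. Under those assumptions the standard error of the mean of k estimates is the single-study standard error divided by the square root of k. The function names and the numerical values (a true difference of 0.3, a per-study standard error of 1.0) are hypothetical and are not taken from any real data set.

```python
import math
import random
import statistics


def pooled_standard_error(study_se: float, n_studies: int) -> float:
    """Standard error of the unweighted mean of n_studies independent
    estimates that each have standard error study_se (an assumption)."""
    return study_se / math.sqrt(n_studies)


def simulate_meta_estimates(true_diff, study_se, n_studies, n_replications, seed=0):
    """Simulate many hypothetical meta-analyses.

    Each replication draws n_studies normally distributed study estimates
    around true_diff and returns their unweighted mean.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(n_replications):
        estimates = [rng.gauss(true_diff, study_se) for _ in range(n_studies)]
        means.append(statistics.fmean(estimates))
    return means


if __name__ == "__main__":
    # Analytic result: 25 studies shrink the standard error by sqrt(25) = 5.
    print(pooled_standard_error(1.0, 25))  # 0.2

    # Simulation check: the spread of the averaged estimates should be
    # close to the analytic value (exact output depends on the seed).
    means = simulate_meta_estimates(0.3, 1.0, 25, 2000, seed=1)
    print(round(statistics.stdev(means), 3))
```

If the studies differ in sample size or precision, an unweighted mean is no longer the natural choice and the factor of five is only approximate, which is presumably why the text says "roughly speaking".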


The typical solution is to compute effect size which, in its simplest incarnation, is the mean difference observed in a given experiment divided by the obtained estimate from that experiment of the population standard deviation. It is these effect sizes that are then averaged to arrive at an overall estimate of the population effect size.

(4) Planned Comparisons (Contrasts)

My final suggestion, which I alluded to earlier, is to carry out planned comparisons on a data set. The use of planned comparisons is a technique that has been strongly advocated, and clearly described by many 14. In my opinion, however, it is a technique that is surprisingly underutilized.

Briefly, carrying out a planned comparison involves the following steps.

1. First, one generates a quantitative hypothesis (even a relatively simple one will do) about the underlying pattern of population means corresponding to the conditions in some experiment. Suppose, for example, that one were investigating the relation between problem-solving time and alcohol consumption. An experiment is designed in which subjects are assigned to one of five groups that differ in terms of amount of alcohol consumed—0, 1, 2, 3, or 4 oz—and problem-solving time is measured for each group. A hypothesis to be tested is that problem-solving time increases linearly with number of ounces of consumed alcohol.

2. Next, hypothesis in hand, one generates weights—one weight per condition—that correspond to the hypothesized pattern of means. One constraint is that the weights must sum to zero; thus, in this example, the weights representing the linearity hypothesis would be: -2, -1, 0, 1, 2.

3. The experiment is carried out and the means—in this example, the five mean problem-solving times—are computed.

4. Finally, one (essentially) computes an over-conditions correlation between the weights and the sample means. The magnitude of the Pearson r² that emerges reflects the goodness of the hypothesis. Various other more sophisticated procedures can also be carried out, the nature of which is beyond the scope of this article.

14 See, for example, Abelson (1995), Hays (1973), Loftus & Loftus (1988), and Rosenthal & Rosnow (1985).

Conclusions

I’ve tried to provide a variety of reasons why NHST, as typically utilized, is barren as a means of transiting from data to conclusions. I’ve tried to provide some examples of techniques—these are standard techniques, not bizarre or fancy ones—to replace standard NHST. These techniques are: First to plot the data rather than putting them in tabular form, second to put confidence intervals around important sample statistics, third to use meta-analysis, and fourth to use planned comparisons. These techniques are all designed to assist in the ultimate goal of understanding what it is that some data set is trying to tell us.

This article is entitled “Psychology will be a much better science when we change the way we analyze data.” I hope that my arguments make it clear why I believe this to be true. I believe that in order for any science to progress satisfactorily, its primary data-analysis techniques must provide genuine insight into whatever phenomenon its practitioners set out to investigate. The primary data analysis technique of Psychology—NHST—does not, as I’ve tried to demonstrate, meet this criterion.

Acquisition of insight is often difficult in the social sciences, which are cursed with large numbers of uncontrollable variables, and hence error variance that has to be dealt with somehow. I believe that, historically, social scientists have embraced NHST procedures because they provide the appearance of objectivity. These procedures may indeed be objective in the sense that they provide rules for making scientific decisions. But objectivity is not, alas, sufficient for insight. I believe that these rules provide only the illusion of insight—which is worse than providing no insight at all.

References

Abelson, R. (1995). Statistics as principled argument. Hillsdale, NJ: Erlbaum.

Bakan, D. (1966). The test of significance in psychological research. Psychological Bulletin, 66, 423-437.

Berger, J.O. & Berry, D.A. (1988). Statistical analysis and the illusion of objectivity. American Scientist, 76, 159-165.

Carver, R.P. (1978). The case against statistical significance testing. Harvard Educational Review, 48, 378-399.

Carver, R.P. (1993). The case against statistical significance testing, revisited. Journal of Experimental Education, 61, 287-292.


Cohen, J. (1990). Things I have learned (so far). American Psychologist, 45, 1304-1312.

Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997-1003.

Fisher, R.A. (1925). Statistical methods for research workers. Edinburgh: Oliver and Boyd.

Fisher, R.A. (1935). The design of experiments. Edinburgh: Oliver and Boyd.

Freedman, D.A., Rothenberg, T. & Sutch, R. (1983). On Energy Policy Models. Journal of Business and Economic Statistics, 1, 24-36.

Frick, R.W. (1995). Accepting the null hypothesis. Memory & Cognition, 23, 132-138.

Gigerenzer, G., Swijtink, Z., Porter, T., Daston, L., Beatty, J., & Kruger, L. (1989). The Empire of chance: How probability changed science and everyday life. Cambridge, England: Cambridge University Press.

Grant, D.A. (1962). Testing the null hypothesis and the strategy and tactics of investigating theoretical models. Psychological Review, 69, 54-61.

Greenwald, A.G. (1975). Consequences of prejudice against the null hypothesis. Psychological Bulletin, 83, 1-20.

Greenwald, A.G., Gonzalez, R., Harris, R.J., & Guthrie, D. (1996). Effect Sizes and p-values: What should be reported and what should be replicated? Psychophysiology, 33, 175-183.

Hays, W. (1973). Statistics for the Social Sciences (second edition). New York: Holt.

Hunter, J.E. & Schmidt, F. (1990). Methods of meta-analysis: Correcting error and bias in research findings. Newbury Park, CA: Sage.

Jones, L.V. (1955). Statistics and research design. Annual Review of Psychology, 6, 405-430. Stanford: Annual Reviews, Inc.

Loftus, G.R. (1985). Evaluating forgetting curves. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11, 396-405. (a)

Loftus, G.R. (1985). Consistency and confoundings: Reply to Slamecka. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11, 817-820. (b)

Loftus, G.R. (1991). On the tyranny of hypothesis testing in the social sciences. Contemporary Psychology, 36, 102-105.

Loftus, G.R. (1993). Editorial Comment. Memory & Cognition, 21, 1-3. (a)

Loftus, G.R. (1993). Visual data representation and hypothesis testing in the microcomputer age. Behavior Research Methods, Instrumentation, and Computers, 25, 250-256. (b)

Loftus, G.R. (1995). Data analysis as insight. Behavior Research Methods, Instrumentation, and Computers, 27, 57-59.

Loftus, G.R. & Bamber, D. (1990). Weak models, strong models, unidimensional models, and psychological time. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16, 916-926.

Loftus, G.R. & Masson, M.E.J. (1994). Using confidence intervals in within-subjects designs. Psychonomic Bulletin & Review, 1, 476-490.

Lykken, D.T. (1968). Statistical significance in psychological research. Psychological Bulletin, 70, 131-139.

Maltz, M.D. (1994). Deviating from the mean: The declining significance of significance. Journal of Research in Crime and Delinquency, 31, 434-463.

Meehl, P.E. (1967). Theory testing in psychology and physics: A methodological paradox. Philosophy of Science, 34, 103-115.

Meehl, P.E. (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology, 46, 806-834.

Meehl, P.E. (1990). Why summaries of research on psychological theories are often uninterpretable. Psychological Reports, Monograph Supplement 1-V66.

Nunnally, J. (1960). The place of statistics in psychology. Educational and Psychological Measurement, 20, 641-650.

Oakes, M.L. (1986). Statistical inference: A commentary for the social and behavioral sciences. New York: Wiley.

Richardson, J.T.E. (1996). Measures of effect size. Behavior Research Methods, Instrumentation, & Computers, 28, 12-22.

Rozeboom, W.W. (1960). The fallacy of the null-hypothesis significance test. Psychological Bulletin, 57, 416-428.

Rosenthal, R. (1995). Writing meta-analytic reviews. Psychological Bulletin, 118, 183-192.

Rosenthal, R. & Gaito, J. (1963). The interpretation of levels of significance by psychological researchers. Journal of Psychology, 55, 33-38.

Rosenthal, R. & Rosnow, R.L. (1985). Contrast analysis: Focused comparisons in the analysis of variance. Cambridge, England: Cambridge University Press.

Rosnow, R.L. & Rosenthal, R. (1989). Statistical procedures and the justification of knowledge in psychological science. American Psychologist, 44, 1276-1284.

Schmidt, Frank (1996). Statistical significance testing and cumulative knowledge in Psychology: Implications for training of researchers. Psychological Methods, 1, 115-129.

Schmidt, Frank & Hunter, J. (1997). Eight false objections to the discontinuation of significance testing in the analysis of research data. In L. Harlow & S. Mulaik (Eds.), What if there were no significance testing. Hillsdale, NJ: Erlbaum.

Serlin, R.C. (1993). Confidence intervals and the scientific method: A case for Holm on the range. Journal of Experimental Education, 61, 350-360.


Shea, C. (1996). Psychologists debate accuracy of "significance test". The Chronicle of Higher Education, August, 16.

Slamecka, N.J. & McElree, B. (1983). Normal forgetting of verbal lists as a function of their degree of learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 9, 384-397.

Tyler, R.W. (1935). What is statistical significance? Educational Research Bulletin, 10, 115-118, 142.

Winkler, R.L. (1993). Bayesian statistics: An overview. In G. Kerens, and C. Lewis (Eds.) A Handbook for Data Analysis in the Behavioral Sciences: Statistical Issues, Hillsdale NJ: Erlbaum.
