Covariance, Regression, and Correlation

In the previous chapter, the variance was introduced as a measure of the dispersion of a univariate distribution. Additional statistics are required to describe the joint distribution of two or more variables. The covariance provides a natural measure of the association between two variables, and it appears in the analysis of many problems in quantitative genetics, including the resemblance between relatives, the correlation between characters, and measures of selection.

As a prelude to the formal theory of covariance and regression, we first provide a brief review of the theory for the distribution of pairs of random variables. We then give a formal definition of the covariance and its properties. Next, we show how the covariance enters naturally into statistical methods for estimating the linear relationship between two variables (least-squares linear regression) and for estimating the goodness-of-fit of such linear trends (correlation). Finally, we apply the concept of covariance to several problems in quantitative-genetic theory.

More advanced topics associated with multivariate distributions involving three or more variables are taken up in Chapter 8.

JOINTLY DISTRIBUTED RANDOM VARIABLES

The probability of joint occurrence of a pair of random variables $(x, y)$ is specified by the joint probability density function, $p(x, y)$, where

$$P(y_1 \le y \le y_2,\; x_1 \le x \le x_2) = \int_{y_1}^{y_2} \int_{x_1}^{x_2} p(x, y)\, dx\, dy \tag{3.1}$$

We often ask questions of the form: What is the distribution of $y$ given that $x$ equals some specified value? For example, we might want to know the probability that parents whose height is 68 inches have offspring with height exceeding 70 inches. To answer such questions, we use $p(y \mid x)$, the conditional density of $y$ given $x$, where

$$P(y_1 \le y \le y_2 \mid x) = \int_{y_1}^{y_2} p(y \mid x)\, dy \tag{3.2}$$

Joint probability density functions, $p(x, y)$, and conditional density functions, $p(y \mid x)$, are connected by

$$p(x, y) = p(y \mid x)\, p(x) \tag{3.3a}$$

where $p(x) = \int_{-\infty}^{+\infty} p(x, y)\, dy$ is the marginal (univariate) density of $x$.

Two random variables, $x$ and $y$, are said to be independent if $p(x, y)$ can be factored into the product of a function of $x$ only and a function of $y$ only, i.e.,

$$p(x, y) = p(x)\, p(y) \tag{3.3b}$$

If $x$ and $y$ are independent, knowledge of $x$ gives no information about the value of $y$. From Equations 3.3a and 3.3b, if $p(x, y) = p(x)\, p(y)$, then $p(y \mid x) = p(y)$.
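The relationships in Equations 3.3a and 3.3b can be checked numerically on a discrete analogue, where sums replace the integrals. The sketch below is only an illustration: the joint table `joint` and the helper names are hypothetical and do not come from the text.

```python
from fractions import Fraction as F

# Hypothetical joint probability table p(x, y) for discrete x in {0, 1}
# and y in {0, 1, 2}. The numbers are illustrative, not from the text.
joint = {
    (0, 0): F(1, 10), (0, 1): F(2, 10), (0, 2): F(1, 10),
    (1, 0): F(3, 20), (1, 1): F(6, 20), (1, 2): F(3, 20),
}


def marginal_x(table, x):
    """p(x): sum the joint probabilities over every y."""
    return sum(p for (xi, _), p in table.items() if xi == x)


def marginal_y(table, y):
    """p(y): sum the joint probabilities over every x."""
    return sum(p for (_, yi), p in table.items() if yi == y)


def conditional_y_given_x(table, y, x):
    """p(y | x) = p(x, y) / p(x), a rearrangement of Equation 3.3a."""
    return table[(x, y)] / marginal_x(table, x)


def is_independent(table):
    """True when p(x, y) = p(x) p(y) in every cell (Equation 3.3b)."""
    return all(
        p == marginal_x(table, x) * marginal_y(table, y)
        for (x, y), p in table.items()
    )


print(is_independent(joint))
print(conditional_y_given_x(joint, 1, 0), marginal_y(joint, 1))
```

In this particular table every cell factors into the product of its marginals, so the conditional probability of $y$ given $x$ coincides with the marginal probability of $y$, as the text states for independent variables.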

Expectations of Jointly Distributed Variables

The expectation of a bivariate function, $f(x, y)$, is determined by the joint probability density

$$E[f(x, y)] = \int_{-\infty}^{+\infty} \int_{-\infty}^{+\infty} f(x, y)\, p(x, y)\, dx\, dy \tag{3.4}$$

Most of this chapter is focused on conditional expectation, i.e., the expectation of one variable, given information on another. For example, one may know the value of $x$ (perhaps parental height), and wish to compute the expected value of $y$ (offspring height) given $x$. In general, conditional expectations are computed by using the conditional density

$$E(y \mid x) = \int_{-\infty}^{+\infty} y\, p(y \mid x)\, dy \tag{3.5}$$

If $x$ and $y$ are independent, then $E(y \mid x) = E(y)$, the unconditional expectation. Otherwise, $E(y \mid x)$ is a function of the specified $x$ value. For height in humans (Figure 1.1), Galton (1889) observed a linear relationship,

$$E(y \mid x) = \alpha + \beta x \tag{3.6}$$

where $\alpha$ and $\beta$ are constants. Thus, the conditional expectation of height in offspring ($y$) is linearly related to the average height of the parents ($x$).
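A conditional expectation of the form in Equation 3.6 can be illustrated with a small discrete example, again replacing the integral of Equation 3.5 with a sum. The joint table below is hypothetical and was constructed so that $E(y \mid x) = 1 + x$; it is a sketch, not data from the text.

```python
from fractions import Fraction as F

# Hypothetical joint table: x takes the values 0, 1, 2 with equal
# probability, and given x, y is equally likely to be x or x + 2.
joint = {(x, y): F(1, 6) for x in (0, 1, 2) for y in (x, x + 2)}


def conditional_expectation(table, x):
    """E(y | x) = sum over y of y * p(y | x), the discrete form of Eq. 3.5."""
    p_x = sum(p for (xi, _), p in table.items() if xi == x)
    return sum(y * p / p_x for (xi, y), p in table.items() if xi == x)


for x in (0, 1, 2):
    # Each value equals 1 + x, i.e., alpha = 1 and beta = 1 in Equation 3.6.
    print(x, conditional_expectation(joint, x))
```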

COVARIANCE

Consider a set of paired variables, $(x, y)$. For each pair, subtract the population mean $\mu_x$ from the measure of $x$, and similarly subtract $\mu_y$ from $y$. Finally, for each pair of observations, multiply both of these new measures together to obtain $(x - \mu_x)(y - \mu_y)$. The covariance of $x$ and $y$ is defined to be the average of this quantity over all pairs of measures in the population,

$$\sigma(x, y) = E[(x - \mu_x)(y - \mu_y)] \tag{3.7}$$

Figure 3.1 Scatterplots for the variables $x$ and $y$ (panels A, B, and C; horizontal axis $x$, vertical axis $y$). Each point in the $x$-$y$ plane corresponds to a single pair of observations $(x, y)$. The line drawn through the scatterplot gives the expected value of $y$ given a specified value of $x$. (A) There is no linear tendency for large $x$ values to be associated with large (or small) $y$ values, so $\sigma(x, y) = 0$. (B) As $x$ increases, the conditional expectation of $y$ given $x$, $E(y \mid x)$, also increases, and $\sigma(x, y) > 0$. (C) As $x$ increases, the conditional expectation of $y$ given $x$ decreases, and $\sigma(x, y) < 0$.

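We often denote covariance by $\sigma_{x,y}$. Because $E(x) = \mu_x$ and $E(y) = \mu_y$, expansion of the product leads to further simplification,

$$\begin{aligned}
\sigma(x, y) &= E[(x - \mu_x)(y - \mu_y)] \\
&= E(xy - \mu_y x - \mu_x y + \mu_x \mu_y) \\
&= E(xy) - \mu_y E(x) - \mu_x E(y) + \mu_x \mu_y \\
&= E(xy) - \mu_x \mu_y
\end{aligned} \tag{3.8}$$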

In words, the covariance is the mean of the pairwise cross-product $xy$ minus the cross-product of the means. The sampling estimator of $\sigma(x, y)$ is similar in form to that for a variance,

$$\mathrm{Cov}(x, y) = \frac{n\,(\overline{xy} - \bar{x} \cdot \bar{y})}{n - 1} \tag{3.9}$$

where $n$ is the number of pairs of observations, and

$$\overline{xy} = \frac{1}{n} \sum_{i=1}^{n} x_i y_i$$
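As a minimal sketch of the estimator in Equation 3.9, the function below computes the sample covariance from the mean cross-product and the two sample means, and compares it with the equivalent sum-of-deviations form. The data and function names are hypothetical, chosen only for illustration.

```python
def sample_covariance(xs, ys):
    """Equation 3.9: n * (mean(xy) - mean(x) * mean(y)) / (n - 1)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    mean_xy = sum(x * y for x, y in zip(xs, ys)) / n
    return n * (mean_xy - mean_x * mean_y) / (n - 1)


def sample_covariance_deviations(xs, ys):
    """Equivalent form: sum of cross-products of deviations over n - 1."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


# Hypothetical paired observations.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 1.0, 4.0, 3.0, 5.0]
print(sample_covariance(xs, ys), sample_covariance_deviations(xs, ys))
```

The two forms agree up to floating-point rounding. The deviation form is usually the numerically safer choice when the means are large relative to the spread of the data.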

The covariance is a measure of association between $x$ and $y$ (Figure 3.1). It is positive if $y$ increases with increasing $x$, negative if $y$ decreases as $x$ increases, and zero if there is no linear tendency for $y$ to change with $x$. If $x$ and $y$ are independent, then $\sigma(x, y) = 0$, but the converse is not true: a covariance of zero does not necessarily imply independence. (We will return to this shortly; see Figure 3.3.)
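The point that zero covariance does not imply independence can be seen in a short sketch. In the hypothetical data below, $y = x^2$ is completely determined by $x$, yet the sample covariance is zero because the $x$ values are symmetric about their mean.

```python
def sample_covariance(xs, ys):
    """Sum of cross-products of deviations from the means, over n - 1."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


# Hypothetical data: x is symmetric about zero and y depends on x exactly.
xs = [-2, -1, 0, 1, 2]
ys = [x * x for x in xs]

cov_xy = sample_covariance(xs, ys)
print(cov_xy)  # zero, although y is a deterministic function of x
```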


Useful Identities for Variances and Covariances

Since $\sigma(x, y) = \sigma(y, x)$, covariances are symmetrical. Furthermore, from the definition of the variance and covariance,

$$\sigma(x, x) = \sigma^2(x) \tag{3.10a}$$

i.e., the covariance of a variable with itself is the variance of that variable. It also follows from Equation 3.8 that, for any constant $a$,

$$\sigma(a, x) = 0 \tag{3.10b}$$

$$\sigma(ax, y) = a\,\sigma(x, y) \tag{3.10c}$$

and if $b$ is also a constant

$$\sigma(ax, by) = ab\,\sigma(x, y) \tag{3.10d}$$

From Equations 3.10a and 3.10d,

$$\sigma^2(ax) = a^2 \sigma^2(x) \tag{3.10e}$$

i.e., the variance of the transformed variable $ax$ is $a^2$ times the variance of $x$. Likewise, for any constant $a$,

$$\sigma[(a + x), y] = \sigma(x, y) \tag{3.10f}$$

so that simply adding a constant to a variable does not change its covariance with another variable.

Finally, the covariance of two sums can be written as a sum of covariances,

$$\sigma[(x + y), (w + z)] = \sigma(x, w) + \sigma(y, w) + \sigma(x, z) + \sigma(y, z) \tag{3.10g}$$

Similarly, the variance of a sum can be expressed as the sum of all possible variances and covariances. From Equations 3.10a and 3.10g,

$$\sigma^2(x + y) = \sigma^2(x) + \sigma^2(y) + 2\,\sigma(x, y) \tag{3.11a}$$

More generally,

$$\sigma^2\!\left(\sum_{i}^{n} x_i\right) = \sum_{i}^{n} \sum_{j}^{n} \sigma(x_i, x_j) = \sum_{i}^{n} \sigma^2(x_i) + 2 \sum_{i<j}^{n} \sigma(x_i, x_j) \tag{3.11b}$$

Thus, the variance of a sum of uncorrelated variables is just the sum of the variances of each variable.
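The identities above are stated for population parameters, but the sample covariance is also linear in each argument, so they can be spot-checked on data. The sketch below uses exact rational arithmetic and hypothetical integer data; the helper names `cov` and `var` are illustrative only.

```python
from fractions import Fraction as F


def cov(xs, ys):
    """Sample covariance with an n - 1 denominator, in exact arithmetic."""
    n = len(xs)
    mean_x, mean_y = F(sum(xs), n), F(sum(ys), n)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


def var(xs):
    """Variance as the covariance of a variable with itself (Eq. 3.10a)."""
    return cov(xs, xs)


# Hypothetical integer data and constants, chosen only for illustration.
x = [1, 4, 2, 8, 5]
y = [3, 1, 4, 1, 5]
a, b = 3, -2

ax = [a * xi for xi in x]
by = [b * yi for yi in y]
x_plus_y = [xi + yi for xi, yi in zip(x, y)]

checks = {
    "3.10c": cov(ax, y) == a * cov(x, y),
    "3.10d": cov(ax, by) == a * b * cov(x, y),
    "3.10e": var(ax) == a**2 * var(x),
    "3.10f": cov([a + xi for xi in x], y) == cov(x, y),
    "3.11a": var(x_plus_y) == var(x) + var(y) + 2 * cov(x, y),
}
print(checks)
```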

We will make considerable use of the preceding relationships in the remainder of this chapter and in chapters to come. Methods for approximating variances and covariances of more complex functions are outlined in Appendix 1.


REGRESSION

Depending on the causal connections between two variables, $x$ and $y$, their true relationship may be linear or nonlinear. However, regardless of the true pattern of association, a linear model can always serve as a first approximation. In this case, the analysis is particularly simple,

$$y = \alpha + \beta x + e \tag{3.12a}$$

where $\alpha$ is the $y$-intercept, $\beta$ is the slope of the line (also known as the regression coefficient), and $e$ is the residual error. Letting

$$\hat{y} = \alpha + \beta x \tag{3.12b}$$

be the value of $y$ predicted by the model, then the residual error is the deviation between the observed and predicted $y$ value, i.e., $e = y - \hat{y}$. When information on $x$ is used to predict $y$, $x$ is referred to as the predictor or independent variable and $y$ as the response or dependent variable.

The objective of linear regression analysis is to estimate the model parameters, $\alpha$ and $\beta$, that give the "best fit" for the joint distribution of $x$ and $y$. The true parameters $\alpha$ and $\beta$ are only obtainable if the entire population is sampled. With an incomplete sample, $\alpha$ and $\beta$ are approximated by sample estimators, denoted as $a$ and $b$. Good approximations of $\alpha$ and $\beta$ are sometimes obtainable by visual inspection of the data, particularly in the physical sciences, where deviations from a simple relationship are due to errors of measurement rather than biological variability. However, in biology many factors are often beyond the investigator's control. The data in Figure 3.2 provide a good example. While there appears to be a weak positive relationship between maternal weight and offspring number in rats, it is difficult to say anything more precise. An objective definition of "best fit" is required.

Derivation of the Least-Squares Linear Regression

The mathematical method of least-squares linear regression provides one such best-fit solution. Without making any assumptions about the true joint distribution of $x$ and $y$, least-squares regression minimizes the average value of the squared (vertical) deviations of the observed $y$ from the values predicted by the regression line. That is, the least-squares solution yields the values of $a$ and $b$ that minimize the mean squared residual, $\overline{e^2}$. Other criteria could be used to define "best fit." For example, one might minimize the mean absolute deviations (or cubed deviations) of observed values from predicted values. However, as we will now see, least-squares regression has the unique and very useful property of maximizing the amount of variance in $y$ that can be explained by a linear model.

Figure 3.2 A bivariate plot of the relationship between maternal weight (horizontal axis: weight of mother, g) and number of offspring (vertical axis) for the sample of rats summarized in Table 2.2. Different-sized circles refer to different numbers of individuals in the bivariate classes (1–10, 11–20, 21–30, and 31–40).

Consider a sample of $n$ individuals, each of which has been measured for $x$ and $y$. Recalling the definition of a residual

$$e = y - \hat{y} = y - a - bx \tag{3.13a}$$

and then adding and subtracting the quantity $(\bar{y} + b\bar{x})$ on the right side, we obtain

$$e = (y - \bar{y}) - b\,(x - \bar{x}) - (a + b\bar{x} - \bar{y}) \tag{3.13b}$$

Squaring both sides leads to

$$\begin{aligned}
e^2 = {} & (y - \bar{y})^2 - 2b\,(y - \bar{y})(x - \bar{x}) + b^2 (x - \bar{x})^2 + (a + b\bar{x} - \bar{y})^2 \\
& - 2\,(y - \bar{y})(a + b\bar{x} - \bar{y}) + 2b\,(x - \bar{x})(a + b\bar{x} - \bar{y})
\end{aligned} \tag{3.13c}$$

Finally, we consider the average value of $e^2$ in the sample. The final two terms in Equation 3.13c drop out here because, by definition, the mean values of $(x - \bar{x})$ and $(y - \bar{y})$ are zero. The mean values of the first three terms, in turn, are directly related to the sample variances and covariance. Thus,

$$\overline{e^2} = \left(\frac{n - 1}{n}\right) \left[\, \mathrm{Var}(y) - 2b\,\mathrm{Cov}(x, y) + b^2\,\mathrm{Var}(x) \,\right] + (a + b\bar{x} - \bar{y})^2 \tag{3.13d}$$

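The values of $a$ and $b$ that minimize $\overline{e^2}$ are obtained by taking partial derivatives of this function and setting them equal to zero:

$$\frac{\partial\, \overline{e^2}}{\partial a} = 2\,(a + b\bar{x} - \bar{y}) = 0$$

$$\frac{\partial\, \overline{e^2}}{\partial b} = 2 \left[ \left(\frac{n - 1}{n}\right) \left[\, -\mathrm{Cov}(x, y) + b\,\mathrm{Var}(x) \,\right] + \bar{x}\,(a + b\bar{x} - \bar{y}) \right] = 0$$

The solutions to these two equations are

$$a = \bar{y} - b\,\bar{x} \tag{3.14a}$$

$$b = \frac{\mathrm{Cov}(x, y)}{\mathrm{Var}(x)} \tag{3.14b}$$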
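Equations 3.14a and 3.14b translate directly into a few lines of code. The following is a minimal sketch on hypothetical data; the function names are illustrative, and the final comparison simply confirms that nudging either estimate away from the solution does not lower the mean squared residual.

```python
def least_squares_fit(xs, ys):
    """Return the intercept a and slope b of Equations 3.14a and 3.14b."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)
    var_x = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    if var_x == 0:
        raise ValueError("x has zero variance, so the slope is undefined")
    b = cov_xy / var_x          # Equation 3.14b
    a = mean_y - b * mean_x     # Equation 3.14a
    return a, b


def mean_squared_residual(xs, ys, a, b):
    """Average of e^2 = (y - a - b x)^2 over the sample."""
    return sum((y - a - b * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data, chosen only for illustration.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 1.0, 4.0, 3.0, 5.0]

a, b = least_squares_fit(xs, ys)
best = mean_squared_residual(xs, ys, a, b)

# Perturbing either estimate should not reduce the mean squared residual.
perturbed = [
    mean_squared_residual(xs, ys, a + da, b + db)
    for da, db in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
]
print(a, b, best, all(best <= value for value in perturbed))
```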

Thus, the least-squares estimators for the intercept and slope of a linear regression are simple functions of the observed means, variances, and covariances. From the standpoint of quantitative genetics, this property is exceedingly useful, since such statistics are readily obtainable from phenotypic data.

Properties of Least-squares Regressions

Here we summarize some fundamental features and useful properties of the least-squares approach to linear regression analysis:

1. The regression line passes through the means of both $x$ and $y$. This relationship should be immediately apparent from Equation 3.14a, which implies $\bar{y} = a + b\bar{x}$.

2. The average value of the residual is zero. From Equation 3.13a, the mean residual is $\bar{e} = \bar{y} - a - b\bar{x}$, which is constrained to be zero by Equation 3.14a. Thus, the least-squares procedure results in a fit to the data such that the sums of (vertical) deviations above and below the regression line are exactly equal in magnitude.

3. For any set of paired data, the least-squares regression parameters, $a$ and $b$, define the straight line that maximizes the amount of variation in $y$ that can be explained by a linear regression on $x$. Since $\bar{e} = 0$, it follows that the variance of residual errors about the regression is simply $\overline{e^2}$. As noted above, this variance is the quantity minimized by the least-squares procedure.

4. The residual errors around the least-squares regression are uncorrelated with the predictor variable $x$. This statement follows since

$$\begin{aligned}
\mathrm{Cov}(x, e) &= \mathrm{Cov}[\,x, (y - a - bx)\,] = \mathrm{Cov}(x, y) - \mathrm{Cov}(x, a) - b\,\mathrm{Cov}(x, x) \\
&= \mathrm{Cov}(x, y) - 0 - b\,\mathrm{Var}(x) \\
&= \mathrm{Cov}(x, y) - \frac{\mathrm{Cov}(x, y)}{\mathrm{Var}(x)}\,\mathrm{Var}(x) = 0
\end{aligned}$$

Figure 3.3 A linear least-squares fit to an inherently nonlinear data set (left panel: $y$ against $x$; right panel: residual error $e$ against $x$). Although there is a systematic relationship between the residual error ($e$) and the predictor variable ($x$), the two are uncorrelated (show no net linear trend) when viewed over the entire range of $x$. The mean residual error ($\bar{e} = 0$) is denoted by the dashed line on the right graph.

Note, however, that $\mathrm{Cov}(x, e) = 0$ does not guarantee that $e$ and $x$ are independent. In Figure 3.3, for example, because of a nonlinear relationship between $y$ and $x$, the residual errors associated with extreme values of $x$ tend to be negative while those for intermediate values are positive. Thus, if the true regression is nonlinear, then $E(e \mid x) \ne 0$ for some $x$ values, and the predictive power of the linear model is compromised. Even if the true regression is linear, the variance of the residual errors may vary with $x$, in which case the regression is said to display heteroscedasticity (Figure 3.4). If the conditional variance of the residual errors given any specified $x$ value, $\sigma^2(e \mid x)$, is a constant (i.e., independent of the value of $x$), then the regression is said to be homoscedastic.

5. There is an important situation in which the true regression, the value of $E(y \mid x)$, is both linear and homoscedastic: when $x$ and $y$ are bivariate normally distributed. The requirements for such a distribution are that the univariate distributions of both $x$ and $y$ are normal and that the conditional distributions of $y$ given $x$, and $x$ given $y$, are also normal (Chapter 8). Since statistical testing is simplified enormously, it is generally desirable to work with normally distributed data. For situations in which the raw data are not so distributed, a variety of transformations exist that can render the data close to normality (Chapter 11).

6. It is clear from Equations 3.14a,b that the regression of $y$ on $x$ is different from the regression of $x$ on $y$ unless the means and variances of the two variables are equal. This distinction is made by denoting the regression coefficient by $b(y, x)$ or $b_{y,x}$ when $x$ is the predictor and $y$ the response variable.

Figure 3.4 The dispersion of residual errors around a regression (panels A and B; horizontal axis $x$, vertical axis $y$). (A) The regression is homoscedastic: the variance of residuals given $x$ is a constant. (B) The regression is heteroscedastic: the variance of residuals increases with $x$. In this case, higher $x$ values predict $y$ with less certainty.

For practical reasons, we have expressed properties 1 through 6 in terms of the estimators $a$, $b$, $\mathrm{Cov}(x, y)$, and $\mathrm{Var}(x)$. They also hold when the estimators are replaced by the true parameters $\alpha$, $\beta$, $\sigma(x, y)$, and $\sigma^2(x)$.
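Properties 1, 2, and 4 can be confirmed on any data set. The sketch below uses exact rational arithmetic so that the zeros come out exactly; the data and helper names are hypothetical, chosen only for illustration.

```python
from fractions import Fraction as F


def mean(values):
    """Arithmetic mean as an exact fraction."""
    return F(sum(values), len(values))


def cov(xs, ys):
    """Sample covariance with an n - 1 denominator."""
    mean_x, mean_y = mean(xs), mean(ys)
    total = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return total / (len(xs) - 1)


# Hypothetical data, chosen only for illustration.
x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]

b = cov(x, y) / cov(x, x)        # Equation 3.14b
a = mean(y) - b * mean(x)        # Equation 3.14a
residuals = [yi - a - b * xi for xi, yi in zip(x, y)]

passes_through_means = (a + b * mean(x) == mean(y))   # property 1
mean_residual = mean(residuals)                       # property 2
cov_x_residual = cov(x, residuals)                    # property 4
print(passes_through_means, mean_residual, cov_x_residual)
```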

Example 1. Suppose $\mathrm{Cov}(x, y) = 10$, $\mathrm{Var}(x) = 10$, $\mathrm{Var}(y) = 15$, and $\bar{x} = \bar{y} = 0$. Compute the least-squares regressions of $y$ on $x$, and of $x$ on $y$.

From Equation 3.14a, $a = 0$ for both regressions. However,

$$b(y, x) = \mathrm{Cov}(x, y)/\mathrm{Var}(x) = 10/10 = 1$$

while $b(x, y) = \mathrm{Cov}(x, y)/\mathrm{Var}(y) = 10/15 = 2/3$. Hence, $\hat{y} = x$ is the least-squares regression of $y$ on $x$, while $\hat{x} = (2/3)\,y$ is the regression of $x$ on $y$.
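The arithmetic of Example 1 can be reproduced from the summary statistics alone. In this sketch the function `regression_from_moments` is a hypothetical helper that applies Equations 3.14a and 3.14b, with the roles of predictor and response swapped for the second call.

```python
from fractions import Fraction as F


def regression_from_moments(cov_xy, var_predictor, mean_predictor, mean_response):
    """Intercept and slope from Equations 3.14a,b given summary statistics."""
    slope = F(cov_xy) / F(var_predictor)
    intercept = mean_response - slope * mean_predictor
    return intercept, slope


# Values from Example 1: Cov(x, y) = 10, Var(x) = 10, Var(y) = 15, means 0.
a_yx, b_yx = regression_from_moments(10, 10, 0, 0)   # regression of y on x
a_xy, b_xy = regression_from_moments(10, 15, 0, 0)   # regression of x on y
print(a_yx, b_yx, a_xy, b_xy)
```

The two slopes differ because each regression divides the same covariance by the variance of its own predictor.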

CORRELATION

For purposes of hypothesis testing, it is often desirable to use a dimensionless measure of association. The most frequently used measure in bivariate analysis is the correlation coefficient,

$$r(x, y) = \frac{\mathrm{Cov}(x, y)}{\sqrt{\mathrm{Var}(x)\,\mathrm{Var}(y)}} \tag{3.15a}$$


Note that $r(x, y)$ is symmetrical, i.e., $r(x, y) = r(y, x)$. Thus, where there is no ambiguity as to the variables being considered, we abbreviate $r(x, y)$ as $r$. The parametric correlation coefficient is denoted by $\rho(x, y)$ (or $\rho$) and equals $\sigma(x, y)/[\sigma(x)\,\sigma(y)]$. The least-squares regression coefficient is related to the correlation coefficient by

$$b(y, x) = r \sqrt{\frac{\mathrm{Var}(y)}{\mathrm{Var}(x)}} \tag{3.15b}$$

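An advantage of correlations over covariances is that the former are scale independent. This can be seen by noting that if $w$ and $c$ are constants of the same sign,

$$r(wx, cy) = \frac{\mathrm{Cov}(wx, cy)}{\sqrt{\mathrm{Var}(wx)\,\mathrm{Var}(cy)}} = \frac{wc\,\mathrm{Cov}(x, y)}{\sqrt{w^2\,\mathrm{Var}(x)\; c^2\,\mathrm{Var}(y)}} = r(x, y) \tag{3.16a}$$

(If $w$ and $c$ differ in sign, the square root in the denominator equals $|wc|$ times $\sqrt{\mathrm{Var}(x)\,\mathrm{Var}(y)}$, so $r$ changes sign but keeps its magnitude.) Thus scaling $x$ and/or $y$ by constants does not change the correlation coefficient, although the variances and covariances are affected. Since $r$ is dimensionless with limits of $\pm 1$, it gives a direct measure of the degree of association: if $|r|$ is close to one, $x$ and $y$ are very strongly associated in a linear fashion, while if $|r|$ is close to zero, they are not.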
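Scale independence is easy to confirm numerically. The sketch below, on hypothetical data, rescales both variables by positive constants and then flips the sign of one of them. Under Equation 3.15a the first operation should leave $r$ unchanged, and the second should reverse its sign without changing its magnitude.

```python
import math


def correlation(xs, ys):
    """Sample correlation coefficient r of Equation 3.15a."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)
    var_x = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for y in ys) / (n - 1)
    return cov_xy / math.sqrt(var_x * var_y)


# Hypothetical data, chosen only for illustration.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 1.0, 4.0, 3.0, 5.0]

r = correlation(xs, ys)
r_scaled = correlation([3.0 * x for x in xs], [0.5 * y for y in ys])
r_flipped = correlation([-3.0 * x for x in xs], [0.5 * y for y in ys])
print(r, r_scaled, r_flipped)
```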

The correlation coefficient has other useful properties. First, $r$ is a standardized regression coefficient (the regression coefficient resulting from rescaling $x$ and $y$ such that each has unit variance). Letting $x' = x/\sqrt{\mathrm{Var}(x)}$ and $y' = y/\sqrt{\mathrm{Var}(y)}$ gives $\mathrm{Var}(x') = \mathrm{Var}(y') = 1$, implying

$$b(y', x') = b(x', y') = \mathrm{Cov}(x', y') = \frac{\mathrm{Cov}(x, y)}{\sqrt{\mathrm{Var}(x)\,\mathrm{Var}(y)}} = r \tag{3.16b}$$

Thus, when variables are standardized, the regression coefficient is equal to the correlation coefficient regardless of whether $x'$ or $y'$ is chosen as the predictor variable.

Second, the squared correlation coefficient measures the proportion of the variance in $y$ that is explained by assuming that $E(y \mid x)$ is linear. The variance of the response variable $y$ has two components: $r^2\,\mathrm{Var}(y)$, the amount of variance accounted for by the linear model (the regression variance), and $(1 - r^2)\,\mathrm{Var}(y)$, the remaining variance not accountable by the regression (the residual variance). To obtain this result, we derive the variance of the residual deviation defined in Equation 3.13a,

$$\begin{aligned}
\mathrm{Var}(e) &= \mathrm{Var}(y - a - bx) = \mathrm{Var}(y - bx) \\
&= \mathrm{Var}(y) - 2b\,\mathrm{Cov}(x, y) + b^2\,\mathrm{Var}(x) \\
&= \mathrm{Var}(y) - \frac{2\,[\mathrm{Cov}(x, y)]^2}{\mathrm{Var}(x)} + \frac{[\mathrm{Cov}(x, y)]^2\,\mathrm{Var}(x)}{[\mathrm{Var}(x)]^2} \\
&= \left(1 - \frac{[\mathrm{Cov}(x, y)]^2}{\mathrm{Var}(x)\,\mathrm{Var}(y)}\right) \mathrm{Var}(y) = (1 - r^2)\,\mathrm{Var}(y)
\end{aligned} \tag{3.17}$$
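The partition of $\mathrm{Var}(y)$ into regression and residual components in Equation 3.17 can be checked on data. This is a sketch in exact rational arithmetic on hypothetical observations; the variable names are illustrative only.

```python
from fractions import Fraction as F


def mean(values):
    """Arithmetic mean as an exact fraction."""
    return F(sum(values), len(values))


def cov(xs, ys):
    """Sample covariance with an n - 1 denominator."""
    mean_x, mean_y = mean(xs), mean(ys)
    total = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return total / (len(xs) - 1)


# Hypothetical data, chosen only for illustration.
x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]

var_x, var_y, cov_xy = cov(x, x), cov(y, y), cov(x, y)
b = cov_xy / var_x                               # Equation 3.14b
a = mean(y) - b * mean(x)                        # Equation 3.14a
residuals = [yi - a - b * xi for xi, yi in zip(x, y)]

r_squared = cov_xy**2 / (var_x * var_y)          # square of Equation 3.15a
residual_variance = cov(residuals, residuals)    # Var(e)
regression_variance = r_squared * var_y          # r^2 Var(y)
print(r_squared, regression_variance, residual_variance)
```

For these data the two components sum exactly to $\mathrm{Var}(y)$, as Equation 3.17 requires.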


Example 2. Returning to Table 2.1, the preceding formulae can be used to characterize the relationship between maternal weight and offspring number in rats. Here we take offspring number as the response variable $y$ and maternal weight as the predictor variable $x$. The mean and variance for maternal weight were found to be $\bar{x} = 118.90$ and $\mathrm{Var}(x) = 623.06$ (Table 2.1). For offspring number, $\bar{y} = 5.49$ and $\mathrm{Var}(y) = 2.94$. In order to obtain an estimate of the covariance, we first require an estimate of $E(xy)$. Taking the $xy$ cross-product of all classes in Table 2.1 (using the midpoint of the interval for the value of $x$) and weighting them by their frequencies,

$$\overline{xy} = \frac{(1 \cdot 4 \cdot 55) + (3 \cdot 5 \cdot 55) + (1 \cdot 6 \cdot 55) + \cdots + (1 \cdot 10 \cdot 195)}{1003} = 660.14$$

The covariance estimate is then obtained using Equation 3.9,

$$\mathrm{Cov}(x, y) = \frac{1003}{1002}\,[\,660.14 - (118.90 \times 5.49)\,] = 7.39$$

From Equation 3.14b, the slope of the regression is found to be

$$b(y, x) = \frac{7.39}{623.06} = 0.01$$

Thus, the expected increase in number of offspring per gram increase in maternal weight is about 0.01. How predictable is this change? From Equation 3.15a, the correlation coefficient is estimated to be

$$r = \frac{7.39}{\sqrt{623.06 \times 2.94}} = 0.17$$

Squaring this value, $r^2 = 0.03$. Therefore, only about 3 percent of the variance in offspring number can be accounted for with a model that assumes a linear relationship with maternal weight.
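The arithmetic in Example 2 can be retraced from the summary statistics quoted there. The sketch below uses only the numbers given in the example (Table 2.1 itself is not reproduced here, so the mean cross-product 660.14 is taken as given); the variable names are illustrative.

```python
import math

# Summary statistics quoted in Example 2.
n = 1003
mean_x, var_x = 118.90, 623.06   # maternal weight (g)
mean_y, var_y = 5.49, 2.94       # number of offspring
mean_xy = 660.14                 # mean cross-product, taken as given

cov_xy = n * (mean_xy - mean_x * mean_y) / (n - 1)   # Equation 3.9
b_yx = cov_xy / var_x                                # Equation 3.14b
r = cov_xy / math.sqrt(var_x * var_y)                # Equation 3.15a

# Rounded to two decimals, these match the values reported in the example.
print(round(cov_xy, 2), round(b_yx, 2), round(r, 2), round(r * r, 2))
```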

You might also like

- The Scalar Algebra of Means, Covariances, and CorrelationsDocument21 pagesThe Scalar Algebra of Means, Covariances, and CorrelationsOmid ApNo ratings yet

- 03 ES Regression CorrelationDocument14 pages03 ES Regression CorrelationMuhammad AbdullahNo ratings yet

- Climate Change Detection Using StatisticsDocument12 pagesClimate Change Detection Using StatisticsAracely SandovalNo ratings yet

- Moore's Law and linear regression analysis in researchDocument10 pagesMoore's Law and linear regression analysis in researchq1w2e3r4t5y6u7i8oNo ratings yet

- Linear Regression Analysis for STARDEX: Key MethodsDocument6 pagesLinear Regression Analysis for STARDEX: Key MethodsSrinivasu UpparapalliNo ratings yet

- Simple Linear Regression and Correlation Analysis: Chapter FiveDocument5 pagesSimple Linear Regression and Correlation Analysis: Chapter FiveAmsalu WalelignNo ratings yet

- Regression and CorrelationDocument13 pagesRegression and CorrelationzNo ratings yet

- Regression Analysis ExplainedDocument28 pagesRegression Analysis ExplainedRobin GuptaNo ratings yet

- Correlation and Regression Analysis ExplainedDocument30 pagesCorrelation and Regression Analysis ExplainedgraCENo ratings yet

- Method Least SquaresDocument7 pagesMethod Least SquaresZahid SaleemNo ratings yet

- StatisDocument7 pagesStatisfahmimuhammad.2999No ratings yet

- CovarianceDocument5 pagesCovariancesoorajNo ratings yet

- Multicollinearity Among The Regressors Included in The Regression ModelDocument13 pagesMulticollinearity Among The Regressors Included in The Regression ModelNavyashree B MNo ratings yet

- Chapter 5Document16 pagesChapter 5EdwardNo ratings yet

- RegressionDocument6 pagesRegressionMansi ChughNo ratings yet

- 13 - Data Mining Within A Regression FrameworkDocument27 pages13 - Data Mining Within A Regression FrameworkEnzo GarabatosNo ratings yet

- Introduction To Statistics and Data Analysis - 11Document47 pagesIntroduction To Statistics and Data Analysis - 11DanielaPulidoLunaNo ratings yet

- Regression and CorrelationDocument14 pagesRegression and CorrelationAhmed AyadNo ratings yet

- Scatter Plot Linear CorrelationDocument4 pagesScatter Plot Linear Correlationledmabaya23No ratings yet

- Bivariate Regression: Multiple Regression (MR) Prediction With Continuous VariablesDocument5 pagesBivariate Regression: Multiple Regression (MR) Prediction With Continuous VariablesjayasankaraprasadNo ratings yet

- Question 4 (A) What Are The Stochastic Assumption of The Ordinary Least Squares? Assumption 1Document9 pagesQuestion 4 (A) What Are The Stochastic Assumption of The Ordinary Least Squares? Assumption 1yaqoob008No ratings yet

- 09 Inference For Regression Part1Document12 pages09 Inference For Regression Part1Rama DulceNo ratings yet

- Chap7 Random ProcessDocument21 pagesChap7 Random ProcessSeham RaheelNo ratings yet

- Statistics of Two Variables: FunctionsDocument15 pagesStatistics of Two Variables: FunctionsAlinour AmenaNo ratings yet

- VCV Week3Document4 pagesVCV Week3Diman Si KancilNo ratings yet

- Correlation & Regression-Moataza MahmoudDocument35 pagesCorrelation & Regression-Moataza MahmoudAnum SeherNo ratings yet

- TMA1111 Regression & Correlation AnalysisDocument13 pagesTMA1111 Regression & Correlation AnalysisMATHAVAN A L KRISHNANNo ratings yet

- Unit 3 Simple Correlation and Regression Analysis1Document16 pagesUnit 3 Simple Correlation and Regression Analysis1Rhea MirchandaniNo ratings yet

- Regression and Correlation AnalysisDocument5 pagesRegression and Correlation AnalysisSrinyanavel ஸ்ரீஞானவேல்No ratings yet

- Correlation TheoryDocument34 pagesCorrelation TheoryPrashant GamanagattiNo ratings yet

- Regression and CorrelationDocument4 pagesRegression and CorrelationClark ConstantinoNo ratings yet

- Random Variables: Corr (X, Y) Cov (X, Y) / Cov (X, Y) Is The Covariance (X, Y)Document15 pagesRandom Variables: Corr (X, Y) Cov (X, Y) / Cov (X, Y) Is The Covariance (X, Y)viktahjmNo ratings yet

- Regression and Correlation Analyses - 1Document59 pagesRegression and Correlation Analyses - 1MaythuzarkhinNo ratings yet

- Correlation Analysis-Students NotesMAR 2023Document24 pagesCorrelation Analysis-Students NotesMAR 2023halilmohamed830No ratings yet

- MAE 300 TextbookDocument95 pagesMAE 300 Textbookmgerges15No ratings yet

- REGRESSION INTRODUCTIONDocument32 pagesREGRESSION INTRODUCTIONRam100% (1)

- Second-Order Nonlinear Least Squares Estimation: Liqun WangDocument18 pagesSecond-Order Nonlinear Least Squares Estimation: Liqun WangJyoti GargNo ratings yet

- Mathematical Modeling and Data PresentationDocument7 pagesMathematical Modeling and Data PresentationAwindya CandrasmurtiNo ratings yet

- Regression and CorrelationDocument37 pagesRegression and Correlationmahnoorjamali853No ratings yet

- Random ProcessDocument21 pagesRandom ProcessgkmkkNo ratings yet

- Linear Regression Analysis: Module - IvDocument10 pagesLinear Regression Analysis: Module - IvupenderNo ratings yet

- Simple Linear Regression AnalysisDocument7 pagesSimple Linear Regression AnalysiscarixeNo ratings yet

- Unit 3 Covariance and CorrelationDocument7 pagesUnit 3 Covariance and CorrelationShreya YadavNo ratings yet

- BIOS 6040-01: Regression Analysis of Estriol Level and BirthweightDocument9 pagesBIOS 6040-01: Regression Analysis of Estriol Level and BirthweightAnonymous f6MGixKKhNo ratings yet

- Logistic RegressionDocument8 pagesLogistic RegressionBeatriz RodriguesNo ratings yet

- Hypothesis Testing in Classical RegressionDocument13 pagesHypothesis Testing in Classical Regressionkiiroi89No ratings yet

- Combining Error Ellipses: John E. DavisDocument17 pagesCombining Error Ellipses: John E. DavisAzem GanuNo ratings yet

- Linear Model Small PDFDocument15 pagesLinear Model Small PDFGerman Galdamez OvandoNo ratings yet

- Case studies in PK laboratory manual 2022/2023Document98 pagesCase studies in PK laboratory manual 2022/2023Rasha MohammadNo ratings yet

- Multivariate Normal DistributionDocument9 pagesMultivariate Normal DistributionccffffNo ratings yet

- DR PDFDocument17 pagesDR PDFvazzoleralex6884No ratings yet

- Chapter Three MultipleDocument15 pagesChapter Three MultipleabdihalimNo ratings yet

- Regression and Correlation Analysis ExplainedDocument21 pagesRegression and Correlation Analysis ExplainedXtremo ManiacNo ratings yet

- Assignment 3Document7 pagesAssignment 3Dileep KnNo ratings yet

- Capitulo7 GujaratiDocument46 pagesCapitulo7 GujaratiPietro Vicari de OliveiraNo ratings yet

- Encyclopaedia Britannica, 11th Edition, Volume 9, Slice 7 "Equation" to "Ethics"From EverandEncyclopaedia Britannica, 11th Edition, Volume 9, Slice 7 "Equation" to "Ethics"No ratings yet

- Abnormal Psychology, Exam I Study CardsDocument12 pagesAbnormal Psychology, Exam I Study CardsVanessa97% (32)

- CLASS XI - Experimental Psychology614Document8 pagesCLASS XI - Experimental Psychology614Tasha SharmaNo ratings yet

- Muhammad Yasar, 2017 - Pag 59 PDFDocument205 pagesMuhammad Yasar, 2017 - Pag 59 PDFdiegobellovNo ratings yet

- Evaluating and Institutionalising OD InterventionsDocument35 pagesEvaluating and Institutionalising OD InterventionsMohan NaviNo ratings yet

- Place Attachment and Place Identity in N PDFDocument10 pagesPlace Attachment and Place Identity in N PDFMia KisicNo ratings yet

- Company Analysis - Applied Valuation by Rajat JhinganDocument13 pagesCompany Analysis - Applied Valuation by Rajat Jhinganrajat_marsNo ratings yet

- Effects of Supply Chain Management Practices On Organizational Performance: A Case of Food Complex Industries in Asella TownDocument8 pagesEffects of Supply Chain Management Practices On Organizational Performance: A Case of Food Complex Industries in Asella TownInternational Journal of Business Marketing and ManagementNo ratings yet

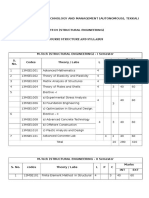

- M.tech (Structural Engineering)Document50 pagesM.tech (Structural Engineering)vinodkumar_72291056No ratings yet

- Corporate Governance - International Best Practices (A Case Study For Cement Industry in Pakistan)Document20 pagesCorporate Governance - International Best Practices (A Case Study For Cement Industry in Pakistan)fakihasadafziniaNo ratings yet

- Understanding EpidemiologyDocument40 pagesUnderstanding EpidemiologyCharityOchiengNo ratings yet

- Multivariate Statistical Process Control With Industrial Applications - Mason, Young - Asa-Siam, 2002 PDFDocument277 pagesMultivariate Statistical Process Control With Industrial Applications - Mason, Young - Asa-Siam, 2002 PDFMestddl100% (3)

- 1 - 290 - LR623 Prediction of Storm Rainfall in East AfricaDocument53 pages1 - 290 - LR623 Prediction of Storm Rainfall in East AfricaJack 'oj' Ojuka75% (4)

- Bomberos - AnthropometryDocument190 pagesBomberos - AnthropometryJonathan Alexis Echeverry MiraNo ratings yet

- Employees' Perception of Organizational Climate and Its Implications For Organizational Effectiveness in Amhara Regional Public Service OrgansDocument157 pagesEmployees' Perception of Organizational Climate and Its Implications For Organizational Effectiveness in Amhara Regional Public Service OrgansMesfin624No ratings yet

- Evaluating The Relationship Between Tooth Color and Enamel Thickness, Using Twin Flash Photography, Cross-Polarization Photography, and SpectrophotometerDocument11 pagesEvaluating The Relationship Between Tooth Color and Enamel Thickness, Using Twin Flash Photography, Cross-Polarization Photography, and Spectrophotometer陳奕禎No ratings yet

- Analysing Data Using MEGASTATDocument33 pagesAnalysing Data Using MEGASTATMary Monique Llacuna LaganNo ratings yet

- Nurses Awareness On Ethico-Legal Aspects of NursiDocument4 pagesNurses Awareness On Ethico-Legal Aspects of NursiHarold BarrentoNo ratings yet

- Hardened Concrete Methods of Test: Indian StandardDocument14 pagesHardened Concrete Methods of Test: Indian Standardjitendra86% (7)

- Strengths Finder Technical ReportDocument46 pagesStrengths Finder Technical ReportMichael TaylorNo ratings yet

- Simple Regression: Multiple-Choice QuestionsDocument36 pagesSimple Regression: Multiple-Choice QuestionsNameera AlamNo ratings yet

- BA PsychologyDocument18 pagesBA PsychologyNitin KordeNo ratings yet

- Calculating NP of mining wastes based on mineral compositionDocument7 pagesCalculating NP of mining wastes based on mineral compositionEstNo ratings yet

- Mba Tgouexam Dec2011 PapeDocument166 pagesMba Tgouexam Dec2011 Papejacklee1918No ratings yet

- The Verbal and Nonverbal Correlates of The Five Flirting StylesDocument28 pagesThe Verbal and Nonverbal Correlates of The Five Flirting StylesJosue PinillosNo ratings yet

- Supply ChainDocument6 pagesSupply ChainSara Rahimian86% (7)

- Tutorial Review Calculating Standard Deviations and Confidence Intervals With A Universally Applicable Spreadsheet TechniqueDocument5 pagesTutorial Review Calculating Standard Deviations and Confidence Intervals With A Universally Applicable Spreadsheet TechniqueDïö ValNo ratings yet

- Experimental PsychologyDocument8 pagesExperimental PsychologyTimothy JamesNo ratings yet

- M.E. Env EngDocument68 pagesM.E. Env EngPradeepNo ratings yet

- Yung Chapter 5 Nalang Tas Print NaDocument19 pagesYung Chapter 5 Nalang Tas Print NaMitchico CanonicatoNo ratings yet

- Pearson RDocument18 pagesPearson RJeffrey Dela CruzNo ratings yet