IPASJ International Journal of Information Technology (IIJIT)

Web Site: http://www.ipasj.org/IIJIT/IIJIT.htm

A Publisher for Research Motivation. Email: editoriijit@ipasj.org

Volume 7, Issue 5, May 2019 ISSN 2321-5976

Measuring effectiveness of TBRP method for different capacity Data Centers

Seema Chowhan¹, Ajay Kumar² and Shailaja Shirwaikar³

¹ Assistant Professor, Department of Computer Science, Baburaoji Gholap College, Pune, India

² Director, Jayawant Institute of Computer Application, Pune, India

³ Associate Professor, Savitribai Phule Pune University, Pune, India

Abstract

Cloud computing refers to applications and services that run on a distributed network using virtualized resources and are accessed through the web as a utility. Resource provisioning policies allow efficient sharing of the resources available in a data center, and these policies help evaluate and enhance cloud performance. Provisioning resources so that the quality of service (QoS) is maintained with the best resource utilization is a challenge. The template-based resource provisioning and utilization (TBRP) method overcomes the problem of over-provisioning and under-provisioning of resources by designing appropriate templates with QoS parameters that avoid service level agreement (SLA) violations. The results show that, in the TBRP method, variable templates need to be designed and used, depending on the capacity of the Data-Center, for better performance.

Keywords: Service Level Agreement, Quality of Service, Virtual Machines, Resource Provisioning.

1. INTRODUCTION

The implementation and growth of cloud computing represent a paradigm shift toward outsourcing IT and computational needs. Cloud computing is an abstraction based on the notion of pooling physical resources and presenting them as virtual resources through which information can be accessed anytime, anywhere. It is an evolution of a variety of technologies that are bundled together to provide IT infrastructure according to an organization's needs [13], [14]. Cloud providers offer software, platform and infrastructure as a service to cloud users. Cloud users and cloud service providers have to agree upon service level attributes, which are specified in the Service Level Agreement (SLA). The terms in the SLA are responsible for maintaining the Quality of Service (QoS), which can be monitored, measured and controlled [2]. The consumer of the service may provide one or more service level objectives depending on specific application requirements, but the cloud provider has to translate them into low-level technical attributes (uptime, downtime, CPU utilization, etc.) that can be monitored and controlled to achieve the higher-level objectives (availability, reliability, etc.) [12]. The main objective of a cloud service provider is to minimize cost while maintaining customer satisfaction.

Load balancing in resource provisioning involves distributing resources among different cloud users without increasing unused capacity, while still maintaining the required Quality of Service [1], [4], [8], [10], [17]. Resource allocation is a major issue in the cloud computing environment. Task scheduling, computational performance, reallocation, response time and cost efficiency are issues at various levels of resource allocation. Resource allocation is the process of providing services and storage space to the particular tasks submitted by users. One very important issue in resource allocation is capacity planning. Capacity planning seeks to match demand to available resources so as to accommodate a workload in which resources and tasks are diverse. Some tasks place a high demand on the CPU, while for others storage is the more important issue. One main focus is to reduce scheduling time and improve performance with respect to response time, makespan and completion time.

The objective of this research is to measure whether the Template-Based Resource Provisioning (TBRP) method [5], which provisions resources and makes optimum use of idle capacity without breaking the SLA as the workload varies, works better with fixed templates or with variable templates on Data-Centers of different capacity.

Objectives of Research

• To study whether different configurations of basic VMs give better resource utilization in a Data-Center.

• To find whether variable templates improve resource utilization in Data-Centers of different capacity.

To test these objectives, TBRP is applied to three Data-Centers of different capacity, with fixed or variable templates, for comparison and analysis. Simulation experiments are carried out to compare completion time, as one of the QoS parameters, under different workload scenarios for Data-Centers of different capacity with fixed and variable templates.

Volume 7, Issue 5, May 2019 Page 6


2. BACKGROUND AND RELATED WORK

a. Cloud computing and virtualization

A cloud is a huge bundle of hardware and software resources that is available to its customers on demand under a pay-per-use model [2]. The contractual agreement between the cloud provider and the customer is documented as a service level agreement (SLA), which specifies Quality of Service attributes such as reliability and availability, measured through performance parameters such as response time and throughput [1], [8], [10], [17]. Virtualization technology, which partitions physical resources into logical units, enables dynamic allocation of computing environments to cloud customers that can be scaled up and down on demand [9], [11]. A virtual machine combines physical resources such as CPU, RAM and bandwidth with software services such as an operating system and appropriate application software.

b. Data-Center cost model

Cloud service providers build massive Data-Centers to provide better performance and increased reliability to their customers. A large proportion of Data-Center cost is attributed to servers, followed by the infrastructure that ensures a consistent power supply and removes the heat generated by servers. A smaller proportion is spent on actual power consumption and network management [7]. Efficient resource utilization is essential to avoid wasting the energy used by idle nodes that are unaccounted for [19]. Resource provisioning schemes that ensure the quality of service specified in the SLA, avoid SLA violations and at the same time achieve efficient resource utilization are becoming popular [17].

c. Resource Provisioning and utilization

A resource allocation strategy should avoid resource contention, fragmentation, over-provisioning and under-provisioning [16]. Over-provisioning leads to inefficient resource utilization and increased cost, while under-provisioning results in SLA violations and penalty costs. The cloud provider needs to provision resources optimally to increase return on investment while maintaining a high level of customer satisfaction [15], [18].

3. TEMPLATE BASED RESOURCE PROVISIONING (TBRP) METHOD

The proposed TBRP method fulfills the following objectives:

1) Provisioning of resources according to the workload requirements of user applications.

2) Avoiding SLA violations according to the designed completion time SLA clause.

3) Monitoring of resource utilization and minimization of resource utilization cost.

In this method, the resource provisioning and utilization strategy is designed so that VMs are used minimally at low workload and proportionately at high workload, so that the SLA is met by maintaining Quality of Service (QoS) levels. In the TBRP method, the entire resource is sliced into small, medium and large VM types, and different combinations of these VM types are designed as templates, so that the resource provisioning strategy periodically maps idle capacity into a set of VM templates for cost-constrained and efficient resource utilization. The MIPS rating (processing capacity) of the small, medium and large VMs depends on the capacity of the Data-Center and can vary from one Data-Center to another; it is chosen to meet users' SLA requirements and helps in managing over-provisioning and under-provisioning. When a customer has a need, the Data-Center either provides available templates that satisfy the customer's requirements or creates custom-made templates to meet them. The method also uses the full capacity of the Data-Center to avoid over-provisioning and under-provisioning while offering attractive pricing to users. The cloud service provider can also maximize profit without affecting customer satisfaction.
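As a minimal sketch of the mapping step described above, the Python below picks, from a set of available templates, the one with the smallest total MIPS that still covers a requested capacity, which is one simple way to avoid over-provisioning. The selection rule, the function name `pick_template` and the example request sizes are illustrative assumptions, not the authors' algorithm; the template capacities are DC-1 templates from Table-9 computed with the DC-1 ratings of Table-2.

```python
from __future__ import annotations

from typing import Optional


def pick_template(required_mips: int, templates: dict[str, int]) -> Optional[str]:
    """Return the name of the smallest-capacity template that covers the request.

    Hypothetical selection rule for illustration: choosing the smallest adequate
    template limits over-provisioning. None signals that no available template
    fits, i.e. a custom-made template would have to be negotiated.
    """
    adequate = {name: mips for name, mips in templates.items() if mips >= required_mips}
    if not adequate:
        return None
    # Tie-break on the name so the choice is deterministic.
    return min(adequate, key=lambda name: (adequate[name], name))


# Total MIPS of a few DC-1 templates (SVM=100, MVM=150, LVM=200).
dc1_templates = {"T300": 300, "T040": 600, "T104": 900, "T124": 1200, "T424": 1500}

print(pick_template(700, dc1_templates))   # T104
print(pick_template(2000, dc1_templates))  # None
```

A request larger than every available template returns `None`, which corresponds to the custom-made template case mentioned in the text.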

4. EXPERIMENTAL SET UP AND PARAMETERS

Procedure

One of the essential prerequisites of this method is deciding the configurations of the small, medium and large VMs. As the choice depends on the capacity of the Data-Center, this paper carries out simulations for Data-Centers of different capacity and presents a comparative study of these Data-Centers under varying workload.

The essential steps of the TBRP method are:

1) Deciding the configurations of small, medium and large VMs, as tabulated in Table-2.

2) Setting up the configuration of the different cloud elements, such as Data-Center, hosts, VMs and cloudlets.

3) Designing templates with appropriate cost and SLA clauses for DC-1, DC-2 and DC-3.

4) Performing experiments for parameter extraction and comparison.


Configuration of different Data-Centers

The capacity of Data-Centers varies from one Data-Center to another. In the CloudSim environment, a datacenter consists of hosts (servers) with a fixed or varied configuration. In the TBRP method, the entire resource is divided into a set of VM types, namely small, medium and large, which are priced according to characteristics such as MIPS rating, RAM and storage [5], [6], [18]. For the experiment, three Data-Centers are considered, with different MIPS ratings for the small, medium and large VMs, as presented in Table-2.

Table-2: Data-Center with MIPS rating of VMs

| Data-Center | Small-VM (MIPS) | Medium-VM (MIPS) | Large-VM (MIPS) |
|---|---|---|---|
| DC-1 | 100 | 150 | 200 |
| DC-2 | 200 | 300 | 400 |
| DC-3 | 300 | 450 | 600 |
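Because the VM ratings differ, the same combination of VMs yields a different total capacity in each Data-Center. The short Python sketch below assumes that a template's capacity is simply the sum of the MIPS ratings of its VMs; the ratings are transcribed from Table-2, and the function name `template_mips` is illustrative.

```python
# MIPS ratings per VM type, transcribed from Table-2.
VM_MIPS = {
    "DC-1": {"small": 100, "medium": 150, "large": 200},
    "DC-2": {"small": 200, "medium": 300, "large": 400},
    "DC-3": {"small": 300, "medium": 450, "large": 600},
}


def template_mips(dc: str, small: int, medium: int, large: int) -> int:
    """Total MIPS of a template built from the given number of each VM type."""
    rating = VM_MIPS[dc]
    return small * rating["small"] + medium * rating["medium"] + large * rating["large"]


# One small, two medium and one large VM in each Data-Center.
for dc in VM_MIPS:
    print(dc, template_mips(dc, 1, 2, 1))
# DC-1 600
# DC-2 1200
# DC-3 1800
```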

The number of VMs of each type is fixed for each Data-Center. Overhead costs vary from one Data-Center to another, and the pricing strategy for VM templates needs to account for them.

The customer must choose among the available templates that satisfy their QoS requirements or negotiate a custom-made template. The next step is to design templates with appropriate SLA clauses by simulating different workload situations.

5. TEMPLATES AND SLA CLAUSE FOR DIFFERENT CAPACITY DATA-CENTER

a. Small scale Data-Center

Four workload situations are considered according to the MIPS capacity of DC-1, as presented in Table-3, where the workload is the product of the cloudlet length and the number of cloudlets. A template with MIPS ratings of 100 for Small-VMs, 150 for Medium-VMs and 200 for Large-VMs is considered for the experiments. The completion times on the different VMs are used to extract SLA parameters that will avoid SLA violations when the resource utilization strategy is used. The completion time SLA clause is presented in Table-4.

Table-3: Workload scenario for DC-1

Completion times are in seconds.

| User Id | No. of cloudlets | Cloudlet length | Single SVM | Single MVM | Single SVM+MVM | Single LVM |  | Template VM 450 MIPS |
|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 100 | 10 | 6.67 | 3.97 | 5 | 2.49 | 2.22 |
| 2 | 100 | 100 | 100 | 66.67 | 39.73 | 50 | 22.81 | 22.22 |
| 3 | 10 | 10000 | 1000 | 666.67 | 399.97 | 500 | 249.99 | 222.22 |
| 4 | 100 | 10000 | 10000 | 6666.67 | 3999.73 | 5000 | 2298.88 | 2222.22 |
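The single-VM and template columns of Table-3 are approximately reproduced by an idealized model in which completion time is the workload (cloudlet length times number of cloudlets, taken here as million instructions) divided by the aggregate MIPS available; for example, 1000 / 100 = 10 s on a small VM. The sketch below assumes that model, which ignores scheduling overhead, so it approximates rather than replicates the simulated values. The function name `ideal_completion_time` is illustrative.

```python
def ideal_completion_time(num_cloudlets: int, cloudlet_length_mi: int, mips: int) -> float:
    """Idealized completion time in seconds: total workload (MI) / aggregate MIPS.

    Assumes the workload is spread perfectly over the VMs and ignores
    scheduling overhead, so simulated times can differ slightly.
    """
    workload_mi = num_cloudlets * cloudlet_length_mi  # workload = length x number of cloudlets
    return workload_mi / mips


# Aggregate MIPS of the DC-1 configurations in Table-3.
configs = {"SVM": 100, "MVM": 150, "SVM+MVM": 250, "LVM": 200, "Template VM": 450}
for name, mips in configs.items():
    print(name, round(ideal_completion_time(10, 100, mips), 2))
# SVM 10.0, MVM 6.67, SVM+MVM 4.0, LVM 5.0, Template VM 2.22 (one per line)
```

The SVM+MVM entry (4.0 here, 3.97 in Table-3) shows the small gap between this idealized model and the simulation.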


Table-4: Completion time SLA clause for DC-1

| Workload type | Workload value | Completion time constant | CT value (sec) |
|---|---|---|---|
| Low workload | 1000 | minCTvalue | 10 |
| Medium workload | 10000 | medCTvalue | 40 |
| Large workload | 100000 | maxCTvalue | 250 |

b. Medium Scale Data-Center

Four workload situations are considered according to the MIPS capacity of DC-2, as presented in Table-5, where the workload is the product of the cloudlet length and the number of cloudlets. A template with MIPS ratings of 200 for Small-VMs, 300 for Medium-VMs and 400 for Large-VMs is considered for the experiments. The completion times on the different VMs are used to extract SLA parameters that will avoid SLA violations when a resource utilization strategy is used. The completion time SLA clause is presented in Table-6.

Table-5: Workload scenario for DC-2

Completion times are in seconds.

| User Id | No. of cloudlets | Cloudlet length | Single SVM | Single MVM | Single SVM+MVM | Single LVM |  | Template VM 900 MIPS |
|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 500 | 25 | 16.67 | 9.99 | 12.5 | 6.24 | 5.56 |
| 2 | 100 | 500 | 250 | 166.67 | 99.87 | 125 | 62.43 | 55.56 |
| 3 | 10 | 50000 | 2500 | 1666.67 | 999.99 | 1250 | 624.99 | 555.56 |
| 4 | 100 | 50000 | 25000 | 16666.67 | 9999.87 | 12500 | 6249.93 | 5555.56 |

Table-6: Completion time SLA clause for DC-2

| Workload type | Workload value | Completion time constant | CT value (sec) |
|---|---|---|---|
| Low workload | 5000 | minCTvalue | 25 |
| Medium workload | 50000 | medCTvalue | 100 |
| Large workload | 500000 | maxCTvalue | 625 |

c. Large Scale Data-Center

Four workload situations are considered according to the MIPS capacity of DC-3, as presented in Table-7, where the workload is the product of the cloudlet length and the number of cloudlets. A template with MIPS ratings of 300 for Small-VMs, 450 for Medium-VMs and 600 for Large-VMs is considered for the experiments. The completion times on the different VMs are used to extract SLA parameters that will avoid SLA violations when a resource utilization strategy is used. The completion time SLA clause is presented in Table-8.

Table-7: Workload scenarios for DC-3


Completion times (DC-3) are in seconds.

| User Id | No. of cloudlets | Cloudlet length | Single SVM | Single MVM | Single SVM+MVM | Single LVM |  | Template VM 1350 MIPS |
|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 1000 | 33.33 | 22.22 | 13.32 | 16.67 | 8.32 | 7.74 |
| 2 | 100 | 1000 | 333.33 | 222.22 | 133.24 | 166.67 | 83.32 | 74.07 |
| 3 | 10 | 100000 | 3333.33 | 2222.22 | 1333.32 | 1666.67 | 833.32 | 740.74 |
| 4 | 100 | 100000 | 33333.33 | 22222.22 | 13333.24 | 16666.67 | 8333.27 | 7407.41 |

Table-8: SLA clause constants with values

| Workload type | Workload value | Completion time constant | CT value (sec) |
|---|---|---|---|
| Low workload | 10000 | minCTvalue | 34 |
| Medium workload | 100000 | medCTvalue | 134 |
| Large workload | 1000000 | maxCTvalue | 834 |
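Across Tables 4, 6 and 8, every CT value can be reproduced by rounding up an idealized completion time (workload divided by aggregate MIPS): minCTvalue from the small VM at the low workload, medCTvalue from SVM+MVM at the medium workload, and maxCTvalue from an aggregate of twice the large-VM rating (which matches the unlabelled completion-time column between Single LVM and Template VM in Tables 3, 5 and 7) at the large workload. This mapping is our reading of the tables, not a rule stated by the authors; the sketch below only checks that reading. The names `ct_values` and `ceil_div` are illustrative.

```python
from __future__ import annotations

# (small, medium, large) MIPS ratings from Table-2.
VM_MIPS = {
    "DC-1": (100, 150, 200),
    "DC-2": (200, 300, 400),
    "DC-3": (300, 450, 600),
}
# (low, medium, large) workload values from Tables 4, 6 and 8.
WORKLOADS = {
    "DC-1": (1_000, 10_000, 100_000),
    "DC-2": (5_000, 50_000, 500_000),
    "DC-3": (10_000, 100_000, 1_000_000),
}


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b, avoiding floating-point rounding issues."""
    return -(-a // b)


def ct_values(dc: str) -> tuple[int, int, int]:
    """Assumed derivation of (minCTvalue, medCTvalue, maxCTvalue) for a Data-Center."""
    small, medium, large = VM_MIPS[dc]
    low_w, med_w, large_w = WORKLOADS[dc]
    min_ct = ceil_div(low_w, small)           # small VM, low workload
    med_ct = ceil_div(med_w, small + medium)  # SVM+MVM, medium workload
    max_ct = ceil_div(large_w, 2 * large)     # assumed 2 x LVM aggregate, large workload
    return min_ct, med_ct, max_ct


for dc in VM_MIPS:
    print(dc, ct_values(dc))
# DC-1 (10, 40, 250)
# DC-2 (25, 100, 625)
# DC-3 (34, 134, 834)
```

The rounding up matters only for DC-3, where 10000 / 300, 100000 / 750 and 1000000 / 1200 are not whole numbers.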

6. COMPARATIVE STUDY OF DIFFERENT CAPACITY DATA-CENTER

Different Data-Centers can have different combinations of templates according to their MIPS capacity. Some combinations for the three Data-Centers DC-1, DC-2 and DC-3 are presented in Table-9. The available template combinations are executed in the three Data-Centers to obtain completion time values for the comparative study. Table-10 presents completion time values of the 600 MIPS capacity templates in DC-1, DC-2 and DC-3.

Table-9: Available templates with different MIPS capacity for three Data-Centers

| MIPS capacity | VM templates, DC-1 (SVM=100, MVM=150, LVM=200) | VM templates, DC-2 (SVM=200, MVM=300, LVM=400) | VM templates, DC-3 (SVM=300, MVM=450, LVM=600) |
|---|---|---|---|
| 300 | T101, T030, T300 | T010 | T100 |
| 600 | T040, T600, T003, T320, T202, T121 | T101, T300, T020 | T200, T001 |
| 900 | T104, T212, T421, T023 | T030, T111, T310 | T300, T101, T020 |
| 1200 | T124, T242, T161, T043 | T121, T202, T040, T401, T320, T003 | T120, T002, T201 |
| 1500 | T424, T343, T144 | T212, T411, T013, T050 | T021, T102, T220, T500 |
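Nearly all names in Table-9 read as T followed by the number of small, medium and large VMs; for example, T121 in DC-1 is one 100-MIPS, two 150-MIPS and one 200-MIPS VM, 600 MIPS in total. Under that reading, which is our inference (two DC-1 entries, T030 at 300 MIPS and T212 at 900 MIPS, do not add up to their row's capacity), the candidate templates for a given capacity can be enumerated as in the sketch below. The function name `templates_for` is illustrative.

```python
from itertools import product


def templates_for(capacity: int, small: int, medium: int, large: int) -> list[str]:
    """List every template name T<s><m><l> whose VMs add up to exactly `capacity` MIPS.

    Assumes the naming reading described above; counts are limited to 0-9 so
    that each count fits in one digit of the name.
    """
    names = []
    for s, m, lg in product(range(10), repeat=3):
        if s * small + m * medium + lg * large == capacity:
            names.append(f"T{s}{m}{lg}")
    return names


print(templates_for(900, 300, 450, 600))  # DC-3: ['T020', 'T101', 'T300']
print(templates_for(600, 100, 150, 200))  # DC-1: also yields T401, which Table-9 does not list
```

Since the text presents only "some combinations", the enumeration can return more templates than Table-9 shows, as the DC-1 example does.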

Table-10: Template execution with 600 MIPS capacity for three Data-Centers

Completion time (sec) of the 600 MIPS capacity templates in DC-1, DC-2 and DC-3:

| Workload type | Cloudlets (number of tasks) | Cloudlet length (MI) | Workload (MI) | DC-1 T040 | DC-1 T600 | DC-1 T003 | DC-1 T320 | DC-1 T202 | DC-1 T121 | DC-2 T101 | DC-2 T300 | DC-2 T020 | DC-3 T200 | DC-3 T001 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Low | 2 | 100 | 200 | 0.66 | 1 | 0.5 | 0.83 | 1 | 2 | 1.0 | 0.5 | 0.33 | 0.33 | 0.33 |
| Low | 4 | 100 | 400 | 0.66 | 1 | 0.75 | 1.5 | 2 | 3.99 | 2.0 | 0.75 | 0.66 | 0.66 | 0.66 |
| Low | 6 | 100 | 600 | 1 | 1 | 1 | 2 | 2.98 | 5.96 | 2.98 | 1 | 0.99 | 0.99 | 0.99 |
| Low | 8 | 100 | 800 | 1.33 | 1.5 | 1.24 | 2.74 | 3.99 | 7.96 | 3.98 | 1.24 | 1.33 | 1.33 | 1.33 |
| Low | 10 | 100 | 1000 | 1.99 | 2 | 1.74 | 3.38 | 5.17 | 10 | 5.0 | 1.74 | 1.66 | 1.66 | 1.66 |
| Medium | 2 | 1000 | 2000 | 6.66 | 10 | 5.0 | 8.33 | 10 | 8.33 | 3.75 | 5.0 | 3.33 | 3.33 | 3.33 |
| Medium | 4 | 1000 | 4000 | 6.67 | 10 | 7.5 | 9.16 | 20 | 10.83 | 7.5 | 7.5 | 6.66 | 6.66 | 6.66 |
| Medium | 6 | 1000 | 6000 | 10 | 10 | 10 | 10.55 | 29.97 | 16.1 | 9.99 | 10.0 | 9.99 | 9.99 | 9.99 |
| Medium | 8 | 1000 | 8000 | 13.33 | 15 | 13.74 | 14.57 | 39.99 | 22.07 | 13.43 | 13.74 | 13.33 | 13.33 | 13.33 |
| Medium | 10 | 1000 | 10000 | 16.66 | 18 | 16.99 | 19.6 | 49.99 | 28.32 | 16.99 | 16.99 | 16.66 | 16.66 | 16.66 |
| Large | 2 | 10000 | 20000 | 66.66 | 100 | 50 | 83.33 | 50 | 58.33 | 37.5 | 50.0 | 33.33 | 33.33 | 33.33 |
| Large | 4 | 10000 | 40000 | 66.66 | 100 | 75 | 91.66 | 75 | 108.32 | 75.0 | 75.0 | 66.66 | 66.66 | 66.66 |
| Large | 6 | 10000 | 60000 | 111.11 | 100 | 100 | 105.55 | 100 | 116.66 | 100.0 | 100.0 | 99.99 | 99.99 | 99.99 |
| Large | 8 | 10000 | 80000 | 133.33 | 150 | 137.49 | 141.66 | 150 | 154.16 | 134.37 | 137.49 | 99.99 | 99.99 | 99.99 |
| Large | 10 | 10000 | 100000 | 172.33 | 199.99 | 169.99 | 173.32 | 169.99 | 176.65 | 169.99 | 169.99 | 166.66 | 166.66 | 166.66 |

The completion time ranges of the SLA clauses for the VM templates defined above are presented in Table-11; they are extracted from Table-10 for the different workload scenarios.

Table-11: Completion time range of SLA clauses for VM templates in DC-1, DC-2 and DC-3

Each clause reads (0 ≤ W < L: Completion Time < a) AND (L ≤ W < M: Completion Time < b) AND (M ≤ W < H: Completion Time < c), where W is the workload. The limits a, b and c (sec) for each template are:

| Template | 0 ≤ W < L | L ≤ W < M | M ≤ W < H |
|---|---|---|---|
| T040 | Completion Time < 2 | Completion Time < 17 | Completion Time < 173 |
| T600 | Completion Time < 2 | Completion Time < 18 | Completion Time < 200 |
| T003 | Completion Time < 2 | Completion Time < 17 | Completion Time < 170 |
| T320 | Completion Time < 4 | Completion Time < 20 | Completion Time < 175 |
| T202 | Completion Time < 6 | Completion Time < 50 | Completion Time < 170 |
| T121 | Completion Time < 10 | Completion Time < 29 | Completion Time < 177 |
| T101 | Completion Time < 5 | Completion Time < 17 | Completion Time < 170 |
| T300 | Completion Time < 2 | Completion Time < 17 | Completion Time < 170 |
| T020 | Completion Time < 2 | Completion Time < 17 | Completion Time < 167 |
| T200 | Completion Time < 2 | Completion Time < 17 | Completion Time < 167 |
| T001 | Completion Time < 2 | Completion Time < 17 | Completion Time < 167 |
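An SLA clause of the form used in Table-11 can be checked mechanically. In the sketch below the boundaries L, M and H are passed in as parameters because the paper does not give their numeric values; the bounds used in the example (2000, 20000 and 200000) are hypothetical, chosen only so that each workload band of Table-10 falls into one range. The function name `meets_sla` is also illustrative; the T040 limits come from Table-11 and the completion times 1.99 and 172.33 from Table-10.

```python
from __future__ import annotations


def meets_sla(workload: float, completion_time: float,
              bounds: tuple[float, float, float],
              limits: tuple[float, float, float]) -> bool:
    """Check a Table-11 style clause for one observed run.

    bounds = (L, M, H): workload boundaries of the three ranges.
    limits = completion-time limits (sec) that apply in each range.
    Workloads outside [0, H) are not covered by the clause and raise ValueError.
    """
    low, med, high = bounds
    if not 0 <= workload < high:
        raise ValueError("workload outside the ranges covered by the clause")
    if workload < low:
        limit = limits[0]
    elif workload < med:
        limit = limits[1]
    else:
        limit = limits[2]
    return completion_time < limit


BOUNDS = (2_000, 20_000, 200_000)  # hypothetical L, M, H
T040 = (2, 17, 173)                # limits for T040 from Table-11

print(meets_sla(1_000, 1.99, BOUNDS, T040))      # True
print(meets_sla(100_000, 172.33, BOUNDS, T040))  # True
print(meets_sla(10_000, 18.0, BOUNDS, T040))     # False (18.0 is a made-up time)
```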

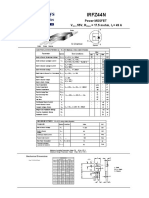

Figure-1: Template completion time in three Data-Center with 600 MIPs capacity for all workload

Figure-2: Template completion time at DC-1, DC-2 and DC-3 for low workload


Observation:

Figure-1 presents template execution with 600 MIPS capacity in the three Data-Centers for low, medium and large workloads. It is observed from Figure-2 that, for low workload, the templates DC3T001, DC2T020 and DC1T040 performed uniformly with good results, whereas DC1T121 performs poorly compared with all other templates. Similar results are observed for medium and large workloads. Table-12 presents completion time values of the 900 MIPS capacity templates in DC-2 and DC-3.

Table-12: Template completion time for DC-2 and DC-3 with 900 MIPs capacity

Figure-3: Template completion time at DC-2 and DC-3 for all workload


Figure-4: Template completion time at DC-2 and DC-3 for low workload

Figure-5: Template completion time at DC-2 and DC-3 for medium workload

Figure-6: Template completion time at DC-2 and DC-3 for large workload


Observations: Figure-3 presents template execution with 900 MIPS capacity in two Data-Centers for low, medium and large workloads. For low workload, the performance of templates DC3T020 and DC3T300 is better, templates DC2T310 and DC2T030 perform well, whereas template DC2T111 performs poorly, as presented in Figure-4. It is observed from Figure-5 that, for medium workload, templates DC3T020, DC3T101, DC3T300 and DC2T310 perform uniformly, whereas template DC2T111 performs poorly. For large workload, all templates perform uniformly, with the best result for template DC3T020, as presented in Figure-6.

Discussion: In these experiments, even though the capacities of DC-1, DC-2 and DC-3 differ, a fixed configuration of the basic VMs in the VM templates results in the same performance with poor resource utilization. Variability in the configuration of the basic VMs improves performance as well as resource utilization.

7. CONCLUSION

The template-based resource provisioning and utilization method and procedure overcome the problem of over-provisioning and under-provisioning of resources at the Data-Center without affecting QoS, while meeting the SLA. The capacity of the Data-Center plays an important role in designing the templates. If a Data-Center grows from low to high capacity, the same TBRP method can be applied. The results show that if the configuration of the basic VMs in templates is fixed across Data-Centers of different capacity, there is no change in performance but resource utilization is poor, whereas variability in the configuration of the basic VMs improves both performance and resource utilization. In the TBRP method, therefore, variable templates need to be designed for better performance and utilization, depending on the capacity of the Data-Center.

REFERENCES

[1] Bianco, P., Lewis, G. A., & Merson, P. (2008). Service level agreements in service-oriented architecture environments (No. CMU/SEI-2008-TN-021). Carnegie-Mellon Univ Pittsburgh Pa Software Engineering Inst.

[2] Buyya, R., Abramson, D., & Giddy, J. (2000, June). An Economy Driven Resource Management Architecture for Global Computational Power Grids. In PDPTA (pp. 26-29).

[3] Buyya, R., Yeo, C. S., Venugopal, S., Broberg, J., & Brandic, I. (2009). Cloud computing and emerging IT platforms: Vision, hype, and reality for delivering computing as the 5th utility. Future Generation Computer Systems, 25(6), 599-616.

[4] Byun, E. K., Kee, Y. S., Kim, J. S., & Maeng, S. (2011). Cost optimized provisioning of elastic resources for application workflows. Future Generation Computer Systems, 27(8), 1011-1026.

[5] Chowhan, S. S., Shirwaikar, S., & Kumar, A. (2019). Template-Based Efficient Resource Provisioning and Utilization in Cloud Data-Center. International Journal of Computer Sciences and Engineering, 7(1), 463-477.

[6] Garg, S. K., Gopalaiyengar, S. K., & Buyya, R. (2011, October). SLA-based resource provisioning for heterogeneous workloads in a virtualized cloud datacenter. In International Conference on Algorithms and Architectures for Parallel Processing (pp. 371-384). Springer, Berlin, Heidelberg.

[7] Greenberg, A., Hamilton, J., Maltz, D. A., & Patel, P. (2008). The cost of a cloud: research problems in data center networks. ACM SIGCOMM Computer Communication Review, 39(1), 68-73.

[8] John, M., Gurpreet, S., Steven, W., Venticinque, S., Massimiliano, R., David, H., & Ryan, K. (2012). Practical Guide to Cloud Service Level Agreements.

[9] Kremer, J. (2010). Cloud Computing and Virtualization. White paper on virtualization.

[10] Liu, F., Tong, J., Mao, J., Bohn, R., Messina, J., Badger, L., & Leaf, D. (2011). NIST cloud computing reference architecture. NIST Special Publication, 500(2011), 1-28. Malhotra, L., Agarwal, D., & Jaiswal, A. (2014). Virtualization in cloud computing. J Inform Tech Softw Eng, 4(2), 136.

[11] Nurmi, D., Wolski, R., Grzegorczyk, C., Obertelli, G., Soman, S., Youseff, L., & Zagorodnov, D. (2009, May). The eucalyptus open-source cloud-computing system. In Proceedings of the 2009 9th IEEE/ACM International Symposium on Cluster Computing and the Grid (pp. 124-131). IEEE Computer Society.

[12] Radojević, B., & Žagar, M. (2011, May). Analysis of issues with load balancing algorithms in hosted (cloud) environments. In 2011 Proceedings of the 34th International Convention MIPRO (pp. 416-420). IEEE.

[13] Shawish, A., & Salama, M. (2014). Cloud computing: paradigms and technologies. In Inter-cooperative collective intelligence: Techniques and applications (pp. 39-67). Springer Berlin Heidelberg.

[14] Sosinsky, B. (2010). Cloud computing bible (Vol. 762). John Wiley & Sons.


[15] Tsakalozos, K., Kllapi, H., Sitaridi, E., Roussopoulos, M., Paparas, D., & Delis, A. (2011, April). Flexible use of cloud resources through profit maximization and price discrimination. In 2011 IEEE 27th International Conference on Data Engineering (pp. 75-86). IEEE.

[16] Vinothina, V., Sridaran, R., & Ganapathi, P. (2012). A survey on resource allocation strategies in cloud computing. International Journal of Advanced Computer Science and Applications, 3(6), 97-104.

[17] Wu, L., & Buyya, R. (2012). Service level agreement (SLA) in utility computing systems. IGI Global, 15.

[18] Wu, L., Garg, S. K., & Buyya, R. (2011, May). SLA-based resource allocation for software as a service provider (SaaS) in cloud computing environments. In Proceedings of the 2011 11th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (pp. 195-204). IEEE Computer Society.

[19] Zhang, X., Wu, T., Chen, M., Wei, T., Zhou, J., Hu, S., & Buyya, R. (2019). Energy-aware virtual machine allocation for cloud with resource reservation. Journal of Systems and Software, 147, 147-161.

AUTHOR

Ms. Seema Chowhan is working as a faculty member and head of the Department of Computer Science at Baburaoji Gholap College, Pune, India, affiliated to Savitribai Phule Pune University, Pune. She has 18+ years of experience teaching UG and PG courses. She has completed an M.Phil. (CS). Her research interests include Cloud Computing and Networking.

Dr. Ajay Kumar's experience covers more than 26 years of teaching and 6 years of industrial experience as IT Technical Director and Senior Software Project Manager. He has an outstanding academic career, having completed a B.Sc. App. Sc. (Electrical) in 1988, an M.Sc. App. Sc. (Computer Science-Engineering and Technology) in 1992 and a PhD in 1995. He is presently working as Director at JSPM's Jayawant Technical Campus, Pune (affiliated to Pune University). His research areas are Computer Networks, Wireless and Mobile Computing, Cloud Computing, and Information and Network Security. He has 74 publications in national and international journals and conferences, and has worked as an expert appointed by C-DAC to assess the patentability of patent applications in the ICT area. He has completed six commercial projects for various companies and institutions. He holds a variety of important positions, such as Examiner, Member of the Board of Studies for Computer and IT, and Expert at UGC.

Dr. Shailaja Shirwaikar has a Ph.D. in Mathematics from Mumbai University, India, and has worked as Associate Professor at the Department of Computer Science, Nowrosjee Wadia College, affiliated to Savitribai Phule Pune University, Pune, for the last 27 years. Her research interests include Soft Computing, Big Data Analytics, Software Engineering and Cloud Computing.


- The Impact of Effective Communication To Enhance Management SkillsDocument6 pagesThe Impact of Effective Communication To Enhance Management SkillsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Predicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsDocument10 pagesPredicting The Effect of Fineparticulate Matter (PM2.5) On Anecosystemincludingclimate, Plants and Human Health Using MachinelearningmethodsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Staycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityDocument10 pagesStaycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Performance of Short Transmission Line Using Mathematical MethodDocument8 pagesPerformance of Short Transmission Line Using Mathematical MethodInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Design and Detection of Fruits and Vegetable Spoiled Detetction SystemDocument8 pagesDesign and Detection of Fruits and Vegetable Spoiled Detetction SystemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)Document10 pagesA Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Deep Learning Based Assistant For The Visually ImpairedDocument11 pagesA Deep Learning Based Assistant For The Visually ImpairedInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Impact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryDocument8 pagesImpact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Anchoring of Inflation Expectations and Monetary Policy Transparency in IndiaDocument9 pagesAnchoring of Inflation Expectations and Monetary Policy Transparency in IndiaInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Challenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19Document10 pagesChallenges Faced by Speciality Restaurants in Pune City To Retain Employees During and Post COVID-19International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Advanced Load Flow Study and Stability Analysis of A Real Time SystemDocument8 pagesAdvanced Load Flow Study and Stability Analysis of A Real Time SystemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5784)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Bootload DesignDocument13 pagesBootload Designron_cppNo ratings yet

- HP 245 G6 Notebook PC: Maintenance and Service GuideDocument106 pagesHP 245 G6 Notebook PC: Maintenance and Service GuideStevenson QuinteroNo ratings yet

- Coding BreakdownDocument2 pagesCoding BreakdownAlex SouzaNo ratings yet

- 9619 Sanyo LCD-32XH4 Chassis UH2-L Manual de ServicioDocument49 pages9619 Sanyo LCD-32XH4 Chassis UH2-L Manual de Serviciohectormv22100% (1)

- Hq2000 Service Manual 408Document136 pagesHq2000 Service Manual 408UNITY LEARN100% (1)

- CPU-Z TXT Report AnalysisDocument25 pagesCPU-Z TXT Report AnalysisPrasun BiswasNo ratings yet

- Sensors 21 05524Document22 pagesSensors 21 05524ccnrrynNo ratings yet

- FM Demodulator Circuit ExperimentDocument8 pagesFM Demodulator Circuit ExperimentBruno CastroNo ratings yet

- The New Software EngineeringDocument830 pagesThe New Software EngineeringApocalipsis2011175No ratings yet

- Introduction to Open Financial Services (OFS) MessagesDocument23 pagesIntroduction to Open Financial Services (OFS) Messagesrs reddyNo ratings yet

- Bonfiglioli ACU R00 0 e PDFDocument88 pagesBonfiglioli ACU R00 0 e PDFThiago MouttinhoNo ratings yet

- Qwilt - Edge Cloud Vision and Motivation - May 2021v2Document30 pagesQwilt - Edge Cloud Vision and Motivation - May 2021v2Ary Setiyono100% (1)

- A1 Group1 NotesDocument46 pagesA1 Group1 Notesislam2059No ratings yet

- Samsung HT-C453 Schematic DiagramDocument12 pagesSamsung HT-C453 Schematic DiagramJosip HrdanNo ratings yet

- Warner AZ400 SlidesDocument93 pagesWarner AZ400 SlidesYegnasivasaiNo ratings yet

- 174CEV20040 - MB+ User GuideDocument152 pages174CEV20040 - MB+ User GuideChristopherNo ratings yet

- Vmware Vsphere 5 Troubleshooting Lab Guide and SlidesDocument14 pagesVmware Vsphere 5 Troubleshooting Lab Guide and SlidesAmit SharmaNo ratings yet

- Philips 55pus7809-12 Chassis Qm14.3e La SM PDFDocument98 pagesPhilips 55pus7809-12 Chassis Qm14.3e La SM PDFLuca JohnNo ratings yet

- Eee R19 Iii IiDocument35 pagesEee R19 Iii IiN Venkatesh JNTUK UCEVNo ratings yet

- HOPEX IT Architecture: User GuideDocument159 pagesHOPEX IT Architecture: User GuideAlex Hernández RojasNo ratings yet

- Ford 2003 My Obd System OperationDocument2 pagesFord 2003 My Obd System OperationBeverly100% (54)

- Ic ps9850Document3 pagesIc ps9850ayu fadliNo ratings yet

- Medion MD8088-Engl Motherboard ManualDocument25 pagesMedion MD8088-Engl Motherboard ManualEcoCharly100% (4)

- Microstrip and Stripline DesignDocument7 pagesMicrostrip and Stripline DesignDurbha RaviNo ratings yet

- ©silberschatz, Korth and Sudarshan 1.1 Database System ConceptsDocument21 pages©silberschatz, Korth and Sudarshan 1.1 Database System ConceptsYogesh DesaiNo ratings yet

- MIDI Keyboard Manual: MIDIPLUS ORIGIN 37Document21 pagesMIDI Keyboard Manual: MIDIPLUS ORIGIN 37David100% (1)

- IRFZ44N Power MOSFETDocument1 pageIRFZ44N Power MOSFETFherdzy AnNo ratings yet

- Polycom List For PersolDocument35 pagesPolycom List For PersolKofi Adofo-MintahNo ratings yet

- Learners Activity Sheet 4 UpdatedDocument8 pagesLearners Activity Sheet 4 UpdatedJohn Raymund MabansayNo ratings yet

- Borland® C#Builder™Document12 pagesBorland® C#Builder™BharatiyulamNo ratings yet