Professional Documents

Culture Documents

How Microprocessors Work

Uploaded by

zahimbCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

How Microprocessors Work

Uploaded by

zahimbCopyright:

Available Formats

How Microprocessors Work e 1 of 64

ZM

How Microprocessors Work

ZAHIDMEHBOOB

+923215020706

ZAHIDMEHBOOB@LIVE.COM

2003

BS(IT)

PRESTION UNIVERSITY

How Microprocessors Work

The computer you are using to read this page uses a microprocessor to do its work. The

microprocessor is the heart of any normal computer, whether it is a desktop machine, a

server or a laptop. The microprocessor you are using might be a Pentium, a K6, a PowerPC,

a Sparc or any of the many other brands and types of microprocessors, but they all do

approximately the same thing in approximately the same way.

If you have ever wondered what the microprocessor in your computer is doing, or if you have

ever wondered about the differences between types of microprocessors, then read on.

Microprocessor History

A microprocessor -- also known as a CPU or central processing unit -- is a complete

computation engine that is fabricated on a single chip. The first microprocessor was the Intel

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 2 of 64

ZM

4004, introduced in 1971. The 4004 was not very powerful -- all it could do was add and

subtract, and it could only do that 4 bits at a time. But it was amazing that everything was on

one chip. Prior to the 4004, engineers built computers either from collections of chips or from

discrete components (transistors wired one at a time). The 4004 powered one of the first

portable electronic calculators.

The first microprocessor to make it into a home computer was the Intel 8080, a complete 8-

bit computer on one chip, introduced in 1974. The first microprocessor to make a real splash

in the market was the Intel 8088, introduced in 1979 and incorporated into the IBM PC

(which first appeared around 1982). If you are familiar with the PC market and its history, you

know that the PC market moved from the 8088 to the 80286 to the 80386 to the 80486 to the

Pentium to the Pentium II to the Pentium III to the Pentium 4. All of these microprocessors

are made by Intel and all of them are improvements on the basic design of the 8088. The

Pentium 4 can execute any piece of code that ran on the original 8088, but it does it about

5,000 times faster!

The following table helps you to understand the differences between the different processors

that Intel has introduced over the years.

Clock Data

Name Date Transistors Microns MIPS

speed width

8080 1974 6,000 6 2 MHz 8 bits 0.64

16 bits

8088 1979 29,000 3 5 MHz 0.33

8-bit bus

80286 1982 134,000 1.5 6 MHz 16 bits 1

80386 1985 275,000 1.5 16 MHz 32 bits 5

80486 1989 1,200,000 1 25 MHz 32 bits 20

32 bits

Pentium 1993 3,100,000 0.8 60 MHz 64-bit 100

bus

32 bits

Pentium II 1997 7,500,000 0.35 233 MHz 64-bit ~300

bus

32 bits

Pentium

1999 9,500,000 0.25 450 MHz 64-bit ~510

III

bus

32 bits

Pentium 4 2000 42,000,000 0.18 1.5 GHz 64-bit ~1,700

bus

Compiled from The Intel Microprocessor Quick Reference Guide and TSCP Benchmark Scores

Information about this table:

• The date is the year that the processor was first

introduced. Many processors are re-introduced at

higher clock speeds for many years after the original release date.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 3 of 64

ZM

• Transistors is the number of transistors on the chip. You can see that the number of

transistors on a single chip has risen steadily over the years.

• Microns is the width, in microns, of the smallest wire on the chip. For comparison, a

human hair is 100 microns thick. As the feature size on the chip goes down, the number

of transistors rises.

• Clock speed is the maximum rate that the chip can be clocked at. Clock speed will make

more sense in the next section.

• Data Width is the width of the ALU. An 8-bit ALU can add/subtract/multiply/etc. two 8-bit

numbers, while a 32-bit ALU can manipulate 32-bit numbers. An 8-bit ALU would have to

execute four instructions to add two 32-bit numbers, while a 32-bit ALU can do it in one

instruction. In many cases, the external data bus is the same width as the ALU, but not

always. The 8088 had a 16-bit ALU and an 8-bit bus, while the modern Pentiums fetch

data 64 bits at a time for their 32-bit ALUs.

• MIPS stands for "millions of instructions per second" and is a rough measure of the

performance of a CPU. Modern CPUs can do so many different things that MIPS ratings

lose a lot of their meaning, but you can get a general sense of the relative power of the

CPUs from this column.

From this table you can see that, in general, there is a relationship between clock speed and

MIPS. The maximum clock speed is a function of the manufacturing process and delays

within the chip. There is also a relationship between the number of transistors and MIPS. For

example, the 8088 clocked at 5 MHz but only executed at 0.33 MIPS (about one instruction

per 15 clock cycles). Modern processors can often execute at a rate of two instructions per

clock cycle. That improvement is directly related to the number of transistors on the chip and

will make more sense in the next section.

Inside a Microprocessor

To understand how a microprocessor works, it is helpful to look inside and learn about the

logic used to create one. In the process you can also learn about assembly language -- the

native language of a microprocessor -- and many of the things that engineers can do to

boost the speed of a processor.

A microprocessor executes a collection of machine instructions that tell the processor what

to do. Based on the instructions, a microprocessor does three basic things:

• Using its ALU (Arithmetic/Logic Unit), a microprocessor can perform mathematical

operations like addition, subtraction, multiplication and division. Modern

microprocessors contain complete floating point processors that can perform

extremely sophisticated operations on large floating point numbers.

• A microprocessor can move data from one memory location to another.

• A microprocessor can make decisions and jump to a new set of instructions based

on those decisions.

There may be very sophisticated things that a microprocessor does, but those are its three

basic activities. The following diagram shows an extremely simple microprocessor capable of

doing those three things:

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 4 of 64

ZM

This is about as simple as a microprocessor gets. This microprocessor has:

• An address bus (that may be 8, 16 or 32 bits wide) that sends an address to

memory

• A data bus (that may be 8, 16 or 32 bits wide) that can send data to memory or

receive data from memory

• An RD (read) and WR (write) line to tell the memory whether it wants to set or get the

addressed location

• A clock line that lets a clock pulse sequence the processor

• A reset line that resets the program counter to zero (or whatever) and restarts

execution

Let's assume that both the address and data buses are 8 bits wide in this example.

Here are the components of this simple microprocessor:

• Registers A, B and C are simply latches made out of flip-flops. (See the section on

"edge-triggered latches" in How Boolean Logic Works for details.)

• The address latch is just like registers A, B and C.

• The program counter is a latch with the extra ability to increment by 1 when told to do

so, and also to reset to zero when told to do so.

• The ALU could be as simple as an 8-bit adder (see the section on adders in How

Boolean Logic Works for details), or it might be able to add, subtract, multiply and

divide 8-bit values. Let's assume the latter here.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 5 of 64

ZM

• The test register is a special latch that can hold values from comparisons performed

in the ALU. An ALU can normally compare two numbers and determine if they are

equal, if one is greater than the other, etc. The test register can also normally hold a

carry bit from the last stage of the adder. It stores these values in flip-flops and then

the instruction decoder can use the values to make decisions.

• There are six boxes marked "3-State" in the diagram. These are tri-state buffers. A

tri-state buffer can pass a 1, a 0 or it can essentially disconnect its output (imagine a

switch that totally disconnects the output line from the wire that the output is heading

toward). A tri-state buffer allows multiple outputs to connect to a wire, but only one of

them to actually drive a 1 or a 0 onto the line.

• The instruction register and instruction decoder are responsible for controlling all of

the other components.

Although they are not shown in this diagram, there

would be control lines from the instruction decoder that

would:

• Tell the A register to latch the value currently on the data bus

• Tell the B register to latch the value currently on the data bus

• Tell the C register to latch the value currently on the data bus

• Tell the program counter register to latch the value currently on the data bus

• Tell the address register to latch the value currently on the data bus

• Tell the instruction register to latch the value currently on the data bus

• Tell the program counter to increment

• Tell the program counter to reset to zero

• Activate any of the six tri-state buffers (six separate lines)

• Tell the ALU what operation to perform

• Tell the test register to latch the ALU's test bits

• Activate the RD line

• Activate the WR line

Coming into the instruction decoder are the bits from the test register and the clock line, as

well as the bits from the instruction register.

RAM and ROM

The previous section talked about the address and data buses, as well as the RD and WR

lines. These buses and lines connect either to RAM or ROM -- generally both. In our sample

microprocessor, we have an address bus 8 bits wide and a data bus 8 bits wide. That means

that the microprocessor can address (28) 256 bytes of memory, and it can read or write 8 bits

of the memory at a time. Let's assume that this simple microprocessor has 128 bytes of

ROM starting at address 0 and 128 bytes of RAM starting at address 128.

ROM stands for read-only memory. A ROM chip is programmed with a permanent collection

of pre-set bytes. The address bus tells the ROM chip which byte to get and place on the data

bus. When the RD line changes state, the ROM chip presents the selected byte onto the

data bus.

RAM stands for random-access memory. RAM contains bytes of information, and the

microprocessor can read or write to those bytes depending on whether the RD or WR line is

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 6 of 64

ZM

signaled. One problem with today's RAM chips is that they forget everything once the power

goes off. That is why the computer needs ROM.

By the way, nearly all computers contain some amount of ROM (it is possible to create a

simple computer that contains no RAM -- many microcontrollers do this by placing a handful

of RAM bytes on the processor chip itself -- but generally impossible to create one that

contains no ROM). On a PC, the ROM is called the BIOS (Basic Input/Output System).

When the microprocessor starts, it begins executing instructions it finds in the BIOS. The

BIOS instructions do things like test the hardware in the machine, and then it goes to the

hard disk to fetch the boot sector (see How Hard Disks Work for details). This boot sector is

another small program, and the BIOS stores it in RAM after reading it off the disk. The

microprocessor then begins executing the boot sector's instructions from RAM. The boot

sector program will tell the microprocessor to fetch something else from the hard disk into

RAM, which the microprocessor then executes, and so on. This is how the microprocessor

loads and executes the entire operating system.

Microprocessor Instructions

Even the incredibly simple microprocessor shown in the previous example will have a fairly

large set of instructions that it can perform. The collection of instructions is implemented as

bit patterns, each one of which has a different meaning when loaded into the instruction

register. Humans are not particularly good at remembering bit patterns, so a set of short

words are defined to represent the different bit patterns. This collection of words is called the

assembly language of the processor. An assembler can translate the words into their bit

patterns very easily, and then the output of the assembler is placed in memory for the

microprocessor to execute.

Here's the set of assembly language instructions that the designer might create for the

simple microprocessor in our example:

• LOADA mem - Load register A from memory address

• LOADB mem - Load register B from memory address

• CONB con - Load a constant value into register B

• SAVEB mem - Save register B to memory address

• SAVEC mem - Save register C to memory address

• ADD - Add A and B and store the result in C

• SUB - Subtract A and B and store the result in C

• MUL - Multiply A and B and store the result in C

• DIV - Divide A and B and store the result in C

• COM - Compare A and B and store the result in test

• JUMP addr - Jump to an address

• JEQ addr - Jump, if equal, to address

• JNEQ addr - Jump, if not equal, to address

• JG addr - Jump, if greater than, to address

• JGE addr - Jump, if greater than or equal, to address

• JL addr - Jump, if less than, to address

• JLE addr - Jump, if less than or equal, to address

• STOP - Stop execution

If you have read How C Programming Works, then you know that this simple piece of C code

will calculate the factorial of 5 (where the factorial of 5 = 5! = 5 * 4 * 3 * 2 * 1 = 120):

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 7 of 64

ZM

a=1;

f=1;

while (a <= 5)

{

f = f * a;

a = a + 1;

}

At the end of the program's execution, the variable f contains the factorial of 5.

A C compiler translates this C code into assembly language. Assuming that RAM starts at

address 128 in this processor, and ROM (which contains the assembly language program)

starts at address 0, then for our simple microprocessor the assembly language might look

like this:

// Assume a is at address 128

// Assume F is at address 129

0 CONB 1 // a=1;

1 SAVEB 128

2 CONB 1 // f=1;

3 SAVEB 129

4 LOADA 128 // if a > 5 the jump to 17

5 CONB 5

6 COM

7 JG 17

8 LOADA 129 // f=f*a;

9 LOADB 128

10 MUL

11 SAVEC 129

12 LOADA 128 // a=a+1;

13 CONB 1

14 ADD

15 SAVEC 128

16 JUMP 4 // loop back to if

17 STOP

So now the question is, "How do all of these instructions look in ROM?" Each of these

assembly language instructions must be represented by a binary number. For the sake of

simplicity, let's assume each assembly language instruction is given a unique number, like

this:

• LOADA - 1

• LOADB - 2

• CONB - 3

• SAVEB - 4

• SAVEC mem - 5

• ADD - 6

• SUB - 7

• MUL - 8

• DIV - 9

• COM - 10

• JUMP addr - 11

• JEQ addr - 12

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 8 of 64

ZM

• JNEQ addr - 13

• JG addr - 14

• JGE addr - 15

• JL addr - 16

• JLE addr - 17

• STOP - 18

The numbers are known as opcodes. In ROM, our little program would look like this:

// Assume a is at address 128

// Assume F is at address 129

Addr opcode/value

0 3 // CONB 1

1 1

2 4 // SAVEB 128

3 128

4 3 // CONB 1

5 1

6 4 // SAVEB 129

7 129

8 1 // LOADA 128

9 128

10 3 // CONB 5

11 5

12 10 // COM

13 14 // JG 17

14 31

15 1 // LOADA 129

16 129

17 2 // LOADB 128

18 128

19 8 // MUL

20 5 // SAVEC 129

21 129

22 1 // LOADA 128

23 128

24 3 // CONB 1

25 1

26 6 // ADD

27 5 // SAVEC 128

28 128

29 11 // JUMP 4

30 8

31 18 // STOP

You can see that seven lines of C code became 17 lines of assembly language, and that

became 31 bytes in ROM.

The instruction decoder needs to turn each of the opcodes into a set of signals that drive the

different components inside the microprocessor. Let's take the ADD instruction as an

example and look at what it needs to do:

1. During the first clock cycle, we need to actually load the instruction. Therefore the

instruction decoder needs to:

• activate the tri-state buffer for the program counter

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 9 of 64

ZM

• activate the RD line

• activate the data-in tri-state buffer

• latch the instruction into the instruction register

2. During the second clock cycle, the ADD instruction is decoded. It needs to do very

little:

• set the operation of the ALU to addition

• latch the output of the ALU into the C register

3. During the third clock cycle, the program counter is incremented (in theory this could

be overlapped into the second clock cycle).

Every instruction can be broken down as a set of sequenced operations like these that

manipulate the components of the microprocessor in the proper order. Some instructions,

like this ADD instruction, might take two or three clock cycles. Others might take five or six

clock cycles

Microprocessor Performance

The number of transistors available has a huge effect on the performance of a processor.

As seen earlier, a typical instruction in a processor like an 8088 took 15 clock cycles to

execute. Because of the design of the multiplier, it took approximately 80 cycles just to do

one 16-bit multiplication on the 8088. With more transistors, much more powerful multipliers

capable of single-cycle speeds become possible.

More transistors also allow for a technology called pipelining. In a pipelined architecture,

instruction execution overlaps. So even though it might take five clock cycles to execute

each instruction, there can be five instructions in various stages of execution simultaneously.

That way it looks like one instruction completes every clock cycle.

Many modern processors have multiple instruction decoders, each with its own pipeline. This

allows for multiple instruction streams, which means that more than one instruction can

complete during each clock cycle. This technique can be quite complex to implement, so it

takes lots of transistors.

The trend in processor design has been toward full 32-bit ALUs with fast floating point

processors built in and pipelined execution with multiple instruction streams. There has also

been a tendency toward special instructions (like the MMX instructions) that make certain

operations particularly efficient. There has also been the addition of hardware virtual memory

support and L1 caching on the processor chip. All of these trends push up the transistor

count, leading to the multi-million transistor powerhouses available today. These processors

can execute about one billion instructions per second!

Computer Caches

A computer is a machine in which we measure time in very small increments. When the

microprocessor accesses the main memory (RAM), it does it in about 60 nanoseconds (60

billionths of a second). That's pretty fast, but it is much slower than the typical

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 10 of 64

ZM

microprocessor. Microprocessors can have cycle times as short as 2 nanoseconds, so to a

microprocessor 60 nanoseconds seems like an eternity.

What if we build a special memory bank, small but very fast (around 30 nanoseconds)?

That's already two times faster than the main memory access. That's called a level 2 cache

or an L2 cache. What if we build an even smaller but faster memory system directly into the

microprocessor's chip? That way, this memory will be accessed at the speed of the

microprocessor and not the speed of the memory bus. That's an L1 cache, which on a 233-

megahertz (MHz) Pentium is 3.5 times faster than the L2 cache, which is two times faster

than the access to main memory.

There are a lot of subsystems in a computer; you can put cache between many of them to

improve performance. Here's an example. We have the microprocessor (the fastest thing in

the computer). Then there's the L1 cache that caches the L2 cache that caches the main

memory which can be used (and is often used) as a cache for even slower peripherals like

hard disks and CD-ROMs. The hard disks are also used to cache an even slower medium --

your Internet connection.

Your Internet connection is the slowest link in your computer. So your browser (Internet

Explorer, Netscape, Opera, etc.) uses the hard disk to store HTML pages, putting them into a

special folder on your disk. The first time you ask for an HTML page, your browser renders it

and a copy of it is also stored on your disk. The next time you request access to this page,

your browser checks if the date of the file on the Internet is newer than the one cached. If the

date is the same, your browser uses the one on your hard disk instead of downloading it

from Internet. In this case, the smaller but faster memory system is your hard disk and the

larger and slower one is the Internet.

Cache can also be built directly on peripherals. Modern hard disks come with fast memory,

around 512 kilobytes, hardwired to the hard disk. The computer doesn't directly use this

memory -- the hard-disk controller does. For the computer, these memory chips are the disk

itself. When the computer asks for data from the hard disk, the hard-disk controller checks

into this memory before moving the mechanical parts of the hard disk (which is very slow

compared to memory). If it finds the data that the computer asked for in the cache, it will

return the data stored in the cache without actually accessing data on the disk itself, saving a

lot of time.

Here's an experiment you can try. Your computer caches your floppy drive with main

memory, and you can actually see it happening. Access a large file from your floppy -- for

example, open a 300-kilobyte text file in a text editor. The first time, you will see the light on

your floppy turning on, and you will wait. The floppy disk is extremely slow, so it will take 20

seconds to load the file. Now, close the editor and open the same file again. The second

time (don't wait 30 minutes or do a lot of disk access between the two tries) you won't see

the light turning on, and you won't wait. The operating system checked into its memory

cache for the floppy disk and found what it was looking for. So instead of waiting 20 seconds,

the data was found in a memory subsystem much faster than when you first tried it (one

access to the floppy disk takes 120 milliseconds, while one access to the main memory

takes around 60 nanoseconds -- that's a lot faster). You could have run the same test on

your hard disk, but it's more evident on the floppy drive because it's so slow.

To give you the big picture of it all, here's a list of a normal caching system:

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 11 of 64

ZM

• L1 cache - Memory accesses at full microprocessor speed (10 nanoseconds, 4

kilobytes to 16 kilobytes in size)

• L2 cache - Memory access of type SRAM (around 20 to 30 nanoseconds, 128

kilobytes to 512 kilobytes in size)

• Main memory - Memory access of type RAM (around 60 nanoseconds, 32

megabytes to 128 megabytes in size)

• Hard disk - Mechanical, slow (around 12 milliseconds, 1 gigabyte to 10 gigabytes in

size)

• Internet - Incredibly slow (between 1 second and 3 days, unlimited size)

Cache Technology

One common question asked at this point is, "Why not make all of the computer's memory

run at the same speed as the L1 cache, so no caching would be required?" That would work,

but it would be incredibly expensive. The idea behind caching is to use a small amount of

expensive memory to speed up a large amount of slower, less-expensive memory.

In designing a computer, the goal is to allow the microprocessor to run at its full speed as

inexpensively as possible. A 500-MHz chip goes through 500 million cycles in one second

(one cycle every two nanoseconds). Without L1 and L2 caches, an access to the main

memory takes 60 nanoseconds, or about 30 wasted cycles accessing memory.

When you think about it, it is kind of incredible that such relatively tiny amounts of memory

can maximize the use of much larger amounts of memory. Think about a 256-kilobyte L2

cache that caches 64 megabytes of RAM. In this case, 256,000 bytes efficiently caches

64,000,000 bytes. Why does that work?

In computer science, we have a theoretical concept called locality of reference. It means

that in a fairly large program, only small portions are ever used at any one time. As strange

as it may seem, locality of reference works for the huge majority of programs. Even if the

executable is 10 megabytes in size, only a handful of bytes from that program are in use at

any one time, and their rate of repetition is very high. Let's take a look at the following

pseudo-code to see why locality of reference works (see How C Programming Works to

really get into it):

Output to screen « Enter a number between 1 and 100 »

Read input from user

Put value from user in variable X

Put value 100 in variable Y

Put value 1 in variable Z

Loop Y number of time

Divide Z by X

If the remainder of the division = 0

then output « Z is a multiple of X »

Add 1 to Z

Return to loop

End

This small program asks the user to enter a number between 1 and 100. It reads the value

entered by the user. Then, the program divides every number between 1 and 100 by the

number entered by the user. It checks if the remainder is 0 (modulo division). If so, the

program outputs "Z is a multiple of X" (for example, 12 is a multiple of 6), for every number

between 1 and 100. Then the program ends.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 12 of 64

ZM

Even if you don't know much about computer programming, it is easy to understand that in

the 11 lines of this program, the loop part (lines 7 to 9) are executed 100 times. All of the

other lines are executed only once. Lines 7 to 9 will run significantly faster because of

caching.

This program is very small and can easily fit entirely in the smallest of L1 caches, but let's

say this program is huge. The result remains the same. When you program, a lot of action

takes place inside loops. A word processor spends 95 percent of the time waiting for your

input and displaying it on the screen. This part of the word-processor program is in the

cache.

This 95%-to-5% ratio (approximately) is what we call the locality of reference, and it's why a

cache works so efficiently. This is also why such a small cache can efficiently cache such a

large memory system. You can see why it's not worth it to construct a computer with the

fastest memory everywhere. We can deliver 95 percent of this effectiveness for a fraction of

the cost.

What is Virtual Memory?

.

Most computers today have something like 32 or 64 megabytes of RAM available for the

CPU to use (see How RAM Works for details on RAM). Unfortunately, that amount of RAM is

not enough to run all of the programs that most users expect to run at once.

For example, if you load the operating system, an e-mail program, a Web browser and word

processor into RAM simultaneously, 32 megabytes is not enough to hold it all. If there were

no such thing as virtual memory, then once you filled up the available RAM your computer

would have to say, "Sorry, you can not load any more applications. Please close another

application to load a new one." With virtual memory, what the computer can do is look at

RAM for areas that have not been used recently and copy them onto the hard disk. This

frees up space in RAM to load the new application.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 13 of 64

ZM

Because this copying happens automatically, you don't even know it is happening, and it

makes your computer feel like is has unlimited RAM space even though it only has 32

megabytes installed. Because hard disk space is so much cheaper than RAM chips, it also

has a nice economic benefit.

6th Generation CPU Comparisons

The following is a comparative text meant to give people a feel for the differences

in the various 6th generation x86 CPUs. For this little ditty, I've chosen the Intel

P-II (aka Klamath, P6), the AMD K6 (aka NX686), and the Cyrix 6x86MX (aka

M2). These are all MMX capable 6th generation x86 compatible CPUs, however I

am not going to discuss the MMX capabilities at all beyond saying that they all

appear to have similar functionality. (MMX never really took off as the software

enabling technology Intel claimed it to be, so its not worth going into any depth

on it.)

In what follows, I am assuming a high level of competence and knowledge on the

part of the reader (basic 32 bit x86 assembly at least). For many of you, the

discussion will be just slightly over your head. For those, I would recommend

sitting through the 1 hour online lecture on the Post-RISC architecture by

Charles Severence to get some more background on the state of modern

processor technology. It is really an excellent lecture, that is well worth the time:

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 14 of 64

ZM

• Beyond RISC - The Post RISC Architecture (Mark Brehob, Travis

Doom, Richard Enbody, William H. Moore, Sherry Q. Moore, Ron Sass,

Charles Severance )

Much of the following information comes from online documentation from Cyrix,

AMD and Intel. I have played a little with Pentiums and Pentium-II's from work, as

well as my AMD-K6 at home. I would also like to thank, Dan Wax, Lance Smith

and "Bob Instigator" from AMD who corrected me on several points about the

K6, and both Andreas Kaiser and Lee Powell who also provided insightful

information, and corrections gleened from first hand experiences with these

CPUs. Also, thanks to Terje Mathisen who pointed out an error, and Brian

Converse who helped me with my grammar.

Comments welcome.

The AMD K6

The K6 architecture seems to mix some of the ideas of the P-II and 6x86MX

architectures. They made trade offs, and decisions that they believed would

deliver the maximal performance over all potential software. They have

emphasized short latencies (like the 6x86MX) but the K6 translates their x86

instructions into RISC operations that are queued in large instruction buffers and

feed many (7 in all) independent units (like the P-II.) While they don't always

have the best single implementation of any specific aspect, this was the result of

conscious decisions that they believe helps strike a balance that hits a good

performance sweet spot. Versus the P-II, they avoid situations of really deep

pipelining which has high penalties when the pipeline has to be backed out.

Versus the Cyrix, the AMD is a fully POST-RISC architecture which is not as

susceptible to pipeline stalls which artificially back ups other stages.

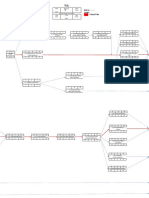

General Architecture

The K6 is an extremely short and elegant pipeline. The AMD-K6 MMX

Enhanced Processor x86 Code Optimization Application Note contains the

following diagrams:

This seems remarkably simple considering the features that are claimed for the

K6. The secret, is that most of these stages do very complicated things. The light

blue stages execute in an out of order fashion (and were colored by me, not

AMD.)

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 15 of 64

ZM

The fetch stage, is much like a typical Pentium instruction fetcher, and is able to

present 16 cache aligned bytes of data per clock. Of course this means that

some instructions that straddle 16 byte boundaries will suffer an extra clock

penalty before reaching the decode stage, much like they do on a Pentium. (The

K6 is a little clever in that if there are partial opcodes from which the predecoder

can determine the instruction length, then the prefetching mechanism will fetch

the new 16 byte buffer just in time to feed the remaining bytes to the issue

stage.)

The decode stage attempts to simultaneously decode 2 simple, 1 long, and fetch

from 1 ROM x86 instruction(s). If both of the first two fail (usually only on rare

instructions), the decoder is stalled for a second clock which is required to

completely decode the instruction from the ROM. If the first fails but the second

does not (the usual case when involving memory, or an override), then a single

instruction or override is decoded. If the first succeeds (the usual case when not

involving memory or overrides) then two simple instructions are decoded. The

decoded "OpQuad" is then entered into the scheduler.

Thus the K6's execution rate is limited to a maximum of two x86

instructions per clock. This decode stage decomposes the x86 instructions into

RISC86 ops.

This last statement has been generally misunderstood in its importance (even by

me!) Given that the P-II architecture can decode 3 instructions at once, it is

tempting to conclude that the P-II can execute typically up to 50% faster than a

K6. According to "Bob Instigator" (a technical marketroid from AMD) and "The

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 16 of 64

ZM

Anatomy of a High-Performance Microprocessor A Systems Perspective"

this just isn't so. Besides the back-end limitations and scheduler problems that

clog up the P-II, real world software traces analyzed at Advanced Micro Devices

indicated that a 3-way decoder would have added almost no benefit while

severely limiting the clock rate ramp of the K6 given its back end architecture.

That said, in real life decode bandwidth limitation crops up every now and then

as a limiting factor, but is rarely egregiously in comparison to ordinary execution

limitations.

The issue stage accepts up to 4 RISC86 instructions from the scheduler. The

scheduler is basically an OpQuad buffer that can hold up to 6 clocks of

instructions (which is up to 12 dual issued x86 instructions.) The K6 issues

instructions subject only to execution unit availability using an oldest unissued

first algorithm at a maximum rate of 4 RISC86 instructions per clock (the X and Y

ALU pipelines, the load unit, and the store unit.) The instructions are marked as

issued, but not removed until retirement.

The operand fetch stage reads the issued instruction operands without any

restriction other than register availability. This is in contrast with the P-II which

can only read up to two retired register operands per clock (but is unrestricted in

forwarding (unretired) register accesses.) The K6 uses some kind of internal

"register MUX" which allows arbitrary accesses of internal and commited register

space. If this stage "fails" because of a long data dependency, then according to

expected availability of the operands the instruction is either held in this stage for

an additional clock or unissued back into the scheduler, essentially moving the

instruction backwards through the pipeline!

This is an ingenious design that allows the K6 to perform "late" data dependency

determinations without over-complicating the scheduler's issue logic. This clever

idea gives a very close approximation of a reservation station architecture's

"greedy algorithm scheduling".

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 17 of 64

ZM

The execution stages perform in one or two pipelined stages (with the exception

of the floating point unit which is not pipelined, or complex instructions which stall

those units during execution.) In theory, all units can be executing at once.

Retirement happens as completed instructions are pushed out of the scheduler

(exactly 6 clocks after they are entered.) If for some reason, the oldest OpQuad

in the scheduler is not finished, scheduler advancement (which pushes out the

oldest OpQuad and makes space for a newly decoded OpQuad) is halted until

the OpQuad can be retired.

What we see here is the front end starting fairly tight (two instruction) and the

back end ending somewhat wider (two integer execution units, one load, one

store, and one FPU.) The reason for this seeming mismatch in execution

bandwidth (as opposed to the Pentium, for example which remains two-wide

from top to bottom) is that it will be able to sustain varying execution loads as the

dependency states change from clock to clock. This at the very heart of what an

out of order architecture is trying to accomplish, being wider at the back-end is a

natrual consequence of this kind design.

Branch Prediction

The K6 uses a very sophisiticated branch prediction mechanism which delivers

better prediction and fewer stalls than the P-II. There is a 8192 table of two bit

prediction entries which combine historic prediction information for any given

branch with a heuristic that takes into account the results of nearby branches.

Even branches that have somehow left the branch prediction table, still can have

the benefit of nearby branch activity data to help their prediction. According to

published papers which studied these branch prediction implementations, this

allows them to achieve a 95% prediction rate versus the P-II's 90% prediction

rate.

Additional stalls are avoided by using a 16 entry times 16 byte branch target

cache which allows first instruction decode to occur simultaneously with

instruction address computation, rather than requiring (E)IP to be known and

used to direct the next fetch (as is the case with the P-II.) This removes an (E)IP

calculation dependency and instruction fetch bubble. (This is a huge advantage

in certain algorithms such as computing a GCD; see my examples for the code)

The K6 allows up to 7 outstanding unresolved branches (which seems like more

than enough since the scheduler only allows up to 6 issued clocks of pending

instructions in the first place.)

The K6 benefits additionally from the fact that it is only a 6 stage pipeline (as

opposed to a 12 stage pipeline like the P-II) so even if a branch is incorrectly

predicted it is only a 4 clock penalty as opposed to the P-II's 11-15 clock penalty.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 18 of 64

ZM

One disadvantage pointed out to me by Andreas Kaiser is that misaligned branch

targets still suffer an extra clock penalty and that attempts to align branch targets

can lead to branch target cache tag bit aliasing. This is a good point, however it

seems to me that you can help this along by hand aligning only your most inner

loop branches.

Another (also pointed out to me by Andreas Kaiser) is that such a prediction

mechanism does not work for indirect jump predictions (because the verification

tables only compare a binary jump decision value, not a whole address.) This is a

bit of a bummer for virtual C++ functions.

Back of envelope calculation

This all means that the average loop penalty is:

(95% * 0) + (5% * 4) = 0.2 clocks per loop

But because of the K6's limited decode bandwidth, branch instructions take up

precious instruction decode bandwidth. There are no branch execution clocks in

most situations, however, branching instructions end up taking a slot where there

is essentially no calculations. In that sense K6 branches have a typical penalty of

about 0.5 clocks. To combat this, the K6 executes the LOOP instruction in a

single clock, however this instruction performs so badly on Intel CPUs, that no

compiler generates it.

Floating Point

The common high demand, high performance FPU operations (FADD, FSUB,

FMUL) all execute with a throughput and latency of 2 clocks (versus 1 or 2 clock

throughput and 3-5 clock latency on the P-II.) Amazingly, this means that it can

complete FPU operations faster than the P-II, however is worse on FPU code that is

optimally scheduled for the P-II. Like the Pentium, in the P-II Intel has worked hard

on fully pipelining the faster FPU operations which works in their favor. Central to

this is FXCH which, in combination with FPU instruction operands allows two

new stack registers to be addressed by each binary FPU operation. The P-II

allows FXCH to execute in 0 clocks -- the early revs of the K6 took two clocks,

while later revs based on the "CXT core" can execute them in 0 clocks.

Unfortunately, the P-II derives much more benefit from this since its FPU

architecture allows it to decode and execute at a peak rate of one new FPU

instruction on every clock.

More complex instructions such as FDIV, FSQRT and so on will stall more of the

units on the P-II than on the K6. However since the P-II's scheduler is larger it will

be able to execute more instructions in parallel with the stalled FPU instruction

(21 in all, however the port 0 integer unit is unavailable for the duration of the

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 19 of 64

ZM

stalled FPU instruction) while the K6 can execute up to 11 other x86 instructions

a full speed before needing to wait for the stalled FPU instruction to complete.

In a test I wrote (admittedly rigged to favor Intel FPUs) the K6 measured to only

perform at about 55% of the P-II's performance. (Update: using the K6-2's new

SIMD floating point features, the roles have reversed -- the P-II can only execute

at about 70% of a K6-2's speed.)

An interesting note is that FPU instructions on the K6 will retire before they

completely execute. This is possible because it is only required that they work

out whether or not they will generate an exception, and the execution state is

reset on a task switch, by the OS's built-in FPU state saving mechanism.

The state of floating point has changed so drastically recently, that its hard to

make a definitive comment on this without a plethora of caveats. Facts: (1) the

pure x87 floating point unit in the K6 does not compare favorably with that of the

P-II, (2) this does not tend to always reflect in real life software which can be

made from bad compilers, (3) the future of floating point clearly lies with SIMD,

where AMD has clearly established a leadership role. (4) Intel's advantage was

primarily in software that was hand optimized by assembly coders -- but that has

clearly reversed roles since the introduction of the K6-2.

Cache

The K6's L1 cache is 64KB, which is twice as large as the P-II's L1 cache. But it

is only 2 way set associative (as opposed to the P-II which is 4 way). This makes

the replacement algorithm much simpler, but decreases its effectiveness in

random data accesses. The increased size, however, more than compensates

for the extra bit of associativity. For code that works with contiguous data sets,

the K6 simply offers twice the working set ceiling of the P-II.

Like the P-II, the K6's cache is divided into two fixed caches for separate code

and data. I am not as big a fan of split architectures (commonly referred to as the

Harvard Architecture) because they set an artificial lower limit on your working

sets. As pointed out to me by the AMD folk, this keeps them from having to worry

about data accesses kicking out their instruction cache lines. But I would expect

this to be dealt with by associativity and don't believe that it is worth the trade off

of lower working set sizes.

Among the design benefits they do derive from a split architecture is that they

can add pre-decode bits to just the instruction cache. On the K6, the predecode

bits are used for determining instruction length boundaries. Their address tags

(which appears to work out to 9 bits) point to a sector which contains two 32 byte

long cache lines, which (I assume) are selected by standard associativity rules.

Each cache line has a standard set of dirty bits to indicate accessibility state

(obsolete, busy, loaded, etc).

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 20 of 64

ZM

Although the K6's cache is non-blocking, (allowing accesses to other lines even if

a cache line miss is being processed) the K6's load/store unit architecture only

allows in-order data access. So this feature cannot be taken advantage of in the

K6. (Thanks to Andreas Kaiser for pointing this out to me.)

In addition, like the 6x86MX, the store unit of the K6 actually is buffered by a

store Queue. A neat feature of the store unit architecture is that it has two

operand fetch stages -- the first for the address, and the second for the data

which happens one clock later. This allows stores of data that are being

computed in the same clock as the store to occurr without any apparent stall.

That is so darn cool!

But perhaps more fundamentally, as AMD have said themselves, bigger is better,

and at twice the P-II's size, I'll have to give the nod to AMD (though a bigger nod

to the 6x86MX; see below.)

The K6 takes two (fully pipelined) clocks to fetch from its L1 cache from within its

load execution unit. Like the original P55C, the 6x86MX spends extra load clocks

(i.e., address generation) during earlier stages of their pipeline. On the other

hand this compares favorably with the P-II which takes three (fully pipelined)

clocks to fetch from the L1 cache. What this means is that when walking a

(cached) linked list (a typical data structure manipulation), the 6x86MX is the

fastest, followed by the K6, followed by the P-II.

Update: AMD has released the K6-3 which, like the Celeron adds a large on die

L2 cache. The K6-3's L2 cache is 256K which is larger than the Celeron's at

128K. Unlike Intel, however, AMD has recommended that motherboard continue

to include on board L2 caches creating what AMD calls a "TriLevel cache"

architecture (I recall that an earler Alpha based system did exactly this same

thing.) Benchmarks indicate that the K6-3 has increased in performance between

10% and 15% over similarly clocked K6-2's! (Wow! I think I might have to get one

of these.)

Other

• The K6 has bad memory bandwidth. One is an unknown bottleneck in

their block move and bursting over their bus (I and others have observed

this through testing, though there is no documentation available from AMD

that explains this. Update: the K6 did not support pipelined stores which

has been corrected in the "CXT core".)

• The K6 has a 2/3 (32 bits ready/64 bits ready) clock integer multiply, which

is good counter to the P-II's 1/4 (throughput/latency) clock integer multiply.

Programmers usually only use the base 32 bit LSB result of the multiply,

and so are likely to achieve realistic 2-clock throughputs. On the other

hand the P-II's 4 clock "hands off" rule is unlikely to be so easily

scheduled, since no contentions in 4 clocks is unlikely. In the real world, I

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 21 of 64

ZM

would be very surprised if the P-II actually achieves a 1 or even 2 clock

throughput.

• The K6 does not suffer the same kind of partial register stalls that the P-II

does. Register contention is accurate down to the byte sub-register as

required. Special clearing of the register is unnecessary. However 16 bit

partial register instructions will have instruction decode overrides which

will cost an extra clock.

• The K6 seems to prefer [esi+0] to [esi] memory addressing (for faster pre-

decoding.) This is a side effect of the 386 ISA's strange encoding rules for

this operand. Basically, the 16 bit mod/rm encodings and 32 bit modrm

encodings cause an mode or operand conflict for this situation. Basically,

if they made the [esi] decoding fast, numerous 16 bit modrm decodings

would be very slow. This trade off was more beneficial to more code at the

time.

• The K6 has a wider riscop instruction window than the P-II. That is to say

instructions are entered into and retired from their scheduler at a rate of 4

RISC86 ops per clock, while the P-II enters and retires microops from their

reorder buffer at a rate of 3 microops per clock.

• The K6 has a fast (1 cycle) LOOP instruction. It looks like the Intel CPUs

may be the lone wolves with their slow LOOP instruction. If you ask me,

this is the most ideal instruction to use for loops.

• Of course, the K6 has more a sophisticated instruction decode

implementation in the sense that they can decode two 7 byte instructions

or one 11 byte instruction in a single clock. Like the 6x86MX (though, with

an entirely different mechanism) it can only decode a maximum of two

instructions per clock versus the P-II's maximum rate of 3 instructions per

clock. However, the P-II's decoding is overly optimistic since it balks on

any instructions more than 7 bytes long and is also limited by micro-op

decode restrictions.

Anyhow, this design is very much in line with AMD's recommendation of

using complicated load and execute instructions which tend to be longer

and would favor the K6 over the P-II. In fact, the AMD just seems better

suited overall for the CISCy nature of the x86 ISA. For example, the K6

can issue 2 push reg instructions per clock, versus the P-II's 1 push reg

per clock.

According to AMD, the typical 32 bit decode bandwidth is about the same

for both the K6 and the P-II, but 16 bit decode is about 20% faster for the

K6. Unfortunately for AMD, if software developers and compiler writers

heed the P-II optimization rules with the same vigor that they did with the

Pentium, the typical decode bandwidth will change over time to favor the

P-II.

• The K6's issue to execute scheduling is pretty cool. They use complete

logical comparisons between pipeline stages to always find the best path

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 22 of 64

ZM

to propagate from issue to operand read to execute. This is particularly

effective to divide the work between the two integer units. The scheduler

will actually push stalled instructions backwards through the pipeline to

simplify and avoid over speculation in multi-clock stalls situations. This

also allows other instructions to slip through rather than being caught

behind a stalled instruction. This is an effective alternative to the P-II's

reservation station which is an optional extra pipeline stage that serves a

similar purpose.

6x86MX seems to just let their pipelines accumulate with work moving

only in a forward direction which makes them more susceptible to being

backed up, but they do allow their X and Y pipes to swap contents at one

stage.

• The K6 does not support the new P6 ISA instructions, specifically, the

conditional move instructions. It also does not appear to support the set of

MSRs that the P6 does (besides the ever important TSC register.) So from

a programmer's architecture point of view, the K6 is more like a Pentium

than a Pentium-II. Its not clear that this is a real big issue since all the

modern compilers still target the 80386 ISA.

Update: AMD's new "CXT Core" has enabled write combining.

As I have been contemplating the K6 design, it has really grown on me.

Fundamentally, the big problem with x86 processors versus RISC chips is that

they have too few registers and are inherently limited in instruction decode

bandwidth due to natural instruction complexity. The K6 addresses both of these

by maximizing performance of memory based in-cache data accesses to make

up for the lack of registers, and by streamlining CISC instruction issue to be

optimally broken down into RISC like sub-instructions.

It is unfortunate, that compilers are favoring Intel style optimizations. Basically

there are several instructions and instruction mixes that compilers avoid due to

their poor performance on Intel CPUs, even though the K6 executes them just

fine. As an x86 assembly nut, it is not hard to see why I favor the K6 design over

the Intel design.

Optimization

AMD realizing that there is a tremendous interest for code optimization for certain

high performance applications, decided to write up some Optimization

documentation for the K6 (and now K6-2) processor(s). The documentation is

fairly good about describing general strategies as well as giving a fairly detailed

description for modelling the exact performance of code. This documentation far

exceeed the quality of any of Intel's "Optimization AP notes", fundamentally

because its accurate and more thorough.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 23 of 64

ZM

The reason I have come to this conclusion is that the architecture of the chip

itself is much more straight forward than, say the P-II, and so there is less

explanation necessary. So the volume of documentation is not the only

determining factor to measuring its quality.

If companies were interested in writing a compiler that optimized for the K6 I'm

sure they could do very well. In my own experiments, I've found that optimizing

for the K6 is very easy.

Recommendations I know of: (1) Avoid vector decoded instructions including

carry flag reading instructions and shld/shrd instructions, (2) Use the loop

instruction, (3) Align branch targets and code in general as much as possible, (4)

Pre-load memory into registers early in your loops to work around the load

latency issue.

Brass Tacks

The K6 is cheap, supports super socket 7 (with 100Mz Bus), that has established

itself very well in the market place, winning businnes from all the top tier OEMs

(with the exception of Dell, which seems to have missed the consumer market

shift entirely, and taken a serious step back from challenging Compaq's number

one position.) AMD really changed the minds of people who thought the x86

market was pretty much an Intel deal (including me.)

Their marketting strategy of selling at a low price while adding features (cheaper

Super7 infrastructure, SIMD floating point, 256K on chip L2 cache combined with

motherboard L2 cache) has paid off in an unheard of level brand name

recognition outside of Intel. Indeed, 3DNow! is a great counter to Intel Inside. If

nothing else they helped create a real sub-$1000 PC market, and have dictated

the price for retail x86 CPUs (Intel has been forced to drop even their own prices

to unheard of lows for them.)

AMD has struggled more to meet the demand of new speeds as they come

online (they seem predictably optimistic) but overall have been able to sell a boat

load of K6's without being stepped on by Intel.

Previously, in this section I maintained a small chronical of AMD's acheivements

as the K6 architecture grew, however we've gotten far beyond the question of

"will the K6 survive?" (A question only idiots like Ashok Kumar still ask.) From the

consumer's point of view, up until now (Aug 99) AMD has done a wonderful job.

Eventually, they will need to retire the K6 core -- it has done its tour of duty.

However, as long as Intel keeps Celeron in the market, I'm sure AMD will keep

the K6 in the market. AMD has a new core that they have just introduced into the

market: the K7. This processor has many significant advantages over "6th

generation architectures".

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 24 of 64

ZM

The real CPU WAR has only just begun ...

AMD performance documentation links

The first release of their x86 Optimization guide is what triggered me to write this

page. With it, I had documentation for all three of these 6th generation x86

CPUs. Unfortunately, they often elect to go with terse explanations that assume

the reader is very familiar with CPU architecture and terminologies. This lead me

to some misunderstandings from my initial reading of the documentation (I'm just

a software guy.) On the other hand, the examples they give really help clarify the

inner workings of the K6.

• AMD K6-2 specifications

• AMD-K6 Optimization Guide

• AMD-K6 Processor Data Sheet

Update: The IEEE Computer Society has published a book called "The Anatomy

of a High-Performance Microprocessor A Systems Perspective" based on the

AMD K6-2 microprocessor. It gives inner details of the K6-2 that I have never

seen in any other documentation on Microprocessors before. These details are a

bit overwhelming for a mere software developer, however, for a hard core x86

hacker its a treasure trove of information.

• The Anatomy of a High-Performance Microprocessor A Systems

Perspective

The Intel P-II

This was the first processor (I knew of) to have completely documented post-

RISC features such as dynamic execution, out of order execution and retirement.

(PA-RISC predated it as far as implementing the technology, however; I am

suspicious that HP told Intel to either work with them on Merced, or be sued up

the wazoo.) This stuff really blew me away when I first read it. The goal is to

allow longer latencies in exchange for high throughput (single cycle whenever

possible.) The various stages would attempt to issue/start instructions with the

highest possible probability as often as possible, working out dependencies,

register renaming requirements, forwarding, resource contentions, as later parts

of the instruction pipe by means of speculative and out of order execution.

Intel has enjoyed the status of "defacto standard" in the x86 world for some time.

Their P6/P-II architecture, while not delivering the same performance boost of

previous generational increments, solidifies their position. Its is the fastest, but it

is also the most expensive of the lot.

General Architecture

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 25 of 64

ZM

The P-II is a highly pipelined architecture with an out of order execution engine in

the middle. The Intel Architecture Optimization Manual lists the following two

diagrams:

The two sections shown are essentially concatenated, showing 10 stages of in-

order processing (since retirement must also be in-order) with 3 stages of out of

order execution (RS, the Ports, and ROB write back colored in light blue by me,

not Intel.)

Intel's basic idea was to break down the problem of execution into as many units

as possible and to peel away every possible stall that was incurred by their

previous Pentium architecture as each instructions marches forward down their

assembly line. In particular, Intel invests 5 pipelined clocks to go from the

instruction cache to a set of ready to execute micro-ops. (RISC architectures

have no need for these 5 stages, since their fixed width instructions are generally

already specified to make this translation immediate. It is these 5 stages that truly

separate the x86 from ordinary RISC architectures, and Intel has essentially

solved it with a brute force approach which costs them dearly in chip area.)

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 26 of 64

ZM

Each of Intel's "Ports" is used as a feeding trough for microops to various

groupings of units as shown in the above diagram. So, Intel's 5 micro-op

execution per clock bandwidth is a little misleading in the sense that two ports

are required for any single storage operation. So, it is more realistic to consider

this equivalent, to at most 4 K6 RISC86 ops issued per clock.

As a note of interest, Intel divides the execution and write back stages into two

seperate stages (the K6 does not, and there is really no compelling reason for

the P6's method that I can see.)

Although it is not as well described, I believe that Intel's reservation station and

reorder buffer combinations serves substantially the same purpose as the K6's

scheduler, and similarly the retire unit acts on instruction clusters in exactly the

same way as they were issued (CPUs are not otherwise known to have sorting

algorithms wired into them.) Thus the micro-op throughput is limited to 3 per

clock (compared with 4 RISC86 ops for the K6.)

So when everything is working well, the P-II can take 3 simple x86 instructions

and turn them into 3 micro-ops on every clock. But, as can be plainly seen in

their comments, they have a bizzare problem: they can only read two physical input

register operands per clock (rename registers are not constrained by this

condition.) This means scheduling becomes very complicated. Registers to be

read for multiple purposes will not cost very much, and data dependencies don't

suffer from any more clocks than expected, however the very typical trick of

spreading calculations over several registers (used especially in loop unrolling)

will upper bound the pipeline to two micro-ops per clock because of a physical

register read bottleneck.

In any event, the decoders (which can decode up to 6 micro-ops per clock) are

clearly out-stripping the later pipeline stages which are bottlenecked both by the

3 micro-op issue and two physical register read operand limit. The front end

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 27 of 64

ZM

easily outperforms the back end. This helps Intel deal with their branch bubble,

by making sure the decode bandwidth can stay well ahead of the execution units.

Something that you cannot see in the pictures above is the fact that the FPU is

actually divided into two partitioned units. One for addition and subtraction and

the other for all the other operations. This is found in the Pentium Pro

documentation and given the above diagram and the fact that this is not

mentioned anywhere in the P-II documentation I assumed that in fact the P-II

was different from the PPro in this respect (Intel's misleading documentation is

really unhelpful on this point.) After I made some claims about these differences

on USENET some Intel engineer (who must remain anonymous since he had a

copyright statement insisting that I not copy anything he sent me -- and it made

no mention of excluding his name) who claims to have worked on the PPro felt it

his duty to point out that I was mistaken about this. In fact, he says, the PPro and

P-II have an identical FPU architecture. So in fact the P-II and PPro really are the

same core design with the exception of MMX, segment caching and probably

some different glue logic for the local L2 caches.

This engineer also reiterated Intel's position on not revealing the inner works of

their CPU architectures thus rendering it impossible for ordinary software

engineers to know how to properly optimize for the P-II.

Branch Prediction

Central to facilitating the P-II's aggressive fire and forget execution strategy is full

branch prediction. The functionality has been documented by Agner Fog, and

can track very complex patterns of branching. They have advertised a prediction

rate of about 90% (based on academic work using the same implementation.)

This prediction mechanism was also incorporated into the Pentium MMX CPUs.

Unlike the K6, the branch target buffer contains target addresses, not instructions

and predictions only for the current branch. This means an extra clock is required

for taken branches to be able to decode their branch target. Branches not in the

branch target buffer are predicted statically (backward jumps taken, forward

jumps not.) However, this "extra clock" is generally overlapped with execution

clocks, and hence is not a factor except in short loops, or code loops with poorly

translated code sequences (like compiled sprites.)

In order to do this in a sound manner, subsequent instructions must be executed

"speculatively" under the proviso that any work done by them may have to be

undone if the prediction turns out to be wrong. This is handled in part by

renaming target write-back registers to shadow registers in a hidden set of extra

registers. The K6 and 6x86MX have similar rename and speculation

mechanisms, but with its longer latencies, it is a more important feature for the P-

II. The trade off is that the process of undoing a mispredicted branch is slow (since the

pipelines must completely flush) costing as much as 15 clocks (and no less than 11.)

These clocks are non-overlappable with execution, of course, since the execution

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 28 of 64

ZM

stream cannot be correctly known until the mispredict is completely processed. This

huge penalty offsets the performance of the P-II, especially in code in which no

P6/P-II optimizations considerations have been made.

The P-II's predictor always deals with addresses (rather than boolean compare

results as is done in the K6) and so is applicable to all forms of control transfer

such as direct and indirect jumps and calls. This is critical to the P-II given that

the latency between the ALUs and the instruction fetch is so large.

In the event of a conditional branch both addresses are computed in parallel. But

this just aids in making the prediction address ready sooner; there is no

appreciable performance gained from having the mispredicted address ready

early given the huge penalty. The addresses are computed in an integer

execution port (seperate from the FPU) so branches are considered an ALU

operation. The prefetch buffer is stalled for one clock until the target address is

computed, however since the decode bandwidth out-performs the execution

bandwidth by a fair margin, this is not an issue for non-trivial loops.

Back of envelope calculation

This all means that the average loop penalty is:

(90% * 0) + (10% * 13) = 1.3 clocks per loop

This is obviously a lot higher than the K6 penalty. (The zero as the first penalty

assumes that the loop is sufficiently large to hide the one clock branch bubble.)

For programmers this means one major thing: Avoid mispredicted branches in

your inner loops at all costs (make that 10% closer to 0%). Using tables or

conditional move instructions are common methods, however since the predictor

is used even in indirect jumps, there are situations with branching where you

have no choice but to suffer from branch prediction penalties.

Floating Point

In keeping with their post-RISC architecture, the P-II's have in some cases

increased the latency of some of the FPU instructions over the Pentium for sake

of pipelining at high clock rates and with idea that it hopefully will not matter if the

code is properly scheduled. Intel says that FXCH requires no execution cycles,

but does not explicitly state whether or not throughput bubbles are introduced.

Other than latency, the P-II is very similar to the Pentium in terms of performance

characteristics. This is because all FPU operations go through port 0 except

FXCH's which go to port 1, and the first stage of a multiply takes two non-

pipelined clocks. This is pretty much identical to the P5 architecture.

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 29 of 64

ZM

The Intel floating point design has traditionally beat the Cyrix and AMD CPUs on

floating point performance and this still appears to hold true as tests with Quake

and 3D Studio have confirmed. (The K6 is also beaten, but not by such a large

margin -- and in the case of Quake II on a K6-2 the roles are reversed.)

The P-II's floating point unit is issued from the same port as one of the ALU units.

This means that it cannot issue two integer and 1 floating point operation on

every clock, and thus is likely to be constrained to an issue rate similar to the K6.

As Andreas Kaiser points out, this does not necessarily preclude later execution

clocks (for slower FPU operations for eg) to execute in parallel from all three

basic math units (though this same comment applies to the K6).

As I mentioned above, the P-II's floating point unit is actually two units, one is a

fully pipelined add and subtract unit, and the other is a partially pipelined complex

unit (including multiplies.) In theory this gives greater parallelism opportunities

over the original Pentium but since the single port 0 cannot feed the units at a

rate greater than 1 instruction per clock, the only value is design simplification.

For most code, especially P5 optimized code, the extra multiply latency is likely

to be the most telling factor.

Update: Intel has introduced the P-!!! which is nothing more than a 500Mhz+ P6

core with 3DNow!-like SIMD instructions. These instructions appear to be very

similar in functionality and remarkably similar in performance to the 3DNow!

instruction set. There are a lot of misconceptions about the performance of SSE

versus 3DNow! The best analysis I've seen so far indicate that they are nearly

identical by virtue of the fact that Intel's "4-1-1" issue rate restriction holds back

the mostly meaty 2 micro-op SSE instructions. Furthermore, there are twice as

many subscribers to the SSE units per instruction than 3DNow! which totally

nullifies the doubled output width. In any event, its almost humorous to see Intel

playing catch up to AMD like this. The clear winner: consumers.

Cache

The P-II's L1 cache is 32KB divided into two fixed 16KB caches for separate

code and data. These caches are 4-way set associative which decreases

thrashing versus the K6. But relatively speaking, this is quite small and inflexible

when compared with the 6x86MX's unified cache. I am not a big fan of the P-II's

smaller, less flexible L1 cache, and it appears as though they have done little to

justify it being half the size of their competitors' L1 caches.

The greater associativity helps programs that are written indifferently with respect

to data locality, but has no effect on code mindful of data locality (i.e., keeping

their working sets contiguous and no larger than the L1 cache size.)

The P-II also has an "on PCB L2 cache". What this means is they do not need

use the motherboard bus to access their L2 cache. As such the communications

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 30 of 64

ZM

interface can (and does) have a much higher frequency. In current P-II's it is 1/2

the CPU clock rate. This is an advantage over K6, K6-2 and 6x86MX cpus which

access motherboard based L2 caches at only 66Mhz or 100Mhz. (However the

K6-III's on die L2 cache runs at the CPU clock rate, which is thus twice as fast as

the P-II's)

Other

• The P-II has a partial register stall which is very costly. This occurs when

writing to a sub-register within a few clocks of writing to a 32 bit register.

That is to say, writing to a ?l or ?h 8 bit register will cause a partial register

stall when next reading the corresponding ?x or e?x register. The same is

true of writing to a ?x register then reading the corresponding e?x register.

As described by Agner Fog, the front end is in-order and must assign

internal registers before the instruction can be entered into the

reservations stations. If there is a partial register overlap with a live

instruction ahead of it, then a disjoint register cannot be assigned until that

instruction retires. This is a devastating performance stall when it occurs

because new instructions cannot even be entered into the reservations

stations until this stall is resolved. Intel lists this as having roughly a 7

clock cost.

Intel recommends using XOR reg,reg or SUB reg,reg which will

somehow mark the partial register writes as automatically zero extending.

But obviously this can be inappropriate if you need other parts of the

register to be non-zero. It is not clear to me whether or not this extends to

memory address forwarding (it probably does.) I would recommend simply

seperating the partial register write from the dependent register read by as

much distance as possible.

This is not a big issue so long as the execution units are kep busy with

instructions leading up to this partial registers stall, but that is a difficult

criteria to code towards. One way to accomplish this would be to try to

schedule this partial register stall as far away from the previous branch

control transfer as possible (the decoders usually get well ahead of the

ALUs after several clocks following a control transfer.)

• The P-II, like the P6, performs worse on 16 bit code per clock rate than the

Pentium. (Significantly worse than the Cyrix 6x86MX, and somewhat

worse than the K6.) However, the P-II is not as bad as the P6. In

particular, it uses a small 16 bit segment/selector cache which the P6

does not.

• The P-II's data access actually require an additional address unit for

stores. What this means is that memory writes must be broken down into

ZAHIDMEHBOOB@LIVE.COM +923215020706 (2003)

How Microprocessors Work e 31 of 64

ZM

"address store" and "data store" micro-ops. This increases data write

latency (versus the K6.)

• The P-II can decode instructions to many, many micro-ops, but really only

decodes optimally when 2 out of every 3 instructions are decoded to a

single micro-op and in a specific "4-1-1" sequence (that is for three

instructions to decode in parallel the first must decode to no more than 4

micro-ops, and the second and third in no more than 1 micro-op).

Instructions must also be 8 bytes or less to allow other instructions to be

decoded in the same clock. According to MicroProcessor Report, only one

load or store memory operation can be decoded in the first of the at most

3 instructions. If this is true, it certainly detracts from the "one load or store

operations per clock" claim Intel makes (of course the second of the two

store microops might execute at the same time as a load.)