Bethany Percha - Machine Learning Approaches Poster

Uploaded by AMIA

Clinical information is often recorded as narrative (unstructured) text. Natural language processing could be used to extract relevant information. A feedback system would prompt the physician to modify the report as needed.


Copyright

© Attribution Non-Commercial (BY-NC)


Machine Learning Approaches to Automatic BI-RADS Classification of Mammography Reports

Bethany Percha and Daniel Rubin

Program in Biomedical Informatics and Department of Radiology, Stanford University

Introduction

Clinical information is often recorded as narrative (unstructured) text. This is problematic for both researchers and clinicians, as free text thwarts attempts to standardize language and ensure document completeness.

Natural language processing could be used to extract relevant information from unstructured text reports, but reports must be both complete and consistent.

A feedback system that extracts relevant information from text as it is being generated and prompts the physician to modify the report as needed would be useful, both in physician training and in clinical practice.

Here we present preliminary results from building a classification system that automatically assigns BI-RADS assessment codes to mammography reports.

Preprocessing

- 41,142 reports were extracted from Stanford’s radTF database.
- 38,665 were diagnostic mammograms (not specimen analyses or descriptions of biopsy procedures).
- 22,109 had BI-RADS codes (older reports frequently don’t have them) and were unilateral (single-breast) mammography reports.

Each remaining report was processed as follows:

[Figure: report processing steps]

Classification

| Technique | % Accuracy |
|---|---|
| Naive Bayes | 76.4 |
| Multinomial Naive Bayes | 83.1 |
| K-Nearest Neighbors (K=10) | 87.5 |
| Support Vector Machines: LIBLINEAR (L2-norm, one-against-one) | 89.3 |
| Support Vector Machines: LIBLINEAR (multiclass, Crammer and Singer) | 89.3 |
| Support Vector Machines: LIBLINEAR-POLY2 (polynomial kernel, degree 2) | 90.1 |

Accuracy was determined using 10-fold cross-validation.

Misclassification error did not decrease significantly with more training data (high bias). Including more features, such as bigrams, did not improve performance.
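To make the evaluation procedure concrete, here is a minimal sketch of 10-fold cross-validation around a multinomial naive Bayes classifier, written with the Python standard library only. It is not the authors' code: the function names, the Laplace smoothing constant `alpha=1.0`, and the unstratified random fold assignment are all assumptions made for illustration, and each report is assumed to be already reduced to a list of tokens.

```python
import math
import random
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Fit multinomial naive Bayes on token lists (alpha = Laplace smoothing, an assumption)."""
    vocab = {tok for doc in docs for tok in doc}
    class_docs = Counter(labels)
    token_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        token_counts[label].update(doc)
    model = {}
    for label, n_docs in class_docs.items():
        total = sum(token_counts[label].values()) + alpha * len(vocab)
        log_prior = math.log(n_docs / len(docs))
        log_like = {t: math.log((token_counts[label][t] + alpha) / total) for t in vocab}
        model[label] = (log_prior, log_like)
    return model


def predict(model, doc):
    """Return the class with the highest posterior log-probability."""
    def score(label):
        log_prior, log_like = model[label]
        # Tokens never seen during training are skipped.
        return log_prior + sum(log_like[t] for t in doc if t in log_like)
    return max(model, key=score)


def cross_val_accuracy(docs, labels, k=10, seed=0):
    """Estimate accuracy by k-fold cross-validation (folds are random, not stratified)."""
    order = list(range(len(docs)))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    correct = 0
    for held_out in folds:
        held = set(held_out)
        train = [i for i in order if i not in held]
        model = train_multinomial_nb([docs[i] for i in train],
                                     [labels[i] for i in train])
        correct += sum(predict(model, docs[i]) == labels[i] for i in held_out)
    return correct / len(docs)
```

Calling `cross_val_accuracy(docs, labels, k=10)` with one token list and one BI-RADS code per report returns the fraction of held-out reports classified correctly, which corresponds to the accuracy column in the table above.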

BI-RADS assessment codes:

| Code | Meaning |
|---|---|
| 0 | Incomplete |
| 1 | Negative |
| 2 | Benign finding(s) |
| 3 | Probably benign |
| 4 | Suspicious abnormality |
| 5 | Highly suggestive of malignancy |
| 6 | Known biopsy-proven malignancy |

After preprocessing, the reports were converted into feature vectors, where each feature was the number of times a given word stem appeared in a report. There were 2,216 unique stems.

The final confusion matrix (units are %) was:

| Class | Classified as 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|
| 0 | 93.7 | 2.3 | 3.1 | 0.1 | 0.8 | 0.0 | 0.0 |
| 1 | 0.4 | 93.6 | 5.9 | 0.1 | 0.0 | 0.0 | 0.0 |
| 2 | 0.9 | 11.1 | 87.1 | 0.1 | 0.6 | 0.0 | 0.1 |
| 3 | 7.1 | 21.1 | 49.1 | 9.7 | 12.6 | 0.0 | 0.3 |
| 4 | 8.5 | 3.7 | 10.6 | 0.6 | 75.9 | 0.0 | 0.7 |
| 5 | 0.0 | 0.0 | 0.0 | 0.0 | 100.0 | 0.0 | 0.0 |
| 6 | 4.9 | 4.9 | 24.6 | 0.8 | 27.9 | 0.0 | 36.9 |

Feature Ranking

The most informative features (word stems) were chosen using chi-squared attribute evaluation.
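As an illustration of the two ideas above, stem-count feature vectors and chi-squared ranking, the following sketch may help. It is a simplification under stated assumptions, not the poster's implementation: reports are assumed to be already stemmed token lists, the chi-squared statistic is computed on stem presence or absence versus class rather than on raw counts, and all function names are hypothetical.

```python
from collections import Counter


def stem_counts(stems, vocabulary):
    """Feature vector: how many times each vocabulary stem occurs in one report."""
    counts = Counter(stems)
    return [counts[s] for s in vocabulary]


def chi_squared(docs, labels, stem):
    """Chi-squared statistic of the (stem present/absent) x (class) contingency table."""
    classes = sorted(set(labels))
    observed = {(present, c): 0 for present in (True, False) for c in classes}
    for doc, label in zip(docs, labels):
        observed[(stem in doc, label)] += 1
    n = len(docs)
    statistic = 0.0
    for present in (True, False):
        row_total = sum(observed[(present, c)] for c in classes)
        for c in classes:
            col_total = observed[(True, c)] + observed[(False, c)]
            expected = row_total * col_total / n
            if expected > 0:  # skip empty rows, e.g. a stem present in every report
                statistic += (observed[(present, c)] - expected) ** 2 / expected
    return statistic


def rank_stems(docs, labels):
    """Sort all stems by decreasing chi-squared score."""
    vocabulary = sorted({s for doc in docs for s in doc})
    return sorted(vocabulary, key=lambda s: chi_squared(docs, labels, s), reverse=True)
```

A stem that appears equally often in every class scores zero, while a stem concentrated in one class scores high. That fits a stem such as "incomplet", which the poster reports almost exclusively in class 0, ranking among the most informative.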

Most informative stems, with occurrences per report by class:

| Stem | Most common context | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|
| breast | (Many contexts.) | 4.2 | 1.9 | 3.8 | 4.6 | 5.7 | 6.9 | 7.7 |
| featur | no mammographic features of malignancy | 0.1 | 1.1 | 1.2 | 0.1 | 0.1 | 0.1 | 0.1 |
| nippl | x cm from the nipple (describing a mass) | 1.2 | 0.1 | 0.2 | 0.8 | 2.3 | 4.6 | 2.8 |
| malign | no mammographic features of malignancy | 0.1 | 1.1 | 1.2 | 0.1 | 0.1 | 0.3 | 0.3 |
| evalu | incompletely evaluated | 1.0 | 0.0 | 0.0 | 0.1 | 0.1 | 0.1 | 0.2 |
| incomplet | incompletely evaluated | 0.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| mammograph | no mammographic features of malignancy | 0.3 | 1.5 | 1.8 | 0.7 | 0.9 | 1.7 | 1.2 |
| stabl | stable post-biopsy change | 0.2 | 0.3 | 1.5 | 0.7 | 0.4 | 0.2 | 0.5 |
| calcif | calcifications | 0.6 | 0.1 | 0.7 | 1.3 | 1.5 | 1.9 | 2.0 |

Conclusions

Radiologists’ word choices are a good indicator of which BI-RADS class they choose, but the correspondence is not perfect, particularly for the higher BI-RADS values.

The development of training software for radiologists based on this approach could help them standardize their descriptions of images, and learn to better describe which specific features of the image cause them to place it in a given class.
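The per-class breakdown behind these conclusions is the confusion matrix reported above, in which each row gives, for one true BI-RADS class, the percentage of reports assigned to each class. A minimal sketch of that calculation follows; the function name and the rounding to one decimal place are assumptions made for illustration.

```python
def confusion_matrix_percent(true_labels, predicted_labels, classes):
    """Row-normalized confusion matrix: entry [i][j] is the percentage of
    reports whose true class is classes[i] that were classified as classes[j]."""
    index = {c: i for i, c in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for truth, guess in zip(true_labels, predicted_labels):
        counts[index[truth]][index[guess]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A class with no reports yields a row of zeros rather than a division error.
        matrix.append([round(100.0 * n / total, 1) if total else 0.0 for n in row])
    return matrix
```

The diagonal of the returned matrix is the per-class accuracy, so low diagonal values in the rows for the higher codes correspond to the weaker agreement noted in the conclusions.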

You might also like

- A Bayesian Network-Based Genetic Predictor For Alcohol DependenceDocument1 pageA Bayesian Network-Based Genetic Predictor For Alcohol DependenceAMIANo ratings yet

- Privacy-By-Design-Understanding Data Access Models For Secondary DataDocument42 pagesPrivacy-By-Design-Understanding Data Access Models For Secondary DataAMIANo ratings yet

- Process Automation For Efficient Translational Research On Endometrioid Ovarian CarcinomaI (Poster)Document1 pageProcess Automation For Efficient Translational Research On Endometrioid Ovarian CarcinomaI (Poster)AMIANo ratings yet

- An Exemplar For Data Integration in The Biomedical Domain Driven by The ISA FrameworkDocument32 pagesAn Exemplar For Data Integration in The Biomedical Domain Driven by The ISA FrameworkAMIANo ratings yet

- Standard-Based Integration Profiles For Clinical Research and Patient Safety - SALUS - SRDC - SinaciDocument18 pagesStandard-Based Integration Profiles For Clinical Research and Patient Safety - SALUS - SRDC - SinaciAMIANo ratings yet

- Platform For Personalized OncologyDocument33 pagesPlatform For Personalized OncologyAMIANo ratings yet

- Standard-Based Integration Profiles For Clinical Research and Patient Safety - IntroductionDocument5 pagesStandard-Based Integration Profiles For Clinical Research and Patient Safety - IntroductionAMIANo ratings yet

- Privacy Beyond Anonymity-Decoupling Data Through Encryption (Poster)Document2 pagesPrivacy Beyond Anonymity-Decoupling Data Through Encryption (Poster)AMIANo ratings yet

- Capturing Patient Data in Small Animal Veterinary PracticeDocument1 pageCapturing Patient Data in Small Animal Veterinary PracticeAMIANo ratings yet

- Research Networking Usage at A Large Biomedical Institution (Poster)Document1 pageResearch Networking Usage at A Large Biomedical Institution (Poster)AMIANo ratings yet

- TBI Year-In-Review 2013Document91 pagesTBI Year-In-Review 2013AMIANo ratings yet

- Phenotype-Genotype Integrator (PheGenI) UpdatesDocument1 pagePhenotype-Genotype Integrator (PheGenI) UpdatesAMIANo ratings yet

- Bioinformatics Needs Assessment and Support For Clinical and Translational Science ResearchDocument1 pageBioinformatics Needs Assessment and Support For Clinical and Translational Science ResearchAMIANo ratings yet

- The Clinical Translational Science Ontology Affinity GroupDocument16 pagesThe Clinical Translational Science Ontology Affinity GroupAMIANo ratings yet

- Genome and Proteome Annotation Using Automatically Recognized Concepts and Functional NetworksDocument21 pagesGenome and Proteome Annotation Using Automatically Recognized Concepts and Functional NetworksAMIANo ratings yet

- Analysis of Sequence-Based COpy Number Variation Detection Tools For Cancer StudiesDocument8 pagesAnalysis of Sequence-Based COpy Number Variation Detection Tools For Cancer StudiesAMIANo ratings yet

- Standardizing Phenotype Variable in The Database of Genotypes and PhenotypesDocument21 pagesStandardizing Phenotype Variable in The Database of Genotypes and PhenotypesAMIANo ratings yet

- Drug-Drug Interaction Prediction Through Systems Pharmacology Analysis (Poster)Document1 pageDrug-Drug Interaction Prediction Through Systems Pharmacology Analysis (Poster)AMIANo ratings yet

- Research Data Management Needs of Clinical and Translational Science ResearchersDocument1 pageResearch Data Management Needs of Clinical and Translational Science ResearchersAMIANo ratings yet

- A Probabilistic Model of FunctionalDocument17 pagesA Probabilistic Model of FunctionalAMIANo ratings yet

- An Efficient Genetic Model Selection Algorithm To Predict Outcomes From Genomic DataDocument1 pageAn Efficient Genetic Model Selection Algorithm To Predict Outcomes From Genomic DataAMIANo ratings yet

- Creating A Biologist-Oriented Interface and Code Generation System For A Computational Modeling AssistantDocument1 pageCreating A Biologist-Oriented Interface and Code Generation System For A Computational Modeling AssistantAMIANo ratings yet

- Educating Translational Researchers in Research Informatics Principles and Methods-An Evaluation of A Model Online Course and Plans For Its DisseminationDocument29 pagesEducating Translational Researchers in Research Informatics Principles and Methods-An Evaluation of A Model Online Course and Plans For Its DisseminationAMIANo ratings yet

- Beyond The Hype-Developing, Implementing and Sharing Pharmacogenomic Clinical Decision SupportDocument31 pagesBeyond The Hype-Developing, Implementing and Sharing Pharmacogenomic Clinical Decision SupportAMIANo ratings yet

- Developing, Implementing, and Sharing Pharmacogenomics CDS (TBI Panel)Document23 pagesDeveloping, Implementing, and Sharing Pharmacogenomics CDS (TBI Panel)AMIANo ratings yet

- A Workflow For Protein Function Discovery (Poster)Document1 pageA Workflow For Protein Function Discovery (Poster)AMIANo ratings yet

- Predicting Antigenic Simillarity From Sequence For Influenza Vaccine Strain Selection (Poster)Document1 pagePredicting Antigenic Simillarity From Sequence For Influenza Vaccine Strain Selection (Poster)AMIANo ratings yet

- Clustering of Somatic Mutations To Characterize Cancer Heterogeneity With Whole Genome SequencingDocument1 pageClustering of Somatic Mutations To Characterize Cancer Heterogeneity With Whole Genome SequencingAMIANo ratings yet

- Qualitative and Quantitative Image-Based Biomarkers of Therapeutic Response For Triple Negative CancerDocument47 pagesQualitative and Quantitative Image-Based Biomarkers of Therapeutic Response For Triple Negative CancerAMIANo ratings yet

- An Empirical Framework For Genome-Wide Single Nucleotide Polymorphism-Based Predictive ModelingDocument16 pagesAn Empirical Framework For Genome-Wide Single Nucleotide Polymorphism-Based Predictive ModelingAMIANo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- A Fuzzy Expert System For Earthquake Prediction, Case Study: The Zagros RangeDocument4 pagesA Fuzzy Expert System For Earthquake Prediction, Case Study: The Zagros RangeMehdi ZareNo ratings yet

- Explaining The Placebo Effect: Aliefs, Beliefs, and ConditioningDocument33 pagesExplaining The Placebo Effect: Aliefs, Beliefs, and ConditioningDjayuzman No MagoNo ratings yet

- Dr. Ramon de Santos National High School learning activity explores modal verbs and nounsDocument3 pagesDr. Ramon de Santos National High School learning activity explores modal verbs and nounsMark Jhoriz VillafuerteNo ratings yet

- Wood - Neal.2009. The Habitual Consumer PDFDocument14 pagesWood - Neal.2009. The Habitual Consumer PDFmaja0205No ratings yet

- Mind-Body (Hypnotherapy) Treatment of Women With Urgency Urinary Incontinence: Changes in Brain Attentional NetworksDocument10 pagesMind-Body (Hypnotherapy) Treatment of Women With Urgency Urinary Incontinence: Changes in Brain Attentional NetworksMarie Fraulein PetalcorinNo ratings yet

- Revised Blooms TaxonomyDocument4 pagesRevised Blooms TaxonomyJonel Pagalilauan100% (1)

- ملزمة اللغة الكتاب الازرق من 1-10Document19 pagesملزمة اللغة الكتاب الازرق من 1-10A. BASHEERNo ratings yet

- Curry Lesson Plan Template: ObjectivesDocument4 pagesCurry Lesson Plan Template: Objectivesapi-310511167No ratings yet

- Classroom Talk - Making Talk More Effective in The Malaysian English ClassroomDocument51 pagesClassroom Talk - Making Talk More Effective in The Malaysian English Classroomdouble timeNo ratings yet

- Problem Based Learning in A Higher Education Environmental Biotechnology CourseDocument14 pagesProblem Based Learning in A Higher Education Environmental Biotechnology CourseNur Amani Abdul RaniNo ratings yet

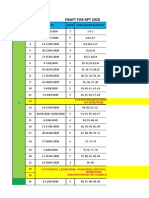

- Draft 2020 English Yearly Lesson Plan for Year 2Document5 pagesDraft 2020 English Yearly Lesson Plan for Year 2Nurul Amirah Asha'ariNo ratings yet

- Word Retrieval and RAN Intervention StrategiesDocument2 pagesWord Retrieval and RAN Intervention StrategiesPaloma Mc100% (1)

- English Writing Skills Class 11 ISCDocument8 pagesEnglish Writing Skills Class 11 ISCAnushka SwargamNo ratings yet

- Rhythmic Activities Syllabus Outlines Course OutcomesDocument5 pagesRhythmic Activities Syllabus Outlines Course OutcomesTrexia PantilaNo ratings yet

- Fatima - Case AnalysisDocument8 pagesFatima - Case Analysisapi-307983833No ratings yet

- Lesson Plan Bahasa Inggris SMK Kelas 3Document5 pagesLesson Plan Bahasa Inggris SMK Kelas 3fluthfi100% (1)

- Nature of Human BeingsDocument12 pagesNature of Human BeingsAthirah Md YunusNo ratings yet

- SE Constructions in SpanishDocument4 pagesSE Constructions in Spanishbored_15No ratings yet

- Mariam Toma - Critique Popular Media AssignmentDocument5 pagesMariam Toma - Critique Popular Media AssignmentMariam AmgadNo ratings yet

- Claremont School of BinangonanDocument6 pagesClaremont School of BinangonanNerissa Ticod AparteNo ratings yet

- Prime Time 3 Work Book 78-138pgDocument60 pagesPrime Time 3 Work Book 78-138pgSalome SamushiaNo ratings yet

- Etp 87 PDFDocument68 pagesEtp 87 PDFtonyNo ratings yet

- Level 1, Module 3 Hot Spot Extra Reading PDFDocument3 pagesLevel 1, Module 3 Hot Spot Extra Reading PDFNgan HoangNo ratings yet

- Academic Paper2Document10 pagesAcademic Paper2api-375702257No ratings yet

- Politics and Administration Research Review and Future DirectionsDocument25 pagesPolitics and Administration Research Review and Future DirectionsWenaNo ratings yet

- Disabled Theater Dissolves Theatrical PactDocument11 pagesDisabled Theater Dissolves Theatrical PactG88_No ratings yet

- Neural Networks and CNNDocument25 pagesNeural Networks and CNNcn8q8nvnd5No ratings yet

- Lesson Plan in Science 6 4 Quarter: Kagawaran NG Edukasyon Sangay NG Lungsod NG DabawDocument2 pagesLesson Plan in Science 6 4 Quarter: Kagawaran NG Edukasyon Sangay NG Lungsod NG DabawEmmanuel Nicolo TagleNo ratings yet

- 1 My First Perceptron With Python Eric Joel Barragan Gonzalez (WWW - Ebook DL - Com)Document96 pages1 My First Perceptron With Python Eric Joel Barragan Gonzalez (WWW - Ebook DL - Com)Sahib QafarsoyNo ratings yet

- Compliment of SetDocument4 pagesCompliment of SetFrancisco Rosellosa LoodNo ratings yet