Assignment 1

Uploaded by

Udeeptha_Indik_6784

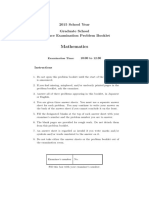

THE UNIVERSITY OF MORATUWA
Department of Electronic and Telecommunication Engineering
EN5254-Information Technology
Assignment # 1

The assignment is due on Sunday, January 30, 2011. You have to submit the answers only to questions 4, 6, 9 and 10.

1. Example of joint entropy. Let $p(x, y)$ be given by

| $p(x, y)$ | X = 0 | X = 1 |
|---|---|---|
| Y = 0 | 1/3 | 0 |
| Y = 1 | 1/6 | 1/2 |

Find (a) $H(X)$, $H(Y)$. (b) $H(X|Y)$, $H(Y|X)$. (c) $H(X, Y)$. (d) $H(Y) - H(Y|X)$. (e) $I(X; Y)$. (f) Draw a Venn diagram for the quantities in (a) through (e).

2. Coin flips. A fair coin is flipped until the first head occurs. Let $X$ denote the number of flips required. (a) Find the entropy $H(X)$ in bits. The following expressions may be useful:

$$\sum_{n=1}^{\infty} r^n = \frac{r}{1-r}, \qquad \sum_{n=1}^{\infty} n r^n = \frac{r}{(1-r)^2}.$$

(b) A random variable $X$ is drawn according to this distribution. Find an efficient sequence of yes-no questions of the form, "Is $X$ contained in the set $S$?" Compare $H(X)$ to the expected number of questions required to determine $X$.

3. Entropy of functions of a random variable. Let $X$ be a discrete random variable. Show that the entropy of a function of $X$ is less than or equal to the entropy of $X$ by justifying the following steps:

$$H(X, g(X)) = H(X) + H(g(X)|X) = H(X);$$

$$H(X, g(X)) = H(g(X)) + H(X|g(X)) \ge H(g(X)).$$

Thus $H(g(X)) \le H(X)$.
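The quantities asked for in Problem 1 can be checked numerically. The following is a minimal sketch, not a model answer: it assumes the table is read with columns indexed by X and rows by Y, and the helper names `entropy` and `marginal` are illustrative, not taken from the assignment.

```python
from math import log2

# Joint pmf from Problem 1, keyed as (x, y).
# Assumption: columns of the table are X, rows are Y.
p_xy = {(0, 0): 1 / 3, (1, 0): 0.0, (0, 1): 1 / 6, (1, 1): 1 / 2}


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * log2(p) for p in probs if p > 0)


def marginal(joint, axis):
    """Sum the joint pmf over the other coordinate (axis 0 = X, axis 1 = Y)."""
    out = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], 0.0) + p
    return out


p_x = marginal(p_xy, 0)
p_y = marginal(p_xy, 1)

H_X = entropy(p_x.values())
H_Y = entropy(p_y.values())
H_XY = entropy(p_xy.values())

# Chain rule: H(X, Y) = H(Y) + H(X|Y) = H(X) + H(Y|X)
H_X_given_Y = H_XY - H_Y
H_Y_given_X = H_XY - H_X

# Mutual information: I(X; Y) = H(X) + H(Y) - H(X, Y)
I_XY = H_X + H_Y - H_XY

for name, value in [("H(X)", H_X), ("H(Y)", H_Y), ("H(X,Y)", H_XY),
                    ("H(X|Y)", H_X_given_Y), ("H(Y|X)", H_Y_given_X),
                    ("I(X;Y)", I_XY)]:
    print(f"{name} = {value:.4f} bits")
```

The conditional entropies are obtained from the chain rule rather than from conditional tables, which keeps the sketch short; parts (d) and (e) should agree, since $H(Y) - H(Y|X) = I(X; Y)$.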

4. World Series. The World Series is a seven-game series that terminates as soon as either team wins four games. Let $X$ be the random variable that represents the outcome of a World Series between teams A and B; possible values of $X$ are AAAA, BABABAB, and BBBAAAA. Let $Y$ be the number of games played, which ranges from 4 to 7. Assuming that A and B are equally matched and that the games are independent, calculate $H(X)$, $H(Y)$, $H(Y|X)$, and $H(X|Y)$.

5. Average entropy. Let $H(p) = -p \log_2 p - (1 - p) \log_2 (1 - p)$ be the binary entropy function. (a) Evaluate $H(1/4)$ using the fact that $\log_2 3 \approx 1.584$. Hint: Consider an experiment with four equally likely outcomes, one of which is more interesting than the others. (b) Calculate the average entropy $H(p)$ when the probability $p$ is chosen uniformly in the range $0 \le p \le 1$. (c) Calculate the average entropy $H(p_1, p_2, p_3)$ where $(p_1, p_2, p_3)$ is a uniformly distributed probability vector. Generalize to dimension $n$.

6. Run-length coding. Let $X_1, X_2, \ldots, X_n$ be (possibly dependent) binary random variables. Suppose that one calculates the run lengths $R = (R_1, R_2, \ldots)$ of this sequence (in order as they occur). For example, the sequence $X = 000\,11\,00\,1\,00$ yields run lengths $R = (3, 2, 2, 1, 2)$. Compare $H(X_1, X_2, \ldots, X_n)$, $H(R)$, and $H(X_n, R)$. Show all equalities and inequalities, and bound all the differences.

7. Entropy of a sum. Let $X$ and $Y$ be random variables that take on values $x_1, x_2, \ldots, x_r$ and $y_1, y_2, \ldots, y_s$, respectively. Let $Z = X + Y$. (a) Show that $H(Z|X) = H(Y|X)$. Argue that if $X$, $Y$ are independent, then $H(Y) \le H(Z)$ and $H(X) \le H(Z)$. Thus the addition of independent random variables adds uncertainty. (b) Give an example (of necessarily dependent random variables) in which $H(X) > H(Z)$ and $H(Y) > H(Z)$. (c) Under what conditions does $H(Z) = H(X) + H(Y)$?

8. A measure of correlation. Let $X_1$ and $X_2$ be identically distributed, but not necessarily independent. Let

$$\rho = 1 - \frac{H(X_2|X_1)}{H(X_1)}.$$

(a) Show $\rho = I(X_1; X_2)/H(X_1)$. (b) Show $0 \le \rho \le 1$. (c) When is $\rho = 0$? (d) When is $\rho = 1$?
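The run-length example in Problem 6 can be reproduced with a few lines of code. This is only an illustrative sketch: the function name `run_lengths` and the string encoding of the binary sequence are assumptions made here, not part of the assignment.

```python
from itertools import groupby


def run_lengths(bits):
    """Return the lengths of maximal runs of equal symbols, in order of occurrence."""
    return tuple(len(list(group)) for _, group in groupby(bits))


# The sequence from the problem statement: 000 11 00 1 00
x = "0001100100"
r = run_lengths(x)
print(r)

# Flipping every bit leaves the run lengths unchanged.
complement = x.translate(str.maketrans("01", "10"))
print(run_lengths(complement))
```

Both calls print `(3, 2, 2, 1, 2)`. That a sequence and its bitwise complement share the same run lengths is worth keeping in mind when comparing $H(R)$ with $H(X_1, X_2, \ldots, X_n)$.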

9. Random questions. One wishes to identify a random object $X \sim p(x)$. A question $Q \sim r(q)$ is asked at random according to $r(q)$. This results in a deterministic answer $A = A(x, q) \in \{a_1, a_2, \ldots\}$. Suppose that $X$ and $Q$ are independent. Then $I(X; Q, A)$ is the uncertainty in $X$ removed by the question-answer $(Q, A)$. (a) Show that $I(X; Q, A) = H(A|Q)$. Interpret. (b) Now suppose that two i.i.d. questions $Q_1, Q_2 \sim r(q)$ are asked, eliciting answers $A_1$ and $A_2$. Show that two questions are less valuable than twice a single question in the sense that $I(X; Q_1, A_1, Q_2, A_2) \le 2 I(X; Q_1, A_1)$.

10. Bottleneck. Suppose a (non-stationary) Markov chain starts in one of $n$ states, necks down to $k < n$ states, and then fans back to $m > k$ states. Thus $X_1 \to X_2 \to X_3$, $X_1 \in \{1, 2, \ldots, n\}$, $X_2 \in \{1, 2, \ldots, k\}$, $X_3 \in \{1, 2, \ldots, m\}$. (a) Show that the dependence of $X_1$ and $X_3$ is limited by the bottleneck by proving that $I(X_1; X_3) \le \log k$. (b) Evaluate $I(X_1; X_3)$ for $k = 1$, and conclude that no dependence can survive such a bottleneck.
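The bound in Problem 10 can be sanity-checked on randomly generated chains before attempting the proof. The sketch below is a numerical experiment under assumed conditions, not a derivation: the initial distribution and transition matrices are drawn at random, and all function names are hypothetical.

```python
import random
from math import log2


def random_pmf(size, rng):
    """Draw a random probability vector of the given length."""
    weights = [rng.random() for _ in range(size)]
    total = sum(weights)
    return [w / total for w in weights]


def mutual_information_x1_x3(p1, t12, t23):
    """I(X1; X3) in bits for a Markov chain X1 -> X2 -> X3.

    p1 is the pmf of X1, t12[i][j] = p(x2=j | x1=i), t23[j][l] = p(x3=l | x2=j).
    """
    n, k, m = len(p1), len(t23), len(t23[0])
    # p(x3 | x1) = sum over x2 of p(x2 | x1) p(x3 | x2)
    t13 = [[sum(t12[i][j] * t23[j][l] for j in range(k)) for l in range(m)]
           for i in range(n)]
    p3 = [sum(p1[i] * t13[i][l] for i in range(n)) for l in range(m)]
    info = 0.0
    for i in range(n):
        for l in range(m):
            joint = p1[i] * t13[i][l]
            if joint > 0:
                info += joint * log2(t13[i][l] / p3[l])
    return info


def sample_chain_information(n, k, m, seed=0):
    """Build a random chain with the given state counts and return I(X1; X3)."""
    rng = random.Random(seed)
    p1 = random_pmf(n, rng)
    t12 = [random_pmf(k, rng) for _ in range(n)]
    t23 = [random_pmf(m, rng) for _ in range(k)]
    return mutual_information_x1_x3(p1, t12, t23)


for k in (1, 2, 3):
    value = sample_chain_information(n=6, k=k, m=5, seed=42)
    print(f"k = {k}: I(X1; X3) = {value:.6f} bits, log2(k) = {log2(k):.6f}")
```

For every chain generated this way, the computed $I(X_1; X_3)$ should stay at or below $\log_2 k$, and for $k = 1$ it should vanish up to floating-point error. A passing experiment is of course not a proof.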
