PROCEEDING OF NCCN-10, 12-13 MARCH,10

Multimodal Human Computer Interaction

Seema Rani*1, Rajeev Kumar Ranjan*2

* Department of ECE, Sant Longowal Institute of Engineering and Technology, Longowal, India

1 seemagoyal.2009@gmail.com
2 rkranjan2k@yahoo.co.in

Abstract: While human-to-human communication takes advantage of an abundance of information and cues, human-computer interaction is limited to only a few input modalities (usually only keyboard and mouse). In this paper, we present an overview of approaches for overcoming some of these human-computer communication barriers. Multimodal interfaces are to include not only typing but also speech, lip reading, eye tracking, face recognition and tracking, and gesture and handwriting recognition.

Keywords: Multiple modalities, speech recognition, gesture recognition, lip reading.

I. INTRODUCTION

The process of using multiple modes of interaction for communication between user and computer is called multimodal interaction. Speech and mouse, speech and pen input, mouse and pen input, and mouse and keyboard are examples of multimodal interaction. Today's focus is on the combination of speech and gesture recognition as a form of multimodal interaction. There are many reasons to study multimodal interaction: it enhances mobility, speed, and usability, provides greater expressive power, and increases flexibility.

In human-human communication, interpreting the mix of audio-visual signals is essential to understanding what is communicated. Researchers in many fields recognize this, and with advances in unimodal techniques and in hardware technologies there has been significant growth in multimodal human-computer interaction (MMHCI) research. We place input modalities in two major groups: those based on human senses (vision, audio, and touch), and others (mouse, keyboard, etc.). The visual modality includes any form of interaction that can be interpreted visually, and the audio modality any form that is audible.

Multimodal techniques can be used to construct a variety of interfaces. Of particular interest for our goals are perceptual and attentive interfaces. Perceptual interfaces are highly interactive, multimodal interfaces that enable rich, natural, and efficient interaction with computers. Attentive interfaces, on the other hand, are context-aware interfaces that rely on a person's attention as the primary input. The goal of these interfaces is to use gathered information to estimate the best time and approach for communicating with the user.

II. DIFFERENT INPUT MODALITIES

A. Gesture Recognition

Humans use a very wide variety of gestures, ranging from simple actions such as using the hand to point at objects to more complex actions that express feelings and allow communication with others. Gestures should therefore play an essential role in MMHCI. A major motivation for research in this area is the potential of using hand gestures in applications that aim at natural interaction between the human and the computer-controlled interface.

There are several important issues that should be considered when designing a gesture recognition system. The first phase of a recognition task is choosing a mathematical model that may consider both the spatial and the temporal characteristics of the hand and of hand gestures. The approach used for modeling plays a crucial role in the nature and performance of gesture interpretation. Once the model has been chosen, an analysis stage is required for computing the model parameters from the features that are extracted from single or multiple input streams. These parameters represent some description of the hand pose or trajectory and depend on the modeling approach used. After the parameters are computed, the gestures represented by them need to be classified and interpreted based on the accepted model and on some grammar rules that reflect the internal syntax of gestural commands. The grammar may also encode the interaction of gestures with other communication modes such as speech, gaze, or facial expressions.

A number of systems have been designed to use gestural input devices to control computer memory and display. These systems perceive gestures through a variety of methods and devices. While all the systems presented identify gestures, only some transform gestures into appropriate system-specific commands. The representative architecture for these systems is shown in Fig. 1. A basic gesture input device is the word processing tablet.

Fig. 1: Architecture of gesture recognition
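The stages described above (analysis of the input into model parameters, classification against gesture models, and interpretation as a command) can be illustrated with a small sketch. It is not the method of any particular system surveyed here: matching quantized direction codes against templates is a deliberately simple stand-in for the gesture model, and the template names, the command names, and the helper functions are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical gesture models: each gesture is a short sequence of
# 8-way direction codes (0 = +x, 2 = +y, 4 = -x, 6 = -y).
TEMPLATES: Dict[str, List[int]] = {
    "swipe_right": [0],
    "swipe_up": [2],
    "corner": [6, 0],  # move along -y, then along +x
}

# Hypothetical mapping from recognized gestures to system commands.
COMMANDS: Dict[str, str] = {
    "swipe_right": "next_page",
    "swipe_up": "scroll_up",
    "corner": "select_region",
}


def direction_codes(trajectory: List[Point], n_dirs: int = 8) -> List[int]:
    """Analysis stage: reduce a hand trajectory to direction codes."""
    step = 2 * math.pi / n_dirs
    codes: List[int] = []
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        if (x0, y0) == (x1, y1):
            continue  # ignore samples where the hand did not move
        angle = math.atan2(y1 - y0, x1 - x0) % (2 * math.pi)
        code = int(round(angle / step)) % n_dirs
        if not codes or codes[-1] != code:  # collapse repeated codes
            codes.append(code)
    return codes


def edit_distance(a: List[int], b: List[int]) -> int:
    """Levenshtein distance between two code sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (x != y)))   # substitution
        prev = curr
    return prev[-1]


def classify(codes: List[int]) -> str:
    """Classification stage: pick the template closest to the codes."""
    return min(TEMPLATES, key=lambda name: edit_distance(codes, TEMPLATES[name]))


def interpret(trajectory: List[Point]) -> str:
    """Interpretation stage: map the recognized gesture to a command."""
    return COMMANDS[classify(direction_codes(trajectory))]


if __name__ == "__main__":
    # A slightly noisy left-to-right stroke.
    print(interpret([(0, 0), (1, 0), (2, 0.1), (3, 0)]))
```

For the slightly noisy rightward stroke in the example, the analysis stage yields the single code 0, the classifier selects `swipe_right`, and the interpreter returns the hypothetical command `next_page`. A statistical model such as an HMM could replace the template matcher without changing the surrounding stages.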

Fig. 2: Gesture recognition using HMM

B. Speech Recognition

Among human communication modalities, speech and language undoubtedly carry a significant part of the information in human communication. At Carnegie Mellon, several approaches toward robust, high-performance speech recognition are under way. They include Hidden Markov Models (HMM) and several hybrid connectionist and statistical techniques.

Fig. 3: Hidden Markov Models (HMM)

1) Word Spotting: Word spotting systems for continuous, speaker-independent speech recognition are becoming more and more popular because of the many advantages they afford over more conventional large-scale speech recognition systems. Because of their small vocabulary and size, they offer a practical and efficient solution for many speech recognition problems that depend on the accurate recognition of a few important keywords.

Fig. 4: Word spotting (HMM based)

C. Lip Reading

Most approaches to automated speech perception are very sensitive to background noise or fail totally when more than one speaker talks simultaneously, as often happens in offices, conference rooms, and other real-world environments. Humans deal with these distortions by considering additional sources such as context information and visual information, for example lip movements. This latter source is involved in the recognition process and is even more important for hearing-impaired people, but it also contributes significantly to recognition by people with normal hearing.

In order to exploit lip reading as a source of information complementary to speech, a lip-reading system based on the MS-TDNN was developed and tested on a letter-spelling task for the German alphabet. The recognition performance is understandably poor (31% using lip reading only) because some phonemes cannot be distinguished using purely visual information; however, the thrust of this work is to show how a state-of-the-art speech recognition system can be significantly improved by considering additional visual information in the recognition process. This section presents only the lip-reading component; its combination with speech recognition is considered in the following subsection.

D. Combination of Speech and Lip Movement

Early fusion applies to combinations such as speech and lip movement. It is difficult for three reasons: multimodal (MM) training data are needed, the data streams need to be closely synchronized, and computational and training costs are high.

E. Combination of Gesture and Speech

We based the interpretation of multimodal inputs on frames. A frame consists of slots representing parts of an interpretation. In our case, there are three slots named action, source-scope, and destination-scope (the destination is used only for the move command). Within each scope slot are subslots named type and unit. The possible scope types are point (specified by coordinates), box (specified by coordinates of opposite corners), and selection (i.e., currently highlighted text). The unit subslot specifies the unit of text to be operated on, e.g., character or word.

Consider an example in which a user draws a circle and says "Please delete this word". The gesture-processing subsystem recognizes the circle and fills in the coordinates of the box scope specified by the circle in the gesture frame. The word spotter produces "delete word", which causes the parser to fill the action slot with delete and the unit subslot of source-scope with word. The frame merger then produces a unified frame in which action=delete and source-scope has unit=word and type=box, with coordinates as specified by the drawn circle. From this, the command interpreter constructs an editing command to delete the word circled by the user.

Figure 2: Multi-Modal Interpretation

III. CONCLUSION

We have highlighted major approaches to multimodal human-computer interaction. We discussed techniques for gesture recognition, speech recognition, and lip reading. The information presented via several modalities is merged and refers to various aspects of the same process. Combining modalities can improve recognition performance significantly by exploiting redundancy, provide greater expressiveness and flexibility by exploiting complementary information in different modalities, and improve understanding by allowing complementary modalities to take effect.

IV. REFERENCES

[1] J. K. Aggarwal and Q. Cai, "Human motion analysis: A review," CVIU, 73(3):428-440, 1999.
[2] "Application of Affective Computing in Human-computer Interaction," Int. J. of Human-Computer Studies, 59(1-2), 2003.
[3] J. Ben-Arie, Z. Wang, P. Pandit, and S. Rajaram, "Human activity recognition using multidimensional indexing," IEEE Trans. on PAMI, 24(8):1091-1104, 2002.
[4] A. F. Bobick and J. Davis, "The recognition of human movement using temporal templates," IEEE Trans. on PAMI, 23(3):257-267, 2001.
[5] I. Guyon, P. Albrecht, Y. LeCun, J. Denker, and W. Hubbard, "Design of a Neural Network Character Recognizer for a Touch Terminal," Pattern Recognition, 1990.
[6] P. Haffner, M. Franzini, and A. Waibel, "Integrating Time Alignment and Neural Networks for High Performance Continuous Speech Recognition," in Proc. ICASSP'91.
[7] R. Baecker et al., "A Historical and Intellectual Perspective," in Readings in Human-Computer Interaction: Toward the Year 2000, Second Edition, R. Baecker et al., Editors. San Francisco: Morgan Kaufmann Publishers, Inc., 1995, pp. 35-47.

154

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Grade 8 Least Mastered Competencies Sy 2020-2021: Handicraft Making Dressmaking CarpentryDocument9 pagesGrade 8 Least Mastered Competencies Sy 2020-2021: Handicraft Making Dressmaking CarpentryHJ HJNo ratings yet

- Cooperative LinuxDocument39 pagesCooperative Linuxrajesh_124No ratings yet

- Revised Exam PEDocument3 pagesRevised Exam PEJohn Denver De la Cruz0% (1)

- Chemistry For Changing Times 14th Edition Hill Mccreary Solution ManualDocument24 pagesChemistry For Changing Times 14th Edition Hill Mccreary Solution ManualElaineStewartieog100% (50)

- STIHL TS410, TS420 Spare PartsDocument11 pagesSTIHL TS410, TS420 Spare PartsMarinko PetrovićNo ratings yet

- Open Book Online: Syllabus & Pattern Class - XiDocument1 pageOpen Book Online: Syllabus & Pattern Class - XiaadityaNo ratings yet

- COE301 Lab 2 Introduction MIPS AssemblyDocument7 pagesCOE301 Lab 2 Introduction MIPS AssemblyItz Sami UddinNo ratings yet

- PCZ 1503020 CeDocument73 pagesPCZ 1503020 Cedanielradu27No ratings yet

- System Administration ch01Document15 pagesSystem Administration ch01api-247871582No ratings yet

- The Elder Scrolls V Skyrim - New Lands Mod TutorialDocument1,175 pagesThe Elder Scrolls V Skyrim - New Lands Mod TutorialJonx0rNo ratings yet

- Crop Science SyllabusDocument42 pagesCrop Science Syllabusbetty makushaNo ratings yet

- English 9 Week 5 Q4Document4 pagesEnglish 9 Week 5 Q4Angel EjeNo ratings yet

- Paper 11-ICOSubmittedDocument10 pagesPaper 11-ICOSubmittedNhat Tan MaiNo ratings yet

- The 100 Best Books For 1 Year Olds: Board Book HardcoverDocument17 pagesThe 100 Best Books For 1 Year Olds: Board Book Hardcovernellie_74023951No ratings yet

- Kalitantra-Shava Sadhana - WikipediaDocument5 pagesKalitantra-Shava Sadhana - WikipediaGiano BellonaNo ratings yet

- The Origin, Nature, and Challenges of Area Studies in The United StatesDocument22 pagesThe Origin, Nature, and Challenges of Area Studies in The United StatesannsaralondeNo ratings yet

- Decolonization DBQDocument3 pagesDecolonization DBQapi-493862773No ratings yet

- KKS Equipment Matrik No PM Description PM StartDocument3 pagesKKS Equipment Matrik No PM Description PM StartGHAZY TUBeNo ratings yet

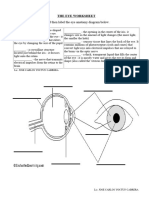

- The Eye WorksheetDocument3 pagesThe Eye WorksheetCally ChewNo ratings yet

- Project - Dreambox Remote Video StreamingDocument5 pagesProject - Dreambox Remote Video StreamingIonut CristianNo ratings yet

- P&CDocument18 pagesP&Cmailrgn2176No ratings yet

- Chapter 17 Study Guide: VideoDocument7 pagesChapter 17 Study Guide: VideoMruffy DaysNo ratings yet

- Review Test 1: Circle The Correct Answers. / 5Document4 pagesReview Test 1: Circle The Correct Answers. / 5XeniaNo ratings yet

- Film Interpretation and Reference RadiographsDocument7 pagesFilm Interpretation and Reference RadiographsEnrique Tavira67% (3)

- GRADE 302: Element Content (%)Document3 pagesGRADE 302: Element Content (%)Shashank Saxena100% (1)

- Outbound Idocs Code Error Event Severity Sap MeaningDocument2 pagesOutbound Idocs Code Error Event Severity Sap MeaningSummit YerawarNo ratings yet

- Super Gene 1201-1300Document426 pagesSuper Gene 1201-1300Henri AtanganaNo ratings yet

- Statistics and Probability Course Syllabus (2023) - SignedDocument3 pagesStatistics and Probability Course Syllabus (2023) - SignedDarence Fujihoshi De AngelNo ratings yet

- P4 Science Topical Questions Term 1Document36 pagesP4 Science Topical Questions Term 1Sean Liam0% (1)

- Temperature Measurement: Temperature Assemblies and Transmitters For The Process IndustryDocument32 pagesTemperature Measurement: Temperature Assemblies and Transmitters For The Process IndustryfotopredicNo ratings yet