Hooke

Election 2000

Is Science Art?

Hooke - The Westminster School Science Magazine

Editorial

Charlie Ogilvie

Having been somewhat belatedly asked to edit Hooke, I have been pleasantly surprised at how eager people are to contribute. I would like to thank the various contributors, as well as my co-editors, who have worked with a certain degree of tirelessness on this issue! We have tried to keep the layout much the same, as it seems to work, but if you have any suggestions for the next issue please let us know. I would like to see a questions-and-answers page, like the one at the back of New Scientist, in the next issue. So if you have any questions, or answers to the questions posed in this issue, please e-mail them to me, along with any other comments: Charlieogilvie@hotmail.com. I hope you enjoy the issue.

Fatima Dhalla

Welcome to the new edition of Hooke. This edition has been put together at fairly short notice, but thanks to the efforts of Charlie, Emad, Valerie and Kaveh we have managed to pull together a number of interesting articles and exciting new features. As for the biology section: biology tends to have a bad press amongst other scientists. However, I assure you the biology articles in this edition of Hooke raise a number of interesting and provocative points. So read the articles and feel free to come and see me with any feedback or ideas for future articles.

Valerie Diederichs

Finally, the moment you have all been waiting for has arrived: your latest copy of Hooke is here, and once again your world is complete. First, a plug for my area, the physics side of the magazine, which I am sure you will all find incredibly interesting. If you think physics isn't your thing and that it doesn't affect you, turn to the article about the cosmological constant. There you can find out about the history, the present and the future of the universe. If you are going to take physics A-level, there is an insight into your future; if you aren't, you will find out what you are missing. That is enough from me, so read the articles and come and see me with any ideas for future issues.

Kaveh Barkhordar

Hooke has always been a magazine for scientists, so this edition's leader is of great interest to the artistic minority who read Hooke. Sadly, as always, chemistry is pretty much in the background, but I hope to change this in the future, so contact me with any chemistry ideas! Finally, a big thank you to everyone who contributed, especially Charlie, Val, Emad and Fatima. Thanks also to Julian Elliot for his invaluable assistance, which made this edition of Hooke possible.

Mohammed Mostaque

Hello and welcome to the Election 2000 edition of Hooke magazine. We've been a bit pushed for time, as has been said in virtually every issue so far. Never mind, here it is in all its glory. That's enough for now, unless you want some jokes: if H2O is water and H2O2 is hydrogen peroxide, what is H2O4? Drinking. Haha. There we go: no matter how bad the magazine is, you won't beat that! On a more serious note, though, I'd like to thank the various writers who made this magazine possible through their hard work, in the short time they were given. Articles from anyone in the school are always welcome: just contact one of us editors, either in person or by e-mail. Enjoy.


Contents

Editorial
Contents
An Interview with the Headmaster
Is Science Art?
The Tizard Lecture 2000
Gene Therapy
The Prisoner's Dilemma
The New Telescope
The Physicist's Song
2,3,7,8-Tetra What?
Physics Phun
Cladistics, Evolution and the Death of the Ladder
Krazy Kaveh's Khaotic Kekulé Korner
A Science of History
Proteins and Microgravity
Einstein's Greatest Error
The Chemical Elements


An Interview with the Headmaster

Charlie and Emad interview the headmaster about science at Westminster.

WOULD YOU SAY SCIENCE HAS BECOME THE NEW CLASSICS?

No, as that would imply that the classics are dead, and this is not so. They are alive and flourishing, so there is no comparison to be made. However, the emphasis within science is changing. The cutting edge used to be physics, especially theoretical physics, but now biology, and specifically biochemistry, has become the new science, and more people are now becoming involved in this area.

WILL IMPERIAL COLLEGE, LONDON OVERTAKE OXBRIDGE IN SCIENCE?

I'd think that Imperial would say that they have already overtaken Oxbridge in science.

WILL THIS DENT THE TRADITIONAL LINKS BETWEEN WESTMINSTER AND OXBRIDGE AS MORE SCIENCE STUDENTS GO TO IMPERIAL FROM WESTMINSTER?

No. Westminster has very strong links with Imperial, and if you consider it to be one college, then more people go there than to any other college. However, I personally believe that it is better to get away and meet new people, as well as to experience a change of approach. I also believe in the tutorial system, whereas in other places the smallest teaching groups will be seminars of 12-15.

SHOULD WE INCREASE THE EMPHASIS ON SCIENCE IN THIS SCHOOL?

No. Science is now well staffed, and everyone who wishes to do it may. In fact, the number of science A-levels taken nationally has been decreasing, and no expansion is required as we are ahead in this respect.

SO THE SUBJECT PROPORTIONS WILL STAY THE SAME IN THIS SCHOOL FOR THE FORESEEABLE FUTURE, UNDER THE NEW SYSTEM?

Yes. All pupils will do five straight AS levels. Although the numbers studying science may change because more subjects are required, the proportion doing it as opposed to arts subjects will not.

WILL THE NEW A-LEVELS AFFECT THE QUALITY OF EDUCATION?

This is not easy to see. The new physics syllabus seems to be a lot easier, but the examination boards maintain that the synoptic paper will preserve the current standard of examinations.

WILL THE DECREASE IN STANDARDS AFFECT WESTMINSTER'S?

No. S-levels and the new World Class tests will help maintain the current high standards. In fact, Oxford and Cambridge are thinking of reinstating entrance tests for all subjects, as opposed to the small number that currently require them.

DO YOU INTEND TO INCREASE THE ACADEMIC TEACHING OF COMPUTER SCIENCE AND INFORMATION TECHNOLOGY AS ITS IMPORTANCE IN OUR DAILY LIVES GROWS?

We will not introduce an IT A-level or GCSE. However, we hope that increasing numbers of lower school pupils will pass the European Computer Driving Licence (ECDL). That would allow new sixth-form options using these necessary skills to be introduced.

IN RESPONSE TO THE CREATIONIST MOVEMENT IN THE UNITED STATES, DO YOU BELIEVE ALTERNATIVE BEGINNINGS SHOULD BE TAUGHT AS SCIENCE OR R.S., OR SIMPLY AVOIDED?

Darwin should be taught in schools, as nothing in Darwin either proves or disproves the existence of a God - who knows, God might like Darwin. It is appalling for the state to prescribe exactly what should be taught in schools, neglecting other points of view.

SO YOU WOULD ENCOURAGE DISCUSSION OF THESE TOPICS?

Yes, certainly, even though this is a church school; but that is a different matter.

Is Science an Art?

"Knowledge has killed the sun, making it a ball of gas with spots... The world of reason and science... this is the dry and sterile world the abstracted mind inhabits." - D.H. Lawrence

The Hooke team asks the heads of science whether they agree...

Dr Beavon

I doubt that the great Nobel Prize-winning physicist Richard Feynman ever met D.H. Lawrence. More of a loss to Lawrence than to Feynman (quite right - I don't like Lawrence). In 1981 Feynman, in the Horizon programme "The Pleasure of Finding Things Out", raised the question of why knowing more about something seems, in some people's eyes, to be knowing less. That is Lawrence's point. Knowing the fusion reaction that powers the Sun does not devalue the beauty of the Sun. It does not affect the poetic sunbeam/sunlight/sunshine: it remains "the stone that put the stars to flight". How can it be devalued? Is it not more a comment on Lawrence's insecurity than a concern for Natural Philosophy? Robert Hooke would not have understood the argument at all. For him the natural world was there to explore, all aspects of it being legitimate areas for creative thought. At school here he had mastered a good deal of Classics, Mathematics and Science, and I don't suppose he thought of them as requiring a different psyche. Why should it be different now? C.P. Snow in the 1950s bemoaned the "two cultures" as wasteful and unnecessary, but the divide still exists. Indeed, it is alive and well and living at Westminster. Ironic, really, given the enormous increase in scientific teaching, though possibly not in scientific education.

The problems associated with creativity in science stem, firstly, from the long apprenticeship that is necessary before truly creative work can be done. Secondly, they stem from the fact that it is not an unfettered creativity. I can perfectly well understand someone saying, "I am happy with my world view; I recognise the value of the scientific method, but my interests lie elsewhere; in consequence I do not wish to serve that apprenticeship." Fine. We are not all the same. But do not then accuse the scientist of being uncreative just because the creativity is of a different type.

The process of developing a working model of the Universe requires insight and leaps of the imagination: an idea of how it could be. The constraints arise from the necessity to conform with experimental data; a hypothesis which predicts that the leaves on trees must be red clearly requires more thought. The idea that in science nothing occurs other than mere discovery is risible, as if science were only the successive turning over of ever more deeply buried playing cards in the Universal pack.

The mutual sniping between subjects is endemic, and though common in schools it is not confined to them. And it is mutual; those there are that think the knowledge of how to wire a plug is in some way superior to knowledge of Greek, or vice versa. I find this argument utterly incomprehensible. It's rather like asking me whether I prefer porridge or a bicycle. But the sniping is there, as a judgement of who is educated: "Oh, haven't you read...?", in tones of incredulity. People, it seems, are fond of a canon, a list of works that defines the educated person. Oddly, it always seems to be the books that they have read! What about my canon? Have you, as an educated person, read, oh, Wilkie Collins? George Borrow? Thackeray? Trollope (both varieties)? Flaubert, Tobias Smollett, Defoe? How about Ambrose Bierce? Brian Phelan? Daniel Dennett, Paul Davies, Omar Khayyam, Gogol, Mario Vargas Llosa? How about Catullus, Ovid? Shall I go on? No, because the whole notion of a canon is absurd. What you have read is accidental as well as intentional, imposed as well as voluntary, and probably some of it is unfinished. My list is not a canon. It is (part of) my list.

So, whence the antipathy? Tribalism. Insecurity. Why should someone who knows little or nothing about science find me a threat? If he cares that much, he can go and find out about whatever it is that bothers him. He might be better than me, eventually. After all, suggest an author to me and I will usually have a look. I don't feel threatened by not having read Martial or Terence or Corneille or Dawkins. Nor do I feel uneducated; I am simply ignorant of what these people have to say. In reality every one of us, however educated, is ignorant of all but a minuscule proportion of what has been written, even in our own speciality. I simply do not believe anyone who claims otherwise.

Perhaps it's all down to the shade of Benjamin Jowett. Of Jowett, sometime Master of Balliol, theologian and classicist, it was written: "I am the Master of this College, and what I don't know isn't knowledge." Jowett lives on, and I find this deeply sad. I'm with Feynman. Suppose I know all there is to be known about the biochemistry of a flower, and the beauty of the flower is available to me as it is to you. Then I know more than the pure aesthete. I don't understand how it can be otherwise. The difference may be that I object less to the aesthete than he seemingly does to me.

Dr Roli Roberts

To those of us involved in science, and perhaps most specifically in the study of living things, the assertion (by D.H. Lawrence and many others) that to dissect nature is to destroy its magic is incomprehensible. Indeed, it is in the details that the true miracle of nature is revealed. Where else might one find machines a millionth of a centimetre across which use quantum mechanical effects to carry out physics and chemistry to which 21st-century human technology can only aspire? A single cell (whose very existence would remain unknown today were it not for the curiosity of scientists) contains more marvels than we could possibly imagine from a leisurely perusal of our macroscopic world. From the humblest bug to our own brains, intricacy that would shame a watchmaker drives the most complex processes in the universe. Do we then wonder at the splendour that the tenacity of survival can wring from an unsupervised evolutionary process acting on a bowl of chemicals? Or do we see it as incontrovertible evidence of a mindful creator of bewildering ingenuity? People may gaze with awe at a spectacular sunset, a beautiful flower or a child speaking its first words. But if they follow the Luddite's plea and shy away from exploring how these things arise, they deprive themselves of the greatest show of all, whether God or Nature be the ringmaster.

As for the question of creativity in science: for every Picasso there are a hundred derivative plodders who churn out "To Our Dearest Daughter" greetings cards, and for every Shostakovich an army of Ronnie Hazlehursts penning mindless game show theme tunes. Science too has its inspired geniuses and its prosaic drones. But what IS "creativity" in science? Creativity in the arts is as diverse an animal as the arts it infuses. Even so, I would claim that its scientific counterpart is a card-carrying member of the club; indeed, its principle is almost indistinguishable from that of creativity in poetry. The creative act in both science and poetry is the construction of metaphor. In each field a hitherto incommunicable entity is packaged into a form which others can understand by reference to a seemingly unrelated but universally familiar object. In science that entity is an often invisible natural phenomenon; in poetry it is an equally intangible impression in the mind of the poet. Almost all successful poetry is metaphorical, and likewise almost every scientific term (we often resort to Latin or Greek to disguise our sheepishness!) takes its name from an everyday item or concept. As in poetry, this is not merely for the sake of giving something a label; the very nature of the chosen metaphor shapes the way that other people will think about the idea. And as in poetry, that metaphor may either flower and bear fruit, or stagnate and live on only as a testament to the banality or misconceptions of its originator.

Dr Roli Roberts, Lecturer in Molecular Genetics

Dr Walsh

Science as a creative 'art'? It is interesting that it is the word 'art' that finds itself in inverted commas; again, here is a discussion whose depth hangs on definitions and contextual interpretations. What is 'art'? What is 'science'? Huxley's view was that science is basically organised common sense, and so a scientific approach can reasonably be applied to everything. But of course both art and science are products of the human mind, both explorations of and by conscious thought. For me there is no confusion about 'dividing lines', as Wolpert puts it - they are not required. As for science killing the Sun, well, I'm with Feynman on this one. Scientists* as humans appreciate beauty and natural phenomena, and the wonder and delight are enhanced by even a modicum of understanding, or by wanting to understand mechanisms.** Whilst I find quantum mechanics very challenging and difficult to explore, I still find it very beautiful - it is one of the finest works of art there is.

* particularly physicists!
** If you like, scientists and artists are people using the same data in different ways. The trick is to be able to analyse the data in lots of ways - there are no dividing lines!

Mrs Lambert

While most of us have a clear idea of what constitutes the arts, the majority would not seriously include science among them. My initial reaction is to throw out the motion. However, I shall proceed with caution and explore some ideas. I propose that some of the common features of the various art forms are freedom of expression, provocation, titillation, excitement and the ability to evoke a variety of emotions. But they have a rational side, too, though their main purpose is to entertain and thus provide vital interludes in the monotonous existence of human life. Science, on the other hand, sets out to explain rather than to entertain. But, worryingly, popular belief holds that science is no more than a dry body of facts. It is not. Science is an approach; it makes a serious attempt to explain the world around us. It has to involve method and rules. It certainly includes difficult concepts, complex terminology and abstract thinking. And there is no place for anarchy within scientific methodology: scientists must obey the conventions if their work is to have any validity. But science is so much more than this. There is excitement in discovery, and delight in having one's observations and hypotheses irrefutably confirmed. And a biologist, whilst seeking to relate structure to function, will also view living things aesthetically.

Most of you reading this will be familiar with the names of James Watson and Francis Crick. They were the young biologists who, in the middle of the 20th century, worked out the structure of DNA (deoxyribonucleic acid). Arguably, this was the most significant discovery of that century, possibly even of the millennium. DNA is, after all, the very essence of life and universal to all organisms, whether they are bacteria, fungi, plants or animals. Who could not be moved by the account of how they pieced together the structure of DNA from the evidence available? Who could fail to wonder at the sheer beauty of DNA, the simplicity of its complexity, the flexibility of its constancy, the mutability of its stability? DNA contains the code for life. At the time of writing, the human genome project is all but finished. Its secrets will soon be revealed.

So what are my conclusions? I would argue that there is a clear boundary between the arts and sciences. Yet there is overlap. Science is not just another art form, although it relies on creative thought and the odd leap of faith. And the manifestation of some of the arts requires scientific knowledge: printing, photography and painting, to name but three examples. So why is there so much antagonism between the pretenders of the two camps? Let us continue...

The Tizard Lecture 2000

Valerie Diederichs reports on this year's Tizard Lecture.

This year's Tizard Lecture was on the Leaning Tower of Pisa and was given by Prof John Burland, professor of soil mechanics at Imperial College London. In 1990 the Tower of Pisa was closed to the public, following the collapse in 1989 of a similar tower in Pavia, Italy. The threat of economic loss to Pisa led the Mayor to set up an international commission of scientists to stabilise the tower, and Prof Burland was on this commission. The first inspection revealed three problems. The tower stood on very soft ground. Any movement or disturbance of the soil on the downward side might cause it to fall over. And the materials in the tower had reached stresses close to their point of structural failure. The tower was first stabilised temporarily using large blocks of lead, each weighing 10 tonnes, to a total of 600 tonnes. Although these stopped the tower moving, they did not straighten it, nor were they an attractive option. Because the tower is a tourist attraction, the stabilisation technique had to preserve its overall appearance as well as ensure the safety of visitors. The commission therefore set about developing a more permanent solution. A student at Imperial College London developed a special drill. Inserted on the north side (the raised side of the tower), it allowed material to be drilled out without disturbing the surrounding soil. The drill could then be retracted slowly and the cavities allowed to fill with minimal disturbance. Removing soil would lower this side of the tower and so reduce its lean. Tests were carried out and mathematical models developed, and the controversial method was granted permission for a trial on the tower itself. Before the trial, a support cable was installed and anchored to the ground at the north end of the tower.
The trial, conducted in February 1999, was to remove a small amount of soil, enough to reduce the lean of the tower by twenty arc seconds. That is an amount which would not be visible to the naked eye. Uncertainty about the condition of the soil meant that no more than twenty litres of soil could be extracted from under the tower every two days. The trial was successful. The apparatus used is shown in the middle of the page. The operation ended at the beginning of June 1999, by which stage the tower had moved through 90 arc seconds. By September 1999 it had moved a further 40 arc seconds. Following this success, the operation will be continued this year with a total of 41 drills. If successful, it will reduce the lean by about half a degree overall. The lecture provided a fascinating insight into one of the world's most famous landmarks. The speaker was informative and humorous, entertaining the audience with short stories from the site. He also gave fascinating details of the history of the tower and its construction.
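The angles quoted in this report are very small. Here is a rough check using only the figures given above. It assumes the two reported movements simply add, and uses the fact that one arc second ($1''$) is $1/3600$ of a degree:

```latex
\begin{align*}
\text{lead blocks} &= \frac{600\ \text{t}}{10\ \text{t per block}} = 60 \\
\text{movement by September 1999} &= 90'' + 40'' = 130'' = \left(\tfrac{130}{3600}\right)^{\circ} \approx 0.036^{\circ} \\
\text{target} &= 0.5^{\circ} = 0.5 \times 3600'' = 1800'' \\
\text{fraction achieved} &= \frac{130''}{1800''} \approx 7\%
\end{align*}
```

On these numbers, most of the planned half-degree correction was still to come after the 1999 trial. That is an inference from the article's figures, not a result reported in the lecture.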


Gene Therapy

Fatima Dhalla explores the developing world of Gene Therapy

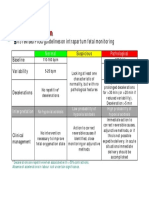

What is Gene Therapy? Many people have a clouded view of what gene therapy actually entails; images of transgenic mutants spring to mind. In reality, however, gene therapy serves as a corrective measure rather than a cosmetic commodity. Gene therapy is the alteration of a patient's genetic material in order to fight or prevent disease. It has many useful applications, and its use could revolutionise clinical medicine:

Genetic disorders can be corrected by providing cells with healthy copies of missing or flawed genes, in other words by altering the genetic makeup of certain cells.

Genetic defects can be prevented from being passed on to future generations by altering germ cells, in germ-line gene therapy.

Gene therapy could also be used as a form of drug delivery, by inserting a gene that produces a useful product into the DNA of the patient's cells. For example, during blood vessel surgery, a gene that makes an anticlotting factor could be inserted into the DNA of the cells lining the blood vessels. This would help prevent dangerous blood clots from forming, and would save much effort, money and time.

It has been more than a decade since the first approved clinical trial to put genes into the cells of human beings began, and since then more than 3000 patients have been treated. Initially there was an overoptimistic view that gene therapy would quickly revolutionise medicine, and the technical challenges involved were not fully understood. However, lessons learnt from early studies have redirected the course of research, and clinical applications of gene therapy are fast becoming a reality. Two types of gene therapy exist:

in vivo gene therapy: a vector carrying the therapeutic gene or genes is administered directly to the patient.

ex vivo gene therapy: cells are harvested from the patient, cultivated in the laboratory and incubated with vectors carrying a corrective/therapeutic gene. Cells with the new genetic material are then harvested and transplanted back into the patient from whom they were derived.

One challenge that was not initially fully appreciated was the difficulty of delivering genes to the cells in need of correction. In gene correction therapy, mutant genes are modified directly, but the techniques used were far too inefficient. It was therefore necessary to treat genetic disorders by addition gene therapy, in which a normal copy of the mutant gene is added to the cells. Both viral and non-viral techniques for gene delivery have been clinically tested. To date, viral methods have proved the most effective, especially in cases that require stable integration of the delivered gene. Most gene therapy experiments rely on disabled mouse retroviruses to deliver the healthy gene. These viruses normally carry their genetic information into cells and integrate it into the cell's own genetic material. To make retroviruses safe for use in gene therapy, scientists remove crucial genes so that the viruses cannot reproduce after they have delivered their genetic information. The problem with crippling replication is that the mechanism the viruses use to spread genes is also inactivated. The spread of the vector is therefore governed by diffusion, which is often limited by the small intercellular spaces through which the viral vectors must move. It is also possible to give the retrovirus a new gene that makes the cells it infects susceptible to an antibiotic. Those cells can then easily be destroyed if they become cancerous, or if the virus delivers the genes to the wrong cells. An advantage of using retroviruses in gene delivery is their specificity: scientists can select a particular retrovirus that normally infects cells of the desired type, deactivate it and use it as a gene vector.
To achieve long-term effects, integration of the added gene into the chromosomal DNA of the host may be essential. Unfortunately, the most efficient integrating gene transfer systems use small viral vectors (usually retroviruses) that are unable to accommodate full-length human genes. Furthermore, random integration of the vectors at different chromosomal locations can adversely influence gene expression. In addition, scientists have encountered problems with immune responses directed against the viral vectors and against some of the genes being transferred. The number of modified viruses used in gene therapy is growing, as is the understanding of the immunology of these systems. Scientists have worked to produce a new generation of adenovirus vectors without viral genes: the "gutted" adenovirus. These adenoviruses have shown long-term "survival" in animal models. The virus is not associated with any serious human disease and has a low potential to induce an immune response or produce inflammation. It is also able to infect mature, well-differentiated cells, such as muscle cells or neurones. The main drawbacks are the limited coding capacity of the virus (about 4.0kb) and the laborious production systems.

Gene therapy using viral methods results in the addition of genetic material; it does not correct the underlying genetic defect causing the disease. Revolutionary approaches are being investigated in non-viral gene transfer and gene correction. Methods to correct single nucleotide mutations in genomic DNA have recently been developed, using RNA and DNA residues in a duplex conformation to target the desired nucleotide exchange. The RNA-DNA sequence is complementary to that of the target gene, except that it contains one mismatched nucleotide when aligned with the genomic DNA sequence. It appears that this unpaired nucleotide is recognised by the cell's own (endogenous) repair systems, leading to an alteration of the DNA sequence of the target gene. This method could herald a major advance in gene therapy, allowing the correction of mutations that cause genetic diseases.

It may seem surprising that most current clinical applications of gene therapy are treatments for cancer rather than for genetic disorders.
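The targeting idea behind the RNA-DNA correction method described above can be sketched as a toy sequence comparison. This is a minimal illustration under loudly stated assumptions. The sequence is invented, not a real gene. Both strands are written aligned base by base, ignoring the fact that real strands are antiparallel. RNA bases are written as their DNA equivalents. The hypothetical `repair` function idealises the cell's repair systems as always rewriting the genomic base to match the probe, which the article only says "appears" to happen.

```python
# Toy model of mismatch-directed gene correction (illustrative assumptions only):
# - sequences are invented, not real genes
# - strands are aligned position by position; real strands are antiparallel
# - RNA bases in the chimeric molecule are written as DNA bases
# - "repair" is idealised: the genomic base always changes to match the probe

COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def complement(seq: str) -> str:
    """Base-by-base complement of a DNA strand (not reversed)."""
    return "".join(COMPLEMENT[base] for base in seq)


def design_probe(target: str, position: int, desired_base: str) -> str:
    """Probe that pairs with `target` everywhere except `position`,
    where it pairs with `desired_base` instead of the existing base."""
    probe = list(complement(target))
    probe[position] = COMPLEMENT[desired_base]
    return "".join(probe)


def mismatches(target: str, probe: str) -> list[tuple[int, str, str]]:
    """(position, target base, probe base) wherever the strands fail to pair."""
    if len(target) != len(probe):
        raise ValueError("strands must be the same length")
    return [
        (i, t, p)
        for i, (t, p) in enumerate(zip(target, probe))
        if COMPLEMENT[t] != p
    ]


def repair(target: str, probe: str) -> str:
    """Hypothetical idealised repair: rewrite each unpaired target base
    so that it pairs with the probe."""
    fixed = list(target)
    for i, _, p in mismatches(target, probe):
        fixed[i] = COMPLEMENT[p]
    return "".join(fixed)


mutant = "ACGTTGCAGTCA"               # invented sequence; base 5 plays the "mutation"
probe = design_probe(mutant, 5, "A")  # probe carries the single mismatch
print(mismatches(mutant, probe))      # [(5, 'G', 'T')]
corrected = repair(mutant, probe)
print(corrected)                      # ACGTTACAGTCA
print(mismatches(corrected, probe))   # []
```

The probe differs from a perfect partner at exactly one base, mirroring the article's description. In this toy model, that single unpaired position is what flags the base to be changed.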
The chart in the middle of the page shows the proportion of protocols for human gene therapy trials relating to various types of disease. The following are examples of clinical trials that are currently underway using gene therapy: trial in gene therapy delivered by aerosol to the lungs of patients with cystic fibrosis. The treatment consists of a corrected version of the faulty cystic fibrosis gene in an adeno-associated virus shell. It is hoped that the virus transfers the corrective gene into lung epithelial cells. The patients receive the treatment through a nebuliser, which blows a mist consisting of the viral particles into the air passages.

SEVERE COMBINED IMMUNO DEFICIENCY SYNDROME (SCIDS)

Gene therapy has been successfully used in France to treat SCIDS. Severe Combined ImmunoDeficiency Syndrome is an x-linked, life-threatening disorder, which usually results in death before the infant reaches one year due to the inability to mount an immune response to infection. The defective gene encodes part of a cell receptor that signals the stem cells of T-lymphocytes to develop and grow. Thus SCIDS patients are left vulnerable to even the slightest infections. The treatment involves the harvesting of the patient's bone marrow and infecting it with a retrovirus carrying the corrective gene. After three days of repeated infection, the bone marrow is put back into the patient. In the recent trials doctors were able to demonstrate the corrected version of the gene in the new cells of the patient. Of the two children that have been treated both have cell counts comparable to those of normal children of the same age but will have to be monitored to ensure the long-term success of the therapy.

HAEMOPHILIA

Haemophilia is potentially one of the few genetic diseases that are curable with gene transfer technology because clotting factors do not require physiological regulation and as little as 1% plasma concentration will convert a severe haemophiliac to a mild haemophiliac. Any gene therapy strategy for haemophilia should be low risk, however, they have to be tested in animal model before clinical trials can commence.

CYSTIC FIBROSIS

Researchers at Stanford University have started a PAGE 10

HIV-1

ELECTION 2000

Hooke - The Westminster School Science Magazine

In HIV-1 infection it may be possible to apply the technology of gene therapy to deliver anti-viral agents directly to the infected cells and potentially benefit the infected individual. Retroviral vectors that inhibit the HIV-1 virus at various points in the viral life cycle have been constructed. Clinical trials using various vectors have begun, in which T-lymphocytes are harvested, transduced with vectors containing potentially therapeutic genes, cultured and infused back into the patient. To check for success, the relative survival of the engineered T-cell populations is monitored by vector-specific PCR, while the recipient's immune function is monitored by standard in vitro and in vivo testing protocols.

[Photograph: AIDS patient receiving gene therapy]

CANCER

Scientists are currently working on ways to genetically alter immune cells that are naturally or deliberately targeted to cancers. They are interested in arming such cells with cancer-fighting genes and returning them to the body, where they could attack the cancer more forcefully. Clinical trials along these lines are in progress for the treatment of melanoma. Alternatively, cancer cells can be taken from the body and altered genetically so that they elicit a strong immune response. These cells can then be returned to the body in the hope that they will act as a cancer vaccine. A variety of clinical trials using this approach are now under way. It is also possible to inject a tumour with a gene that renders the tumour cells vulnerable to an antibiotic or other drug. Subsequent treatment with the drug should kill only the cells that contain the foreign gene. Since other cells would be spared, the treatment should have few side effects. Two trials using this approach are in progress for the treatment of brain tumours.

GENE THERAPY DEATH

A gene therapy experiment at the University of Pennsylvania led to the death of Jesse Gelsinger, an 18-year-old man with an inherited metabolic disorder. Ethical issues about genetics have been discussed for years, and gene therapy is no different from any other kind of risky medical research. However, in the aftermath of Jesse Gelsinger's death it has become evident that better regulation of trials, and coordination between data safety and monitoring boards and institutional review boards so that information on adverse outcomes is shared, are vital if clinical trials of gene therapy are to be effective. Patients cannot be entered into a clinical research trial unless they may benefit. They must be healthy enough for the trial to show whether the treatment benefits them: if they are near death, the researchers cannot determine whether death resulted from the treatment or from the disease. They must also be able to give informed consent, which usually rules out children and babies.

It has been suggested that researchers' ties to biotechnology companies might compromise studies. In the United States, federal law has encouraged such ties in order to bring research efforts to the marketplace and benefit patients, and almost all leading researchers have such ties.

The future for gene therapy is hopeful. Although there has been more speculation and optimism than products, the results of recent trials augur well for clinical gene therapy, which has the potential to transform dramatically the treatment of a great many medical conditions.


The Prisoner's Dilemma

SengHin Kong talks about decisions, both easy and hard

Decision-making is at the heart of life itself, whether decisions are programmed responses or consciously evaluated ones. Although there may be a great multiplicity of possible responses in any given situation, and the decision and its outcomes may involve incalculable complexity, it is possible to crystallise these ideas into a mathematical framework such as the Prisoner's Dilemma. To simplify the analysis, a decision taken by an individual may have only two outcomes: cooperation (being nice) or defection (being nasty). It is also assumed that decisions are made by individuals pursuing their own self-interest without a central authority (which might enforce cooperation or defection). This appears to be a very restrictive assumption, but in fact the model applies very widely, and it can of course be made more complex to accommodate more than two outcomes. The question, therefore, is when to cooperate, when to defect, and whether any particular strategy works best. There is also an evolutionary aspect to this conundrum: how can a particular strategy invade an environment dominated by other strategies, can it evolve to become more widespread, and will it be stable?

The Prisoner's Dilemma originally describes the situation where two accomplices to a crime are arrested and questioned separately. Either can defect against the other by confessing and hoping for a lighter sentence, but if both confess, their confessions are not as valuable. On the other hand, if both cooperate with each other by refusing to confess, the district attorney can only convict them on a minor charge. Formally, then, the Prisoner's Dilemma is defined as a two-player game in which each player can either cooperate (C) or defect (D). If both cooperate, both get the reward, R. If both defect, both get the punishment, P. If one cooperates and the other defects, the cooperator gets the sucker's payoff, S, and the defector gets the reward for exploitation, E.
The payoffs are ordered E > R > P > S and satisfy R > (E + S)/2. The second condition matters in an iterated game: it ensures that players cannot gain by taking turns to exploit and be exploited by each other. Indeed, the payoffs may differ qualitatively and quantitatively between the two players, as long as these inequalities hold. The rules of the game, first for a single iteration, are as follows. All strategies are possible. There is no way to be certain of the other player's move (hence communication, although allowed, is quite worthless). Each player must make exactly one of the two moves. The payoffs cannot be changed. The payoffs are shown in the grid below as (row player, column player):

Table 1: Prisoner's Dilemma payoffs (row player, column player)

                   Column cooperates   Column defects
Row cooperates     R, R                S, E
Row defects        E, S                P, P

Therefore, in a one-off meeting, cooperation is the optimal bilateral strategy, but defection is the optimal unilateral strategy (whatever the opponent does, you maximise your own points by defecting). Since bilateral agreements cannot be made with certainty, decisions are always unilateral, and hence defection is the dominant strategy. However, if the game is played more than once, each player may react to the other's strategy; this is an iterated Prisoner's Dilemma. Imagine that the game is played a known, finite number of times: what would your last move be? On the last move there is no future to influence, which in effect reduces it to a one-off game, so both players anticipate defection on that move. The penultimate move is then also reduced to a one-off game, and so on back to the first move. Therefore, there is no incentive to cooperate if the number of interactions is known and finite. However, in most realistic settings, such as business relationships, the players cannot be sure when their last interaction will take place. In an iterated Prisoner's Dilemma, the only information the players have about each other is the history of their interaction so far.
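As a quick check on the definitions above, here is a minimal Python sketch using the payoff values E=5, R=3, P=1 and S=0 that the article adopts later for its worked example; the function names are hypothetical, chosen only for illustration. It verifies the two defining inequalities and confirms that defecting beats cooperating against either move of the opponent, even though mutual cooperation pays more than mutual defection.

```python
# Example payoffs used later in the article: E=5, R=3, P=1, S=0.
E, R, P, S = 5, 3, 1, 0

# payoff[(my_move, their_move)] -> my score; "C" = cooperate, "D" = defect.
payoff = {
    ("C", "C"): R,  # reward for mutual cooperation
    ("C", "D"): S,  # sucker's payoff
    ("D", "C"): E,  # reward for exploitation
    ("D", "D"): P,  # punishment for mutual defection
}


def is_prisoners_dilemma(e, r, p, s):
    """Check E > R > P > S and R > (E + S)/2 (written as 2R > E + S)."""
    return e > r > p > s and 2 * r > e + s


def defection_dominates(table):
    """True if defecting beats cooperating whichever move the opponent makes."""
    return all(table[("D", other)] > table[("C", other)] for other in ("C", "D"))


if __name__ == "__main__":
    print("Valid Prisoner's Dilemma:", is_prisoners_dilemma(E, R, P, S))
    print("Defection dominates:", defection_dominates(payoff))
    print("Mutual cooperation beats mutual defection:",
          payoff[("C", "C")] > payoff[("D", "D")])
```

All three lines should print True. Changing E to 6 makes is_prisoners_dilemma return False, because alternately exploiting and being exploited would then average (6 + 0)/2 = 3, as much as steady cooperation.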
What makes it possible for cooperation to emerge is that the players might meet again. However, the future matters less than the present, since the game may be terminated unexpectedly (e.g. the other player dies), and a payoff now is usually more attractive than an equal payoff in the future. How can this be accounted for? It can be represented by a discount parameter between 0 and 1: the weight given to the payoff of each move relative to the previous move. If this discount parameter is sufficiently high, there is no best strategy independent of what the opponent does. For example, if one player adopts the strategy of always defecting, then the other player should only ever defect; however, if one player adopts a strategy of cooperating until suckered by a single defection and then defecting permanently, the other player should never defect. Moreover, full rationality is not at all necessary in formulating strategies: procedural rationality, based on rules of thumb, can produce satisficing outcomes, and even simple operational procedures followed blindly can produce interesting results. Thus, the framework of the Prisoner's Dilemma is broad enough to encompass situations from international relations to bacterial colonies.

Despite this absence of an optimal strategy, one strategy emerged victorious in a tournament set up by Robert Axelrod, in which competing strategies were pitted against each other. This strategy, called TIT FOR TAT, cooperates on the first move and thereafter reciprocates the opponent's previous move. This very simple strategy has several interesting qualities. People are accustomed to thinking in zero-sum terms, where one person's loss is the other's gain (e.g. a football match: if one team loses, the other must win). In this frame of mind, when people enter a Prisoner's Dilemma situation, the only standard by which they judge their own strategy's performance is the benchmark set by the opponent in each game. In short, envy is manifested, and this is not a good strategy unless one's goal is to destroy the other player.
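To make the discount parameter concrete, the sketch below (an illustration of our own, not Axelrod's tournament code) plays two strategies against each other and weights the payoff of move t by w**t, so a high w means the future counts for a lot. The strategy names are our own labels: `grim` stands for the strategy described above that cooperates until suckered once and then defects for ever. The 500-move cut-off is an assumption that approximates an endless game very closely when w = 0.9.

```python
# Payoffs as (row player, column player), using the article's example values.
E, R, P, S = 5, 3, 1, 0
PAYOFF = {
    ("C", "C"): (R, R),
    ("C", "D"): (S, E),
    ("D", "C"): (E, S),
    ("D", "D"): (P, P),
}


def always_defect(mine, theirs):
    return "D"


def always_cooperate(mine, theirs):
    return "C"


def tit_for_tat(mine, theirs):
    # Cooperate on the first move, then copy the opponent's previous move.
    return theirs[-1] if theirs else "C"


def grim(mine, theirs):
    # Cooperate until the opponent defects once, then defect for ever.
    return "D" if "D" in theirs else "C"


def discounted_scores(strategy_a, strategy_b, w, rounds=500):
    """Total payoffs when move t (counting from 0) is weighted by w**t."""
    history_a, history_b = [], []
    score_a = score_b = 0.0
    for t in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a * w**t
        score_b += pay_b * w**t
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    w = 0.9
    for opponent in (always_defect, grim):
        print(f"Against {opponent.__name__}:")
        for player in (always_cooperate, always_defect, tit_for_tat):
            mine, _ = discounted_scores(player, opponent, w)
            print(f"  {player.__name__:>16}: {mine:5.1f}")
```

With w = 0.9 this should print about 0, 10 and 9 against always_defect, and about 30, 14 and 30 against grim: the best reply depends entirely on what the opponent does.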
Therefore, a player should instead compare their score with what could have been obtained using another strategy. In a varied environment of many other strategies, each individual game is only part of the whole, so a strategy that does well across the board is better than strategies that can exploit a few weaker ones but end up punishing each other with mutual defections. In an iterated Prisoner's Dilemma of long duration, one's opponent's success is virtually a prerequisite for one's own success, as mutual cooperation is the most rewarding outcome, and so envy is counter-productive.

It may be observed that the British system of government may not serve the interests of the people as well as one might think, at least qualitatively. The two main parties, Conservative and Labour, compete for votes in order to remain in power; they are therefore in a zero-sum situation, and the goal is to destroy the other party. This results in polarisation over many issues, such as Europe, education and taxation, so that the electorate must choose between the parties and cannot agree with both. However, guiding a country to a socio-economic optimum is a process of cooperation: drawing battle lines over every issue only hinders sensible debate and compromise, which would so often be beneficial, for example over European Monetary Union.

TIT FOR TAT is never the first to defect; it always approaches with open arms, which increases the potential for cooperation and avoids unnecessary conflict. This generosity must, however, be backed by a mechanism for responding to provocation, or else the strategy may suffer exploitation. Furthermore, when interactions are likely to be short, i.e. the discount parameter is low, it pays to be meaner (for example, by alternating cooperation and defection when opponents are using TIT FOR TAT).
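The claim that meanness pays when the discount parameter w is low can be checked with the closed-form sums of geometric series. The sketch below (function name ours) compares three challengers facing TIT FOR TAT with the example payoffs E=5, R=3, P=1, S=0: always cooperating, always defecting, and alternating defection and cooperation starting with a defection. Exact fractions are used to avoid rounding errors.

```python
from fractions import Fraction

E, R, P, S = 5, 3, 1, 0


def scores_against_tit_for_tat(w):
    """Discounted totals (move t weighted by w**t) for challengers facing TIT FOR TAT."""
    cooperate = R / (1 - w)              # mutual cooperation on every move
    always_defect = E + w * P / (1 - w)  # exploit once, then mutual defection
    # Alternating D, C, D, C, ...: TIT FOR TAT is always one move behind, so the
    # challenger gets E on even moves and S on odd moves.
    alternate = (E + S * w) / (1 - w**2)
    return {"cooperate": cooperate, "always_defect": always_defect, "alternate": alternate}


if __name__ == "__main__":
    for w in (Fraction(1, 2), Fraction(9, 10)):
        scores = scores_against_tit_for_tat(w)
        best = max(scores, key=scores.get)
        summary = ", ".join(f"{name}={float(value):.2f}" for name, value in scores.items())
        print(f"w = {w}: {summary} -> best is {best}")
```

At w = 1/2 alternation scores 20/3 against 6 for steady cooperation, while at w = 9/10 cooperation (30) beats alternation (500/19, about 26.3). Setting E/(1 - w²) equal to R/(1 - w) shows that, with these payoffs, the cross-over is at w = 2/3.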
For example, if it is known that an influential MP is likely to be expelled from the party for scandalous behaviour, other MPs have less incentive to do political favours for him or her, and businesses have less incentive to keep him or her on their payroll for asking questions in Parliament, because reciprocity is unlikely. Another possibility is that no other strategy would reciprocate cooperation, and this is difficult to determine in advance. An alternative strategy would be to defect until the other player cooperates, but this is very risky, as it could lead to indefinite mutual defection. In real life, if you greet people with a slap in the face (metaphorical or otherwise), they are unlikely to be very forgiving, and you would probably regret not having started off cooperating.

The policy of reciprocity is very robust because it is rewarding in a wide range of circumstances. TIT FOR TAT, for example, can discriminate between strategies that return its initial cooperation and those that do not; indeed, being immediately provocable is a prerequisite for a strategy to succeed. For example, if the strategy were modified to TIT FOR TWO TATS (defecting only after two consecutive defections by the opponent), an opponent that started off cooperating and then alternated between defection and cooperation would be able to exploit this generosity. Reciprocity strikes a balance between punishing and forgiving defection, and this balance may be adjusted to suit the situation. However, if more than one defection is exacted for each defection by the opponent, there is a risk of the conflict escalating, whereas a more forgiving policy risks exploitation.

A related concept, in a reality where strategies are not always as evident, is a player's reputation (embodied in the beliefs of others about the strategy that the player will use), which influences others. A reputation is typically established by observing the actions of that player when interacting with other players. For example, Britain's reputation for being provocable was certainly enhanced by its decision to retake the Falklands in response to the Argentine invasion. By contrast, the United Nations' rather clumsy operation in Kosovo recently sent conflicting signals: on the one hand the UN is prepared to intervene, but on the other it may not be properly committed or competent. Knowledge of a player's reputation provides some information even before the first move, and this has implications for both players. A clear strategy such as TIT FOR TAT is easily identifiable, so the opponent would do well to cooperate, as this leads to mutual gains. If, however, a strategy can be exploited, then informed opponents can clean up. The utter simplicity of TIT FOR TAT makes it easy to establish as a fixed pattern of behaviour, which is an effective way of making the other player adapt to cooperation; hence TIT FOR TAT cannot be drawn into a detrimental pattern of behaviour.

Although it is certainly advantageous for a player to have a firm reputation for using TIT FOR TAT, this is not the best reputation to have; the best is a reputation for being a bully. The optimal kind of bully is one who has a reputation for squeezing the most out of the other player while not tolerating any defections at all from that player. This can be done by defecting just so often that the other player only barely prefers cooperating all the time to defecting all the time, and the best way to coerce the other player into cooperation is to be known for permanent defection if provoked even once. However, such reputations are not always easy to establish. In a whites-versus-blacks situation, for example, there is a large majority of whites to support such exploitative behaviour against black people.
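The earlier point about TIT FOR TWO TATS can be checked by simulation. This is a minimal sketch with hypothetical function names, undiscounted for simplicity, that averages each player's payoff per move over 100 moves using the article's example values (E=5, R=3, P=1, S=0). The opponent cooperates first and then alternates D, C, D, C, as described in the text.

```python
E, R, P, S = 5, 3, 1, 0
PAYOFF = {
    ("C", "C"): (R, R),
    ("C", "D"): (S, E),
    ("D", "C"): (E, S),
    ("D", "D"): (P, P),
}


def tit_for_tat(mine, theirs):
    # Cooperate first, then copy the opponent's previous move.
    return theirs[-1] if theirs else "C"


def tit_for_two_tats(mine, theirs):
    # Defect only after the opponent has defected twice in a row.
    return "D" if theirs[-2:] == ["D", "D"] else "C"


def alternator(mine, theirs):
    # Cooperate on the first move, then D, C, D, C, ...
    return "C" if len(mine) % 2 == 0 else "D"


def average_payoffs(strategy_a, strategy_b, rounds=100):
    """Undiscounted average payoff per move for each player."""
    history_a, history_b = [], []
    total_a = total_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        total_a += pay_a
        total_b += pay_b
        history_a.append(move_a)
        history_b.append(move_b)
    return total_a / rounds, total_b / rounds


if __name__ == "__main__":
    for opponent in (tit_for_two_tats, tit_for_tat):
        alt, other = average_payoffs(alternator, opponent)
        print(f"alternator vs {opponent.__name__}: {alt:.2f} vs {other:.2f}")
```

Against TIT FOR TWO TATS, which never sees two defections in a row, the alternator averages 4 points a move (above R = 3) while its partner gets 1.5. Against TIT FOR TAT it manages only about 2.53, less than the 3 it would have earned by cooperating throughout.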
If this is not the case, and everyone is attempting to get the better of everyone else in the reputation stakes, then frequent defection is a prerequisite. However, as observed before, this strategy is likely to provoke other players into retaliation, leading to many unrewarding contests of will (e.g. power struggles in the mafia). One might also consider the development of status hierarchies. So, if two people, races or countries are trying to establish a tough reputation against each other, it is easy to imagine a vicious cycle of defections leading to endless mutual punishment. Indeed, this can be seen in the case of World War I. Here, all the players needed to maintain a reputation for instant defection when defected against. When Serbian nationalists, rallying for the independence of their Slav neighbours in Bosnia-Herzegovina, assassinated Archduke Franz Ferdinand, the heir to the Austro-Hungarian throne, Austria-Hungary needed to respond with clarity and force if it was to avoid a fate similar to that of the Turkish empire (which suffered wars of independence in many of its territories). In other words, the Slav people had defected and punishment was necessary, or else other states might believe that Austria-Hungary's forgiveness could be exploited by attempting to gain independence. However, if Russia, the champion of the Slav people, was to maintain her status as guarantor of Slav rights, she would have to intervene, i.e. defect against Austria-Hungary. Germany, being allied with Austria-Hungary, had to deliver an ultimatum to Russia demanding that the threat of war be removed. This brought France into the fray as well, as she was allied to Russia. Then, as the German war machine was mobilised according to the Schlieffen Plan, Belgium was invaded, and her protector, Britain, which might otherwise have remained in splendid isolation for a while longer, was drawn in to retaliate against Germany.
Hence, this very costly single defection by each country started the war, which could have been prevented (or at least delayed, since pressures were already mounting in Europe regardless of the assassination) if Austria-Hungary and the Bosnians had reached a mutual compromise, i.e. cooperated. Once the war had started, another game was played in the trenches, where soldiers at the front line were supposed to slaughter each other in a war of attrition over a few square miles of land (or less); instead, a live-and-let-live system of warfare developed, i.e. cooperation. This brings us to the question of how cooperation can develop in a hostile environment, where everyone starts off defecting by trying to kill each other. It has already been established that the discount parameter, or equivalently the expected length of the game, must be fairly high for cooperation to stand a chance, but what else is necessary? In the scenario of trench warfare, mutual restraint is preferred to mutual punishment, and unilateral restraint met with punishment would result in a worse outcome for those who showed restraint than for those who dealt out the punishment. Moreover, both sides would prefer mutual restraint to alternating bouts of serious hostility (which entail high casualties). Therefore, the inequalities that define a Prisoner's Dilemma hold in this situation. If the scenario is restricted to two entrenched units facing each other across No Man's Land, it is clear that, whatever the opponent does, shooting to kill is the dominant strategy in a single encounter. However, the same units often faced each other for extended periods of time, so cooperation had a chance to evolve. Let us appeal to mathematics. The question is whether a strategy of permanent defection can be invaded by a nice strategy such as TIT FOR TAT.

We have established that, at an individual level, reciprocity is useless if the other player intends always to defect. However, if several players start adopting a TIT FOR TAT strategy, and all players interact with each other, cooperation does stand a chance. Referring to the matrix shown in Table 1, take E=5 (exploiting the other player), R=3 (mutual cooperation), P=1 (mutual defection) and S=0 (being exploited by the other player), and let the discount parameter be 0.9. Using the formula for the sum of an infinite geometric progression whose common ratio lies between 0 and 1, in a population where the only strategy is defection each individual accrues a score of 1 + 0.9 + 0.9^2 + ... = 1/(1 - 0.9) = 10 points. If several players employing TIT FOR TAT start playing with the defectors, then against defectors they score 0 + 0.9 × 10 = 9 points (being suckered once, then defecting mutually), and against each other they score 3/(1 - 0.9) = 30 points. If TIT FOR TAT newcomers are a negligible proportion of the entire population, the high scores from mutual cooperation will be more than offset by the fact that TIT FOR TAT scores less against a defector than a defector does. If TIT FOR TAT has some proportion, p, of its interactions with other TIT FOR TAT players, it will have the remaining proportion, 1 - p, with the defectors, so its average score will be 30p + 9(1 - p). If this score is greater than 10 points, the TIT FOR TAT newcomers can successfully establish themselves: 30p + 9(1 - p) > 10 gives 21p > 1, so cooperation can evolve in this specific situation if p > 1/21. The proportion required drops substantially as the expected duration of the game increases. Furthermore, as TIT FOR TAT becomes more common, p can be expected to increase until the defectors are eliminated. Therefore, in World War I, a clear escalation of cooperation should be observed after spontaneous non-aggression appeared, once trench warfare had passed its bloody and highly mobile initial stage.
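The invasion arithmetic above can be reproduced exactly. The sketch below (function name hypothetical) computes the discounted totals with the geometric-series formula and solves the inequality in general form, p > (N - B)/(A - B), where N is the native defectors' score against each other, B is TIT FOR TAT's score against a defector and A is its score against another TIT FOR TAT player.

```python
from fractions import Fraction

E, R, P, S = 5, 3, 1, 0


def invasion_threshold(w):
    """Minimum share p of TIT FOR TAT's interactions that must be with its own kind
    for a cluster of TIT FOR TAT players to outscore natives who always defect."""
    natives = P / (1 - w)                 # ALL D vs ALL D: P on every move
    tft_vs_native = S + w * P / (1 - w)   # suckered once, then mutual defection
    tft_vs_tft = R / (1 - w)              # mutual cooperation on every move
    # p * tft_vs_tft + (1 - p) * tft_vs_native > natives  <=>  p > threshold
    return (natives - tft_vs_native) / (tft_vs_tft - tft_vs_native)


if __name__ == "__main__":
    for w in (Fraction(9, 10), Fraction(99, 100)):
        print(f"w = {w}: p must exceed {invasion_threshold(w)}")
```

For w = 9/10 this gives 1/21, matching the text; for w = 99/100 (games expected to last about ten times longer) the threshold drops to 1/201, illustrating how the required proportion falls as the expected duration grows.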
For example, a slowdown in fighting due to miserable weather, or mutual restraint shown by not bombing ration supplies, could spread to other forms of cooperation. When defections did occur, a two-for-one or three-for-one punishment was often imposed, as a firm response was necessary for TIT FOR TAT reciprocity. An inherent damping process, in the form of restraint exercised by the offending party, prevented escalating conflict, and apologies were even shouted across No Man's Land for shelling by a third party. Rotation of troops might have interrupted these relationships, but the common practices of the live-and-let-live system could easily be passed on to fresh troops. For the high commanders it was a zero-sum game, in which defeat of the enemy meant victory, but not so for those at the front line, whose lives were in the direct line of fire. Indeed, elaborate procedures, such as deliberately inaccurate shooting and shelling, were developed in order to deceive officers who did not approve of cooperation. In the end, it was the frequent raids made mandatory by high command which spelled the end of the live-and-let-live system, as random defections were made necessary, and cooperation would have led to high casualties in the event of a raid. Therefore, cooperation can emerge in the most bitter of circumstances.

The evolution of cooperation depends on the robustness, initial viability and stability of a strategy. It has already been shown that TIT FOR TAT is initially viable in a hostile environment, provided that the proportion of newcomers is great enough, and that TIT FOR TAT is very robust, as it can cope with a wide variety of situations and is discriminating. For any strategy that is the first to cooperate to be collectively stable, the discount parameter must be sufficiently large.
The reason is that for a strategy to be collectively stable it must protect itself from invasion by any challenger, including the strategy of always defecting. If the native strategy ever cooperates, a strategy of always defecting will get E for exploiting it, whereas the natives' average can be no greater than R per move. So, for the natives' average to be no less than the score of the challenging defectors, the interaction must last long enough for the gain from exploitation to be cancelled out over future moves. Furthermore, the score achieved by a strategy that arrives in a cluster is a weighted average of two components: how it does with others of its kind and how it does with the predominant strategy. Both of these components are less than or equal to the score achieved by the predominant, nice strategy. Therefore, if a nice predominant strategy cannot be invaded by a single individual, it cannot be invaded by a cluster either. So, if the shadow of the future on the present is great enough, TIT FOR TAT is collectively stable. This amounts to a ratchet mechanism in the evolution of cooperation: within the rules of the game, cooperation cannot regress to defection. These ideas about the Prisoner's Dilemma can be summarised in a very attractive way: inherently selfish individuals, who are not necessarily rational, can learn to cooperate, and the evolution of cooperation has a ratchet mechanism that prevents degeneration into undesirable mutual punishment by defection. Moreover, nice strategies such as TIT FOR TAT can invade nasty populations with surprising ease, as long as a few individuals are willing to cooperate and conditions do not change, and the nice strategy can then become dominant.
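How large is "sufficiently large"? In The Evolution of Cooperation (1984), Axelrod shows that TIT FOR TAT is collectively stable exactly when w is at least max((E - R)/(E - P), (E - R)/(R - S)), the two binding challengers being ALL D and strict alternation of D and C. The sketch below (function names ours) evaluates that bound for the example payoffs and checks it against those two challengers.

```python
from fractions import Fraction

E, R, P, S = 5, 3, 1, 0


def tft_stability_threshold(e=E, r=R, p=P, s=S):
    """Smallest w at which TIT FOR TAT is collectively stable (Axelrod's condition)."""
    return max(Fraction(e - r, e - p), Fraction(e - r, r - s))


def challenger_scores(w):
    """Discounted totals (move t weighted by w**t) when playing a TIT FOR TAT native."""
    native = R / (1 - w)                   # another TIT FOR TAT: R on every move
    always_defect = E + w * P / (1 - w)    # E once, then P for ever
    alternate = (E + S * w) / (1 - w**2)   # D, C, D, C, ...: E, S, E, S, ...
    return native, always_defect, alternate


if __name__ == "__main__":
    w_min = tft_stability_threshold()
    print("critical w:", w_min)
    for w in (w_min - Fraction(1, 10), w_min):
        native, all_d, alt = challenger_scores(w)
        invaded = max(all_d, alt) > native
        print(f"w = {w}: native {float(native):.3f}, ALL D {float(all_d):.3f}, "
              f"alternate {float(alt):.3f}, invaded: {invaded}")
```

With E=5, R=3, P=1, S=0 the critical value is 2/3. Just below it the alternating challenger already outscores the natives, which is why the bound here is set by (E - R)/(R - S) = 2/3 rather than by ALL D's (E - R)/(E - P) = 1/2.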
However, perhaps the most interesting point is that mutual cooperation is rational even in a one-off situation, since if everyone were perfectly rational, they would presumably all think in exactly the same way, and hence the outcomes would be


The New Telescope

The physics department has recently purchased a telescope. It's a Meade ETX90 and comes with a tripod, eyepieces, a photo-adapter and a number of other accessories, including a solar filter. This excellent system is highly portable and, with a minimum of training, extremely easy to use. Interested pupils will be able to make observations and take photographs in London, on school trips such as Alston, or on expeditions. The telescope gives the Physics Department the option of offering GCSE Astronomy to Upper Shell and Sixth Form pupils. The course requires several pieces of observational coursework, such as monitoring Jupiter's moons and photographing the Moon and the active regions of the Sun; the telescope is ideal for these purposes. In addition to its use by Westminster pupils, the telescope will be a valuable tool in helping to forge closer links with prep schools through the Department's CLOSPA scheme, whereby members of the Department visit a prep school to give a talk on some aspect of astronomy. It is hoped that upper school pupils (either as an option or even as a station activity) will eventually have the chance to become involved in this project by demonstrating the telescope's use and assisting younger pupils in making observations. If you are interested in taking the GCSE Astronomy option, helping with the CLOSPA scheme, or making observations and photographs, please see your physics teacher as soon as possible.

The Physicist Song

Mohammed Mostaque
Sung to the tune of "The Lumberjack Song"

Physicist:
I'm a physicist, and I'm okay,
I sleep all night and I work all day.
I do my tests, I take results,
I do them all quite happily.
On occasion they don't quite work,
Experimental errors, you see.

Chorus:
He's a physicist, and he's okay,
He sleeps all night and he works all day.
He does his tests, he takes results,
He does them all quite happily.
Even though they don't quite work,
The theory is still right, you see.

Physicist:
I'm a physicist, and I'm okay,
I derive equations all day.
Integrals and differentials
Are all easy for me.
Just give me a system,
I'll model it with glee!

Chorus:
He's a physicist, and he's okay,
He derives equations all day.
Integrals and differentials
Are all easy for him.
If you told him to model,
You'd see he isn't dim.

Physicist:
I'm a physicist, and I'm okay,
I work all night and I work all day.
I make sure all the equipment
Is all completely safe,
I'm really happy if
My lab coat doesn't chafe.

Chorus:
He's a physicist, and he's okay,
He works all night, and he works all day,
He cleans all of his equipment
And he tries to be safe.
He's only happy if
His lab coat doesn't chafe.

Physicist:
I'm a physicist, and I'm okay,
So go on, do some physics today!


2,3,7,8-tetra what?

Paola investigates the presence of dioxins within our everyday lives

"As under a green sea / He plunges at me, guttering, choking, drowning." (Wilfred Owen, "Dulce et Decorum Est"). The images we naturally associate with any word or phrase vary from one person to the next and are constantly being reshaped by our experience. For those who survived the Great War, chlorine will forever be linked to memories of friends and companions stumbling and drowning in a green sea of chlorine gas. However, the darker days of chlorine are behind us, and we now know chlorine for its more beneficial contributions to our lives. The ways in which chlorine is useful to us are numerous: as a disinfectant and purifier, as a component of pharmaceuticals and agrochemicals, and in many manufacturing processes. Cholera epidemics racked the cities of Victorian Britain, killing thousands. In this country, at least, we no longer need to worry about the threat of cholera, as our water is purified with chlorine, whereas in many less developed nations cholera is still a very real danger because they do not have the resources to treat their water properly. A wide range of ordinary and some not-so-ordinary goods rely on chlorine: our paper is bleached using chlorine, and silicon chips, bullet-proof vests and swimming pool disinfectants all rely on it too. To meet this demand, Western European chlorine manufacturers alone produce more than 9 million tonnes of chlorine every year. Of this tremendous volume, a third is recycled within the production plants, mostly as hydrochloric acid. Roughly two thirds of Europe's entire chemical production depends, directly or indirectly, on chlorine, and the value of the chlorine industry alone is an estimated EUR 230,000 million per annum.
However, the recent past of chlorine has not always been so good, CFCs being one example, and new environmental issues concerning the products and manufacture of chlorine are almost constantly being brought to the public's eyes, ears and any other sensory organ that the media can reach. The latest scare is dioxins. Dioxins are a group of compounds formed in the presence of carbon, oxygen, hydrogen, chlorine and large amounts of heat, so they are mainly found as undesirable by-products of the chlor-alkali industry and of various combustion and other industrial processes, although some are also known to occur naturally. The word "dioxin" has come to mean just one member of the dioxin family, 2,3,7,8-tetrachlorodibenzo-para-dioxin (2,3,7,8-TCDD). This dioxin is formed in particular during the synthesis of 2,4,5-trichlorophenol (and of other useful compounds); 2,4,5-trichlorophenol is in turn used in the manufacture of the herbicide 2,4,5-trichlorophenoxyacetic acid (used by the U.S. Army in Vietnam in the defoliant Agent Orange) and of hexachlorophene, an antibacterial agent formerly used in soaps and deodorants. A very small amount of dioxins is also produced intentionally for research purposes. The structure of dioxins, or more accurately dibenzo-p-dioxins, is as follows: two benzene rings connected by two oxygen atoms. In total the two benzene rings have twelve carbons between them, four of which are bonded to the pair of oxygen atoms. The remaining carbons can form bonds to hydrogen or to atoms of other elements such as chlorine. By convention, these remaining carbons are numbered as follows: those in the first benzene ring are labelled one to four, and those in the second ring six to nine (positions five and ten being the oxygen atoms). The more toxic dioxins have chlorine atoms bonded at these positions.
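The numbering convention above also shows why "dioxin" covers a whole family. The sketch below, an illustration of our own, treats positions 1 to 4 and 6 to 9 as the only places a chlorine can sit and counts distinct substitution patterns, treating two patterns as the same compound when a symmetry of the flat molecule (flipping it end to end or side to side, or rotating it by 180 degrees) maps one onto the other. It reproduces the commonly quoted total of 75 chlorinated dibenzo-p-dioxins.

```python
from itertools import combinations

# Carbons that can carry a substituent; 5 and 10 are the oxygen atoms.
POSITIONS = (1, 2, 3, 4, 6, 7, 8, 9)

# Symmetries of the planar dibenzo-p-dioxin skeleton as permutations of positions.
SYMMETRIES = (
    {1: 1, 2: 2, 3: 3, 4: 4, 6: 6, 7: 7, 8: 8, 9: 9},  # identity
    {1: 4, 2: 3, 3: 2, 4: 1, 6: 9, 7: 8, 8: 7, 9: 6},  # mirror swapping the O5 and O10 sides
    {1: 9, 2: 8, 3: 7, 4: 6, 6: 4, 7: 3, 8: 2, 9: 1},  # mirror swapping the two benzene rings
    {1: 6, 2: 7, 3: 8, 4: 9, 6: 1, 7: 2, 8: 3, 9: 4},  # 180-degree rotation
)


def canonical(pattern):
    """A label shared by all symmetry-equivalent chlorine patterns."""
    return min(tuple(sorted(sym[pos] for pos in pattern)) for sym in SYMMETRIES)


def congeners_by_chlorine_count():
    """Number of distinct chlorinated dioxins with 1 to 8 chlorine atoms."""
    return {
        n: len({canonical(pattern) for pattern in combinations(POSITIONS, n)})
        for n in range(1, 9)
    }


if __name__ == "__main__":
    counts = congeners_by_chlorine_count()
    print(counts)                   # isomers for each number of chlorine atoms
    print(sum(counts.values()))     # total chlorinated dibenzo-p-dioxins
    print(canonical((2, 3, 7, 8)))  # the 2,3,7,8 pattern is its own label
```

The counts come out as 2, 10, 14, 22, 14, 10, 2 and 1 for one to eight chlorines. Of the 22 tetrachloro isomers, 2,3,7,8-TCDD is the one the article singles out; its pattern is unchanged by every symmetry, which is why canonical((2, 3, 7, 8)) returns it as is.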
Dioxins are not readily soluble in water; however, they are highly lipophilic, that is, they are extremely soluble in fats and other fat-like organic materials. Pure dioxins are colourless, odourless solids with high melting and boiling points, and they evaporate slowly. 2,3,7,8-TCDD is the best known and most studied of all the dioxins; it is extremely stable and is also recognised as the most toxic. It is insoluble in water and in most other organic substances but is soluble in oils. This means that dioxin in soil resists being diluted by rainwater, and these properties cause dioxin to seek out and accumulate in the fatty tissues of any body it enters. The toxicity of TCDD is thought to result from its ability to bind to a particular type of receptor protein found in some of the body's cells. The TCDD-receptor complex formed by this binding can enter the cell nucleus and bind to DNA, which adversely affects the cell's ability to produce proteins.

To reiterate, dioxins are by-products of many combustion processes and of the chlor-alkali and related industries. Hospital and other waste incinerators, wood fires, power plants and motor vehicles all release dioxins every day. Once airborne, these dioxins can settle on farmland and, because of their chemical properties, make their way into the food chain as well as into our waterways. Very recently an advertisement was published in the New York Times highlighting the alarm that dioxins have caused in the United States, whereas here the issue is very much in the background, as we seem to be far more concerned with other matters. Entitled "Guess What You Had For Breakfast?", it draws the public's attention to the effects of dioxin exposure on humans and to some of the sources of our dioxin consumption: "Dioxin is now pervasive in fish, beef, milk, poultry, pork and eggs. Infants get dioxin in breast milk. Dioxin is a known cause of cancer." The advertisement's text goes on to list further harmful effects of exposure to dioxins: learning disabilities, birth defects, endometriosis and diabetes.
Dioxin weakens the human immune system and decreases the level of the male hormone testosterone. There is great confusion in the U.S. over the dioxin issue, and allegations abound, much like the current situation here with GM foods; this advertisement is a prime example of the panic and alarm that a poorly informed press can cause. In fact, the effects on humans of exposure to, and ingestion of, dioxins are much less serious than the media would have us believe. The main source of the confusion was a report released in 1994 by the U.S. Environmental Protection Agency (USEPA). The report had not been properly, accurately or scientifically researched: it rested on many unproved assumptions and untested hypotheses, and such evidence as it gave for the effects of dioxins on humans was extrapolated from tests on guinea pigs exposed to enormous doses of 2,3,7,8-TCDD, the most toxic of all the dioxins. The most reliable information on the effects of dioxins on humans comes from studies of communities exposed to very large amounts of dioxins over long periods, through industrial accidents and the like, and of workers who work in the presence of dioxins day to day. The findings of such studies differ greatly from those published in 1994 by the USEPA. Cancer was not found to be much more common in these groups than in others, and some dioxins have even been found to inhibit the growth of breast tumours. None of the other effects was documented as a serious problem in these groups; most often, the worst effect on people exposed to such amounts of dioxins every day was found to be chloracne, a skin disease very similar to acne.
These were the findings of studies of people exposed to thousands of times more dioxin than the average person. There is nevertheless great concern about the amount of dioxin to which we are exposed every day, as the New York Times advertisement illustrates, and this has prompted the World Health Organization (WHO) to set what it considers an acceptable level of exposure. WHO recently published a value of one to four picogrammes per kilogram of body weight per day as the acceptable daily intake of dioxins. The amount of dioxins to which we are actually exposed is roughly half of that value. As usual, the media and many influential yet ill-informed members of the public have blamed the chemical industry for the pollution. Yet sources such as the European dioxin inventories have shown that the chemical industry is only a very minor contributor to the levels of dioxins in the environment, less than 1% in fact, and some sectors of the industry, such as pulp and paper, have practically eliminated dioxins from their waste. It is not chlorine and its related industries that lie at the heart of the issue, as environmentalists would have us all believe, but combustion processes of all kinds, including natural ones, that are the chief contributors to dioxin contamination. Because of their chemical properties, dioxins are indeed quite dangerous should levels ever become high enough (they have dropped considerably over the past thirty years). However, we should take what is published with a pinch of salt, as inappropriately tested theories
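To put the WHO figure in concrete terms, the short sketch below converts the per-kilogram range into a total daily amount for one person: it simply multiplies body mass by the lower and upper limits quoted above. The 70 kg body mass and the function name are illustrative assumptions, not figures or terms from the article.

```python
def tolerable_daily_intake_pg(body_mass_kg, low=1.0, high=4.0):
    """Return the (low, high) acceptable daily dioxin intake in picograms.

    `low` and `high` default to the WHO range quoted in the article,
    in picograms per kilogram of body weight per day.
    The function name is hypothetical, for illustration only.
    """
    if body_mass_kg <= 0:
        raise ValueError("body mass must be positive")
    return body_mass_kg * low, body_mass_kg * high


# Assumed 70 kg adult (an illustrative value, not from the article).
low_pg, high_pg = tolerable_daily_intake_pg(70)
print(f"{low_pg:.0f} to {high_pg:.0f} pg per day")  # prints "70 to 280 pg per day"
```

Even the upper figure, 280 pg, is less than a billionth of a gram per day, which shows how small the quantities under discussion are.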

Physics Phun

Valerie Diederichs provides a short insight into the physics trips in the sixth form

When someone mentions the words "school trip", everyone immediately imagines standing in a pond, scooping up insects and counting how many water beetles they can find. However, the physics department has taken the sixth form on many trips, with not a pond or traffic survey in sight. The first was a day at the conference hall in Russell Square, where we enjoyed a host of lectures on contemporary subjects. Having filed into the large auditorium alongside hundreds of other A-level physics students, we listened to a lecture on mobile phones. It led us through their history, how they work and how they communicate with the network on which they are registered. Since mobile phones are an integral part of many teenagers' lives, the lecture held the audience's attention; everyone was eager to learn the finer points of how we actually stay in touch. Next came a lecture on the different forms of night vision. The lecturer, who worked with DERA, explained techniques such as infrared vision and light intensification; heat-based techniques were also mentioned. The advantages of each method were illustrated with fascinating slides and incredible images, and its drawbacks were shown just as vividly. The lectures took place just before the end of the Play term, so the next one struck a lighter and very topical note under the title "The Physics of Christmas". The lecturer explained that it was in fact possible for Father Christmas to exist, to ride in a sledge pulled by reindeer and to deliver all his presents in one evening. The audience roared with laughter while also being genuinely surprised by the implications. Then came a lunch break.
Returning refreshed from the break, we were once again amused by the title of the next lecture, "The Physics of Sex". It described the discovery of the first biological wheel, which had been found on a sperm, and showed how remarkably efficient the sperm's swimming technique is. A more conventional lecture followed, on biodegradable plastics and the scam behind most of those currently available, and it captivated the audience. The lecturer had been part of a research team that studied existing biodegradable plastics and found that they break down only into long polymer strands rather than into their constituent monomers, and that these strands are still harmful to the environment. The team then set out to develop a plastic that would genuinely break down into its monomers. They succeeded, and the lecture climaxed with the lecturer swallowing a spoonful of the new plastic to prove that it was completely harmless. The final lecture of the day was on chaos theory and compound pendulums. The lecturer explained how unpredictable pendulums become when two or more are attached end-to-end and allowed to swing. After a demonstration, the lecture again climaxed when the lecturer, helped by a volunteer from the audience, set a new world record for the most pendulums balanced end-to-end vertically upright. All in all it was an educational day: everyone left satisfied that they had learnt more about the physics of everyday life, and many were astonished at how much physics affects our daily lives. The next sixth-form physics trip was to the Rutherford-Appleton Laboratory in Oxfordshire. On arrival we were led to a large room filled with a host of displays, where we were given an introduction before being split into smaller groups and taken around the particle accelerator.
Within these groups we were told how the particle accelerator works, why it was built and a little of its history. Many cells lead off the main chute in which the particles are accelerated, and experiments are carried out in these cells. The cells can be rented for anything from a couple of days to several months and are used by many different groups, from university students to global companies.

We then visited two specific cells. In the first, research was being conducted on superconductors, and some of their properties at low temperatures were demonstrated as a small magnet hovered above a superconductor. We were then led into a cell that was still under construction, where we could see the incredible technology involved in such research. After lunch we had a choice between a talk on lasers and a talk on space technology; I chose space technology. This lecture was given by two scientists from STEP, an international collaboration in fundamental physics and a joint US-European project investigating gravity. They explained how the distances from the Earth to different stars are measured, covering the different techniques and what can be learnt when several readings are taken, and also how the masses of stars can be calculated. This was followed by a talk on materials, specifically composite materials, explaining how their properties combine and the molecular science behind those properties. Next came a tour of a laboratory where materials are tested. We were shown how a balloon can be blown up by putting solid carbon dioxide inside it: as the solid warmed and sublimed, the balloon expanded and eventually burst. The properties of different gases were likewise demonstrated by putting them into balloons and observing their behaviour. A large machine was then used to apply a tension of up to one tonne-force (about 9.8 kN) to an aluminium rod 12 mm in diameter. The tour of the laboratory ended with a look at different forms of carbon composite and an explanation of their different properties. Our latest physics adventure has been to two power stations in Didcot, near Oxford. The first we visited was Didcot A, a large, traditional coal-fired power station.
We began the day with an introduction to the power station and an explanation of the various stages of power production. At several stages in generating electricity from coal, waste products are formed. We were able to see these waste products and were told how they are processed into more useful forms, such as insulating blocks for building. We were then split into smaller groups of roughly five people, each accompanied around the site by a guide. We saw key features such as the control room, which was originally built in the 1960s and is now being modernised. As this refurbishment proceeds, the number of staff needed to run the power station falls dramatically; when we were there, only four staff were in the control room. The power station was very impressive, and the sheer scale of the equipment needed to produce enough electricity was astonishing. In the afternoon we visited JET, the Joint European Torus, a research project into the possibility of generating electricity from nuclear fusion. It is a tokamak reactor, as shown in the diagram. Its plasma is an ionised gas, produced by heating an ordinary gas of neutral atoms beyond the temperature at which electrons are knocked out of the atoms, so that it consists of free negative electrons and positive nuclei. Nuclei in the plasma can then undergo fusion, releasing energy. In smaller groups we saw a full-scale model of the Torus and were told about the different stages of development it has undergone. Finally we were allowed into the control room, where we were able to witness a test. The test can be followed because, as the gas becomes a plasma, it emits a lilac haze; sensors in the ring detect this and transmit the image to screens in the control room.
It was a thoroughly enjoyable day, in which we were able to see the past, present and future of electricity production. Visiting JET also helped to settle some of the safety concerns associated with nuclear power and allowed us to see nuclear fusion fuels as a real and potentially inexhaustible source of electricity to meet our daily needs.
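To give a sense of the load in the tensile test at the Rutherford-Appleton Laboratory, the sketch below estimates the stress in the rod from stress = force / cross-sectional area. It assumes the full one-tonne load acts along the axis of a solid round rod 12 mm in diameter and takes g = 9.81 m/s²; the function name is hypothetical, for illustration only.

```python
import math

G = 9.81  # gravitational field strength in m/s^2 (assumed rounded value)


def axial_stress_pa(load_mass_kg, diameter_m):
    """Tensile stress in pascals for a solid round rod under an axial load.

    The load is given as the mass whose weight it equals (e.g. 1000 kg for
    one tonne-force). Hypothetical helper, for illustration only.
    """
    force_n = load_mass_kg * G                 # one tonne-force is about 9.8 kN
    area_m2 = math.pi * (diameter_m / 2) ** 2  # cross-sectional area of the rod
    return force_n / area_m2


stress = axial_stress_pa(1000, 0.012)
print(f"{stress / 1e6:.1f} MPa")  # prints "86.7 MPa"
```

On these assumptions the rod carries a stress of roughly 87 MPa; whether that is enough to make it yield depends on the particular aluminium alloy, which is not stated.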

Cladistics, Evolution, and the Death of the Ladder

Patrick Mellor reviews our existing views of evolution