The Rank of a Matrix

Francis J. Narcowich Department of Mathematics Texas A&M University January 2005

Rank and Solutions to Linear Systems

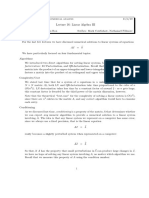

The rank of a matrix $A$ is the number of leading entries in a row-reduced form $R$ for $A$. This also equals the number of nonzero rows in $R$. For any system with $A$ as its coefficient matrix, $\operatorname{rank}[A]$ is the number of leading variables.

Now, two systems of equations are equivalent if they have exactly the same solution set. When we discussed the row-reduction algorithm, we also mentioned that row-equivalent augmented matrices correspond to equivalent systems:

Theorem 1.1 If $[A \mid b]$ and $[A' \mid b']$ are augmented matrices for two linear systems of equations, and if $[A \mid b]$ and $[A' \mid b']$ are row equivalent, then the corresponding linear systems are equivalent.

By examining the possible row-reduced matrices corresponding to the augmented matrix, one can use Theorem 1.1 to obtain the following result, which we state without proof.

Theorem 1.2 Consider the system $Ax = b$, with coefficient matrix $A$ and augmented matrix $[A \mid b]$. As above, the sizes of $b$, $A$, and $[A \mid b]$ are $m \times 1$, $m \times n$, and $m \times (n+1)$, respectively; in addition, the number of unknowns is $n$. Below, we summarize the possibilities for solving the system.

i. $Ax = b$ is inconsistent (i.e., no solution exists) if and only if $\operatorname{rank}[A] < \operatorname{rank}[A \mid b]$.

ii. $Ax = b$ has a unique solution if and only if $\operatorname{rank}[A] = \operatorname{rank}[A \mid b] = n$.

iii. $Ax = b$ has infinitely many solutions if and only if $\operatorname{rank}[A] = \operatorname{rank}[A \mid b] < n$.

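To illustrate this theorem, let's look at the simple systems below.

$$
\begin{aligned} x_1 + 2x_2 &= 1\\ 3x_1 + x_2 &= -2 \end{aligned}
\qquad\qquad
\begin{aligned} 3x_1 + 2x_2 &= 3\\ -6x_1 - 4x_2 &= 0 \end{aligned}
\qquad\qquad
\begin{aligned} 3x_1 + 2x_2 &= 3\\ -6x_1 - 4x_2 &= -6 \end{aligned}
$$

The augmented matrices for these systems are, respectively,

$$
\left(\begin{array}{cc|c} 1 & 2 & 1\\ 3 & 1 & -2 \end{array}\right),
\qquad
\left(\begin{array}{cc|c} 3 & 2 & 3\\ -6 & -4 & 0 \end{array}\right),
\qquad
\left(\begin{array}{cc|c} 3 & 2 & 3\\ -6 & -4 & -6 \end{array}\right).
$$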

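Applying the row-reduction algorithm yields the row-reduced form of each of these augmented matrices. The results are, again respectively,

$$
\left(\begin{array}{cc|c} 1 & 0 & -1\\ 0 & 1 & 1 \end{array}\right),
\qquad
\left(\begin{array}{cc|c} 1 & \tfrac{2}{3} & 0\\ 0 & 0 & 1 \end{array}\right),
\qquad
\left(\begin{array}{cc|c} 1 & \tfrac{2}{3} & 1\\ 0 & 0 & 0 \end{array}\right).
$$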

From each of these row-reduced versions of the augmented matrices, one can read off the rank of the coefficient matrix as well as the rank of the augmented matrix. Applying Theorem 1.2 to each of these tells us the number of solutions to expect for each of the corresponding systems. We summarize our findings in the table below.

| System | First | Second | Third |
|---|---|---|---|
| $\operatorname{rank}[A]$ | 2 | 1 | 1 |
| $\operatorname{rank}[A \mid b]$ | 2 | 2 | 1 |
| $n$ | 2 | 2 | 2 |
| # of solutions | 1 | 0 (inconsistent) | $\infty$ |
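The rank comparison in Theorem 1.2 can be checked mechanically. The sketch below is only an illustration, not part of the original notes: the helper names `rank` and `classify` are hypothetical, the elimination uses exact fractions from the Python standard library, and the minus signs in the three systems are taken to be those consistent with the ranks in the table.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (list of rows), by forward elimination with exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows = len(m)
    n_cols = len(m[0]) if m else 0
    r = 0  # number of leading entries (pivots) found so far
    for col in range(n_cols):
        # Look for a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, n_rows) if m[i][col] != 0), None)
        if pivot is None:
            continue  # no leading entry in this column
        m[r], m[pivot] = m[pivot], m[r]
        # Clear the entries below the pivot.
        for i in range(r + 1, n_rows):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == n_rows:
            break
    return r


def classify(A, b):
    """Apply Theorem 1.2 by comparing rank[A], rank[A|b], and n."""
    n = len(A[0])
    augmented = [row + [bi] for row, bi in zip(A, b)]
    rank_A, rank_Ab = rank(A), rank(augmented)
    if rank_A < rank_Ab:
        return "inconsistent"
    return "unique" if rank_A == n else "infinitely many"


# The three example systems (signs are an assumption, see the note above).
systems = {
    "first": ([[1, 2], [3, 1]], [1, -2]),
    "second": ([[3, 2], [-6, -4]], [3, 0]),
    "third": ([[3, 2], [-6, -4]], [3, -6]),
}
results = {name: classify(A, b) for name, (A, b) in systems.items()}
print(results)
```

Only the number of pivots matters for the rank, so the sketch stops at an echelon form rather than carrying the reduction all the way to the row-reduced form.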

Homogeneous systems. A homogeneous system is one in which the vector $b = 0$. By simply plugging $x = 0$ into the equation $Ax = 0$, we see that every homogeneous system has at least one solution, the trivial solution $x = 0$. Are there any others? Theorem 1.2 provides the answer.

Corollary 1.3 Let $A$ be an $m \times n$ matrix. A homogeneous system of equations $Ax = 0$ will have a unique solution, the trivial solution $x = 0$, if and only if $\operatorname{rank}[A] = n$. In all other cases, it will have infinitely many solutions. As a consequence, if $n > m$, i.e., if the number of unknowns is larger than the number of equations, then the system will have infinitely many solutions.

Proof: Since $x = 0$ is always a solution, case (i) of Theorem 1.2 is eliminated as a possibility. Therefore, we must always have $\operatorname{rank}[A] = \operatorname{rank}[A \mid 0] \le n$. By Theorem 1.2, case (ii), equality will hold if and only if $x = 0$ is the only solution. When it does not hold, we are always in case (iii) of Theorem 1.2; there are thus infinitely many solutions for the system. If $n > m$, then we need only note that $\operatorname{rank}[A] \le m < n$ to see that the system has to have infinitely many solutions. □
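As a small illustration of the case $n > m$, consider two equations in three unknowns. This system is a hypothetical example chosen for this note and does not come from the original text.

```latex
\[
\begin{aligned}
x_1 + x_2 + x_3 &= 0\\
x_2 - x_3 &= 0
\end{aligned}
\qquad
A = \begin{pmatrix} 1 & 1 & 1\\ 0 & 1 & -1 \end{pmatrix}
\;\longrightarrow\;
R = \begin{pmatrix} 1 & 0 & 2\\ 0 & 1 & -1 \end{pmatrix}
\]
% rank[A] = 2 <= m = 2 < n = 3, so x_3 = t is a free variable:
\[
x_1 = -2t, \qquad x_2 = t, \qquad x_3 = t .
\]
```

Every value of the parameter $t$ gives a solution, and $t = 0$ recovers the trivial one, in line with Corollary 1.3.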

Linear Independence and Dependence

A set of $k$ vectors $\{u_1, u_2, \ldots, u_k\}$ is linearly independent (LI) if the equation

$$
\sum_{j=1}^{k} c_j u_j = 0,
$$

where the $c_j$'s are scalars, has only $c_1 = c_2 = \cdots = c_k = 0$ as a solution. Otherwise, the vectors are linearly dependent (LD). Let's assume the vectors are all $m \times 1$ column vectors. If they are rows, just transpose them. Now, use the basic matrix trick (BMT) to put the equation in matrix form:

$$
Ac = \sum_{j=1}^{k} c_j u_j = 0, \quad \text{where } A = [u_1\ u_2\ \cdots\ u_k] \text{ and } c = (c_1\ c_2\ \cdots\ c_k)^T.
$$

The question of whether the vectors are LI or LD is now a question of whether the homogeneous system $Ac = 0$ has a nontrivial solution. Combining this observation with Corollary 1.3 gives us a handy way to check for LI/LD by finding the rank of a matrix.

Corollary 2.1 A set of $k$ column vectors $\{u_1, u_2, \ldots, u_k\}$ is linearly independent if and only if the associated matrix $A = [u_1\ u_2\ \cdots\ u_k]$ has $\operatorname{rank}[A] = k$.

Example 2.2 Determine whether the vectors below are linearly independent or linearly dependent.

$$
u_1 = \begin{pmatrix} 1\\ -1\\ 2\\ 1 \end{pmatrix}, \quad
u_2 = \begin{pmatrix} 2\\ 1\\ 1\\ -1 \end{pmatrix}, \quad
u_3 = \begin{pmatrix} 0\\ 1\\ -1\\ -1 \end{pmatrix}.
$$

Solution. Form the associated matrix $A$ and perform row reduction on it:

$$
A = \begin{pmatrix} 1 & 2 & 0\\ -1 & 1 & 1\\ 2 & 1 & -1\\ 1 & -1 & -1 \end{pmatrix}
\;\longrightarrow\;
\begin{pmatrix} 1 & 0 & -\tfrac{2}{3}\\ 0 & 1 & \tfrac{1}{3}\\ 0 & 0 & 0\\ 0 & 0 & 0 \end{pmatrix}.
$$

The rank of $A$ is $2 < k = 3$, so the vectors are LD. The row-reduced form of $A$ gives us additional information; namely, we can read off the $c_j$'s for which $\sum_{j=1}^{k} c_j u_j = 0$. We have $c_1 = \tfrac{2}{3}t$, $c_2 = -\tfrac{1}{3}t$, and $c_3 = t$. As usual, $t$ is a parameter. ◇
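The rank test of Corollary 2.1 and the dependency relation of Example 2.2 can be reproduced with a short script. This is a minimal sketch under stated assumptions: the helper `rref` is a hypothetical name, the arithmetic uses exact fractions from the Python standard library, and the signs of the vector entries are taken to be those consistent with the stated rank and coefficients.

```python
from fractions import Fraction


def rref(rows):
    """Return (row-reduced matrix, list of pivot column indices), using exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the row that receives the next leading entry
    for col in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue  # free column, no leading entry here
        m[r], m[pivot] = m[pivot], m[r]
        lead = m[r][col]
        m[r] = [a / lead for a in m[r]]  # scale so the leading entry is 1
        for i in range(len(m)):
            if i != r and m[i][col] != 0:
                factor = m[i][col]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(col)
        r += 1
        if r == len(m):
            break
    return m, pivots


# Vectors of Example 2.2 (signs are an assumption, see the note above).
u1, u2, u3 = [1, -1, 2, 1], [2, 1, 1, -1], [0, 1, -1, -1]
A = [list(row) for row in zip(u1, u2, u3)]  # the vectors are the columns of A

R, pivots = rref(A)
independent = len(pivots) == 3  # Corollary 2.1: LI if and only if rank[A] = k

# In this example columns 0 and 1 are pivot columns and column 2 is free,
# so setting c3 = t = 1 gives c1 and c2 from the first two rows of R.
c = [-R[0][2], -R[1][2], Fraction(1)]
combo = [sum(cj * uj[i] for cj, uj in zip(c, (u1, u2, u3))) for i in range(4)]
print(independent, c, combo)
```

The way `c` is read off is specific to this example, where the only free column is the last one; a general routine would loop over all free columns.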
