Bandwidth Management in

Radware's APSolute OS Architecture

North America

Radware Inc. 575 Corporate Dr Suite 205 Mahwah, NJ 07430 Tel 888 234 5763

International

Radware Ltd. 22 Raoul Wallenberg St Tel Aviv 69710, Israel Tel 972 3 766 8666

www.radware.com

Bandwidth Management White Paper

January 2005

Introduction

Radware's APSolute OS architecture is made up of five modules, each with its own rich set of features: Traffic Redirection, Health Monitoring, Bandwidth Management, Application Security and DoS Shield.

The Traffic Redirection module is responsible for the actual load balancing of user sessions among the available resources, whether application servers, content servers, security servers (IDS servers, anti-virus servers, URL filters) or ISP links. The Health Monitoring module is responsible for ensuring that the resources being managed are available, healthy, and capable of handling user traffic. These two modules incorporate the traditional feature sets of Radware products. For a complete APSolute OS implementation, the Bandwidth Management, Application Security and DoS Shield modules can be added to the Traffic Redirection and Health Monitoring modules.

The Bandwidth Management module includes a feature set that gives administrators full control over their available bandwidth. Using these features, applications can be prioritized according to a wide array of criteria, while taking the bandwidth used by each application into account. As sessions are prioritized, bandwidth thresholds can be configured to guarantee a minimum bandwidth for a certain application, to keep it below a predetermined bandwidth limit, or both.

The Application Security module includes a feature set that enables Radware's products to protect sensitive network resources against security risks. The module includes advanced security measures such as server overload protection and the ability to hide resources from the general Internet population. It also detects and prevents attacks matching over 1,500 malicious attack signatures, including trojan, backdoor, DoS and DDoS attacks.

The purpose of this white paper is to discuss the Bandwidth Management module in Radware's APSolute OS architecture. First, some basic concepts are discussed, followed by a full discussion of Radware's implementation. This document reflects version 10.20 of the Bandwidth Management module. Although future enhancements to the module will be made, the underlying fundamentals will remain the same as those discussed here.

Note: In order to benefit from the full feature set of the Bandwidth Management module, the module has to be activated with a BWM and IPS Activation Key.

What is Bandwidth Management?

Bandwidth management, in general, is a simple concept. The idea is to differentiate or classify traffic according to a wide array of criteria and then assign a priority to each classified packet or session. For example, bandwidth management allows an administrator to give HTTP traffic a higher priority than SMTP traffic, which in turn may have a higher priority than FTP traffic. At the same time, a bandwidth management solution can track the actual bandwidth used by each application, limit how much bandwidth each classified traffic pattern can use and, in addition, set the guaranteed bandwidth for each application.

There are a variety of methods for enforcing the bandwidth management policies configured by an administrator. The simplest method is to discard packets when certain thresholds are reached or when certain pre-allocated session buffers are overflowing. More complex mechanisms include TCP rate shaping and priority-based queuing.

TCP rate shaping uses the inherent flow control mechanisms of the TCP protocol. By adjusting parameters in the packets' TCP headers, a bandwidth management solution can signal the end nodes to throttle the rate at which packets are transmitted. Needless to say, the mechanism only works with TCP sessions. TCP rate shaping also has some uncertainty associated with it, as the amount of bandwidth allotted to a session can rarely be enforced exactly. Rate shaping also does not work well with protocols that use short-lived sessions (such as HTTP), since such sessions usually end before the bandwidth manager has decided how to shape them.

Priority-based queuing is a mechanism by which all classified packets are placed in packet queues, each with its own preset priority. A number of queues are available, and when it comes to traffic forwarding, packets are forwarded from the higher priority queues first. This is an oversimplified version of what really happens, but it presents the general concept. Various algorithms and safety measures should be deployed to ensure methodical packet forwarding as well as protection against starvation, where lower priority packets wait in queues for intolerably long amounts of time.

Radware's Bandwidth Management solution uses priority queues as the fundamental framework behind its operation. The remainder of this document concentrates on the details of Radware's implementation.

General Overview of Radware's Implementation

Although the general concept of bandwidth management is simple, the complexity lies within the implementation and the intricacies therein. To present Radware's bandwidth management solution, it is best to start with general concepts and analyze the basic logical flow of packets and sessions as they go through the bandwidth management system. The following diagram describes the general components and tasks that make up Radware's bandwidth management mechanism:

[Figure: general components of Radware's bandwidth management mechanism]

There are four major components in the system: the classifier, the queues, the scheduler and the policy database. The packet first flows into the system through the classifier. It is the classifier's duty to decide what to do with the packet. A very comprehensive set of user-configurable policies, which make up the policy database, controls how the classifier identifies each packet and what it does with each packet. The policy database is discussed in greater detail later in this document.

When the classifier sees the packet, it can do one of three things:
• Discard the packet: This allows the classifier to provide a very robust and granular packet filtering mechanism.
• Forward the packet in real time: This means that the packet bypasses the entire bandwidth management system and is immediately forwarded by the device. The end result is effectively the same as if bandwidth management were not enabled at all.
• Prioritize the packet: This allows the mechanism to provide actual bandwidth management services.

How the classifier treats the packet is governed by the policy that best matches the packet. After the classifier prioritizes the packet, it places it into a queue that carries a priority from 0 to 7, with 0 being the highest priority and 7 the lowest. Each policy gets its own queue, so the number of queues is equal to the number of policies in the policy database, but each queue is labeled with one of the 8 priorities. This means that there could be 100 queues (if there are 100 policies), with each queue having a label from 0 to 7.

Finally, it is the job of the scheduler to take packets from the many queues and forward them. The scheduler operates through one of two algorithms: WFQ (Weighted Fair Queuing) and CBQ (Class Based Queuing).

With the WFQ algorithm, the scheduler gives each priority a preference ratio of 2:1 over the immediately adjacent lower priority. In other words, a 0 queue has twice the priority of a 1 queue, which has twice the priority of a 2 queue, and so on. The general flow of the cyclic algorithm can be presented as the following chain of packets: 0, 0, 1, 0, 0, 1, 2, 0, 0, 1, 0, 0, 1, 2, 3, and so on. Note that two packets with priority 0 are forwarded before a packet with priority 1, two packets with priority 1 are forwarded before a packet with priority 2, and so on. Remember that each policy has its own queue, which means there could be many queues with a priority of 0. The scheduler cycles systematically through the queues of the same priority when it is time to forward a packet with that priority.

The CBQ algorithm has the same packet-forwarding pattern as the WFQ algorithm, with one significant difference: the CBQ algorithm is aware of a predefined bandwidth configured per policy. Recall that each policy has its own queue. As policies are configured, they can be given a minimum (guaranteed) allotted bandwidth, in Kbps (this is discussed in detail later).

Bandwidth borrowing builds on these per-policy bandwidth values. If the scheduler is operating through the CBQ algorithm, it forwards packets from queues while considering the minimum (guaranteed) bandwidth configured by each queue's policy. If borrowing is enabled and the scheduler visits a queue whose bandwidth has been exceeded (or is about to be exceeded), the scheduler checks whether any other policy has leftover bandwidth. If such a policy is found, bandwidth is borrowed from that policy and allocated to the policy whose bandwidth limit is about to be violated. This allows a scheduling scheme where available bandwidth can be used from other queues if the queue in question has exceeded its configured limit.

If a borrowing limit is set for a certain policy, the bandwidth limit is examined each time the policy's queue is visited for packet forwarding. If forwarding the packet from the queue would violate the limit configured within the policy, the scheduler skips this packet and chooses another packet from another queue of the same priority. This way, the classifier can govern the scheduler and not allow certain applications to go over a predefined bandwidth allotment.

Bandwidth Management Operating Modes

Now that the general concepts of the system have been discussed, it is time to delve into the details of the bandwidth management operating mechanism. Bandwidth management offers several operating options to allow for a large range of applications. Each of the following parameters can be configured by an administrator in order to best match the needs of the network.

Classification Mode

This parameter determines whether the bandwidth management mechanism is enabled or disabled. When enabled, the classifier and the policy database see packets as they flow through the device. When disabled, the bandwidth management mechanism is inactive and does not operate. Note: Changing the status of classification mode requires a device reset.

When classification is enabled, this parameter also defines the type of classification to be used. The available values are:

1. Disabled: The Bandwidth Management module is disabled.
2. Policies: The device classifies each packet by matching it to policies configured by the user. The policies can use various parameters, such as source and destination IP addresses, application, and so on. If required, the DSCP field in the packets can be marked according to the policy the packet matches.
3. Diffserv: The device classifies packets only by the DSCP (Differentiated Services Code Point) value.
4. ToS: The device classifies packets only by the ToS (Type of Service) bit value.
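To illustrate the difference between the Diffserv and ToS modes, the following minimal Python sketch reads both values from the second byte of an IPv4 header. It assumes the standard header layout, in which the DSCP is the upper six bits of that byte and the IP precedence is the upper three bits, and it assumes that the ToS mode refers to the precedence bits. The function names are illustrative only and are not part of the Radware configuration.

```python
def dscp_of(tos_byte: int) -> int:
    """Return the 6-bit DSCP carried in the IPv4 ToS/DS byte."""
    return (tos_byte & 0xFF) >> 2


def precedence_of(tos_byte: int) -> int:
    """Return the 3-bit IP precedence carried in the same byte."""
    return (tos_byte & 0xFF) >> 5


# 0xB8 is the byte value that carries the Expedited Forwarding code point.
print(dscp_of(0xB8), precedence_of(0xB8))
```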

Application Classification

This option integrates the general bandwidth management module with the specific Radware device that hosts it. All Radware devices track traffic either in the Session Table, for traffic that only passes through the device, or in the Client Table, for traffic that is load balanced by the device. Classification happens in one of two places: either as a packet causes an entry to be made in the Client Table, or as a packet is about to be forwarded by the device and an entry is created in the Session Table. A packet cannot be classified twice.

If Application Classification is defined as Per Packet, the classifier classifies every packet that flows through the device. In this mode, every single packet must be individually classified.

If Application Classification is defined as Per Session, all packets are classified by session. An intricate algorithm is used to classify the packets in a session until a best-fit policy is found, at which point the session is fully classified. Once the session is fully classified, all packets that match that table entry are classified according to the classification of the session. This not only allows for true session classification but also saves some overhead for the classifier, as it only needs to classify sessions, and not every single packet. Application Classification, where applicable, is a powerful tool for true session management, which is an integral part of all Radware devices.
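The overhead saving of Per Session classification can be sketched as a lookup table keyed by session. This is a simplified illustration, not Radware's algorithm: it assumes a session is fully classified by its first packet, whereas the text above describes a more intricate best-fit search. The class name, the key layout and the `lookups` counter are all hypothetical.

```python
from typing import Callable, Dict, Tuple

# Hypothetical session key: src IP, src port, dst IP, dst port, protocol.
SessionKey = Tuple[str, int, str, int, str]


class SessionClassifier:
    """Classify a session once and reuse the result for later packets."""

    def __init__(self, classify_packet: Callable[[SessionKey], str]):
        self._classify_packet = classify_packet
        self._sessions: Dict[SessionKey, str] = {}
        self.lookups = 0  # number of full policy lookups performed

    def classify(self, key: SessionKey) -> str:
        if key not in self._sessions:
            # Only the first packet of a session pays for a policy lookup.
            self.lookups += 1
            self._sessions[key] = self._classify_packet(key)
        return self._sessions[key]


classifier = SessionClassifier(lambda k: "http" if k[3] == 80 else "default")
session = ("10.0.0.1", 40000, "10.0.0.2", 80, "tcp")
for _ in range(3):
    classifier.classify(session)
print(classifier.lookups)
```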

Scheduler Algorithm

As already discussed, the scheduler can operate in one of two modes: Cyclic (WFQ) and CBQ. Both operate in the same packet queue sequence (2:1), with the CBQ algorithm being aware of the bandwidth associated with each configured policy. In other words, the Cyclic algorithm works only with prioritization, and is not aware of any bandwidth limitations, configured or otherwise. Note that unless CBQ is used, policies cannot be configured with an associated bandwidth.
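The 2:1 sequence can be reproduced with a short recursive sketch in Python. It assumes that one full cycle for priorities 0 through k consists of two cycles for priorities 0 through k-1 followed by a single visit to priority k, which matches the chain 0, 0, 1, 0, 0, 1, 2, ... given earlier. The function name is illustrative, and the sketch ignores empty queues and bandwidth limits.

```python
from typing import List


def cyclic_order(lowest_priority: int) -> List[int]:
    """Return one full cycle of priorities to visit (0 is the highest)."""
    if lowest_priority == 0:
        return [0]
    inner = cyclic_order(lowest_priority - 1)
    # Two passes over the higher priorities, then one lower-priority packet.
    return inner + inner + [lowest_priority]


print(cyclic_order(3))
```

In a full cycle over all eight priorities, each priority is visited twice as often as the one immediately below it.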

Random Early Detection (RED)

The RED algorithm can be used to protect queues from overflowing, which may cause serious session disruption. The algorithm draws on the inherent retransmission and flow control characteristics of TCP. Without RED, when all queues are full, packets are dropped from all sessions. All TCP session endpoints are then forced to use flow control to slow down, so all sessions are throttled and retransmissions become necessary. Furthermore, UDP packets are dropped and lost, since UDP does not have any inherent packet recovery mechanism.

The purpose of RED is to prevent queue overflows before they happen. When the RED algorithm is deployed, the status of the queues is monitored. When the queues are approaching full capacity, random TCP packets are intercepted and dropped. Note: only TCP packets are dropped, and packet selection is entirely random. This protects the queues from becoming completely full, which causes less disruption across all TCP sessions and also protects UDP packets.

Radware's bandwidth management mechanism deploys RED in two forms: Global RED and Weighted RED (WRED). When Global RED is used, RED is deployed just prior to the classifier, as shown in the figure below:

[Figure: Global RED deployed before the classifier]

RED is activated when 25% of the capacity of all the queues is reached. The probability of a packet being dropped increases until 75% of the total queue size (for all the queues) is reached. At that point, all TCP packets are dropped. So, Global RED monitors the capacity of all the queues (that is, the global set of queues) and randomly discards TCP packets before the classifier sees them.

With WRED, the algorithm is deployed after the classifier and per queue (instead of for all the packets in all the queues). The general concept is shown in the figure below:

[Figure: WRED deployed per queue, after the classifier]

As shown above, each queue uses RED independently of other queues. So, a queue with priority 1 may be dropping TCP packets while a queue with priority 2 (or another queue with priority 1) is not. The second difference between WRED and Global RED is that the priority of the queue affects whether a packet gets dropped. The thresholds are still at 25% and 75%. In other words, when a queue hits 25% of its full capacity, packets start being dropped, with the probability of a drop (only for that queue) increasing as the queue approaches 75% of its capacity. However, the priority of the queue has a direct effect on the probability of a packet drop: queues with lower priority have a higher probability of having a packet dropped. For example, if queue1 with priority 0 and queue2 with priority 3 are both at 30% capacity, a new TCP packet for queue2 has a higher probability of being dropped than a new TCP packet for queue1.
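The drop behavior described above can be sketched in Python as follows. The 25% and 75% thresholds come from the text, but the linear ramp between them and the way priority scales the WRED probability are assumptions made purely for illustration, since the actual curves are not specified here. Both function names are hypothetical.

```python
def red_drop_probability(fill: float, low: float = 0.25, high: float = 0.75) -> float:
    """Drop probability for a TCP packet at a given queue fill ratio (0.0 to 1.0).

    Assumes a linear ramp between the two thresholds.
    """
    if fill < low:
        return 0.0
    if fill >= high:
        return 1.0
    return (fill - low) / (high - low)


def wred_drop_probability(fill: float, priority: int) -> float:
    """Per-queue variant: lower-priority queues (larger numbers) drop more often.

    The (priority + 1) / 8 scaling is an assumed weighting, not the real one.
    """
    base = red_drop_probability(fill)
    if base in (0.0, 1.0):
        return base
    return base * (priority + 1) / 8


# Two queues at 30% capacity, as in the example above.
print(wred_drop_probability(0.30, priority=0) < wred_drop_probability(0.30, priority=3))
```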

Classifier and Policy Database

Now that the general concepts of the APSolute OS bandwidth management module have been discussed, the details of the classifier and the policy database that drives it will be explored.

The policy database consists of two sections. The first is the temporary, or inactive, section. Policies belonging to the inactive database can be altered and configured without affecting the current operation of the device. As these policies are adjusted, the changes do not affect the flow of packets until the inactive database is activated. The second section is the active policy database, which holds the actual policies that the classifier uses to classify the traffic flowing through the device. Activation updates the active policy database from the inactive one. To activate the inactive database, an administrator has to manually update the policy database.

When the classifier classifies traffic, it scans the policy database in order until a packet or session matches one of the policies. Once there is a match, the classifier stops the scan. Hence it is important to order the policies according to traffic volume, with the policy for the most common protocol first.

Default Policy: The last policy in the policy database is the default policy. All traffic that is not matched by a user-defined policy is matched by the default policy. By default, the default policy forwards traffic with priority 4.
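A minimal Python sketch of this first-match scan is shown below. The `Policy` structure, the dictionary-based packet and the match functions are hypothetical; only the first-match rule and the default policy with priority 4 are taken from the text.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Policy:
    name: str
    matches: Callable[[dict], bool]
    priority: int


# The default policy matches everything and forwards with priority 4.
DEFAULT_POLICY = Policy("default", lambda pkt: True, priority=4)


def classify(packet: dict, policies: List[Policy]) -> Policy:
    """Return the first policy that matches, scanning in configured order."""
    for policy in policies:
        if policy.matches(packet):
            return policy  # the scan stops at the first match
    return DEFAULT_POLICY


policies = [
    Policy("http", lambda pkt: pkt["dst_port"] == 80, priority=0),
    Policy("smtp", lambda pkt: pkt["dst_port"] == 25, priority=2),
]
print(classify({"dst_port": 25}, policies).name)
print(classify({"dst_port": 21}, policies).priority)
```

Because the scan is linear, placing the policy for the highest-volume traffic first keeps the average number of comparisons per packet low.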

Policy Components

A policy has two main functions:
• To define how traffic should be classified to match the policy
• To define which action is to be applied to the classified traffic

Traffic can be classified according to the following parameters:

Inbound Physical Port Group

Enables the user to apply different policies to identical traffic classes that are received on different interfaces of the device. For example, the user can allow HTTP access to the main server only for traffic entering the device via physical interface 3. This provides greater flexibility in configuration. The user should first configure Port Groups.

VLAN Tag Group

In environments where VLAN tagging is used, it may be necessary to differentiate between types of traffic using VLAN tags. This field allows the user to define policies that classify traffic according to VLAN tag. The user should first configure VLAN Tag Groups. Note: This feature is not supported by Application Switch 3.

Source Address

Packets with source IP addresses that match the Source Address field will be considered. The Source Address can be either a specific host IP address or one of a set of configured Networks. A Network list is configured separately, and individual elements of the Network list are then used in the individual policy. An entry in the Network list is known by a configured name and can be either a range of IP addresses (from IPa to IPb) or a network address with a subnet mask. In addition, a Network can encompass multiple disjoint IP address ranges or a group of discrete IP addresses. This is achieved by allowing multiple entries in the Networks table to have the same name. For example, to group network 10.0.0.0/24 and range 10.10.10.13-10.10.10.243, the network names for the two entries must be identical. Note: In order to configure policies where the destination IP address is the device's interface, it is mandatory to explicitly specify the IP address and not to create a network containing the interface's IP address.

Destination Address

Packets with destination IP addresses that match the Destination Address field will be considered. The semantics of Destination Address are exactly the same as those of the Source Address.
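The way a single Network name can cover several entries can be sketched with Python's standard `ipaddress` module. The table layout and the name `branch` are hypothetical; the two entries are the subnet and the range from the example in the text.

```python
import ipaddress

# Hypothetical Networks table: one name, two entries (a subnet and a range).
NETWORKS = {
    "branch": [
        ipaddress.ip_network("10.0.0.0/24"),
        (ipaddress.ip_address("10.10.10.13"), ipaddress.ip_address("10.10.10.243")),
    ],
}


def in_network(name: str, address: str) -> bool:
    """Return True if the address falls in any entry stored under the name."""
    ip = ipaddress.ip_address(address)
    for entry in NETWORKS.get(name, []):
        if isinstance(entry, tuple):
            first, last = entry
            if first <= ip <= last:
                return True
        elif ip in entry:
            return True
    return False


print(in_network("branch", "10.0.0.7"), in_network("branch", "10.10.10.244"))
```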

Direction

A policy can be configured as a OneWay policy or a TwoWay policy. OneWay policies only look at packets from the configured Source Address to the configured Destination Address (as described above). In OneWay policies, returning packets are not matched to the policy. A TwoWay policy looks at packets in both directions between Source and Destination, taking into account the source IP and source port as well as the destination IP and destination port. If matching application ports are also defined for the policy (see Service), direction takes this into account as well. For example, if a TwoWay policy is configured for HTTP traffic from Source A to Destination B, the policy will match the following:
• Source IP = IPa, source port = X, destination IP = IPb, destination port = 80
• Source IP = IPb, source port = 80, destination IP = IPa, destination port = X
This means that a client in network A will be able to communicate with a web server in network B, but a client in network B will not be able to communicate with a web server in network A.

Service

The Service associated with a policy takes the capabilities of the classifier far beyond simple identification by source and destination IP addresses. The Service configured per policy allows the policy to consider many other aspects of the packet. The Service can consider the protocol (IP/TCP/UDP), TCP/UDP port numbers, bit patterns at any offset in the packet, and actual content (such as URLs or cookies) deep in the upper layers of the packet. Available Services are very granular and, as such, warrant a complete discussion, which follows the analysis of the policy database below.

Traffic Flow Identification

Bandwidth Management provides the ability to limit the bandwidth allocated to a single traffic flow. A traffic flow can be defined as all traffic that comes from a client IP, as the traffic of a single session, and so on. The Traffic Flow Identification field defines what type of traffic flow is limited by this policy. The available options are:
• Client (source IP)
• Session (source IP and port)
• Connection (source IP and destination IP)
• Full Layer4Session (source and destination IP and port)
• SessionCookie (must configure Cookie Field Identifier)
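Each option can be viewed as a different key under which packets are grouped into a flow. The Python sketch below makes that explicit. The packet is modeled as a plain dictionary with hypothetical field names, and the option strings are copied from the list above; the real module is configured, not programmed, so this is only an illustration of the grouping.

```python
def flow_key(mode: str, pkt: dict) -> tuple:
    """Return the tuple that identifies the packet's traffic flow."""
    if mode == "Client":
        return (pkt["src_ip"],)
    if mode == "Session":
        return (pkt["src_ip"], pkt["src_port"])
    if mode == "Connection":
        return (pkt["src_ip"], pkt["dst_ip"])
    if mode == "Full Layer4Session":
        return (pkt["src_ip"], pkt["src_port"], pkt["dst_ip"], pkt["dst_port"])
    if mode == "SessionCookie":
        return (pkt["cookie"],)  # value of the configured cookie field
    raise ValueError(f"unknown traffic flow identification: {mode}")


a = {"src_ip": "10.0.0.1", "src_port": 40000, "dst_ip": "10.0.0.9", "dst_port": 80}
b = dict(a, src_port=40001)
# Same client, so one flow under Client but two flows under Session.
print(flow_key("Client", a) == flow_key("Client", b))
print(flow_key("Session", a) == flow_key("Session", b))
```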

Classification Point

By the nature of Traffic Redirection and Load Balancing decisions, the device has to modify packets when it forwards them to and from the servers. In AppDirector, for example, client traffic is destined to the Layer 4 Policy VIP; when AppDirector makes a load balancing decision and forwards the packet to the selected server, it has to change the destination IP address to the server's real IP address. On LinkProof, clients use their own IP addresses, and when LinkProof forwards the traffic to the NHR, it uses SmartNAT to change the source IP address. Bandwidth Management allows administrators to select at which point in the traffic flow the classification is performed: before the packet is modified or after the modification.

Bandwidth Management Rules

The following rules are applied to the matched traffic:

Action

The action associated with the policy governs what happens to a packet that matches the policy. There are four options:

1. Forward: the packet is forwarded. It can either be forwarded in real time, or it can be placed in a priority queue, as defined below.
2. Block: the packet is dropped.
3. Block and reset: the packet is dropped and a TCP Reset is sent to the source.
4. Block and bidirectional reset: the packet is dropped and TCP Resets are sent to both endpoints of the packet, the source and the destination.

The two block-and-reset options only send TCP Reset packets if the session is TCP. This is very useful if the classifier is looking for content that can only be seen after successful session establishment. Blocking certain HTTP GETs is a good example of a useful block and reset action, since a GET is sent only after a TCP session is established.

When the Report Block Packets flag is enabled, the module sends a trap reporting the packets blocked by the Bandwidth Management module, including the source IP, source port and policy index.

Priority

If the action associated with the policy is forward, then the packet will be classified according to the configured priority. There are 9 options available: Real time forwarding and priorities 0 through 7. Note: Prioritization takes place only when the physical ports are saturated. Therefore it is mandatory to set the Port Bandwidth parameters for each physical port.

Guaranteed Bandwidth

If the CBQ algorithm is deployed for the scheduler, the policy can be assigned a minimum (guaranteed) bandwidth. The scheduler will not allow packets that are classified through this policy to exceed the allotted bandwidth, unless borrowing is enabled. Note that the maximum bandwidth configured for the entire device overrides per-policy bandwidth configurations. In other words, the sum of the guaranteed bandwidth for all the policies cannot be higher than the total device bandwidth.

Borrowing Limit

As discussed above, Bandwidth Borrowing can be enabled when the scheduler operates through the CBQ algorithm. If enabled, the scheduler borrows bandwidth from queues that can spare it, in order to forward packets from queues that have exceeded (or are about to exceed) their allotted amount of bandwidth.

The combination of the Guaranteed Bandwidth and Borrowing Limit field values causes the bandwidth allotted to a policy to behave as follows:

Guaranteed Bandwidth   Borrowing Limit   Policy bandwidth
0                      0                 Burstable with no limit, no minimum guaranteed.
X                      0                 Burstable with no limit, minimum of X guaranteed.
0                      Y                 Burstable to Y, no minimum guaranteed.
X                      Y (Y>X)           Burstable to Y, minimum of X guaranteed.
X                      X                 Non-burstable, X guaranteed.
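The five combinations can also be expressed as a small decision function. The Python sketch below is only a restatement of the table; the function name is hypothetical, values are taken to be in Kbps, and a borrowing limit below the guaranteed bandwidth is rejected because that combination is not described in the table.

```python
def policy_bandwidth(guaranteed: int, borrowing_limit: int) -> str:
    """Describe the resulting behavior for one policy (values in Kbps)."""
    if guaranteed:
        minimum = f"minimum of {guaranteed} guaranteed"
    else:
        minimum = "no minimum guaranteed"
    if borrowing_limit == 0:
        return f"Burstable with no limit, {minimum}"
    if borrowing_limit == guaranteed:
        return f"Non-burstable, {guaranteed} guaranteed"
    if borrowing_limit > guaranteed:
        return f"Burstable to {borrowing_limit}, {minimum}"
    raise ValueError("borrowing limit below guaranteed bandwidth is not covered")


for x, y in [(0, 0), (100, 0), (0, 200), (100, 200), (100, 100)]:
    print(x, y, policy_bandwidth(x, y))
```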

Traffic Flow Max Bandwidth

Bandwidth Management introduces the ability to limit the bandwidth allocated to a single traffic flow. A traffic flow can be defined as all traffic that comes from a client IP, as the traffic of a single session, and so on. This functionality provides Radware customers with:
• Flexibility to set a general rule that is applicable to all users, thus eliminating the need to set individual rules per user.
• Ability to protect applications and services from excessive use and DoS attacks by limiting the number of open sessions per user.
• Ability to provide fair service to all users by limiting the amount of bandwidth allocated to each user or session.

Business examples: ISPs

To an ISP, this functionality can provide the following benefits:
• Protect their infrastructure from excessive usage of P2P applications by limiting P2P traffic per client.
• Control FTP downloads from hosted sites by:
  o Limiting FTP traffic per session.
  o Limiting the number of concurrent sessions a client can open.
• Generate more revenue by offering differentiated download services for hosted sites.
• Protect key applications such as DNS from DoS by limiting the number of open sessions per user.

Business examples: Universities

To a university, this functionality can provide the following benefits:
• Protect their infrastructure from excessive usage of P2P applications by:
  o Limiting P2P traffic per client.
  o Limiting the overall traffic consumed by P2P applications.
  o Controlling the bandwidth consumed by each student.

The Traffic Flow Max Bandwidth parameter sets the maximum bandwidth allocated to each traffic flow, as defined in the Traffic Flow Identifier field.

Maximum Concurrent Sessions

The maximum number of concurrent sessions allowed for each traffic flow, as defined in the Traffic Flow Identifier field. If the traffic flow is defined as Session, this parameter is not applicable.

Limit number of HTTP requests per Traffic Flow

Sometimes it is not sufficient to limit only the number of concurrent connections or the bandwidth, since some servers are sensitive to the number of new HTTP requests per second. When such servers are in use, a user may send numerous requests, which slows down the servers. An attacker can also easily attack the server by sending many requests per second. Using Bandwidth Management per traffic flow, it is possible to limit the number of HTTP requests per second per traffic flow. With this parameter, an administrator can limit the number of HTTP GET, POST and HEAD requests arriving from the same user per second. When this parameter is configured, the Bandwidth Management module keeps track of new requests per second per traffic flow, whether the traffic flow identification is SessionCookie or any other option.
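One simple way to picture a requests-per-second limit is a counter per traffic flow that resets every second. The Python sketch below assumes such a fixed one-second window, which is an illustrative choice and not necessarily how the module counts. The class and method names are hypothetical, and the timestamp is passed in explicitly to keep the example deterministic.

```python
from typing import Dict, Hashable, Tuple


class RequestRateLimiter:
    """Allow at most max_per_second new HTTP requests per traffic flow."""

    def __init__(self, max_per_second: int):
        self.max_per_second = max_per_second
        # flow key -> (second being counted, requests seen in that second)
        self._window: Dict[Hashable, Tuple[int, int]] = {}

    def allow(self, flow: Hashable, now: float) -> bool:
        second = int(now)
        start, count = self._window.get(flow, (second, 0))
        if start != second:
            start, count = second, 0  # a new second begins, reset the counter
        if count >= self.max_per_second:
            return False
        self._window[flow] = (start, count + 1)
        return True


limiter = RequestRateLimiter(max_per_second=2)
print([limiter.allow("client-a", t) for t in (10.0, 10.2, 10.9, 11.0)])
```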

Packet Marking

Packet marking refers to the Differentiated Services Code Point (DSCP), or Diffserv, field. It enables the device to mark the DSCP bits of a packet when the packet matches the policy.

Policy Groups

BWM allows users to define several bandwidth borrowing domains on a device by organizing policies in groups. Only policies that participate in a specific group can share bandwidth. The total bandwidth available for a policy group is the sum of the Guaranteed Bandwidth values of all policies in the group. When some of the policies do not use all of their Guaranteed Bandwidth, this bandwidth is used by the other policies in the group. The spare bandwidth is split between the policies that have already reached their guaranteed bandwidth, according to their relative weight within the group.

Example: We have a group containing the following policies:
  o 100k to HTTP traffic
  o 100k to Citrix traffic
  o 50k to FTP traffic
  o 26k to SMTP traffic
The total bandwidth available for this group is 276k. If at a certain moment there is only 50k of HTTP traffic, there is 50k of spare bandwidth for this group. The spare bandwidth is split between the other three policies: 28k to Citrix, 14k to FTP and 7k to SMTP.
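The split in this example can be reproduced with a few lines of Python. The sketch assumes that a policy's relative weight is its Guaranteed Bandwidth and that shares are rounded down to whole kilobits, which is consistent with the 28k, 14k and 7k figures above but is not stated explicitly. The function name and the dictionaries are hypothetical.

```python
from typing import Dict


def split_spare(guaranteed: Dict[str, int], usage: Dict[str, int]) -> Dict[str, int]:
    """Share unused guaranteed bandwidth among policies that reached theirs."""
    spare = sum(g - usage[name] for name, g in guaranteed.items() if usage[name] < g)
    saturated = {name: g for name, g in guaranteed.items() if usage[name] >= g}
    total_weight = sum(saturated.values())
    if total_weight == 0:
        return {}
    # Each saturated policy gets a share proportional to its guarantee.
    return {name: spare * g // total_weight for name, g in saturated.items()}


guaranteed = {"HTTP": 100, "Citrix": 100, "FTP": 50, "SMTP": 26}
usage = {"HTTP": 50, "Citrix": 100, "FTP": 50, "SMTP": 26}
print(split_spare(guaranteed, usage))
```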

Policy Scheduler

System administrators may require that certain policies not be active during certain hours of the day, or that a certain policy be activated only at a specific time of day for a specific duration. For example, a school's library may want to block instant messaging during school hours but allow it after school hours, or an enterprise may give high priority to mail traffic between 08:00 and 10:00.

Using the Event Scheduler, administrators can create events, which can then be attached to a policy's configuration. Events define the date and time at which an action should be performed. There are three types of events:

1. Once: The event occurs only once.
2. Daily: The event occurs every day at the same time.
3. Weekly: The event occurs on specific day(s) every week.

For each Bandwidth Management policy, administrators can configure an activation schedule and an inactivation schedule. Whenever the activation event occurs, the module changes the policy's Operational Status from Inactive to Active.

Note: Creating new BWM policies or modifying existing policies does not affect traffic classification immediately. For new or modified policies to take effect, the policy database must be updated using the Update Policies function.

Bandwidth Management Services

A very advanced and granular set of services is configured within the bandwidth management system. Services are configured separately from policies. However, as each policy is configured, it is associated with a configured Service. The service associated with a policy in the policy database is a basic filter, an advanced filter, or a filter group. This represents tremendous flexibility for the classifier as it essentially gives the system a large number of possibilities for packet identification.

Basic Filters

The basic building block of a Service is a basic filter. The relationship between a filter and a service will be discussed shortly, but it's important to first understand what a basic filter is. A basic filter is made up of the following components:

Protocol

This defines the specific protocol that the packet carries. The possible choices are IP, TCP, UDP, ICMP and Non-IP. If the protocol is configured as IP, all IP packets (including TCP and UDP) are considered. When configuring the TCP or UDP protocol, some additional parameters are also available:
1. Destination Port - Destination port number for that protocol. For example, for HTTP, the protocol is configured as TCP and the destination port as 80.
2. Source Port - Like the destination port, the source port that a packet must carry in order to match the filter can be configured. A range of source ports can also be configured, which helps in situations where an application uses a well-known range of source ports.

Note: The device supports configuring a range of Source Ports and Destination Ports, using the dash sign "-" as a separator between the first port in the range and the last port in the range.
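As an illustration of the range notation described in the note, the following Python sketch parses a port specification such as 80 or 6881-6889. The helper name `parse_ports` and its validation rules are assumptions made for this example, not the device's actual parser.

```python
def parse_ports(spec: str) -> range:
    """Parse "80" or "6881-6889" into an inclusive range of port numbers."""
    first, dash, last = spec.strip().partition("-")
    low = int(first)
    high = int(last) if dash else low  # a single port is a range of one
    if not 0 <= low <= high <= 65535:
        raise ValueError(f"invalid port range: {spec!r}")
    return range(low, high + 1)


print(80 in parse_ports("80"))           # True
print(6890 in parse_ports("6881-6889"))  # False
```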

Offset Mask Pattern Condition (OMPC)

The OMPC is a means by which any bit pattern can be matched at any offset in the packet. This can aid in locating specific bits in the IP header, for example. TOS and Diffserv bits are perfect examples of where OMPCs can be useful. It is not mandatory to configure an OMPC per filter. However, if an OMPC is configured, the packet needs to match the configured protocol (and ports) AND the OMPC.

When configuring OMPC-based filters, it is possible to configure the following parameters:

OMPC Offset - The location in the packet from which the checking of data is started in order to find specific bits.

OMPC Offset Relative to - Indicates what the selected offset is relative to. You can set the following parameters: None, IP Header, IP Data, L4 Data, L4 Header, Ethernet or ASN1.

OMPC Mask - The mask for the OMPC Pattern. Possible values: a combination of hexadecimal numbers (0-9, a-f). The value must be defined according to the OMPC Length parameter. The OMPC Mask parameter definition must contain 8 characters. If the OMPC Length value is lower than four Bytes, it has to be completed with zeros. For example, if OMPC Length is two Bytes, OMPC Mask can be: abcd0000.

OMPC Pattern - The fixed size pattern within the packet that the OMPC rule attempts to find. Possible values: a combination of hexadecimal numbers (0-9, a-f). The value must be defined according to the OMPC Length parameter. The OMPC Pattern parameter definition must contain 8 characters. If the OMPC Length value is lower than four Bytes, it has to be completed with zeros. For example, if OMPC Length is two Bytes, OMPC Pattern can be: abcd0000.

OMPC Condition - The OMPC condition can be either N/A, Equal, NotEqual, GreaterThan or LessThan.

OMPC Length - The length of the OMPC (Offset Mask Pattern Condition) data can be N/A, OneByte, TwoBytes, ThreeBytes or FourBytes.
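The parameters above can be read as a simple masked comparison. The Python sketch below is one plausible interpretation, not the device's implementation: it assumes that the bytes read at the offset are padded on the right with zeros to four bytes (matching the abcd0000 convention), ANDed with the mask, and then compared with the pattern as unsigned integers. The "Offset Relative to" setting is not modelled, so the offset is counted from the start of the buffer passed in. The function name `ompc_match` is hypothetical.

```python
import operator

# Condition names follow the list above; the comparison semantics are assumed.
CONDITIONS = {
    "Equal": operator.eq,
    "NotEqual": operator.ne,
    "GreaterThan": operator.gt,
    "LessThan": operator.lt,
}


def ompc_match(data: bytes, offset: int, mask: str, pattern: str,
               condition: str = "Equal", length: int = 4) -> bool:
    """Check `length` bytes at `offset` against an 8-hex-digit mask and pattern."""
    if len(mask) != 8 or len(pattern) != 8:
        raise ValueError("mask and pattern must contain 8 hexadecimal characters")
    if not 1 <= length <= 4:
        raise ValueError("length must be between 1 and 4 bytes")
    window = data[offset:offset + length]
    if len(window) < length:
        return False  # packet too short to contain the field
    # Pad short fields with trailing zeros so they line up with mask and pattern.
    value = int.from_bytes(window.ljust(4, b"\x00"), "big")
    return CONDITIONS[condition](value & int(mask, 16), int(pattern, 16))


# Match the upper six bits of the TOS byte (offset 1 of an IP header) against 0xb8.
ip_header = bytes([0x45, 0xB8, 0x00, 0x54])
print(ompc_match(ip_header, 1, "fc000000", "b8000000", "Equal", length=1))  # True
```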

Content

In case the protocol configured is TCP or UDP, it is possible to search for any text string in the packet. Like OMPCs, a text pattern can be searched for at any offset in the packet. HTTP URLs are perfect examples of how a text search can aid in classifying a session. The service editor allows you to choose between multiple types of configurable content:

URL - The Classifier searches in the HTTP Request for the configured URL. No normalization procedures are taken.

Normalized URL - The Classifier normalizes the configured URL and searches in the HTTP Request for the configured URL.

Hostname - The Classifier searches for the Hostname in the HTTP Header.

HTTP header field - The Content field includes the header field name, and the Content data field includes the field value.

Cookie - The Classifier searches for the HTTP cookie field. The Content field includes the cookie name, and the Content data field includes the cookie value.

Mail domain - The Classifier searches for the mail domain in the SMTP Header.

Mail to - The Classifier searches for the mail to in the SMTP Header.

Mail from - The Classifier searches for the mail from in the SMTP Header.

Mail subject - The Classifier searches for the mail subject in the SMTP Header.

File type - The Classifier searches for the type of the requested file in the HTTP GET command (jpg, exe and so on).

POP3 User - The Classifier searches for the POP3 user in POP3 traffic.

FTP Command - The Classifier parses FTP commands into commands and arguments, while performing normalization of the FTP packets and stripping of telnet opcodes.

FTP content - The Classifier scans the data transmitted using FTP, performing normalization of the FTP packets and stripping of telnet opcodes.

Regular expression - The Classifier searches for a Regular Expression anywhere in the packet.

Text - The Classifier searches for a text string anywhere in the packet.

If the content type is URL, for example, then the session is assumed to be HTTP with a GET, HEAD, or POST method. The classifier searches the URL following the GET/HEAD/POST to find a match for the configured text. In this case, the configured offset is meaningless, since the GET/HEAD/POST is in a fixed location in the HTTP header. If the content type is Text, then the entire packet is searched, starting at the configured offset, for the content text.

Content Encoding

By allowing a filter to take the actual content of a packet/session into account, the classifier gains a powerful way to recognize and classify an even wider array of packets and sessions. Like OMPCs, content rules are not mandatory to configure. However, if a content rule exists in the filter, then the packet needs to match the configured protocol (and ports), the configured OMPC (if one exists), AND the configured content rule.
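The difference between the URL and Text content types can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the function name `content_match` is hypothetical, only the two content types discussed in the paragraph above are handled, and the payload is assumed to start at the HTTP request line.

```python
HTTP_METHODS = (b"GET", b"HEAD", b"POST")


def content_match(payload: bytes, content: str,
                  content_type: str = "Text", offset: int = 0) -> bool:
    """Search a packet payload for configured content."""
    needle = content.encode("ascii")
    if content_type == "URL":
        # The URL follows the method on the request line, so the offset is ignored.
        parts = payload.split(b"\r\n", 1)[0].split(b" ")
        if len(parts) < 2 or parts[0] not in HTTP_METHODS:
            return False
        return needle in parts[1]
    if content_type == "Text":
        # Plain text search over the packet, starting at the configured offset.
        return needle in payload[offset:]
    raise ValueError(f"unsupported content type: {content_type}")


request = b"GET /img/picture.gif HTTP/1.1\r\nHost: example.com\r\nCookie: cookie=gold\r\n\r\n"
print(content_match(request, "picture.gif", "URL"))   # True
print(content_match(request, "cookie=gold", "Text"))  # True
```

In a complete basic filter, a result like this would be combined with the protocol, port and OMPC checks using a logical AND, as described above.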

Advanced Filters and Filter Groups

The above components are involved in configuring individual basic filters in the bandwidth management module of the APSolute OS architecture. Radware devices are pre-configured with a set of basic filters that represent applications commonly found in most networks. These include individual default basic filters for IP, TCP, UDP, HTTP, FTP, HTTPS, DNS, and SMTP, among others. For a list of pre-configured filters, see Appendix A.

Now that the individual components of a basic filter have been analyzed, it's a simple transition to discuss advanced filters and filter groups.

An Advanced Filter is a combination of basic filters with a logical AND between them. Let's assume filters F1, F2, and F3 have been individually configured. Advanced filter AF1 can be defined as:

AF1 = {F1 AND F2 AND F3}

In order for AF1 to match, all three filters F1, F2, and F3 must match the packet being classified.

A Filter Group is a combination of basic filters and advanced filters, with a logical OR between them. To continue the example above, filter group FG1 can be defined as:

FG1 = {F1 OR F2 OR F3}

In order for filter group FG1 to match, at least one of the basic filters F1, F2, or F3 has to match the packet being classified.
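The AND/OR composition can be expressed directly as higher-order functions. In the Python sketch below, a filter is modelled as a function from a packet (a plain dictionary with hypothetical keys `proto`, `dport` and `payload`) to a boolean; the three stand-in filters are invented for the example and do not correspond to pre-configured Radware filters.

```python
from typing import Callable, Dict

Packet = Dict[str, object]
Filter = Callable[[Packet], bool]


def advanced_filter(*filters: Filter) -> Filter:
    """Logical AND: every member filter must match."""
    return lambda packet: all(f(packet) for f in filters)


def filter_group(*filters: Filter) -> Filter:
    """Logical OR: at least one member filter must match."""
    return lambda packet: any(f(packet) for f in filters)


# Three stand-in basic filters.
f1: Filter = lambda p: p["proto"] == "tcp"
f2: Filter = lambda p: p["dport"] == 80
f3: Filter = lambda p: b"gold" in p["payload"]

af1 = advanced_filter(f1, f2, f3)  # AF1 = {F1 AND F2 AND F3}
fg1 = filter_group(f1, f2, f3)     # FG1 = {F1 OR F2 OR F3}

packet = {"proto": "tcp", "dport": 25, "payload": b""}
print(af1(packet), fg1(packet))  # False True
```

Because a filter group is itself a function of the same shape, it can contain advanced filters as members, which mirrors the definition of a Filter Group given above.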

Protocol Discovery

For optimal use of the Bandwidth Management feature, network administrators must be aware of the different applications running on their network and the amount of bandwidth they consume. To provide a full view of the different protocols running on the network, a traffic discovery feature is available. The Protocol Discovery feature can be activated on the entire network or on separate subnetworks by defining Protocol Discovery policies. A Protocol Discovery policy consists of a set of Layer 1 to Layer 3 traffic classification criteria: inbound physical port, source and/or destination MAC, VLAN tag, and source and destination address. Note: It is recommended that this feature be activated for a finite time (a day or two) and not used continuously.

Reporting

To view the results of bandwidth management, the following reports are available:
o Policy Utilization
o Policy Utilization with Peaks
o Top N Policies
o Policy Packet Rate
o Policy Maximum Threshold Rate
o Guaranteed Rate Failures
o Top N protocols (from the discovery process)

Following are some reporting samples:

Figure 1 - Top N Policies

Figure 2 - Policy Utilization (Comparative graph)

Figure 3 - Top N protocols

Examples

Example 1 - Basic configuration of BWM

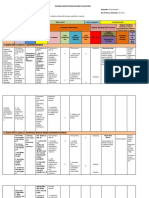

Now that the specifics of the Radware bandwidth management module have been analyzed, we can consider an example that illustrates the practicality of the mechanism. We'll build a policy database, starting with the Network list and various filters. First, let's assume the following Networks have been configured:

| Network Name | Address | Mask | IP-from | IP-to |
|---|---|---|---|---|
| Any | - | - | 0.0.0.0 | 255.255.255.255 |
| Net1 | 10.0.0.0 | 255.0.0.0 | - | - |
| Net2 | - | - | 20.1.1.1 | 20.1.1.100 |
| Net2 | - | - | 30.1.1.1 | 30.1.1.100 |
| Net3 | 80.1.1.0 | 255.255.255.0 | - | - |

Note that Net2 has been configured twice. This is a legal configuration and the system recognizes Net2 as 20.1.1.1->20.1.1.100 and 30.1.1.1->30.1.1.100.

Now, let's consider some basic filters, advanced filters, and filter groups:
o Basic Filter HTTP: dest. TCP port 80
o Basic Filter SMTP: dest. TCP port 25
o Basic Filter FTP: dest. TCP port 21
o Basic Filter DNS: dest. UDP port 53
o Basic Filter ompc1: dest. TCP port 333; ompc 11223300/ffffff00 offset 50
o Basic Filter ompc2: dest. TCP port 333; ompc 55667700/ffffff00 offset 80
o Basic Filter content1: dest. TCP port 80; content picture.gif; content type=URL
o Basic Filter content2: dest. TCP port 80; content image.gif; content type=URL
o Basic Filter content3: dest. TCP port 80; content cookie=gold; content type=TXT
o Advanced Filter AF1={ompc1 AND ompc2}, which means both OMPC rules must be met.
o Filter Group FG1={HTTP OR SMTP}
o Filter Group FG2={content1 OR content2}

After the above has been configured, let's assume the following policy database, which includes all the pieces defined so far:

| Order | Src | Dest | Direction | Action | Priority | Bandwidth (Kbps) | Service |
|---|---|---|---|---|---|---|---|
| 1 | Any | Any | TwoWay | Drop | - | - | DNS |
| 2 | Net1 | Net2 | TwoWay | Forward | RealTime | - | FG1 |
| 3 | Any | Any | TwoWay | Forward | 1 | 300 | FTP |
| 4 | Net1 | Net3 | TwoWay | Forward | 3 | - | AF1 |
| 5 | Any | Any | TwoWay | Forward | 4 | - | - |

If the above policy database exists, when the classifier receives a packet, it will start at policy #1 and try to find the first policy that fully applies to the packet in question. Since the database is searched from the top of the list down, the basic decision-making flow of the classifier is as follows:
1. Check to see if the packet is DNS from any IP to any IP. If so, drop. If not, continue searching.
2. Check to see if the packet is either HTTP or SMTP from Net1 to Net2 or from Net2 to Net1. If so, forward in real time. If not, continue searching.
3. Check to see if the packet is FTP. If so, queue with a priority of 1 and do not forward past 300Kbps. If not, continue searching.
4. Check to see if the packet is from Net1 to Net3 or from Net3 to Net1. If so, check to see if 11223300/ffffff00 is found at offset 50 and 55667700/ffffff00 is found at offset 80. If so, queue with a priority of 3. If not, continue searching.
5. Check to see if the packet is from any IP to any IP. If so, queue with a priority of 4.

To conclude the analysis of this sample policy database, it's important to consider three notes:

o Policy #5 is effectively the default policy of the system. This is because it matches any packet that flows through the classifier. But, since it's the last in the list, it will only match if none of the other policies match the packet.

o A TwoWay policy reverses source and destination port numbers if the source and destination networks are distinct. For example, let's say we have configured a policy with source Net1, destination Net2, and Service HTTP (destination TCP port 80). Such a policy will consider packets from Net1 to Net2 with destination port 80 and packets from Net2 to Net1 with source port 80.

o We did not use filter group FG2, which has 2 filters, each with a content rule. Had we used it, the policy with an FG2 service would have had to be configured before policy #2. This is because policy #2 would match any HTTP packet. If we had configured a policy with FG2 at #3, for example, it would never match a packet, since policy #2 would return a successful match for every HTTP packet, even for those containing the content configured in FG2.
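The first-match search and the TwoWay behaviour described in this example can be sketched in Python. This is an illustrative model under stated assumptions, not Radware code: packets are plain dictionaries with hypothetical keys (`src`, `dst`, `proto`, `sport`, `dport`), services are reduced to protocol and port checks, and the OMPC conditions of AF1 are omitted, so AF1 is approximated by its destination TCP port 333 alone.

```python
from ipaddress import ip_address, ip_network


def in_range(first, last):
    low, high = ip_address(first), ip_address(last)
    return lambda ip: low <= ip_address(ip) <= high


def in_subnet(address, mask):
    net = ip_network(f"{address}/{mask}")
    return lambda ip: ip_address(ip) in net


# Network list from the example; Net2 is defined by two ranges.
NETWORKS = {
    "Any": [in_range("0.0.0.0", "255.255.255.255")],
    "Net1": [in_subnet("10.0.0.0", "255.0.0.0")],
    "Net2": [in_range("20.1.1.1", "20.1.1.100"), in_range("30.1.1.1", "30.1.1.100")],
    "Net3": [in_subnet("80.1.1.0", "255.255.255.0")],
}


def in_network(name, ip):
    return any(test(ip) for test in NETWORKS[name])


def port_service(proto, port):
    # With reverse=True the source port is checked instead of the destination port.
    def match(pkt, reverse=False):
        return pkt["proto"] == proto and pkt["sport" if reverse else "dport"] == port
    return match


def any_of(*services):
    return lambda pkt, reverse=False: any(s(pkt, reverse) for s in services)


def any_service(pkt, reverse=False):
    return True


HTTP, SMTP = port_service("tcp", 80), port_service("tcp", 25)
FTP, DNS = port_service("tcp", 21), port_service("udp", 53)
FG1 = any_of(HTTP, SMTP)
AF1 = port_service("tcp", 333)  # OMPC checks omitted in this sketch

POLICIES = [
    (1, "Any", "Any", DNS, "Drop"),
    (2, "Net1", "Net2", FG1, "Forward, RealTime"),
    (3, "Any", "Any", FTP, "Forward, priority 1, limit 300 Kbps"),
    (4, "Net1", "Net3", AF1, "Forward, priority 3"),
    (5, "Any", "Any", any_service, "Forward, priority 4"),
]


def classify(pkt):
    """Return (order, action) of the first TwoWay policy that matches the packet."""
    for order, src, dst, service, action in POLICIES:
        forward = (in_network(src, pkt["src"]) and in_network(dst, pkt["dst"])
                   and service(pkt))
        # Ports are reversed only when source and destination networks differ.
        backward = (src != dst and in_network(src, pkt["dst"])
                    and in_network(dst, pkt["src"]) and service(pkt, reverse=True))
        if forward or backward:
            return order, action
    return None


reply = {"src": "30.1.1.7", "dst": "10.0.0.5", "proto": "tcp", "sport": 80, "dport": 40000}
print(classify(reply))  # (2, 'Forward, RealTime')
```

Swapping the positions of two entries in `POLICIES` is enough to reproduce the ordering effect described in the third note: a more specific policy placed after a broader one is never reached.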

Example 2 - BWM for P2P

Peer to Peer (P2P) file trading is the single fastest-growing consumer of network capacity and is becoming one of the major challenges for carriers and service providers due to the continuous growth of file sharing applications and users. Carriers and service providers are required to invest more time, money and resources to satisfy the endless demand for the ever-increasing bandwidth consumed by P2P users. However, providing more bandwidth is not an effective solution, since the added bandwidth will immediately be used by the P2P traffic. A true solution for the never-ending P2P bandwidth consumption is a traffic shaping solution, where it is possible to completely block, prioritize or limit the variety of P2P applications.

P2P Bandwidth Management

The Bandwidth Management module includes a feature set that allows administrators to have full control over their available bandwidth. Using these features, applications can be prioritized according to a wide array of criteria, while taking the bandwidth used by each application into account. As sessions are prioritized, bandwidth thresholds can be configured to ensure a guaranteed bandwidth for a certain application and/or to keep it below a pre-determined bandwidth limit.

The Bandwidth Management module supports the following P2P applications:
o BitTorrent
o eDonkey
o eMule
o Kazaa
o WinMX
o Gnutella
o Winny
o FastTrack

Configuration

Bandwidth Management for P2P traffic can be done both for traffic passing via the device (CID or DefensePro) and for traffic that is load balanced by the device (LinkProof, SecureFlow or AppDirector). If BWM for P2P is needed for load balanced traffic only (sessions which appear in the Client Table), there is no need to enable the Session Table.

1. From device setup, click on Global and edit Session Table Parameters. Ensure that the Session Table Status checkbox is checked and "Session Table Lookup Mode" is set to "Full Layer 4".
Note: In some environments, based on traffic volumes, there might be a need to change the session table tuning.

2. From BWM Global Parameters, change the classification mode to Policies and make sure that Application Classification is set to "Per Session". Click OK and reboot the device.

3. Create a BWM policy for P2P traffic. For BitTorrent and applications that use the BitTorrent network, select the Service Type Grouped Service and, from the Service Name dropdown menu, select the BT group. For other P2P applications, select the P2P group.

Conclusion

As part of Radware's APSolute OS architecture, bandwidth management allows network administrators to provide controlled traffic differentiation through their ITM infrastructure. Bandwidth management is a powerful value-added service that APSolute OS delivers at sensitive and strategic locations on the networks where Radware devices can be deployed. Through this value-added service, user traffic can be controlled with great granularity and network resources can be efficiently optimized.

Appendix A

| Category | Service Name | Description | Filter Type |
|---|---|---|---|
| ERP/CRM | Sap | | Basic |
| Database | Mssql | Microsoft SQL service group | Group |
| Database | Mssql-monitor | SQL monitoring traffic | Basic |
| Database | Mssql-server | SQL server traffic | Basic |
| Database | Oracle | Oracle database application service group | Group |
| Database | Oracle-v1 | Oracle SQL*Net v1-based traffic (v6, Oracle7) | Basic |
| Database | Oracle-v2 | Oracle SQL*Net v2/Net 8-based traffic (Oracle7, 8, 8i, 9i) | Basic |
| Database | Oracle-server1 | Oracle Server (e-business solutions) on port 1525 | Basic |
| Database | Oracle-server2 | Oracle Server (e-business solutions) on port 1527 | Basic |
| Database | Oracle-server3 | Oracle Server (e-business solutions) on port 1529 | Basic |
| Thin Client or Server Based | citrix | Citrix connectivity application service group. Enables any type of client to access applications across any type of network connection. | Group |
| Thin Client or Server Based | citrix-ica | Citrix Independent Computer Architecture (ICA) | Basic |
| Thin Client or Server Based | citrix-rtmp | Citrix RTMP | Basic |
| Thin Client or Server Based | citrix-ima | Citrix Integrated Management Architecture | Basic |
| Thin Client or Server Based | citrix-ma-client | Citrix MA Client | Basic |
| Thin Client or Server Based | citrix-admin | Citrix Admin | Basic |
| Peer-to-Peer | p2p | Peer-2-peer applications | Group |
| Peer-to-Peer | bt | Bittorrent File Sharing Application. Includes the following basic filters: bitports, Bittorrent, btannounce, bt-bitfield, bt-cancel, bt-choke, bthave, bt-interested, bt-ninterested, bt-peer, btpiece, bt-port, udp1, bt-udp2, bt-unchoke | Group |
| Peer-to-Peer | edonkey | Internet File Sharing Application | Basic |
| Peer-to-Peer | gnutella | File sharing and distribution network | Basic |
| Peer-to-Peer | fasttrack | Data transfer protocol used by Kazaa | Basic |
| Peer-to-Peer | kazaa | Kazaa File Sharing Application (Note: Music City Morpheus and Grokster also classify as Kazaa) | Basic |
| Peer-to-Peer | winmx(tcp) | File Sharing Application | Basic |
| Peer-to-Peer | winmx(udp) | File Sharing Application | Basic |
| Peer-to-Peer | winny | File Sharing Application | Basic |
| Peer-to-Peer | ed2ksignature | EDonkey & eMule File Sharing Application (dynamic port) | Advanced |
| | dns | Domain Name Server protocol | Basic |
| | ftp-session | File Transfer Protocol service, both FTP commands and data | Basic |
| | http | Web traffic | Basic |
| | http-alt | Web traffic on port 8080 | Basic |
| | https | Secure web traffic | Basic |
| | icmp | Internet Control Message Protocol | Basic |
| | ip | IP traffic | Basic |
| | nntp | Usenet NetNews Transfer Protocol | Basic |
| | telnet | | Basic |
| | tftp | | Basic |
| | udp | | Basic |
| Instant Messaging | aol-msg | AOL Instant Messenger | Basic |
| Instant Messaging | aim-aol-any | AIM/AOL Instant Messenger | Basic |
| Instant Messaging | icq | ICQ | Basic |
| Instant Messaging | msn-msg | MSN Messenger Chat Service | Basic |
| Instant Messaging | msn-any | MSN Messenger Chat Service (any port) | Basic |
| Instant Messaging | yahoo-msg | Yahoo! Messenger | Group |
| Instant Messaging | yahoo-msg1 | Yahoo! Messenger on port 5000 | Basic |
| Instant Messaging | yahoo-msg2 | Yahoo! Messenger on port 5050 | Basic |
| Instant Messaging | yahoo-msg3 | Yahoo! Messenger on port 5100 | Basic |
| Instant Messaging | yahoo-any | Yahoo! Messenger on any port | Basic |
| Email | mail | | Group |
| Email | smtp | | Basic |
| Email | imap | | Basic |
| Email | Pop3 | | Basic |
