The Ninth International Conference on Electronic Measurement & Instruments ICEMI2009

New Approach of Imagery Generation and Target Recognition Based on 3D LIDAR Data

Jinhui Lan, Zhuoxun Shen

(Department of Measurement and Control Technologies, School of Information Engineering, University of Science and Technology Beijing, Beijing 100083, China)

E-mail: shenzhuoxun@gmail.com

Abstract: The Light Detection and Ranging (LIDAR) sensor is an advanced, high-accuracy 3D measurement technology. The processing of 3D point cloud data collected by a LIDAR sensor is of topical interest for 3D target recognition. In this paper, a new approach to imagery generation and target recognition based on 3D LIDAR data is presented. The raw 3D point cloud data are transformed and interpolated so that they can be stored in a 2D matrix. The target imagery is then generated and visualized by means of the height-gray mapping principle proposed in this paper. For different poses of the target, the affine invariant moments of the target imagery are selected as features for recognition because of their invariance under rotation, scaling, translation and affine transformation. The BP neural network algorithm and the Support Vector Machine (SVM) algorithm are used for target classification and recognition, and the recognition results of the two algorithms are compared and analyzed in detail. The new method has been applied to target recognition in outdoor experiments. Different types of targets are classified, and the rate of correct recognition is greater than 95%. The outdoor experiments show that the new method can be applied to 3D target recognition effectively and stably.

Key words: LIDAR sensor, target recognition, 3D point cloud, imagery generation, affine invariant moment.

I. INTRODUCTION

In recent years, noticeable progress has been made in optical 3D measurement techniques through interdisciplinary cooperation in optoelectronics, image processing and close-range photogrammetry.

Among the various optical 3D measuring devices, the Light Detection and Ranging (LIDAR) sensor is capable of measuring millions of dense 3D points in a few seconds under various conditions. LIDAR is an optical sensing technology that measures properties of scattered laser light to find the range and/or other information of a distant target.

The processing of 3D point cloud data collected by a LIDAR sensor is of topical interest for 3D target recognition. The 3D point cloud data of a target represent the range between the LIDAR sensor and the target. The range depends only on the geometric size and shape of the target and is not influenced by illumination or temperature disturbances, which are the key problems to solve in target recognition with traditional CCD or infrared images. The LIDAR sensor therefore has advantages for 3D target recognition [1].

This paper proposes a new method of data processing and target imagery generation based on 3D LIDAR data. The target is scanned by the LIDAR sensor in a raster scanning pattern. The raw range data are the XYZ coordinate values of each laser footprint in the coordinate system of the LIDAR sensor. During data processing, the 3D data are transformed so that they can be stored in a 2D matrix. The pseudo-gray imagery of the target is then generated and visualized by means of the height-gray mapping principle presented in this paper.

We obtain many different images of a target as its pose varies in three-dimensional space. The key problem is how to select a low-dimensional feature descriptor that represents the high-dimensional, complex target for recognition. In this paper, the affine invariant moments of the target at different poses are selected as features for classification and recognition because of their invariance under rotation, translation, scaling and affine transformation. Both the BP neural network algorithm and the SVM algorithm are used for target classification and recognition.

II. IMAGERY GENERATION

The LIDAR sensor collects range data over the target region. The coordinate system of the LIDAR sensor is illustrated in Fig.1.


978-1-4244-3864-8/09/$25.00 2009 IEEE


Fig.1. (a) The LIDAR sensor is placed approximately vertical to the ground. The XOY plane of the coordinate system is parallel to the ground, which is assumed to be a horizontal plane. The Z-axis is perpendicular to the XOY plane and represents the height direction of the target. The Y-axis represents the depth direction. (b) 3D point cloud data of the target.

The raw coordinate values of each 3D point are stored in a text file, shown opened in Windows Notepad in Fig.2. Each row represents one laser footprint, and the three columns hold the x, y and z coordinate values of that footprint, respectively.

Fig.2. A section of the data stored in a text file, viewed in Windows Notepad. Each row holds the three-dimensional coordinates of one laser footprint: the first column is the x-axis coordinate value, the second column is the y-axis coordinate value, and the third column is the z-axis coordinate value.

The research was carried out in MATLAB.

A. Data processing

X(i), Y(i) and Z(i) are three column vectors that store the three coordinate values of each laser footprint, where i is the serial number of the point. The scanning mode of the LIDAR sensor [2] is raster scanning, illustrated in Fig.3.

Fig.3. Schematic diagram of raster scanning. Black points represent laser footprints. Solid lines represent the direction of the real scanning process. Dashed lines represent supposed scanning paths that are not part of the real scanning process. The Z-axis is perpendicular to the paper surface and points toward the reader.

Under the raster scanning pattern, a large jump in the x-axis coordinate value occurs between the last point of one scanning line and the first point of the next scanning line.

$$|X(i+1) - X(i)| > T \qquad (1)$$

where X(i+1) and X(i) are the x-axis coordinate values of two adjacent points in the raw data, and T is a threshold value related to the scanned region. It can be set from prior information.

Two adjacent points that satisfy Eq.(1) can be considered the end point of one scanning line and the first point of the next. The total number of scanning lines (parameter R in Eq.(2)) is thus determined. Because of the precision error of the equipment, low-reflectance objects and atmospheric attenuation of the laser signal, not all emitted laser pulses are received. Therefore, the number of laser footprints is not the same in every scanning line.

$$C = \max\big(M(j)\big), \quad j = 1, 2, 3, \ldots, R \qquad (2)$$

where M(j) is the number of laser footprints in the j-th scanning line, j is the serial number of the scanning line, and R is the total number of scanning lines.
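The line-splitting rule of Eq.(1) and the matrix size of Eq.(2) can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' MATLAB code: the function name `split_scan_lines`, the sample coordinates and the threshold value are all assumptions made for the example.

```python
def split_scan_lines(xs, zs, threshold):
    """Split a raster-scanned point sequence into scanning lines.

    A new line starts wherever the jump in x between two adjacent
    points exceeds `threshold` (the role of T in Eq. (1)).
    """
    if len(xs) != len(zs):
        raise ValueError("xs and zs must have the same length")
    lines = []
    current = []
    for i, (x, z) in enumerate(zip(xs, zs)):
        if i > 0 and abs(x - xs[i - 1]) > threshold:
            lines.append(current)  # close the previous scanning line
            current = []
        current.append((x, z))
    if current:
        lines.append(current)
    return lines


# Hypothetical data: three scanning lines, the second one has a lost footprint.
xs = [0.0, 0.1, 0.2, 0.3, 0.0, 0.1, 0.3, 0.0, 0.1, 0.2, 0.3]
zs = [1.0, 1.1, 1.3, 1.2, 1.0, 1.4, 1.1, 0.9, 1.0, 1.2, 1.1]

lines = split_scan_lines(xs, zs, threshold=0.25)
R = len(lines)                          # number of scanning lines
C = max(len(line) for line in lines)    # Eq. (2): longest scanning line
print(R, C, [len(line) for line in lines])
```

The threshold has to be larger than the widest gap left by a lost footprint inside a line and smaller than the jump between lines, which is why it depends on the scanned region.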

Parameters R and C are taken as the row number and column number of the 2D matrix that stores the laser footprint data. However, not every element of the matrix is defined, because the values of M(j) are not all equal. We use interpolation to fill in the missing data.

If the number of laser footprints in a scanning line is less than C, we use cubic spline interpolation to construct new point data for this scanning line, so that the number of points becomes equal to C. Compared with linear interpolation and high-degree polynomial interpolation, cubic spline interpolation uses low-degree piecewise polynomials, which keeps the computing time low while giving a smoother curve and a smaller error. More importantly, it does not suffer from Runge's phenomenon. After interpolation, the number of laser footprints in every scanning line is equal to C. We use the matrix to save the z-axis coordinate value of each point, including both real and interpolated laser footprints, so every element of the matrix is defined.
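A short Python sketch of this resampling step is given here. It rests on several assumptions that the paper does not state: a natural cubic spline (zero second derivative at both ends) is used, the C new points are placed at evenly spaced x positions across the line, and the names `natural_cubic_spline` and `resample_line` are hypothetical. The original MATLAB implementation may use different end conditions.

```python
from bisect import bisect_right


def natural_cubic_spline(xs, ys):
    """Return a function evaluating the natural cubic spline through (xs, ys).

    xs must be strictly increasing and contain at least two points.
    """
    n = len(xs) - 1
    if n < 1 or len(ys) != len(xs):
        raise ValueError("need at least two points and matching lengths")
    h = [xs[i + 1] - xs[i] for i in range(n)]
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = 3.0 * (ys[i + 1] - ys[i]) / h[i] - 3.0 * (ys[i] - ys[i - 1]) / h[i - 1]
    # Forward sweep of the tridiagonal system for the quadratic coefficients c.
    diag = [1.0] * (n + 1)
    mu = [0.0] * (n + 1)
    z = [0.0] * (n + 1)
    for i in range(1, n):
        diag[i] = 2.0 * (xs[i + 1] - xs[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / diag[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / diag[i]
    # Back substitution gives the coefficients of each cubic piece.
    b = [0.0] * n
    c = [0.0] * (n + 1)
    d = [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (ys[j + 1] - ys[j]) / h[j] - h[j] * (c[j + 1] + 2.0 * c[j]) / 3.0
        d[j] = (c[j + 1] - c[j]) / (3.0 * h[j])

    def evaluate(x):
        # Pick the piece containing x, clamping to the first or last piece.
        j = min(max(bisect_right(xs, x) - 1, 0), n - 1)
        t = x - xs[j]
        return ys[j] + b[j] * t + c[j] * t * t + d[j] * t * t * t

    return evaluate


def resample_line(xs, zs, count):
    """Resample one scanning line to `count` evenly spaced points (count >= 2)."""
    spline = natural_cubic_spline(xs, zs)
    step = (xs[-1] - xs[0]) / (count - 1)
    return [spline(xs[0] + k * step) for k in range(count)]


# Hypothetical scanning line with one lost footprint at x = 2.0.
line_x = [0.0, 1.0, 3.0]
line_z = [1.0, 3.0, 7.0]
resampled = resample_line(line_x, line_z, count=4)
print(resampled)
```

Each row of the R-by-C matrix would then be filled with the resampled z values of one scanning line.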

B. Imagery generation

To obtain the imagery of the scanned scene, we must first compute the gray value of each pixel. In this section, we propose the height-gray mapping principle to obtain the pseudo-gray imagery of the target from the z-band information (that is, the height in the scene).

Each element of the matrix is a z-axis coordinate value that represents the height of a point. We find the maximal and minimal values in the matrix, which represent the highest point and the lowest point in the scanned scene, respectively. The gray value of the highest point is set to 255 and the gray value of the lowest point is set to 0. The gray values of the other points are mapped linearly between 0 and 255.

$$\mathrm{Gray} = 255 \times \frac{Z - Z_{\min}}{Z_{\max} - Z_{\min}} \qquad (3)$$

where Z is the z-axis coordinate value of the point to be computed, and $Z_{\max}$ and $Z_{\min}$ are the z-axis coordinate values of the highest point and the lowest point, that is, the maximal and minimal heights. According to Eq.(3), the gray value of each pixel can be calculated. The imagery of the scanned scenes is shown in Fig.4.
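The height-gray mapping of Eq.(3) can be sketched as follows. The function name `height_to_gray` and the sample heights are illustrative assumptions, and rounding to the nearest integer gray level is also an assumption, since the paper does not say how fractional values are handled.

```python
def height_to_gray(height_matrix):
    """Map a 2D matrix of heights to gray levels 0..255 following Eq. (3)."""
    flat = [z for row in height_matrix for z in row]
    z_min, z_max = min(flat), max(flat)
    if z_max == z_min:
        # Flat scene: every point is both highest and lowest; use gray level 0.
        return [[0 for _ in row] for row in height_matrix]
    scale = 255.0 / (z_max - z_min)
    return [[int(round((z - z_min) * scale)) for z in row] for row in height_matrix]


# Hypothetical 2 x 3 height matrix (heights in metres).
heights = [
    [0.0, 0.5, 1.0],
    [2.0, 1.5, 1.0],
]
gray = height_to_gray(heights)
print(gray)
```

Because the mapping is normalized by the height range of each scene, the same target can receive different gray levels in scenes with different lowest or highest points.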

Fig.4. Pseudo-gray imagery of targets generated by height-gray mapping. Images (a) and (b) each show a single target in the scanned scene. Image (c) shows two classes of target in one scanned scene.

III. TARGET RECOGNITION

The main parts of the target recognition framework are illustrated in Fig.5. The imagery of the scanned scene has been obtained in Sec.2. In image processing, we apply filtering, thresholding and region labelling. A median filter is used to remove salt-and-pepper noise, and a weighted average filter is used to smooth the discrete sampling values of the laser points. The processed imagery is segmented by a heuristic approach based on visual inspection of the histogram, so that the target and the background can be distinguished. The targets are then labelled with fixed rectangles. The affine moments of the target region are extracted as features. They are more invariant than the traditional Hu moments under perspective transformation of images. The improved BP network algorithm and the SVM algorithm are applied to target classification and recognition.
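Two of the image processing steps named above, median filtering and thresholding, can be sketched in Python as follows. This is a minimal sketch under assumptions: a 3 x 3 window with replicated borders is used, the threshold level is a hypothetical fixed value (the paper chooses it by inspecting the histogram), and the weighted average filter and region labelling are not shown.

```python
def median_filter_3x3(image):
    """Apply a 3 x 3 median filter; border pixels reuse the nearest valid pixel."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
            ]
            window.sort()
            out[r][c] = window[4]  # middle of the 9 sorted values
    return out


def threshold(image, level):
    """Return a binary image: 1 where the gray value exceeds `level`, else 0."""
    return [[1 if v > level else 0 for v in row] for row in image]


# Hypothetical 5 x 5 gray image: dark background (10), bright target (200),
# one "salt" pixel (255) and one "pepper" pixel (0).
noisy = [[10, 10, 10, 200, 200] for _ in range(5)]
noisy[2][1] = 255
noisy[1][4] = 0

filtered = median_filter_3x3(noisy)
binary = threshold(filtered, level=100)
print(filtered)
print(binary)
```

The median filter removes the two isolated outliers while keeping the sharp edge between background and target, which is what makes the later thresholding step reliable.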

Fig.5. Main parts of the target recognition framework: image generation, image processing, feature extraction and target recognition.

The original pseudo-grey images are processed. The

results are shown in Fig.6.

Fig.6. Processed pseudo-gray imagery of targets. Images (a) and (b) each show a single target in the scanned scene. Image (c) shows two classes of target in one scanned scene. The targets are labelled with green rectangles.

For a digital image f(x,y), the moment of order (p+q) is defined as

$$m_{pq} = \int_x \int_y x^p y^q f(x,y)\,dx\,dy \qquad (4)$$

where p, q = 0, 1, 2, ...

The central moments are defined as

$$\mu_{pq} = \int_x \int_y (x - \bar{x})^p (y - \bar{y})^q f(x,y)\,dx\,dy \qquad (5)$$

where $\bar{x} = m_{10}/m_{00}$ and $\bar{y} = m_{01}/m_{00}$.

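The affine moments are defined as [3]

$$A_1 = \frac{\mu_{20}\mu_{02} - \mu_{11}^2}{\mu_{00}^4}$$

$$A_2 = \frac{\mu_{30}^2\mu_{03}^2 - 6\mu_{30}\mu_{12}\mu_{21}\mu_{03} + 4\mu_{30}\mu_{12}^3 + 4\mu_{03}\mu_{21}^3 - 3\mu_{21}^2\mu_{12}^2}{\mu_{00}^{10}}$$

$$A_3 = \frac{\mu_{20}\left(\mu_{21}\mu_{03} - \mu_{12}^2\right) - \mu_{11}\left(\mu_{30}\mu_{03} - \mu_{12}\mu_{21}\right) + \mu_{02}\left(\mu_{30}\mu_{12} - \mu_{21}^2\right)}{\mu_{00}^7}$$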


where the set of affine moments $A_1$, $A_2$, $A_3$ is invariant to translation, rotation, scaling and affine transformation.
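The moment definitions above can be sketched for a discrete image by replacing the integrals with sums over pixels. The function name `affine_moments`, the helper `make_blob` and the test shapes are illustrative assumptions; the formulas follow the standard three affine moment invariants, which is how the damaged equations are read here.

```python
def affine_moments(image):
    """Return (A1, A2, A3) of a 2D gray or binary image given as nested lists."""
    m00 = m10 = m01 = 0.0
    for y, row in enumerate(image):
        for x, v in enumerate(row):
            m00 += v
            m10 += x * v
            m01 += y * v
    if m00 == 0:
        raise ValueError("image has no mass")
    x_bar, y_bar = m10 / m00, m01 / m00

    def mu(p, q):
        # Central moment of order (p + q), Eq. (5) with sums instead of integrals.
        return sum(
            ((x - x_bar) ** p) * ((y - y_bar) ** q) * v
            for y, row in enumerate(image)
            for x, v in enumerate(row)
        )

    mu00 = m00  # the zeroth central moment equals m00
    mu20, mu02, mu11 = mu(2, 0), mu(0, 2), mu(1, 1)
    mu30, mu03, mu21, mu12 = mu(3, 0), mu(0, 3), mu(2, 1), mu(1, 2)

    a1 = (mu20 * mu02 - mu11 ** 2) / mu00 ** 4
    a2 = (mu30 ** 2 * mu03 ** 2 - 6 * mu30 * mu12 * mu21 * mu03
          + 4 * mu30 * mu12 ** 3 + 4 * mu03 * mu21 ** 3
          - 3 * mu21 ** 2 * mu12 ** 2) / mu00 ** 10
    a3 = (mu20 * (mu21 * mu03 - mu12 ** 2)
          - mu11 * (mu30 * mu03 - mu12 * mu21)
          + mu02 * (mu30 * mu12 - mu21 ** 2)) / mu00 ** 7
    return a1, a2, a3


def make_blob(rows, cols, top, left):
    """Place a small asymmetric binary shape at (top, left) in an empty image."""
    img = [[0] * cols for _ in range(rows)]
    for r, c in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 0), (2, 1)]:
        img[top + r][left + c] = 1
    return img


# The same shape at two positions should give the same affine moments.
moments_a = affine_moments(make_blob(8, 8, 1, 1))
moments_b = affine_moments(make_blob(8, 8, 4, 3))
print(moments_a)
print(moments_b)
```

For a filled rectangle, $A_1$ approaches 1/144 (about $6.9 \times 10^{-3}$) as the resolution grows, whatever the side lengths, which is a quick sanity check for an implementation.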

In the outdoor experiments, we constructed a database of target imagery. A single vehicle is rotated in steps of five degrees and scanned by the LIDAR sensor at each pose. Then vehicles of two classes are placed together and scanned in one scene. The affine moments of each target image are extracted as features for recognition.

Fig.7. Four typical poses of the targets. Images (a)~(d) show the transporter. Images (e)~(h) show the racing car. Target regions are labelled with green rectangles.

Then the affine moments A

1

,A

2

,A

3

can be calculated.

Traditional Hu moments are also calculated [4]. Here, we

select the four invariants

1

~

4

. Te experimental data

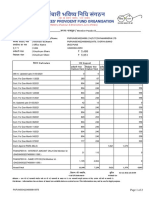

are shown in Table 1 and Table 2.

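Table 1. Affine moments and Hu moments of transporter

| Moment (scale) | (a) | (b) | (c) | (d) |
|---|---|---|---|---|
| $A_1$ ($10^{-3}$) | 7.751 | 7.612 | 7.686 | 7.673 |
| $A_2$ ($10^{-8}$) | 1.340 | 1.162 | 1.171 | 1.326 |
| $A_3$ ($10^{-6}$) | 8.740 | 8.608 | 8.783 | 8.713 |
| $\phi_1$ ($10^{-1}$) | 1.852 | 3.017 | 3.347 | 3.420 |
| $\phi_2$ ($10^{-2}$) | 0.762 | 6.215 | 8.173 | 8.642 |
| $\phi_3$ ($10^{-3}$) | 0.099 | 2.438 | 3.153 | 3.556 |
| $\phi_4$ ($10^{-3}$) | 0.002 | 1.124 | 1.521 | 1.741 |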

From the tables, we can see that the affine moment values $A_1$, $A_2$, $A_3$ are very close within the same class and clearly separated between different classes. Compared with the traditional Hu moments, the standard deviations of the affine moments are smaller, so the affine moments have excellent invariance.

The affine moments are then used as inputs for the BP neural network and the SVM classifier.

The BP neural network is a supervised neural network classifier that learns to classify inputs from given features. BP neural networks can be used to model complex relationships between inputs and outputs or to find patterns in data. In essence, a BP neural network can be viewed as a function that maps input vectors to output vectors. The knowledge is represented by the interconnection weights, which are adjusted during the learning stage by the back-propagation learning algorithm. This algorithm uses a gradient search technique to minimize the mean square error between the actual output pattern of the network and the desired output pattern [5, 6].

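Table 2. Affine moments and Hu moments of racing car

| Moment (scale) | (e) | (f) | (g) | (h) |
|---|---|---|---|---|
| $A_1$ ($10^{-3}$) | 6.802 | 6.681 | 6.747 | 6.662 |
| $A_2$ ($10^{-10}$) | 3.864 | 3.565 | 3.746 | 3.496 |
| $A_3$ ($10^{-6}$) | 1.324 | 1.299 | 1.497 | 1.308 |
| $\phi_1$ ($10^{-1}$) | 3.142 | 3.486 | 3.018 | 3.239 |
| $\phi_2$ ($10^{-2}$) | 7.208 | 9.434 | 6.429 | 7.834 |
| $\phi_3$ ($10^{-4}$) | 5.731 | 5.878 | 5.255 | 5.257 |
| $\phi_4$ ($10^{-4}$) | 3.199 | 0.596 | 2.162 | 2.254 |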

The numbers of neurons in the input layer and the output layer are determined entirely by the requirements of the application. As discussed above, under the experimental conditions, the varying parameters of the target imagery are the affine moments $A_1$, $A_2$, $A_3$, so the number of neurons in the input layer is 3. If the target is a transporter, the desired output is defined as (1, 0); if the target is a racing car, the desired output is defined as (0, 1). The number of neurons in the output layer is therefore 2. A BP architecture with one hidden layer is enough for the majority of applications, so only one hidden layer is adopted here. So far there is no complete theory to guide the choice of the number of neurons in the hidden layer. An empirical formula for the number of hidden-layer neurons is given in Eq.(6). A reasonable value can be selected by testing, one by one, the values that satisfy Eq.(6). The value L = 6 gives the minimal error between the actual output and the desired output.

$$L = \sqrt{I + O} + a, \quad 1 \le a \le 10 \qquad (6)$$

where L is the number of neurons in the hidden layer, I is the number of neurons in the input layer, O is the number of neurons in the output layer, and a is a constant between 1 and 10.

Among the training algorithms, the Levenberg-Marquardt (LM) algorithm is typically the fastest for small and medium-sized networks, although it needs more memory than other algorithms. In this paper, the LM algorithm is used to train the network with the Neural Network Toolbox of MATLAB. The training parameters are set as follows: the error goal is $10^{-5}$, the learning rate is 0.02 and the maximum number of training epochs is 300.

The support vector machine is a very useful technique for data classification based on the principle of structural risk minimization. SVM-based classifiers are claimed to have good generalization properties compared with conventional classifiers, because training an SVM classifier minimizes the so-called structural misclassification risk, whereas traditional classifiers are usually trained to minimize the empirical risk [7].

The recognition results are shown in Fig.8.

Fig.8. Different types of target are recognized.

We chose 60 images of the two target classes for testing. The recognition results are given in Table 3.

Table 3. Results of recognition by BP neural network and SVM

| | BP neural network | SVM |
|---|---|---|
| Number of correct recognitions | 53 | 58 |
| Number of false recognitions | 7 | 2 |
| Total number | 60 | 60 |
| Recognition rate | 88.33% | 96.67% |
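The recognition rates in Table 3 follow directly from the counts, as this small Python check shows. The function name `recognition_rate` and the dictionary layout are illustrative choices; the numbers are the ones reported in the table.

```python
def recognition_rate(correct, total):
    """Return the percentage of correctly recognized test images."""
    return 100.0 * correct / total


# (correct, false) counts from Table 3.
counts = {"BP neural network": (53, 7), "SVM": (58, 2)}

rates = {}
for name, (correct, false) in counts.items():
    total = correct + false
    rates[name] = round(recognition_rate(correct, total), 2)
    print(f"{name}: {correct}/{total} = {rates[name]:.2f}%")
```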

The training result of the BP neural network is not stable, and a suitable model for training is difficult to determine. The computing time of the BP neural network is longer than that of the SVM. The SVM algorithm does not suffer from over-learning, and its recognition rate is higher than that of the BP neural network. It has advantages in the classification of small samples.

IV. CONCLUSION

In this paper we proposed a sequential approach to target imagery generation, target classification and recognition. The processing of the data collected by the LIDAR sensor is novel: it transforms the one-dimensional, non-visual raw data into two-dimensional visual imagery. The imagery generation is based on the principle of height-gray mapping, which can effectively distinguish the target from the environment. Targets are classified and recognized by means of a feature-based algorithm, which has advantages over a model-based algorithm. A model-based algorithm requires a 3D model of each target to be stored in a database. These models are often difficult to construct and are not readily available for all the types of targets that need to be recognized. Instead of using a stored model, a classifier can be constructed to learn a model of each target type from a set of features observed in training imagery. The performance of a classifier in this feature-based approach depends on the quality of the features used.

The affine moments are more invariant than the traditional moments under perspective transformation of images. The BP network and SVM methods for classification and recognition were implemented in outdoor experiments, and the target recognition system was built with the affine moments and the SVM algorithm. In the work described in this paper, we have succeeded in processing the raw data collected by the LIDAR sensor. In the future, we expect to use a non-scanning system to increase the imaging speed of the target region. Once sufficient imaging speed is available, real-time performance of the recognition system can be achieved. If the system is also carried on an aircraft, the experimental data will be more comprehensive.

REFERENCES

[1] A. Vasile, R. M. Marino. Pose-independent automatic target detection and recognition using 3-D ladar data[J], Proc. SPIE 5426, 67~83 (2004)

[2] R. Shu, Y. Hu, X. Xue. Scanning modes of an airborne scanning laser ranging-imager sensor[J], Proc. SPIE 4130, 760~767 (2000)

[3] J. Heikkila. Pattern matching with affine moment descriptors[J], Pattern Recognition, 37(9), 1825~1834 (2004)

[4] Chen Qing, Petriu Emil, Yang Xiaoli. A comparative study of Fourier descriptors and Hu's seven moment invariants for image recognition[C], Canadian Conference on Electrical and Computer Engineering, Vol.1, pp. 0103~0106, 02 May, 2004.

[5] L. V. Fausett. Fundamentals of neural networks: Architectures, Algorithms and Applications[M], Prentice Hall press, US. (1994)

[6] S. Haykin. Neural networks: A comprehensive foundation (2nd Edition)[M], Prentice Hall press, US. (1998)

[7] V. N. Vapnik. The nature of statistical learning theory[M], Springer press, New York. (1999)
