Binocular Hands and Head Tracking using Projective Joint Probabilistic Data Association Filter

Nataliya Shapovalova

Heriot-Watt University, University of Girona, University of Burgundy

A Thesis Submitted for the Degree of

MSc Erasmus Mundus in Vision and Robotics (VIBOT)

2009

Abstract

Tracking and reconstructing hand and head trajectories is an important problem whose solution is required in many applications, such as video surveillance, human-computer interfaces, and programming by demonstration.

Hands and head tracking plays a particular role in recognizing manipulation actions and manipulated objects, since in most cases the trajectories of the hands and head provide the main cue for such a system.

The goal of this thesis is to estimate and recover the 3D trajectories of both hands and the head using a binocular vision system. Two main problems are discussed: data association and occlusions, which are typical for multitarget tracking, and 3D trajectory reconstruction, which is based on fusing information from several views.

The contribution of the thesis is the combination of the Joint Probabilistic Data Association Filter, applied to multitarget tracking, and the Projective Kalman Filter, applied to recovering 3D trajectories from observations by several cameras, into one general framework: the Projective Joint Probabilistic Data Association Filter.

Research is what I'm doing when I don't know what I'm doing...

Wernher von Braun

Contents

1 Introduction
1.1 Motivation
1.2 Problem Formulation
1.3 Scope of the Thesis and Contribution of the Thesis
1.4 Outline of the Thesis
2 State of the Art
2.1 Hands and Head Detection and Feature Extraction
2.2 Tracking Methods
2.2.1 3D Tracking
2.2.2 Multitarget Tracking and Occlusions
3 Methodology
3.1 Hands and Head Detection
3.1.1 Detection of Skin-Color Areas
3.1.2 Parameters Extraction
3.2 Kalman Filter
3.2.1 Kalman Filter Data Model
3.2.2 Kalman Filter Algorithm
3.3 3D Tracking: Projective Kalman Filter
3.3.1 Projective Geometry and Camera Model
3.3.2 Projective Kalman Filter
3.4 Multitarget Tracking: Joint Probabilistic Data Association Filter
3.4.1 Problem Formulation
3.4.2 Association Probabilities Estimation
3.5 3D Multitarget Tracking: Projective Joint Probabilistic Data Association Filter
3.6 Initialization of Trackers
4 Results
4.1 System Setup
4.2 Experiment Results Analysis
5 Conclusions
5.1 Future work
Bibliography

List of Figures

1.1 The manipulation actions recognition system . . . . . . . . . . . . . . . . . . . . 3

2.1 General framework of hands and head tracking . . . . . . . . . . . . . . . . . . . 7

3.1 Original image . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.2 Skin color segmentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.3 Background subtraction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

3.4 Detection of hands and head . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

3.5 Feature extraction using weighted centre . . . . . . . . . . . . . . . . . . . . . . . 15

3.6 Correspondance between real world and observation . . . . . . . . . . . . . . . . 16

3.7 Fitting the ellipse model (a) and error calculation (b): N

C

is number of brown

pixels, N

E

is number of orange pixels . . . . . . . . . . . . . . . . . . . . . . . . . 16

3.8 Example of error estimation for a case when there are 3 objects of interest in the

scene . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

3.9 Selection of represantative points . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

3.10 The ongoing Kalman lter cycle . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

3.11 Pinhole camera model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

3.12 Example with two targets and three measurements . . . . . . . . . . . . . . . . . 24

3.13 Initial image frame . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.14 Triangulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

iii

4.1 Selected frames from PJPDAF tracking; red tracker - head, green tracker - right

hand, blue tracker - left hand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

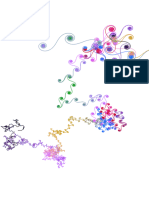

4.2 3D tracking by PJPDAF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.3 Accuracy estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.4 3D trajectory of an action drinking a coke; red tracker - head, green tracker -

right hand, blue tracker - left hand . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4.5 Selected frames from PJPDAF tracking: a boy is drinking a coke; red tracker -

head, green tracker - right hand, blue tracker - left hand . . . . . . . . . . . . . . 35

4.6 Segmentation of frame 21, both hands are presented as one skin-color area . . . . 36

4.7 Selected frames from PJPDAF tracking; red tracker - head, green tracker - right

hand, blue tracker - left hand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

iv

List of Tables

4.1 Parameters and their description

Chapter 1

Introduction

1.1 Motivation

Automatic recognition of human activity is useful in a number of domains, for example:

Video Surveillance

Human-Computer Interaction

Programming by Demonstration

Video surveillance is a growing research area that has recently gained importance due to increased terrorist threats in public places. People are observed by CCTV cameras in airports, railway stations, shopping malls, and subways, among other places. However, automated surveillance is not yet sufficiently developed; in most cases, the video streams from the cameras are watched by people. Given the human factor, the most measurable effect of CCTV is not on crime prevention but on crime detection. In this respect, automatic analysis of manipulation actions is important, as it would help to analyze human behavior and prevent possible crime situations.

As computers and other smart devices become increasingly involved in our everyday life, human-machine interaction also faces new challenges. Conventional input devices (mice, keyboards, buttons, etc.) work well with a desktop computer, but they are not convenient for controlling complex devices such as robots. Inspired by interpersonal communication, natural methods of control have recently become popular; among them, vision-based and audio-based instructions can be distinguished. Vision-based methods are usually based on human actions and provide more information to a robot than verbal instructions. Moreover, manipulation actions can be applied to computer games, e.g. on the Wii. For example, while playing tennis on the Wii, people would not manipulate a toy copy of a tennis racket but would be able to use real equipment and therefore improve their technique.

Programming by Demonstration (PbD) is a method for teaching a computer (or robot) new behavior by demonstrating the task instead of programming it with low-level instructions. The user simply instructs the computer to observe the action. After the observation, the computer creates a program that corresponds to the demonstrated action. This approach has several advantages. First, it allows the creation of user-oriented devices, since every user can program the computer according to his or her needs. Second, the end user does not need to know a formal programming language; he or she only needs to be able to demonstrate the action. In this case, the robot learns like a human. Third, the developer of such a robot does not need to know the details of the actions that the robot will perform.

In this thesis we study tracking of a person's head and hands in 3D. Information about head and hand trajectories is of particular interest to many action recognition applications. It is important to analyze not only interactions between humans but also interactions between humans and objects. Objects play a crucial role in many cases; if they are not taken into account, actions can easily be misinterpreted. Manipulation actions belong to a class of activity in which objects play an important role and give additional information about what the human is doing [33].

1.2 Problem Formulation

The problem of analysing manipulation actions is not trivial because it requires analysis of both the hand and the handled object, which provide complementary information. Knowing something about the object makes it easier to recognize the action; similarly, information about the action is a strong cue for object recognition. Therefore, simultaneous recognition of action and object is of interest; the general framework of such a system was proposed by Kjellstrom et al. [15] and is shown in Fig. 1.1.

As already mentioned, information about both the hands and the object is of interest. It is therefore necessary to define what kind of knowledge about the hands and the object is required and how to extract it.

For hand-based actions, the 3D trajectories of the hands play a key role; e.g. when a person is drinking, the hand moves upwards towards the head; when a person is writing, the hand usually moves smoothly along the surface. However, the absolute location of the hands usually does not provide sufficient information; the position of a hand relative to the head is a much more powerful cue for manipulation action analysis. Moreover, the head sometimes also participates in the manipulation action, e.g. when drinking from a cup; therefore, analysing the 3D head trajectory along with the 3D trajectories of both hands will increase the robustness of the manipulation action recognition system.

Figure 1.1: The manipulation actions recognition system

The parameters of the manipulated object are also of interest. First, it is necessary to estimate its physical characteristics, such as size and color [33]. Second, the location of the object and its relation to the hand trajectories provide additional information about the manipulation action.

For manipulation action recognition, it is also necessary to know when the action starts and when it ends. This can be done with temporal segmentation, which assumes that the action starts when the human picks the object up and ends when the human puts the object down.

T. Moeslund [20] defines tracking as a combination of two processes:

Figure-ground segmentation, which detects the object in the scene;

Temporal correspondence, which associates the detected objects in the current frame with those in previous frames.

In the context of this definition, we can identify several main problems in our application:

object detection and tracking: since we do not know anything about the object, detecting an unknown object is a difficult problem. It is not within the scope of this thesis but is discussed in [33].

hands and head detection and tracking: hands in manipulation actions can move freely, therefore occlusions cannot be avoided; moreover, estimating a 3D trajectory from stereo images requires a special approach because of the uncertainties in tracking. This problem is studied below.

Tracking is a general problem whose solution depends heavily on the system requirements. In our case, we define several preconditions on the environment:

there is a table

a person is sitting behind the table

objects are on the table

the background is nearly static

Once again, this thesis studies the tracking of the head and hands, while object tracking is studied in [33].

1.3 Scope of the Thesis and Contribution of the Thesis

The focus of this master thesis is the analysis of hand and head movement in 3D space. The designed system will be integrated as a module into the Simultaneous Action and Object Recognition system [15], whose architecture is presented in Fig. 1.1. The tracking system is divided into two main layers:

hands and head detection: localizing possible hands and head in the image plane;

3D tracking: estimating temporal correspondences of hands and head.

Estimating 3D position in multitarget tracking requires an approach that takes into account both the uncertainty of data association and the projective nature of the measurements. The contribution of this thesis is the combination of the Joint Probabilistic Data Association Filter (JPDAF) [11], known for handling occlusions, and the Projective Kalman Filter (PKF) [7], known for estimating the 3D location of a target, into the Projective Joint Probabilistic Data Association Filter (PJPDAF).

1.4 Outline of the Thesis

The thesis is organized as follows:

Chapter 1: Introduction. The motivation and problem formulation of the research are presented and analysed; the scope of the thesis is defined.

Chapter 2: State of the Art. Related work in the context of 3D hands and head tracking is analyzed. The pros and cons of existing methods are discussed.

Chapter 3: Methodology. The tracking framework is described. The main contribution of the thesis, the PJPDAF algorithm, is presented.

Chapter 4: Experiments and Results Analysis. The experimental setup and results are discussed.

Chapter 5: Conclusions. The thesis is concluded with a summary, and the scope of future work is discussed.

Chapter 2

State of the Art

Vision-based estimation of human motion is an active and growing research area due to its complexity and the increasing number of potential applications. These applications include human-machine interaction, surveillance, image coding, biometrics, animation, automatic pedestrian detection in cars, and computer games. They cover different aspects of human tracking, including estimation of full-body motion, hand tracking, and even tracking of facial expressions.

Tracking methods have evolved considerably. Magnetic and optical trackers, which were popular in the early days of tracking, are now rarely used even though they provide good accuracy. Glove-based hand tracking [25], previously popular, is also seldom used nowadays. Special equipment such as gloves and trackers makes tracking more robust, but it cannot be used in the everyday environments where tracking algorithms should be applicable. That is why tracking is now done in a more natural way, and researchers try to find ways to track humans in different situations and places. A survey of current tracking techniques can be found in [20].

For simultaneous visual recognition of manipulation actions and manipulated objects, we are mostly interested in tracking the hands and head of the human; we therefore present the general framework of hands and head tracking and do not go into detail about other human tracking systems. Moreover, since the 3D trajectories of both hands and the head are important features for this application, we pay special attention to different approaches to 3D tracking from images. In addition, since we need to track several objects of interest simultaneously, occlusion and data association problems are discussed.

The general framework for hand and head tracking is presented in Fig. 2.1. The framework itself is quite similar to many object tracking algorithms; however, the methods used at the different steps of hand and head tracking are quite limited and mostly deal with skin-color areas.

Figure 2.1: General framework of hands and head tracking

2.1 Hands and Head Detection and Feature Extraction

The purpose of the detection stage is to localize the objects of interest in the image frame. In hands and head tracking there are usually three approaches: background subtraction, skin-color segmentation, and learning-based algorithms.

Background subtraction is a widely used technique that is applied in many applications, e.g. [3, 28]. The basic approach is to subtract an image of the static background from the input frame [3] and apply a threshold to the result, so that only pixels that differ significantly from the background are labeled as foreground. The main difficulty of this method is choosing a proper threshold. If the threshold is too high, the approach easily fails when the foreground and background are similar. If the threshold is too low, the foreground image contains a lot of noise caused by small changes in the background, even changes that are not noticeable to the human eye (e.g. non-uniform lighting).
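The effect of the threshold can be illustrated with a minimal sketch. It assumes grayscale images stored as nested lists of intensities; the function name `subtract_background` and the toy values are hypothetical and not taken from [3].

```python
def subtract_background(frame, background, threshold):
    """Label a pixel as foreground when it differs from the background by more than threshold."""
    return [
        [abs(pixel - bg_pixel) > threshold for pixel, bg_pixel in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


# Toy 2x3 grayscale images: two pixels really changed, two only flicker slightly.
background = [[10, 10, 10], [10, 10, 10]]
frame = [[12, 10, 200], [9, 180, 11]]

mask_ok = subtract_background(frame, background, threshold=30)     # only real changes
mask_low = subtract_background(frame, background, threshold=1)     # flicker leaks in as noise
mask_high = subtract_background(frame, background, threshold=250)  # real changes are missed
```

With the low threshold the pixel that changed from 10 to 12 is already marked as foreground, while the high threshold suppresses every pixel, including the two that clearly changed.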

Another algorithm for background subtraction was introduced by Stauffer and Grimson [24]. The main idea of the algorithm is to statistically model the color of every pixel with a mixture of Gaussians; both foreground and background are represented in this model. Each pixel of the current frame is matched against the existing Gaussian Mixture Model (GMM) of that pixel, estimated from previous frames. If the pixel matches one of the Gaussians in the mixture, the corresponding Gaussian is updated; otherwise, the least probable Gaussian is replaced by a new one with mean equal to the current pixel value, a high initial variance, and a low prior weight. According to the matching result, the pixel is labeled as foreground or background.

A compromise between the two background subtraction methods described above is presented by Wren et al. [32], who model the color of each background pixel with a single Gaussian. This method is a good trade-off between computational complexity and robustness; we therefore adopted it for our application. Details can be found in Section 3.1.1.

Other powerful methods for detection are segmentation and learning. Since many skin-color models require a learning process, these two methods are discussed together. Segmentation in hands and head tracking is usually done using skin color as the main feature. According to [30], there are three different approaches to skin-color detection:

explicitly defined skin region

nonparametric skin distribution modelling

parametric skin distribution modelling

In the first approach, the skin-color region is explicitly defined in some color space. Numerical results for the RGB space can be found in [16].

Different solutions are used for modelling skin color with a nonparametric distribution. One interesting algorithm, introduced by Viola and Jones [31], uses rectangle features and an attentional cascade for head detection. The trained attentional cascade quickly rejects areas of the image without faces and spends more computation on areas where faces may be found. Another set of techniques for nonparametric modelling is based on look-up tables, histograms, and templates [3, 5]. In Bradski [5], the skin-color model is represented by a 1D histogram constructed from the chromatic component of skin-color pixels. Argyros and Lourakis [1] proposed using a Bayesian classifier for skin-blob detection.

For parametric modelling of the skin-color distribution, a Gaussian model is usually applied [14]. An approximation of skin color with three 3D Gaussians is described in [22]. The parameters of these Gaussians are estimated by k-means clustering over a large training set. Pixels in a color input image are classified according to their Mahalanobis distances to the three clusters.
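For reference, the Mahalanobis distance used in such a classifier has the standard form below. The symbols are introduced here for illustration and are not taken from [22]: $x$ is the 3D color vector of a pixel, and $\mu_i$ and $\Sigma_i$ are the mean and covariance of the $i$-th Gaussian. Thresholding the smallest distance with a value $\tau$ is one plausible decision rule, stated here as an assumption.

```latex
d_i^2(x) = (x - \mu_i)^\top \Sigma_i^{-1} (x - \mu_i), \qquad i = 1, 2, 3

x \text{ is labeled as skin if } \min_i d_i^2(x) \le \tau
```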

Nonparametric and parametric skin distribution modelling require a supervised learning step and therefore cannot be used when fully automatic tracking is required. We therefore use the first approach, for which several numerical solutions already exist [30]. Moreover, to detect skin-color areas more robustly, we combine segmentation with background subtraction.

After the skin areas are detected, it is necessary to extract features for further tracking. The selection of these features depends strongly on the final purpose of the application. For example, much research is dedicated not only to tracking the location of the hand, but also to reconstructing its 3D articulated model and recognizing its pose [9, 27]. In such systems, special points of interest such as fingertips and valleys are usually extracted from the segmented hand. In other applications where shape is important, contours can be a good representation of the hand model [1]. In our case, we are interested in the location of the hands and head, and therefore a centroid point representation of the hands and head works well. For simplicity, we refer to it as the point representation.

2.2 Tracking Methods

For head tracking, a special version of the mean shift tracking algorithm is widely used [5]. It operates on a probability distribution that represents the correspondence of the input image to a calculated skin-color model. For point-based tracking, however, statistical methods are more suitable [34]. The advantage of these methods is that they take into account the facts that measurements are usually noisy and that object motion can have random perturbations, which is quite typical for hands. Statistical methods solve these problems by considering the measurement and model uncertainties during object state estimation. Two main algorithms are distinguished: Kalman filters [13] and particle filters [26]. The Kalman filter is used to estimate the state of a linear system in which the state and both the measurement and motion noise are assumed to be normally distributed. Particle filters are used when these constraints are not met. Both Kalman and particle filters have been used in tracking systems; examples for hand and head tracking can be found in [14, 19, 28]. In our system we decided to use Kalman filters: we can reasonably assume Gaussian state and noise models, and Kalman filters are computationally less expensive than particle filters.

2.2.1 3D Tracking

Some applications do not require 3D information; results of tracking hands and head in the image plane can be found e.g. in [28]. Other applications, however, need 3D information about the target. Stereo systems not only give additional information to the recognition module, but also simplify many tasks, such as handling occlusions, overcoming ambiguities, and providing more robust tracking. However, depth estimation is not an easy task even with calibrated cameras. There are three main approaches to 3D estimation in tracking systems:

Fuse data from several views and track directly in 3D;

Estimate the trajectory of the target in the image plane and estimate the 3D location at the end of each frame;

Observe the target in the image plane and update the model of the tracker in 3D.

In the first approach, a disparity map is estimated from the stereo images, and the features extracted for tracking are estimated in 3D space. Nickel et al. [21] search for the head and hands in the disparity map of each new frame using a skin-color distribution. A similar method is described in [6], where the Shape-from-Silhouette technique is used. This approach looks simple and logical; however, the disparity map does not provide sufficiently robust results due to the homogeneous texture of skin blobs.

The second approach is to track the object of interest in the image plane and then, at the end of each frame, estimate the 3D location of the target with simple geometrical calculations; this method is applied e.g. in [29]. In [14], tracking is done in only one view, and the second view is used only for retrieving 3D information. However, this approach cannot guarantee a smooth reconstructed 3D trajectory of the target. Another interesting implementation of depth estimation was presented by Argyros and Lourakis [2]. They matched contour points by first roughly aligning both contours and then optimizing the matching according to the stable marriage problem. The results of this matching are good, but the underlying assumptions of the optimization do not always hold.

The last approach is clearly presented by Canton-Ferrer et al. [7]. Maintaining a model of the target in 3D and updating it in each frame after observing the objects of interest in the image planes makes it possible to fuse data from multiple views and provides a smooth estimate of the target trajectory in 3D space. The model used in our system is similar (Section 3.3).

2.2.2 Multitarget Tracking and Occlusions

When the action of the human is represented by the 3D trajectories of the hands and head, several objects of interest must be tracked simultaneously; therefore, advanced multitarget algorithms should be applied. The main problem in multitarget tracking is the correct association between targets and observations. In most systems, data association in the current frame is done by selecting the skin blob nearest to the tracker position from the previous frame [7], and a one-target-to-one-measurement association is usually assumed [1]. In our application, however, both hands are assumed to move freely, and therefore self-occlusions are an evident problem. In this case, we can have one measurement for several targets; in addition, since the tracked hand can hold a manipulated object, a single target can produce several measurements. A solution to the data association and occlusion problems is given by Fortmann in [11], where the Joint Probabilistic Data Association Filter (JPDAF) is presented and shown to handle multiple measurements and data association ambiguities. More details about the JPDAF are given in Section 3.4.

Chapter 3

Methodology

3.1 Hands and Head Detection

The purpose of this section is to describe how the hands and head are detected in an image frame and how the elliptical models are fitted to these areas.

3.1.1 Detection of Skin-Color Areas

This subsection describes the algorithm used to detect the hands and head in an image similar to the one presented in Fig. 3.1.

Skin Colour Segmentation

The most important part of skin-colour segmentation is to find an appropriate skin-colour model, which should adapt to different skin colours under any lighting conditions. The common RGB representation of colour images is not suitable for characterizing skin colour. In RGB space, the triple (r, g, b) represents not only colour but also luminance. Luminance may vary across a person's face due to the ambient lighting and is not a reliable measure for separating skin from non-skin regions. Luminance can be removed from the colour representation in a chromatic colour space. The HSV colour space has therefore been selected for this purpose, as it produces a good separation between skin colour and other colours under varying illumination [17].

HSV is a cylindrical coordinate system, and the subset of this space defined by the colours is a hexcone, or six-sided pyramid. The Hue value (H) is given by the angle around the vertical axis, with red at 0°, yellow at 60°, green at 120°, cyan at 180°, blue at 240°, and magenta at 300°. The Saturation value (S) varies between 0 and the maximum value of 1 on the triangular sides of the hexcone and refers to the ratio of the purity of the corresponding hue to its maximum purity at S = 1. The Value (V) also ranges between 0 and 1 and is a measure of relative brightness. At the origin, where the V component equals 0, the H and S components become undefined and the resulting colour is black. The set of equations that transforms a point in the RGB colour space to a point in the HSV colour space is presented in [10].

Figure 3.1: Original image

Figure 3.2: Skin color segmentation

For skin-colour modelling, hue and saturation have been reported [8] to be sufficient for discriminating colour information. Therefore, for skin segmentation we used a set of four parameters, the minimum and maximum Hue and the minimum and maximum Saturation (Hmin, Hmax, Smin, Smax), whose values were determined empirically. The results of skin-colour segmentation can be seen in Fig. 3.2.
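A minimal sketch of such a four-parameter test is given below. It uses Python's standard `colorsys` module, in which hue and saturation are both scaled to [0, 1] (so a hue of 60° corresponds to 1/6). The threshold values are placeholders chosen only for this toy example; they are not the empirically determined values used in the thesis.

```python
import colorsys


def is_skin(r, g, b, h_min, h_max, s_min, s_max):
    """Return True when the hue and saturation of an 8-bit RGB pixel fall inside the box."""
    h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h_min <= h <= h_max and s_min <= s <= s_max


def segment_skin(image, h_min=0.0, h_max=0.14, s_min=0.2, s_max=0.7):
    """Apply the (Hmin, Hmax, Smin, Smax) test to every pixel; defaults are hypothetical."""
    return [[is_skin(*pixel, h_min, h_max, s_min, s_max) for pixel in row] for row in image]


# Toy 2x2 RGB image: two skin-like tones, one saturated blue, one neutral gray.
image = [
    [(220, 170, 140), (30, 60, 200)],
    [(128, 128, 128), (200, 150, 120)],
]
mask = segment_skin(image)
```

The Value channel is ignored on purpose, which matches the idea of discarding brightness and keeping only the chromatic information.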

Figure 3.3: Background subtraction

Background Subtraction

For background subtraction, the algorithm described in [32] has been implemented. The process consists of two steps:

learning the background model

subtracting the background from every image frame.

In the learning step, we model the color of each pixel $I(x, y)$ with a single 3D (H, S, V) Gaussian, $I(x, y) \sim \mathcal{N}(\mu(x, y), \Sigma(x, y))$. The model parameters $(\mu(x, y), \Sigma(x, y))$ are learnt from the color observations in several frames with a stationary background. In the second step, after the background model is derived, the likelihood that the color of each pixel $(x, y)$ of the current image comes from $\mathcal{N}(\mu(x, y), \Sigma(x, y))$ is computed. The pixels that deviate from the background model are labeled as foreground pixels (Fig. 3.3).
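The two steps can be sketched as follows. This is a simplified illustration, not the implementation of [32]: it assumes a diagonal covariance (one variance per channel), a variance floor `min_var`, and a decision based on the squared Mahalanobis distance with a hypothetical threshold `max_dist2`, which is equivalent to thresholding the Gaussian likelihood when the covariance is fixed.

```python
def learn_background(frames, min_var=1e-4):
    """Estimate a per-pixel mean and per-channel variance from frames of the empty scene."""
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    model = []
    for y in range(height):
        row = []
        for x in range(width):
            samples = [frame[y][x] for frame in frames]
            mean = [sum(s[c] for s in samples) / n for c in range(3)]
            # The variance floor keeps perfectly constant pixels from producing a zero variance.
            var = [max(sum((s[c] - mean[c]) ** 2 for s in samples) / n, min_var)
                   for c in range(3)]
            row.append((mean, var))
        model.append(row)
    return model


def foreground_mask(frame, model, max_dist2=9.0):
    """Mark pixels whose squared Mahalanobis distance to the background model is large."""
    mask = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, pixel in enumerate(row):
            mean, var = model[y][x]
            dist2 = sum((pixel[c] - mean[c]) ** 2 / var[c] for c in range(3))
            mask_row.append(dist2 > max_dist2)
        mask.append(mask_row)
    return mask


# Three 1x2 training frames (H, S, V scaled to [0, 1]) of a static background with slight noise.
frames = [
    [[(0.50, 0.50, 0.50), (0.20, 0.30, 0.40)]],
    [[(0.52, 0.50, 0.48), (0.20, 0.32, 0.40)]],
    [[(0.48, 0.50, 0.52), (0.20, 0.28, 0.40)]],
]
model = learn_background(frames)
# In the new frame the first pixel is within the noise level, the second has clearly changed.
mask = foreground_mask([[(0.51, 0.50, 0.49), (0.80, 0.40, 0.30)]], model)
```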

After skin segmentation and background subtraction, their results are combined and a sequence of morphological operations is applied. In addition, all blobs that are too small are removed. The complete procedure of hands and head detection is demonstrated in Fig. 3.4.

3.1.2 Parameters Extraction

Since every object of interest (hand or head) occupies a relatively small part of the image, we use a point-based model for tracking. To detect the hands and head more accurately, we approximate their shapes with ellipses. In this section we discuss how to fit ellipses to the detected objects and how to find the representative point.

Figure 3.4: Detection of hands and head

The most common way to estimate the representative point of a skin-colored area is to calculate the weighted centre of the object of interest. Even though this approach works in most cases (Fig. 3.5a), in particular situations (Fig. 3.5b) the weighted centre leads to a wrong result.
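A minimal sketch of the weighted centre is shown below, assuming a binary mask in which every blob pixel has weight 1. The U-shaped toy blob is a hypothetical example of one way the estimate can fail (the centre lands on a non-skin pixel); it is not a reproduction of Fig. 3.5b.

```python
def weighted_centre(mask):
    """Return the (x, y) centroid of all non-zero pixels of a binary mask, or None if empty."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# A U-shaped blob: the centroid falls in the gap between the two arms.
blob = [
    [1, 0, 1],
    [1, 0, 1],
    [1, 1, 1],
]
centre_x, centre_y = weighted_centre(blob)
```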

According to the requirements of the system, the image should contain at least the head and at most the head and both hands. However, due to segmentation noise and occlusions, the number of detected skin-color blobs may not correspond to the actual number of objects of interest in the image. For example, when the segmentation results are not perfect, we may observe 5 blobs when there are actually only 3 objects of interest. Conversely, when skin areas occlude each other, we may detect, e.g., only 1 blob when there are actually two objects, a hand and the head.

When one skin-colored blob (Fig. 3.6a) is detected, the real scene can be one of the following:

Head only (we assume that the head can always be perfectly seen by both cameras), Fig. 3.6b

One hand overlaps with the head, Fig. 3.6c

Both hands overlap with the head, Fig. 3.6d


(a) Correct estimation (b) Wrong estimation

Figure 3.5: Feature extraction using weighted centre

In these cases, the weighted centre of the skin-color blob gives a wrong measurement. To overcome this problem, we generate several hypotheses about the actual number of objects of interest in the scene.

Assume that N skin-colored blobs are detected after background subtraction and skin-color segmentation. We then generate K = N + 2 hypotheses, where hypothesis k means that there are k objects of interest in the scene. The next step is to apply k-means clustering [18] to the segmented image with the number of clusters equal to k. The problem of estimating the proper number of skin blobs then turns into finding the optimal number of clusters that fit the data. This can be done assuming that [23]:

clusters should be compact

clusters should be well separated.

In order to ensure compactness of the clusters, we fit an ellipse to each cluster and estimate the error by counting the points that do not fit the model (Fig. 3.7):

$Err = N_C + N_E$ (3.1)

where $N_C$ is the number of cluster points outside the ellipse and $N_E$ is the number of ellipse points that do not belong to the cluster. The smaller this error is, the better the estimate of the number of actual objects (Fig. 3.8a). In order to provide good separation of the clusters and overcome the problem of over-clustering, a penalty is applied (Fig. 3.8b):

$Err_P = Err \, (1 + k/p)$ (3.2)

where p is a penalty coefficient. In our case, p = 5.

After the optimal number of objects of interest is found, the centers of the ellipses (Fig. 3.9) that fit every cluster are used as representative points of the observation data.
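The selection rule of Eqs. (3.1) and (3.2) can be sketched as follows. The clustering and ellipse fitting are assumed to have been done already; the error values are hypothetical and only illustrate how the penalty discourages over-clustering.

```python
def penalized_error(err, k, p=5.0):
    """Eq. (3.2): ellipse-fit error scaled by a penalty that grows with k."""
    return err * (1.0 + k / p)

def select_cluster_count(errors, p=5.0):
    """Return the number of clusters k with the smallest penalized error.

    errors: dict mapping a candidate k to the ellipse-fit error
    Err = N_C + N_E measured for that k (Eq. 3.1).
    """
    return min(errors, key=lambda k: penalized_error(errors[k], k, p))

# Hypothetical fit errors for hypotheses k = 1..5 (three true objects).
errors = {1: 900, 2: 400, 3: 120, 4: 110, 5: 105}
best_without_penalty = min(errors, key=errors.get)
best_with_penalty = select_cluster_count(errors, p=5.0)
```

With these numbers the raw error keeps decreasing as k grows, while the penalized error has its minimum at k = 3.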


(a)

(b) (c) (d)

Figure 3.6: Correspondence between real world and observation

This approach does not solve the problem of false detections caused by segmentation noise, but it significantly improves the results of feature extraction for occluded skin areas.

3.2 Kalman Filter

3.2.1 Kalman Filter Data Model

Figure 3.7: Fitting the ellipse model (a) and error calculation (b): $N_C$ is the number of brown pixels, $N_E$ is the number of orange pixels

Figure 3.8: Example of error estimation for a case when there are 3 objects of interest in the scene: (a) $Err$, (b) $Err_P$

Figure 3.9: Selection of representative points

The Kalman Filter was first introduced by Kalman [13]; a good introduction can be found in [?]. It is used to estimate the state of a linear system where the state and noise have Gaussian distributions. The dynamic model of the state $x \in \mathbb{R}^n$ is governed by the linear stochastic difference equation

$x_k = F x_{k-1} + w_{k-1}$ (3.3)

with a measurement $z \in \mathbb{R}^m$ that is

$z_k = H x_k + v_k$ (3.4)

The matrix F in the difference equation relates the state at the previous time step k - 1 to the state at the current step k. The matrix H in the measurement equation relates the state $x_k$ to the measurement $z_k$. The random variables $w_k$ and $v_k$ represent the process and measurement noise, respectively. They are assumed to be independent of each other, white, and normally distributed:


Figure 3.10: The ongoing Kalman lter cycle

$p(w) \sim N(0, Q)$ (3.5)

$p(v) \sim N(0, R)$ (3.6)

where Q is the process noise covariance matrix and R is the measurement noise covariance matrix. In practice F, H, Q and R might change with each time step, but in the standard Kalman Filter these matrices are assumed to be constant.

3.2.2 Kalman Filter Algorithm

The Kalman filter is a recursive algorithm that estimates a process using a form of feedback control: the filter estimates the state $x_k$ at time k and then obtains feedback in the form of a (noisy) measurement $z_k$. Therefore, the equations [?] for the Kalman filter fall into two groups: time update equations (prediction stage) and measurement update equations (correction stage). The time update equations are responsible for predicting the state $\bar{x}_k$ and the error covariance matrix $\bar{P}_k$ given the state $x_{k-1}$ and covariance $P_{k-1}$ of the previous time step k - 1. The measurement update equations are responsible for correcting the predicted state and covariance according to the observed data. The Kalman gain $K_k$ is computed from the covariance matrix $S_k$ of the innovation vector $\tilde{z}_k$; the innovation vector is defined as the difference between the real and the predicted measurement. Both $K_k$ and $\tilde{z}_k$ are used to compute the corrected state vector $x_k$ and its covariance $P_k$, which are used as input for the next iteration. The Kalman Filter cycle is presented in Fig. 3.10.

Prediction:

$\bar{x}_k = F x_{k-1}$ (3.7)

$\bar{P}_k = F P_{k-1} F^T + Q$ (3.8)

where $\bar{x}_k$ and $\bar{P}_k$ are the predictions of the state and its covariance at time k.

$\bar{z}_k = H \bar{x}_k$ (3.9)

where $\bar{z}_k$ is the predicted observation at time k.

Update:

$x_k = \bar{x}_k + K_k \tilde{z}_k$ (3.10)

$P_k = (I - K_k H) \bar{P}_k$ (3.11)

where the filter gain matrix $K_k$ is

$K_k = \bar{P}_k H^T S_k^{-1}$ (3.12)

and the innovation vector $\tilde{z}_k$

$\tilde{z}_k = z_k - \bar{z}_k$ (3.13)

has the covariance matrix $S_k$

$S_k = H \bar{P}_k H^T + R$ (3.14)
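The prediction and update equations translate almost line by line into code. The sketch below is an illustration rather than the thesis implementation (which is in Matlab); the one-dimensional constant-velocity model and all noise values are assumptions chosen only to make the example runnable.

```python
import numpy as np

def kf_predict(x, P, F, Q):
    """Time update, Eqs. (3.7)-(3.8)."""
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred

def kf_update(x_pred, P_pred, z, H, R):
    """Measurement update, Eqs. (3.9)-(3.14)."""
    z_pred = H @ x_pred                           # (3.9)
    innovation = z - z_pred                       # (3.13)
    S = H @ P_pred @ H.T + R                      # (3.14)
    K = P_pred @ H.T @ np.linalg.inv(S)           # (3.12)
    x = x_pred + K @ innovation                   # (3.10)
    P = (np.eye(len(x_pred)) - K @ H) @ P_pred    # (3.11)
    return x, P

# Hypothetical 1D constant-velocity target: state = [position, velocity].
F = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 0.01 * np.eye(2)
R = np.array([[1.0]])

x = np.array([0.0, 0.0])
P = 10.0 * np.eye(2)
for z in [1.0, 2.1, 2.9, 4.2, 5.0]:
    x, P = kf_predict(x, P, F, Q)
    x, P = kf_update(x, P, np.array([z]), H, R)
```

After the five measurements the position estimate is close to the last observation, the velocity estimate is positive, and the position variance has dropped well below its initial value.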

3.3 3D Tracking: Projective Kalman Filter

3.3.1 Projective Geometry and Camera Model

We use a simple pinhole camera model with known parameters [12]. The pinhole camera model is shown in Fig. 3.11.

Figure 3.11: Pinhole camera model

Let Q be a point of the Euclidean 3D space and q its projection; both Q and q are represented in homogeneous coordinates. Then the transformation from Q to q can be described using the camera matrix M:

$q = MQ, \quad M = K \begin{bmatrix} R & t \\ 0_{1 \times 3} & 1 \end{bmatrix}$ (3.15)

where R and t are the rotation and translation, which define the extrinsic parameters of the camera; K contains the intrinsic parameters of the camera:

$K = \begin{bmatrix} \alpha_x & 0 & x_0 & 0 \\ 0 & \alpha_y & y_0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}$ (3.16)

where $(x_0, y_0)$ are the coordinates of the principal point of the image plane; $\alpha_x = f m_x$ and $\alpha_y = f m_y$ represent the focal length f of the camera in terms of pixel dimensions in the x and y directions, with scale factors $m_x$ and $m_y$ respectively.

It is important to mention that the projection from 3D space to the image plane is defined up to a scale s: $q = [x \; y \; s]^T$; hence, normalized coordinates $\hat{q} = [\hat{x} \; \hat{y} \; 1]^T$ can be defined:

$\hat{q} = q / s$ (3.17)

Therefore, in order to model the projection from 3D space to normalized coordinates in the image plane, it is necessary to take into account the non-linearity of this transformation.
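A small sketch makes the non-linearity concrete. The camera below is hypothetical (focal length 500 pixels, principal point (320, 240), placed at the world origin with R = I and t = 0), so its 3x4 matrix M equals K of Eq. (3.16).

```python
def project(M, Q):
    """Project a homogeneous 3D point Q (length 4) with a 3x4 camera matrix M.

    Returns the homogeneous image point q = M Q and the normalized point
    q / s of Eq. (3.17), where s is the third component of q.
    """
    q = [sum(M[i][j] * Q[j] for j in range(4)) for i in range(3)]
    s = q[2]
    if s == 0:
        raise ValueError("point projects to infinity (s = 0)")
    q_hat = [q[0] / s, q[1] / s, 1.0]
    return q, q_hat

# Hypothetical camera matrix (intrinsics only, R = I, t = 0).
M = [[500.0, 0.0, 320.0, 0.0],
     [0.0, 500.0, 240.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]

q, q_hat = project(M, [0.5, -0.25, 2.0, 1.0])
# A point twice as far along the same viewing ray lands on the same pixel.
_, q_hat_far = project(M, [1.0, -0.5, 4.0, 1.0])
```

The division by s is what prevents the measurement equation from being written with a constant matrix H, which motivates the adaptive matrix used in the next section.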

3.3.2 Projective Kalman Filter

In the Projective Kalman Filter, designed by [7], the state $x_k$ from Eq. (3.3) is defined as the position and velocity that describe the dynamics of the tracked 3D location in homogeneous coordinates:

$x_k = [X \; Y \; Z \; 1 \; \dot{X} \; \dot{Y} \; \dot{Z} \; 0]^T$ (3.18)

The measurement process described by Eq. (3.4) must be modelled according to the projective nature of the observations. The data captured by the N cameras forms the observation vector $z_k$:

$z = [z_1 \; \ldots \; z_N]^T = [\hat{x}_1 \; \hat{y}_1 \; 1 \; \ldots \; \hat{x}_N \; \hat{y}_N \; 1]^T$ (3.19)

The key point of the projective Kalman filter is the definition of the adaptive matrix $H_k$ that maps the state $x_k$ to the measurement $z_k$ according to Eq. (3.4). Canton-Ferrer [7] proposed to use $H_k$ defined as:

$H_k = \begin{bmatrix} \Lambda^1_k & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \Lambda^N_k \end{bmatrix} \begin{bmatrix} M_1 & 0_{3 \times 4} \\ \vdots & \vdots \\ M_N & 0_{3 \times 4} \end{bmatrix}$ (3.20)

with $\Lambda^n_k$ that models the nonlinearity of the projection from 3D coordinates to normalized coordinates in the image plane:

$\Lambda^n_k = \frac{1}{M^3_n \bar{x}_k} I_{3 \times 3}$ (3.21)

where $M_n$ is the transformation matrix of camera n, $M^3_n$ is the 3rd row of $M_n$, and $\bar{x}_k$ is the predicted state.
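As an illustration, the adaptive matrix can be assembled as below. This is a sketch under two assumptions: the scale factor is read as the third row of the extended matrix $[M_n \; 0_{3 \times 4}]$ applied to the 8-dimensional predicted state, and the two cameras (identical intrinsics, 10 cm horizontal baseline) are hypothetical.

```python
import numpy as np

def projective_H(cameras, x_pred):
    """Stack the per-camera blocks (1 / (M^3 x)) [M  0] of Eq. (3.20).

    cameras: list of 3x4 camera matrices M_n.
    x_pred:  predicted state [X, Y, Z, 1, dX, dY, dZ, 0].
    """
    blocks = []
    for M in cameras:
        M_ext = np.hstack([M, np.zeros((3, 4))])  # [M_n  0_{3x4}]
        scale = M_ext[2] @ x_pred                 # third row times the state
        blocks.append(M_ext / scale)
    return np.vstack(blocks)

# Two hypothetical cameras sharing the same intrinsics.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
M1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
M2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

x_pred = np.array([0.2, -0.1, 1.5, 1.0, 0.0, 0.0, 0.0, 0.0])
H = projective_H([M1, M2], x_pred)
z_pred = H @ x_pred  # [x1, y1, 1, x2, y2, 1] in normalized image coordinates
```

Because the scale is evaluated at the predicted state, H has to be rebuilt at every time step, which is why the matrix is called adaptive.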

Incorrect measurements caused by occlusions can cause big leaps in the 3D reconstruction. In order to ensure a smooth trajectory in 3D space, a special approach for defining the covariance matrix of the measurement noise R is used [7]. The covariance matrix R is defined as:

$R_k = \begin{bmatrix} R_{k1} & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & R_{kN} \end{bmatrix}$ (3.22)

where $R_{kn}$ is the noise covariance matrix of view n at time k. Whenever an occlusion is detected in view n (one measurement can be assigned to several targets, as discussed in Section 3.4), $R_{kn}$ is defined as:

$R_{kn} = \begin{bmatrix} \infty & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \infty \end{bmatrix}$ (3.23)

3.4 Multitarget Tracking: Joint Probabilistic Data Association Filter

3.4.1 Problem Formulation

In this section the problem of assigning each target state to a measurement is discussed. Data association is a key stage of the widely used JPDAF algorithm introduced by Fortmann [11].

The Kalman Filter and the Projective Kalman Filter, described in Sections 3.2 and 3.3, assume that the correspondence between skin-colored areas and objects is one-to-one. However, as shown above, this is not always the case. Sometimes several targets produce the same measurement, and sometimes one target splits into several areas. The JPDAF can handle all these cases and properly deal with data association.

The data association problem can be formulated as follows [11] (to simplify the notation, we suppress the time subscript k from all variables unless it is required for clarity).

Let m and T be the numbers of measurements and targets. At each time step the sensor provides a set of candidate measurements to be associated with targets (or rejected). This is done by forming a validation gate around the predicted measurement $\bar{z}^t$ from each target t, t = 1, ..., T, and retaining only those detections $z_j$, j = 1, ..., m, that lie within the gate. The gate is normally represented by a g-sigma ellipsoid:

$(\tilde{z}^t_j)^T S_t^{-1} \tilde{z}^t_j \le g_t^2$ (3.24)

where $\tilde{z}^t_j$ is the innovation corresponding to measurement j and predicted measurement $\bar{z}^t$:

$\tilde{z}^t_j = z_j - \bar{z}^t$ (3.25)
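The gating test can be sketched for 2D image measurements as follows. The predicted measurement, the innovation covariance and the candidate detections are hypothetical values; only the gate size g = sqrt(15) is taken from the parameter table of this thesis.

```python
def mahalanobis_sq_2d(innovation, S):
    """Return innovation^T S^{-1} innovation for a 2x2 covariance S."""
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0:
        raise ValueError("S must be positive definite")
    u, v = innovation
    # Closed-form inverse of a 2x2 matrix applied to the innovation.
    return (d * u * u - (b + c) * u * v + a * v * v) / det

def validate(measurements, z_pred, S, g):
    """Indices of the measurements inside the g-sigma gate, Eq. (3.24)."""
    inside = []
    for j, z in enumerate(measurements):
        innovation = (z[0] - z_pred[0], z[1] - z_pred[1])  # Eq. (3.25)
        if mahalanobis_sq_2d(innovation, S) <= g * g:
            inside.append(j)
    return inside

z_pred = (100.0, 100.0)
S = ((15.0, 0.0), (0.0, 15.0))
detections = [(105.0, 100.0), (130.0, 100.0), (100.0, 110.0)]
gated = validate(detections, z_pred, S, g=15 ** 0.5)
```

The second detection lies 30 pixels from the prediction and is rejected, while the other two fall inside the gate and become candidates for association.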

The conditional mean estimate $x^t$ is obtained from Eq. (3.10) by using the combined (weighted) innovation:

$\tilde{z}^t = \sum_{j=1}^{m} \beta^t_j \tilde{z}^t_j$ (3.26)

where $\beta^t_j$ is the probability that the jth measurement (or no measurement, for j = 0) is the correct one.

The update of the error covariance matrix $P^t_k$, Eq. (3.11), for target t becomes [11]:

$P^t_k = \bar{P}^t - (1 - \beta^t_0) K^t S^t K^{tT} + K^t \left[ \sum_{j=1}^{m} \beta^t_j \tilde{z}^t_j \tilde{z}^{tT}_j - \tilde{z}^t \tilde{z}^{tT} \right] K^{tT}$ (3.27)

where $\beta^t_0$ is the probability that none of the measurements originated from target t.

The key stage of the JPDAF algorithm is calculating the weights $\beta^t_j$ while taking multiple targets into account.

3.4.2 Association Probabilities Estimation

Computation of the $\beta$ begins with the construction of a so-called validation matrix for the T targets and m measurements. The validation matrix $\Omega$ is an m x (T + 1) rectangular matrix defined as [11]:

$\Omega = [\omega_{jt}] = \begin{bmatrix} 1 & \omega_{11} & \omega_{12} & \cdots & \omega_{1T} \\ 1 & \omega_{21} & \omega_{22} & \cdots & \omega_{2T} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & \omega_{m1} & \omega_{m2} & \cdots & \omega_{mT} \end{bmatrix}$ (3.28)

with rows indexed by the measurement j = 1, 2, ..., m and columns indexed by t = 0, 1, 2, ..., T. Here $\omega_{j0} = 1$ shows that measurement j could originate from clutter, $\omega_{jt} = 1$ if measurement j is inside the validation gate of target t, and $\omega_{jt} = 0$ if measurement j is outside the validation gate of target t, for j = 1, 2, ..., m and t = 1, 2, ..., T. Based on the validation matrix $\Omega$, data association hypotheses (feasible events $\chi$) are generated subject to two restrictions:

each measurement can have only one origin (either a specific target or clutter)

no more than one measurement originates from a target.

Each feasible event $\chi$ is represented by a hypothesis matrix $\hat{\Omega}$ of the same size as $\Omega$. A typical element of $\hat{\Omega}$ is denoted by $\hat{\omega}_{jt}$, where $\hat{\omega}_{jt} = 1$ only if measurement j is assumed to be associated with clutter (t = 0) or with target t (t > 0).

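Consider an example with two targets and three measurements, presented in Fig. 3.12. In this case, the validation matrix $\Omega$ and the hypothesis matrices $\hat{\Omega}$ are:

$\Omega = \begin{bmatrix} 1 & 1 & 0 \\ 1 & 1 & 1 \\ 1 & 0 & 1 \end{bmatrix}$

$\hat{\Omega}_0 = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \quad \hat{\Omega}_1 = \begin{bmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \quad \hat{\Omega}_2 = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{bmatrix}, \quad \hat{\Omega}_3 = \begin{bmatrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}$

$\hat{\Omega}_4 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{bmatrix}, \quad \hat{\Omega}_5 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad \hat{\Omega}_6 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{bmatrix}, \quad \hat{\Omega}_7 = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}$

Figure 3.12: Example with two targets and three measurements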
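The eight hypothesis matrices of this example can be reproduced by enumerating the feasible events directly from the validation matrix. The sketch below represents each event compactly as a tuple that gives, for every measurement, the column (0 for clutter, t for target t) holding the single 1 of its row; this representation and the function name are our own choices.

```python
from itertools import product

def feasible_events(omega):
    """Enumerate the feasible events of a validation matrix.

    omega[j][t] is 1 when measurement j may originate from source t, where
    t = 0 is clutter and t >= 1 is a target. Each event is a tuple with the
    source assigned to every measurement.
    """
    n_sources = len(omega[0])
    candidates = [[t for t in range(n_sources) if row[t]] for row in omega]
    events = []
    for assignment in product(*candidates):
        targets = [t for t in assignment if t > 0]
        # A target may generate at most one measurement.
        if len(targets) == len(set(targets)):
            events.append(assignment)
    return events

omega = [[1, 1, 0],
         [1, 1, 1],
         [1, 0, 1]]
events = feasible_events(omega)
```

The first restriction (one origin per measurement) is built into the representation, and the second one is enforced by the duplicate check, which leaves exactly the eight events listed above.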

After each $\hat{\Omega}$ is obtained, the measurement association indicator $\tau_j(\chi)$, the target detection indicator $\delta_t(\chi)$ and the total number of false measurements $\phi(\chi)$ in event $\chi$ are computed:

the measurement association indicator shows whether measurement j is associated with any target in event $\chi$:

$\tau_j(\chi) = \sum_{t=1}^{T} \hat{\omega}_{jt}(\chi)$; (3.29)

the target detection indicator shows whether target t is associated with any measurement in event $\chi$:

$\delta_t(\chi) = \sum_{j=1}^{m} \hat{\omega}_{jt}(\chi)$; (3.30)

the total number of false measurements in event $\chi$ is defined as:

$\phi(\chi) = \sum_{j=1}^{m} [1 - \tau_j(\chi)]$. (3.31)

The conditional probability of the data association hypothesis (feasible event) $\chi$ given the observed data $Z^k$ at time k is calculated as:

$P\{\chi | Z^k\} = \frac{C^{\phi}}{c} \prod_{j: \tau_j = 1} \frac{\exp\left[ -\frac{1}{2} (\tilde{z}^{t_j}_j)^T S^{-1}_{t_j} (\tilde{z}^{t_j}_j) \right]}{(2\pi)^{m/2} |S_{t_j}|^{1/2}} \prod_{t: \delta_t = 1} P^t_D \prod_{t: \delta_t = 0} (1 - P^t_D)$ (3.32)

where $P^t_D$ is the probability of detection of target t, C is the probability of a false measurement, and c is a normalization constant that makes the probabilities $P\{\chi | Z^k\}$ of all feasible events sum to one.

The probability $\beta^t_j$ that measurement j belongs to target t may now be obtained by summing over all feasible events $\chi$:

$\beta^t_j = \sum_{\chi} P\{\chi | Z^k\} \, \hat{\omega}_{jt}(\chi)$ (3.33)

and the probability that none of the measurements originated from target t is

$\beta^t_0 = 1 - \sum_{j=1}^{m} \beta^t_j$ (3.34)

These probabilities are used to form the combined innovation for each target (3.26) and in the update of the covariance equation (3.27).
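The computation of the association probabilities can be sketched for the two-target, three-measurement example. The Gaussian factor of Eq. (3.32) is assumed to be precomputed and passed in as a table; its values below are hypothetical, while the detection probability 0.9 and C = 0.1 follow the parameter table of this thesis. A single detection probability shared by all targets is a simplification of this sketch.

```python
from itertools import product

def association_probabilities(omega, likelihood, p_detect, clutter):
    """Return (beta, beta0) from Eqs. (3.32)-(3.34).

    omega:      validation matrix, omega[j][t], column 0 is clutter.
    likelihood: likelihood[j][t], Gaussian density of measurement j under
                target t (column 0 is unused).
    beta[t][j] is the probability that measurement j belongs to target t;
    index t = 0 of both outputs is unused.
    """
    m, n_sources = len(omega), len(omega[0])
    candidates = [[t for t in range(n_sources) if row[t]] for row in omega]
    weighted = []
    for event in product(*candidates):
        targets = [t for t in event if t > 0]
        if len(targets) != len(set(targets)):
            continue  # a target cannot produce two measurements
        weight = clutter ** event.count(0)  # C to the number of false measurements
        for j, t in enumerate(event):
            if t > 0:
                weight *= likelihood[j][t]
        for t in range(1, n_sources):
            weight *= p_detect if t in targets else 1.0 - p_detect
        weighted.append((event, weight))
    total = sum(w for _, w in weighted)  # normalization constant c
    beta = [[0.0] * m for _ in range(n_sources)]
    for event, w in weighted:
        for j, t in enumerate(event):
            if t > 0:
                beta[t][j] += w / total  # Eq. (3.33)
    beta0 = [1.0 - sum(row) for row in beta]  # Eq. (3.34)
    return beta, beta0

omega = [[1, 1, 0], [1, 1, 1], [1, 0, 1]]
likelihood = [[0.0, 0.05, 0.0], [0.0, 0.02, 0.02], [0.0, 0.0, 0.05]]
beta, beta0 = association_probabilities(omega, likelihood, 0.9, 0.1)
```

In this symmetric setup each target is most strongly associated with the measurement that only it can explain, and the shared second measurement receives a smaller weight from both targets.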

3.5 3D Multitarget Tracking: Projective Joint Probabilistic Data Association Filter

Having analysed the Projective Kalman Filter (PKF) and the Joint Probabilistic Data Association Filter (JPDAF), it is necessary to design a clear scheme that combines both methods in one algorithm, the Projective Joint Probabilistic Data Association Filter (PJPDAF).

Let T be the number of targets, N the number of views from which we observe measurements, and $m_n$ the number of measurements in view n. The PJPDAF algorithm for a time step k and target t is as follows:

1. Prediction: predict the 3D state $\bar{x}^t_k$ and the error covariance matrix $\bar{P}^t_k$ given the state and its covariance at step k - 1.

2. Observation and data association:

measurement prediction: project the predicted state $\bar{x}^t_k$ into every image plane n as a measurement $\bar{z}^{tn}_k$;

estimate the weighted innovation $\tilde{z}^{tn}_k$ for every image plane, taking into account the $m_n$ observations;

combine the innovations from all the planes into the innovation $\tilde{z}^t_k$.

3. Update: correct the state and its covariance.

Prediction: The prediction of the state $\bar{x}^t_k$ and its covariance $\bar{P}^t_k$ is done according to Eqs. (3.7) and (3.8):

$\bar{x}^t_k = F_t x^t_{k-1}$ (3.35)

$\bar{P}^t_k = F_t P^t_{k-1} F_t^T + Q_t$ (3.36)

Observation and data association:

Measurement prediction: the projection of the state to the measurement space is done using Eq. (3.9), $\bar{z}^{tn}_k = H^{tn}_k \bar{x}^t_k$, where $H^{tn}_k$ is computed according to Eq. (3.20) taking into account only one view n:

$H^{tn}_k = \Lambda^n_k \begin{bmatrix} M_n & 0_{3 \times 4} \end{bmatrix}$ (3.37)

where $\Lambda^n_k$ is the scaling matrix of Eq. (3.21). The covariance of the innovation $S^{tn}_k$ is also computed separately for each view n according to Eq. (3.14).

The weighted innovation is estimated using the JPDAF approach, and can therefore be determined as

$\tilde{z}^{tn}_k = \sum_{j=1}^{m_n} \beta_{jn} \tilde{z}_{jn}$ (3.38)

where $\tilde{z}_{jn}$ is defined by Eq. (3.25) and $\beta_{jn}$ can be calculated from Eq. (3.33). The probability $\beta^{tk}_{0n}$ that target t was not detected in image plane n is estimated from Eq. (3.34).

The next step is to prepare the data for the update step. Considering the measurement model described in Eq. (3.19), the innovations from all the image planes are combined:

$\tilde{z}^t_k = \begin{bmatrix} \tilde{z}^{t1}_k \\ \vdots \\ \tilde{z}^{tN}_k \end{bmatrix}$ (3.39)

where the covariance of the innovation $S^t_k$ is computed according to Eq. (3.14) using $H^t_k$ defined as:

$H^t_k = \begin{bmatrix} H^{t1}_k \\ \vdots \\ H^{tN}_k \end{bmatrix}$ (3.40)

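Update: The update of the state and its covariance is based on Eqs. (3.10)-(3.12). The Kalman gain $K^t_k$ is obtained from Eq. (3.12) using $H^t_k$ and $S^t_k$ defined above. The state $x^t_k$ is corrected according to Eq. (3.10) using the combined innovation $\tilde{z}^t_k$ and the Kalman gain $K^t_k$. The error covariance matrix defined in Eq. (3.27) becomes:

$P^t_k = \bar{P}^t_k - (1 - \beta^{tk}_0) K^t_k S^t_k K^{tT}_k + K^t_k \left[ \Psi^t_k - \Phi^t_k \right] K^{tT}_k$ (3.41)

where $\beta^{tk}_0$ is the probability that the target was not detected in any view:

$\beta^{tk}_0 = \prod_{n=1}^{N} \beta^{tk}_{0n}$ (3.42)

$\Psi^t_k$ is:

$\Psi^t_k = \begin{bmatrix} \Psi^{t1}_k & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \Psi^{tN}_k \end{bmatrix}, \quad \Psi^{tn}_k = \sum_{j=1}^{m_n} \beta_{jn} \tilde{z}_{jn} \tilde{z}_{jn}^T$ (3.43)

and $\Phi^t_k$ is:

$\Phi^t_k = \begin{bmatrix} \tilde{z}^{t1}_k \tilde{z}^{t1T}_k & \cdots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \cdots & \tilde{z}^{tN}_k \tilde{z}^{tNT}_k \end{bmatrix}$ (3.44)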

3.6 Initialization of Trackers

In the previous sections we introduced the PJPDAF algorithm for 3D tracking of multiple objects; in this section we discuss how to make the initial guess about the 3D positions of our objects of interest (hands and head).

In order to estimate the initial 3D positions of the head and the left and right hands, we use the following assumptions (Fig. 3.13):

the person is manipulating objects while sitting at the table;

we have the results of temporal segmentation: we know when the action starts;

when the action starts, the person has their hands lying on the table; hands and head are visible to both cameras.

The first step of initialization is to detect skin-colored areas which can possibly represent hands and head. The largest ellipse-shaped detected area in the top part of the image is considered to be the head, and skin-colored elliptical areas in the middle left and right parts of the image are considered to be the left and right hands, respectively. After the data association between objects and observations is done, the center of each detected skin area is estimated by the algorithm described in Section 3.1.

Figure 3.13: Initial image frame

The second step of initialization is to estimate the 3D location from the known 2D positions of the hands and head in both images. The 3D location is computed using a triangulation algorithm. Since we only need an approximate estimate of the 3D location, the linear triangulation algorithm works well. The algorithm for linear triangulation (Fig. 3.14) can be described as follows.

In each image we have a measurement, $q = MQ$ and $q' = M'Q$, and these equations can be combined into the form $AQ = 0$, which is linear in Q. Considering the equations $q \times (MQ) = 0$ and $q' \times (M'Q) = 0$, we obtain a combined equation of the form $AQ = 0$, where A is:

$A = \begin{bmatrix} x M^{3T} - M^{1T} \\ y M^{3T} - M^{2T} \\ x' M'^{3T} - M'^{1T} \\ y' M'^{3T} - M'^{2T} \end{bmatrix}$ (3.45)

where $M^{iT}$ is the i-th row of M.

Once A is known, Q can be estimated from the singular value decomposition $A = UDV^T$: Q lies in the (approximate) null space of A, i.e. it is the column of V that corresponds to the smallest singular value.
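Linear triangulation can be sketched in a few lines. The two cameras are hypothetical (same intrinsics, 10 cm horizontal baseline) and are written as plain 3x4 matrices; the helper that projects a known point is only there to generate noise-free test measurements.

```python
import numpy as np

def triangulate(q1, M1, q2, M2):
    """Linear triangulation of one 3D point from two views, Eq. (3.45).

    q1, q2: (x, y) image coordinates in the first and second view.
    M1, M2: 3x4 camera matrices.
    Returns the Euclidean 3D point.
    """
    rows = []
    for (x, y), M in ((q1, M1), (q2, M2)):
        rows.append(x * M[2] - M[0])
        rows.append(y * M[2] - M[1])
    A = np.vstack(rows)
    # The solution is the right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    Q = Vt[-1]
    return Q[:3] / Q[3]

def project_point(M, point):
    """Project a Euclidean 3D point and return normalized (x, y)."""
    q = M @ np.append(point, 1.0)
    return q[:2] / q[2]

K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
M1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
M2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

truth = np.array([0.2, -0.1, 1.5])
estimate = triangulate(project_point(M1, truth), M1,
                       project_point(M2, truth), M2)
```

With noise-free measurements the point is recovered up to numerical precision; with noisy detections the same code returns the least-squares solution of AQ = 0, which is sufficient for an initial guess.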


Figure 3.14: Triangulation


Chapter 4

Results

This chapter demonstrates the performance of the PJPDAF algorithm, introduced in Section 3.5. Qualitative and quantitative characteristics of this algorithm are evaluated; different image sequences illustrating tracking using PJPDAF are also provided.

4.1 System Setup

The system setup can be divided into hardware and software setup.

The hardware used in this system is a humanoid robot head with two foveal and two wide-field cameras; in our application we analyse images only from the wide-field cameras. In order to know the intrinsic and extrinsic parameters of the cameras, the cameras are calibrated using the OpenCV calibration toolbox. Images are captured at a frame rate of 3 frames per second and have a resolution of 640x480. The room should have normal lighting conditions and a static background during the tracking session. The person whose hands and head are tracked should wear clothing that leaves only the hands and head uncovered.

The software includes a grabber of image sequences and a tracking system, which is composed of two modules: the hands and head detection module (HHD module) and the PJPDAF module. Both modules are written in Matlab and work offline, since running speed is not the main point of this thesis. The HHD module also includes undistortion of the captured images, which is done using the Matlab Calibration Toolbox [4].

In our application, a set of different parameters is used; their values were estimated empirically and are summarized in Table 4.1.

Table 4.1: Parameters and their description

Parameter | Value | Description | Module
$A_{min}$ | 500 | minimum size of the skin-color area to be considered as hand/head | HHD
p | 5 | penalty coefficient for k-means clustering | HHD
$P^t_0$ | diag(70, 70, 70, 0, 30, 30, 30, 0) | covariance matrix of the initial state | PJPDAF
$Q_t$ | diag(50, 50, 50, 0, 5, 5, 5, 0) | covariance matrix of motion noise | PJPDAF
$R^t_n$ | diag(15, 15, 0.5) | covariance matrix of measurement noise | PJPDAF
$P^t_D$ | 0.9 | detection probability of a target | PJPDAF
$g^t_n$ | sqrt(15) | gate validation | PJPDAF
C | 0.1 | probability of false measurement | PJPDAF

where t = 1..T, T is the number of targets, and n = 1..N, N is the number of views.

4.2 Experiment Results Analysis

In this section we analyse the results of tracking using the PJPDAF algorithm. We concentrate on the joint performance of the HHD and PJPDAF modules and do not evaluate the HHD module and the PJPDAF module separately.

The tracking results are in 3D; therefore, in order to evaluate how good the tracker is, we would need to know the exact 3D positions of our objects of interest (head and hands in this application). However, due to limited hardware (we do not have any 3D sensors), all the evaluation of the PJPDAF is done in the image planes, by projecting the reconstructed 3D trajectory to both the left and right images.

The accuracy of tracking target t can be expressed by the error $Err^t_{acc}$:

$Err^t_{acc} = \frac{1}{N} \sum_{n=1}^{N} \left[ (x^e_{nt} - x^g_{nt})^2 + (y^e_{nt} - y^g_{nt})^2 \right]$ (4.1)

where $[x^g_{nt}, y^g_{nt}]$ is the ground-truth location of target t in view n based on manual marking, and $[x^e_{nt}, y^e_{nt}]$ is the location of target t obtained from the PJPDAF module.

It is worth noting that the manual estimate is also not perfect, especially considering that we do not know exactly which point to consider as the centre of the hand/head.
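The error measure can be computed per frame and per target as sketched below. The sketch reads Eq. (4.1) as the squared pixel distance averaged over the N views; if a Euclidean distance is intended, the square root of each term would be taken before averaging. The coordinates in the example are hypothetical.

```python
def accuracy_error(estimated, ground_truth):
    """Eq. (4.1): squared pixel error averaged over the N views.

    estimated, ground_truth: lists with one (x, y) pair per view.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need one estimate and one ground-truth point per view")
    total = 0.0
    for (xe, ye), (xg, yg) in zip(estimated, ground_truth):
        total += (xe - xg) ** 2 + (ye - yg) ** 2
    return total / len(estimated)

# Hypothetical head positions in the left and right images.
projected = [(100.0, 120.0), (98.0, 119.0)]
marked = [(103.0, 124.0), (98.0, 119.0)]
error = accuracy_error(projected, marked)
```

Here the left view contributes 9 + 16 = 25 and the right view contributes 0, so the averaged error is 12.5.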

Selected frames from the sequence used for accuracy estimation are presented in Fig. 4.1. The 3D reconstruction of the target locations is shown in Fig. 4.2. The accuracy error for every frame and each target is presented in Fig. 4.3. It can be observed that the error of head tracking is much more stable than the error of hand tracking. This can be explained by two factors: first, the head is usually lit in the same manner, while the illumination of the hands is not constant due to their motion and the non-uniformity of light in the room; second, the hands move much more than the head, so the tracking error also changes with the motion. Once again, this error estimate is not very accurate due to the manual marking, which can be done differently by different people.

(a) Frame 1

(b) Frame 15 (c) Frame 50

(d) Frame 75 (e) Frame 115

(f) Frame 150

Figure 4.1: Selected frames from PJPDAF tracking; red tracker - head, green tracker - right

hand, blue tracker - left hand

A more complicated example of tracking is shown in Fig. 4.5. Here we can observe a boy drinking a coke. In frames 21-28 the hand overlaps with the head, and in the images the two trackers cannot be clearly distinguished. However, as we can see in frames 30-49, the trackers are still properly associated with their targets. The 3D trajectory, presented in Fig. 4.4, is smooth and represents the action performed: pick up a coke, drink, and put the coke back on the table.

Figure 4.2: 3D tracking by PJPDAF (axes: x, y, z)

Figure 4.3: Accuracy estimation (curves: head tracking error, right hand tracking error, left hand tracking error)

It is also important to analyse under which conditions the PJPDAF fails and loses a tracker (and cannot recover). In Fig. 4.7 we can observe how well the occlusion in frames 21-22 was handled: even though the two hands are together and form one skin blob (Fig. 4.6), we still have two trackers. Later, in frame 62, the hands were partially occluded and, because of the fast motion, the right and left hands were confused. In frames 82-99 the hands again lose their trackers and then recover. At the end of the sequence, due to the fast motion, both hand trackers were lost.

We found that fast motion is our main problem, which can be a result of the low image capture rate (just 3 frames per second). Another problem is confusion of the trackers between the hands. It can be explained by random hand motion and hand acceleration.

Figure 4.4: 3D trajectory of the action "drinking a coke" (axes: x, y, z); red tracker - head, green tracker - right hand, blue tracker - left hand


(a) Frame 1 (b) Frame 21

(c) Frame 23 (d) Frame 27

(e) Frame 30 (f) Frame 31

(g) Frame 32 (h) Frame 36

(i) Frame 40 (j) Frame 49

Figure 4.5: Selected frames from PJPDAF tracking: a boy is drinking a coke; red tracker -

head, green tracker - right hand, blue tracker - left hand


(a) Left image (b) Right image

Figure 4.6: Segmentation of frame 21, both hands are presented as one skin-color area


(a) Frame 1 (b) Frame 21

(c) Frame 23 (d) Frame 62

(e) Frame 63 (f) Frame 64

(g) Frame 82 (h) Frame 84

(i) Frame 99 (j) Frame 121

Figure 4.7: Selected frames from PJPDAF tracking; red tracker - head, green tracker - right

hand, blue tracker - left hand


Chapter 5

Conclusions

Recognition of manipulation actions and manipulated objects is an important part of different applications in video surveillance, human-computer interfaces, programming by demonstration and many others. Hands and head tracking plays a particular role in such recognition, since in most cases the trajectories of the hands and head give the main cue to such a system.

The thesis focuses on two problems: object detection and object tracking. The detection module, based on background subtraction and color segmentation, showed good results in object localization. The disadvantage of this method is that a static and known environment is assumed. The tracking module is designed according to the introduced concept of the Projective Joint Probabilistic Data Association Filter, which is based on two well-known techniques: the Projective Kalman Filter and the Joint Probabilistic Data Association Filter. The PKF algorithm handles 3D tracking with 2D observations, and the JPDAF provides a reliable data association algorithm for mapping between measurements and targets.

The analysis of the results showed that the PJPDAF is able to track hands and head in 3D space and overcome the occlusion problem in many cases. The main problem of the PJPDAF is that trackers can occasionally swap between the left and right hands. Hands can move unpredictably, sometimes following linear trajectories and then suddenly changing their direction of motion. In these conditions, distinguishing between the left and right hands becomes a real problem with a non-trivial solution. A motion cue can show the direction of hand movement and therefore reduce the ambiguity.

5.1 Future work

3D tracking of the human head and hands is a complex problem with numerous specific solutions and no generalized algorithm. For a manipulation action and object recognition system, the proposed solution can be improved in different ways. Future work includes enhancing both the detection and the PJPDAF modules. The detection module should be improved so that it can localize the hands and head of a person in any environment. Concerning the PJPDAF, many different cues can be incorporated into it, making the results more accurate and the tracking more robust. In particular, I believe that adding information about motion can help to achieve better tracking performance. Moreover, online reconstruction of the 3D trajectories of hands and head is also important. Therefore, optimization of the introduced algorithm to achieve real-time tracking is also within the scope of future research.

Bibliography

[1] Antonis A. Argyros and Manolis I. A. Lourakis. Real-time tracking of multiple skin-colored objects with a possibly moving camera. In Tomas Pajdla and Jiri Matas, editors, ECCV (3), volume 3023 of Lecture Notes in Computer Science, pages 368-379. Springer, 2004.

[2] Antonis A. Argyros and Manolis I. A. Lourakis. Binocular hand tracking and reconstruction based on 2d shape matching. In ICPR '06: Proceedings of the 18th International Conference on Pattern Recognition, pages 207-210, Washington, DC, USA, 2006. IEEE Computer Society.

[3] O. Bernier and D. Collobert. Head and hands 3d tracking in real time by the em algorithm. In Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems, 2001. Proceedings. IEEE ICCV Workshop on, pages 75-81, 2001.

[4] G.R. Bradski. Real time face and object tracking as a component of a perceptual user interface. In Applications of Computer Vision, 1998. WACV '98. Proceedings., Fourth IEEE Workshop on, pages 214-219, Oct 1998.

[5] C. Canton-Ferrer, J. Salvador, J. R. Casas, and M. Pardàs. Multi-person tracking strategies based on voxel analysis. Multimodal Technologies for Perception of Humans: International Evaluation Workshops CLEAR 2007 and RT 2007, Baltimore, MD, USA, May 8-11, 2007, Revised Selected Papers, pages 91-103, 2008.

[6] Cristian Canton-Ferrer, Josep R. Casas, A. Murat Tekalp, and Montse Pardàs. Projective Kalman filter: Multiocular tracking of 3d locations towards scene understanding. In Steve Renals and Samy Bengio, editors, MLMI, volume 3869 of Lecture Notes in Computer Science, pages 250-261. Springer, 2005.

[7] Hyun-Chul Do, Ju-Yeon You, and Sung-Il Chien. Skin color detection through estimation and conversion of illuminant color under various illuminations. Consumer Electronics, IEEE Transactions on, 53(3):1103-1108, Aug. 2007.

[8] Ayman El-Sawah, Chris Joslin, Nicolas D. Georganas, and Emil M. Petriu. A framework for 3d hand tracking and gesture recognition using elements of genetic programming. In CRV '07: Proceedings of the Fourth Canadian Conference on Computer and Robot Vision, pages 495-502, Washington, DC, USA, 2007. IEEE Computer Society.

[9] James D. Foley, Richard L. Phillips, John F. Hughes, Andries van Dam, and Steven K. Feiner. Introduction to Computer Graphics. Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA, 1994.

[10] T. Fortmann, Y. Bar-Shalom, and M. Scheffe. Sonar tracking of multiple targets using joint probabilistic data association. Oceanic Engineering, IEEE Journal of, 8(3):173-184, Jul 1983.

[11] R. I. Hartley and A. Zisserman. Multiple View Geometry in Computer Vision. Cambridge University Press, ISBN: 0521540518, second edition, 2004.

[12] Rudolph Emil Kalman. A new approach to linear filtering and prediction problems. Transactions of the ASME-Journal of Basic Engineering, 82(Series D):35-45, 1960.

[13] Ig-Jae Kim, Shwan Lee, S.C. Ahn, Yong-Moo Kwon, and Hyoung-Gon Kim. 3d tracking of multi-objects using color and stereo for hci. In Image Processing, 2001. Proceedings. 2001 International Conference on, volume 3, pages 278-281 vol.3, 2001.

[14] Hedvig Kjellstrom, Javier Romero, David Martínez, and Danica Kragic. Simultaneous visual recognition of manipulation actions and manipulated objects. In ECCV '08: Proceedings of the 10th European Conference on Computer Vision, pages 336-349, Berlin, Heidelberg, 2008. Springer-Verlag.

[15] J. Kovac, P. Peer, and F. Solina. Human skin color clustering for face detection. In EUROCON 2003. Computer as a Tool. The IEEE Region 8, volume 2, pages 144-148 vol.2, Sept. 2003.

[16] Choong Hwan Lee, Jun Sung Kim, and Kyu Ho Park. Automatic human face location in a complex background using motion and color information. Pattern Recognition, 29(11):1877-1889, 1996.

[17] S. Lloyd. Least squares quantization in pcm. Information Theory, IEEE Transactions on, 28(2):129-137, 1982.

[18] Stephen McKenna and Shaogang Gong. Tracking faces. In Proceedings of International Conference on Automatic Face and Gesture Recognition, pages 271-276. IEEE Computer Society Press, 1996.

[19] Thomas B. Moeslund, Adrian Hilton, and Volker Krüger. A survey of advances in vision-based human motion capture and analysis. Comput. Vis. Image Underst., 104(2):90-126, 2006.

[20] Kai Nickel, Edgar Seemann, and Rainer Stiefelhagen. 3d-tracking of head and hands for pointing gesture recognition in a human-robot interaction scenario. Automatic Face and Gesture Recognition, IEEE International Conference on, 0:565, 2004.

[21] Son L. Phung, A. Bouzerdoum, and D. Chai. A novel skin color model in ycbcr color space and its application to human face detection. In Image Processing. 2002. Proceedings. 2002 International Conference on, volume 1, pages I-289-I-292 vol.1, 2002.

[22] S. Ray and R. Turi. Determination of number of clusters in k-means clustering and application in colour image segmentation, 1999.

[23] C. Stauffer and W. E. L. Grimson. Adaptive background mixture models for real-time tracking. In Computer Vision and Pattern Recognition, 1999. IEEE Computer Society Conference on., volume 2, page 252 Vol. 2, 1999.

[24] David J. Sturman and David Zeltzer. A survey of glove-based input. IEEE Computer Graphics and Applications, 14(1):30-39, 1994.

[25] Hisashi Tanizaki and Roberto S. Mariano. Nonlinear and non-gaussian state-space model-

ing with monte carlo simulations. Journal of Econometrics, 83(1-2):263290, 1998.

[26] Arasanathan Thayananthan. Model-based hand tracking using a hierarchical bayesian

lter. IEEE Trans. Pattern Anal. Mach. Intell., 28(9):13721384, 2006. Member-Stenger,,

Bjorn and Senior Member-Torr,, Philip H. S. and Member-Cipolla,, Roberto.

[27] A. Vacavant and T. Chateau. Realtime head and hands tracking by monocular vision. In

Image Processing, 2005. ICIP 2005. IEEE International Conference on, volume 2, pages

II3025, Sept. 2005.

[28] Xavier Varona, Jose Maria Buades Rubio, and Francisco J. Perales Lopez. Hands and face

tracking for vr applications. Computers Graphics, 29(2):179187, 2005.

[29] Vladimir Vezhnevets, Vassili Sazonov, and Alla Andreeva. A survey on pixel-based skin

color detection techniques. In in Proc. Graphicon-2003, pages 8592, 2003.

[30] P. Viola and M. Jones. Rapid object detection using a boosted cascade of simple features.

In Computer Vision and Pattern Recognition, 2001. CVPR 2001. Proceedings of the 2001

IEEE Computer Society Conference on, volume 1, pages I511I518 vol.1, 2001.

42

[31] Christopher Wren, Ali Azarbayejani, Trevor Darrell, and Alex Pentland. Pnder: Real-

time tracking of the human body. IEEE Transactions on Pattern Analysis and Machine

Intelligence, 19:780785, 1997.

[32] Zhanwu Xiong. Robust object tracking using multiple cues. Masters thesis, KTH, 2009.

[33] A. Yilmaz, O. Javed, and M. Shah. Object tracking: A survey. ACM Comput. Surv.,

38(4):13, 2006.

43
