A FP-Tree Based Approach for Mining All Strongly Correlated Pairs without Candidate Generation*

Zengyou He, Xiaofei Xu, Shengchun Deng

Department of Computer Science and Engineering, Harbin Institute of Technology,
92 West Dazhi Street, P.O. Box 315, P. R. China, 150001

Email: zengyouhe@yahoo.com, {xiaofei, dsc}@hit.edu.cn

Abstract Given a user-specified minimum correlation threshold θ and a transaction database, the
problem of mining all-strong correlated pairs is to find all item pairs with Pearson's correlation
coefficients above the threshold θ. Despite the use of an upper-bound-based pruning technique in
the Taper algorithm [1], when the number of items and transactions is very large, candidate pair
generation and testing are still costly.

To avoid the costly test of a large number of candidate pairs, in this paper we propose an
efficient algorithm, called Tcp, based on the well-known FP-tree data structure, for mining the
complete set of all-strong correlated item pairs. Our experimental results on both synthetic and
real-world datasets show that Tcp's performance is significantly better than that of the previously
developed Taper algorithm over practical ranges of correlation threshold specifications.

Keywords Correlation, Association Rule, Transactions, FP-tree, Data Mining

1 Introduction

More recently, the mining of statistical correlations has been applied to transaction databases [1],
retrieving all pairs of items with high positive correlation in a transaction database. The
problem can be formalized as follows [1]. Given a user-specified minimum correlation threshold θ
and a transaction database with N items and T transactions, an all-strong-pairs correlation query
finds all item pairs with correlations above the minimum correlation threshold θ.

In contrast to the association-rule mining problem [2-4], it is well known that an item pair
with high support may have a very low correlation. Additionally, some item pairs with high
correlations may have very low support. Hence, we have to consider all possible item pairs in
the mining process. Consequently, when the number of items and transactions is very large,
candidate pair generation and testing will be very costly.

To efficiently identify all strongly correlated pairs, Xiong et al. [1] proposed the Taper
algorithm, in which an upper bound of Pearson's correlation coefficient is provided to prune
candidate pairs. As shown in [1], the upper-bound-based pruning technique is very effective in
eliminating a large portion of item pairs in the candidate generation phase. However, when the
database contains a large number of items and transactions, even testing the remaining candidate
pairs over the whole transaction database is still costly.

* This work was supported by the High Technology Research and Development Program of China (No.
2002AA413310, No. 2003AA4Z2170, No. 2003AA413021) and the IBM SUR Research Fund.

Motivated by the above observation, we examine other possible solutions for the problem of
correlated pairs mining. We note that the FP-tree [4] approach does not rely on the candidate
generation step. We therefore consider how to make use of the FP-tree for mining all pairs
of items with high positive correlation.

An FP-tree (frequent pattern tree) is a variation of the trie data structure: a
prefix-tree structure for storing crucial, compressed information about support.
Thus, it is possible to utilize such information to alleviate the multi-scan problem and to speed up
mining by working directly on the FP-tree.

In this paper, we propose an efficient algorithm, called Tcp (FP-Tree based Correlation Pairs
Mining), based on the FP-tree data structure, for mining the complete set of all-strong correlated
pairs between items.

The Tcp algorithm consists of two steps: FP-tree construction and correlation mining. In the
FP-tree construction step, the Tcp algorithm constructs an FP-tree from the transaction database. In
the original FP-tree method [4], the FP-tree is built only with the items with sufficient support.
However, in our problem setting, there is no support threshold used initially. We therefore
propose to build a complete FP-tree with all items in the database. Note that this is equivalent to
setting the initial support threshold to zero. The size of an FP-tree is bounded by the size of its
corresponding database because each transaction will contribute at most one path to the FP-tree,
with the path length equal to the number of items in that transaction. Since there is often a lot of
sharing of frequent items among transactions, the size of the tree is usually much smaller than its
original database [4]. In the correlation-mining step, we utilize a pattern-growth method to
generate all item pairs and compute their exact correlation values, since we can get all support
information from the FP-tree. That is, unlike the Taper algorithm, the Tcp algorithm does not
need to use the upper-bound-based pruning technique. Hence, the Tcp algorithm is insensitive to the
input minimum correlation threshold θ, which is highly desirable in real data mining
applications. In contrast, the performance of the Taper algorithm is highly dependent on both the data
distribution and the minimum correlation threshold θ, because these factors have a great effect on
the results of upper-bound-based pruning.

Our experimental results show that Tcp's performance is significantly better than that of the
Taper algorithm for mining correlated pairs on both synthetic and real-world datasets over
practical ranges of correlation threshold specifications.

2 Related Work

Association rule mining is a widely studied topic in data mining research. However, it is well
recognized that true correlation relationships among data objects may be missed in the
support-based association-mining framework. To overcome this difficulty, correlation has been
adopted as an interestingness measure, since most people are interested not only in association-like
co-occurrences but also in the possible strong correlations implied by such co-occurrences. Related
literature can be categorized by the correlation measures.

Brin et al. [5] introduced lift and a χ² correlation measure and developed methods for
mining such correlations. Ma and Hellerstein [6] proposed mutually dependent patterns and an
Apriori-based mining algorithm. Recently, Omiecinski [7] introduced two interesting measures,
called all-confidence and bond. Both have the downward closure property. Lee et al. [8] proposed
two algorithms, extending the pattern-growth methodology [4], for mining all-confidence and
bond correlation patterns. As an extension to the concepts proposed in [7], a new notion of
confidence-closed correlated patterns is presented in [9], together with an algorithm, called
CCMine, for mining those patterns. Xiong et al. [10] independently proposed the
h-confidence measure, which is equivalent to the all-confidence measure of [7].

In [1], efficiently computing Pearson's correlation coefficient for binary variables is
considered. In this paper, we focus on developing an FP-tree-based method for efficiently
identifying the complete set of all-strong correlated pairs between items, with Pearson's
correlation coefficient as the correlation measure.

3 Background-Pearson's Correlation Coefficient

In statistics, a measure of association is a numerical index that describes the strength or
magnitude of a relationship among variables. Relationships among nominal variables can be
analyzed with nominal measures of association such as Pearson's Correlation Coefficient. The
φ correlation coefficient [11] is the computation form of Pearson's Correlation Coefficient for
binary variables.

As shown in [1], when adopting the support measure of association rule mining [2], for two
items A and B in a transaction database, we can derive the support form of the φ correlation
coefficient as shown below in Equation (1):

$$\phi = \frac{\operatorname{sup}(A,B) - \operatorname{sup}(A)\,\operatorname{sup}(B)}{\sqrt{\operatorname{sup}(A)\,\operatorname{sup}(B)\,(1-\operatorname{sup}(A))\,(1-\operatorname{sup}(B))}} \qquad (1)$$

where sup(A), sup(B) and sup(A,B) are the supports of item A, item B and the item pair {A, B}, respectively.
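As a minimal sketch of Equation (1), the support form can be evaluated directly once the three supports are known. The function name `phi` and the example supports are illustrative assumptions, not taken from [1]; supports are assumed to be fractions of the number of transactions.

```python
import math


def phi(sup_a: float, sup_b: float, sup_ab: float) -> float:
    """Support form of the phi correlation coefficient (Equation 1).

    Arguments are supports expressed as fractions in [0, 1].
    """
    denom = math.sqrt(sup_a * sup_b * (1.0 - sup_a) * (1.0 - sup_b))
    if denom == 0.0:
        # An item that occurs in no transaction or in every transaction
        # has zero variance, so the coefficient is undefined.
        raise ValueError("phi is undefined when a support is 0 or 1")
    return (sup_ab - sup_a * sup_b) / denom


# Hypothetical supports: A and B each occur in half of the transactions.
print(phi(0.5, 0.5, 0.5))    # the two items always occur together
print(phi(0.5, 0.5, 0.25))   # the two items occur independently
```

With identical occurrence patterns the coefficient reaches 1, and when sup(A,B) equals sup(A) * sup(B) it is 0, as the numerator of Equation (1) suggests.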

Furthermore, as shown in [1], given an item pair {A, B}, the support value sup(A) for item A,
and the support value sup(B) for item B, without loss of generality, let sup(A) ≥ sup(B). The upper
bound upper(φ(A,B)) of the φ correlation coefficient for an item pair {A, B} is:

$$\mathrm{upper}(\phi_{(A,B)}) = \sqrt{\frac{\operatorname{sup}(B)}{\operatorname{sup}(A)}} \cdot \sqrt{\frac{1-\operatorname{sup}(A)}{1-\operatorname{sup}(B)}} \qquad (2)$$

As can be seen in Equation (2), the upper bound of the φ correlation coefficient for an item pair
{A, B} relies only on the support value of item A and the support value of item B. In other words,
there is no requirement to get the support value sup(A, B) of an item pair {A, B} for the calculation
of this upper bound. As a result, in the original Taper algorithm [1], this upper bound
serves as a coarse filter to filter out item pairs that are of no interest, thus saving I/O cost by
avoiding the computation of the support values of the pruned pairs.
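The bound of Equation (2) can be sketched in a few lines. The helper names `phi_upper_bound` and `can_prune` are hypothetical, and the supports are again assumed to be fractions strictly between 0 and 1.

```python
import math


def phi_upper_bound(sup_a: float, sup_b: float) -> float:
    """Upper bound of phi from single-item supports only (Equation 2)."""
    hi, lo = max(sup_a, sup_b), min(sup_a, sup_b)  # enforce sup(A) >= sup(B)
    if not (0.0 < lo and hi < 1.0):
        raise ValueError("supports must lie strictly between 0 and 1")
    return math.sqrt(lo / hi) * math.sqrt((1.0 - hi) / (1.0 - lo))


def can_prune(sup_a: float, sup_b: float, theta: float) -> bool:
    """A pair can be discarded without counting sup(A, B) if its bound is below theta."""
    return phi_upper_bound(sup_a, sup_b) < theta


# Hypothetical supports: a very frequent item paired with a rare one.
print(phi_upper_bound(0.8, 0.2))
print(can_prune(0.8, 0.2, theta=0.5))
```

The bound is attained when the less frequent item never occurs without the more frequent one, that is, when sup(A,B) equals sup(B); substituting that into Equation (1) gives the same value.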

The Taper algorithm [1] is a filter-and-refine mining algorithm that consists of
two steps: filtering and refinement. In the filtering step, the Taper algorithm applies the upper
bound of the φ correlation coefficient as a coarse filter. In other words, if the upper bound of the
φ correlation coefficient for an item pair is less than the user-specified correlation threshold, we
can prune this item pair right away. In the refinement step, the Taper algorithm computes the exact
correlation for each pair surviving the filtering step and retrieves the pairs with correlations
above the user-specified correlation threshold as the mining results.
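The two steps can be illustrated with a deliberately simplified sketch. It is not the Taper implementation of [1], which may organize the filtering quite differently; the function name `filter_and_refine`, the toy transactions, and the choice to keep pairs with φ ≥ θ are assumptions made for illustration.

```python
import math
from itertools import combinations


def filter_and_refine(transactions, theta):
    """Simplified filter-and-refine search for pairs with phi >= theta."""
    n = len(transactions)
    baskets = [set(t) for t in transactions]
    sup = {}
    for basket in baskets:
        for item in basket:
            sup[item] = sup.get(item, 0) + 1
    sup = {item: count / n for item, count in sup.items()}

    # Filtering: use only single-item supports (Equation 2).
    candidates = []
    for a, b in combinations(sorted(sup), 2):
        hi, lo = max(sup[a], sup[b]), min(sup[a], sup[b])
        if hi >= 1.0:
            continue  # phi is undefined for an item present in every transaction
        upper = math.sqrt(lo / hi) * math.sqrt((1.0 - hi) / (1.0 - lo))
        if upper >= theta:
            candidates.append((a, b))

    # Refinement: count sup(A, B) for the survivors and apply Equation (1).
    result = {}
    for a, b in candidates:
        sup_ab = sum(1 for basket in baskets if a in basket and b in basket) / n
        denom = math.sqrt(sup[a] * sup[b] * (1.0 - sup[a]) * (1.0 - sup[b]))
        value = (sup_ab - sup[a] * sup[b]) / denom
        if value >= theta:
            result[(a, b)] = value
    return candidates, result


toy = [["a", "b"], ["a", "b"], ["c"], ["c", "d"]]  # hypothetical data
candidates, strong = filter_and_refine(toy, theta=0.9)
print(candidates)
print(strong)
```

In this toy run the filter discards every pair involving the rare item d, while the pairs (a, c) and (b, c) survive the filter and are rejected only after their pair supports have been counted, which is the cost the refinement step has to pay.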

4 Tcp Algorithm

In this section, we introduce the Tcp algorithm for mining correlated pairs between items. We
adopt ideas of the FP-tree structure [4] in our algorithm.

4.1 FP-Tree Basics

An FP-tree (frequent pattern tree) is a variation of the trie data structure, which is a
prefix-tree structure for storing crucial and compressed information about frequent patterns. It
consists of one root labeled "NULL", a set of item prefix sub-trees as the children of the root,
and a frequent item header table. Each node in the item prefix sub-tree consists of three fields:
item-name, count, and node-link, where item-name indicates which item this node represents,
count indicates the number of transactions containing the items in the portion of the path reaching this
node, and node-link links to the next node in the FP-tree carrying the same item-name, or null if
there is none. Each entry in the frequent item header table consists of two fields, item-name and
head of node-link. The latter points to the first node in the FP-tree carrying the item-name.
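The node and header-table layout described above might be represented as follows. This is only a sketch: the class names are hypothetical, and the `parent` and `children` attributes are additions assumed here for tree traversal, beyond the three fields named in the text.

```python
from typing import Dict, Iterator, Optional


class FPNode:
    """A node of an item prefix sub-tree: item-name, count and node-link."""

    def __init__(self, item_name: Optional[str], parent: Optional["FPNode"] = None):
        self.item_name = item_name                    # None stands for the "NULL" root
        self.count = 0                                # transactions sharing this prefix
        self.node_link: Optional["FPNode"] = None     # next node with the same item-name
        self.parent = parent                          # assumption: used to walk prefix paths
        self.children: Dict[str, "FPNode"] = {}       # assumption: child lookup by item-name


class HeaderTable:
    """Maps each item-name to the head of its node-link chain."""

    def __init__(self) -> None:
        self.head: Dict[str, FPNode] = {}
        self._tail: Dict[str, FPNode] = {}            # bookkeeping for cheap appends

    def register(self, node: FPNode) -> None:
        """Append a newly created node to the chain of its item."""
        if node.item_name not in self.head:
            self.head[node.item_name] = node
        else:
            self._tail[node.item_name].node_link = node
        self._tail[node.item_name] = node

    def nodes(self, item_name: str) -> Iterator[FPNode]:
        """Follow the node-links from the head to the end of the chain."""
        node = self.head.get(item_name)
        while node is not None:
            yield node
            node = node.node_link


root = FPNode(None)
table = HeaderTable()
a = FPNode("a", root)
first_b = FPNode("b", a)       # a "b" node below "a"
second_b = FPNode("b", root)   # another "b" node directly below the root
for node in (a, first_b, second_b):
    table.register(node)
print(len(list(table.nodes("b"))))
```

Following the chain for an item visits every node carrying that item, which is what the mining phase relies on when it collects the prefix paths of an item.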

Let us illustrate, using an example from [4], the algorithm for building an FP-tree with a user-specified
minimum support threshold. Suppose we have the transaction database shown in Fig. 1 with
minimum support threshold 3.

By scanning the database, we get the sorted (item: support) pairs (f:4), (c:4), (a:3), (b:3),
(m:3), (p:3); that is, the frequent 1-itemsets are f, c, a, b, m, p. We use the tree construction
algorithm in [4] to build the corresponding FP-tree. We scan each transaction and insert its
frequent items (according to the above sorted order) into the tree. First, we insert <f, c, a, m, p> into
the empty tree. This results in a single path:

root (NULL) → (f:1) → (c:1) → (a:1) → (m:1) → (p:1)

Then, we insert <f, c, a, b, m>. This leads to two paths with f, c and a being the common
prefixes:

root (NULL) → (f:2) → (c:2) → (a:2) → (m:1) → (p:1)

and

root (NULL) → (f:2) → (c:2) → (a:2) → (b:1) → (m:1)

Third, we insert <f, b>. This time, we get a new path:

root (NULL) → (f:3) → (b:1)

In a similar way, we can insert <c, b, p> and <f, c, a, m, p> into the tree and get the resulting
tree shown in Fig. 1, which also shows the horizontal links for each frequent 1-itemset as
dotted-line arrows.

TID   Items bought              (Ordered) frequent items
100   f, a, c, d, g, i, m, p    f, c, a, m, p
200   a, b, c, f, l, m, o       f, c, a, b, m
300   b, f, h, j, o             f, b
400   b, c, k, s, p             c, b, p
500   a, f, c, e, l, p, m, n    f, c, a, m, p

Header table (item): f, c, a, b, m, p

root
  f:4
    c:3
      a:3
        m:2
          p:2
        b:1
          m:1
    b:1
  c:1
    b:1
      p:1

(The node-links from the header table, drawn as dotted arrows in the original figure, are not reproduced here.)

Fig. 1. Database and corresponding FP-tree.
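The construction walked through above can be reproduced with a short sketch. The function and class names are hypothetical, and the `tie_order` argument is an assumption introduced only so that items with equal support follow the order f, c, a, b, m, p used in the text; a plain list per item stands in for the node-link chain.

```python
from collections import Counter


class Node:
    def __init__(self, item, parent=None):
        self.item, self.parent, self.count, self.children = item, parent, 0, {}


def build_fp_tree(transactions, min_count, tie_order=()):
    """Two scans: count item supports, then insert the ordered frequent items."""
    counts = Counter(item for t in transactions for item in set(t))

    def sort_key(item):
        # Descending support; ties follow tie_order (assumption), then item name.
        pos = tie_order.index(item) if item in tie_order else len(tie_order)
        return (-counts[item], pos, item)

    order = sorted((i for i in counts if counts[i] >= min_count), key=sort_key)
    rank = {item: r for r, item in enumerate(order)}
    root, header = Node(None), {item: [] for item in order}
    for t in transactions:
        node = root
        for item in sorted((i for i in set(t) if i in rank), key=rank.get):
            if item not in node.children:
                node.children[item] = Node(item, node)
                header[item].append(node.children[item])
            node = node.children[item]
            node.count += 1
    return root, header, order


def dump(node, depth=0):
    """Flatten the tree into (depth, item, count) rows, children in insertion order."""
    rows = []
    for child in node.children.values():
        rows.append((depth, child.item, child.count))
        rows.extend(dump(child, depth + 1))
    return rows


# The five transactions of Fig. 1 (TID 100 to 500).
db = [list("facdgimp"), list("abcflmo"), list("bfhjo"), list("bcksp"), list("afcelpmn")]
root, header, order = build_fp_tree(db, min_count=3, tie_order=list("fcabmp"))
for depth, item, count in dump(root):
    print("  " * depth + f"{item}:{count}")
```

Printed with indentation, the rows give the same tree as Fig. 1: one branch starting at f:4 and a second branch c:1, b:1, p:1 directly under the root.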

With the initial FP-tree, we can mine frequent itemsets of size k, where k ≥ 2. The FP-growth
algorithm [4] is used for the mining phase. We may start from the bottom of the header table and
consider item p first. There are two paths: (f:4) → (c:3) → (a:3) → (m:2) → (p:2) and (c:1) → (b:1) →
(p:1). Hence, we get two prefix paths for p: <fcam: 2> and <cb: 1>, which are called p's
conditional pattern base. We also call p the base of this conditional pattern base. An FP-tree on
this conditional pattern base (a conditional FP-tree) is constructed, which acts as a transaction
database with respect to item p. We recursively mine this resulting FP-tree to form all the possible
combinations of items containing p. Similarly, we consider items m, b, a, c and f to get all frequent
itemsets.
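The conditional pattern base of an item can be collected by walking from each of its nodes up to the root. The sketch below starts from the ordered frequent-item lists of Fig. 1; the names `build_fp_tree` and `conditional_pattern_base` are hypothetical, and a list of nodes per item again stands in for the node-link chain.

```python
class Node:
    def __init__(self, item, parent=None):
        self.item, self.parent, self.count, self.children = item, parent, 0, {}


def build_fp_tree(ordered_transactions):
    """Insert already-ordered frequent-item lists; return (root, header)."""
    root, header = Node(None), {}
    for items in ordered_transactions:
        node = root
        for item in items:
            if item not in node.children:
                node.children[item] = Node(item, node)
                header.setdefault(item, []).append(node.children[item])
            node = node.children[item]
            node.count += 1
    return root, header


def conditional_pattern_base(header, item):
    """Return the prefix paths of `item`, each paired with the count of its node."""
    base = []
    for node in header.get(item, []):
        prefix, up = [], node.parent
        while up.item is not None:      # stop at the "NULL" root
            prefix.append(up.item)
            up = up.parent
        if prefix:
            base.append((tuple(reversed(prefix)), node.count))
    return base


# Ordered frequent items of TID 100 to 500 from Fig. 1.
ordered = ["fcamp", "fcabm", "fb", "cbp", "fcamp"]
root, header = build_fp_tree(ordered)
print(conditional_pattern_base(header, "p"))
# [(('f', 'c', 'a', 'm'), 2), (('c', 'b'), 1)]
```

Each prefix path inherits the count of the node it was collected from, not the counts of the nodes along the path, which is why the first path of p carries the count 2.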

4.2 Tcp Algorithm

The Tcp algorithm consists of two steps: FP-tree construction and correlation mining. In the
FP-tree construction step, the Tcp algorithm constructs an FP-tree from the transaction database. In
the correlation-mining step, we utilize a pattern-growth method to generate all item pairs and
compute their exact correlation values. Fig. 2 shows the main algorithm of Tcp. In the following,
we illustrate each step in detail.

FP-tree construction step

In the original FP-tree method [4], the FP-tree is built only with the items with sufficient
support. However, in our problem setting, there is no support threshold used initially. We
therefore propose to build a complete FP-tree with all items in the database (Steps 3-4). Note that
this is equivalent to setting the initial support threshold to zero. The size of an FP-tree is bounded
by the size of its corresponding database because each transaction will contribute at most one path
to the FP-tree, with the path length equal to the number of items in that transaction. Since there is
often a lot of sharing of frequent items among transactions, the size of the tree is usually much
smaller than its original database [4].

As can be seen from Fig. 2, before constructing the FP-tree, we use an additional scan over
the database to count the support of each item (Steps 1-2). At first glance, one may argue that
this additional I/O cost is not necessary, since we have to build the complete FP-tree with zero
support anyway. However, the rationale is as follows.

(1) Knowing the support of each item helps us build a more compact
FP-tree, e.g., an ordered FP-tree.

(2) In the correlation-mining step, single-item support is required in the computation of
the correlation value for each item pair.

Tcp Algorithm

Input:
  (1) D: a transaction database
  (2) θ: a user-specified correlation threshold

Output:
  CP: all item pairs having correlation coefficients above the correlation threshold θ

Steps:
(A) FP-Tree Construction Step
  (1) Scan the transaction database D. Find the support of each item
  (2) Sort the items by their supports in descending order, denoted as sorted-list
  (3) Create an FP-Tree T, with only a root node labeled "NULL"
  (4) Scan the transaction database again to build the whole FP-Tree with all ordered items
(B) Correlation Mining Step
  (5) For each item Ai in the header table of T do
  (6)   Get the conditional pattern base of Ai and compute its conditional FP-Tree Ti
  (7)   For each item Bj in the header table of Ti do
  (8)     Compute the correlation value of (Ai, Bj), φ(Ai, Bj)
  (9)     If φ(Ai, Bj) ≥ θ then add (Ai, Bj) to CP
  (10) Return CP

Fig. 2. The Tcp Algorithm

Correlation mining step

In the correlation-mining step, we utilize a pattern-growth method to generate all item pairs
and compute their exact correlation values (Steps 5-9), since we can get all support information
from the FP-tree.

As can be seen from Fig. 2, unlike the Taper algorithm, the Tcp algorithm does not need to
use the upper-bound-based pruning technique, since in Step 8 and Step 9 we can directly compute
the correlation values. Therefore, the Tcp algorithm is insensitive to the input minimum
correlation threshold θ, which is highly desirable in real data mining applications. In contrast,
the performance of the Taper algorithm is highly dependent on both the data distribution and the minimum
correlation threshold θ, because these factors have a great effect on the results of upper-bound-based
pruning.
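The steps of Fig. 2 can be put together in a compact sketch. It is an illustration under stated assumptions rather than the authors' Java implementation: the name `tcp` is hypothetical, ties in support are broken alphabetically, pairs with φ ≥ θ are kept, and instead of materializing each conditional FP-tree Ti the code sums the counts of the conditional pattern base, which yields the same per-item counts as the header table of Ti.

```python
import math
from collections import Counter, defaultdict


class Node:
    def __init__(self, item, parent=None):
        self.item, self.parent, self.count, self.children = item, parent, 0, {}


def tcp(transactions, theta):
    """Return {(B, A): phi} for every co-occurring item pair with phi >= theta."""
    n = len(transactions)
    baskets = [set(t) for t in transactions]
    # Steps 1-2: first scan, item supports and descending order.
    support = Counter(item for basket in baskets for item in basket)
    order = sorted(support, key=lambda item: (-support[item], item))
    rank = {item: r for r, item in enumerate(order)}
    # Steps 3-4: second scan, complete FP-tree with all items.
    root, header = Node(None), defaultdict(list)
    for basket in baskets:
        node = root
        for item in sorted(basket, key=rank.get):
            if item not in node.children:
                node.children[item] = Node(item, node)
                header[item].append(node.children[item])
            node = node.children[item]
            node.count += 1
    # Steps 5-9: pair supports from prefix paths, exact phi, threshold test.
    pairs = {}
    for a in order:
        co_count = Counter()
        for node in header[a]:
            up = node.parent
            while up.item is not None:
                co_count[up.item] += node.count
                up = up.parent
        for b, count_ab in co_count.items():
            sa, sb, sab = support[a] / n, support[b] / n, count_ab / n
            denom = math.sqrt(sa * sb * (1.0 - sa) * (1.0 - sb))
            if denom == 0.0:
                continue  # phi undefined for an item present in every transaction
            value = (sab - sa * sb) / denom
            if value >= theta:
                pairs[(b, a)] = value
    return pairs


# The five transactions of Fig. 1, this time keeping every item.
db = [list("facdgimp"), list("abcflmo"), list("bfhjo"), list("bcksp"), list("afcelpmn")]
strong = tcp(db, theta=0.9)
print(sorted(strong))
```

Pairs that never occur together are not enumerated at all. Their pair support is zero, so by Equation (1) their φ value is negative and they cannot pass a positive threshold. The threshold is applied only in the last comparison, so the work done per pair does not depend on θ.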

5 Experimental Results

A performance study has been conducted to evaluate our method. In this section, we describe
those experiments and their results. Both real-life datasets from UCI [12] and synthetic datasets
were used to evaluate the performance of our Tcp algorithm against the Taper algorithm.

All algorithms were implemented in Java. All experiments were conducted on a
Pentium 4 2.4 GHz machine with 512 MB of RAM running Windows 2000.

5.1 Experimental Datasets

We experimented with both real datasets and synthetic datasets. The mushroom dataset from
UCI [12] has 22 attributes and 8124 records. Each record represents the physical characteristics of a
single mushroom. From the viewpoint of transaction databases, the mushroom dataset has 119
items in total.

The synthesized datasets are created with the data generator in the ARMiner software¹, which
also follows the basic spirit of the well-known IBM synthetic data generator for association rule
mining. The data size (i.e., number of transactions), the number of items and the average size of
transactions are the major parameters in the synthesized data generation. Table 1 shows the four
datasets generated with different parameters and used in the experiments. The main difference
between these datasets is that they have different numbers of items, ranging from 400 to 1000.

Table 1. Test Synthetic Data Sets

Data Set Name     Number of Transactions   Number of Items   Average Size of Transactions
T10I400D100K      100,000                  400               10
T10I600D100K      100,000                  600               10
T10I800D100K      100,000                  800               10
T10I1000D100K     100,000                  1000              10

5.2 Empirical Results

We compared the performance of Tcp with Taper on the 5 datasets by varying the correlation
threshold from 0.9 to 0.1.

Fig. 3 shows the running time of the two algorithms on the mushroom dataset for θ ranging
from 0.9 to 0.1. We observe that Tcp's running time remains stable over the whole range of θ.
Tcp's stability in execution time is also observed in the subsequent experiments on synthetic
datasets. The reason is that Tcp does not depend on the upper-bound-based pruning technique and
hence is less affected by the threshold parameter. In contrast, the execution time of the Taper algorithm
increases as the correlation threshold decreases. When θ reaches 0.4, Tcp starts to
outperform Taper. Although the performance of Tcp is not as good as that of Taper when θ is
larger than 0.4, Tcp at least achieves the same level of performance as Taper on the mushroom
dataset. The reason for Tcp's unsatisfactory performance is that, for a dataset with fewer items, the
advantage of Tcp is not very significant. As can be seen in the subsequent experiments, Tcp's
performance is significantly better than that of the Taper algorithm when the dataset has more
items and transactions.

¹ http://www.cs.umb.edu/~laur/ARMiner/

[Figure: line chart of execution time (s) versus the minimum correlation threshold (0.9 down to 0.1), with one series each for Taper and Tcp]

Fig. 3. Execution Time Comparison between Tcp and Taper on Mushroom Dataset

Fig. 4, Fig. 5, Fig. 6 and Fig. 7 show the execution times of the two algorithms on the four different
synthetic datasets as the correlation threshold is decreased. From these four figures, some important
observations are summarized as follows.

(1) Tcp keeps a stable running time over the whole range of correlation thresholds on all the
synthetic datasets. This further confirms that the Tcp algorithm is robust with respect to input
parameters.

(2) Taper's running time keeps increasing as the correlation threshold is decreased. On
all datasets, when the correlation threshold reaches 0.7, Tcp starts to outperform Taper. Even when
the correlation threshold is set to a very high value (e.g., 0.9 or 0.8), Tcp's execution time is
only a little longer than that of Taper. Apart from these extremely large values, Tcp always outperforms
Taper significantly.

(3) With the increase in the number of items (from Fig. 4 to Fig. 7), we can see that the gap
between Tcp and Taper in execution time increases. Furthermore, on the T10I1000D100K dataset,
when the correlation threshold reaches 0.3, the Taper algorithm fails to continue its work because of
the limited memory size, while the Tcp algorithm is still very effective on the same dataset even
when the correlation threshold reaches 0.1. Hence, we can conclude that Tcp is more suitable for
mining transaction databases with a very large number of items and transactions, which is highly
desirable in real data mining applications.

[Figure: line chart of execution time (s) versus the minimum correlation threshold (0.9 down to 0.1), with one series each for Taper and Tcp]

Fig. 4. Execution Time Comparison between Tcp and Taper on T10I400D100K Dataset

[Figure: line chart of execution time (s) versus the minimum correlation threshold (0.9 down to 0.1), with one series each for Taper and Tcp]

Fig. 5. Execution Time Comparison between Tcp and Taper on T10I600D100K Dataset

[Figure: line chart of execution time (s) versus the minimum correlation threshold (0.9 down to 0.1), with one series each for Taper and Tcp]

Fig. 6. Execution Time Comparison between Tcp and Taper on T10I800D100K Dataset

[Figure: line chart of execution time (s) versus the minimum correlation threshold (0.9 down to 0.1), with one series each for Taper and Tcp]

Fig. 7. Execution Time Comparison between Tcp and Taper on T10I1000D100K Dataset

6 Conclusions

In this paper, we propose a new algorithm called Tcp for mining statistical correlations in
transaction databases. The salient feature of Tcp is that it generates correlated item pairs directly,
without candidate generation, through the use of the FP-tree structure. We have compared this
algorithm to the previously known Taper algorithm, using both synthetic and real-world
data. As shown in our performance study, the proposed algorithm significantly outperforms
the Taper algorithm on transaction databases with a very large number of items and transactions.

In the future, we will work on extending the Tcp algorithm to mine top-k correlated pairs,
which is preferable to minimum-correlation-based mining in real applications.

References

[1] H. Xiong, S. Shekhar, P.-N. Tan, V. Kumar. Exploiting a Support-based Upper Bound of Pearson's
Correlation Coefficient for Efficiently Identifying Strongly Correlated Pairs. In: Proc. of ACM
SIGKDD'04, 2004.

[2] R. Agrawal, R. Srikant. Fast Algorithms for Mining Association Rules. In: Proc. of VLDB'94, pp.
478-499, 1994.

[3] M. J. Zaki. Scalable Algorithms for Association Mining. IEEE Trans. on Knowledge and Data
Engineering, 2000, 12(3): 372-390.

[4] J. Han, J. Pei, Y. Yin. Mining Frequent Patterns without Candidate Generation. In: Proc. of
SIGMOD'00, pp. 1-12, 2000.

[5] S. Brin, R. Motwani, C. Silverstein. Beyond Market Baskets: Generalizing Association Rules to
Correlations. In: Proc. of SIGMOD'97, 1997.

[6] S. Ma, J. L. Hellerstein. Mining Mutually Dependent Patterns. In: Proc. of ICDM'01, 2001.

[7] E. Omiecinski. Alternative Interest Measures for Mining Associations. IEEE Trans. on Knowledge
and Data Engineering, 2003.

[8] Y.-K. Lee, W.-Y. Kim, Y. D. Cai, J. Han. CoMine: Efficient Mining of Correlated Patterns. In:
Proc. of ICDM'03, 2003.

[9] W.-Y. Kim, Y.-K. Lee, J. Han. CCMine: Efficient Mining of Confidence-Closed Correlated
Patterns. In: Proc. of PAKDD'04, 2004.

[10] H. Xiong, P.-N. Tan, V. Kumar. Mining Hyper-clique Patterns with Confidence Pruning.
Tech. Report, Univ. of Minnesota, Minneapolis, March 2003.

[11] H. T. Reynolds. The Analysis of Cross-classifications. The Free Press, New York, 1977.

[12] C. J. Merz, P. Murphy. UCI Repository of Machine Learning Databases, 1996.
(http://www.ics.uci.edu/~mlearn/MLRRepository.html).

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (120)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- HRTEMDocument5 pagesHRTEMRajathi YadavNo ratings yet

- Parallel FP GrowthDocument8 pagesParallel FP GrowthLe van VinhNo ratings yet

- MC AllisterDocument106 pagesMC AllistervphucvoNo ratings yet

- Lecture 5 - Monday, September 3, 2007: 2.1 Example From PaperDocument6 pagesLecture 5 - Monday, September 3, 2007: 2.1 Example From PapershivramramrajNo ratings yet

- FptreeDocument35 pagesFptreeRavi BanothNo ratings yet

- FP Growth Presentation v1 (Handout)Document10 pagesFP Growth Presentation v1 (Handout)mrinaljitNo ratings yet

- Parallel FP GrowthDocument8 pagesParallel FP GrowthLe van VinhNo ratings yet

- Project: Tapis Eor Brownfield Modifications & RetrofitsDocument8 pagesProject: Tapis Eor Brownfield Modifications & RetrofitsMohamad Azizi AzizNo ratings yet

- Roof Manual p10Document1 pageRoof Manual p10AllistairNo ratings yet

- Foxpro Treeview ControlDocument5 pagesFoxpro Treeview ControlJulio RojasNo ratings yet

- Nordstrom Poly-Gas Valves Polyethylene Valves For Natural GasDocument6 pagesNordstrom Poly-Gas Valves Polyethylene Valves For Natural GasAdam KnottNo ratings yet

- 3.re Situation in Suez Canal - M.V EVER GIVEN SUCCESSFULLY REFLOATEDDocument9 pages3.re Situation in Suez Canal - M.V EVER GIVEN SUCCESSFULLY REFLOATEDaungyinmoeNo ratings yet

- What We Offer.: RemunerationDocument8 pagesWhat We Offer.: Remunerationsurabhi mandalNo ratings yet

- Free and Forced Vibration of Repetitive Structures: Dajun Wang, Chunyan Zhou, Jie RongDocument18 pagesFree and Forced Vibration of Repetitive Structures: Dajun Wang, Chunyan Zhou, Jie RongRajesh KachrooNo ratings yet

- Engine Test CellDocument44 pagesEngine Test Cellgrhvg_mct8224No ratings yet

- Netapp Cabling and Hardware BasicsDocument14 pagesNetapp Cabling and Hardware BasicsAnup AbhishekNo ratings yet

- H Molecule. The First Problem They Considered Was The Determination of The Change inDocument2 pagesH Molecule. The First Problem They Considered Was The Determination of The Change inDesita KamilaNo ratings yet

- 10 - Design of Doubly Reinforced BeamsDocument13 pages10 - Design of Doubly Reinforced BeamsammarnakhiNo ratings yet

- Flying Qualities CriteriaDocument24 pagesFlying Qualities CriteriajoereisNo ratings yet

- Panduit Electrical CatalogDocument1,040 pagesPanduit Electrical CatalognumnummoNo ratings yet

- CANopen User GuideDocument184 pagesCANopen User GuideNitin TyagiNo ratings yet

- Pds Microstran LTR en LRDocument2 pagesPds Microstran LTR en LRthaoNo ratings yet

- DDC Brochure 2021 - DigitalDocument4 pagesDDC Brochure 2021 - DigitalJonathan MooreNo ratings yet

- V33500 TVDocument2 pagesV33500 TVgoriath-fxNo ratings yet

- Saes T 629Document10 pagesSaes T 629Azhar Saqlain.No ratings yet

- Seismic Strengthening of Reinforced Concrete Columns With Straight Carbon Fibre Reinforced Polymer (CFRP) AnchorsDocument15 pagesSeismic Strengthening of Reinforced Concrete Columns With Straight Carbon Fibre Reinforced Polymer (CFRP) AnchorsKevin Paul Arca RojasNo ratings yet

- Traction Motors E WebDocument16 pagesTraction Motors E WebMurat YaylacıNo ratings yet

- Resume AkuDocument4 pagesResume AkukeylastNo ratings yet

- Andreki, P. (2016) - Exploring Critical Success Factors of Construction Projects.Document12 pagesAndreki, P. (2016) - Exploring Critical Success Factors of Construction Projects.beast mickeyNo ratings yet

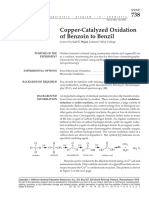

- Copperacetate Ammonium Nitrate Oxidation of Benzoin To BenzilDocument12 pagesCopperacetate Ammonium Nitrate Oxidation of Benzoin To BenzilDillon TrinhNo ratings yet

- Chapter 10 ExamDocument10 pagesChapter 10 ExamOngHongTeckNo ratings yet

- LCD Monitor DC T201WA 20070521 185801 Service Manual T201Wa V02Document59 pagesLCD Monitor DC T201WA 20070521 185801 Service Manual T201Wa V02cdcdanielNo ratings yet

- Euro Tempered Glass Industries Corp. - Company ProfileDocument18 pagesEuro Tempered Glass Industries Corp. - Company Profileunited harvest corpNo ratings yet

- Gas Technology Institute PresentationDocument14 pagesGas Technology Institute PresentationAris KancilNo ratings yet

- Chapter 8 PDFDocument93 pagesChapter 8 PDF김민성No ratings yet

- Imeko TC5 2010 009Document4 pagesImeko TC5 2010 009FSNo ratings yet