Professional Documents

Culture Documents

The Problem: Library (MASS) Data (Faithful) Attach (Faithful)

Uploaded by

Diego MorenoOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

The Problem: Library (MASS) Data (Faithful) Attach (Faithful)

Uploaded by

Diego MorenoCopyright:

Available Formats

36-724 Old Faithful E-M Example

The Problem

> library(MASS)

> data(faithful)

> attach(faithful)

denes a vector waiting of n = 272 waiting times between eruptions of the geyser Old Faithful

at Yellowstone Park. [A related data set, geyser is discussed by Venables and Ripley (MASS 3rd

ed, pp 252; 4th ed. pp. 127)].

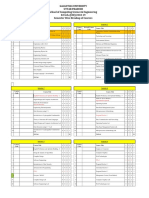

A histogram of the data in waiting looks like this:

Histogram of waiting

waiting

F

r

e

q

u

e

n

c

y

40 50 60 70 80 90 100

0

1

0

2

0

3

0

4

0

5

0

and suggests that a plausible model for the waiting times y

1

, . . . , y

n

is a mixture of two normals:

y

i

|

1

,

2

,

1

,

2

, q

iid

_

q

1

2

1

exp[(y

i

1

)

2

/2

2

1

]

+ (1 q)

1

2

2

exp[(y

i

2

)

2

/2

2

2

]

_

()

We wish to estimate the parameters

1

,

2

,

1

,

2

and q.

Letting f

1

(y

i

|

1

,

1

) =

1

2

1

exp[(y

i

1

)

2

/2

2

1

], and f

2

(y

i

|

2

,

2

) =

1

2

2

exp[(y

i

2

)

2

/2

2

2

],

it is clear that the observed data likelihood is

g(y|

1

,

1

,

2

,

2

, q) =

n

_

i=1

{q f

1

(y

i

|

1

,

1

) + (1 q) f

2

(y

i

|

2

,

2

)}.

Data augmentation

This likelihood is greatly simplied by using data augmentation (why it is simpler will only become

clear later). Consider the latent variable (a.k.a. missing data, or augmentation data)

z

i

=

_

1 if y

i

belongs to the left hump

0 if y

i

belongs to the right hump

, i = 1, . . . , n .

1

If we assume

z

i

| q

iid

Bin(1, q)

y

i

|

1

,

2

,

1

,

2

, z

i

indep

_

N(

1

,

2

1

), if z

i

= 1

N(

2

,

2

2

), if z

i

= 0

then

f (y

i

, z

i

|

1

,

1

,

2

,

2

, q) = f (y

i

|

1

,

1

,

2

,

2

, z

i

, q) f (z

i

|q)

Therefore the complete data likelihood is

f (y, z|

1

,

1

,

2

,

2

, q)

=

n

_

i=1

_

1

2

1

exp[(y

i

i

)

2

/2

2

1

]

_

z

i

_

1

2

2

exp[(y

i

2

)

2

/2

2

2

]

_

1z

i

q

z

i

(1 q)

1z

i

=

n

_

i=1

_

q

1

2

1

exp[(y

i

1

)

2

/2

2

1

]

_

z

i

_

(1 q)

1

2

2

exp[(y

i

2

)

2

/2

2

2

]

_

1z

i

.

where n = 272 and z

+

=

_

n

i=1

z

i

= (number of observations in the left hump). That is,

f (y, z|

1

,

2

,

1

,

2

, q) =

n

_

i=1

{q f

1

(y

i

|

1

,

1

)}

z

i

{(1 q) f

2

(y

i

|

2

,

2

)}

1z

i

E-M Algorithm(s)

To derive the E-M algorithm for this data, we must compute Q(,

k

) = E[log f (X|)|Y,

k

], where

= (q,

1

,

2

,

2

1

,

2

2

). Since

logf (y, z|) =

n

i=1

_

z

i

logq f

1

(y

i

|

1

,

1

) + (1 z

i

)log(1 q) f

2

(y

i

|

2

,

2

)

_

=

n

i=1

_

log

_

q f

1

(y

i

|

1

,

1

)

(1 q) f

2

(y

i

|

2

,

2

)

_

z

i

+ log[(1 q) f

2

(y

i

|

2

,

2

)]

_

we know we will have to compute

z

k

i

= E[z

i

|y

i

,

k

] = P(z

i

= 1|y

i

,

k

) =

f (z

i

= 1, y

i

|

k

)

f (z

i

= 1, y

i

|

k

) + f (z

i

= 0, y

i

|

k

)

=

q

k

f

1

(y

i

|

k

1

,

k

1

)

q

k

f

1

(y

i

|

k

1

,

k

2

) + (1 q

k

) f

2

(y

i

|

k

2

,

k

2

)

This leads to

E-step: Compute

Q(,

k

) = E[logf (y, z|)|y,

k

] =

n

i=1

_

z

k

i

logq f

1

(y

i

|

1

,

1

) + (1 z

k

i

)log(1 q) f

2

(y

i

|

2

,

2

)

_

2

M-step: compute

k+1

= argmax

Q(,

k

)

We can implement this E-M algorithm as it stands (see below), but a simplication is possible.

If we round each z

k

i

to zero or one, then Q(,

k

) looks like the sum of two normal log-likelihoods,

Q(,

k

) =

i: z

k

i

=0

log q f

1

(y

i

|

1

,

1

) +

i: z

k

i

=1

log(1 q) f

2

(y

i

|

2

,

2

)

where z

k

i

= 0 assigns some of the y

i

s to the left-hump likelihood and z

k

i

= 1 assigns the rest of

the y

i

s to the right-hump likelihood. This suggests a modied E-M algorithm as follows:

E-step: Compute z

k

i

rounded to 0 or 1 as above.

M-step: Estimate

n

k

1

=

_

n

i=1

z

k

i

n

k

2

=

_

n

i=1

(1 z

k

i

)

k+1

1

=

1

n

k

1

_

i

z

k

i

y

i

k+1

2

=

1

n

k

2

_

i

(1 z

k

i

)y

i

2

1

(k+1)

=

1

n

k

1

_

i

z

k

i

(y

i

k+1

1

)

2

2

1

(k+1)

=

1

n

k

2

_

i

(1 z

k

i

)(y

i

k+1

2

)

2

q

k+1

= n

k

1

/n

That is, each

i

or

2

i

is computed from the y

i

s assigned to the corresponding hump, and

q is the proportion of y

i

s assigned to the left hump.

Implementation

Point Estimates

Full E-M

Following is an implementation of the full E-M algorithm. The results are essentially identical

with the simpler E-M algorithm that comes from rounding the z

k

i

s.

The histogram suggests that (

1

= 55,

1

= 5,

2

= 80,

2

= 5, q = 0.5) might be a good set of

starting points. The following program stops when the log-likelihood is close enough.

library(MASS)

attach(faithful)

sqrt2PI <- sqrt(2*3.1415926535)

y <- waiting

### define th[1] = mu_1, th[2] = sigma_1,

### th[3] = mu_2, th[4] = sigma_2, th[5] = q

3

normf <- function(y, mu, sigma) { # normal density

1/(sqrt2PI*sigma)*exp(-(y-mu)2/(2*sigma2)) # (could use dnorm!)

}

lognormf <- function(y, mu, sigma) { # log of normal density

-(y-mu)2/(2*sigma2) - log(sqrt2PI*sigma) # (could use dnorm(...,log=T)!)

}

llg <- function(th) { # log-likelihood

sum( log(th[5]*normf(y, th[1], th[2]) +

(1-th[5])*normf(y, th[3], th[4])) )

}

NQ <- function(th){ #E-step: Negative Q

Ez <- th0[5]*normf(y,th0[1],th0[2]) /

(th0[5]*normf(y,th0[1],th0[2]) +

(1-th0[5])*normf(y,th0[3],th0[4]))

-sum( Ez*(log(th[5])+lognormf(y,th[1],th[2]))+

(1-Ez)*(log(1-th[5])+lognormf(y,th[3],th[4])) )

}

th0 <- c(60, 10, 90, 10, 0.5) # a good guess

# th0 <- c(100, 1, 30, 1, 0.9) # a wild guess

# th0 <- c(65, 10, 65, 10, 0.5) # leads to a local maximum/saddle point

i <- 0

while(1) {

print(c(i, th0, llg(th0)))

# th1 <- nlmin(NQ, th0)$x #Splus: M-step: Minimize -Q

th1 <- nlm(NQ,th0)$estimate #R: M-step: Minimize -Q

if( (i>1000) || (abs(llg(th0) - llg(th1))<(1e-9)*abs(llg(th0))))

break

th0 <- th1

i <- i+1

}

There is some evidence of multiple maxima and/or saddle points for this likelihood (as we would

expect!). Here is a summary of the runs:

4

Starting Values #iter

(60, 10, 90, 10, 0.5) 27 (54.62, 5.87, 80.09, 5.87, 0.36)

(100, 1, 30, 1, 0.9) 15 (80.09, 5.87, 54.61, 5.87, 0.64)

(65, 10, 65, 10, 0.5) 1 (70.90, 13.57, 70.89, 13.57, 0.50)

Simplied E-M

Here is the code for the simplied E-M algorithm that we get by rounding the zs:

th0 <- c(60, 10, 90, 10, 0.5) # a good guess

# th0 <- c(100, 1, 30, 1, 0.9) # a wild guess

# th0 <- c(65, 10, 65, 10, 0.5) # leads to a local maximum/saddle point

i <- 0

th1 <- th0

while(1) {

print(c(i,th0,llg(th0)))

Ez <- th0[5]*normf(y,th0[1],th0[2]) /

(th0[5]*normf(y,th0[1],th0[2]) +

(1-th0[5])*normf(y,th0[3],th0[4]))

z <- round(Ez)

n <- length(z)

n0 <- sum(z)

n1 <- n- n0

th1[1] <- sum(z*y)/n0

th1[3] <- sum((1-z)*y)/n1

th1[2] <- sqrt (sum(z*(y-th1[1])2)/n0)

th1[4] <- sqrt (sum((1-z)*(y-th1[3])2)/n1)

th1[5] <- n0/n

if( (i>1000) || (abs(llg(th0) - llg(th1))<(1e-9)*abs(llg(th0))))

break

th0 <- th1

i <- i+1

}

5

and here are the results of running the full and partial E-M algorithmfrom each of the given starting

values:

Starting Full E-M Simplied E-M

Values #iter

# iter

(60, 10, 90, 10, 0.5) 27 (54.62, 5.87, 80.09, 5.87, 0.36) 5 (54.75, 5.87, 80.28, 5.61, 0.37)

(100, 1, 30, 1, 0.9) 15 (80.09, 5.87, 54.61, 5.87, 0.64) 3 (80.21, 5.68, 54.63, 5.76, 0.64)

(65, 10, 65, 10, 0.5) 1 (70.90, 13.57, 70.89, 13.57, 0.50) 1 ( NaN, NaN, 70.90, 13.57, 0.00)

Not only does the simplied E-M algorithm run in fewer iterations (why??) but if you run the

two algorithms yourself you will see that each step of the simplied algorithm takes much less

time than a step of the full E-M algorithm (why??). Since the results of the two algorithms are

dierent, only one can be producing MLEs. . . Which one is it? Why??

Aside: In many practical optimization problems you will have a choice of two algorithms, say,

algorithm A that is guaranteed to get to the right answer quickly but may be unstable if the initial

guess is not good, or perhaps A runs very slowly, and algorithm B that is more stable and/or faster,

but is not guaranteed to get to the right answer. A common strategy in such situations is to run

algorithm B to get into the ballpark of the correct answer, and then switch to algorithmA to polish

things up. In some problems, Newton-Raphson plays the role of A (fast but unstable), and E-M

plays the role of B (slow but more stable); or perhaps E-M plays the role of A (slow but guaranteed

to get to the right answer), and an approximate E-M algorithm plays the role of B (faster but not

guaranteed to get to the right answer). In other situations, there may be other choices for A and B.

Standard Errors

To calculate standard errors for the MLEs (

1

= 80.09,

1

= 5.87,

2

= 54.62,

2

= 5.87, q =

0.64), we can use either the missing information principle or do numerical dierentiation.

To compute standard errors using the Missing Information Principle,

() = E[

2

log f (x|)|y, ] Var [

log f (x|)|y, ], ()

we know that we only need the main diagonal terms of each of the two matrices on the right.

For the second term on the right hand side of (), Var [

log f (x|)|y, ], we note that

logf (y, z|) =

n

i=1

_

z

i

y

i

1

2

1

, z

i

_

(y

i

1

)

2

3

1

1

_

, (1 z

i

)

y

i

2

2

2

, (1 z

i

)

_

(y

i

2

)

2

3

2

2

_

,

z

i

q

1 z

i

1 q

_

T

.

and to compute the variance of each of these terms we will need the fact that Var(z

i

|y

i

, ) =

E(z

i

|y

i

, )(1 E(z

i

|y

i

, )). Then the R/Splus code below calculates

Var[logf (y, z|)|y,

k

] = (0.3936528, 1.4835747, 0.5189960, 2.5355206, 58.9115844)

T

6

Ez <- th0[5]*normf(y, th0[1], th0[2]) /

(th0[5]*normf(y, th0[1], th0[2]) + (1-th0[5])*normf(y, th0[3], th0[4]))

Vz <- Ez*(1-Ez) # variance of binomial(1, p)

V <- c(sum(Vz*(y-th0[1])2/th0[2]4), sum(Vz*((y-th0[1])2/th0[2]3 - 1/th0[2])2),

sum(Vz*(y-th0[3])2/th0[4]4), sum(Vz*((y-th0[3])2/th0[4]3 - 1/th0[4])2),

sum(Vz*(1/th0[5]+1/(1-th0[5]))2))

For the rst term on the right-hand side of (), E[

2

log f (x|)|y, ], we note that the main

diagonal of

2

logf (y, z|) is

n

i=1

_

z

i

2

1

, z

i

_

3(y

i

1

)

2

4

1

2

1

_

,

1 z

i

2

2

, (1 z

i

)

_

3(y

i

2

)

2

4

2

2

2

_

,

z

i

q

2

+

1 z

i

(1 q)

2

_

T

.

The following code evaluates

E[

2

logf (y, z|)|y,

k

] = (2.846707, 5.692247, 5.050176, 10.101635, 1179.206112)

T

Ez <- th0[5]*normf(y, th0[1], th0[2]) /

(th0[5]*normf(y, th0[1], th0[2]) + (1-th0[5])*normf(y, th0[3], th0[4]))

Vz <- Ez*(1-Ez) # variance of binomial(1, p)

E <- c(sum(Ez)/th0[2]2,

sum(Ez*(3*(y-th0[1])2/(th0[2]4)-1/(th0[2]2))),

sum(1-Ez)/th0[4]2, sum((1-Ez)*(3*(y-th0[3])2/(th0[4]4)-1/(th0[4]2))),

sum(Ez)/th0[5]2+sum(1-Ez)/(1-th0[5])2 )

Now, calculating the standard errors using the missing information principle, S E(

) =

1/(E V),

and using the infomat.s routine, is easy:

SE1 <- sqrt(1/(E-V)) # SE from Missing Information Principle

source("infomat.s") # numerical differentiator...

SE2 <- sqrt(1/diag(info.mtx.fun(llg, th0, hinit=rep(.001,5))))

# SE from infomat.s

> SE1

[1] 0.63847878 0.48744705 0.46977981 0.36354950 0.02987679

> SE2

[1] 0.63842116 0.48739376 0.46974987 0.36349582 0.02987575

We see that the two methods, SE1 = S E

mip

(

), and SE2 = S E

numerical

(

), give almost the same

result.

7

You might also like

- Lab1 Soln PDFDocument24 pagesLab1 Soln PDFJuan Carlos MerchanNo ratings yet

- Solutions Chapter 9Document34 pagesSolutions Chapter 9reloadedmemoryNo ratings yet

- Tssol 11Document5 pagesTssol 11aset999No ratings yet

- Grade 3 - Unit 1 Increase and Decrease PatternDocument7 pagesGrade 3 - Unit 1 Increase and Decrease PatternKyo ToeyNo ratings yet

- Csir Life Sciences Fresh Instant NotesDocument4 pagesCsir Life Sciences Fresh Instant NotesAlps Ana33% (3)

- SubaruDocument7 pagesSubaruclaude terizlaNo ratings yet

- Example of MLE Computations, Using RDocument9 pagesExample of MLE Computations, Using Rnida pervaizNo ratings yet

- Lecture 2 Time ComplexityDocument17 pagesLecture 2 Time ComplexityKaheng ChongNo ratings yet

- R QuestionsDocument5 pagesR QuestionsNatiqNo ratings yet

- ME21B172 - Anuj - Jagannath - Said - Assignment - 1Document10 pagesME21B172 - Anuj - Jagannath - Said - Assignment - 1Anuj SaidNo ratings yet

- MAE653 Sp10 Lesson06Document9 pagesMAE653 Sp10 Lesson06Edwin MachacaNo ratings yet

- Random Number GenerationDocument42 pagesRandom Number GenerationNikhil AggarwalNo ratings yet

- Statistics 512 Notes 19Document12 pagesStatistics 512 Notes 19Sandeep SinghNo ratings yet

- Xavier University - Ateneo de Cagayan University College of Engineering Electronics Engineering DepartmentDocument10 pagesXavier University - Ateneo de Cagayan University College of Engineering Electronics Engineering DepartmentMor DepRzNo ratings yet

- Eviews UnderstandingDocument23 pagesEviews UnderstandingarmailgmNo ratings yet

- Lecture - 4 Box-Jenkins ModelDocument16 pagesLecture - 4 Box-Jenkins ModelNuur AhmedNo ratings yet

- EE 627: Term 2/2016 Homework 4 Due April 25, 2017: Solution: The Autoregressive Polynomial Can Be Factorized AsDocument4 pagesEE 627: Term 2/2016 Homework 4 Due April 25, 2017: Solution: The Autoregressive Polynomial Can Be Factorized AsluscNo ratings yet

- Monte Carlo SimulationDocument70 pagesMonte Carlo SimulationJano Lima100% (2)

- GMMDocument20 pagesGMMsaw4321No ratings yet

- Merton Jump - Diffusion.modelDocument7 pagesMerton Jump - Diffusion.modelIoannis MilasNo ratings yet

- Solución de La Asignación 1 Del Curso de R: 1. SolucionesDocument14 pagesSolución de La Asignación 1 Del Curso de R: 1. SolucionesjuanNo ratings yet

- Statistics 512 Notes 18Document10 pagesStatistics 512 Notes 18Sandeep SinghNo ratings yet

- Runge Kutta Lab With Pendulum ProblemDocument21 pagesRunge Kutta Lab With Pendulum ProblemJoshua CookNo ratings yet

- Edu 2008 Spring C SolutionsDocument117 pagesEdu 2008 Spring C SolutionswillhsladeNo ratings yet

- CIR CalibrationDocument8 pagesCIR CalibrationNicolas Lefevre-LaumonierNo ratings yet

- Algorithms - : SolutionsDocument11 pagesAlgorithms - : SolutionsDagnachewNo ratings yet

- Solutions To Chapters 15 & 16Document8 pagesSolutions To Chapters 15 & 16Lâm HàNo ratings yet

- Ec2 4Document40 pagesEc2 4masudul9islamNo ratings yet

- HW3 - Figure 5.5: Read Data From Table 3.4Document9 pagesHW3 - Figure 5.5: Read Data From Table 3.4梁嫚芳No ratings yet

- Kim Minjung Bioen 485 Lab 3Document8 pagesKim Minjung Bioen 485 Lab 3api-281990237No ratings yet

- Macroeconomic Theory 110 Assignment 1Document7 pagesMacroeconomic Theory 110 Assignment 1Greco S50No ratings yet

- Univariate Time Series Modelling and ForecastingDocument72 pagesUnivariate Time Series Modelling and Forecastingjamesburden100% (1)

- Elosery/matlabDocument44 pagesElosery/matlabOmar EzzatNo ratings yet

- Problem Set 4 Solution Numerical MethodsDocument6 pagesProblem Set 4 Solution Numerical MethodsAriyan JahanyarNo ratings yet

- GMM Said Crv10 TutorialDocument27 pagesGMM Said Crv10 TutorialShipra JainNo ratings yet

- CH 06Document52 pagesCH 06Ali AhmedNo ratings yet

- hw6 SolDocument7 pageshw6 SolKalai PremNo ratings yet

- The Royal Statistical Society 2003 Examinations: SolutionsDocument9 pagesThe Royal Statistical Society 2003 Examinations: SolutionsJeromeWeirNo ratings yet

- Lab 8Document13 pagesLab 8naimoonNo ratings yet

- C5.MDOF1 Compatibility Mode PDFDocument16 pagesC5.MDOF1 Compatibility Mode PDFArThur BangunNo ratings yet

- Exerc2 GabDocument7 pagesExerc2 Gabmonica0423No ratings yet

- Programming R Compatibility ModeDocument18 pagesProgramming R Compatibility ModeHamidah MidahNo ratings yet

- Chinese Reminder TheoremDocument7 pagesChinese Reminder Theoremevan lionheartNo ratings yet

- CH3. Dynamic Programming (DP) : - Mergesort, Quicksort, Binary SearchDocument22 pagesCH3. Dynamic Programming (DP) : - Mergesort, Quicksort, Binary SearchJungWoo ChoNo ratings yet

- Chapter 3 Laplace TransformDocument20 pagesChapter 3 Laplace TransformKathryn Jing LinNo ratings yet

- HW3 Solutions2printDocument6 pagesHW3 Solutions2printයෙදුම් KarunarathnaNo ratings yet

- NC Post 007Document20 pagesNC Post 007Xyed Haider BukhariNo ratings yet

- Probability Theory and Mathematical Statistics: Homework 5, Vitaliy PozdnyakovDocument12 pagesProbability Theory and Mathematical Statistics: Homework 5, Vitaliy PozdnyakovGarakhan TalibovNo ratings yet

- RT Solutions-22!01!2012 XIII VXY Paper II Code A SolDocument16 pagesRT Solutions-22!01!2012 XIII VXY Paper II Code A Solvishal110085No ratings yet

- D 57 W 14 MtsolDocument8 pagesD 57 W 14 MtsolInstantRamenNo ratings yet

- Assignment-4: %assignment Submitted by %name: Jeswin Tom Joseph %roll - No: CH17B052Document14 pagesAssignment-4: %assignment Submitted by %name: Jeswin Tom Joseph %roll - No: CH17B052Jeswin Tom Joseph ch17b052No ratings yet

- Optimal Binary Search TREES (Contd..) : P (K) +COST (L) +COST (R) +W (0, k-1) +W (K, N) .....Document55 pagesOptimal Binary Search TREES (Contd..) : P (K) +COST (L) +COST (R) +W (0, k-1) +W (K, N) .....Slim ShadyNo ratings yet

- Detailed Solutions A-07 JUNE 2003: I X X F F IDocument32 pagesDetailed Solutions A-07 JUNE 2003: I X X F F IAdzLinkBalaoangNo ratings yet

- 3.6. The Autocovariance-Generating Function: L) (Y, - M) N (IDocument4 pages3.6. The Autocovariance-Generating Function: L) (Y, - M) N (IAna ScaletNo ratings yet

- Chapter 9 Cox Proportional Hazards: Library (Survival)Document10 pagesChapter 9 Cox Proportional Hazards: Library (Survival)Yosef GUEVARA SALAMANCANo ratings yet

- Tema 7Document14 pagesTema 7alex LeizaNo ratings yet

- Homework Assignment 3 Homework Assignment 3Document10 pagesHomework Assignment 3 Homework Assignment 3Ido AkovNo ratings yet

- Kill MeDocument23 pagesKill MeEmilyNo ratings yet

- Practice MidtermDocument4 pagesPractice MidtermArka MitraNo ratings yet

- Dynamic ProgrammingDocument7 pagesDynamic ProgrammingGlenn GibbsNo ratings yet

- Analytic Geometry: Graphic Solutions Using Matlab LanguageFrom EverandAnalytic Geometry: Graphic Solutions Using Matlab LanguageNo ratings yet

- Typesetting MathematicsDocument1 pageTypesetting MathematicsDiego MorenoNo ratings yet

- An Experiment and A Model of The Rise of A Gas Bubble in A LiquidDocument10 pagesAn Experiment and A Model of The Rise of A Gas Bubble in A LiquidDiego MorenoNo ratings yet

- Con VolDocument3 pagesCon VolDiego MorenoNo ratings yet

- Función CaracterísticaDocument1 pageFunción CaracterísticaDiego MorenoNo ratings yet

- A P P E N D I X Powers of Ten and Scientific NotationDocument5 pagesA P P E N D I X Powers of Ten and Scientific NotationAnthony BensonNo ratings yet

- Practice - Test 2Document5 pagesPractice - Test 2Nguyễn QanhNo ratings yet

- Work Breakdown StructureDocument8 pagesWork Breakdown StructurerenshagullNo ratings yet

- CBC Heo (Wheel Loader) NC IIDocument58 pagesCBC Heo (Wheel Loader) NC IIJohn JamesNo ratings yet

- ..Product CatalogueDocument56 pages..Product Catalogue950 911No ratings yet

- How Chargers WorkDocument21 pagesHow Chargers WorkMuhammad Irfan RiazNo ratings yet

- PM Jobs Comp Ir RandDocument9 pagesPM Jobs Comp Ir Randandri putrantoNo ratings yet

- The Turning Circle of VehiclesDocument2 pagesThe Turning Circle of Vehiclesanon_170098985No ratings yet

- WWW Studocu Com in N 29646569 Sid 01682568219Document1 pageWWW Studocu Com in N 29646569 Sid 01682568219Nivetha SelvamuruganNo ratings yet

- Grade 8 Science - Second GradingDocument5 pagesGrade 8 Science - Second GradingMykelCañete0% (1)

- Plastic Properties HandbookDocument15 pagesPlastic Properties HandbookguilloteARGNo ratings yet

- 2201 IntGCSE (9-1) Subject Grade Boundaries V1Document4 pages2201 IntGCSE (9-1) Subject Grade Boundaries V1Fariha RahmanNo ratings yet

- Generation III Sonic Feeder Control System Manual 20576Document32 pagesGeneration III Sonic Feeder Control System Manual 20576julianmataNo ratings yet

- Job Satisfaction VariableDocument2 pagesJob Satisfaction VariableAnagha Pawar - 34No ratings yet

- The Scope and Method of Economics: © 2007 Prentice Hall Business Publishing Principles of Economics 8e by Case and FairDocument36 pagesThe Scope and Method of Economics: © 2007 Prentice Hall Business Publishing Principles of Economics 8e by Case and FairLangson phiriNo ratings yet

- Victor 2Document30 pagesVictor 2EmmanuelNo ratings yet

- Sample Learning Module As PatternDocument23 pagesSample Learning Module As PatternWilliam BulliganNo ratings yet

- Geotechnical PaperDocument16 pagesGeotechnical PaperTxavo HesiarenNo ratings yet

- Loctite 586 PDFDocument9 pagesLoctite 586 PDForihimieNo ratings yet

- Research FinalDocument55 pagesResearch Finalkieferdem071908No ratings yet

- Galgotias University Uttar Pradesh School of Computing Science & Engineering B.Tech. (CSE) 2018-19 Semester Wise Breakup of CoursesDocument2 pagesGalgotias University Uttar Pradesh School of Computing Science & Engineering B.Tech. (CSE) 2018-19 Semester Wise Breakup of CoursesRohit Singh BhatiNo ratings yet

- Alchemy of The HeartDocument7 pagesAlchemy of The HeartAbdul RahimNo ratings yet

- IT Level 4 COCDocument2 pagesIT Level 4 COCfikru tesefaye0% (1)

- Smart Door Lock System Using Face RecognitionDocument5 pagesSmart Door Lock System Using Face RecognitionIJRASETPublicationsNo ratings yet

- Carnegie Mellon Thesis RepositoryDocument4 pagesCarnegie Mellon Thesis Repositoryalisonreedphoenix100% (2)

- Dialectical Relationship Between Translation Theory and PracticeDocument5 pagesDialectical Relationship Between Translation Theory and PracticeEverything Under the sunNo ratings yet

- Vitamins - CyanocobalaminDocument12 pagesVitamins - CyanocobalaminK PrashasthaNo ratings yet