Confidence Intervals Continued: Statistics 512 Notes 4

Uploaded by Sandeep Singh

Statistics 512 Notes 4

Confidence Intervals Continued

Role of Asymptotic (Large Sample) Approximations in Statistics: It is often difficult to find the finite-sample sampling distribution of an estimator or statistic.

Review of Limiting Distributions from Probability

Types of Convergence:

Let $X_1, \ldots, X_n, \ldots$ be a sequence of random variables and let $X$ be another random variable. Let $F_n$ denote the CDF of $X_n$ and let $F$ denote the CDF of $X$.

1. $X_n$ converges to $X$ in probability, denoted $X_n \xrightarrow{P} X$, if for every $\epsilon > 0$, $P(|X_n - X| > \epsilon) \to 0$ as $n \to \infty$.

2. $X_n$ converges to $X$ in distribution, denoted $X_n \xrightarrow{D} X$, if $F_n(t) \to F(t)$ as $n \to \infty$ at all $t$ for which $F$ is continuous.

Weak Law of Large Numbers

Let $X_1, \ldots, X_n, \ldots$ be a sequence of iid random variables having mean $\mu$ and variance $\sigma^2 < \infty$. Let $\bar{X}_n = \frac{\sum_{i=1}^n X_i}{n}$. Then $\bar{X}_n \xrightarrow{P} \mu$.

Interpretation: The distribution of $\bar{X}_n$ becomes more and more concentrated around $\mu$ as $n$ gets large.

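Proof: Using Chebyshev's inequality, for every $\epsilon > 0$,

$$P(|\bar{X}_n - \mu| > \epsilon) \le \frac{\mathrm{Var}(\bar{X}_n)}{\epsilon^2} = \frac{\sigma^2}{n\epsilon^2},$$

which tends to 0 as $n \to \infty$.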
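The Chebyshev argument can be checked numerically. The sketch below is an illustration only: it assumes Uniform(0,1) data (so $\mu = 1/2$ and $\sigma^2 = 1/12$), and the function names, seed and replication count are choices made for this example rather than anything from the notes. It estimates $P(|\bar{X}_n - \mu| > \epsilon)$ by simulation and prints it next to the bound $\sigma^2/(n\epsilon^2)$.

```python
import random


def tail_probability(n, eps, reps=1000, seed=0):
    """Monte Carlo estimate of P(|mean of n Uniform(0,1) draws - 0.5| > eps)."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(reps):
        xbar = sum(rng.random() for _ in range(n)) / n
        if abs(xbar - 0.5) > eps:
            exceed += 1
    return exceed / reps


def chebyshev_bound(n, eps, variance=1 / 12):
    """Chebyshev upper bound sigma^2 / (n * eps^2), capped at 1."""
    return min(1.0, variance / (n * eps ** 2))


# Assumed illustration values: eps = 0.05 and three sample sizes.
eps = 0.05
for n in (10, 100, 1000):
    print(n, tail_probability(n, eps), chebyshev_bound(n, eps))
```

Both columns shrink as $n$ grows, and the simulated probability sits below the bound. Chebyshev's inequality is usually far from tight, which is fine for the proof because only the limit matters.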

Central Limit Theorem

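Let $X_1, \ldots, X_n, \ldots$ be a sequence of iid random variables having mean $\mu$ and variance $\sigma^2 < \infty$. Then

$$Z_n = \frac{\bar{X}_n - \mu}{\sqrt{\mathrm{Var}(\bar{X}_n)}} = \frac{\sqrt{n}(\bar{X}_n - \mu)}{\sigma} \xrightarrow{D} Z$$

where $Z \sim N(0,1)$. In other words,

$$\lim_{n \to \infty} P(Z_n \le z) = \Phi(z) = \int_{-\infty}^{z} \frac{1}{\sqrt{2\pi}} e^{-x^2/2}\,dx.$$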

Interpretation: Probability statements about $\bar{X}_n$ can be approximated using a normal distribution. It's the probability statements that we are approximating, not the random variable itself.
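To make "approximating probability statements" concrete, the following sketch compares a simulated value of $P(Z_n \le z)$ with $\Phi(z)$. It assumes Exponential(1) data (so $\mu = \sigma = 1$), a skewed distribution picked only for illustration. The helper names, seed and replication count are likewise assumptions of this example.

```python
import math
import random
from statistics import NormalDist


def standardized_mean(n, rng):
    """Z_n = sqrt(n) * (mean - mu) / sigma for n Exponential(1) draws (mu = sigma = 1)."""
    xbar = sum(rng.expovariate(1.0) for _ in range(n)) / n
    return math.sqrt(n) * (xbar - 1.0) / 1.0


def empirical_cdf_at(z, n, reps=3000, seed=1):
    """Fraction of simulated Z_n values that are <= z."""
    rng = random.Random(seed)
    hits = sum(standardized_mean(n, rng) <= z for _ in range(reps))
    return hits / reps


# Compare the simulated probability with the normal CDF at a few points.
for z in (-1.0, 0.0, 1.0):
    print(z, empirical_cdf_at(z, n=100), NormalDist().cdf(z))
```

The individual draws are far from normal, yet the two columns should be close. That is the sense in which the probability statements, not the random variables, are approximated.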

Some useful further convergence properties:

Slutsky's Theorem (Theorem 4.3.5): If $X_n \xrightarrow{D} X$, $A_n \xrightarrow{P} a$ and $B_n \xrightarrow{P} b$, then $A_n + B_n X_n \xrightarrow{D} a + bX$.

Continuous Mapping Theorem (Theorem 4.3.4):

Suppose $X_n$ converges to $X$ in distribution and $g$ is a continuous function on the support of $X$. Then $g(X_n)$ converges to $g(X)$ in distribution.

Application of these convergence properties:

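Let $X_1, \ldots, X_n, \ldots$ be a sequence of iid random variables having mean $\mu$, variance $\sigma^2 < \infty$ and $E(X_i^4) < \infty$. Let $\bar{X}_n = \frac{\sum_{i=1}^n X_i}{n}$ and $S_n^2 = \frac{1}{n}\sum_{i=1}^n (X_i - \bar{X}_n)^2$. Then

$$T_n = \frac{\bar{X}_n - \mu}{S_n/\sqrt{n}} \xrightarrow{D} Z$$

where $Z \sim N(0,1)$.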

Proof (only for those interested):

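We can write

$$T_n = \frac{(\bar{X}_n - \mu)/(\sigma/\sqrt{n})}{S_n/\sigma}.$$

Using the Central Limit Theorem, which says $\frac{\bar{X}_n - \mu}{\sigma/\sqrt{n}} \xrightarrow{D} Z$, and Slutsky's Theorem, to prove that $T_n \xrightarrow{D} Z$ it is sufficient to prove that $\frac{S_n}{\sigma} \xrightarrow{P} 1$.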

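We can write

$$S_n^2 = \frac{\sum_{i=1}^n (X_i - \bar{X}_n)^2}{n} = \frac{\sum_{i=1}^n X_i^2}{n} - 2\bar{X}_n \frac{\sum_{i=1}^n X_i}{n} + \bar{X}_n^2 = \frac{\sum_{i=1}^n X_i^2}{n} - \bar{X}_n^2.$$

By the weak law of large numbers, applied to the $X_i$ and to the $X_i^2$ (which have finite variance because $E(X_i^4) < \infty$),

$$S_n^2 \xrightarrow{P} E(X_i^2) - [E(X_i)]^2 = \sigma^2,$$

or equivalently $\frac{S_n^2}{\sigma^2} \xrightarrow{P} 1$.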

Back to Confidence Intervals

CI for mean of iid sample $X_1, \ldots, X_n$ from unknown distribution with finite variance and $E(X_i^4) < \infty$:

By the application of the central limit theorem above,

$$T_n = \frac{\bar{X}_n - \mu}{S_n/\sqrt{n}} \xrightarrow{D} Z.$$

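Thus, for large $n$,

$$\begin{aligned}
1 - \alpha &\approx P\left(-z_{1-\alpha/2} \le \frac{\bar{X}_n - \mu}{S_n/\sqrt{n}} \le z_{1-\alpha/2}\right) \\
&= P\left(-z_{1-\alpha/2}\frac{S_n}{\sqrt{n}} - \bar{X}_n \le -\mu \le z_{1-\alpha/2}\frac{S_n}{\sqrt{n}} - \bar{X}_n\right) \\
&= P\left(\bar{X}_n - z_{1-\alpha/2}\frac{S_n}{\sqrt{n}} \le \mu \le \bar{X}_n + z_{1-\alpha/2}\frac{S_n}{\sqrt{n}}\right).
\end{aligned}$$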

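Thus, $\bar{X}_n \pm z_{1-\alpha/2}\frac{S_n}{\sqrt{n}}$ is an approximate $(1-\alpha)$ confidence interval, where $z_{1-\alpha/2}$ is the $1-\alpha/2$ quantile of the standard normal distribution (1.96 for a 95% interval).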

How large does $n$ need to be for this to be a good approximation? Traditionally textbooks say $n > 30$. We'll look at some simulation results later in the course.
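A small coverage experiment gives a feel for the question. This is a sketch under stated assumptions, not the course's own simulation: it uses Exponential(1) data (true mean 1), and the sample sizes, seed and replication count are picked purely for illustration. The interval is $\bar{X}_n \pm z_{1-\alpha/2} S_n/\sqrt{n}$ with $S_n^2$ computed with divisor $n$, as defined in these notes.

```python
import math
import random
from statistics import NormalDist


def large_sample_interval(data, level=0.95):
    """Approximate CI for the mean: xbar +/- z * S_n / sqrt(n), with S_n^2 using divisor n."""
    n = len(data)
    xbar = sum(data) / n
    s2 = sum((x - xbar) ** 2 for x in data) / n
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    half = z * math.sqrt(s2 / n)
    return xbar - half, xbar + half


def coverage(n, reps=3000, seed=2):
    """Fraction of simulated intervals that contain the true mean of Exponential(1), i.e. 1."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        lo, hi = large_sample_interval([rng.expovariate(1.0) for _ in range(n)])
        hits += lo <= 1.0 <= hi
    return hits / reps


# Assumed sample sizes for illustration.
for n in (5, 30, 200):
    print(n, coverage(n))
```

For skewed data like this, the simulated coverage typically falls short of 95% at small $n$ and approaches it as $n$ grows, so a rule such as $n > 30$ is a rough guide and depends on the underlying distribution.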

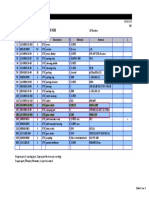

Application: A food-processing company is considering marketing a new spice mix for Creole and Cajun cooking. They took a simple random sample of 200 consumers and found that 37 would purchase such a product. Find an approximate 95% confidence interval for $p$, the true proportion of buyers.

Let

$$X_i = \begin{cases} 1 & \text{if the } i\text{th consumer would buy the product} \\ 0 & \text{if the } i\text{th consumer would not buy the product.} \end{cases}$$

If the population is large (say 50 times larger than the sample size), a simple random sample can be regarded as a sample with replacement. Then a reasonable model is that $X_1, \ldots, X_{200}$ are iid Bernoulli($p$). We have

$$\bar{X}_n = \frac{37}{200} = 0.185,$$

$$S_n^2 = \frac{\sum_{i=1}^{200} (X_i - \bar{X}_n)^2}{n} = \frac{\sum_{i=1}^{200} X_i^2}{200} - \bar{X}_n^2 = 0.185 - (0.185)^2 = 0.151.$$

Thus, an approximate 95% confidence interval for $p$ is

$$\bar{X}_n \pm z_{1-\alpha/2}\frac{S_n}{\sqrt{n}} = 0.185 \pm 1.96\sqrt{\frac{0.151}{200}} = (0.131,\ 0.239).$$
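The arithmetic in this example can be reproduced from the raw 0/1 responses. The sketch below is an illustration: `mean_interval` is a hypothetical helper name, and the only data assumed are the 37 "would buy" and 163 "would not buy" answers from the example. It uses $S_n^2 = \sum X_i^2/n - \bar{X}_n^2$ and the standard normal quantile from Python's `statistics.NormalDist`.

```python
import math
from statistics import NormalDist


def mean_interval(data, level=0.95):
    """Return (xbar, S_n^2, (lower, upper)) for the large-sample CI of the mean."""
    n = len(data)
    xbar = sum(data) / n
    s2 = sum(x * x for x in data) / n - xbar ** 2   # divisor n, as in the notes
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)   # about 1.96 for level = 0.95
    half = z * math.sqrt(s2 / n)
    return xbar, s2, (xbar - half, xbar + half)


# 37 of the 200 sampled consumers would buy the product.
responses = [1] * 37 + [0] * 163
xbar, s2, (lo, hi) = mean_interval(responses)
print(round(xbar, 3), round(s2, 3), round(lo, 3), round(hi, 3))
```

Rounded to three decimals, this agrees with the values 0.185, 0.151 and (0.131, 0.239) computed by hand above.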

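Note that for an iid Bernoulli($p$) sample, we can write $S_n^2$ in a simple way. In general,

$$\begin{aligned}
S_n^2 &= \frac{\sum_{i=1}^n (X_i - \bar{X}_n)^2}{n} = \frac{\sum_{i=1}^n (X_i^2 - 2X_i\bar{X}_n + \bar{X}_n^2)}{n} \\
&= \frac{\sum_{i=1}^n X_i^2}{n} - \frac{2n\bar{X}_n^2}{n} + \frac{n\bar{X}_n^2}{n} = \frac{\sum_{i=1}^n X_i^2}{n} - \bar{X}_n^2.
\end{aligned}$$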

For an iid Bernoulli sample, let $\hat{p}_n = \bar{X}_n$; $\hat{p}_n$ is a natural point estimator of $p$ for the Bernoulli. Note that for a Bernoulli sample, $X_i^2 = X_i$. Thus, for a Bernoulli sample $S_n^2 = \hat{p}_n - \hat{p}_n^2$, and an approximate 95% confidence interval for $p$ is

$$\hat{p}_n \pm 1.96\sqrt{\frac{\hat{p}_n - \hat{p}_n^2}{n}}.$$
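As a quick check of the Bernoulli shortcut, the sketch below compares the general formula for $S_n^2$ with $\hat{p}_n - \hat{p}_n^2$ on simulated 0/1 data, then evaluates the closed-form interval. The function name `proportion_interval`, the success probability 0.3, the sample size 500 and the seed are all assumptions made for this illustration.

```python
import math
import random


def proportion_interval(successes, n, z=1.96):
    """Approximate 95% CI for p: p_hat +/- z * sqrt((p_hat - p_hat^2) / n)."""
    p_hat = successes / n
    half = z * math.sqrt((p_hat - p_hat ** 2) / n)
    return p_hat - half, p_hat + half


# Simulated Bernoulli data (assumed p = 0.3, n = 500) to check S_n^2 = p_hat - p_hat^2.
rng = random.Random(3)
sample = [1 if rng.random() < 0.3 else 0 for _ in range(500)]
n = len(sample)
p_hat = sum(sample) / n
s2_general = sum((x - p_hat) ** 2 for x in sample) / n
s2_shortcut = p_hat - p_hat ** 2
print(math.isclose(s2_general, s2_shortcut, abs_tol=1e-12))

# The spice-mix numbers from the application: 37 buyers out of 200.
print(proportion_interval(37, 200))
```

Because the shortcut needs only the count of successes and $n$, the interval can be computed without storing the individual responses.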

Choosing Between Confidence Intervals

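Let $X_1, \ldots, X_n$ be iid $N(\mu, \sigma^2)$ where $\sigma^2$ is known. Suppose we want a 95% confidence interval for $\mu$. Then for any $a$ and $b$ that satisfy $P(a \le Z \le b) = 0.95$,

$$\left(\bar{X} - b\frac{\sigma}{\sqrt{n}},\ \bar{X} - a\frac{\sigma}{\sqrt{n}}\right)$$

is a 95% confidence interval because:

$$\begin{aligned}
0.95 &= P\left(a \le \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \le b\right) \\
&= P\left(a\frac{\sigma}{\sqrt{n}} - \bar{X} \le -\mu \le b\frac{\sigma}{\sqrt{n}} - \bar{X}\right) \\
&= P\left(\bar{X} - b\frac{\sigma}{\sqrt{n}} \le \mu \le \bar{X} - a\frac{\sigma}{\sqrt{n}}\right).
\end{aligned}$$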

For example, we could choose (1) $a=-1.96$, $b=1.96$ [$P(Z<a)=0.025$, $P(Z>b)=0.025$; the choice we have used before]; (2) $a=-2.05$, $b=1.88$ [$P(Z<a)=0.02$, $P(Z>b)=0.03$]; (3) $a=-1.75$, $b=2.33$ [$P(Z<a)=0.04$, $P(Z>b)=0.01$].

Which is the best 95% confidence interval?

Reasonable criterion: Expected length of the confidence interval. Among all 95% confidence intervals, choose the one that is expected to have the smallest length, since it will be the most informative.

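Length of the confidence interval $= \left(\bar{X} - a\frac{\sigma}{\sqrt{n}}\right) - \left(\bar{X} - b\frac{\sigma}{\sqrt{n}}\right) = (b-a)\frac{\sigma}{\sqrt{n}}$; thus we want to choose the confidence interval with the smallest value of $b-a$.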

The value of $b-a$ for the three confidence intervals above is (1) $a=-1.96$, $b=1.96$, $(b-a)=3.92$; (2) $a=-2.05$, $b=1.88$, $(b-a)=3.93$; (3) $a=-1.75$, $b=2.33$, $(b-a)=4.08$.

Of these three, the best 95% confidence interval is (1), with $a=-1.96$, $b=1.96$. In fact, it can be shown that for this problem the best choice among all $a$ and $b$ satisfying $P(a \le Z \le b) = 0.95$ is $a=-1.96$, $b=1.96$.
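The comparison of $b-a$ can be explored numerically. The sketch below is a numerical illustration rather than a proof: it assumes a grid search over how the total 5% tail probability is split between the two tails, and the function names and grid size are choices made for this example. The cutoffs come from the standard normal quantile function in Python's `statistics.NormalDist`.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal


def cutoffs(lower_tail, level=0.95):
    """Return (a, b) with P(Z < a) = lower_tail and P(a <= Z <= b) = level."""
    a = Z.inv_cdf(lower_tail)
    b = Z.inv_cdf(lower_tail + level)
    return a, b


def length(lower_tail, level=0.95):
    """b - a, which is proportional to the interval length (b - a) * sigma / sqrt(n)."""
    a, b = cutoffs(lower_tail, level)
    return b - a


def best_lower_tail(level=0.95, steps=499):
    """Grid search over lower-tail probabilities for the smallest b - a."""
    total_tail = 1 - level
    grid = [total_tail * k / (steps + 1) for k in range(1, steps + 1)]
    return min(grid, key=lambda t: length(t, level))


# The three choices from the notes: lower-tail probabilities 0.025, 0.02 and 0.04.
for t in (0.025, 0.02, 0.04):
    a, b = cutoffs(t)
    print(t, round(a, 2), round(b, 2), round(b - a, 2))

print(best_lower_tail())
```

The three printed lengths round to 3.92, 3.93 and 4.08, matching the values above, and the grid search settles on an equal split of 0.025 in each tail. This is consistent with the symmetric choice being the shortest.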
