
IEEE TRANSACTIONS ON NUCLEAR SCIENCE, VOL. 44, NO. 3, JUNE 1997

Importance of Input Data Normalization for the Application of Neural Networks to Complex Industrial Problems

J. Sola and J. Sevilla

Abstract: Recent advances in artificial intelligence have allowed the application of such technologies to real industrial problems. We have studied the application of backpropagation neural networks to several problems of estimation and identification in nuclear power plants. These problems have often been reported to be very time-consuming in the training phase. Among the different approaches suggested to ease the backpropagation training process, input data pretreatment has been pointed out, although no specific procedure has been proposed. We have found that input data normalization with certain criteria, prior to the training process, is crucial to obtain good results as well as to speed up the calculations significantly. This paper shows how data normalization affects the performance error of parameter estimators trained to predict the value of several variables of a PWR nuclear power plant. The criteria needed to accomplish such data normalization are also described.

Index Terms: Network learning, neural networks, nuclear power plants, power prediction system, reactor pressure, transients.

TABLE I TRANSIENTS SIMULATED TO OBTAIN DATA

I. INTRODUCTION

Recent advances in the field of artificial intelligence have allowed the application of a series of new technologies to actual industrial problems [1]. In particular, neural networks, in their different topologies, have been applied to solve problems from very different fields [2]. In nuclear power plants, the application of neural network technology to different problems of identification [3], [4] and estimation [5], [6] has been reported. In the use of backpropagation networks for these types of problems, several authors report very long training times and propose different approaches to solve the problem [7]–[10].

When using backpropagation networks, depending on the activation function of the neurons, it will be necessary to perform some pretreatment of the data used for training. Supposing that logistic sigmoids are used, the interval of variation of the output variables has to be accommodated to the maximum output range of the sigmoid, that is, from zero to one. Although such a form of normalization has been used [11], no specific procedure is clearly established in the literature.

An adequate normalization, not only of the network output variables but also of the input ones, prior to the training process is very important to obtain good results and to reduce the calculation time significantly. In the work presented in this paper, we have systematically studied the effect of different data normalization procedures on the performance of estimation networks trained with these data. The networks used have been designed to estimate the value of different variables of a pressurized water reactor (PWR)-type nuclear power plant.

II. SYSTEM STUDIED

The results presented here are part of a wider project aiming to obtain virtual measurements based on neural networks for PWR nuclear power plants [12]. In particular, two cases have been studied: first, an estimator of pressurizer pressure and, second, an estimator of the power transferred between the reactor coolant system and the main vapor system. In both cases, supervised training with multilayer perceptrons is used [2].

The data needed to train the networks and to evaluate their performance were obtained from simulations run with the Westinghouse Nuclear Plant Analyzer, based on the code TREAT. The virtual measurement system was intended to work properly (in a first stage) around nominal power; thus ten transients were selected, all starting in a normal state at 100% power, and simulated. These transients are summarized in Table I. The first 5 min of each transient, with data recorded every second, provided more than 3000 points representing plant states that were used for the development of the networks.

The network studies have been carried out with the neural network simulator SINAPSIS. This PC-based simulator has been developed at the Universidad Pública de Navarra [13]. In both cases, three-layer perceptrons have been used and trained with an adaptive backpropagation algorithm [14]. The

Manuscript received July 15, 1996; revised December 11, 1996 and February 27, 1997. The authors are with the Department of Electrical and Electronic Engineering, Universidad Pública de Navarra, 31006 Pamplona, Spain. Publisher Item Identifier S 0018-9499(97)04248-2.

0018-9499/97$10.00 © 1997 IEEE

SOLA AND SEVILLA: IMPORTANCE OF INPUT DATA NORMALIZATION


TABLE II INPUT VARIABLES USED FOR THE PRESSURE ESTIMATOR

TABLE III SUMMARY OF THE UNITS AND NOMINAL VALUES AT 100% POWER FOR THE VARIABLES OF TABLE II

neuron activation function is, in all cases, a unity-slope logistic sigmoid.

The input variables were a small number of typical plant variables (or combinations of them). In the case of the pressurizer pressure estimator, six input variables have been used (see Table II), while in the power transfer case eight variables were needed.

The influence of network architecture has been studied by evaluating the performance of a wide range of possibilities. The results showed that the optimum architecture varies with the data pretreatment procedure. For the best normalization, relatively small networks perform as well as more complex ones. To avoid confusion in the discussion, all the results presented in this paper have been obtained with the same network architecture for each of the cases studied, namely 6-5-1 for the pressure estimator and 8-7-1 for the power transfer estimator. In both cases the training set consisted of 100 points randomly selected out of the roughly 3000 available.

III. RESULTS

When facing the original data for the first time, one realizes that they are expressed in very different units, not even belonging to the same system of units. Not only are the units different, but the orders of magnitude of their absolute values are also very different, ranging from 2.6 × 10^[…] kW for the nuclear power to the turbine demand, which is expressed normalized to unity. Table III presents a summary of the units and nominal values (at 100% power) of the variables of Table II, together with their maximum variation range over the transients considered. Numerical values of the order of 10^[…] generate extremely large numbers when calculating the network output, exceeding the capacity of ordinary computers. It is therefore essential to perform some kind of input data pretreatment.

To investigate the effect of input data pretreatment, we first selected one training set (of 100 randomly selected points). From this original set we created five different sets with different normalizations of the same data. These

normalizations are linear transformations of the original data. The transformations used are shown in Table IV. All the transformations other than the one labeled 5 are not normalizations strictly speaking; they are artificially introduced in order to study systematically the effect of the input data variation range on the output performance of the network.

The transformations labeled 1, 2, and 4 (see Table IV) are accomplished by multiplying the input data by specific factors for each variable. In all cases these factors are well below unity in order to obtain absolute numbers well suited for computation. In the first normalization (second column of Table IV), the factors are 10^[…] and 10^[…], so that the big differences in the absolute values of the original data are almost kept. Comparing this case with the others shows whether the differences in the absolute values matter as much for network performance as they do for human interpretation (these values originate from the unit systems selected by the plant design engineers).

Normalizations 2 and 3 are introduced to evaluate the effect of equalizing the absolute values of the variables. With transformation 2, the original difference of 10^[…] between the highest and the lowest data is reduced to 10^[…], and with transformation 4 to 10^[…]. Transformation 3 is performed by dividing each datum by the nominal value of the corresponding variable at 100% nuclear power. The absolute variation obtained spans three orders of magnitude, as in transformation 4, but is reached through a different procedure. This allows us to study whether variations in network performance are due only to the variability of the absolute values of the data.

Finally, normalization 5 is intended to expand the interval of variation of the input data to the interval of variation of the input-layer neurons. As these are unity-slope sigmoids, this interval runs from zero to one. Nevertheless, the maximum output range used in normalization 5 (see Table IV) runs from 0.05 to 0.95.
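
A linear transformation of the kind described for normalization 5 might be sketched as follows, assuming it maps each variable's assigned extreme values onto the interval (0.05, 0.95). The function name `normalize_to_range`, the use of NumPy, and the sample bounds and readings are illustrative assumptions, not values taken from Table IV.

```python
import numpy as np


def normalize_to_range(x, x_min, x_max, lo=0.05, hi=0.95):
    """Linearly map each variable from [x_min, x_max] onto [lo, hi]."""
    x = np.asarray(x, dtype=float)
    x_min = np.asarray(x_min, dtype=float)
    x_max = np.asarray(x_max, dtype=float)
    span = x_max - x_min
    if np.any(span <= 0):
        raise ValueError("each x_max must be strictly greater than x_min")
    # Same linear rule for every variable, with per-variable bounds.
    return lo + (hi - lo) * (x - x_min) / span


# Hypothetical two-variable example: a pressure in kg/cm^2 and a signal
# already expressed relative to unity. The bounds are assumed values.
x_min = [60.0, 0.0]
x_max = [180.0, 1.2]
samples = np.array([[60.0, 0.0],     # both variables at their minimum
                    [120.0, 0.6],    # both variables at mid-range
                    [180.0, 1.2]])   # both variables at their maximum
scaled = normalize_to_range(samples, x_min, x_max)
print(scaled)
```
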
This output range has been selected after investigating the performance of three different intervals: (0.1, 0.9), (0.05, 0.95),


TABLE IV DIFFERENT DATA TRANSFORMATIONS USED AS NORMALIZATIONS

Fig. 1. Average mean error of the pressure estimation network for different normalizations.

Fig. 2. Average mean error of the transferred power estimation network for different normalizations.

and (0, 1). The final average mean errors obtained are very similar in all cases (differences below 0.05%), while the one chosen shows slightly better performance in the initial stages of the training phase (up to 5 × 10^[…] iterations).

In all cases the output variable is scaled following the criteria for normalization 5, that is, $P' = 1.285\,(P/157.1 - 0.4)$. Remember, from Table III, that $P$ is expressed in kg/cm², and 157.1 is the value at 100% power. In the transients under consideration, this variable never falls below 40% of nominal; this is included by subtracting 0.4. The factor 1.285 is the scaling factor that assures that variations in $P$ become almost unity in $P'$. This procedure assures that all values can be provided by the output neuron (unity-slope sigmoid).

With these data, the same network (6-5-1) was trained in an identical manner. Its performance was quantified by averaging the differences between the network output and the real output over all available data, that is, more than 3000 points (including the 100 used for training). This mean error (expressed in % of the nominal value, i.e., 157.15 kg/cm²) is plotted in Fig. 1 for the normalizations considered. For each of the five cases, the mean error after 10, 20, 100, and 200 thousand training iterations is represented.

In Fig. 2 the results obtained from a similar study performed with the network designed to estimate the power transferred

between the reactor coolant system (RCS) and the main vapor system are presented. In both cases it can be seen that the error gradually decreases as the normalization reduces the differences between the variation ranges of the different variables. It is interesting to note that when the variables span the same orders of magnitude (as happens with normalizations 3 and 4), the results are better for the case with fewer variables outside the most common order of magnitude. From the worst to the best case, the results improve by one order of magnitude.

Most striking is the fact that the errors do not decrease steadily with the number of iterations; on the contrary, they reach a certain minimum and start increasing again as the iterations grow. This can be appreciated more clearly in Fig. 3, where the same mean error data are plotted against the number of iterations, with a different curve for each normalization. The worsening of the results as training progresses is more evident for normalizations 1 and 3.

IV. DISCUSSION

The results shown above can be summarized in two relevant features. On the one hand, network performance (number of training iterations and final error attained) is enhanced as the input variable ranges are equalized by normalization. On the other


hand, when the number of iterations exceeds a certain value, the mean square error tends to rise again; this value depends on the normalization method.

A possible explanation for the second observation can be found in the well-known effect of overtraining [15], which means that the network tends to learn the training set by heart. Thus, as the training data are fitted more closely, its performance on other data starts to worsen. This overtraining process can be expected when the training set size is smaller than (approximately) ten times the number of connections of the network, as is the case in the problems under study. Results obtained by training the networks with training sets of different sizes strongly support this idea.

To understand why input data normalization can enhance network performance, the first point to remember is that the neural network simulator used initializes the weights to random values in the (−1, 1) interval. The slope of the sigmoids used as activation functions is also unity. On the other hand, all the normalizations considered are linear scale transformations, and thus the minimum to which the network converges should be the same in all cases, only shifted by the same linear transformation. Therefore, the initial state of the backpropagation algorithm is always a point in the vicinity of the origin of the coordinate space, while the distance to the desired minimum is drastically changed by the scales considered in each case. So, scales that compress the whole search space into a unit hypercube reduce the distance to be covered, iteration by iteration, by the backpropagation algorithm. Furthermore, if the scales are very dissimilar for the different values, the bigger ones will contribute more to the output error, and so the error reduction algorithm will focus on the variables with higher values, neglecting the information from the small-valued variables. We have tried to test this idea by tracking the weight shift as backpropagation proceeds in different cases. The results obtained support this explanation.

The previous discussion indicates that the best situation is reached when all input variables are of the same order of magnitude, especially if they are of order one, as happens with the best normalization presented in this study. However, to perform this transformation one has to define the absolute maximum and minimum values of each input variable. An exact assignment of extreme values can be difficult in many real problems. Nevertheless, no special accuracy is needed to obtain a good normalization: variations of 20 or 30% in the extreme values do not significantly alter the results obtained. We think that in most cases there will be a heuristic argument to assign extreme values within a 30% interval. For example, for the pressure of a PWR plant, the nominal value is 157.15 kg/cm², and the design maximum pressure is around 180 kg/cm². So, any value between 160 and 200 can be taken as the maximum to perform the normalization with approximately the same results.

Fig. 3. Average mean error evolution for different numbers of training iterations (pressure estimation network).

V. CONCLUSIONS

A systematic study of the effect of input data pretreatment prior to its use for neural network training has been carried out. The study has been performed with two neural networks developed to estimate two PWR nuclear power plant variables. We can conclude that an adequate normalization of input data prior to network training yields two clear advantages. Estimation errors can be reduced by a factor of between five and ten. The calculation time needed in the training process to obtain such results is reduced by one order of magnitude. An adequate normalization is a linear scale conversion that assigns the same absolute values to the same relative variations.
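
The scale argument made in the discussion can be illustrated numerically. The sketch below, assuming a single unity-slope logistic neuron with hand-picked weights inside the (−1, 1) initialization interval, compares how much each input contributes to the weighted sum, and how steep the sigmoid is at that point, before and after rescaling. The input magnitudes (one of order 10^6, one of order unity), the rescaled values, the weights, and the function names are illustrative assumptions rather than data from the study, and the example shows a related saturation effect, not the training runs themselves.

```python
import numpy as np


def sigmoid(z):
    """Unity-slope logistic sigmoid."""
    return 1.0 / (1.0 + np.exp(-z))


def contribution_shares(x, w):
    """Fraction of the total |w_i * x_i| contributed by each input."""
    terms = np.abs(np.asarray(w, dtype=float) * np.asarray(x, dtype=float))
    return terms / terms.sum()


def sigmoid_slope(x, w):
    """Derivative of the sigmoid at the neuron's weighted input sum."""
    s = sigmoid(np.dot(w, x))
    return s * (1.0 - s)


# Assumed weights inside the (-1, 1) initialization interval.
w = np.array([0.5, -0.5])
# Hypothetical raw inputs: one of order 1e6, one of order unity.
raw = np.array([2.0e6, 0.8])
# The same two inputs after an assumed rescaling to order one.
scaled = np.array([0.77, 0.8])

raw_shares = contribution_shares(raw, w)
scaled_shares = contribution_shares(scaled, w)
raw_slope = sigmoid_slope(raw, w)
scaled_slope = sigmoid_slope(scaled, w)

print("shares (raw):   ", raw_shares)
print("shares (scaled):", scaled_shares)
print("sigmoid slope (raw, scaled):", raw_slope, scaled_slope)
```

With the raw values, the large input accounts for essentially the whole weighted sum and the sigmoid is saturated, so its slope (and with it the backpropagated correction) vanishes; with inputs of order one, both variables contribute comparably and the slope stays close to its maximum of 0.25.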

ACKNOWLEDGMENT

The authors acknowledge Westinghouse Spain, and especially C. Pulido, for assistance with TREAT-NPA data generation. The fruitful discussion with Dr. G. Lera is also recognized.

REFERENCES

[1] S. H. Rubin, Ed., "Artificial intelligence for engineering, design, and manufacturing," ISA Trans., vol. 31, p. 2, 1992.

[2] R. P. Lippmann, "An introduction to computing with neural nets," IEEE Acoust. Speech, Signal Processing, vol. ASSP-4, p. 4, Feb. 1987.

[3] R. Kozma, M. Kitamura, J. E. Hoogemboom, and M. Sakamura, "Credibility of anomaly detection in nuclear reactors using neural networks," Trans. Amer. Nucl. Soc., vol. 70, pp. 102–103, 1994.

[4] B. C. Hwang, "Intelligent control for nuclear power plant using artificial neural networks," in Proc. IEEE Int. Conf. Neural Networks, 1994, vol. 4, pp. 2580–2584.

[5] K. Nabeshima, R. Kozma, and E. Turkcan, "Analysis of the response time of an on-line boiling monitoring system in research reactors with MTR-type fuel," in Proc. 16th Int. Meet. Reduced Enrichment Res. Test Reactors, Japan, 1993, pp. 266–273.

[6] O. Sung-Hun, K. Dae-Il, and K. Kern-Jung, "A study on the signal validation of the pressurizer level sensor in nuclear power plants," Trans. Korean Inst. Elec. Eng., vol. 44, no. 4, pp. 502–507, 1995.


[7] W. J. Kim, S. H. Chang, and B. H. Lee, "Applications of neural networks to signal prediction in nuclear power plant," IEEE Trans. Nucl. Sci., vol. 40, no. 5, pp. 1337–1341, 1993.

[8] J. E. Vitela and J. Reifman, "Improving learning of neural networks for nuclear power plant transient classifications," Trans. Amer. Nucl. Soc., vol. 66, pp. 116–117, 1992.

[9] J. Reifman and J. E. Vitela, "Accelerating learning of neural networks with conjugate gradients for nuclear power plants applications," Nucl. Tech., vol. 106, no. 2, pp. 225–241, 1994.

[10] K. K. Shukla, "A neuro computer based learning controller for critical industrial applications," in Proc. 1995 IEEE/IAS Int. Conf. Indust. Automat. Contr., pp. 43–48.

[11] B. R. Upadhyaya and E. Eryurek, "Application of neural nets for sensor validation and plant monitoring," Nucl. Tech., vol. 97, pp. 170–176, 1992.

[12] J. Sevilla, J. Sola, C. Achaerandio, and C. Pulido, "Neural network technology applications to nuclear power plants instrumentation," in Proc. 22nd Annu. Meet. Soc. Nucl. Española, in press.

[13] G. Lera, personal communication; glera@si.upna.es.

[14] A. G. Parlos, J. Muthusami, and A. F. Atiya, "Incipient fault detection and identification in process system using accelerated neural network learning," Nucl. Tech., vol. 105, p. 2, 1994.

[15] J. Sjöberg and L. Ljung, "Overtraining, regulation, and searching for minimum in neural networks," Linköping Univ., Dept. Electrical Eng. Internal Rep.; sjoberg@isi.liu.se.

You might also like

- Application of Artificial Neural Network For Path Loss Prediction in Urban Macrocellular EnvironmentDocument6 pagesApplication of Artificial Neural Network For Path Loss Prediction in Urban Macrocellular EnvironmentAJER JOURNALNo ratings yet

- Prediction of Critical Clearing Time Using Artificial Neural NetworkDocument5 pagesPrediction of Critical Clearing Time Using Artificial Neural NetworkSaddam HussainNo ratings yet

- Pillay Application GeneticDocument10 pagesPillay Application GenetickfaliNo ratings yet

- Industrial Applications Using Neural NetworksDocument11 pagesIndustrial Applications Using Neural Networksapi-26172869No ratings yet

- Applications of Data Mining Technique For Power System Transient Stability PredictionDocument4 pagesApplications of Data Mining Technique For Power System Transient Stability Predictionapi-3697505No ratings yet

- Modeling of Induction Heating Systems Using Artificial Neural NetworksDocument16 pagesModeling of Induction Heating Systems Using Artificial Neural NetworksAli Faiz AbotiheenNo ratings yet

- Power System Transient Stability ThesisDocument8 pagesPower System Transient Stability ThesisCollegeApplicationEssayHelpManchester100% (1)

- Control Engineering Practice PDFDocument12 pagesControl Engineering Practice PDFAbu HusseinNo ratings yet

- ANN-Based Differential Transformer ProtectionDocument4 pagesANN-Based Differential Transformer ProtectionAyush AvaNo ratings yet

- Materials & DesignDocument10 pagesMaterials & DesignNazli SariNo ratings yet

- Fault IdentificationDocument5 pagesFault IdentificationRajesh Kumar PatnaikNo ratings yet

- RBF BP JieeecDocument5 pagesRBF BP JieeecEyad A. FeilatNo ratings yet

- Artificial Neural Networks For The Performance Prediction of Large SolarDocument8 pagesArtificial Neural Networks For The Performance Prediction of Large SolarGiuseppeNo ratings yet

- Transient Stability Assessment of A Power System Using Probabilistic Neural NetworkDocument8 pagesTransient Stability Assessment of A Power System Using Probabilistic Neural Networkrasim_m1146No ratings yet

- Anantharaman (2007)Document6 pagesAnantharaman (2007)Roody908No ratings yet

- Manifold DataminingDocument5 pagesManifold Dataminingnelson_herreraNo ratings yet

- Application of Artificial Neural Network (ANN) Technique For The Measurement of Voltage Stability Using FACTS Controllers - ITDocument14 pagesApplication of Artificial Neural Network (ANN) Technique For The Measurement of Voltage Stability Using FACTS Controllers - ITpradeep9007879No ratings yet

- Energies: Reliability Assessment of Power Generation Systems Using Intelligent Search Based On Disparity TheoryDocument13 pagesEnergies: Reliability Assessment of Power Generation Systems Using Intelligent Search Based On Disparity TheoryIda Bagus Yudistira AnggradanaNo ratings yet

- Study of Black-Box Models and Participation Factors For The Positive-Mode Damping SDocument10 pagesStudy of Black-Box Models and Participation Factors For The Positive-Mode Damping Sbinghan.sunNo ratings yet

- Electric Power Systems Research: Distribution Power Ow Method Based On A Real Quasi-Symmetric MatrixDocument12 pagesElectric Power Systems Research: Distribution Power Ow Method Based On A Real Quasi-Symmetric MatrixSatishKumarInjetiNo ratings yet

- Bretas A.S. Artificial Neural Networks in Power System RestorationDocument6 pagesBretas A.S. Artificial Neural Networks in Power System RestorationNibedita ChatterjeeNo ratings yet

- Research ArticleDocument9 pagesResearch Articleerec87No ratings yet

- Modeling combined-cycle power plants for simulation of frequency excursionsDocument1 pageModeling combined-cycle power plants for simulation of frequency excursionsAbdulrahmanNo ratings yet

- Back Propagation Neural Network For Short-Term Electricity Load Forecasting With Weather FeaturesDocument4 pagesBack Propagation Neural Network For Short-Term Electricity Load Forecasting With Weather FeaturesVishnu S. M. YarlagaddaNo ratings yet

- NN For Self Tuning of PI and PID ControllersDocument8 pagesNN For Self Tuning of PI and PID ControllersAlper YıldırımNo ratings yet

- A Comparative Study On Different ANN Techniques in Wind Speed Forecasting For Generation of ElectricityDocument8 pagesA Comparative Study On Different ANN Techniques in Wind Speed Forecasting For Generation of ElectricityranvijayNo ratings yet

- Gupta 2019 An Online Power System Stability MoDocument9 pagesGupta 2019 An Online Power System Stability Mo黄凌翔No ratings yet

- 43TOREJDocument9 pages43TOREJprmthsNo ratings yet

- Type-2 Fuzzy Wavelet Neural Network For Estimation Energy Performance of Residential BuildingsDocument9 pagesType-2 Fuzzy Wavelet Neural Network For Estimation Energy Performance of Residential BuildingsSanan AbizadaNo ratings yet

- Diagnosing Failed Distribution Transformers Using Neural NetworksDocument6 pagesDiagnosing Failed Distribution Transformers Using Neural NetworksLuchito BedoyaNo ratings yet

- Nonlinear Identification of PH Process by Using Nnarx Model: M.Rajalakshmi, G.Saravanakumar, C.KarthikDocument5 pagesNonlinear Identification of PH Process by Using Nnarx Model: M.Rajalakshmi, G.Saravanakumar, C.KarthikGuru SaravanaNo ratings yet

- A Power Distribution Network Restoration Via Feeder Reconfiguration by Using Epso For Losses ReductionDocument5 pagesA Power Distribution Network Restoration Via Feeder Reconfiguration by Using Epso For Losses ReductionMohammed ShifulNo ratings yet

- MISO Feedforward ANN PDFDocument4 pagesMISO Feedforward ANN PDFJesus CamachoNo ratings yet

- Bining Neural Network Model With Seasonal Time Series ARIMA ModelDocument17 pagesBining Neural Network Model With Seasonal Time Series ARIMA ModelMiloš MilenkovićNo ratings yet

- Multi-Way Optimal Control of A Benchmark Fed-Batch Fermentation ProcessDocument24 pagesMulti-Way Optimal Control of A Benchmark Fed-Batch Fermentation ProcessVenkata Suryanarayana GorleNo ratings yet

- Multiobjective Optimal Reconfiguration of MV Networks With Different Earthing SystemsDocument6 pagesMultiobjective Optimal Reconfiguration of MV Networks With Different Earthing Systemssreddy4svuNo ratings yet

- Identification of Brushless Excitation System Model Parameters at Different Operating Conditions Via Field TestsDocument7 pagesIdentification of Brushless Excitation System Model Parameters at Different Operating Conditions Via Field TestsFrozzy17No ratings yet

- Creep Modelling of Polyolefins Using Artificial Neural NetworksDocument15 pagesCreep Modelling of Polyolefins Using Artificial Neural Networksklomps_jrNo ratings yet

- A Performance Evaluation of A Parallel Biological Network Microcircuit in NeuronDocument17 pagesA Performance Evaluation of A Parallel Biological Network Microcircuit in NeuronijdpsNo ratings yet

- Weekly Wimax Traffic Forecasting Using Trainable Cascade-Forward Backpropagation Network in Wavelet DomainDocument6 pagesWeekly Wimax Traffic Forecasting Using Trainable Cascade-Forward Backpropagation Network in Wavelet DomainInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Vulnerability Assessment of A Large Sized Power System Using Neural Network Considering Various Feature Extraction MethodsDocument10 pagesVulnerability Assessment of A Large Sized Power System Using Neural Network Considering Various Feature Extraction MethodsMarysol AyalaNo ratings yet

- Applied Thermal Engineering: Y.El. Hamzaoui, J.A. Rodríguez, J.A. Hern Andez, Victor SalazarDocument10 pagesApplied Thermal Engineering: Y.El. Hamzaoui, J.A. Rodríguez, J.A. Hern Andez, Victor SalazarMossa LaidaniNo ratings yet

- Artificial Neural Networks for Modelling Engine SystemsDocument25 pagesArtificial Neural Networks for Modelling Engine SystemsaaNo ratings yet

- Thesis On Power System Stability PDFDocument5 pagesThesis On Power System Stability PDFWhitney Anderson100% (1)

- Psce ConferenceDocument96 pagesPsce ConferenceSeetharam MahanthiNo ratings yet

- Statistical Analysis and Forecasting of Damping in The Nordic Power SystemDocument10 pagesStatistical Analysis and Forecasting of Damping in The Nordic Power SystemB. Vikram AnandNo ratings yet

- Pergamon: (Received 6 AugustDocument24 pagesPergamon: (Received 6 Augustamsubra8874No ratings yet

- Improved Transformer Fault Classification Using ANN and Differential MethodDocument9 pagesImproved Transformer Fault Classification Using ANN and Differential MethoddimasairlanggaNo ratings yet

- Detecting Voltage Instability in Philippine Power Grids Using AIDocument7 pagesDetecting Voltage Instability in Philippine Power Grids Using AIPocholo RodriguezNo ratings yet

- Effect of Aggregation Level and Sampling Time On Load Variation Profile - A Statistical AnalysisDocument5 pagesEffect of Aggregation Level and Sampling Time On Load Variation Profile - A Statistical AnalysisFlorin NastasaNo ratings yet

- A New Algorithm For Simulation of Power Electronic Systems Using Piecewise-Linear Device Models, 1Document9 pagesA New Algorithm For Simulation of Power Electronic Systems Using Piecewise-Linear Device Models, 1Ali H. NumanNo ratings yet

- Application of ANN To Predict Reinforcement Height of Weld Bead Under Magnetic FieldDocument5 pagesApplication of ANN To Predict Reinforcement Height of Weld Bead Under Magnetic FieldInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- 5198a006 PDFDocument6 pages5198a006 PDFsajolNo ratings yet

- Static Security Assessment of Power System Using Kohonen Neural Network A. El-SharkawiDocument5 pagesStatic Security Assessment of Power System Using Kohonen Neural Network A. El-Sharkawirokhgireh_hojjatNo ratings yet

- Application of Neural Network To Load Forecasting in Nigerian Electrical Power SystemDocument5 pagesApplication of Neural Network To Load Forecasting in Nigerian Electrical Power SystemAmine BenseddikNo ratings yet

- Load Modeling For Fault Location in Distribution Systems With Distributed GenerationDocument8 pagesLoad Modeling For Fault Location in Distribution Systems With Distributed Generationcastilho22No ratings yet

- Assignment - Role of Computational Techniques On Proposed ResearchDocument9 pagesAssignment - Role of Computational Techniques On Proposed ResearchSnehamoy DharNo ratings yet

- Introduction To Streering Gear SystemDocument1 pageIntroduction To Streering Gear SystemNorman prattNo ratings yet

- Ipo Exam Revised SyllabusDocument1 pageIpo Exam Revised Syllabusজ্যোতিৰ্ময় বসুমতাৰীNo ratings yet

- Lec 10 - MQueues and Shared Memory PDFDocument57 pagesLec 10 - MQueues and Shared Memory PDFUchiha ItachiNo ratings yet

- Eudragit ReviewDocument16 pagesEudragit ReviewlichenresearchNo ratings yet

- Research Paper Theory of Mind 2Document15 pagesResearch Paper Theory of Mind 2api-529331295No ratings yet

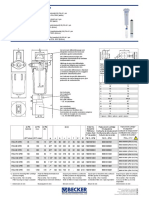

- Medical filter performance specificationsDocument1 pageMedical filter performance specificationsPT.Intidaya Dinamika SejatiNo ratings yet

- Write 10 Lines On My Favourite Subject EnglishDocument1 pageWrite 10 Lines On My Favourite Subject EnglishIrene ThebestNo ratings yet

- Call SANROCCO 11 HappybirthdayBramanteDocument8 pagesCall SANROCCO 11 HappybirthdayBramanterod57No ratings yet

- Trimble Oem Gnss Bro Usl 0422Document3 pagesTrimble Oem Gnss Bro Usl 0422rafaelNo ratings yet

- City of Brescia - Map - WWW - Bresciatourism.itDocument1 pageCity of Brescia - Map - WWW - Bresciatourism.itBrescia TourismNo ratings yet

- Types of LogoDocument3 pagesTypes of Logomark anthony ordonioNo ratings yet

- PM - Network Analysis CasesDocument20 pagesPM - Network Analysis CasesImransk401No ratings yet

- Rakpoxy 150 HB PrimerDocument1 pageRakpoxy 150 HB Primernate anantathatNo ratings yet

- Front Cover Short Report BDA27501Document1 pageFront Cover Short Report BDA27501saperuddinNo ratings yet

- Wsi PSDDocument18 pagesWsi PSDДрагиша Небитни ТрифуновићNo ratings yet

- Levels of Attainment.Document6 pagesLevels of Attainment.rajeshbarasaraNo ratings yet

- Riedijk - Architecture As A CraftDocument223 pagesRiedijk - Architecture As A CraftHannah WesselsNo ratings yet

- C11 RacloprideDocument5 pagesC11 RacloprideAvina 123No ratings yet

- Overview for Report Designers in 40 CharactersDocument21 pagesOverview for Report Designers in 40 CharacterskashishNo ratings yet

- EE-434 Power Electronics: Engr. Dr. Hadeed Ahmed SherDocument23 pagesEE-434 Power Electronics: Engr. Dr. Hadeed Ahmed SherMirza Azhar HaseebNo ratings yet

- HP 5973 Quick ReferenceDocument28 pagesHP 5973 Quick ReferenceDavid ruizNo ratings yet

- Ch. 7 - Audit Reports CA Study NotesDocument3 pagesCh. 7 - Audit Reports CA Study NotesUnpredictable TalentNo ratings yet

- Addition and Subtraction of PolynomialsDocument8 pagesAddition and Subtraction of PolynomialsPearl AdamosNo ratings yet

- Mba Project GuidelinesDocument8 pagesMba Project GuidelinesKrishnamohan VaddadiNo ratings yet

- Donny UfoaksesDocument27 pagesDonny UfoaksesKang Bowo D'wizardNo ratings yet

- COT EnglishDocument4 pagesCOT EnglishTypie ZapNo ratings yet

- Conserve O Gram: Understanding Histograms For Digital PhotographyDocument4 pagesConserve O Gram: Understanding Histograms For Digital PhotographyErden SizgekNo ratings yet

- Aircraft ChecksDocument10 pagesAircraft ChecksAshirbad RathaNo ratings yet

- Brooks Cole Empowerment Series Becoming An Effective Policy Advocate 7Th Edition Jansson Solutions Manual Full Chapter PDFDocument36 pagesBrooks Cole Empowerment Series Becoming An Effective Policy Advocate 7Th Edition Jansson Solutions Manual Full Chapter PDFlois.guzman538100% (12)

- Panasonic TC-P42X5 Service ManualDocument74 pagesPanasonic TC-P42X5 Service ManualManager iDClaimNo ratings yet