Applications in Parallel MATLAB
Brian Guilfoos, Judy Gardiner, Juan Carlos Chaves, John Nehrbass, Stanley Ahalt, Ashok Krishnamurthy, Jose Unpingco, Alan Chalker, Laura Humphrey, and Siddharth Samsi
Ohio Supercomputer Center, Columbus, OH
{guilfoos, judithg, jchaves, nehrbass, ahalt, ashok, unpingo, alanc, humphrey, samsi}@osc.edu

1. Introduction

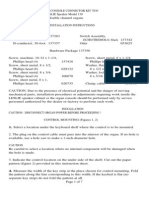

The parallel MATLAB implementations used for this project are MatlabMPI[5] and pMatlab[4], both developed by Dr. Jeremy Kepner at MIT-LL. MatlabMPI is based on the Message Passing Interface standard, in which processes coordinate their work and communicate by passing messages among themselves. The pMatlab library supports parallel array programming in MATLAB. The user program defines arrays that are distributed among the available processes. Although communication between processes is actually done through message passing, the details are hidden from the user.

2. Objective

The objective of this PET project was to develop parallel MATLAB code for selected algorithms that are of interest to the Department of Defense (DoD) Signal/Image Processing (SIP) community and to run the code on the HPCMP systems. The algorithms selected for parallel MATLAB implementation were a Support Vector Machine (SVM) classifier, Metropolis-Hastings Markov Chain Monte Carlo (MCMC) simulation, and Content-Based Image Compression (CBIC).

3. Methodology

3.1. SVM

SVMs[1] are used for classification, regression, and density estimation, and they have applications in SIP, machine learning, bioinformatics, and computational science. In a typical classification application, a set of labeled training data is used to train a classifier, which is then used to classify unlabeled test data. The training of an SVM classifier may be posed as an optimization problem. Typically, the training vectors are mapped to a higher-dimensional space using a kernel function, and the linear separating hyperplane with the maximal margin between classes is found in this space. Some typical choices for kernel functions are linear, polynomial, sigmoid, and Radial Basis Functions (RBF). SVM training involves optimizing over a number of parameters for the best performance and is usually done using a search procedure.

The chosen implementation of the SVM classifier is the Sequential Minimal Optimization (SMO) algorithm.[7,8] SMO operates by iteratively reducing the problem to a single pair of points that may be solved linearly. The problem is parallelized by passing subsets of the training data to nodes in the cluster and recombining the solved subsets to give a partially solved problem. After reaching the tip of the Cascade, the trained data set is passed back to the original nodes to test for convergence. This process is repeated until the support vectors have converged on the solution to the problem, which is defined as all training points satisfying the KKT conditions.

This deliverable demonstrates that toy datasets can be run through an SVM classifier implemented with the SMO algorithm and a Cascade parallelization approach[3], as shown in Figure 1, completely within native MATLAB and MatlabMPI.[5] An example output is shown in Figure 2. Figure 3 shows the median SVM algorithm execution time versus number of processors for five trials on a Pentium 4 cluster using 10000 samples in the checkerboard pattern of Figure 2.

Execution is fast in all cases, with some possible benefit from added processors. However, it appears that communication overhead may start to outweigh this benefit as a larger number of processors is used. There are clear improvements that could be made to the codes (including tweaking the Cascade code to encourage faster convergence and adding implementations for alternative kernel functions), but this is an encouraging proof-of-concept that should scale reasonably well to larger problems.
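The four kernel families named in this section can be written out in a few lines. The following is a minimal sketch in Python rather than MATLAB, using only the standard library. The function names and the default parameters (`degree`, `gamma`, `coef0`) are illustrative assumptions and are not taken from the project code, which provides only one kernel.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def linear_kernel(u, v):
    """k(u, v) = <u, v>"""
    return dot(u, v)


def polynomial_kernel(u, v, degree=3, coef0=1.0):
    """k(u, v) = (<u, v> + coef0) ** degree (parameter names are assumptions)."""
    return (dot(u, v) + coef0) ** degree


def sigmoid_kernel(u, v, gamma=0.5, coef0=0.0):
    """k(u, v) = tanh(gamma * <u, v> + coef0)"""
    return math.tanh(gamma * dot(u, v) + coef0)


def rbf_kernel(u, v, gamma=0.5):
    """k(u, v) = exp(-gamma * ||u - v||^2)"""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * sq_dist)


def gram_matrix(points, kernel):
    """Kernel value for every pair of training vectors."""
    return [[kernel(p, q) for q in points] for p in points]


if __name__ == "__main__":
    pts = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
    for row in gram_matrix(pts, rbf_kernel):
        print(["%.3f" % k for k in row])
```

An SMO-style trainer only needs kernel values for pairs of training vectors, so in a sketch like this the kernel can be swapped by passing a different function to `gram_matrix`.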

3.2. MCMC

The MCMC algorithm is used to draw independent and identically distributed (IID) random variables from a distribution $\pi(x)$, which may be defined over a high-dimensional space. In many cases of interest, it is impossible to sample $\pi(x)$ directly. MCMC algorithms play an important role in image processing, machine learning, statistics, physics, optimization, and computational science. Applications include traditional Monte Carlo methods, such as integration, and generating IID random variables for simulations. MCMC also has numerous applications in the realm of Bayesian inference and learning, such as model parameter estimation, image segmentation, DNA sequence segmentation, and stock market forecasting, to name a few.

We have successfully implemented the MCMC algorithm in MATLAB, MatlabMPI, and pMatlab. The algorithm lends itself naturally to parallel computing, so both the MatlabMPI and pMatlab versions may be run efficiently on multiple processing nodes. The code is modular and designed to be customized by the user to simulate any desired probability distribution, including multivariate distributions.

The MCMC algorithm works by generating a sequence of variables or vectors $X_i$ using a Markov chain. The probability distribution of $X_i$ approaches some desired distribution $\pi(x)$ as $i \to \infty$. The update at each step is accomplished using the Metropolis-Hastings algorithm.[6] After some burn-in period, the current value of $X_i$ is taken as a sample of $\pi(x)$. The only requirement on $\pi(x)$ is that a weighting function, or unnormalized density function, may be calculated at each value of $x$. The algorithm has two parts: 1) proposing a value for $X_{i+1}$ given the value of $X_i$, based on a proposal density function, and 2) accepting or rejecting the proposed value with some probability. The proposal density function in the general case takes the form $q(a,b)$, where $a$ is the value of $X_i$ and $b$ is the proposed value for $X_{i+1}$. In our implementation, proposals are restricted to the form $b = a + R$, where $R$ is a random variable with a probability density function $p(r)$.

HPCMP Users Group Conference (HPCMP-UGC'06) 0-7695-2797-3/06 $20.00 2006
The function $p(r)$ need not be symmetric. The proposed value of $X_{i+1}$ is accepted with probability $\alpha$, where

$$\alpha = \min\left\{ \frac{\pi(b)\, p(-r)}{\pi(a)\, p(r)},\ 1 \right\}.$$

If the value is not accepted, then $X_{i+1} = X_i$. Note that $\alpha$ is calculated using the unnormalized density function for the target density $\pi(x)$. If the proposal density is symmetric, $p(-r) = p(r)$ and the second factor disappears.

In our implementation, the user provides functions for the target and proposal density functions, as well as the number of burn-in iterations to use between samples, and the number of samples to be drawn. One of our goals was to make it easy for users to customize the code to generate other probability distributions, use different proposal densities, and change various parameters. Two examples are included with the code. The first example simulates samples from a bivariate normal distribution using a uniform proposal density. The second example simulates samples from a Rayleigh distribution using an asymmetric proposal density.

The problem of generating independent samples of a random variable is naturally parallel. Both of the parallel versions of the algorithm simply divide the number of samples to be generated among the available processors. Each processor generates its share of the total samples separately from the others. The samples are then combined into one output file.

Figure 4 shows an example output. The left graph shows the desired distribution: a two-dimensional normal distribution with zero mean and a non-diagonal covariance matrix. The right graph shows 1000 samples generated by the MCMC routine using a uniform proposal density function. Figure 5 shows the median time over five trials to generate these 1000 samples using 1000 burn-in iterations between samples on a Pentium 4 cluster. The execution time decreases with diminishing returns as more processors are added.
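The sampling loop and the division of samples among processors described in this section can be sketched as follows. This is a minimal illustration in Python rather than MATLAB, not the project's code. All function names are hypothetical, the target is a one-dimensional standard normal chosen for brevity (not the bivariate example from the paper), and the "processors" are emulated by a serial loop with one random stream per rank. In MatlabMPI or pMatlab each rank would run as a separate process.

```python
import math
import random


def mh_samples(target, propose, proposal_pdf, n_samples, burn_in, x0, rng):
    """Draw n_samples values, running burn_in Metropolis-Hastings
    updates before each one is recorded.

    target       -- unnormalized target density
    propose      -- draws a step r from the proposal density p(r)
    proposal_pdf -- evaluates p(r); needed when p is not symmetric
    """
    x = x0
    samples = []
    for _sample in range(n_samples):
        for _step in range(burn_in):
            r = propose(rng)
            b = x + r  # proposals have the form b = a + R
            num = target(b) * proposal_pdf(-r)
            den = target(x) * proposal_pdf(r)
            alpha = min(num / den, 1.0) if den > 0 else 1.0
            if rng.random() < alpha:
                x = b  # accept; otherwise the chain stays at x
        samples.append(x)
    return samples


def split_counts(n_samples, n_procs):
    """Divide n_samples as evenly as possible among n_procs ranks."""
    base, extra = divmod(n_samples, n_procs)
    return [base + (1 if rank < extra else 0) for rank in range(n_procs)]


# Illustrative target and proposal (assumptions, not the paper's examples).
def normal_unnorm(x):
    return math.exp(-0.5 * x * x)


def uniform_step(rng):
    return rng.uniform(-1.0, 1.0)


def uniform_pdf(r):
    return 0.5 if -1.0 <= r <= 1.0 else 0.0


def run_parallel_sketch(n_samples, n_procs, burn_in, seed=0):
    """Serial stand-in for the parallel run: each rank draws its share
    with its own random stream, then the shares are concatenated."""
    combined = []
    for rank, count in enumerate(split_counts(n_samples, n_procs)):
        rng = random.Random(seed + rank)
        combined.extend(mh_samples(normal_unnorm, uniform_step, uniform_pdf,
                                   count, burn_in, 0.0, rng))
    return combined


if __name__ == "__main__":
    draws = run_parallel_sketch(n_samples=1000, n_procs=4, burn_in=50)
    print(len(draws), sum(draws) / len(draws))
```

Because the uniform proposal here is symmetric, the factor `proposal_pdf(-r) / proposal_pdf(r)` equals one. It is kept in the code so that an asymmetric proposal can be substituted without changing the acceptance step.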

3.3. CBIC

A typical image compression system applies the same compression strategy to the entire image, effectively spreading the quantization error uniformly over the image. This limits the compression ratio to the maximum that can be tolerated for the important and relevant portions of the image. However, in many applications, the portion of the image that is of interest may be small compared to the entire image. For example, in a SAR image taken from an aircraft, only the portion of the image containing the target of interest needs to be preserved with high quality; the rest of the image can be compressed quite heavily. This leads to the idea of CBIC, in which selected portions of the image are compressed losslessly, while the rest of the image is compressed at a high ratio. In many applications, a sensor may be acquiring a stream of images, and compressing each rapidly for storage or transmission is essential. Parallel CBIC algorithms are useful in such situations.

The initial code for this project was taken from an incomplete copy of thesis work by a graduate student. The code, written in MATLAB, implemented serial wavelet compression[9] of a segmented image. Unfortunately, it was poorly written, with difficult-to-decipher function names, poorly thought-out file I/O, and virtually no comment lines. A significant effort was undertaken to document the existing source before parallelization could be attempted. Additionally, the code did not determine the areas of interest in the image to be compressed; it required a pre-existing mask on disk.
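Given such a mask, the content-based idea can be illustrated with a toy example. The sketch below is in Python rather than MATLAB and deliberately swaps in coarse uniform quantization for the wavelet compression used in the project. It only shows how a mask steers where information is kept exactly and where it is discarded. The function names and the `background_step` parameter are assumptions made for illustration.

```python
def quantize(value, step):
    """Map an 8-bit value to the centre of its quantization bin."""
    return min(255, (value // step) * step + step // 2)


def content_based_quantize(image, mask, background_step=64):
    """Keep masked (region-of-interest) pixels exactly and coarsely
    quantize everything else.

    image -- list of rows of integers in 0..255
    mask  -- same shape; truthy entries mark pixels of interest
    """
    return [
        [pix if keep else quantize(pix, background_step)
         for pix, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


if __name__ == "__main__":
    image = [[10, 200], [130, 77]]
    mask = [[True, False], [False, True]]
    print(content_based_quantize(image, mask))
```

With a step of 64 the background collapses to at most four gray levels, which is what makes it cheap to encode, while the masked pixels pass through unchanged.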


A very rudimentary serial image segmentation code was written to provide the mask for the compression step. The areas of interest are designated by pixels that are a certain percentage above or below the average brightness of the entire image. For most of our test images, this was surprisingly effective at picking out targets, such as tanks in a SAR image. A better option for a mask generator might be to use a trained, parallelized SVM. Other options include a mask generator based on attention and perception, such as through symmetry.[2] Due to the modularity of the implementation, a new mask generator should be straightforward to attach to the image compression routine. Once the mask is generated, it is written to disk in PGM format. Because this segmentation method is so simple, no effort was made to parallelize it.

The parallelization efforts centered upon using pMatlab.[4] This implementation is limited by the inherently serial nature of the pre-existing code and is not as efficient as it could be. Testing on HPC systems was successful, and Figure 6 shows that selective compression of an image was achieved. The left image is a sample SAR image showing two columns of tanks flanking a road. The right image shows the results of a parallel CBIC operation on the source image using the simple masking algorithm described earlier. Figure 6 shows that the masking algorithm successfully selected the tanks as objects of interest, as well as portions of the road and other objects that stand out from the background. The background portions of the image are then heavily compressed.

Figure 7 shows median execution times over five trials for the image shown in Figure 6 using a Pentium 4 cluster. A small benefit is seen by adding a second processor; however, execution times increase afterwards. More efficient code may produce better results.
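The brightness-threshold mask described in this section is simple enough to sketch directly. The Python code below is an illustration under stated assumptions, not the project's MATLAB code. The `fraction` parameter stands in for the unspecified "certain percentage", the function names are hypothetical, and the mask is serialized as plain-text PGM (magic number `P2`), since the paper does not say which PGM variant was used.

```python
def brightness_mask(image, fraction=0.5):
    """Mark pixels whose brightness differs from the image mean by more
    than the given fraction of that mean (above or below).

    image -- list of rows of non-negative gray values
    Returns a same-shaped list of rows of 0/1 flags.
    """
    n_pixels = sum(len(row) for row in image)
    mean = sum(sum(row) for row in image) / n_pixels
    low, high = mean * (1.0 - fraction), mean * (1.0 + fraction)
    return [[1 if (p < low or p > high) else 0 for p in row] for row in image]


def mask_to_pgm(mask):
    """Serialize a 0/1 mask as plain PGM text with a maximum value of 1."""
    height, width = len(mask), len(mask[0])
    lines = ["P2", f"{width} {height}", "1"]
    lines += [" ".join(str(v) for v in row) for row in mask]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    image = [
        [100, 100, 100],
        [100, 250, 100],
        [100, 100, 10],
    ]
    print(mask_to_pgm(brightness_mask(image)), end="")
```

Writing the returned string to a file gives a mask that a separate compression step could read back, which mirrors the modular split between mask generation and compression described above.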

4. Results

The authors now have three rudimentary applications that demonstrate that the algorithms can be implemented in parallel MATLAB. While the implementations may be limited (the SVM classifier, for example, only has one available kernel), they provide a proof-of-concept as well as a starting code base should a DoD user wish to apply any of these algorithms to their problem.

Acknowledgements

This publication was made possible through support provided by DoD HPCMP PET activities through Mississippi State University under contract. The opinions expressed herein are those of the author(s) and do not necessarily reflect the views of the DoD or Mississippi State University.

References

1. Burges, Christopher J.C., A Tutorial on Support Vector Machines for Pattern Recognition. In Data Mining and Knowledge Discovery, volume 2, Kluwer Academic Publishers, Boston, MA, 1998.
2. Di Gesù, Vito and Cesare Valenti, Detection of Regions of Interest Via the Pyramid Discrete Symmetry Transform. In Advances in Computer Vision, Springer, New York, 1997.
3. Graf, H.-P., E. Cosatto, L. Bottou, I. Durdanovic, and V. Vapnik, Parallel Support Vector Machines: The Cascade SVM. In Advances in Neural Information Processing Systems, volume 17, MIT Press, Cambridge, MA, 2005.
4. Kepner, Jeremy, pMatlab: Parallel Matlab Toolbox. http://www.ll.mit.edu/pMatlab/, accessed May 19, 2006.
5. Kepner, Jeremy, Parallel Programming with MatlabMPI. http://www.ll.mit.edu/MatlabMPI/, accessed May 19, 2006.
6. Morgan, Byron J.T., Applied Stochastic Modelling. Oxford University Press, New York, 2000, ch. 7.
7. Platt, J., Fast Training of Support Vector Machines Using Sequential Minimal Optimization. In Advances in Kernel Methods: Support Vector Learning, MIT Press, Cambridge, MA, 1998.
8. Platt, J., Using sparseness and analytic QP to speed training of support vector machines. In Advances in Neural Information Processing Systems, volume 13, MIT Press, Cambridge, MA, 1999.
9. Topiwala, Pankaj, ed., Wavelet Image and Video Compression, Kluwer Academic Publishers, Boston, MA, 1998.

Figure 1. Simple two-layer cascade SVM

Figure 2. Sample output of a trained SVM with training vectors in a checkerboard pattern

Figure 3. SVM execution time in seconds versus number of processors for 10000 samples from the distribution shown in Figure 2

Figure 4. Sample MCMC output for a 2-D normal distribution

Figure 5. MCMC median execution time in seconds versus number of processors for 1000 samples with 1000 burn-in iterations from the distribution shown in Figure 4

Figure 6. CBIC compression of a sample SAR image

Figure 7. CBIC median execution time in seconds versus number of processors for the picture shown in Figure 6
