Internet Protocol: Function

Uploaded by

Suganya Alagar

Internet Protocol

The Internet Protocol (IP) is the principal communications protocol used for relaying datagrams (also known as network packets) across an internetwork using the Internet Protocol Suite. Because it routes packets across network boundaries, it is the primary protocol that establishes the Internet.

IP is the primary protocol in the Internet Layer of the Internet Protocol Suite and has the task of delivering datagrams from the source host to the destination host based solely on their addresses. For this purpose, IP defines datagram structures that encapsulate the data to be delivered. It also defines addressing methods that are used to label the datagram with its source and destination.

Historically, IP was the connectionless datagram service in the original Transmission Control Program introduced by Vint Cerf and Bob Kahn in 1974, the other being the connection-oriented Transmission Control Protocol (TCP). The Internet Protocol Suite is therefore often referred to as TCP/IP. The first major version of IP, now referred to as Internet Protocol Version 4 (IPv4), is the dominant protocol of the Internet, although its successor, Internet Protocol Version 6 (IPv6), is in active, growing deployment worldwide.

Function

The Internet Protocol is responsible for addressing hosts and routing datagrams (packets) from a source host to the destination host across one or more IP networks. To do so, it defines a standard datagram format and a standard addressing system. The addressing system has two functions: identifying hosts and providing a logical location service.
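
The two functions of an address can be illustrated with Python's standard `ipaddress` module. This is only a sketch: the address `192.0.2.37/24` is a made-up example from a documentation range, and the split into a network part (location) and a host part (identity) assumes a 24-bit routing prefix.

```python
import ipaddress

# Hypothetical example address with an assumed 24-bit routing prefix.
iface = ipaddress.ip_interface("192.0.2.37/24")

# Location: the network the host is attached to.
network = iface.network

# Identity: the host bits left over once the network prefix is masked off.
host_part = int(iface.ip) & int(iface.network.hostmask)

print(network)    # 192.0.2.0/24
print(host_part)  # 37
```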

Datagram construction

Figure: Sample encapsulation of application data from UDP to a link protocol frame.

Each datagram has two components: a header and a payload. The IP header carries the source IP address, the destination IP address, and other metadata needed to route and deliver the datagram. The payload is the data to be transported. This process of nesting data payloads in a packet with a header is called encapsulation.
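
Encapsulation can be sketched in a few lines of Python. The example below packs a minimal 20-byte IPv4 header (no options) in front of a payload and then unpacks it again. The function names `encapsulate` and `decapsulate`, the addresses, and the TTL of 64 are illustrative assumptions, and the checksum field is left at zero to keep the sketch short.

```python
import socket
import struct

# Fixed 20-byte IPv4 header without options, in network byte order:
# version/IHL, TOS, total length, identification, flags/fragment offset,
# TTL, protocol, header checksum, source address, destination address.
IPV4_HEADER = struct.Struct("!BBHHHBBH4s4s")

def encapsulate(src: str, dst: str, payload: bytes, protocol: int = 17) -> bytes:
    """Prefix payload with an IPv4 header (protocol 17 is UDP)."""
    version_ihl = (4 << 4) | 5  # version 4, header length of 5 32-bit words
    total_length = IPV4_HEADER.size + len(payload)
    header = IPV4_HEADER.pack(
        version_ihl, 0, total_length,
        0, 0,             # identification, flags and fragment offset
        64, protocol, 0,  # TTL (assumed), protocol, checksum left as 0 here
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    return header + payload

def decapsulate(datagram: bytes):
    """Return (source, destination, payload) from an IPv4 datagram."""
    fields = IPV4_HEADER.unpack(datagram[:IPV4_HEADER.size])
    header_len = (fields[0] & 0x0F) * 4  # IHL is counted in 32-bit words
    src = socket.inet_ntoa(fields[8])
    dst = socket.inet_ntoa(fields[9])
    return src, dst, datagram[header_len:]

datagram = encapsulate("192.0.2.1", "198.51.100.7", b"hello")
print(len(datagram))          # 25
print(decapsulate(datagram))  # ('192.0.2.1', '198.51.100.7', b'hello')
```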

IP addressing and routing

Perhaps the most complex aspects of IP are addressing and routing. Addressing refers to how end hosts are assigned IP addresses and how subnetworks of IP host addresses are divided and grouped together. IP routing is performed by all hosts, but most importantly by internetwork routers, which typically use either interior gateway protocols (IGPs) or exterior gateway protocols (EGPs) to help make IP datagram forwarding decisions across IP-connected networks.

IP routing is also common in local networks. For example, Ethernet switches sold today support IP multicast.[1] These switches use IP addresses and the Internet Group Management Protocol (IGMP) to control multicast routing, but use MAC addresses for the actual forwarding.
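
A forwarding decision of the kind described above can be sketched as a longest-prefix match over a small table. The table contents, the next-hop addresses, and the function name `next_hop` are hypothetical. Real routers populate such tables through IGPs or EGPs and use far more efficient lookup structures than a linear scan.

```python
import ipaddress

# Hypothetical forwarding table: (destination prefix, next-hop gateway).
FORWARDING_TABLE = [
    (ipaddress.ip_network("0.0.0.0/0"), "203.0.113.1"),   # default route
    (ipaddress.ip_network("10.0.0.0/8"), "10.255.0.1"),
    (ipaddress.ip_network("10.1.0.0/16"), "10.1.255.1"),
]

def next_hop(destination: str) -> str:
    """Pick the next hop whose prefix matches the destination most specifically."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in FORWARDING_TABLE if addr in net]
    # The default route matches everything, so matches is never empty here.
    _, hop = max(matches, key=lambda entry: entry[0].prefixlen)
    return hop

print(next_hop("10.1.2.3"))  # 10.1.255.1
print(next_hop("10.9.9.9"))  # 10.255.0.1
print(next_hop("8.8.8.8"))   # 203.0.113.1
```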

Reliability

The design principles of the Internet protocols assume that the network infrastructure is inherently unreliable at any single network element or transmission medium, and that it is dynamic in terms of the availability of links and nodes. No central monitoring or performance measurement facility exists that tracks or maintains the state of the network. To reduce network complexity, the intelligence in the network is deliberately located mostly in the end nodes of each data transmission (cf. the end-to-end principle). Routers in the transmission path simply forward packets to the next known local gateway matching the routing prefix for the destination address.

As a consequence of this design, the Internet Protocol provides only best-effort delivery, and its service is characterized as unreliable. In network architectural language it is a connectionless protocol, in contrast to so-called connection-oriented modes of transmission. The lack of reliability permits various error conditions, such as data corruption, packet loss, and duplication, as well as out-of-order packet delivery. Since routing is dynamic for every packet and the network maintains no state of the path of prior packets, some packets may be routed on a longer path to their destination, resulting in improper sequencing at the receiver.

The only assistance that the Internet Protocol provides in Version 4 (IPv4) is to ensure that the IP packet header is error-free through computation of a checksum at the routing nodes. This has the side effect of discarding packets with bad headers on the spot. In this case no notification is required to be sent to either end node, although a facility exists in the Internet Control Message Protocol (ICMP) to do so. IPv6, on the other hand, has abandoned the use of IP header checksums for the benefit of rapid forwarding through routing elements in the network.
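
The IPv4 header check can be sketched as follows. The checksum is the 16-bit ones' complement of the ones' complement sum of the header's 16-bit words, and a header whose checksum field is filled in correctly sums to zero under the same computation. The function names and the sample header bytes are illustrative assumptions, not taken from the text above.

```python
import struct

def internet_checksum(header: bytes) -> int:
    """16-bit ones' complement of the ones' complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:     # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def header_is_valid(header: bytes) -> bool:
    """A header with a correct checksum field yields 0 when re-summed."""
    return internet_checksum(header) == 0

# Sample 20-byte header with the checksum field (bytes 10-11) set to zero.
unsigned = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
checksum = internet_checksum(unsigned)
signed = unsigned[:10] + struct.pack("!H", checksum) + unsigned[12:]

print(hex(checksum))            # 0xb861
print(header_is_valid(signed))  # True
```

A router that decrements the time-to-live field changes the header and therefore has to recompute the checksum before forwarding, which is part of the per-hop cost that IPv6 avoids.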

The resolution or correction of any of these reliability issues is the responsibility of an upper-layer protocol. For example, to ensure in-order delivery the upper layer may have to cache data until it can be passed to the application.

In addition to issues of reliability, the dynamic nature and diversity of the Internet and its components provide no guarantee that any particular path is actually capable of, or suitable for, performing the data transmission requested, even if the path is available and reliable. One of the technical constraints is the size of data packets allowed on a given link. An application must ensure that it uses proper transmission characteristics. Some of this responsibility also lies with the upper-layer protocols between the application and IP. Facilities exist to examine the maximum transmission unit (MTU) size of the local link, as well as of the entire projected path to the destination when using IPv6. The IPv4 internetworking layer can automatically fragment the original datagram into smaller units for transmission. In this case, IP does provide re-ordering of fragments delivered out of order.[2]

Transmission Control Protocol (TCP) is an example of a protocol that will adjust its segment size to be smaller than the MTU. User Datagram Protocol (UDP) and Internet Control Message Protocol (ICMP) disregard MTU size, thereby forcing IP to fragment oversized datagrams.[3]
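
IPv4 fragmentation and the re-ordering of fragments can be sketched like this. The sketch models each fragment as a tuple of (offset in 8-byte units, more-fragments flag, data) rather than as real packets. The function names, the 20-byte header length, and the MTU of 576 bytes are assumptions chosen for illustration.

```python
def fragment(payload: bytes, mtu: int, header_len: int = 20):
    """Split payload into (offset_in_8_byte_units, more_fragments, data) tuples."""
    # Every fragment except the last must carry a multiple of 8 data bytes,
    # because the fragment offset field counts in 8-byte units.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small to carry any data")
    fragments = []
    for start in range(0, len(payload), max_data):
        chunk = payload[start:start + max_data]
        more_fragments = start + len(chunk) < len(payload)
        fragments.append((start // 8, more_fragments, chunk))
    return fragments

def reassemble(fragments) -> bytes:
    """Restore the payload from fragments that may arrive in any order."""
    ordered = sorted(fragments, key=lambda frag: frag[0])
    return b"".join(data for _, _, data in ordered)

payload = bytes(range(256)) * 10           # 2560 bytes of sample data
pieces = fragment(payload, mtu=576)
print([offset for offset, _, _ in pieces])  # [0, 69, 138, 207, 276]
print(reassemble(reversed(pieces)) == payload)  # True
```

A complete implementation would also match fragments by their identification field and detect missing pieces before delivering the datagram. This sketch omits both.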

Version history

In May 1974, the Institute of Electrical and Electronics Engineers (IEEE) published a paper entitled "A Protocol for Packet Network Interconnection."[4] The paper's authors, Vint Cerf and Bob Kahn, described an internetworking protocol for sharing resources using packet switching among the nodes. A central control component of this model was the "Transmission Control Program" (TCP), which incorporated both connection-oriented links and datagram services between hosts. The monolithic Transmission Control Program was later divided into a modular architecture consisting of the Transmission Control Protocol at the connection-oriented layer and the Internet Protocol at the internetworking (datagram) layer. The model became known informally as TCP/IP, although it is formally referred to as the Internet Protocol Suite.

The Internet Protocol is one of the determining elements that define the Internet. The dominant internetworking protocol in the Internet Layer in use today is IPv4, with the number 4 assigned as the formal protocol version number carried in every IP datagram. IPv4 is described in RFC 791 (1981).

The successor to IPv4 is IPv6. Its most prominent modification from version 4 is the addressing system. IPv4 uses 32-bit addresses (c. 4 billion, or 4.3×10^9, addresses) while IPv6 uses 128-bit addresses (c. 340 undecillion, or 3.4×10^38, addresses). Although adoption of IPv6 has been slow, as of June 2008 all United States government systems have demonstrated basic infrastructure support for IPv6 (if only at the backbone level).[5]

Version numbers 0 through 3 were development versions of IPv4 used between 1977 and 1979. Version number 5 was used by the Internet Stream Protocol, an experimental streaming protocol. Version numbers 6 through 9 were proposed for various protocol models designed to replace IPv4: SIPP (Simple Internet Protocol Plus, known now as IPv6), TP/IX (RFC 1475), PIP (RFC 1621) and TUBA (TCP and UDP with Bigger Addresses, RFC 1347). Version number 6 was eventually chosen as the official assignment for the successor Internet protocol, subsequently standardized as IPv6.
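
The address-space figures and the version number carried in each datagram can be checked with a short Python sketch. The helper name `ip_version` is an assumption. It relies on the version occupying the upper four bits of the first header byte, which holds for both IPv4 and IPv6.

```python
# Size of each address space follows directly from the address width.
ipv4_addresses = 2 ** 32
ipv6_addresses = 2 ** 128

print(f"{ipv4_addresses:.1e}")  # 4.3e+09
print(f"{ipv6_addresses:.1e}")  # 3.4e+38

def ip_version(datagram: bytes) -> int:
    """Read the version number from the top four bits of the first byte."""
    return datagram[0] >> 4

print(ip_version(bytes([0x45])))  # 4
print(ip_version(bytes([0x60])))  # 6
```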

A humorous Request for Comments that made an IPv9 protocol the center of its storyline was published on April 1, 1994 by the IETF.[6] It was intended as an April Fools' Day joke. Other protocol proposals named "IPv9" and "IPv8" have also briefly surfaced, though these came with little or no support from the wider industry and academia.[7]

Types of Internet Protocols

There's more to the Internet than the World Wide Web

When we think of the Internet we often think only of the World Wide Web. The Web is one of several ways to retrieve information from the Internet. These different types of Internet connections are known as protocols. You could use separate software applications to access the Internet with each of these protocols, though you probably wouldn't need to. Many Web browsers allow users to access files using most of the protocols. Following are three categories of Internet services and examples of types of services in each category.

File retrieval protocols

This type of service was one of the earliest ways of retrieving information from computers connected to the Internet. You could view the names of the files stored on the serving computer, but you didn't have any type of graphics and sometimes no description of a file's content. You would need to know in advance which files contained the information you sought.

FTP (File Transfer Protocol)

This was one of the first Internet services developed, and it allows users to move files from one computer to another. Using an FTP program, a user can log on to a remote computer, browse through its files, and either download or upload files (if the remote computer allows). These can be any type of file, but the user is only allowed to see the file name; no description of the file content is included. You might encounter FTP if you try to download software applications from the World Wide Web. Many sites that offer downloadable applications use FTP.

Gopher

Gopher offers downloadable files with some content description to make it easier to find the file you need. The files are arranged on the remote computer in a hierarchical manner, much like the files on your computer's hard drive. This protocol isn't widely used anymore, but you can still find some operational Gopher sites.

Telnet

You can connect to and use a remote computer program by using the Telnet protocol. Generally you would telnet into a specific application housed on a serving computer, which would allow you to use that application as if it were on your own computer. Again, using this protocol requires special software

You might also like

- Raspberry PiDocument21 pagesRaspberry PikidomaNo ratings yet

- Ip Nat Guide CiscoDocument418 pagesIp Nat Guide CiscoAnirudhaNo ratings yet

- Network Topologies, Media and DevicesDocument66 pagesNetwork Topologies, Media and DevicesMichael John GregorioNo ratings yet

- Comprehensive Study of Attacks on SSL/TLSDocument23 pagesComprehensive Study of Attacks on SSL/TLSciuchino2No ratings yet

- ProtocolsDocument37 pagesProtocolsKOMERTDFDF100% (1)

- Wireless Connectivity To Vehicle Body Control ModuleDocument6 pagesWireless Connectivity To Vehicle Body Control ModuleInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Assignment 4.1Document6 pagesAssignment 4.1Navneet kumarNo ratings yet

- NMAP Command Guide SheetDocument5 pagesNMAP Command Guide SheetRafoo MGNo ratings yet

- IP ManagementDocument192 pagesIP ManagementanonNo ratings yet

- Ipv6 Addressing: Yashvant Singh Centre For Excellence in Telecom Technology and ManagementDocument45 pagesIpv6 Addressing: Yashvant Singh Centre For Excellence in Telecom Technology and ManagementRitesh SinghNo ratings yet

- ITN Module 16 STDDocument35 pagesITN Module 16 STDnurul husaifahNo ratings yet

- Highly Useful Linux CommandsDocument40 pagesHighly Useful Linux CommandsnagarajNo ratings yet

- Network Switch: For Other Uses, SeeDocument7 pagesNetwork Switch: For Other Uses, SeeMuhammad ZairulfikriNo ratings yet

- 06-MAC Address Table Management CommandsDocument16 pages06-MAC Address Table Management CommandsRandy DookheranNo ratings yet

- D-Bus Tutorial: Havoc PenningtonDocument19 pagesD-Bus Tutorial: Havoc Penningtonrk1825No ratings yet

- How To Install All Kali Linux Hacking Tools in Ubuntu or Linux Mint Safely Without Crashing SystemDocument1 pageHow To Install All Kali Linux Hacking Tools in Ubuntu or Linux Mint Safely Without Crashing Systemadam IskandarNo ratings yet

- How To Setup Wireless of Edimax CameraDocument5 pagesHow To Setup Wireless of Edimax CameraKuntal DasguptaNo ratings yet

- Advanced Nmap - NMap Script ScanningDocument5 pagesAdvanced Nmap - NMap Script Scanningksenthil77No ratings yet

- Netx Insiders GuideDocument261 pagesNetx Insiders GuideMapuka MuellerNo ratings yet

- N+ Study MaterailDocument59 pagesN+ Study MaterailasimalampNo ratings yet

- DOCSIS 3.0 - CM-SP-MULPIv3.0-I15-110210Document750 pagesDOCSIS 3.0 - CM-SP-MULPIv3.0-I15-110210Ti Nguyen100% (1)

- Cisco IOS Security Command Reference - Commands D To L Cisco IOS XEDocument160 pagesCisco IOS Security Command Reference - Commands D To L Cisco IOS XEsilverclericNo ratings yet

- Installing OpenWrtDocument4 pagesInstalling OpenWrtStancu MarcelNo ratings yet

- Wireless Sensor Network and Contiky OSDocument144 pagesWireless Sensor Network and Contiky OSMichel Zarzosa RojasNo ratings yet

- 77 Linux Commands and Utilities You'Ll Actually UseDocument16 pages77 Linux Commands and Utilities You'Ll Actually Usearun0076@gmail.comNo ratings yet

- EFI Shell Getting Started GuideVer0 31Document19 pagesEFI Shell Getting Started GuideVer0 31id444324No ratings yet

- VLAN and MAC Address Configuration CommandsDocument39 pagesVLAN and MAC Address Configuration CommandsPhạm Văn ThuânNo ratings yet

- USB BasicsDocument15 pagesUSB Basicspsrao.bangalore4407No ratings yet

- IbootDocument49 pagesIbootJulian Andres Perez BeltranNo ratings yet

- CR50-100 Ing QSGDocument12 pagesCR50-100 Ing QSGPaul Anim AmpaduNo ratings yet

- Wireless Captive PortalsDocument16 pagesWireless Captive PortalsLuka O TonyNo ratings yet

- FirmwareDocument5 pagesFirmwarehariNo ratings yet

- CIS 185 EIGRP Part 1 Class Begins ShortlyDocument104 pagesCIS 185 EIGRP Part 1 Class Begins ShortlyPhil GarbettNo ratings yet

- Linux Commands Cheat Sheet - Linux Training AcademyDocument26 pagesLinux Commands Cheat Sheet - Linux Training AcademyDark LordNo ratings yet

- It6601Mobile Computing Unit IV DR Gnanasekaran ThangavelDocument52 pagesIt6601Mobile Computing Unit IV DR Gnanasekaran ThangavelK Gowsic GowsicNo ratings yet

- DNS - GoogleDocument5 pagesDNS - GoogleMarciusNo ratings yet

- SS7 OverviewDocument7 pagesSS7 Overviewyahoo234422No ratings yet

- Configure FTD High Availability On Firepower Appliances: RequirementsDocument33 pagesConfigure FTD High Availability On Firepower Appliances: RequirementsShriji SharmaNo ratings yet

- How Do I Update Ubuntu Linux Software Using Command LineDocument15 pagesHow Do I Update Ubuntu Linux Software Using Command LinegaschonewegNo ratings yet

- Compile Software From Source Code - WIREDDocument6 pagesCompile Software From Source Code - WIREDMartín LehoczkyNo ratings yet

- Debugger HexagonDocument79 pagesDebugger Hexagoncarver_uaNo ratings yet

- FUM-T10-User Menu-English Video Server de La EnivisionDocument43 pagesFUM-T10-User Menu-English Video Server de La Enivisiond_ream80100% (1)

- Case Study 6Document5 pagesCase Study 6Chirry OoNo ratings yet

- Building A Server With FreeBSD 7 - 6Document2 pagesBuilding A Server With FreeBSD 7 - 6twn353091No ratings yet

- Crash Crash Linux KernelDocument36 pagesCrash Crash Linux Kernelhero_trojanNo ratings yet

- Intro To TCP-IPDocument12 pagesIntro To TCP-IPAnjali Sharma100% (1)

- Internet Access MethodsDocument4 pagesInternet Access Methodsdrgnarayanan100% (1)

- Universe/multiverse ListDocument502 pagesUniverse/multiverse ListLatchNo ratings yet

- Subdomain Takeover GuideDocument26 pagesSubdomain Takeover GuidemoujaNo ratings yet

- EC Council Certified Ethical Hacker CEH v9.0Document5 pagesEC Council Certified Ethical Hacker CEH v9.0Juan Ignacio Concha AguirreNo ratings yet

- Storagetek Libraries Cim Provider: Installation GuideDocument34 pagesStoragetek Libraries Cim Provider: Installation GuideMostefaTadjeddine100% (1)

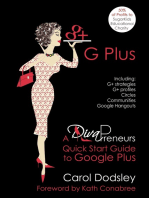

- GPlus: Google Plus Strategies, Profiles, Circles, Communities, & Hangouts. A DivaPreneurs Quick Start Guide to Google PlusFrom EverandGPlus: Google Plus Strategies, Profiles, Circles, Communities, & Hangouts. A DivaPreneurs Quick Start Guide to Google PlusNo ratings yet

- Packet Sniffing SKJDocument33 pagesPacket Sniffing SKJsigma67_67100% (1)

- TCP/IP Application InterfaceDocument22 pagesTCP/IP Application Interfacesiddurohit22No ratings yet

- IP Command Cheatsheet Rhel7Document3 pagesIP Command Cheatsheet Rhel7Manjunath BheemappaNo ratings yet

- Linux boot-up on XUP board and writing Device DriversDocument6 pagesLinux boot-up on XUP board and writing Device DriversVinod GuptaNo ratings yet

- Forensics Tutorial 12 - Network Forensics With WiresharkDocument20 pagesForensics Tutorial 12 - Network Forensics With WiresharkMary Amirtha Sagayee. GNo ratings yet

- Ddos Traffic Generation CommandsDocument9 pagesDdos Traffic Generation CommandsPrasad KdNo ratings yet

- IPv6 TransitionDocument20 pagesIPv6 Transitionchebbin80No ratings yet

- IP Address SubnetingDocument9 pagesIP Address SubnetingMeskatul Islam2No ratings yet

- VMware-NSX-VSphere Content Pack DescriptionDocument12 pagesVMware-NSX-VSphere Content Pack DescriptionCalin Damian TanaseNo ratings yet

- JNCIS SP ObjectivesDocument2 pagesJNCIS SP Objectivestienpq150987No ratings yet

- EIGRP Interview Questions - MCQ's Part-5 - ATech (Waqas Karim)Document11 pagesEIGRP Interview Questions - MCQ's Part-5 - ATech (Waqas Karim)Mery MeryNo ratings yet

- CCNA Routing and Switching: Introduction To Networks: C SCODocument22 pagesCCNA Routing and Switching: Introduction To Networks: C SCOIndra Pratama NugrahaNo ratings yet

- Acceptance Test ProcedureDocument2 pagesAcceptance Test Proceduremohammad fadli100% (1)

- PDF Nmap Tutorial PDF - CompressDocument20 pagesPDF Nmap Tutorial PDF - CompressAdem SylejmaniNo ratings yet

- Threat Landscape and Good Practice Guide for Internet Infrastructure AssetsDocument1 pageThreat Landscape and Good Practice Guide for Internet Infrastructure AssetsranamzeeshanNo ratings yet

- FTFA Remote SetupDocument3 pagesFTFA Remote Setupmahrez100% (1)

- Chapter 21 QuizDocument5 pagesChapter 21 QuizAmal SamuelNo ratings yet

- How To Subnet in Your HeadDocument67 pagesHow To Subnet in Your Headbaraynavab95% (20)

- Lab-7 1 6Document6 pagesLab-7 1 6Tiến Trung NguyễnNo ratings yet

- Lab - Calculate Ipv4 Subnets: Problem 1Document4 pagesLab - Calculate Ipv4 Subnets: Problem 1L HammeRNo ratings yet

- Load BalancingDocument44 pagesLoad BalancingMac KaNo ratings yet

- Ch11 OBE-Logical Addressing PDFDocument31 pagesCh11 OBE-Logical Addressing PDFRon ThieryNo ratings yet

- IP Addressing & Subnetting: Engr. Carlo Ferdinand C. Calma, CCNADocument15 pagesIP Addressing & Subnetting: Engr. Carlo Ferdinand C. Calma, CCNACarloNo ratings yet

- NmapDocument14 pagesNmapayeshashafeeqNo ratings yet

- Build and Implement a Nova Group Real Estate Enterprise NetworkDocument3 pagesBuild and Implement a Nova Group Real Estate Enterprise NetworkSalim HassenNo ratings yet

- RIP Version 1: © 2006 Cisco Systems, Inc. All Rights Reserved. Cisco Public ITE I Chapter 6Document36 pagesRIP Version 1: © 2006 Cisco Systems, Inc. All Rights Reserved. Cisco Public ITE I Chapter 6fauzi endraNo ratings yet

- Implementing Ipv6 As A Peer-To-Peer Overlay Network: ReviewDocument2 pagesImplementing Ipv6 As A Peer-To-Peer Overlay Network: ReviewMuhammad Waseem AnjumNo ratings yet

- 4.1.4 Packet Tracer - ACL DemonstrationDocument3 pages4.1.4 Packet Tracer - ACL DemonstrationAbid EkaNo ratings yet

- 05.static Routing LabDocument3 pages05.static Routing LabasegunloluNo ratings yet

- 9.1.4.7 Packet Tracer - Subnetting Scenario 2 Instructions IGDocument6 pages9.1.4.7 Packet Tracer - Subnetting Scenario 2 Instructions IGHuseyn AlkaramovNo ratings yet

- Open Shortest Path First (Ospf)Document34 pagesOpen Shortest Path First (Ospf)nvbinh2005No ratings yet

- Midterm Exam 2 (4 Pages, 5 Questions)Document4 pagesMidterm Exam 2 (4 Pages, 5 Questions)Nada FSNo ratings yet

- Lab Manual 1Document58 pagesLab Manual 1Ali HaiDerNo ratings yet

- IP Address and DNSDocument11 pagesIP Address and DNSAtiqah Rais100% (1)

- ASA5506 9-3-1-2 Lab - Configure ASA Basic Settings and Firewall Using CLI - 1-26Document31 pagesASA5506 9-3-1-2 Lab - Configure ASA Basic Settings and Firewall Using CLI - 1-26Hoàng Dũng0% (1)

- Template Script Mikrotik Routing Game Online: Ip Address Lokal 192 - 166 - Gateway Modem Game 192 - 168Document6 pagesTemplate Script Mikrotik Routing Game Online: Ip Address Lokal 192 - 166 - Gateway Modem Game 192 - 168Aris SetyawanNo ratings yet