
This article was downloaded by: [University of Alberta]

On: 7 January 2009

Access details: [subscription number 713587337]

Publisher Informa Healthcare

Informa Ltd. Registered in England and Wales, Registered Number: 1072954. Registered office: Mortimer House, 37-41 Mortimer Street, London W1T 3JH, UK

Encyclopedia of Biopharmaceutical Statistics

Publication details, including instructions for authors and subscription information:

http://www.informaworld.com/smpp/title~content=t713172960

Assay Validation

Timothy L. Schofield


Merck Research Laboratories, West Point, Pennsylvania, U.S.A.

Online Publication Date: 23 April 2003

To cite this Section: Schofield, Timothy L. (2003) 'Assay Validation', Encyclopedia of Biopharmaceutical Statistics, 1:1, 63-71

Full terms and conditions of use: http://www.informaworld.com/terms-and-conditions-of-access.pdf

This article may be used for research, teaching and private study purposes. Any substantial or systematic reproduction, re-distribution, re-selling, loan or sub-licensing, systematic supply or distribution in any form to anyone is expressly forbidden.

The publisher does not give any warranty express or implied or make any representation that the contents will be complete or accurate or up to date. The accuracy of any instructions, formulae and drug doses should be independently verified with primary sources. The publisher shall not be liable for any loss, actions, claims, proceedings, demand or costs or damages whatsoever or howsoever caused arising directly or indirectly in connection with or arising out of the use of this material.

Assay Validation

Timothy L. Schofield

Merck Research Laboratories, West Point, Pennsylvania, U.S.A.

INTRODUCTION

Upon completion of the development of an assay, and prior to implementation, a well-conceived assay validation is carried out to demonstrate that the procedure is fit for use. Regulatory requirements specify the assay characteristics that are subject to validation and suggest experimental strategies to study these properties. These requirements, when united with sound statistical experimental design, furnish an effective and efficient validation plan. That plan should also address the form of statistical processing of the experimental results and thereby ensure reliable and meaningful measures of the properties of the method.

This entry will introduce the validation parameters used to describe the characteristics of an analytical method. Performance metrics associated with those parameters will be defined, with illustrations of their utility in application. Realistic and relevant acceptance criteria are proposed as a basis for evaluating a procedure. An example of a validation protocol will illustrate the design of a comprehensive exploration, in conjunction with the statistical methods used to extract the relevant information.

TERMINOLOGY

Most of the terminology related to assay validation can be found in the entry on Assay Development. Some terms that are unique to this topic are relative standard deviation, spike recovery, and dilution effect.

The relative standard deviation (RSD) is a measure of the proportional variability of an assay and is usually expressed as a percentage of the measured value:

$$\mathrm{RSD} = 100\,\frac{\hat\sigma_{\text{assay}}}{\hat x}\,\%$$

Note that the RSD is the same as the coefficient of variation in statistics. Other conventions for calculating the RSD, which are appropriate for measurements that are lognormally distributed, use $\hat\sigma_{\log x}$, the log standard deviation (natural logarithm), or the fold variability (FV):

$$\mathrm{RSD} = 100\,\hat\sigma_{\log x}\,\% \qquad\text{or}\qquad \mathrm{RSD} = 100\,(\mathrm{FV} - 1)\,\% = 100\left(e^{\hat\sigma_{\log x}} - 1\right)\%$$

Note that the log standard deviation provides an approximation to the RSD; it should be restricted to levels below 20% RSD. An important feature of the RSD is that it is calculated from the predicted measurements for a sample, such as concentrations, and not from an intermediate, such as the instrument readout. These are the same only in the case of linear calibration.
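The three RSD conventions above can be compared with a short Python sketch. It assumes natural logarithms for the log standard deviation, which is consistent with the exponential in the fold-variability form. The replicate values are hypothetical, not data from this entry.

```python
import math
import statistics


def rsd_percent(values):
    """Classical %RSD (coefficient of variation): 100 * SD / mean."""
    return 100.0 * statistics.stdev(values) / statistics.fmean(values)


def rsd_from_log_sd(values):
    """%RSD approximated by 100 * SD of the natural-log values.
    Reasonable only when the RSD is below about 20%."""
    logs = [math.log(v) for v in values]
    return 100.0 * statistics.stdev(logs)


def rsd_from_fold_variability(values):
    """%RSD as 100 * (FV - 1), with FV = exp(SD of natural-log values)."""
    logs = [math.log(v) for v in values]
    fold_variability = math.exp(statistics.stdev(logs))
    return 100.0 * (fold_variability - 1.0)


# Hypothetical replicate concentrations for one sample (illustrative only).
replicates = [98.0, 100.0, 102.0]
print(f"{rsd_percent(replicates):.2f}")  # 2.00
print(f"{rsd_from_log_sd(replicates):.2f}")
print(f"{rsd_from_fold_variability(replicates):.2f}")
```

At this low level of variability, all three conventions agree closely. They diverge as the RSD grows, which is why the log standard deviation is restricted to levels below about 20%.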

Spike recovery is the process of measuring an analyte that has been added in a known amount to the sample matrix, with results usually reported as percent recovery or percent bias. Dilution effect is a measure of the proportional bias measured across a dilution series of a validation sample; it is usually reported as percent bias per dilution increment (most often percent bias per twofold dilution).
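The recovery and bias conventions can be written as two small helpers. This is a minimal sketch that takes percent bias as recovery minus 100. The function names and spiked amounts are hypothetical.

```python
def percent_recovery(measured, spiked):
    """Percent recovery of a known spiked amount of analyte."""
    return 100.0 * measured / spiked


def percent_bias(measured, spiked):
    """Percent bias, taken here as percent recovery minus 100."""
    return percent_recovery(measured, spiked) - 100.0


# Hypothetical spike: 50 units added, 48.5 units measured (illustrative only).
print(percent_recovery(48.5, 50.0))  # 97.0
print(percent_bias(48.5, 50.0))      # -3.0
```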

REGULATORY REQUIREMENTS

The U.S. Pharmacopeia specifies that validation of an analytical method is the process by which it is established, in laboratory studies, that the performance characteristics of the method meet the requirements for the intended analytical applications.[1] Key to this definition is the treatment of a validation study as an experiment, with the goal of that experiment being to demonstrate that the assay is fit for use. As an experiment, a validation study should be suitably designed to meet its goal. Its acceptance criteria should make it possible to judge the assay unsuitable (possibly requiring further development) as well as suitable. This does not suggest that it is the role of the validation study to optimize the assay; optimization should be completed during assay development. In fact, the properties of the assay are probably well understood by the time the validation experiment is undertaken. The validation experiment should serve as a snapshot of the long-term routine performance of the assay, thereby documenting its characteristics so that reliable decisions can be made during drug development and control.

The International Conference on Harmonization (ICH) has devoted two topics of its guidelines to analytical method validation. The first topic, entitled "Guideline on Validation of Analytical Procedures: Definitions and Terminology,"[2] offers a list of validation parameters that should be considered when implementing a validation experiment and guidance toward the selection of those parameters. The second topic, entitled "Validation of Analytical Procedures: Methodology,"[3] provides direction on the design and analysis of results from a validation experiment.

Encyclopedia of Biopharmaceutical Statistics. DOI: 10.1081/E-EBS-120007388. Copyright © 2003 by Marcel Dekker, Inc. All rights reserved.

ASSAY VALIDATION PARAMETERS AND VALIDATION DESIGN

It is convenient for the purposes of planning a validation experiment to dichotomize assay validation parameters into two categories: parameters related to the accuracy of the analytical method and parameters related to variability. In this way, the analyst will choose the test sample set that will be carried forward into the validation in accord with the accuracy parameters, and devise a replication plan for the validation that supports estimation of the variability parameters.

The validation parameters related to the accuracy of an analytical method are accuracy, linearity, and specificity. The accuracy of an analytical method expresses the closeness of agreement between the value found and the value that is accepted either as a conventional true value or as an accepted reference value. Accuracy is usually established by spiking known quantities of analyte (see the entry Assay Development for definitions of terms) into the sample matrix and demonstrating that these can be completely recovered. Conventional practice is to spike using five levels of the analyte: 50%, 75%, 100%, 125%, and 150% of the declared content of analyte in the drug (note that this is more stringent than the ICH, which requires a minimum of nine determinations over a minimum of three concentration levels). The experimenter is not restricted to these levels but should plan to spike through a region that embraces the range of expected measurements from samples that will be tested during development and manufacture. Accuracy can also be determined relative to a referee method; in the case of complex mixtures, such as combination vaccines, accuracy can be judged relative to a monovalent control.

The linearity of an analytical procedure is its ability (within a given range) to obtain test results that are directly proportional to the concentration (amount) of analyte in the sample. This parameter is often confused with the graphical linearity of the standard curve; in fact, the guidelines encourage the experimenter to evaluate linearity by visual inspection of a plot of signals as a function of concentration or content. Analytical linearity, however, should not be confused with an abstract property such as the straightness of the standard curve. It should instead be based upon the attribute that graded concentrations of the analyte yield measurements in proper proportion. Thus, for example, if a sample is tested in twofold dilutions, the measured concentrations in those samples should form a twofold series. In this way, linearity can be established from the spiking experiment outlined earlier. A dilution series is usually employed when linearity cannot be assessed through spiking (i.e., when the purified analyte does not exist, as in vaccines).

Specificity is the ability to assess unequivocally the analyte in the presence of components that may be present. Thus specificity speaks to the variety of sample matrices containing the analyte, including process intermediates and stability samples. The ICH guidelines provide a comprehensive description of the means to establish specificity in identity tests and in analytical methods for impurities and content. When analyte-free samples are available, specificity can be established through testing of the analyte-free matrix.

The assay validation parameters related to variability are precision, repeatability, ruggedness, limit of detection, and limit of quantitation. The precision of an assay expresses the closeness of agreement (degree of scatter) between a series of measurements obtained from multiple sampling of the same homogeneous sample under the prescribed conditions. Precision is frequently called interrun variability, where a run of an assay represents the independent preparation of assay reagents, test samples, and a standard curve. The repeatability of an assay expresses the precision under the same operating conditions over a short interval of time, and is frequently called intrarun variability. Repeatability and precision can be studied simultaneously, using the levels of a sample required to establish accuracy and linearity, in a consolidated validation study design such as that depicted in Table 1. Here precision is associated with the multiple experimental runs, while repeatability is associated with the run-by-level interaction. In many cases, the experimenter may wish to test true within-run replicates rather than rely on this subtle statistical artifact.

The runs in this design can be strategically allocated

to ruggedness parameters, such as laboratories, opera-

tors, and reagent lots, using a combination of nested and

factorial experimental design strategies. Thus operators

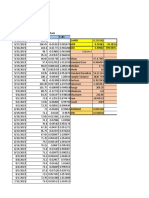

Table 1 Strategic validation design employing five levels of

the analyte tested in k independent runs of the assay

Level

Run 1 2 3 4 5

1 y

11

y

12

y

13

y

14

y

15

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

n y

n1

y

n2

y

n3

y

n4

y

n5

64 Assay Validation

D

o

w

n

l

o

a

d

e

d

B

y

:

[

U

n

i

v

e

r

s

i

t

y

o

f

A

l

b

e

r

t

a

]

A

t

:

0

6

:

3

5

7

J

a

n

u

a

r

y

2

0

0

9

might be nested within laboratory, while reagent lot can

be crossed with operator.

The robustness of an analytical method is a measure of its capacity to remain unaffected by small but deliberate variations in method parameters, and it might be more suitably established during the development of the assay (see the entry on Assay Development). Note that while ruggedness parameters represent uncontrollable factors affecting the analytical method, robustness parameters can be varied and should be controlled when they have been observed to affect assay measurements.

The limit of detection (LOD) and limit of quantitation (LOQ) of an assay can be obtained from replication of the standard curve during the implementation of the consolidated validation experiment. The LOD of an analytical procedure is the lowest amount of analyte in a sample that can be detected but not necessarily quantitated as an exact value. The LOQ is the lowest amount of analyte in a sample that can be quantitatively determined with suitable precision and accuracy. The limit of quantitation need not be restricted to a lower bound on the assay; it might be extended to an upper bound of a standard curve, where the fit becomes flat (for example, when a four-parameter logistic regression equation is used to fit the standard curve).

Finally, the region over which acceptable accuracy and acceptable precision have both been demonstrated is called the range of the assay.

CHOICE OF VALIDATION PARAMETERS

The choice of parameters that will be explored during an assay validation is determined by the intended use as well as by the practical nature of the analytical method. For example, a biochemical assay using a standard curve to establish drug content might require the exploration of all of these parameters if it is to be used to determine low as well as high levels of the analyte. Examples include an assay used to determine drug levels in clinical samples, or one used to measure the content of an unstable analyte. If, however, the assay is used to determine content in a stable preparation, the LOD and LOQ need not be established. On the other hand, an assay for an impurity requires adequate sensitivity to detect and/or quantify the analyte; thus the LOD and LOQ become the primary focus of the validation of an impurity assay.

Many of these assay validation parameters have limited meaning in the context of biological assays and various other potency assays. There is no means to explore the accuracy and linearity of assays in animals or tissue culture, where the scale of the assay is defined by the assay itself. Validation experiments for this sort of analytical method are therefore usually restricted to a study of the specificity and ruggedness of the procedure. As discussed previously, the accuracy of a potency assay may be limited to establishing linearity with dilution when the purified analyte is unavailable to conduct a true spike-recovery experiment.

VALIDATION METRICS AND ACCEPTANCE CRITERIA

As previously defined, the goal of the validation experiment is to establish that the analytical method is fit for use. For an impurity assay, for example, the goal should be to show that the sensitivity of the analytical procedure is adequate to detect a meaningful level of the impurity. Sensitivity is measured by the LOD for a limit assay and by the LOQ for a quantitative assay. Assays for content and potency are usually used to establish that a drug conforms to specifications that have been determined either through clinical trials with the drug or from process/product performance. It is important to point out that the process capability limits should include three contributions:

- the manufacturing distribution of the product characteristic under study;
- the change in that characteristic due to instability under recommended storage conditions;
- the effects on the measurement of the characteristic due to the performance attributes of the assay.

In the end, the analytical method must be capable of reliably discriminating satisfactory from unsatisfactory product against these limits. The portion of the process range that results from measurement, including measurement bias as well as measurement variability, serves as the foundation for setting acceptance criteria for assay validation parameters.

Validation parameters related to accuracy are rated on the basis of recovery or bias (bias = recovery − 100, usually expressed as a percentage). Validation parameters related to random variability are appraised on the basis of variability (usually expressed as % RSD). These can be combined with the process variability to establish the process capability of the measured characteristic:[4]

$$C_P = \frac{\text{Specification range}}{6 \times \text{Product variation}} = \frac{\text{Specification range}}{6\sqrt{\sigma^2_{\text{product}} + \sigma^2_{\text{assay}} + \text{Bias}^2}}$$

Process capability, in turn, is related to the percentage of measurements that are likely to fall outside of the specifications. Thus acceptance criteria on the amount of bias and analytical variability can be established based upon knowledge of the product variability and the desired process capability.
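The capability calculation can be sketched in Python as below. The tail-fraction helper is an added assumption, not part of this entry. It supposes normally distributed measurements centred in the specification range, in which case the fraction outside is 2Φ(−3·C_P).

The inverse helper shows how a target C_P, together with the product variability and bias, bounds the allowable assay standard deviation. All numbers are hypothetical.

```python
import math
from statistics import NormalDist


def process_capability(spec_range, sd_product, sd_assay, bias):
    """C_P = spec range / (6 * sqrt(sd_product**2 + sd_assay**2 + bias**2))."""
    return spec_range / (6.0 * math.sqrt(sd_product**2 + sd_assay**2 + bias**2))


def fraction_outside_if_centered(cp):
    """Assumes normal results centred in the specs: 2 * Phi(-3 * C_P)."""
    return 2.0 * NormalDist().cdf(-3.0 * cp)


def max_assay_sd(spec_range, sd_product, bias, target_cp):
    """Largest assay SD that still meets target_cp; None if the product
    variability and bias alone already exceed the allowance."""
    allowed_total = spec_range / (6.0 * target_cp)
    remaining = allowed_total**2 - sd_product**2 - bias**2
    return math.sqrt(remaining) if remaining >= 0 else None


# Hypothetical: specs 90-110 (range 20), product SD 2, assay SD 2, bias 1.
cp = process_capability(20.0, 2.0, 2.0, 1.0)
print(f"C_P = {cp:.3f}; expected fraction outside = {fraction_outside_if_centered(cp):.5f}")
print(f"max assay SD for C_P = 1.33: {max_assay_sd(20.0, 2.0, 1.0, 1.33):.3f}")
```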

When the limits have not been established for a particular product characteristic, such as during the early development of a drug or biologic, acceptance criteria for an assay attribute might be specified on two bases: a typical expectation for the analytical method, and the nature of the measurement in the particular sample. For example, high-performance liquid chromatography for the active compound in the final formulation of a drug might typically be capable of yielding measurements with 10% RSD or less. An immunoassay used to measure antigen content in a vaccine might only be capable of achieving 20% RSD. Measurement of a residual or an impurity by either method, on the other hand, may only achieve 50% RSD, owing to the variable nature of measurement at low analyte concentrations.

VALIDATION DATA ANALYSIS

Analysis of Parameters Related to Accuracy

Analytical accuracy and linearity can be parameterized as a simple linear model, assuming either absolute or relative bias. In one such model, let μ represent the known analyte content and x the measured amount. Accuracy can then be expressed as x = μ at a single concentration, or as x = α + βμ, with α = 0 and β = 1, across a series of concentrations. This linear equation can be rewritten as

$$x - \mu = \alpha + (\beta - 1)\,\mu$$

In this parameterization, α is related to the accuracy of the analytical method. It represents the constant bias in an assay measurement and is usually reported in the units of measurement of the assay. The term (β − 1) is related to the linearity of the analytical method. It represents the proportional bias in an assay measurement and is usually reported in units of measurement of the assay per unit increase in that measurement (e.g., 0.02 μg per microgram increase in content). Data from a spiking experiment can be used to estimate α and β − 1. These estimates can then be compared with the acceptance criteria established for these parameters, together with statistical confidence limits if the validation experiment has been designed to establish statistical conformance.

To illustrate, consider the example in Table 2. A validation study has been performed in which samples have been prepared with five levels of an analyte ([C] in mg), and their content has been determined in six runs of an assay. These data are depicted graphically, along with the results from their analysis, in Fig. 1. A linear fit to the data yields the estimates below of intercept and slope, with confidence limits in parentheses. The data have been centered, so the intercept represents the bias at the center of the concentration range.

$$\hat\alpha_0 = -0.04\ (-0.08,\ 0.01), \qquad \hat\beta = 0.81\ (0.70,\ 0.85)$$

The slope can be used to estimate the bias per unit increase in drug concentration:

$$\hat\beta - 1 = -0.19\ (-0.30,\ -0.15)$$

This proportional bias is statistically significant, since the confidence interval excludes 0. It is more desirable, however, to react to the magnitude of the bias, and to judge that there is an unacceptable nonlinearity only when the bias exceeds a prespecified acceptance limit. Here the estimate indicates a 0.2-mg decrease per unit increase in concentration (as much as 0.3 mg per unit increase in concentration, based on the confidence interval). Suppose the concentration of the drug typically ranges from 4 to 6 mg (i.e., a 2-mg range). This would predict a bias of 0.4 mg as a result of nonlinearity (0.6 mg at the limit of the confidence interval). This may or may not be of consequence in testing product. For example, if the specification limits on the analyte are 5 ± 1 mg/dose, much of this range will be consumed by the proportional bias, potentially resulting in an undesirable failure rate in the measurement of that analyte.

Table 2 Example of results from a validation experiment in which five levels of an analyte were tested in six runs of the assay

| [C] (mg) | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Run 6 | Avg. |
|---|---|---|---|---|---|---|---|
| 3 | 3.3 | 3.4 | 3.2 | 3.1 | 3.4 | 3.3 | 3.3 |
| 4 | 4.2 | 4.2 | 4.2 | 4.4 | 4.0 | 4.3 | 4.2 |
| 5 | 5.0 | 4.9 | 4.9 | 5.0 | 4.8 | 4.9 | 4.9 |
| 6 | 5.8 | 5.8 | 5.6 | 5.9 | 5.9 | 5.7 | 5.8 |
| 7 | 6.4 | 6.7 | 6.7 | 6.8 | 6.6 | 6.4 | 6.6 |

Fig. 1 Validation results from an assay demonstrating proportional bias, with low-concentration samples yielding high measurements and high-concentration samples yielding low measurements.
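The accuracy and linearity analysis of Table 2 can be sketched as a pooled ordinary least-squares fit of measured on known content, with the known content centred. The model is an assumption, because this entry does not say how run-to-run variation was handled. The printed interval, which uses an assumed t quantile of 2.048 for 28 df, therefore need not match the published limits. On these data the sketch gives a centred intercept of −0.04 and a slope of 0.82, close to the reported 0.81.

```python
import math

# Table 2: known content [C] (mg) -> measured content in six runs.
TABLE2 = {
    3: [3.3, 3.4, 3.2, 3.1, 3.4, 3.3],
    4: [4.2, 4.2, 4.2, 4.4, 4.0, 4.3],
    5: [5.0, 4.9, 4.9, 5.0, 4.8, 4.9],
    6: [5.8, 5.8, 5.6, 5.9, 5.9, 5.7],
    7: [6.4, 6.7, 6.7, 6.8, 6.6, 6.4],
}


def accuracy_fit(table):
    """Pooled OLS of measured x on known mu (mu centred).

    Returns (centred intercept, slope, SE of slope, residual df).
    The centred intercept is the bias (x - mu) at the mean known content.
    """
    mus = [mu for mu, xs in table.items() for _ in xs]
    xs = [x for row in table.values() for x in row]
    n = len(xs)
    mu_bar, x_bar = sum(mus) / n, sum(xs) / n
    sxx = sum((m - mu_bar) ** 2 for m in mus)
    sxy = sum((m - mu_bar) * (x - x_bar) for m, x in zip(mus, xs))
    slope = sxy / sxx
    intercept_c = x_bar - mu_bar
    resid = [x - (x_bar + slope * (m - mu_bar)) for m, x in zip(mus, xs)]
    df = n - 2
    s2 = sum(r * r for r in resid) / df
    return intercept_c, slope, math.sqrt(s2 / sxx), df


a0, b, se_b, df = accuracy_fit(TABLE2)
T_CRIT = 2.048  # assumed 97.5th t percentile for 28 df (from tables)
lo, hi = b - 1 - T_CRIT * se_b, b - 1 + T_CRIT * se_b
print(f"centred intercept {a0:.2f}, slope {b:.2f}")
print(f"proportional bias {b - 1:.2f} ({lo:.2f}, {hi:.2f}) mg per mg")
# Predicted bias from nonlinearity across a 2-mg working range (e.g., 4 to 6 mg).
print(f"bias across 2 mg: {2 * (b - 1):.2f} mg")
```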

When the purified analyte is unavailable for spiking, and the levels of the analyte in the validation have been attained through dilution, the data generated from that series can alternatively be evaluated using the model x = αμ^β (note that μ can represent either the expected concentration upon dilution or the actual dilution). Taking logs, the data from the dilution series are used to fit the linear model

$$\log x = \log\alpha + \beta\,\log\mu$$

The intercept of this model has no practical meaning. The proportional bias (sometimes called dilution effect) can be estimated as $2^{\hat\beta - 1} - 1$ if μ is in units of concentration, and as $2^{\hat\beta + 1} - 1$ if μ is in units of dilution. Note that the units of dilution effect are percent bias per dilution increment; the dilution increment used here is twofold.

To illustrate, consider the example in Table 3. A dilution series of a sample is performed in each of the five runs of an assay. These data are depicted graphically, along with the results from their analysis, in Fig. 2. A linear fit of log titer to log dilution yields an estimated slope of $\hat\beta = -1.01\ (-1.04,\ -0.98)$. The corresponding dilution effect is $2^{-1.01+1} - 1 = -0.007$ (i.e., a 0.7% decrease per twofold dilution).
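Fitting the log-log model to Table 3 can be sketched as below. The sketch pools all five runs in one ordinary least-squares fit of log response on log dilution, which is an assumption because this entry does not state the exact model. The pooled slope comes out near −1.02, inside the reported interval (−1.04, −0.98). The second printed line applies the dilution-effect formula to the published slope of −1.01.

```python
import math

# Table 3: dilution -> responses in runs 1-5.
TABLE3 = {
    1: [75, 90, 93, 79, 72],
    2: [43, 45, 46, 35, 40],
    4: [20, 23, 22, 18, 21],
    8: [11, 10, 12, 10, 10],
    16: [4, 5, 6, 4, 5],
}


def loglog_slope(table):
    """OLS slope of log(response) on log(dilution), pooling all runs."""
    pts = [(math.log(d), math.log(y)) for d, ys in table.items() for y in ys]
    n = len(pts)
    u_bar = sum(u for u, _ in pts) / n
    v_bar = sum(v for _, v in pts) / n
    suu = sum((u - u_bar) ** 2 for u, _ in pts)
    suv = sum((u - u_bar) * (v - v_bar) for u, v in pts)
    return suv / suu


def dilution_effect(slope, units="dilution"):
    """Proportional bias per twofold increment of the x variable.

    units="dilution":      2**(slope + 1) - 1  (ideal slope is -1)
    units="concentration": 2**(slope - 1) - 1  (ideal slope is +1)
    """
    if units == "dilution":
        return 2.0 ** (slope + 1.0) - 1.0
    if units == "concentration":
        return 2.0 ** (slope - 1.0) - 1.0
    raise ValueError("units must be 'dilution' or 'concentration'")


b = loglog_slope(TABLE3)
print(f"pooled slope {b:.3f}, dilution effect {100 * dilution_effect(b):.1f}% per twofold dilution")
print(f"published slope -1.01 -> {100 * dilution_effect(-1.01):.1f}%")  # -0.7%
```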

The dilution effect can also be calculated as a function of the estimated slopes for the standard (slope as a function of dilution rather than concentration) and the test sample:

$$\text{Dilution effect} = 2^{\,1 - \hat\beta_T/\hat\beta_S} - 1$$

with the corresponding standard error a function of the standard error of a ratio:

$$\mathrm{SE}\!\left(\frac{\hat\beta_T}{\hat\beta_S}\right) = \sqrt{\frac{1}{\hat\beta_S^{2}}\left[\mathrm{VAR}(\hat\beta_T) + \left(\frac{\hat\beta_T}{\hat\beta_S}\right)^{2}\mathrm{VAR}(\hat\beta_S)\right]}$$

This has an advantage over the earlier calculation: it gives a more reliable estimate of the underlying variability in the measurements, because the data for the standard also contribute to the estimate of variability.
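A sketch of the ratio-of-slopes calculation follows. It treats the test and standard slopes as independent, which the delta-method formula above assumes, and uses a normal quantile of 1.96 for the interval. The slopes and variances are hypothetical. Because the dilution effect decreases as the ratio increases, the interval limits on the ratio swap places when transformed.

```python
import math


def dilution_effect_from_ratio(b_test, b_std):
    """Dilution effect 2**(1 - b_T/b_S) - 1 from test and standard slopes,
    both fitted against log dilution."""
    return 2.0 ** (1.0 - b_test / b_std) - 1.0


def se_slope_ratio(b_test, var_test, b_std, var_std):
    """Delta-method SE of b_T/b_S for independent estimates:
    sqrt((var_T + (b_T/b_S)**2 * var_S) / b_S**2)."""
    ratio = b_test / b_std
    return math.sqrt((var_test + ratio**2 * var_std) / b_std**2)


# Hypothetical slopes and sampling variances (not from this entry).
b_t, v_t = -1.03, 0.0004
b_s, v_s = -1.00, 0.0001
ratio = b_t / b_s
se = se_slope_ratio(b_t, v_t, b_s, v_s)
z = 1.96  # normal approximation for a 95% interval (assumption)
low, high = ratio - z * se, ratio + z * se
print(f"dilution effect {100 * dilution_effect_from_ratio(b_t, b_s):.1f}% "
      f"({100 * (2 ** (1 - high) - 1):.1f}%, {100 * (2 ** (1 - low) - 1):.1f}%)")
```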

A special case of accuracy comes from a comparison of an experimental analytical method with a validated referee method. Paired measurements in the two assays can be made on a panel of samples, and these can be used to establish the linearity and the accuracy of the experimental method relative to the referee method. The paired measurements are a sample from a multivariate population, and the linearity of the experimental method relative to the referee method can be estimated using principal component analysis.[5] A concordance slope can be estimated from the elements $a_{11}$ and $a_{21}$ of the first characteristic vector of the sample covariance matrix:

$$\hat\beta_C = \frac{a_{21}}{a_{11}}$$

The standard error of the concordance slope is obtained from these along with the other elements of the characteristic value evaluation. Here $a_{i1}$ is the ith element of the first characteristic vector, $a_{i2}$ is the ith element of the second, $\lambda_i$ is the corresponding characteristic root, and n is the number of samples in the panel:

$$\mathrm{SE}(\hat\beta_C) = \sqrt{\left[\frac{V(a_{21})}{a_{21}^2} + \frac{V(a_{11})}{a_{11}^2} - \frac{2\,\mathrm{cov}(a_{21}, a_{11})}{a_{21}\,a_{11}}\right]\hat\beta_C^2}$$

where

$$V(a_{i1}) = \frac{\lambda_1\lambda_2}{n(\lambda_2 - \lambda_1)^2}\,a_{i2}^2 \qquad\text{and}\qquad \mathrm{cov}(a_{21}, a_{11}) = \frac{\lambda_1\lambda_2}{n(\lambda_2 - \lambda_1)^2}\,a_{12}\,a_{22}$$

Table 3 Example of results from a validation experiment in which five twofold dilutions of an analyte were tested in five runs of the assay

| Dilution | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Titer |
|---|---|---|---|---|---|---|
| 1 | 75 | 90 | 93 | 79 | 72 | 81 |
| 2 | 43 | 45 | 46 | 35 | 40 | 83 |
| 4 | 20 | 23 | 22 | 18 | 21 | 83 |
| 8 | 11 | 10 | 12 | 10 | 10 | 85 |
| 16 | 4 | 5 | 6 | 4 | 5 | 78 |

Fig. 2 Results from a validation series demonstrating satisfactory linearity, i.e., approximately a twofold decrease in response with twofold dilution.


The concordance slope can be used, along with the standard error of that estimate, to measure the discordance of the experimental analytical method relative to the referee method, together with its corresponding confidence interval:

$$\text{Discordance} = 2^{\hat\beta_C - 1} - 1$$

This is similar to the dilution effect discussed earlier and represents the percentage difference in reported potency per twofold increase in result as measured in the referee method.
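The concordance-slope calculation for two methods can be sketched using the closed-form eigen decomposition of a 2 × 2 covariance matrix. The sketch rests on three assumptions:

- The paired results are supplied already log-transformed, since discordance is expressed per twofold increase.
- The covariance matrix uses the n − 1 divisor, while the standard error uses n as in the formula above.
- The second characteristic vector is taken as the orthogonal complement of the first.

The data are hypothetical.

```python
import math
import statistics


def concordance_slope(referee, experimental):
    """Concordance slope a21/a11 from the first characteristic vector of the
    2x2 sample covariance matrix, with its large-sample standard error."""
    n = len(referee)
    mx, my = statistics.fmean(referee), statistics.fmean(experimental)
    sxx = sum((x - mx) ** 2 for x in referee) / (n - 1)
    syy = sum((y - my) ** 2 for y in experimental) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in zip(referee, experimental)) / (n - 1)
    # Characteristic roots of [[sxx, sxy], [sxy, syy]] (closed form for 2x2).
    half_trace = (sxx + syy) / 2.0
    disc = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy**2)
    l1, l2 = half_trace + disc, half_trace - disc
    # First vector (a11, a21): either row of (S - l1*I) yields one; use the
    # better-conditioned form, then normalise to unit length.
    if abs(l1 - sxx) >= abs(l1 - syy):
        v = (sxy, l1 - sxx)
    else:
        v = (l1 - syy, sxy)
    norm = math.hypot(*v)
    a11, a21 = v[0] / norm, v[1] / norm
    a12, a22 = -a21, a11  # second vector, orthogonal to the first
    slope = a21 / a11
    k = l1 * l2 / (n * (l2 - l1) ** 2)
    var_a11, var_a21 = k * a12**2, k * a22**2
    cov_a21_a11 = k * a12 * a22
    se = math.sqrt(
        (var_a21 / a21**2 + var_a11 / a11**2 - 2.0 * cov_a21_a11 / (a21 * a11)) * slope**2
    )
    return slope, se


def discordance(slope):
    """Fractional discordance per twofold increase in the referee result."""
    return 2.0 ** (slope - 1.0) - 1.0


# Hypothetical paired results, analysed on the log scale (assumption).
referee_vals = [10.0, 20.0, 40.0, 80.0, 160.0]
experimental_vals = [11.0, 21.0, 45.0, 84.0, 175.0]
b_c, se_c = concordance_slope([math.log(v) for v in referee_vals],
                              [math.log(v) for v in experimental_vals])
print(f"concordance slope {b_c:.3f} (SE {se_c:.3f}); "
      f"discordance {100 * discordance(b_c):.1f}% per twofold increase")
```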

When the two assays are scaled alike, the accuracy of the experimental method relative to the referee method can be assessed through a simple paired-variate analysis. When the percent difference between the two methods is expected to be constant across the range of measurements, the data are analyzed on a log scale. An estimate of the simple paired difference in the logs can be used, in conjunction with the standard error of the paired difference, to estimate the percentage difference in measurements obtained from the experimental procedure relative to the referee method:[6]

$$\bar d \pm t_{n-1}\,\frac{s_d}{\sqrt{n}}$$

$$\text{Percent difference} = 100\left(e^{\bar d} - 1\right)\%$$

Note that, as usual, the significance of the percentage difference should be judged primarily on the basis of practical considerations rather than statistical significance.
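The paired log-difference analysis reduces to a few lines of Python. The Python standard library has no t distribution, so in this sketch the caller supplies the t quantile with n − 1 degrees of freedom (2.776 for five pairs at 95%). The paired values are hypothetical.

```python
import math
import statistics


def percent_difference(experimental, referee, t_crit):
    """Percent difference (experimental vs referee) from paired log differences.

    t_crit: two-sided t quantile with n - 1 df, supplied by the caller.
    Returns (estimate, lower, upper), each as 100 * (exp(value) - 1).
    """
    d = [math.log(e) - math.log(r) for e, r in zip(experimental, referee)]
    d_bar = statistics.fmean(d)
    half_width = t_crit * statistics.stdev(d) / math.sqrt(len(d))

    def to_percent(v):
        return 100.0 * (math.exp(v) - 1.0)

    return to_percent(d_bar), to_percent(d_bar - half_width), to_percent(d_bar + half_width)


# Hypothetical panel of five samples; 2.776 is the 97.5th t percentile for 4 df.
exp_results = [102.0, 95.0, 110.0, 88.0, 101.0]
ref_results = [100.0, 97.0, 104.0, 90.0, 96.0]
est, low, high = percent_difference(exp_results, ref_results, t_crit=2.776)
print(f"{est:.1f}% ({low:.1f}%, {high:.1f}%)")
```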

The specificity of an analytical procedure can be assessed by evaluating the results obtained from a placebo sample (i.e., a sample containing all constituents except the analyte of interest) relative to background. A statistical evaluation might take one of two forms. The first compares instrument measurements obtained for this placebo with background measurements (i.e., through background-corrected placebo measurements). The second uses measurements made on the placebo alone. In either case, an increase over the detection level of the assay would indicate that some constituent of the sample, in addition to the analyte, is contributing to the measurement in the sample. Specificity should therefore be demonstrated by showing that the upper bound of a confidence interval falls below some prespecified level in the assay.
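One way to implement the specificity check is a one-sided upper confidence bound on the mean background-corrected placebo signal, compared with a prespecified limit. This entry does not fix the form of the bound. The following are therefore assumptions: the t-based bound, the supplied quantile (2.015 for six values, one-sided 95%), and the data and limit below.

```python
import math
import statistics


def placebo_upper_bound(placebo_minus_background, t_crit):
    """One-sided upper confidence bound on the mean background-corrected
    placebo signal: mean + t * s / sqrt(n)."""
    n = len(placebo_minus_background)
    mean = statistics.fmean(placebo_minus_background)
    return mean + t_crit * statistics.stdev(placebo_minus_background) / math.sqrt(n)


def specificity_ok(placebo_minus_background, t_crit, limit):
    """True when the upper bound falls below the prespecified limit."""
    return placebo_upper_bound(placebo_minus_background, t_crit) < limit


# Hypothetical background-corrected placebo readings and limit.
readings = [0.01, -0.02, 0.0, 0.015, -0.005, 0.0]
print(placebo_upper_bound(readings, 2.015), specificity_ok(readings, 2.015, limit=0.05))
```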

Analysis of Parameters Related to Variability

Estimates of repeatability (intrarun, or within-run, variability) and precision (interrun, or between-run, variability) can be established by performing a variance component analysis on the results obtained from the data generated to assess accuracy and linearity.[7] Other sources of variability (e.g., laboratories, operators, and reagent lots) can be incorporated into the pattern of runs performed during the validation to estimate the effects of these sources of variability. The expected mean squares are shown in Table 4. From this, the within-run component is estimated as $\hat\sigma^2_W$ = mean square error (MSE), and the between-run component as $\hat\sigma^2_B$ = [mean square for runs (MSR) − MSE]/5.

Note that the MSE is a composite of pure error and the interaction of run and level. Pure error could be separated from the interaction by performing replicates at each level, but the within-run variability would still be reported as the composite of pure error and the level-by-run interaction.

The total variability associated with testing a sample in the assay can be calculated from these variance component estimates as:

$$\hat\sigma^2_{\text{Total}} = \frac{\hat\sigma^2_B}{r} + \frac{\hat\sigma^2_W}{nr}$$

Note that this is the variance of $\bar y$, where r represents the number of independent runs performed on the sample and n represents the number of replicates within a run.
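The variance component calculation can be sketched with a two-way analysis of variance for the design in Table 4 (five levels crossed with six runs, one result per cell). Here it is applied to the Table 2 results on their reported scale. Two choices are assumptions: analysing the raw values rather than their logs, and truncating a negative between-run estimate at zero. For Table 2 the run mean square (0.012) is smaller than the error mean square (about 0.0167), so the between-run component is truncated.

```python
# Table 2 results arranged as rows = levels ([C] = 3..7 mg), columns = runs 1..6.
TABLE2_ROWS = [
    [3.3, 3.4, 3.2, 3.1, 3.4, 3.3],
    [4.2, 4.2, 4.2, 4.4, 4.0, 4.3],
    [5.0, 4.9, 4.9, 5.0, 4.8, 4.9],
    [5.8, 5.8, 5.6, 5.9, 5.9, 5.7],
    [6.4, 6.7, 6.7, 6.8, 6.6, 6.4],
]


def variance_components(rows):
    """Level x run ANOVA with one result per cell.

    Returns (MSR, MSE, sigma2_within, sigma2_between), where
    sigma2_within = MSE and sigma2_between = (MSR - MSE) / J, truncated at 0,
    with J the number of levels tested in each run.
    """
    J, k = len(rows), len(rows[0])
    grand = sum(sum(row) for row in rows) / (J * k)
    run_means = [sum(row[j] for row in rows) / J for j in range(k)]
    level_means = [sum(row) / k for row in rows]
    ss_run = J * sum((m - grand) ** 2 for m in run_means)
    ss_level = k * sum((m - grand) ** 2 for m in level_means)
    ss_total = sum((y - grand) ** 2 for row in rows for y in row)
    ss_error = ss_total - ss_run - ss_level  # run-by-level interaction + pure error
    msr = ss_run / (k - 1)
    mse = ss_error / ((J - 1) * (k - 1))
    return msr, mse, mse, max((msr - mse) / J, 0.0)


def total_variance(sigma2_between, sigma2_within, r, n):
    """Variance of a reportable value from r runs with n replicates per run."""
    return sigma2_between / r + sigma2_within / (n * r)


msr, mse, s2_w, s2_b = variance_components(TABLE2_ROWS)
print(f"MSR {msr:.4f}, MSE {mse:.4f}, within {s2_w:.4f}, between {s2_b:.4f}")
print(f"total variance, r=1, n=1: {total_variance(s2_b, s2_w, 1, 1):.4f}")
```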

A confidence interval can be established on the total variability as follows:[8]

$$\hat\sigma^2_{\text{Total}} = \frac{\hat\sigma^2_B}{r} + \frac{\hat\sigma^2_W}{nr} = \left(\frac{1}{Jr}\right)\mathrm{MSR} + \left(\frac{J - n}{Jnr}\right)\mathrm{MSE} = c_1\,\mathrm{MSR} + c_2\,\mathrm{MSE} = \sum_q c_q S_q$$

where J represents the number of replicates of a sample tested in each run of the assay validation experiment (here J = 5, the number of levels of the sample). The 1 − 2α confidence interval on $\sigma^2_{\text{Total}}$ becomes:

$$\left(\hat\sigma^2_{\text{Total}} - \sqrt{\sum_q G_q^2 c_q^2 S_q^2},\ \ \hat\sigma^2_{\text{Total}} + \sqrt{\sum_q H_q^2 c_q^2 S_q^2}\right)$$

Table 4 Analysis of variance table showing expected mean squares as functions of intrarun and interrun components of variability

| Effect | df | MS | Expected mean square | F |
|---|---|---|---|---|
| Level | 4 | Mean square for levels (MSL) | $\sigma^2_W + Q_L$ | |
| Run | 5 | MSR | $\sigma^2_W + 5\sigma^2_B$ | MSR/MSE |
| Error | 20 | MSE | $\sigma^2_W$ | |


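where

$$G_q = 1 - \frac{1}{F_{\alpha;\,df_q,\,\infty}}, \qquad H_q = \frac{1}{F_{1-\alpha;\,df_q,\,\infty}} - 1$$

and $F_{\alpha;\,df_q,\,\infty}$ and $F_{1-\alpha;\,df_q,\,\infty}$ represent the percentiles of the F-distribution.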
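The modified large-sample interval can be sketched as below. The Python standard library has no chi-square or F quantiles, so the sketch uses the Wilson-Hilferty approximation and the identity F_{p; df, ∞} = χ²_p(df)/df. Both are substitutions for exact tables, such as those from scipy.stats.chi2.ppf.

Following the usual convention for this interval, F_{α; df, ∞} is taken as the upper-α point. The example inputs are the Table 2 mean squares computed from the tabled values (MSR = 0.012 on 5 df, MSE = 1/60 on 20 df), with r = n = 1.

```python
import math
from statistics import NormalDist


def chi2_quantile_wh(p, df):
    """Wilson-Hilferty approximation to the p-quantile of chi-square(df)
    (an approximation; use exact quantiles in practice)."""
    z = NormalDist().inv_cdf(p)
    h = 2.0 / (9.0 * df)
    return df * (1.0 - h + z * math.sqrt(h)) ** 3


def mls_interval(msr, mse, df_r, df_e, J, r, n, alpha=0.05):
    """1 - 2*alpha modified large-sample interval for
    sigma2_total = MSR/(J r) + (J - n) MSE/(J n r). Requires n <= J so that
    both coefficients c_q are non-negative."""
    if n > J:
        raise ValueError("this form of the interval assumes n <= J")
    c = [1.0 / (J * r), (J - n) / (J * n * r)]
    s = [msr, mse]
    dfs = [df_r, df_e]
    est = sum(cq * sq for cq, sq in zip(c, s))
    lower_sq = upper_sq = 0.0
    for cq, sq, df in zip(c, s, dfs):
        f_upper = chi2_quantile_wh(1.0 - alpha, df) / df  # F_{alpha; df, inf}
        f_lower = chi2_quantile_wh(alpha, df) / df        # F_{1-alpha; df, inf}
        g = 1.0 - 1.0 / f_upper
        h = 1.0 / f_lower - 1.0
        lower_sq += (g * cq * sq) ** 2
        upper_sq += (h * cq * sq) ** 2
    return est, est - math.sqrt(lower_sq), est + math.sqrt(upper_sq)


est, lo, hi = mls_interval(0.012, 1 / 60, df_r=5, df_e=20, J=5, r=1, n=1)
print(f"total variance {est:.5f} (90% interval {lo:.5f}, {hi:.5f})")
print(f"as SD: {math.sqrt(est):.3f} ({math.sqrt(lo):.3f}, {math.sqrt(hi):.3f})")
```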

The LOD and the LOQ are calculated using the standard deviation of the responses ($\hat\sigma$) and the slope of the calibration curve ($\hat\beta$):

- LOD = $3.3\hat\sigma/\hat\beta$. The 3.3 is derived from the 95th percentile of the standard normal distribution, 1.64: 2 × 1.64 ≈ 3.3, which limits both the false-positive and false-negative error rates to 5% or less.
- LOQ = $10\hat\sigma/\hat\beta$. The 10 corresponds to the restriction of no more than 10% RSD in the measurement variability: RSD = $\hat\sigma/(\hat\beta x) \le 0.10$ gives $x \ge 10\hat\sigma/\hat\beta$. If the restriction were changed to not more than 20% RSD, the LOQ could be calculated as LOQ = $5\hat\sigma/\hat\beta$.
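The simple LOD and LOQ formulas translate directly into Python. The sketch parameterizes the LOQ by the RSD limit, so that the 10% and 20% cases both follow from one function. The response standard deviation and slope in the usage lines are hypothetical.

```python
def lod(sigma, slope, k=3.3):
    """LOD = k * sigma / slope; k = 3.3 is approximately 2 * 1.645."""
    return k * sigma / slope


def loq(sigma, slope, max_rsd=0.10):
    """LOQ = sigma / (slope * max_rsd): 10 * sigma / slope at 10% RSD,
    5 * sigma / slope at 20% RSD."""
    return sigma / (slope * max_rsd)


# Hypothetical calibration: response SD 0.004, slope 0.05 response units per ug/mL.
print(f"LOD {lod(0.004, 0.05):.3f}, LOQ {loq(0.004, 0.05):.3f}, "
      f"LOQ at 20% RSD {loq(0.004, 0.05, max_rsd=0.20):.3f} ug/mL")
```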

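These estimates do not account for uncertainty in the calibration curve, and they can be improved upon by acknowledging the variability in prediction from the curve.[9] For a linear fit, the LOD is depicted graphically in Fig. 3. The LOQ can be calculated as prescribed in the ICH guideline, solving for $x_Q$ in the following equation:

$$\mathrm{LOQ} = x_Q = 10\,\frac{\hat\sigma}{\hat\beta}\sqrt{1 + \frac{1}{n} + \frac{(x_Q - \bar x)^2}{S_{XX}}}$$

where n is the number of calibration points, $\bar x$ is their mean concentration, and $S_{XX} = \sum_i (x_i - \bar x)^2$. The limit of quantitation is depicted graphically in Fig. 4.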
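Because x_Q appears on both sides of the calibration-aware LOQ equation, it must be solved numerically. The sketch below uses bisection and assumes the form of the equation in which the factor 10σ̂/β̂ (response standard deviation over calibration slope) multiplies the prediction term. It raises an error when the calibration is too imprecise for any solution to exist. The calibration summary values are hypothetical.

```python
import math


def loq_with_calibration(sigma, slope, n, x_bar, sxx, max_rsd=0.10, tol=1e-10):
    """Solve x_Q = (sigma / (slope * max_rsd)) * sqrt(1 + 1/n + (x_Q - x_bar)**2 / sxx)
    by bisection; max_rsd = 0.10 gives the factor 10 * sigma / slope."""
    k = sigma / (slope * max_rsd)

    def f(x):
        # Negative below the root, positive above it (f is increasing).
        return x - k * math.sqrt(1.0 + 1.0 / n + (x - x_bar) ** 2 / sxx)

    lo, hi = 0.0, max(1.0, 2.0 * k)
    for _ in range(200):  # widen the bracket until f changes sign
        if f(hi) > 0:
            break
        hi *= 2.0
    else:
        raise ValueError("no solution: calibration too imprecise for this RSD limit")
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Hypothetical linear calibration: 5 standards centred at 20 units, S_XX = 250.
print(f"{loq_with_calibration(1.0, 1.0, n=5, x_bar=20.0, sxx=250.0):.2f}")
```

With a very precise calibration (large n and S_XX), the solution approaches the simple value 10σ̂/β̂. Otherwise, the prediction term pushes the LOQ higher.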

The LOQ is more complicated to calculate from a nonlinear calibration function, such as the four-parameter logistic regression equation, and involves the first-order Taylor series expansion of the solution equation for $x_Q$[10] (see the entry on Assay Development). The calculation of the LOQ for this equation is illustrated in Fig. 5.
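For a four-parameter logistic curve, a first-order (delta-method) approximation gives the standard deviation of a back-calculated concentration. It equals the response standard deviation divided by the absolute slope of the curve at that concentration. The sketch below uses that approximation and ignores uncertainty in the fitted curve parameters, a simplification relative to the cited approach. It then scans a log-spaced grid for the region where the predicted RSD is at most 20%.

The parameter names follow one common 4PL convention: a is the response at zero, d the response at infinite concentration, c the midpoint, and b the slope factor. The values are hypothetical.

```python
def four_pl(x, a, b, c, d):
    """Four-parameter logistic: response at concentration x."""
    return d + (a - d) / (1.0 + (x / c) ** b)


def four_pl_slope(x, a, b, c, d):
    """Derivative of the 4PL response with respect to x."""
    u = (x / c) ** b
    return -(a - d) * b * u / (x * (1.0 + u) ** 2)


def predicted_rsd(x, sigma_y, a, b, c, d):
    """First-order RSD of the back-calculated concentration at x,
    ignoring uncertainty in the curve parameters (simplification)."""
    return sigma_y / abs(four_pl_slope(x, a, b, c, d)) / x


def quantifiable_range(sigma_y, a, b, c, d, max_rsd=0.20, lo=1e-3, hi=1e3, steps=2000):
    """First and last grid points (log-spaced) with predicted RSD <= max_rsd."""
    ok = []
    for i in range(steps + 1):
        x = lo * (hi / lo) ** (i / steps)
        if predicted_rsd(x, sigma_y, a, b, c, d) <= max_rsd:
            ok.append(x)
    return (ok[0], ok[-1]) if ok else None


# Hypothetical 4PL fit: a=2.0, b=1.0, c=1.0, d=0.0; response SD 0.02.
print(quantifiable_range(0.02, a=2.0, b=1.0, c=1.0, d=0.0))
```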

FOLLOW-UP

The information from the analytical method validation

can be utilized to establish a testing format for the

samples in the assay. In particular, variance component

estimates of the within-run and between-run variabilities

Fig. 3 Determination of the LOD using the linear fit of the

standard curve plus restrictions on the proportions of false

positives and false negatives.

Fig. 4 Determination of the LOQ using the linear fit of the

standard curve and a restriction on the variability (% RSD) in

the assay.

Fig. 5 Quantifiable range determined as limits within which

assay variability (% RSD) is less than or equal to 20%.

Table 5 Predicted variability (% RSD) for a given number of runs (r) and a given number of replicates within each run (n)

r \ n    1     2     3     ∞
1        6%    5%    5%    5.0%
2        4%    4%    4%    3.5%
3        3%    3%    3%    2.9%


can be used to predict the total variability associated with a variety of testing formats. For example, consider the case where the component estimates have been determined to be $\hat{\sigma}_B = 0.05$ (5%) and $\hat{\sigma}_W = 0.03$ (3%). Letting r represent the number of independent runs that will be performed on a sample, and n the number of replicates within a run, the predicted variabilities, $\sqrt{\hat{\sigma}_B^2/r + \hat{\sigma}_W^2/(rn)}$, are listed in Table 5. Suppose that, because of the process capability, the analytical variability must be restricted to $\mathrm{RSD} \le 3\%$. As can be observed from the table, this cannot be accomplished using a single run of the assay; it must therefore be achieved through a combination of multiple runs with multiple replicates within each run.
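The entries of Table 5 follow from treating the reportable value as the mean of r runs with n replicates each, so that its variance is $\hat{\sigma}_B^2/r + \hat{\sigma}_W^2/(rn)$. The Python sketch below reproduces the table; rounding its output to whole percentages gives the tabulated values. The function name is ours.

```python
import math


def predicted_rsd(sigma_b, sigma_w, runs, reps):
    """RSD of the mean of `runs` runs with `reps` replicates each (reps may be math.inf)."""
    return math.sqrt(sigma_b ** 2 / runs + sigma_w ** 2 / (runs * reps))


# Reproduce Table 5 for sigma_B = 0.05 and sigma_W = 0.03.
for r in (1, 2, 3):
    row = [predicted_rsd(0.05, 0.03, r, n) for n in (1, 2, 3, math.inf)]
    print(f"r={r}:", "  ".join(f"{100 * v:.1f}%" for v in row))
```

The same function can be used to screen candidate formats against a target RSD before committing laboratory effort.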

ANALYTICAL METHOD QUALITY CONTROL

Assay validation does not end with the formal validation experiment but is continuously assessed through assay quality control. Control measures of variability and bias are used to bridge routine testing back to the assay validation and to help ensure the continued reliability of the procedure.

Extra variability in replicates can be detected using standard statistical process control metrics [4]; detection, together with prespecified action upon detection, can help maintain the variability characteristics of an assay. For example, when the measurement of an analyte is obtained from multiple runs of an assay, those results can be examined by noting the range (either log(max) − log(min) or max − min, depending upon whether the measurements are scaled proportionally or absolutely). A bound on the range can be derived:

$$\mathrm{Range} \le \hat{\sigma}_{\mathrm{dimension}}\,(d_2 + k\,d_3)$$

where $\hat{\sigma}_{\mathrm{dimension}}$ is the variability in the dimension of replication (here, run to run), $d_2$ and $d_3$ are the factors used to scale the standard deviation to the mean and the standard deviation of the range, respectively, and k is a factor representing the desired degree of control (e.g., k = 2 represents approximately 95% confidence). Once extra variability has been detected, additional measurements can be obtained, and a result for the sample can be determined from the measurements that remain after the maximum and minimum results have been eliminated from the calculation. When extra variability has been identified in instrument replicates of a standard concentration or a dilution of a test sample, that concentration of the standard or dilution of the test sample might be eliminated from subsequent calculations.
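A Python sketch of the range screen and the trimmed result described above. The $d_2$ and $d_3$ values are the standard control-chart constants for subgroup sizes 2 to 6; working on the natural-log scale for proportionally scaled measurements (so that $\hat{\sigma}_{\mathrm{dimension}}$ is roughly the run-to-run RSD) is our assumption, and the function names are hypothetical.

```python
import math
import statistics

# Standard control-chart constants for subgroup sizes 2-6.
D2 = {2: 1.128, 3: 1.693, 4: 2.059, 5: 2.326, 6: 2.534}
D3 = {2: 0.853, 3: 0.888, 4: 0.880, 5: 0.864, 6: 0.848}


def range_limit(sigma_dim, n, k=2.0):
    """Upper bound on the range of n replicates: sigma_dim * (d2 + k * d3)."""
    return sigma_dim * (D2[n] + k * D3[n])


def range_exceeded(results, sigma_dim, k=2.0, log_scale=True):
    """True if the observed range (on the log scale by default) exceeds its bound."""
    vals = [math.log(v) for v in results] if log_scale else list(results)
    return max(vals) - min(vals) > range_limit(sigma_dim, len(vals), k)


def trimmed_result(results, log_scale=True):
    """Average after dropping the single max and min (geometric mean on the log scale)."""
    if len(results) < 3:
        raise ValueError("need at least three results to drop the max and min")
    vals = sorted(math.log(v) if log_scale else v for v in results)[1:-1]
    centre = statistics.fmean(vals)
    return math.exp(centre) if log_scale else centre


first = [100.0, 104.0, 131.0]                 # hypothetical results from three runs
if range_exceeded(first, sigma_dim=0.05):
    more = first + [102.0, 98.0]              # additional runs obtained after detection
    print(trimmed_result(more))               # geometric mean of 100, 102 and 104
```

The dictionaries cover only n = 2 to 6; larger subgroups need the corresponding tabulated constants.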

Measurements on control sample(s) help identify shifts in scale, and therefore bias, in a run of an assay. Information from multiple control samples can typically be reduced to one or two meaningful scores, such as an average and/or a slope. These can help isolate problems and provide information regarding the cause of a deviation in a run of an assay. Suppose, for example, that three controls of various levels are used to monitor shifts in sensitivity in the assay. The average can be used to detect absolute shifts resulting from some underlying source of constant variability, such as inappropriate preparation of standards or the introduction of significant background. The slope can be used to detect proportional shifts resulting from some underlying source of proportional variability.
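One way to reduce several control results to the two scores described above is sketched below in Python: the average deviation from nominal tracks absolute shifts, and the least-squares slope of observed on nominal values tracks proportional shifts (a slope of 1 means no proportional change). These particular formulas and the function name are our choice; the source does not specify them.

```python
def control_scores(nominal, observed):
    """Return (average deviation, slope) for a set of control samples.

    average deviation = mean(observed - nominal): sensitive to absolute shifts.
    slope = least-squares slope of observed on nominal: 1.0 when there is
    no proportional shift.
    """
    k = len(nominal)
    x_bar = sum(nominal) / k
    y_bar = sum(observed) / k
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(nominal, observed))
    sxx = sum((x - x_bar) ** 2 for x in nominal)
    return y_bar - x_bar, sxy / sxx


nominal = [10.0, 50.0, 100.0]                        # hypothetical control levels
print(control_scores(nominal, [12.0, 52.0, 102.0]))  # absolute shift: deviation about 2, slope about 1
print(control_scores(nominal, [12.0, 60.0, 120.0]))  # proportional shift: slope about 1.2
```

In practice each score would be compared with limits established from historical control data.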

Suitability criteria on the performance of column peaks can detect deterioration of a column, while characteristics of the standard curve, such as the slope or EC$_{50}$ (EC$_{50}$ corresponds to C in a four-parameter logistic regression), help flag an assay run with atypical or substandard performance.

Care must be taken, however, to avoid either meaningless or redundant controls. The increased false-positive rate inherent in the multiplicity of a control strategy must be considered, and a carefully designed quality control scheme should account for it.
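The multiplicity effect can be quantified. If each of m control criteria is applied at a false-positive rate α and the criteria are independent (an assumption; controls within a run are often correlated), a valid run fails at least one criterion with probability $1 - (1 - \alpha)^m$, about 23% for five criteria at 5% each. The Python sketch below computes this and the Šidák per-criterion α that restores a chosen run-level rate; the Šidák adjustment is our illustrative choice, not a remedy prescribed by the source.

```python
def familywise_false_positive(alpha, m):
    """Chance that a valid run fails at least one of m independent control criteria."""
    return 1.0 - (1.0 - alpha) ** m


def sidak_alpha(target, m):
    """Per-criterion alpha that keeps the run-level false-positive rate at `target`
    (Sidak adjustment; assumes the m criteria are independent)."""
    return 1.0 - (1.0 - target) ** (1.0 / m)


for m in (1, 3, 5):
    print(m, round(familywise_false_positive(0.05, m), 3), round(sidak_alpha(0.05, m), 4))
```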

CONCLUSION

A carefully conceived assay validation should follow assay development and precede routine implementation of the method for product characterization. The purpose of the validation is to demonstrate that the assay is fundamentally reliable and possesses operating characteristics that make it suitable for use. The statistical estimates collected during the validation can also be used to design a test plan that provides the maximum reliability for the minimum effort in the laboratory. Most importantly, validation of the assay does not end with the documentation of the validation experiment but should continue with assay quality control. The proper choice of control samples and control parameters helps ensure the continued validity of the procedure during routine use in the laboratory.

REFERENCES

1. USP XXII. General Chapter 1225, Validation of Compendial Methods; 1982-1984.

2. Federal Register. International Conference on Harmonization; Guideline on Validation of Analytical Procedures: Definitions and Terminology; March 1, 1995; 11259-11262.


3. International Conference on Harmonization; Guideline on Validation of Analytical Procedures: Methodology; November 6, 1996.

4. Montgomery, D. Introduction to Statistical Quality Control, 2nd Ed.; Wiley: New York, 1991; pp. 203-206, 208-210.

5. Morrison, D. Multivariate Statistical Methods; McGraw-Hill: New York, 1967; 221-258.

6. Snedecor, G.; Cochran, W. Statistical Methods, 6th Ed.; Iowa State Univ. Press: Ames, IA, 1967; 91-100.

7. Winer, B. Statistical Principles in Experimental Design, 2nd Ed.; McGraw-Hill: New York, 1971; 244-251.

8. Burdick, R.; Graybill, F. Confidence Intervals on Variance Components; Marcel Dekker: New York, 1992; 28-39.

9. Oppenheimer, L.; Capizzi, T.; Weppelman, R.; Mehta, H. Determining the lowest limit of reliable assay measurement. Anal. Chem. 1983, 55, 638-643.

10. Morgan, B. Analysis of Quantal Response Data; Chapman & Hall: New York, 1992; 370, 371.

11. Massart, D.L.; Dijkstra, A.; Kaufman, L. Evaluation and Optimization of Laboratory Methods and Analytical Procedures; Elsevier: New York, 1978.

12. Currie, L.A. Limits for qualitative detection and quantitative determination: Application to radiochemistry. Anal. Chem. 1968, 40, 586-593.

13. Cardone, M.J. Detection and determination of error in analytical methodology. Part I. The method verification program. J. Assoc. Off. Anal. Chem. 1983, 66, 1257-1281.

14. Cardone, M.J. Detection and determination of error in analytical methodology. Part II. Correction for corrigible systematic error in the course of real sample analysis. J. Assoc. Off. Anal. Chem. 1983, 66, 1283-1294.

15. Miller, J.C.; Miller, J.N. Statistics for Analytical Chemistry, 2nd Ed.; Wiley: New York, 1988.

16. Caulcutt, R.; Boddy, R. Statistics for Analytical Chemists; Chapman & Hall: New York, 1983.
